Cover

Half-title

Title

Copyright

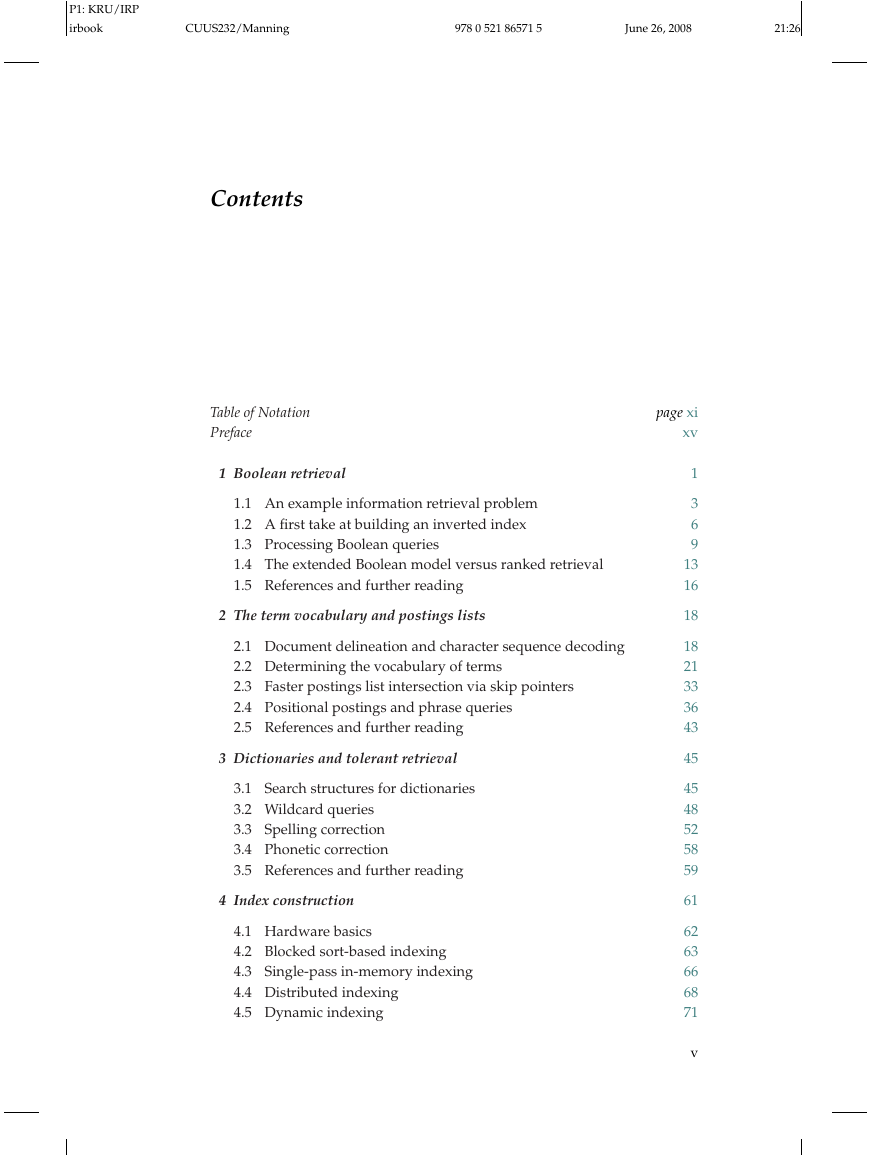

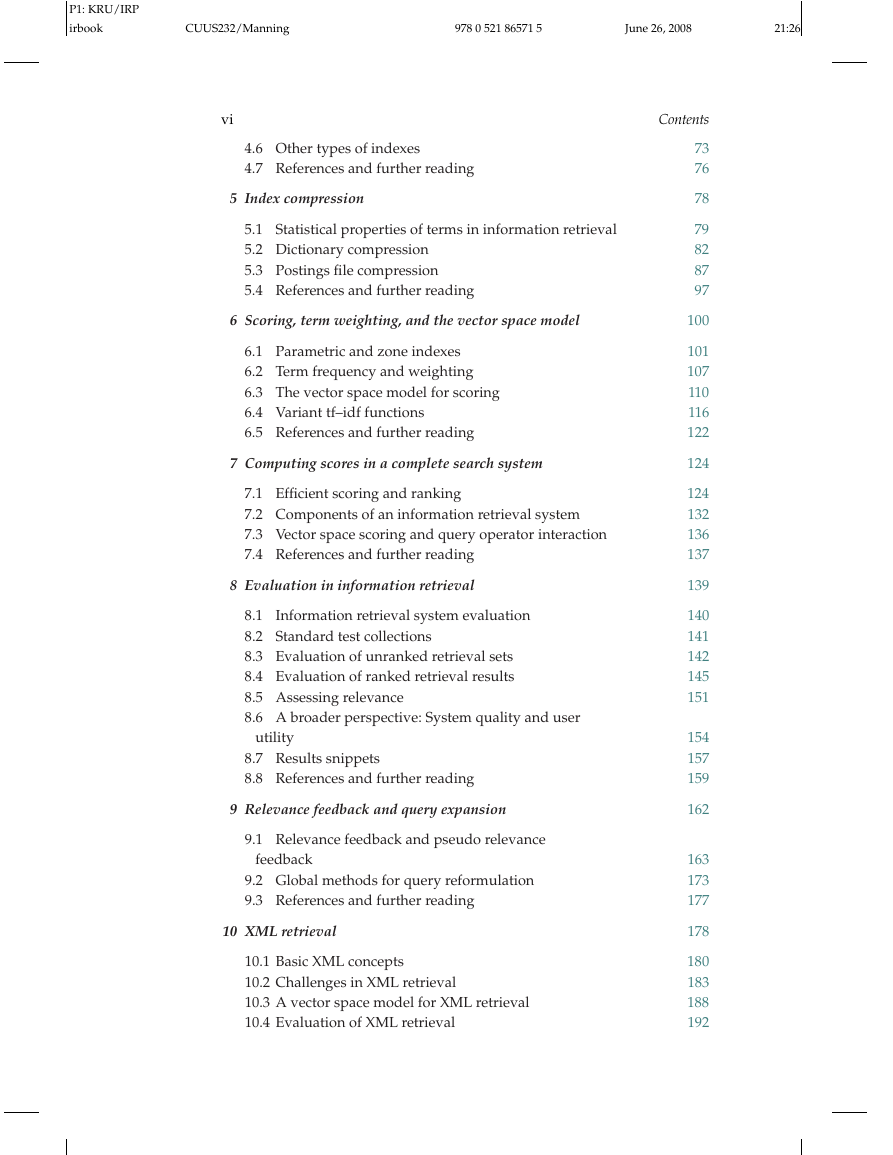

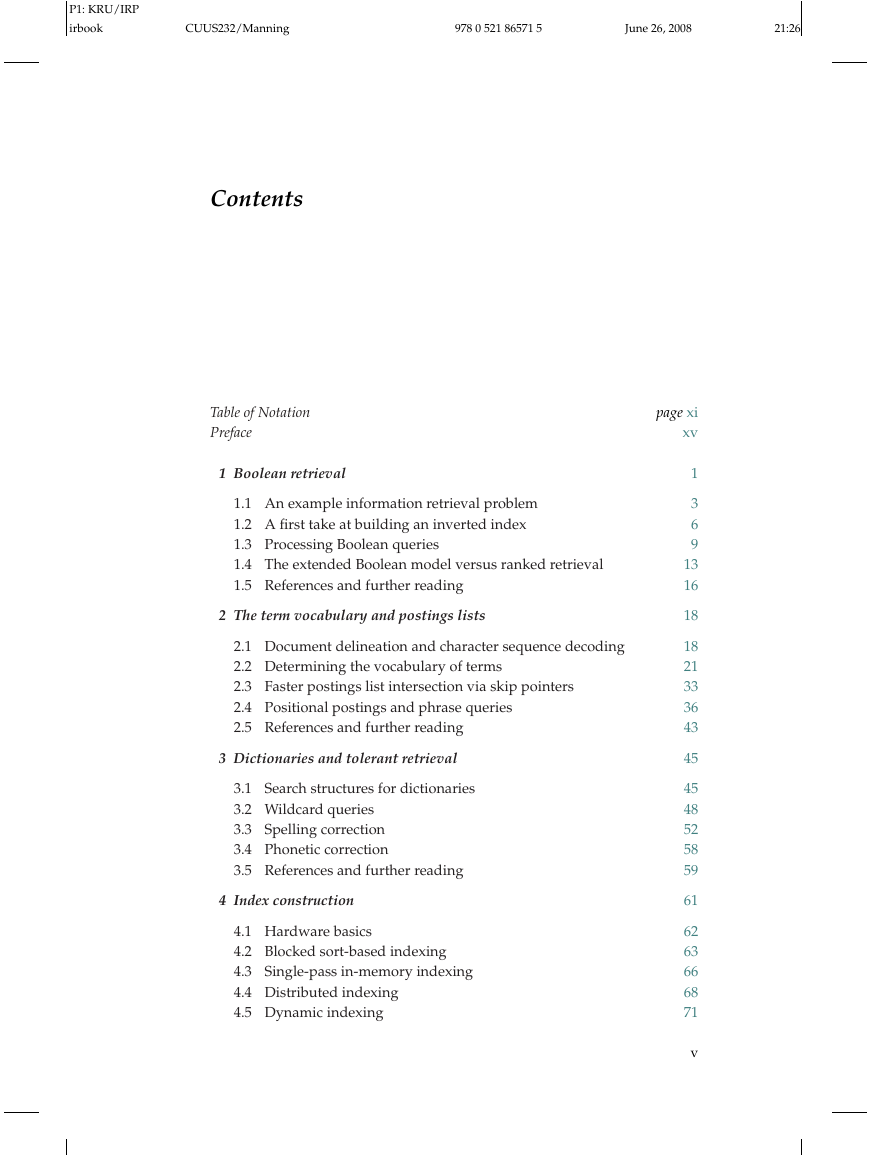

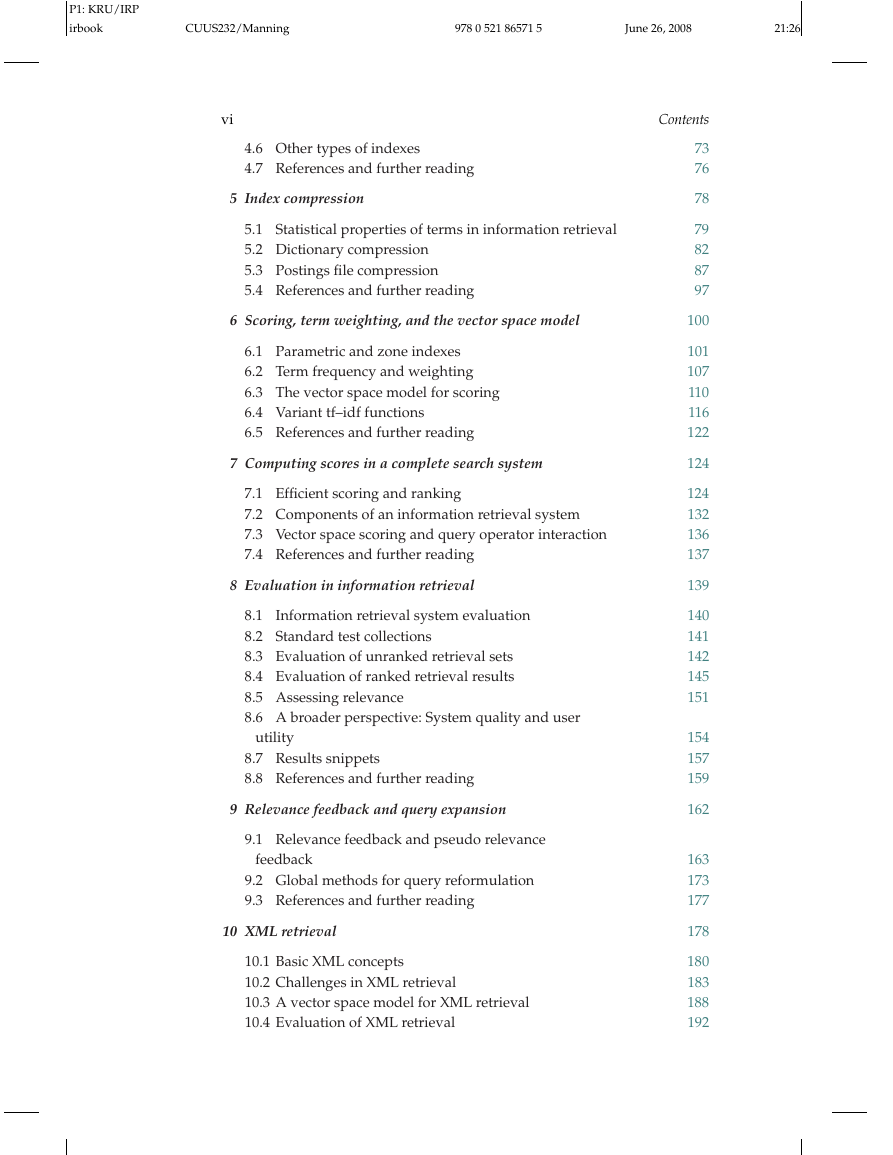

Contents

Table of Notation

Preface

1 Boolean retrieval

1.1 An example information retrieval problem

1.2 A first take at building an inverted index

1.3 Processing Boolean queries

1.4 The extended Boolean model versus ranked retrieval

1.5 References and further reading

2 The term vocabulary and postings lists

2.1 Document delineation and character sequence decoding

2.1.1 Obtaining the character sequence in a document

2.1.2 Choosing a document unit

2.2 Determining the vocabulary of terms

2.2.1 Tokenization

2.2.2 Dropping common terms: stop words

2.2.3 Normalization (equivalence classing of terms)

2.2.4 Stemming and lemmatization

2.3 Faster postings list intersection via skip pointers

2.4 Positional postings and phrase queries

2.4.1 Biword indexes

2.4.2 Positional indexes

2.4.3 Combination schemes

2.5 References and further reading

3 Dictionaries and tolerant retrieval

3.1 Search structures for dictionaries

3.2 Wildcard queries

3.2.1 General wildcard queries

3.2.2 k-gram indexes for wildcard queries

3.3 Spelling correction

3.3.1 Implementing spelling correction

3.3.2 Forms of spelling correction

3.3.3 Edit distance

3.3.4 k-gram indexes for spelling correction

3.3.5 Context-sensitive spelling correction

3.4 Phonetic correction

3.5 References and further reading

4 Index construction

4.1 Hardware basics

4.2 Blocked sort-based indexing

4.3 Single-pass in-memory indexing

4.4 Distributed indexing

4.5 Dynamic indexing

4.6 Other types of indexes

4.7 References and further reading

5 Index compression

5.1 Statistical properties of terms in information retrieval

5.1.1 Heaps' law: Estimating the number of terms

5.1.2 Zipf's law: Modeling the distribution of terms

5.2 Dictionary compression

5.2.1 Dictionary as a string

5.2.2 Blocked storage

5.3 Postings file compression

5.3.1 Variable byte codes

5.3.2 Gamma codes

5.4 References and further reading

6 Scoring, term weighting, and the vector space model

6.1 Parametric and zone indexes

6.1.1 Weighted zone scoring

6.1.2 Learning weights

6.1.3 The optimal weight g

6.2 Term frequency and weighting

6.2.1 Inverse document frequency

6.2.2 Tf--idf weighting

6.3 The vector space model for scoring

6.3.1 Dot products

6.3.2 Queries as vectors

6.3.3 Computing vector scores

6.4 Variant tf--idf functions

6.4.1 Sublinear tf scaling

6.4.2 Maximum tf normalization

6.4.3 Document and query weighting schemes

6.4.4 Pivoted normalized document length

6.5 References and further reading

7 Computing scores in a complete search system

7.1 Efficient scoring and ranking

7.1.1 Inexact top K document retrieval

7.1.2 Index elimination

7.1.3 Champion lists

7.1.4 Static quality scores and ordering

7.1.5 Impact ordering

7.1.6 Cluster pruning

7.2 Components of an information retrieval system

7.2.1 Tiered indexes

7.2.2 Query term proximity

7.2.3 Designing parsing and scoring functions

7.2.4 Putting it all together

7.3 Vector space scoring and query operator interaction

7.4 References and further reading

8 Evaluation in information retrieval

8.1 Information retrieval system evaluation

8.2 Standard test collections

8.3 Evaluation of unranked retrieval sets

8.4 Evaluation of ranked retrieval results

8.5 Assessing relevance

8.5.1 Critiques and justifications of the concept of relevance

8.6 A broader perspective: System quality and user utility

8.6.1 System issues

8.6.2 User utility

8.6.3 Refining a deployed system

8.7 Results snippets

8.8 References and further reading

9 Relevance feedback and query expansion

9.1 Relevance feedback and pseudo relevancefeedback

9.1.1 The Rocchio algorithm for relevance feedback

9.1.2 Probabilistic relevance feedback

9.1.3 When does relevance feedback work?

9.1.4 Relevance feedback on the web

9.1.5 Evaluation of relevance feedback strategies

9.1.6 Pseudo relevance feedback

9.1.7 Indirect relevance feedback

9.1.8 Summary

9.2 Global methods for query reformulation

9.2.1 Vocabulary tools for query reformulation

9.2.2 Query expansion

9.2.3 Automatic thesaurus generation

9.3 References and further reading

10 XML retrieval

10.1 Basic XML concepts

10.2 Challenges in XML retrieval

10.3 A vector space model for XML retrieval

10.4 Evaluation of XML retrieval

10.5 Text-centric versus data-centric XML retrieval

10.6 References and further reading

11 Probabilistic information retrieval

11.1 Review of basic probability theory

11.2 The probability ranking principle

11.2.1 The 1/0 loss case

11.2.2 The probability ranking principle with retrieval costs

11.3 The binary independence model

11.3.1 Deriving a ranking function for query terms

11.3.2 Probability estimates in theory

11.3.3 Probability estimates in practice

11.3.4 Probabilistic approaches to relevance feedback

11.4 An appraisal and some extensions

11.4.1 An appraisal of probabilistic models

11.4.2 Tree-structured dependencies between terms

11.4.3 Okapi BM25: A nonbinary model

11.4.4 Bayesian network approaches to information retrieval

11.5 References and further reading

12 Language models for information retrieval

12.1 Language models

12.1.1 Finite automata and language models

12.1.2 Types of language models

12.1.3 Multinomial distributions over words

12.2 The query likelihood model

12.2.1 Using query likelihood language models in IR

12.2.2 Estimating the query generation probability

12.2.3 Ponte and Croft's experiments

12.3 Language modeling versus other approachesin information retrieval

12.4 Extended language modeling approaches

12.5 References and further reading

13 Text classification and Naive Bayes

13.1 The text classification problem

13.2 Naive Bayes text classification

13.2.1 Relation to multinomial unigram language model

13.3 The Bernoulli model

13.4 Properties of Naive Bayes

13.4.1 A variant of the multinomial model

13.5 Feature selection

13.5.1 Mutual information

13.5.2 Chi2 Feature selection

13.5.3 Frequency-based feature selection

13.5.4 Feature selection for multiple classifiers

13.5.5 Comparison of feature selection methods

13.6 Evaluation of text classification

13.7 References and further reading

14 Vector space classification

14.1 Document representations and measures of relatedness in vector spaces

14.2 Rocchio classification

14.3 k nearest neighbor

14.3.1 Time complexity and optimality of k nearest neighbor

14.4 Linear versus nonlinear classifiers

14.5 Classification with more than two classes

14.6 The bias--variance tradeoff

14.7 References and further reading

15 Support vector machines and machine learning on documents

15.1 Support vector machines: The linearly separable case

15.2 Extensions to the support vector machine model

15.2.1 Soft margin classification

15.2.2 Multiclass support vector machines

15.2.3 Nonlinear support vector machines

15.2.4 Experimental results

15.3 Issues in the classification of text documents

15.3.1 Choosing what kind of classifier to use

15.3.2 Improving classifier performance

15.4 Machine-learning methods in ad hoc information retrieval

15.4.1 A simple example of machine-learned scoring

15.4.2 Result ranking by machine learning

15.5 References and further reading

16 Flat clustering

16.1 Clustering in information retrieval

16.2 Problem statement

16.2.1 Cardinality -- The number of clusters

16.3 Evaluation of clustering

16.4 K-means

16.4.1 Cluster cardinality in K-means

16.5 Model-based clustering

16.6 References and further reading

17 Hierarchical clustering

17.1 Hierarchical agglomerative clustering

17.2 Single-link and complete-link clustering

17.2.1 Time complexity

17.3 Group-average agglomerative clustering

17.4 Centroid clustering

17.5 Optimality of hierarchical agglomerative clustering

17.6 Divisive clustering

17.7 Cluster labeling

17.8 Implementation notes

17.9 References and further reading

18 Matrix decompositions and latent semantic indexing

18.1 Linear algebra review

18.1.1 Matrix decompositions

18.2 Term--document matrices and singular value decompositions

18.3 Low-rank approximations

18.4 Latent semantic indexing

18.5 References and further reading

19 Web search basics

19.1 Background and history

19.2 Web characteristics

19.2.1 The web graph

19.2.2 Spam

19.3 Advertising as the economic model

19.4 The search user experience

19.4.1 User query needs

19.5 Index size and estimation

19.6 Near-duplicates and shingling

19.7 References and further reading

20 Web crawling and indexes

20.1 Overview

20.1.1 Features a crawler must provide

20.1.2 Features a crawler should provide

20.2 Crawling

20.2.1 Crawler architecture

20.2.2 DNS resolution

20.2.3 The URL frontier

20.3 Distributing indexes

20.4 Connectivity servers

20.5 References and further reading

21 Link analysis

21.1 The Web as a graph

21.1.1 Anchor text and the web graph

21.2 PageRank

21.2.1 Markov chains

21.2.2 The PageRank computation

21.2.3 Topic-specific PageRank

21.3 Hubs and authorities

21.3.1 Choosing the subset of the Web

21.4 References and further reading

Bibliography

Index

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc