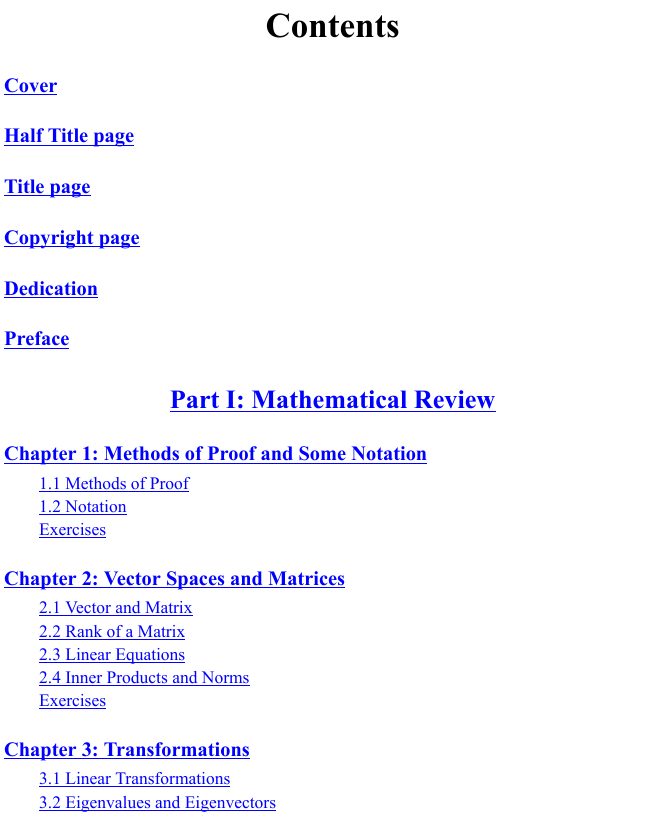

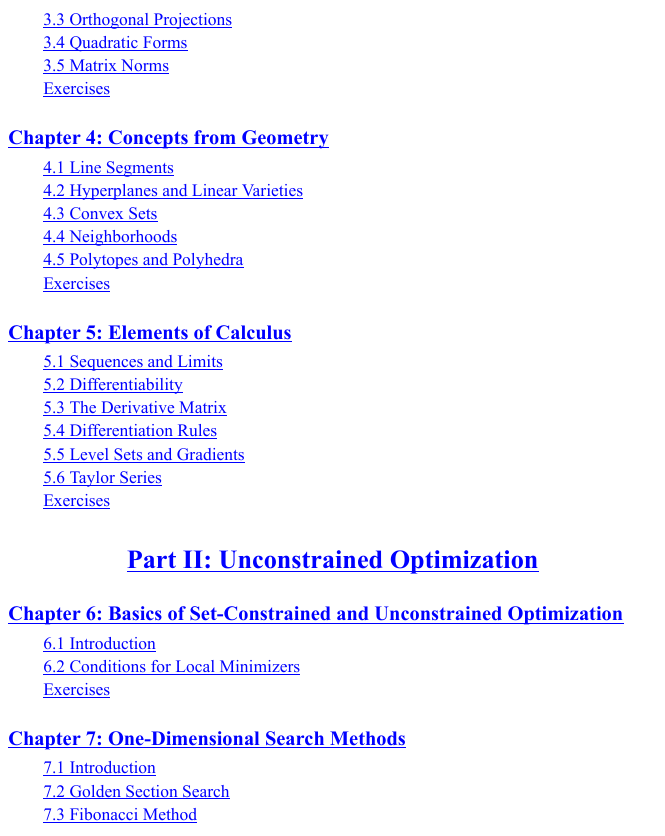

PART I

MATHEMATICAL REVIEW

CHAPTER 1

METHODS OF PROOF AND SOME NOTATION

1.1 Methods of Proof

1.2 Notation

EXERCISES

CHAPTER 2

VECTOR SPACES AND MATRICES

2.1 Vector and Matrix

2.2 Rank of a Matrix

2.3 Linear Equations

2.4 Inner Products and Norms

EXERCISES

CHAPTER 3

TRANSFORMATIONS

3.1 Linear Transformations

3.2 Eigenvalues and Eigenvectors

3.3 Orthogonal Projections

3.4 Quadratic Forms

3.5 Matrix Norms

EXERCISES

Chapter 4

CONCEPTS FROM GEOMETRY

4.1 Line Segments

4.2 Hyperplanes and Linear Varieties

4.3 Convex Sets

4.4 Neighborhoods

4.5 Polytopes and Polyhedra

EXERCISES

CHAPTER 5

ELEMENTS OF CALCULUS

5.1 Sequences and Limits

5.2 Differentiability

5.3 The Derivative Matrix

5.4 Differentiation Rules

5.5 Level Sets and Gradients

5.6 Taylor Series

EXERCISES

PART II

UNCONSTRAINED OPTIMIZATION

CHAPTER 6

BASICS OF SET-CONSTRAINED AND UNCONSTRAINED OPTIMIZATION

6.1 Introduction

6.2 Conditions for Local Minimizers

EXERCISES

CHAPTER 7

ONE-DIMENSIONAL SEARCH METHODS

7.1 Introduction

7.2 Golden Section Search

7.3 Fibonacci Method

7.4 Bisection Method

7.5 Newton’s Method

7.6 Secant Method

7.7 Bracketing

7.8 Line Search in Multidimensional Optimization

EXERCISES

CHAPTER 8

GRADIENT METHODS

8.1 Introduction

8.2 The Method of Steepest Descent

8.3 Analysis of Gradient Methods

Convergence

Convergence Rate

EXERCISES

CHAPTER 9

NEWTON’S METHOD

9.1 Introduction

9.2 Analysis of Newton’s Method

9.3 Levenberg-Marquardt Modification

9.4 Newton’s Method for Nonlinear Least Squares

EXERCISES

CHAPTER 10

CONJUGATE DIRECTION METHODS

10.1 Introduction

10.2 The Conjugate Direction Algorithm

10.3 The Conjugate Gradient Algorithm

10.4 The Conjugate Gradient Algorithm for Nonquadratic Problems

EXERCISES

CHAPTER 11

QUASI-NEWTON METHODS

11.1 Introduction

11.2 Approximating the Inverse Hessian

11.3 The Rank One Correction Formula

11.4 The DFP Algorithm

11.5 The BFGS Algorithm

EXERCISES

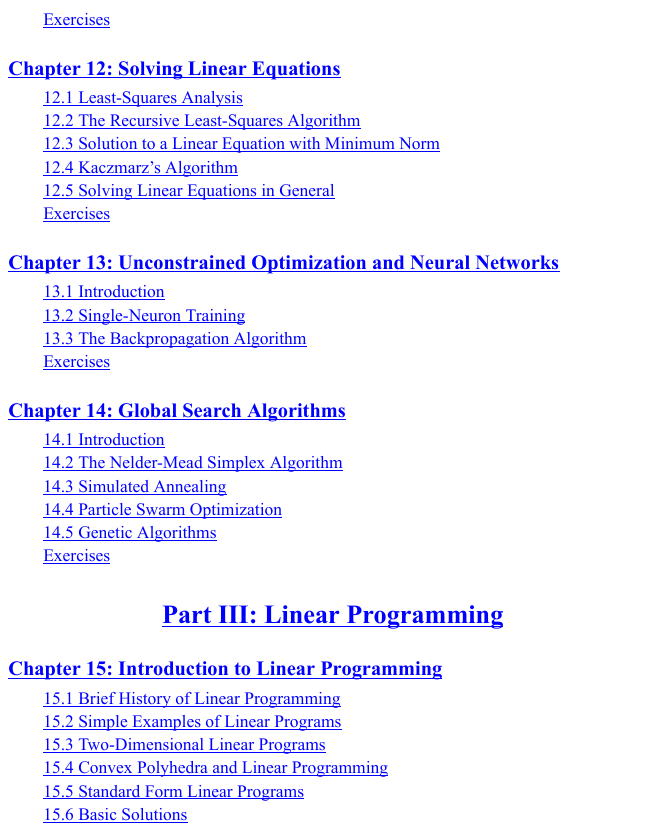

CHAPTER 12

SOLVING LINEAR EQUATIONS

12.1 Least-Squares Analysis

12.2 The Recursive Least-Squares Algorithm

12.3 Solution to a Linear Equation with Minimum Norm

12.4 Kaczmarz’s Algorithm

12.5 Solving Linear Equations in General

EXERCISES

CHAPTER 13

UNCONSTRAINED OPTIMIZATION AND NEURAL NETWORKS

13.1 Introduction

13.2 Single-Neuron Training

13.3 The Backpropagation Algorithm

EXERCISES

CHAPTER 14

GLOBAL SEARCH ALGORITHMS

14.1 Introduction

14.2 The Nelder-Mead Simplex Algorithm

14.3 Simulated Annealing

Randomized Search

Simulated Annealing Algorithm

14.4 Particle Swarm Optimization

Basic PSO Algorithm

Variations

14.5 Genetic Algorithms

Basic Description

Analysis of Genetic Algorithms

Real-Number Genetic Algorithms

EXERCISES

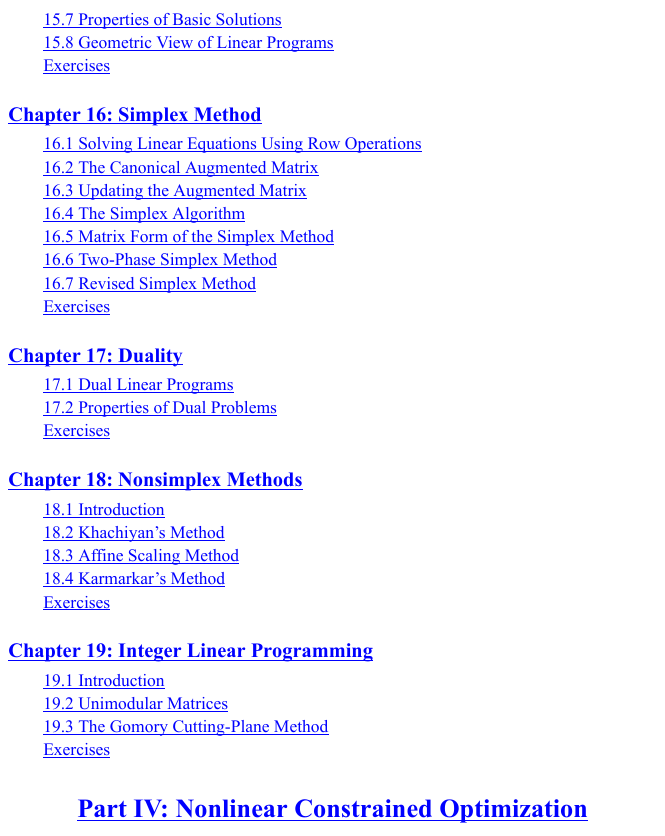

PART III

LINEAR PROGRAMMING

CHAPTER 15

INTRODUCTION TO LINEAR PROGRAMMING

15.1 Brief History of Linear Programming

15.2 Simple Examples of Linear Programs

15.3 Two-Dimensional Linear Programs

15.4 Convex Polyhedra and Linear Programming

15.5 Standard Form Linear Programs

15.6 Basic Solutions

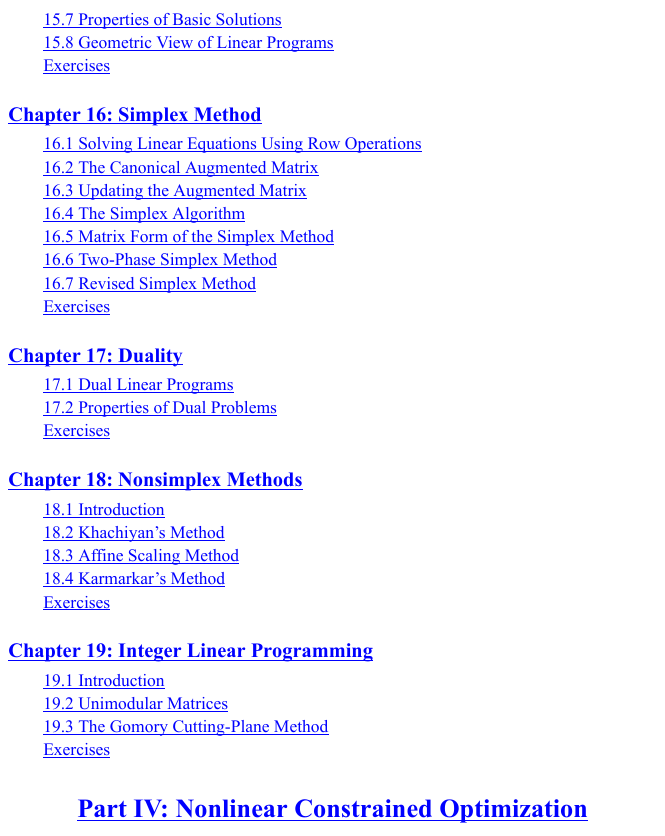

15.7 Properties of Basic Solutions

15.8 Geometric View of Linear Programs

EXERCISES

CHAPTER 16

SIMPLEX METHOD

16.1 Solving Linear Equations Using Row Operations

16.2 The Canonical Augmented Matrix

16.3 Updating the Augmented Matrix

16.4 The Simplex Algorithm

16.5 Matrix Form of the Simplex Method

16.6 Two-Phase Simplex Method

16.7 Revised Simplex Method

Revised Simplex Method

EXERCISES

CHAPTER 17

DUALITY

17.1 Dual Linear Programs

17.2 Properties of Dual Problems

EXERCISES

CHAPTER 18

NONSIMPLEX METHODS

18.1 Introduction

18.2 Khachiyan’s Method

18.3 Affine Scaling Method

Basic Algorithm

Two-Phase Method

18.4 Karmarkar’s Method

Basic Ideas

Karmarkar’s Canonical Form

Karmarkar’s Restricted Problem

From General Form to Karmarkar’s Canonical Form

The Algorithm

EXERCISES

CHAPTER 19

INTEGER LINEAR PROGRAMMING

19.1 Introduction

19.2 Unimodular Matrices

19.3 The Gomory Cutting-Plane Method

EXERCISES

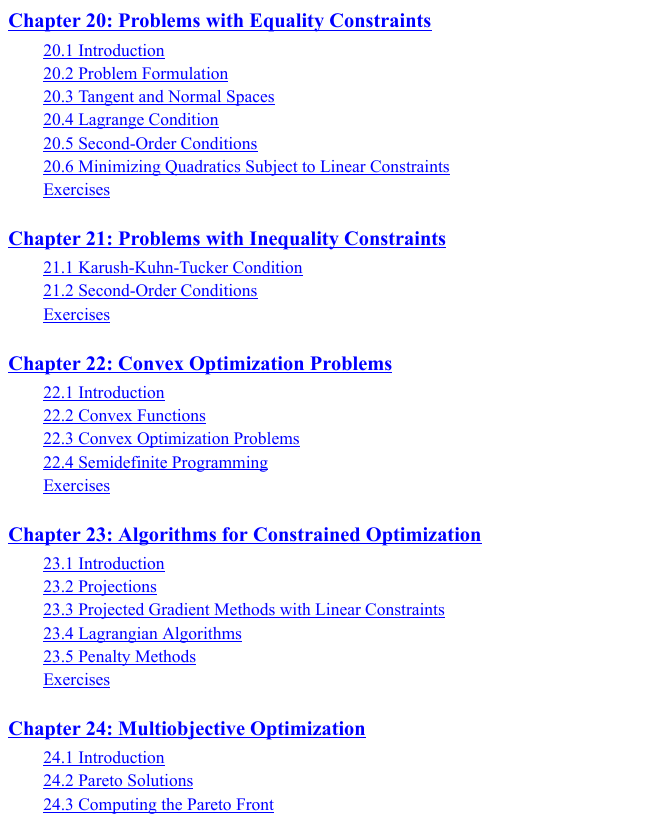

PART IV NONLINEAR CONSTRAINED OPTIMIZATION

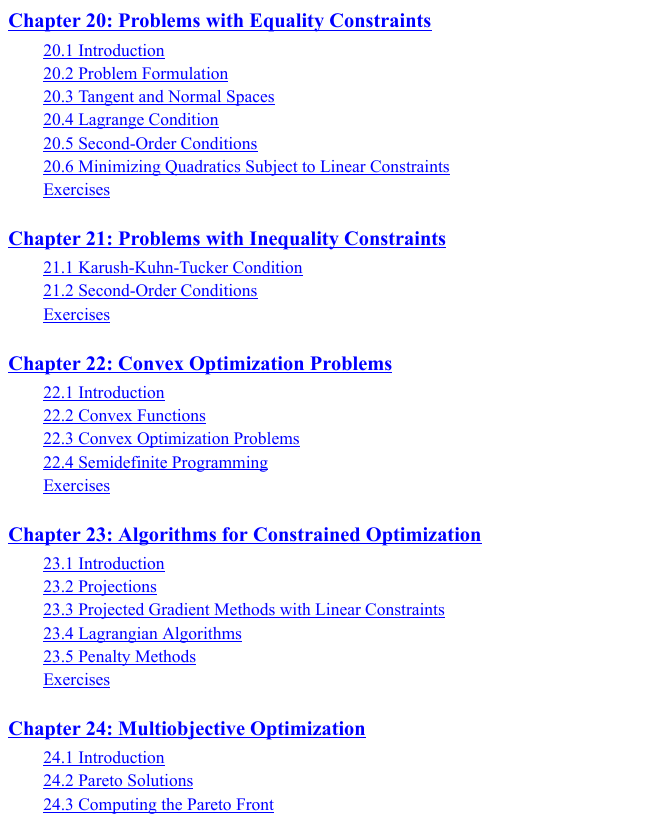

CHAPTER 20 PROBLEMS WITH EQUALITY CONSTRAINTS

20.1 Introduction

20.2 Problem Formulation

20.3 Tangent and Normal Spaces

20.4 Lagrange Condition

20.5 Second-Order Conditions

20.6 Minimizing Quadratics Subject to Linear Constraints

EXERCISES

CHAPTER 21 PROBLEMS WITH INEQUALITY CONSTRAINTS

21.1 Karush-Kuhn-Tucker Condition

21.2 Second-Order Conditions

EXERCISES

CHAPTER 22 CONVEX OPTIMIZATION PROBLEMS

22.1 Introduction

22.2 Convex Functions

22.3 Convex Optimization Problems

22.4 Semidefinite Programming

Linear Matrix Inequalities and Their Properties

LMI Solvers

Finding a Feasible Solution Under LMI Constraints

EXERCISES

CHAPTER 23 ALGORITHMS FOR CONSTRAINED OPTIMIZATION

23.1 Introduction

23.2 Projections

23.3 Projected Gradient Methods with Linear Constraints

23.4 Lagrangian Algorithms

Lagrangian Algorithm for Equality Constraints

Lagrangian Algorithm for Inequality Constraints

23.5 Penalty Methods

EXERCISES

CHAPTER 24 MULTIOBJECTIVE OPTIMIZATION

24.1 Introduction

24.2 Pareto Solutions

24.3 Computing the Pareto Front

24.4 From Multiobjective to Single-Objective Optimization

24.5 Uncertain Linear Programming Problems

Uncertain Constraints

Uncertain Objective Function Coefficients

Uncertain Constraint Coefficients

General Uncertainties

EXERCISES

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc