Michael Margaliot

MMiMMMMiMiMiMiMiMMiMiMMiMiMiMiMiMMiMiMMiMiMiMMMMMiMiMiMMiMiMiMiMMiiMiMMiMiiMiMMiiMMiMiMMMMMiMiiMiMiiMMiiMM cchchchchchchchchcchchchchchchchchchchchchchchchchchchchchchchhchchchchccchhhhhhhccchhhcchchaeaeaeaeaeaeaeaeaeaeaeaeaeaeaeaeaeaeaeaaaeaaaeaaeaaeaeaeaeeaeaeaeaaeael l l l l ll ll lll lllllllllllllllllllllll MaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMaMMaMaMaMaMMMMaMaMMaMMaMMaMaMMaMaMMMMaMaMMMaMMMargrgrgrgrgrgrgrggrgrgrgrgrgrgrgrgrgrgrgrgrgrgrgrgrgggrgrgrgrgrgrggrgrgrgrrggggggalalalalalalalalalalalalallalalalalalalallalalallalaalallalalaaaaala ioioioioioioioioioioioioiiooioioioioioioioioioioiioioiioioioiooioiooiiotttttttttttttttttttttttttttttt

Tel Aviv University, Israel

TeTelTelTelTelTelTelTelTeTelTelTelTelTelTelTeTelTelTTTelTelTelT lTelTelTelTellTeTeTTellelTeTelTelTeTelTelTelTTTeelTeelTTelTTTelelelTeeee A AvAAvAA Av Av Av Av Av Av AvAvAv AvAvA Av AvAvAAvAvAvAv Av Av AvAAAvAvAvvAAvAv AvA AAAvAAA iviviv iv iv iviv iviv iv iv ivvv iviviv iv iviviviv iviviiviv vivivivivvviivvii UniUniUniUniUniUniUniUniUniUnUnUniUUniUniUniUniUniUniUniUniUnnUniUniUniUniUUniUniUniUniUUUnUniUniiUUniUnUUUUnUUnUUnUnnUUniiUUnUnUnn verververververververververververververveveververververvevververereververererververeverveverererrveervvvvvvevvve sitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsitsititsitisitsitsisssisittsitsittsittitiiity, y,y, y, y, y, y, y, y, yy, yy, y, yy, yyyy, y, y,y,y, y,y,y,y, y, y, y, y, yy,y,yy,yyy,y,yyy IsrIsrIsrIsrIsrIsrIsrIsrIssrIsrIsrssrIsrIsIsIsrIIsrIsrIIIsrIIIsrIsrIsrsrIsIsrIsrIsrsrIsIsrrs aelaelaeaelaelaelaelaelaelaelaelaelaelaelaelaeelaelelaelaeaeaelaelaellllaelaellaelaaaelaelaelaeleellaellelaaeleeaela

Adaptive Dynamic

Programming: An Introduction

Fei-Yue Wang, Chinese Academy of Sciences,

CHINA and University of Arizona, USA

Huaguang Zhang, Northeastern University, CHINA

and Derong Liu, Chinese Academy of Sciences, CHINA

Digital Object Identifier 10.1109/MCI.2009.932261

©STOCKBYTE

Abstract: In this article, we introduce some recent research trends

within the field of adaptive/approximate dynamic programming

(ADP), including the variations on the structure of ADP

schemes, the development of ADP algorithms and applications

of ADP schemes. For ADP algorithms, the point of focus is that

iterative algorithms of ADP can be sorted into two classes: one

class is the iterative algorithm with initial stable policy; the other

is the one without the requirement of initial stable policy. It is

generally believed that the latter one has less computation at the

cost of missing the guarantee of system stability during iteration

process. In addition, many recent papers have provided conver-

gence analysis associated with the algorithms developed. Fur-

thermore, we point out some topics for future studies.

1556-603X/09/$25.00©2009IEEE

MAY 2009 | IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE 39

�

Introduction

A

s is well known, there are many methods for designing

stable control for nonlinear systems. However, stability

is only a bare minimum requirement in a system

design. Ensuring optimality guarantees the stability of

the nonlinear system. Dynamic programming is a very useful

tool in solving optimization and optimal control problems by

employing the principle of optimality. In [16], the principle of

optimality is expressed as: “An optimal policy has the property

that whatever the initial state and initial decision are, the

remaining decisions must constitute an optimal policy with

regard to the state resulting from the first decision.” There are

several spectrums about the dynamic programming. One can

consider discrete-time systems or continuous-time systems, lin-

ear systems or nonlinear systems, time-invariant systems or

time-varying systems, deterministic systems or stochastic sys-

tems, etc.

We first take a look at nonlinear discrete-time (time-

varying) dynamical (deterministic) systems. Time-varying non-

linear systems cover most of the application areas and

discrete-time is the basic consideration for digital computation.

Suppose that one is given a discrete-time nonlinear (time-

varying) dynamical system

x1k 1 12 5 F 31x1k2, u1k2, k4, k 5 0, 1, c

(1)

where x [ Rn represents the state vector of the system and

u [ Rm denotes the control action and F is the system func-

tion. Suppose that one associates with this system the perfor-

mance index (or cost)

J 3x1i2, i4 5 a`

g k2iU 3x1k2, u1k2, k4

(2)

k5i

where U is called the utility function and g is the discount

factor with 0 , g # 1. Note that the function J is dependent

on the initial time i and the initial state x( i ), and it is referred to

as the cost-to-go of state x( i ). The objective of dynamic pro-

gramming problem is to choose a control sequence

u(k), k 5 i, i 1 1, c, so that the function J (i.e., the cost) in

(2) is minimized. According to Bellman, the optimal cost from

time k is equal to

J *1x1k22 5 min

EU 1x1k2, u1k22 1 g J *1x1k 1 122F. (3)

u1k2

The optimal control u *1k2 at time k is the u1k2 which achieves

EU 1x1k2, u1k22 1 gJ *1x1k 1 122F. (4)

u*1k2 5 arg min

u1k2

this minimum, i.e.,

Equation (3) is the principle of optimality for discrete-time

systems. Its importance lies in the fact that it allows one to

optimize over only one control vector at a time by working

backward in time.

In nonlinear continuous-time case, the system can be

described by

40 IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE | MAY 2009

The cost in this case is defined as

For continuous-time systems, Bellman’s principle of opti-

mality can be applied, too. The optimal cost J *(x0) 5 min

J (x0, u(t )) will satisfy the Hamilton-Jacobi-Bellman Equation

'J *1x1t22

't

t

x

#1t2 5 F 3x1t2, u1t2, t4, t $ t0.

J 1x1t22 53 `

U1x1 t2, u1 t22dt.

bU 1x1t2, u1t2, t2 1a'J *1x1t22

'x1t2

3 F 1x1t2, u1t2, t2r

5 U1x1t2, u*1t2, t2 1a'J *1x1t22

b T

'x1t2

3 F 1x1t2, u*1t2, t2.

5 min

u[U

b T

(5)

(6)

(7)

2

Equations (3) and (7) are called the optimality equations

of dynamic programming which are the basis for implemen-

tation of dynamic programming. In the above, if the func-

tion F in (1) or (5) and the cost function J in (2) or (6) are

known, the solution of u(k ) becomes a simple optimization

problem. If the system is modeled by linear dynamics and

the cost function to be minimized is quadratic in the state

and control, then the optimal control is a linear feedback of

the states, where the gains are obtained by solving a standard

Riccati equation [47]. On the other hand, if the system is

modeled by nonlinear dynamics or the cost function is non-

quadratic, the optimal state feedback control will depend

upon solutions to the Hamilton-Jacobi-Bellman (HJB)

equation [48] which is generally a nonlinear partial differ-

ential equation or difference equation. However, it is often

computationally untenable to run true dynamic program-

ming due to the backward numerical process required for its

solutions, i.e., as a result of the well-known “curse of dimen-

sionality” [16], [28]. In [69], three curses are displayed in

resource management and control problems to show the

cost function J, which is the theoretical solution of the

Hamilton-Jacobi- Bellman equation, is very difficult to

obtain, except for systems satisfying some very good condi-

tions. Over the years, progress has been made to circumvent

the “curse of dimensionality” by building a system, called

“critic”, to approximate the cost function in dynamic pro-

gramming (cf. [10], [60], [61], [63], [70], [78], [92], [94],

[95]). The idea is to approximate dynamic programming

solutions by using a function approximation structure such

as neural networks to approximate the cost function.

The Basic Structures of ADP

In recent years, adaptive/approximate dynamic programming

(ADP) has gained much attention from many researchers in order

to obtain approximate solutions of the HJB equation,

�

cf. [2], [3], [5], [8], [11]–[13], [21], [22], [25], [30], [31], [34],

[35], [40], [46], [49], [52], [54], [55], [63], [70], [76], [80],

[83], [95], [96], [99], [100]. In 1977, Werbos [91] introduced an

approach for ADP that was later called adaptive critic designs

(ACDs). ACDs were proposed in [91], [94], [97] as a way for

solving dynamic programming problems forward-in-time. In the

literature, there are several synonyms used for “Adaptive Critic

Designs” [10], [24], [39], [43], [54], [70], [71], [87], including

“Approximate Dynamic Programming” [69], [82], [95], “Asymp-

totic Dynamic Programming” [75], “Adaptive Dynamic Pro-

gramming” [63], [64], “Heuristic Dynamic Programming” [46],

[93], “Neuro-Dynamic Programming” [17], “Neural Dynamic

Programming” [82], [101], and “Reinforcement Learning” [84].

Bertsekas and Tsitsiklis gave an overview of the neuro-

dynamic programming in their book [17]. They provided the

background, gave a detailed introduction to dynamic program-

ming, discussed the neural network architectures and methods for

training them, and developed general convergence theorems for

stochastic approximation methods as the foundation for analysis of

various neuro-dynamic programming algorithms. They provided

the core neuro-dynamic programming methodology, including

many mathematical results and methodological insights. They sug-

gested many useful methodologies for applications to neuro-

dynamic programming, like Monte Carlo simulation, on-line and

off-line temporal difference methods, Q-learning algorithm, opti-

mistic policy iteration methods, Bellman error methods, approxi-

mate linear programming, approximate dynamic programming

with cost-to-go function, etc. A particularly impressive success that

greatly motivated subsequent research, was the development of a

backgammon playing program by Tesauro [85]. Here a neural

network was trained to approximate the optimal cost-to-go func-

tion of the game of backgammon by using simulation, that is, by

letting the program play against itself. Unlike chess programs, this

program did not use lookahead of many steps, so its success can

be attributed primarily to the use of a properly trained approxi-

mation of the optimal cost-to-go function.

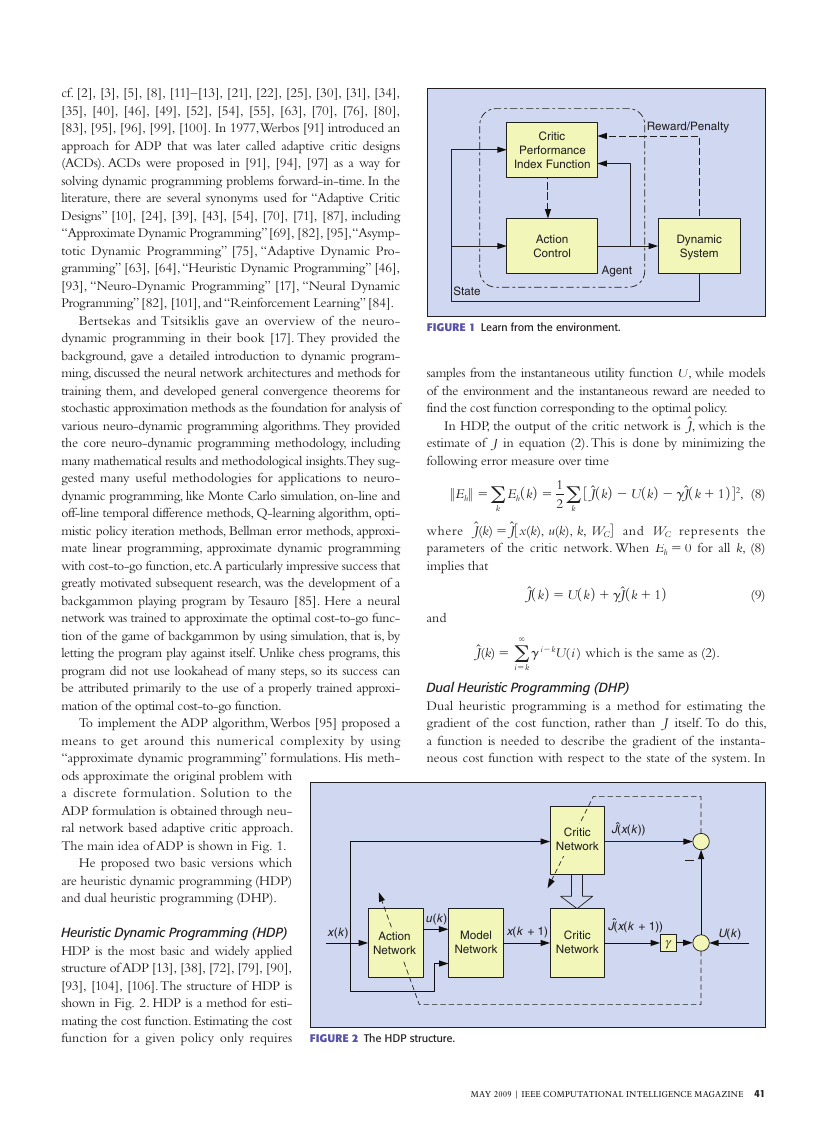

To implement the ADP algorithm, Werbos [95] proposed a

means to get around this numerical complexity by using

“approximate dynamic programming” formulations. His meth-

ods approximate the original problem with

a discrete formulation. Solution to the

ADP formulation is obtained through neu-

ral network based adaptive critic approach.

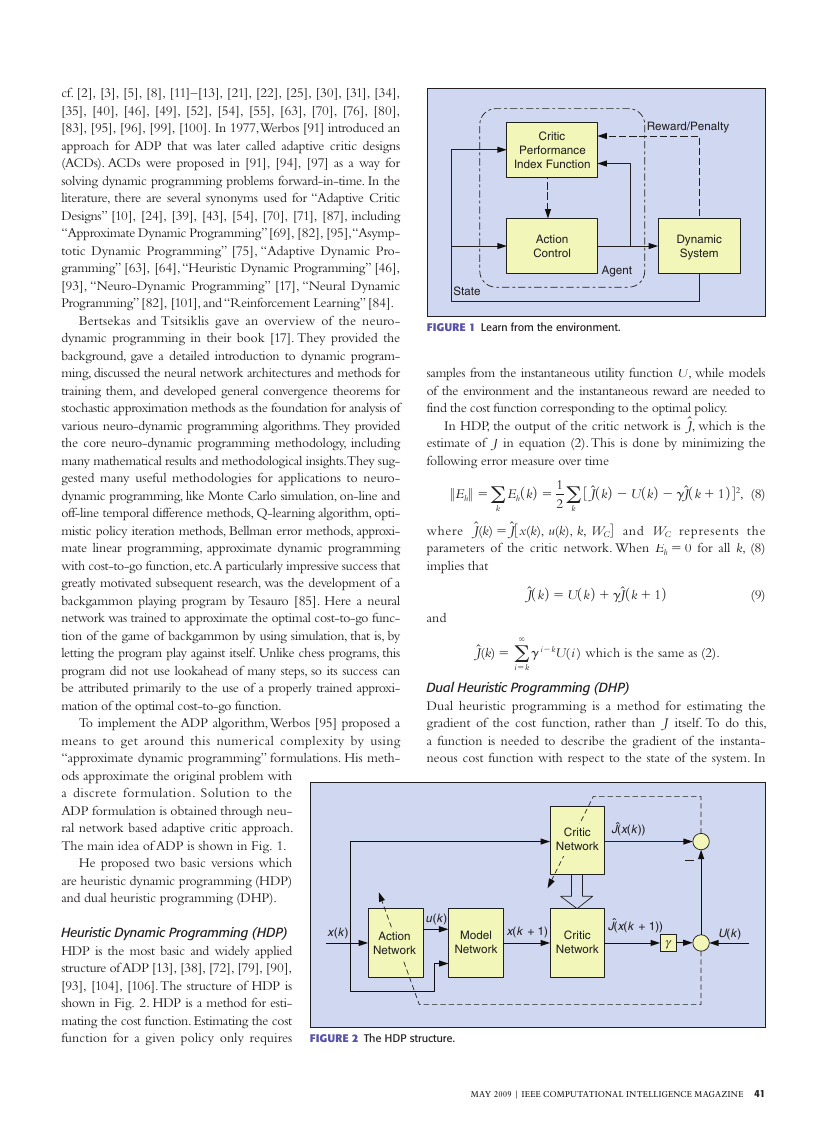

The main idea of ADP is shown in Fig. 1.

He proposed two basic versions which

are heuristic dynamic programming (HDP)

and dual heuristic programming (DHP).

Reward/Penalty

Critic

Performance

Index Function

Action

Control

Dynamic

System

Agent

State

FIGURE 1 Learn from the environment.

samples from the instantaneous utility function U, while models

of the environment and the instantaneous reward are needed to

find the cost function corresponding to the optimal policy.

In HDP, the output of the critic network is J^, which is the

estimate of J in equation (2). This is done by minimizing the

following error measure over time

3 J^1k2 2 U1k2 2 gJ^1k 1 1242, (8)

4 and WC represents the

7Eh7 5a

where J^(k) 5 J^3x(k), u(k), k, WC

1k2 5

J^1k2 5 U1k2 1 gJ^1k 1 12

parameters of the critic network. When Eh 5 0 for all k, (8)

implies that

a

k

1

2

(9)

Eh

k

and

J^(k) 5 a`

i5k

g i2kU( i ) which is the same as (2).

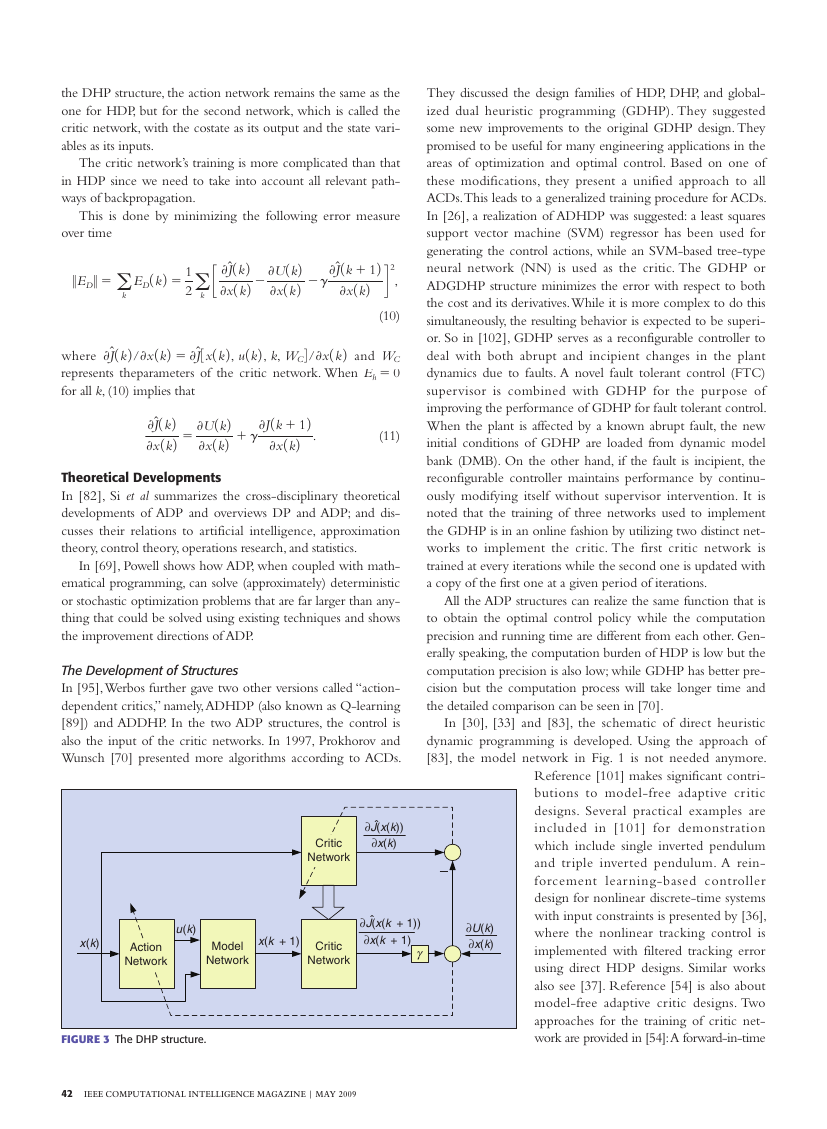

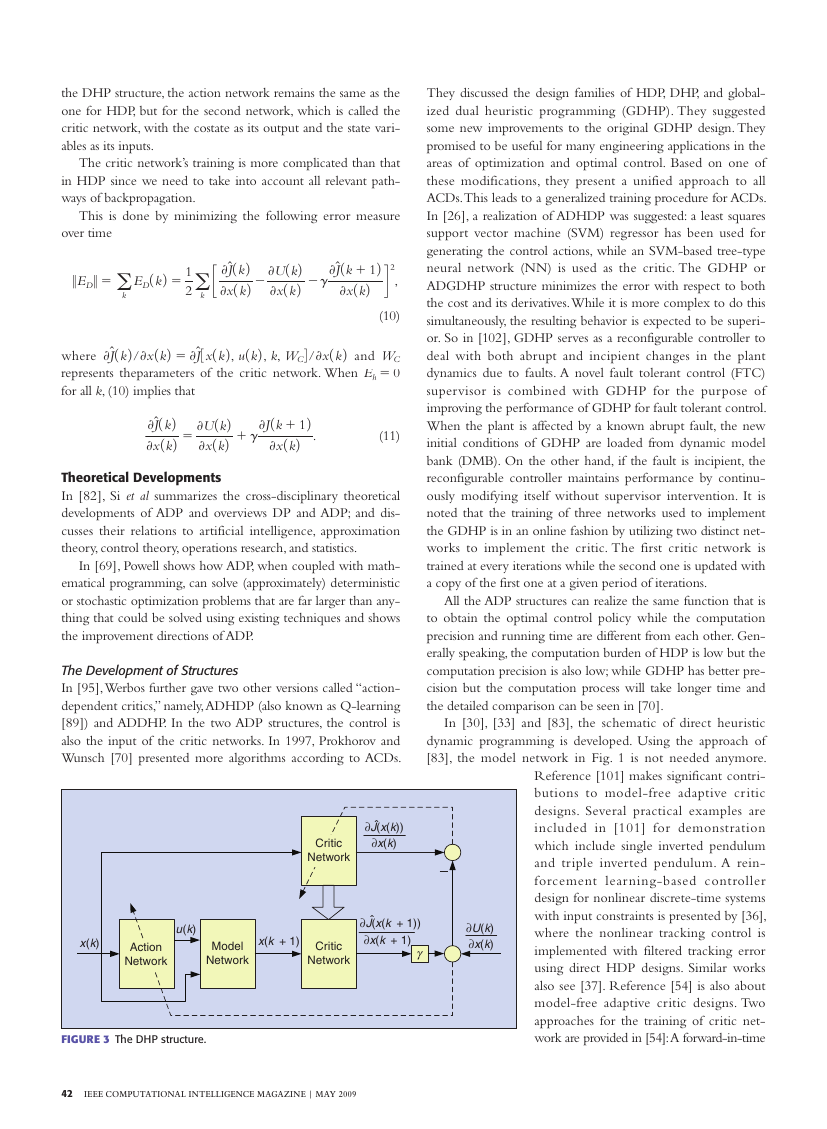

Dual Heuristic Programming (DHP)

Dual heuristic programming is a method for estimating the

gradient of the cost function, rather than J itself. To do this,

a function is needed to describe the gradient of the instanta-

neous cost function with respect to the state of the system. In

J(x(k))›

Critic

Network

Heuristic Dynamic Programming (HDP)

HDP is the most basic and widely applied

structure of ADP [13], [38], [72], [79], [90],

[93], [104], [106]. The structure of HDP is

shown in Fig. 2. HDP is a method for esti-

mating the cost function. Estimating the cost

function for a given policy only requires

u(k)

x(k)

Action

Network

Model

Network

x(k + 1)

Critic

Network

›

J(x(k + 1))

γ

U(k)

FIGURE 2 The HDP structure.

MAY 2009 | IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE 41

�

the DHP structure, the action network remains the same as the

one for HDP, but for the second network, which is called the

critic network, with the costate as its output and the state vari-

ables as its inputs.

over time

a

k

1

2

The critic network’s training is more complicated than that

in HDP since we need to take into account all relevant path-

ways of backpropagation.

1k2 5

This is done by minimizing the following error measure

'J^1k 1 12

c 'J^1k2

'U1k2

7ED7 5 a

d 2

'x1k2

'x1k2 2 g

'x1k2 2

where 'J^1k2 /'x1k2 5 'J^3x1k2, u1k2, k, WC

4/'x1k2 and WC

'J1k 1 12

'x1k2

represents theparameters of the critic network. When Eh 5 0

for all k, (10) implies that

'U1k2

'x1k2 1 g

'J^1k2

'x1k2 5

,

(10)

.

(11)

ED

k

Theoretical Developments

In [82], Si et al summarizes the cross-disciplinary theoretical

developments of ADP and overviews DP and ADP; and dis-

cusses their relations to artificial intelligence, approximation

theory, control theory, operations research, and statistics.

In [69], Powell shows how ADP, when coupled with math-

ematical programming, can solve (approximately) deterministic

or stochastic optimization problems that are far larger than any-

thing that could be solved using existing techniques and shows

the improvement directions of ADP.

The Development of Structures

In [95], Werbos further gave two other versions called “action-

dependent critics,” namely, ADHDP (also known as Q-learning

[89]) and ADDHP. In the two ADP structures, the control is

also the input of the critic networks. In 1997, Prokhorov and

Wunsch [70] presented more algorithms according to ACDs.

∂J(x(k))›

∂x(k)

Critic

Network

›

∂J(x(k + 1))

∂x(k + 1)

γ

u(k)

x(k)

Action

Network

Model

Network

x(k + 1)

Critic

Network

FIGURE 3 The DHP structure.

42 IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE | MAY 2009

They discussed the design families of HDP, DHP, and global-

ized dual heuristic programming (GDHP). They suggested

some new improvements to the original GDHP design. They

promised to be useful for many engineering applications in the

areas of optimization and optimal control. Based on one of

these modifications, they present a unified approach to all

ACDs. This leads to a generalized training procedure for ACDs.

In [26], a realization of ADHDP was suggested: a least squares

support vector machine (SVM) regressor has been used for

generating the control actions, while an SVM-based tree-type

neural network (NN) is used as the critic. The GDHP or

ADGDHP structure minimizes the error with respect to both

the cost and its derivatives. While it is more complex to do this

simultaneously, the resulting behavior is expected to be superi-

or. So in [102], GDHP serves as a reconfigurable controller to

deal with both abrupt and incipient changes in the plant

dynamics due to faults. A novel fault tolerant control (FTC)

supervisor is combined with GDHP for the purpose of

improving the performance of GDHP for fault tolerant control.

When the plant is affected by a known abrupt fault, the new

initial conditions of GDHP are loaded from dynamic model

bank (DMB). On the other hand, if the fault is incipient, the

reconfigurable controller maintains performance by continu-

ously modifying itself without supervisor intervention. It is

noted that the training of three networks used to implement

the GDHP is in an online fashion by utilizing two distinct net-

works to implement the critic. The first critic network is

trained at every iterations while the second one is updated with

a copy of the first one at a given period of iterations.

All the ADP structures can realize the same function that is

to obtain the optimal control policy while the computation

precision and running time are different from each other. Gen-

erally speaking, the computation burden of HDP is low but the

computation precision is also low; while GDHP has better pre-

cision but the computation process will take longer time and

the detailed comparison can be seen in [70].

In [30], [33] and [83], the schematic of direct heuristic

dynamic programming is developed. Using the approach of

[83], the model network in Fig. 1 is not needed anymore.

Reference [101] makes significant contri-

butions to model-free adaptive critic

designs. Several practical examples are

included in [101] for demonstration

which include single inverted pendulum

and triple inverted pendulum. A rein-

forcement learning-based controller

design for nonlinear discrete-time systems

with input constraints is presented by [36],

where the nonlinear tracking control is

implemented with filtered tracking error

using direct HDP designs. Similar works

also see [37]. Reference [54] is also about

model-free adaptive critic designs. Two

approaches for the training of critic net-

work are provided in [54]: A forward-in-time

∂U(k)

∂x(k)

�

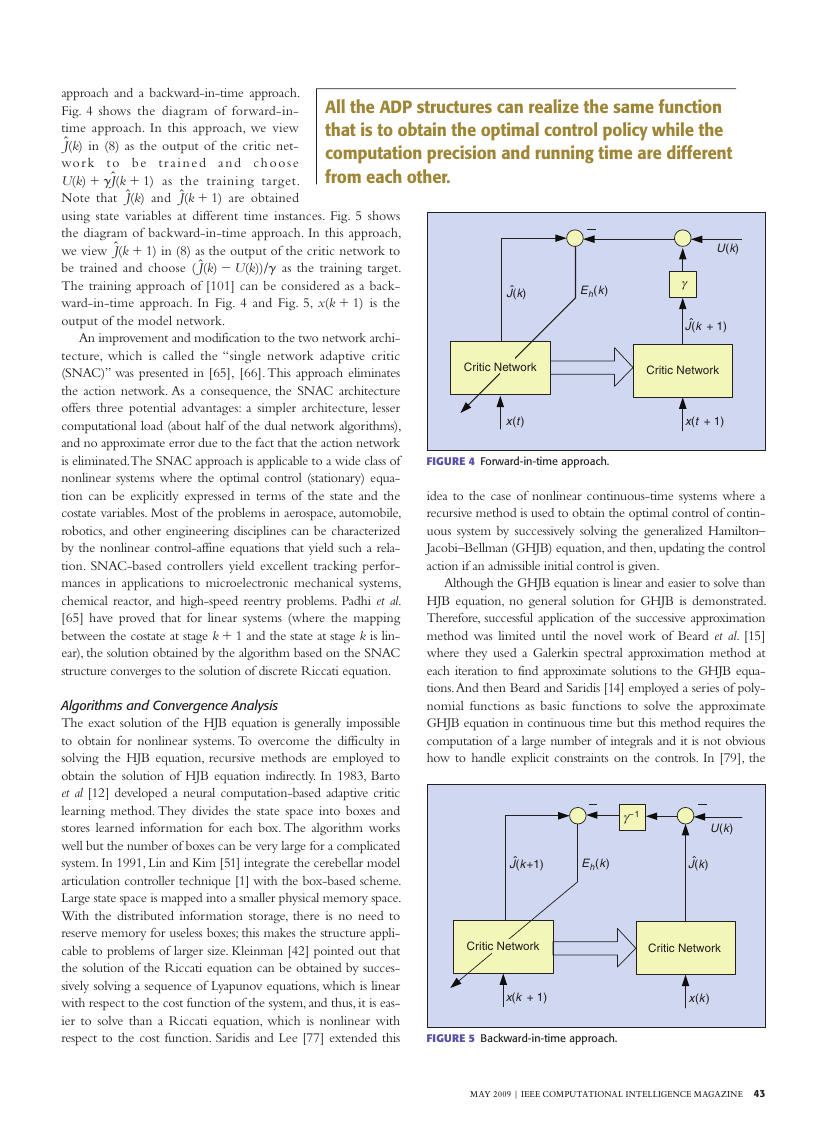

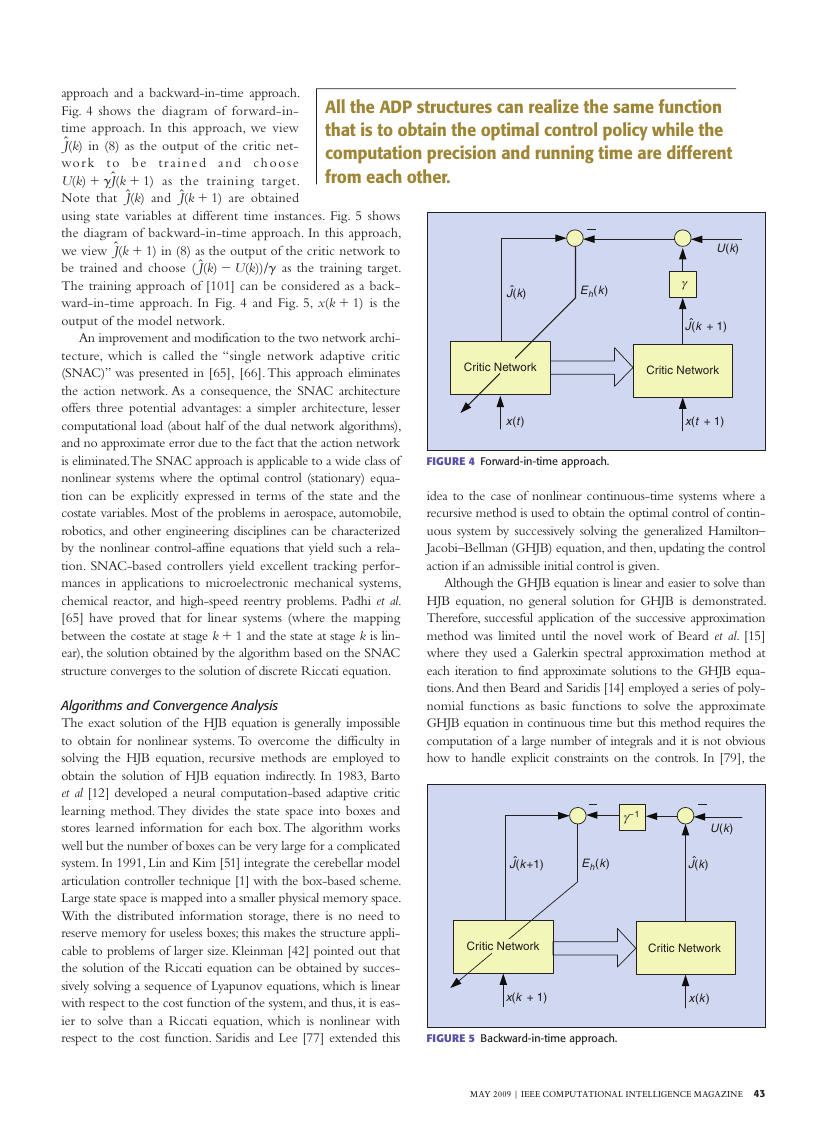

approach and a backward-in-time approach.

Fig. 4 shows the diagram of forward-in-

time approach. In this approach, we view

J^(k) in (8) as the output of the critic net-

wo r k t o b e t r a i n e d a n d c h o o s e

U(k) 1 gJ^(k 1 1) as the training target.

Note that J^(k) and J^(k 1 1) are obtained

using state variables at different time instances. Fig. 5 shows

the diagram of backward-in-time approach. In this approach,

we view J^(k 1 1) in (8) as the output of the critic network to

be trained and choose ( J^(k) 2 U(k))/g as the training target.

The training ap proach of [101] can be considered as a back-

ward-in-time ap proach. In Fig. 4 and Fig. 5, x(k 1 1) is the

output of the model network.

An improvement and modification to the two network archi-

tecture, which is called the “single network adaptive critic

(SNAC)” was presented in [65], [66]. This approach eliminates

the action network. As a consequence, the SNAC architecture

offers three potential advantages: a simpler architecture, lesser

computational load (about half of the dual network algorithms),

and no approximate error due to the fact that the action network

is eliminated. The SNAC approach is applicable to a wide class of

nonlinear systems where the optimal control (stationary) equa-

tion can be explicitly expressed in terms of the state and the

costate variables. Most of the problems in aerospace, automobile,

robotics, and other engineering disciplines can be characterized

by the nonlinear control-affine equations that yield such a rela-

tion. SNAC-based controllers yield excellent tracking perfor-

mances in applications to microelectronic mechanical systems,

chemical reactor, and high-speed reentry problems. Padhi et al.

[65] have proved that for linear systems (where the mapping

between the costate at stage k 1 1 and the state at stage k is lin-

ear), the solution obtained by the algorithm based on the SNAC

structure converges to the solution of discrete Riccati equation.

Algorithms and Convergence Analysis

The exact solution of the HJB equation is generally impossible

to obtain for nonlinear systems. To overcome the difficulty in

solving the HJB equation, recursive methods are employed to

obtain the solution of HJB equation indirectly. In 1983, Barto

et al [12] developed a neural computation-based adaptive critic

learning method. They divides the state space into boxes and

stores learned information for each box. The algorithm works

well but the number of boxes can be very large for a complicated

system. In 1991, Lin and Kim [51] integrate the cerebellar model

articulation controller technique [1] with the box-based scheme.

Large state space is mapped into a smaller physical memory space.

With the distributed information storage, there is no need to

reserve memory for useless boxes; this makes the structure appli-

cable to problems of larger size. Kleinman [42] pointed out that

the solution of the Riccati equation can be obtained by succes-

sively solving a sequence of Lyapunov equations, which is linear

with respect to the cost function of the system, and thus, it is eas-

ier to solve than a Riccati equation, which is nonlinear with

respect to the cost function. Saridis and Lee [77] extended this

All the ADP structures can realize the same function

that is to obtain the optimal control policy while the

computation precision and running time are different

from each other.

›

J(k)

Eh(k)

U(k)

γ

›

J(k + 1)

Critic Network

Critic Network

x(t)

x(t + 1)

FIGURE 4 Forward-in-time approach.

idea to the case of nonlinear continuous-time systems where a

recursive method is used to obtain the optimal control of contin-

uous system by successively solving the generalized Hamilton–

Jacobi–Bellman (GHJB) equation, and then, updating the control

action if an admissible initial control is given.

Although the GHJB equation is linear and easier to solve than

HJB equation, no general solution for GHJB is demonstrated.

Therefore, successful application of the successive approximation

method was limited until the novel work of Beard et al. [15]

where they used a Galerkin spectral approximation method at

each iteration to find approximate solutions to the GHJB equa-

tions. And then Beard and Saridis [14] employed a series of poly-

nomial functions as basic functions to solve the approximate

GHJB equation in continuous time but this method requires the

computation of a large number of integrals and it is not obvious

how to handle explicit constraints on the controls. In [79], the

γ –1

U(k)

›

J(k+1)

Eh(k)

›

J(k)

Critic Network

Critic Network

x(k + 1)

x(k)

FIGURE 5 Backward-in-time approach.

MAY 2009 | IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE 43

�

Equations (3) and (7) are called the optimality

equations of dynamic programming which are the

basis for implementation of dynamic programming.

method, two action networks and one critic net-

work are used that are adaptively tuned in forward

time using adaptive critic methods without the

system model information. The convergence anal-

ysis is also given to guarantee the cost function to

reach the saddle point of the game.

HJB equations are motivated and proven on time scales. The

authors connected the calculus of time scales and stochastic con-

trol via ADP algorithm and further pointed out three significant

directions for the investigation of ADP on time scales. Park [68]

employed interpolating wavelets as the basic functions. On the

other hand, Lewis and Abu-Khalaf presented how to formulate

the associated Hamilton–Jacobi–Isaac (HJI) equation using special

nonquadratic supply rates to obtain the nonlinear state feedback

control in [9]. Next, the fixed-final-time-constrained optimal

control of nonlinear systems is studied in [22], [23] based on the

neural network solution of the GHJB equation. In order to

enhance learning speed and final performance, Wiering and Has-

selt combined multiple different reinforcement learning algo-

rithms to design and implement four different ensemble methods

in [98]. In [41], a new algorithm for the closed loop parallel opti-

mal control of weakly coupled nonlinear systems is developed

using the successive Galerkin approximation. In [45], the author

inspired researchers to develop the experience-based approach

which selected a controller that is appropriate to the current situ-

ation from a repository of existing controller solutions.

Although many papers have discussed the GHJB method

for continuous-time systems, there is very minimal work avail-

able on the GHJB method for discrete-time nonlinear systems.

Discrete-time version of the approximate GHJB-equation-

based control is important since all the controllers are typically

implemented by using embedded digital hardware. In [21] a

successive approximation method using GHJB equation is pro-

posed to solve the near-optimal control problem for affine

nonlinear discrete-time systems, which requires small perturba-

tion assumption and an initially stable policy. The theory of

GHJB in discrete-time has also been applied to the linear dis-

crete-time case which indicates that the optimal control is

nothing but the solution of the standard Riccati equation.

On the other hand, in [19], Bradtke et al. implemented a

Q-learning policy iteration method for the discrete-time linear

quadratic optimal control problem which required an initial

stable policy. Furthermore, Landelius [44] applied HDP, DHP,

ADHDP and ADDHP techniques to the discrete-time linear

quadratic optimal control problem without the initial stable

conditions and discussed their convergence.

Based on the work of [44], the improvement of ADP to the

discrete-time linear quadratic zero-sum game that appearing in

the H` optimal control problem is concerned in [2], [4]. The

optimal strategies for discrete-time quadratic zero-sum games

related to the H` optimal control problem are solved in forward

time. The idea is to solve for an action dependent cost function

Q(x, u, w) of the zero-sum game instead of solving for the state

dependent cost function J(x) which satisfies a corresponding

game algebraic Riccati equation (GARE). Using the Kronecker

44 IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE | MAY 2009

with the cost function

(12)

(13)

Moreover, in [3], a greedy HDP iteration scheme is pro-

posed for solving the optimal control problem for nonlinear

discrete-time systems with known mathematical model, which

does not require an initially stable policy. The discrete-time

system can be written as

x1k 1 12 5 f 1x1k22 1 g1x1k22u1k2,

1xT1i2Qx1i2 1 u T1i2Ru1i22,

J1x1k22 5 a1`

1x2 5 0, define

1x1k22 5 2

R21gT1x1k22c 'Vi

µ ui

1x1k22 5 xT1k2Qx1k2 1 u i

1x1k 1 122

where Q [ Rn3n and R [ Rn3n are positive definite matri-

ces. Similar to [78], an iterative process, which is referred as

Heuristic Dynamic Programming (HDP, cf. [95]), is pro-

posed to obtain the optimal control law. Starting from

V0

1x1k 1 122

d T

'x1k 1 12

1x1k22

T1k21x1k22Rui

,

Vi11

i5k

1

2

1 Vi

(14)

where x(k 1 1) 5 f (x(k)) 1 g(x(k)) ui(x(k)). Al-Tamimi and

Lewis [3] also provided a proof to show the cost function

converges to the optimal one satisfying discrete-time Hamil-

ton-Jacobi-Bellman (DT HJB). Zhang, Wei and Luo [104]

applied the greedy iterative HDP algorithm to solve the opti-

mal tracking problem. In [104], a new performance index is

introduced to obtain a better tracking results. Using a system

transformation, the optimal tracking problem is changed into

an optimal regulator problem and then the greedy iterative

HDP algorithm is introduced to obtain the optimal control for

the transformed system. In [105], Zhang and Luo proposed a

iteration scheme without requirement of initial stable policy,

and proved that the cost function sequence will converge to

the optimal cost function when the number of iteration steps

goes to infinity. And the critic and action networks can be

tuned adaptively without the system plant information via a

model network. On the other hand, the optimal control prob-

lem for linear continuous-time systems without initial stable

policy is studied in [88].

Murray et al. [63] proposed an iterative ADP scheme

for continuous-time nonlinear systems with respect to

quadratic cost function and succeeded to improve the

autolanding control of aircraft. The iteration is required to

begin with an initial stable policy, and after each iteration

the cost function is updated. So the iterative policy is also

�

2 5 x0,

called “cost iteration”. The system is described by the fol-

lowing continuous-time differential equation

#

x

5 F1x2 1 B1x2u, x1t0

U1x1 t2, u1 t22dt,

(15)

with the cost function

J1x2 53 `

where U1x, u2 5 q1x2 1 u Tr1x2u is a nonnegative function

(16)

t0

and r (x) . 0. Similar to [78], an iterative process is proposed to

obtain the control law. In this case, the optimal control can be

simplified to

.

(17)

u *1x2 5 2

1x2 5 2

1

2

1

2

r 211x2B T1x2c dJ *1x2

d T

1x2

r 211x2B T1x2c dJi

d T

dx

Starting from any stable Lyapunov function J0 (or alternatively,

starting from an arbitrary stable controller u0 ) and replacing J *

by Ji, (17) becomes

,

dx

(18)

ui

where Ji 5 et0

1`U(xi21, ui21)dt is the cost of the trajectory

xi21(t) of plant (15) under the input u(t) 5 ui21(t). Furthermore,

Murray et al. gave the convergence analysis of the iterative ADP

scheme and the stability proof of the system. Before that most of

the ADP analysis is based on the Riccati equation for linear sys-

tems. In [8], based on the work of Lyshevski [58], [59], an iterative

ADP method is used to obtain an approximate solution of the cost

function of the HJB equation using neural networks (NNs). A

monotonic odd function is introduced to change the saturating

actuators into a nonsaturating one. This in turn results in a nearly

optimal constrained input state feedback controller suitable for sat-

urated actuators. Different from the iterative ADP scheme in [63],

the iterative scheme in [8] adopt policy iteration which means

that after each iteration the policy (or control) function is updated.

The convergence and stability analysis can also be found in [8].

Vrabie et al. [88] proposed a new policy iteration technique to

solve online the continuous-time LQR problem for a partially

model-free system (internal dynamics unknown). They presented

an online adaptive critic algorithm in which the actor performs

continuous-time control, whereas the critic’s correction of the

actor’s behavior is discrete in time until best performance is

obtained. The critic evaluates the actor’s performance over a peri-

od of time and formulates it in a parameterized form. Policy

update is a function of the critic’s evaluation of the actor. Conver-

gence of the proposed algorithm is established by proving equiva-

lence with an established algorithm [42]. Numerical results using

the short period dynamics of an F-16 aircraft are presented. In

[32], a novel linear parameter-varying (LPV) approach for design-

ing the ADP neural network controllers is presented. The control

performance and the closed-loop stability of the LPV regime are

formulated as a set of design equations that are linear with respect

to matrix functions of NN parameters.

Applications

As for industrial applications of ADP algorithms, focuses have

been on missile systems [18], autopilot [31], [50], generators

[67], power systems [62], [72], communication systems [55],

biochemical processes [57] and so on. In [103], an improved

reinforcement learning methods are proposed to perform navi-

gation in dynamic environments. The difficulties of the tradi-

tional reinforcement learning are presented in autonomous

navigating and three effective solutions are proposed to over-

come these difficulties which are forgetting Q-learning, feature

based Q-learning, and hierarchical Q-learning, respectively.

Forgetting Q-learning is proposed to improve performance in

a dynamic environment by maintaining possible navigation

paths that would be considered unacceptable by traditional

Q-learning. Hierarchical Q-learning is proposed as a method

of subdividing the problem domain into a set of more manage-

able ones. Feature based Q-learning is proposed as a method of

enhancing hierarchical Q-learning. In [27], an incoherent con-

trol scheme for accomplishing the state control of a class of

quantum systems which have wavefunction-controllable sub-

spaces is proposed. This incoherent control scheme provides an

alternative quantum engineering strategy for locally controlla-

ble quantum systems. In the scheme, the initial states can be

unknown identical states, and the controlled system is not nec-

essarily initially controllable.

Applications of adaptive critics in the continuous-time

domain were mainly done by using discretization and well-

established discrete-time results (e.g., [86]). Various schemes of

continuous-time dynamic reinforcement learning were dis-

cussed in Campos and Lewis [20] and Rovithakis [74], where

the derivative of Lyapunov function is approximated.

Lu, Si and Xie [56] applied a direct heuristic dynamic pro-

gramming (direct HDP) to a large power system stability con-

trol problem. A direct HDP controller learns to cope with

model deficiencies for nonlinearities and uncertainties on the

basis of real system responses instead of a system model. Ray

et al. [73] reported a comparison of adaptive critic-based and

classical wide-area controllers for power systems. Liu et al. [53]

demonstrated a good engine torque and exhaust air-fuel ratio

(AFR) control with adaptive critic techniques for an engine

application. The design was based on neural networks to auto-

matically learn the inherent dynamics and it advanced the

development of a virtual powertrain to improve its perfor-

mance during the actual vehicle operations.

Enns and Si [29] presented an article on model-free

approach to helicopter control. Jagannathan [81] has extended

stability proofs for systems with observers in the feedback

loop and applied to spark engine EGR operation on the basis

of reinforcement learning dual control [37]. Al-Tamimi, Abu-

Khalaf and Lewis [2] used HDP and DHP structures to solve

problems formulated with game theoretic notions. Their for-

mulation leads to a forward-in-time reinforcement learning

algorithm that converges to the Nash equilibrium of the cor-

responding zero-sum game and they have provided perfor-

mance comparisons with an F-16 autopilot problem.

MAY 2009 | IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE 45

�

Al-Tamimi et al. [6], [7] extended these results to a model-

free environment for linear systems for the control of a power

system generator. In these papers, they presented online mod-

el-free adaptive critic schemes to solve optimal control prob-

lems in both discrete-time and continuous-time domains for

linear systems with unknown dynamics. In the discrete-time

case, the solution process leads to solving the underlying game

algebraic Riccati equation (GARE) of the corresponding

optimal control problem or zero-sum game. In the continu-

ous-time domain, the ADP scheme solves the underlying

ARE of the optimal control problem. It is shown that contin-

uous-time ADP scheme is nothing but a quasi-Newton

method to solve the ARE. Either in continuous-time domain

or in discrete-time domain, the adaptive critic algorithms are

easy to implement the fact that initial policies are not required

to be stabilizing. For the model-based paper, the authors have

proved the convergence of the presented algorithm.

Concluding Remarks

In this article, we presented the variations on the structure of

ADP schemes and stated the development on the iterative ADP

algorithms, and at last we summarized industrial applications of

ADP schemes. In the future, the study of ADP algorithms for

nonlinear continuous-time systems without the requirement of

initially stable policy is important. And also how to extend the

ADP algorithms to time-variant and time-delay uncertain non-

linear systems with stability guarantee is another interesting

topic. In addition, practical applications of ADP with significant

economic impact are of great demand.

Acknowledgment

The authors would like to thank Dr. Ning Jin and Dr. Qinglai

Wei for their help in preparing this manuscript.

References

[1] J. S. Albus, “A new approach to manipulator control: The cerebellar model articulation

controller (CMAC),” Trans. ASME, J. Dyn. Syst., Meas., Control, vol. 97, pp. 220–227,

Sept. 1975.

[2] A. Al-Tamimi, M. Abu-Khalaf, and F. L. Lewis, “Adaptive critic designs for discrete-

time zero-sum games with application to H` control,” IEEE Trans. Syst., Man, Cybern. B,

vol. 37, no. 1, pp. 240–247, Feb. 2007.

[3] A. Al-Tamimi and F. L. Lewis, “Discrete-time nonlinear HJB solution using approxi-

mate dynamic programming: convergence proof,” in Proc. IEEE Int. Symp. Approximate

Dynamic Programming and Reinforcement Learning, Honolulu, HI, Apr. 2007, pp. 38–43.

[4] A. Al-Tamimi, F. L. Lewis, and M. Abu-Khalaf, “Model-free Q-learning designs for

linear discrete-time zero-sum games with application to H-infinity control,” Automatica,

vol. 43, no. 3, pp. 473–481, 2007.

[5] A. Al-Tamimi, F. L. Lewis, and M. Abu-Khalaf, “Discrete-time nonlinear HJB solu-

tion using approximate dynamic programming: Convergence proof,” IEEE Trans. Syst.,

Man., Cybern. B, vol. 38, no. 4, pp. 943–949, Aug. 2008.

[6] A. Al-Tamimi, F. L. Lewis, and Y. Wang, “Model-free H-infinity loadfrequency

controller design for power systems,” in Proc. IEEE Int. Symp. Intelligent Control, 2007,

pp. 118–125.

[7] A. Al-Tamimi, D. Vrabie, M. Abu-Khalaf, and F. L. Lewis, “Model-free approximate

dynamic programming schemes for linear systems,” in Proc. IJCNN, 2007, pp. 371–378.

[8] M. Abu-Khalaf and F. L. Lewis, “Nearly optimal control laws for nonlinear systems

with saturating actuators using a neural network HJB approach,” Automatica, vol. 41, no.

5, pp. 779–791, 2005.

[9] M. Abu-Khalaf, F. L. Lewis, and J. Huang, “Policy iterations on the Hamilton-Jacobi-

Isaacs equation for state feedback control with input saturation,” IEEE Trans. Automat.

Contr., vol. 51, no. 12, pp. 1989–1995, Dec. 2006.

[10] S. N. Balakrishnan and V. Biega, “Adaptive-critic-based neural networks for aircraft

optimal control,” J. Guid. Control Dyn., vol. 19, pp. 893–898, July 1996.

[11] S. N. Balakrishnan, J. Ding, and F. L. Lewis, “Issues on stability of ADP feedback

controllers for dynamical systems,” IEEE Trans. Syst., Man., Cybern. B, vol. 38, no. 4, pp.

913–917, Aug. 2008.

46 IEEE COMPUTATIONAL INTELLIGENCE MAGAZINE | MAY 2009

[12] A. G. Barto, R. S. Sutton, and C. W. Anderson, “Neuronlike adaptive elements that

can solve difficult learning control problems,” IEEE Trans. Syst., Man., Cybern., vol. 13,

no. 5, pp. 835–846, 1983.

[13] R. W. Beard, “Improving the closed-loop performance of nonlinear systems,” Ph.D. disserta-

tion, Elect. Eng. Dept., Rensselaer Polytech. Inst., Troy, NY, 1995.

[14] R. W. Beard and G. N. Saridis, “Approximate solutions to the timeinvariant Hamil-

ton-Jacobi-Bellman equation,” J. Optim. Theory Appl., vol. 96, no. 3, pp. 589–626, 1998.

[15] R. W. Beard, G. N. Saridis, and J. T. Wen, “Galerkin approximations of the gener-

alized Hamilton-Jacobi-Bellman equation,” Automatica, vol. 33, no. 12, pp. 2159–2177,

1997.

[16] R. E. Bellman, Dynamic Programming. Princeton, NJ: Princeton Univ. Press, 1957.

[17] D. P. Bertsekas and J. N. Tsitsiklis, Neuro-Dynamic Programming. Belmont, MA: Ath-

ena Scientific, 1996.

[18] D. P. Bertsekas, M. L. Homer, D. A. Logan, S. D. Patek, and N. R. Sandell, “Missile

defense and interceptor allocation by neuro-dynamic programming,” IEEE Trans. Syst.,

Man, Cybern. A, vol. 30, no. 1, pp. 42–51, Jan. 2000.

[19] S. J. Bradtke, B. E. Ydestie, and A. G. Barto, “Adaptive linear quadratic control

using policy iteration,” in Proc. American Control Conf., Baltimore, MD, June 1994, pp.

3475–3476.

[20] J. Campos and F. L. Lewis, “Adaptive critic neural network for feedforward compen-

sation,” in Proc. American Control Conf., San Diego, CA, June 1999, pp. 2813–2818.

[21] Z. Chen and S. Jagannathan, “Generalized Hamilton-Jacobi-Bellman formulation-

based neural network control of affine nonlinear discrete-time systems,” IEEE Trans.

Neural Networks, vol. 19, no. 1, pp. 90–106, Jan. 2008.

[22] T. Cheng, F. L. Lewis, and M. Abu-Khalaf, “Fixed-final-time-constrained optimal

control of nonlinear systems using neural network HJB approach,” IEEE Trans. Neural

Networks, vol. 18, no. 6, pp. 1725–1736, Nov. 2007.

[23] T. Cheng, F. L. Lewis, and M. Abu-Khalaf, “A neural network solution for fixed-

final time optimal control of nonlinear systems,” Automatica, vol. 43, no. 3, pp. 482–490,

2007.

[24] J. Dalton and S. N. Balakrishnan, “A neighboring optimal adaptive critic for missile

guidance,” Math. Comput. Model., vol. 23, pp. 175–188, Jan. 1996.

[25] J. Dankert, Y. Lei, and J. Si, “A performance gradient perspective on approximate

dynamic programming and its application to partially observable markov decision pro-

cesses,” in Proc. Int. Symp. Intelligent Control, Munich, Oct. 2006, pp. 458–463.

[26] A. K. Deb, Jayadeva, M. Gopal, and S. Chandra, “SVM-based tree-type neural net-

works as a critic in adaptive critic designs for control,” IEEE Trans. Neural Networks, vol.

18, no. 4, pp. 1016–1030, July 2007.

[27] D. Dong, C. Chen, T. J. Tarn, A. Pechen, and H. Rabitz, “Incoherent control of

quantum systems with wavefunction-controllable subspaces via quantum reinforcement

learning,” IEEE Trans. Syst., Man, Cybern. B, vol. 38, no. 4, pp. 957–962, Aug. 2008.

[28] S. E. Dreyfus and A. M. Law, The Art and Theory of Dynamic Programming. New York,

NY: Academic, 1977.

[29] R. Enns and J. Si, “Apache helicopter stabilization using neural dynamic program-

ming,” J. Guid. Control Dyn., vol. 25, no. 1, pp. 19–25, 2002.

[30] R. Enns and J. Si, “Helicopter trimming and tracking control using direct neural dy-

namic programming,” IEEE Trans. Neural Networks, vol. 14, no. 4, pp. 929–939, July 2003.

[31] S. Ferrari and R. F. Stengel, “Online adaptive critic flight control,” J. Guid. Control

Dyn., vol. 27, no. 5, pp. 777–786, 2004.

[32] S. Ferrari, J. E. Steck, and R. Chandramohan, “Adaptive feedback control by con-

strained approximate dynamic programming,” IEEE Trans. Syst., Man., Cybern. B, vol.

38, no. 4, pp. 982–987, Aug. 2008.

[33] J. Govindhassamy, S. Mcloone, and G. Irwin, “Second-order training of adaptive

critics for online process control,” IEEE Trans. Syst., Man., Cybern. B, vol. 35, no. 2, pp.

381–385, Apr. 2005.

[34] T. Hanselmann, L. Noakes, and A. Zaknich, “Continuous-time adaptive critics,”

IEEE Trans. Neural Networks, vol. 18, no. 3, pp. 631–647, May 2007.

[35] R. Havira and J. Lewis, “Computation of quantized controls using differential dy-

namic programming,” IEEE Trans. Automat. Contr., vol. 17, no. 2, pp. 191–196, Apr.

1972.

[36] P. He and S. Jagannathan, “Reinforcement learning-based output feedback control

of nonlinear systems with input constraints,” IEEE Trans. Syst., Man., Cybern. B, vol. 35,

no. 1, pp. 150–154, Jan. 2005.

[37] P. He and S. Jagannathan, “Reinforcement learning neural-network-based control-

ler for nonlinear discrete-time systems with input constraints,” IEEE Trans. Syst., Man.,

Cybern. B, vol. 37, no. 2, pp. 425–436, Apr. 2007.

[38] Z. G. Hou and C. P. Wu, “A dynamic programming neural network for large-scale

optimization problems,” Acta Autom. Sin., vol. 25, no. 1, pp. 46–51, 2005.

[39] H. Javaherian, D. Liu, Y. Zhang, and O. Kovalenko, “Adaptive critic learning tech-

niques for automotive engine control,” in Proc. American Control Conf., Boston, MA, June

2004, pp. 4066–4071.

[40] N. Jin, D. Liu, T. Huang, and Z. Pang, “Discrete-time adaptive dynamic program-

ming using wavelet basis function neural networks,” in Proc. IEEE Int. Symp. Approximate

Dynamic Programming and Reinforcement Learning, Honolulu, HI, Apr. 2007, pp. 135–142.

[41] Y. J. Kim and M. T. Lim, “Parallel optimal control for weakly coupled nonlinear

systems using successive Galerkin approximation,” IEEE Trans. Automat. Contr., vol. 53,

no. 6, pp. 1542–1547, July 2008.

[42] D. Kleinman, “On a iterative technique for Riccati equation computations,” IEEE

Trans. Automat. Contr., vol. 13, no. 1, pp. 114–115, Feb. 1968.

[43] N. V. Kulkarni and K. KrishnaKumar, “Intelligent engine control using an adaptive

critic,” IEEE Trans. Contr. Syst. Technol., vol. 11, pp. 164–173, Mar. 2003.

[44] T. Landelius, “Reinforcement learning and distributed local model synthesis,” Ph.D. dis-

sertation, Linkoping University, Sweden, 1997.

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc