Sentiment Analysis For Short Chinese Text Based On Character-level Methods

Yanxin An, Xinhuai Tang

School of Software Engineering

Shanghai Jiao Tong University

Shanghai, China

xm ayx@sjtu.edu.cn, tang-xh@sjtu.edu.cn

Bin Xie

Department of System and Platform

The 32nd Research Institute of CETC

Shanghai, China

xiebin sh@163.com

Abstract—Analyzing the sentiment of user-generated reviews is an important way to obtain timely feedback from customers. Many semantic methods and machine learning algorithms have been applied to this task. However, most of them are based on word-level features. Segmenting a sentence into words is much harder in languages written without word delimiters, such as Chinese and Thai, than in languages such as English. Thus, in this paper, we propose several methods based only on character-level features to avoid this problem. We collect reviews of three different kinds of products from the Internet as data sets and test our methods on them to show their effectiveness.

Keywords—sentiment analysis, character-level features, machine learning, deep learning.

I. INTRODUCTION

Sentiment analysis, also known as opinion mining, aims at extracting people’s attitudes from text. Thanks to the rapid development of the Internet, millions of user-generated reviews of products and services are posted online every day. By analyzing the sentiment of such reviews on e-commerce or social websites, companies can easily obtain timely feedback, directly from customers, about the products and services that they or their competitors provide, and thus make improvements.

Sentiment analysis is a typical natural language processing task, and several methods have been applied to it.

On the one hand, some researchers have investigated this topic using semantic knowledge of the given texts. Baccianella et al. [1] described SENTIWORDNET, which scores each synset of WordNet on three aspects: positivity, negativity, and objectivity. Turney and Littman [2] proposed a method based on pointwise mutual information (PMI) and latent semantic analysis (LSA) to infer the semantic orientation of a word. Narayanan et al. [3] studied sentiment analysis of conditional sentences using linguistic knowledge. Yuen et al. [4] combined the PMI-based method of [2] with Chinese morphemes, achieving high precision and recall in determining the semantic orientation of Chinese words. Using the polar lexical items found by Yuen et al. [4], Tsou et al. [5] proposed an algorithm that explores the spread, density, and intensity of polar lexical items, and classified news articles about political figures with good performance. Ku and Chen [6] compiled a Chinese sentiment word dictionary called NTUSD, which is widely used today, and determined the sentiment polarity of a sentence by identifying the sentiment words, negation operators, and opinion holders in it. Other studies [7]–[9] are based on HowNet, another well-known Chinese sentiment dictionary.

978-1-4673-9077-4/17/$31.00 © 2017 IEEE

On the other hand, some researchers have applied machine learning algorithms to build sentiment analysis models. Pang et al. [10] experimented with three standard algorithms (Naive Bayes classification, maximum entropy classification, and support vector machines (SVM)) and found that SVM performs best in most situations. Pak and Paroubek [11] collected a corpus from Twitter and developed a sentiment classifier based on it. Nakagawa et al. [12] used CRFs with hidden variables in a dependency tree-based method that considers interactions between words. Zou et al. [13] exploited sentence syntax trees to enrich simple bag-of-words features and obtained better results with SVM on the data sets of Pang et al. [10]. Similarly, machine learning methods [14]–[16] have been applied to Chinese microblogs and product reviews with good results.

However, because written Chinese has no word delimiters (such as spaces), word segmentation is an essential step in Chinese natural language processing; it is a difficult task in itself and introduces complexity and uncertainty. To date, almost all research on Chinese sentiment analysis is word-based, so none of it avoids this problem. Fortunately, methods using character-level features for language processing have achieved good results in recent years. Nakov and Tiedemann [17] combined word-level and character-level models for machine translation between closely related languages. Kanaris et al. [18] used character-level n-grams in anti-spam filtering. Zhang et al. [19] proposed a method based on a character-level convolutional neural network (CNN) for text classification.

Applying machine learning methods purely to character-level features lets us process Chinese text without any syntactic knowledge. In this paper, we implement several machine learning methods based on character-level features to analyze the sentiment polarity of short Chinese texts, which are increasingly common on today’s Internet. We also compare them with methods based on word-level features to see whether character-level features work well.

The remainder of this paper is organized as follows: Section II explains the proposed methods, including Naive Bayes, SVM, and CNN with character-level features; Section III describes the data sets used in our experiments and discusses the results; Section IV concludes the paper.

II. THE PROPOSED METHODS

We first introduce the sign function sign(x) and the indicator function I(x), which are used in the following descriptions of our methods:

$$\mathrm{sign}(x) = \begin{cases} +1, & x \ge 0 \\ -1, & x < 0 \end{cases}$$

$$I(x) = \begin{cases} 1, & x \text{ is true} \\ 0, & x \text{ is false} \end{cases}$$

A. Character-level n-gram model

In general, an n-gram is a contiguous sequence of n items from a given piece of text, and the item is usually a word. In this paper, we choose a finer unit, the character, which means we extract all substrings of length n from the text as features. Table I gives an example of this design.

TABLE I

AN EXAMPLE OF CHARACTER-LEVEL N-GRAMS

| | English | Chinese |
|---|---|---|
| sentence | I have a pen. | 我有一支钢笔。(a) |
| words | I/have/a/pen | 我/有/一支/钢笔 |
| word-level n-grams (n=2) | I have/have a/a pen | 我有/有一支/一支钢笔 |
| character-level n-grams (n=2) | I / h/ha/av/ve/e / a/a / p/pe/en/n. | 我有/有一/一支/支钢/钢笔/笔。 |

(a) It means “I have a pen” in English.
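The n = 2 rows of Table I can be reproduced with a short Python sketch. The helper names `char_ngrams` and `word_ngrams` are hypothetical, introduced only for illustration; the word-level helper takes an already segmented sentence, since segmentation itself (done with HanLP in Section III-B) is outside the scope of this sketch.

```python
from __future__ import annotations


def char_ngrams(text: str, n: int) -> list[str]:
    """All contiguous substrings of length n, in order (repeats are kept)."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def word_ngrams(words: list[str], n: int, sep: str = " ") -> list[str]:
    """Word-level n-grams over an already segmented sentence."""
    return [sep.join(words[i:i + n]) for i in range(len(words) - n + 1)]


# Reproduce the n = 2 rows of Table I.
print("/".join(word_ngrams(["I", "have", "a", "pen"], 2)))            # I have/have a/a pen
print("/".join(word_ngrams(["我", "有", "一支", "钢笔"], 2, sep="")))  # 我有/有一支/一支钢笔
print("/".join(char_ngrams("我有一支钢笔。", 2)))                      # 我有/有一/一支/支钢/钢笔/笔。
```

Note that the character-level English row keeps spaces and punctuation as ordinary characters, which is why items such as "I " and "n." appear.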

Let $D_1, D_2, \cdots, D_N$ be the N documents in the training set, and let $G_{d,r}$ be the set of all distinct substrings of length r in document d, i.e., all distinct r-grams in d. By going through all documents in the training set and collecting their substrings of length $l_1$ to $l_2$, we obtain $N \times (l_2 - l_1 + 1)$ sets of strings. Finally, we combine them into a dictionary S, whose size we denote by k:

$$S = \bigcup_{r=l_1}^{l_2} \bigcup_{i=1}^{N} G_{D_i,r} = \{s_1, s_2, \cdots, s_k\}$$
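A minimal Python sketch of this dictionary construction, assuming documents are plain strings. The function names are hypothetical, and sorting S is our own choice: the paper does not fix an order for $s_1, \cdots, s_k$.

```python
from __future__ import annotations


def distinct_ngrams(doc: str, r: int) -> set[str]:
    """G_{d,r}: the set of distinct substrings of length r in document d."""
    return {doc[i:i + r] for i in range(len(doc) - r + 1)}


def build_dictionary(docs: list[str], l1: int, l2: int) -> list[str]:
    """S = union of G_{D_i,r} over all documents D_i and all r in [l1, l2].

    Returned sorted, so position j (0-based) holds s_{j+1}.
    """
    vocab: set[str] = set()
    for r in range(l1, l2 + 1):
        for doc in docs:
            vocab |= distinct_ngrams(doc, r)
    return sorted(vocab)
```

With the bounds used in Section III-B ($l_1 = 2$, $l_2 = 5$), the single document 我有一支钢笔。 contributes 6 + 5 + 4 + 3 = 18 entries, because all of its characters are distinct.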

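When vectorizing the features of document d, the features should be independent of the length of the document. We therefore design two different strategies to define a feature vector $x = (x^{(1)}, x^{(2)}, \cdots, x^{(k)})^T$. The first uses the presence of the items, in which $x^{(i)} = I(s_i \text{ appears in } d)$. The second is based on the frequency of the items, represented by tf-idf, in which $x^{(i)} = \mathrm{tf}(s_i, d) \times \mathrm{idf}(s_i, D_1, D_2, \cdots, D_N)$ and

$$\mathrm{tf}(s, d) = \frac{\text{number of times } s \text{ appears in } d}{\sum_{r=l_1}^{l_2} \mathrm{count}(r\text{-grams in } d)}$$

$$\mathrm{idf}(s, D_1, D_2, \cdots, D_N) = \log \frac{N}{\sum_{i=1}^{N} I(s \in D_i)}$$

where the denominator of idf is the number of training documents that contain s.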
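The two strategies can be sketched in Python as follows. This follows the equations above literally; it is not the Spark MLlib code used in the experiments (library implementations may use smoothed idf variants), and all function names are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter


def ngrams_in_range(doc: str, l1: int, l2: int) -> list[str]:
    """Every r-gram occurrence in doc for l1 <= r <= l2 (repeats kept)."""
    return [doc[i:i + r] for r in range(l1, l2 + 1) for i in range(len(doc) - r + 1)]


def presence_vector(doc: str, vocab: list[str], l1: int, l2: int) -> list[int]:
    """x^(i) = I(s_i appears in d)."""
    grams = set(ngrams_in_range(doc, l1, l2))
    return [1 if s in grams else 0 for s in vocab]


def tfidf_vector(doc: str, vocab: list[str], train_docs: list[str],
                 l1: int, l2: int) -> list[float]:
    """x^(i) = tf(s_i, d) * idf(s_i, D_1, ..., D_N)."""
    counts = Counter(ngrams_in_range(doc, l1, l2))
    total = sum(counts.values())                    # denominator of tf
    n = len(train_docs)
    vec = []
    for s in vocab:
        tf = counts[s] / total if total else 0.0
        df = sum(1 for d in train_docs if s in d)   # training documents containing s
        idf = math.log(n / df) if df else 0.0
        vec.append(tf * idf)
    return vec
```

For example, with training documents 好评好评 and 差评 and $l_1 = l_2 = 2$, the document 好评好评 has presence vector (1, 0, 1) over the dictionary (好评, 差评, 评好), and its tf-idf weight for 好评 is (2/3) log 2.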

B. Naive Bayes

Let $X = (x_1, x_2, \cdots, x_N)^T$, where $x_i$ is the feature vector of training document $D_i$, and let $Y = (y_1, y_2, \cdots, y_N)^T$, where

$$y_i = \begin{cases} 1, & D_i \text{ is labeled positive} \\ 0, & D_i \text{ is labeled negative} \end{cases}$$

Then we can estimate $P(x^{(r)} \mid y)$ and $P(y)$ by maximum likelihood:

$$P(x^{(r)} = a \mid y = c) = \frac{\sum_{i=1}^{N} I(x_i^{(r)} = a, y_i = c)}{\sum_{i=1}^{N} I(y_i = c)}, \qquad P(y = c) = \frac{\sum_{i=1}^{N} I(y_i = c)}{N}$$

Given a document d with feature vector x, we can estimate the probability that it is labeled c with Bayes’ theorem:

$$P(y = c \mid x) = \frac{P(y = c) \cdot P(x \mid y = c)}{P(x)} = \frac{P(y = c) \cdot P(x^{(1)} x^{(2)} \cdots x^{(k)} \mid y = c)}{P(x)} = \frac{P(y = c) \prod_{i=1}^{k} P(x^{(i)} \mid y = c)}{P(x)}$$

where the last step uses the conditional independence assumption, and P(x) does not depend on c, so it plays no role in determining the label. Although the features of our character-level n-gram model clearly violate the conditional independence assumption, we still try Naive Bayes, since previous research [20] found that it also works well with some highly dependent features.
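A minimal sketch of these estimates for binary presence features (values in {0, 1}), with labels 1/0 as defined for Y. The parameter `alpha` is our addition: `alpha=0` gives the maximum-likelihood estimates exactly as written above, while `alpha=1` gives the Laplace smoothing mentioned in Section III-B. The product is computed as a sum of logarithms to avoid underflow with thousands of features; function names are hypothetical.

```python
from __future__ import annotations

import math


def train_nb(X: list[list[int]], y: list[int], alpha: float = 1.0):
    """Estimate P(y=c) and P(x^(r)=a | y=c) for binary features a in {0, 1}."""
    n, k = len(X), len(X[0])
    prior, cond = {}, {}
    for c in sorted(set(y)):
        rows = [row for row, yi in zip(X, y) if yi == c]
        prior[c] = len(rows) / n
        for r in range(k):
            for a in (0, 1):
                hits = sum(1 for row in rows if row[r] == a)
                cond[(r, a, c)] = (hits + alpha) / (len(rows) + 2 * alpha)
    return prior, cond


def predict_nb(x: list[int], prior: dict, cond: dict) -> int:
    """argmax_c log P(y=c) + sum_r log P(x^(r) | y=c); P(x) is the same for every c."""
    scores = {}
    for c, p_c in prior.items():
        score = math.log(p_c)
        for r, a in enumerate(x):
            p = cond[(r, a, c)]
            score += math.log(p) if p > 0 else -math.inf   # zero probability only if alpha == 0
        scores[c] = score
    return max(scores, key=scores.get)
```

Dropping P(x) is safe because only the argmax over c is needed, exactly as argued above.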

C. Support vector machines

Support vector machines (SVMs) are effective models for binary classification. In this paper, given a document d with feature vector x and label y, where

$$y = \begin{cases} +1, & d \text{ is labeled positive} \\ -1, & d \text{ is labeled negative} \end{cases}$$

we construct a hyperplane $w \cdot x + b = 0$ with parameters w and b, and estimate the class with the sign function:

$$y = \mathrm{sign}(w \cdot x + b)$$

With the training set $X = (x_1, x_2, \cdots, x_N)^T$ and $Y = (y_1, y_2, \cdots, y_N)^T$, we can calculate w and b by solving the optimization problem

$$\max_{w,b} \frac{1}{\|w\|} \quad \text{s.t.} \quad y_i (w \cdot x_i + b) - 1 \ge 0, \quad i = 1, 2, \cdots, N$$

The vectors $x_i$ in X for which the constraint holds with equality are called support vectors.
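The hard-margin problem above has no solution when the training data are not linearly separable, and Section III-B states that the SVMs are trained by gradient descent for up to 200 iterations. The sketch below therefore swaps in the common soft-margin relaxation: it minimizes $\frac{\lambda}{2}\|w\|^2 + \frac{1}{N}\sum_i \max(0, 1 - y_i(w \cdot x_i + b))$ by full-batch subgradient descent. It is an illustration under these assumptions, not the Spark MLlib implementation; `lam` and `lr` are hypothetical hyperparameters.

```python
from __future__ import annotations


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X: list[list[float]], y: list[int],
                     lam: float = 0.01, lr: float = 0.1, iters: int = 200):
    """Subgradient descent on lam/2 * ||w||^2 + mean hinge loss; labels in {+1, -1}.

    The bias b is not regularized.
    """
    n, k = len(X), len(X[0])
    w, b = [0.0] * k, 0.0
    for _ in range(iters):
        gw = [lam * wj for wj in w]       # gradient of the L2 term
        gb = 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # margin violated: hinge term is active
                for j in range(k):
                    gw[j] -= yi * xi[j] / n
                gb -= yi / n
        w = [wj - lr * g for wj, g in zip(w, gw)]
        b -= lr * gb
    return w, b


def predict_svm(w: list[float], b: float, x: list[float]) -> int:
    """y = sign(w . x + b), with sign(0) = +1 as defined at the start of Section II."""
    return 1 if dot(w, x) + b >= 0 else -1
```

Maximizing $1/\|w\|$ is equivalent to minimizing $\frac{1}{2}\|w\|^2$; the hinge term replaces the hard constraints with a penalty for each violated margin.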

D. Convolutional neural networks

Convolutional neural networks (CNNs) are models based on multi-layer feature extraction; they have proven useful in computer vision applications. Typically, a CNN has several convolutional and pooling layers, followed by some fully-connected layers. A convolutional layer uses shared weights to extract features, while a pooling layer uses a maximum or average function to reduce the data size, which makes a deep network computationally tractable. Fully-connected layers work in the same way as layers in ordinary neural networks. Fig. 1 gives an illustration.

Fig. 1. Illustration of convolutional neural networks

TABLE II

THE DESIGN OF CONVOLUTIONAL LAYERS

| Layer | Feature | Kernel | Pool (maximum) |
|---|---|---|---|
| 1 | 1024 | 5 | 2 |
| 2 | 1024 | 5 | 2 |
| 3 | 1024 | 3 | N/A |
| 4 | 1024 | 3 | 2 |

TABLE III

THE DESIGN OF FULLY-CONNECTED LAYERS

| Layer | Input | Output |
|---|---|---|
| 1 | 1024 × 59 | 2048 |
| 2 | 2048 | 2 |
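As a consistency check between Table II and Table III, the sketch below tracks the sequence length through the convolutional layers. It assumes the input length of 500 fixed by the preprocessing in Section III-B, stride-1 convolutions without padding, and non-overlapping max pooling; the paper does not state strides or padding, so these are our assumptions. Under them, the output length is 59, matching the 1024 × 59 input of the first fully-connected layer.

```python
from __future__ import annotations


def conv_stack_lengths(length: int, layers: list[tuple[int, int | None]]) -> list[int]:
    """Sequence length after each convolutional layer (and its pooling, if any).

    Assumes stride-1 convolutions without padding and non-overlapping max pooling
    whose stride equals its window; pool=None means the layer has no pooling.
    """
    lengths = []
    for kernel, pool in layers:
        length = length - kernel + 1
        if pool is not None:
            length //= pool
        lengths.append(length)
    return lengths


# (kernel, pool) for layers 1-4 of Table II; every layer has 1024 feature maps.
TABLE_II = [(5, 2), (5, 2), (3, None), (3, 2)]
lengths = conv_stack_lengths(500, TABLE_II)
print(lengths)               # [248, 122, 120, 59]
print(1024 * lengths[-1])    # 60416 inputs to the first fully-connected layer
```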

III. EXPERIMENT

A. Data Sets

The three data sets used in this experiment are collected from e-commerce websites and contain reviews of laptops, books, and hotels, respectively. Each contains 2000 positive reviews and 2000 negative reviews. For our experiment, all reviews are preprocessed into input feature vectors or raw signal streams. From each set, we randomly pick 600 positive and 600 negative reviews as the test set; the remaining 2800 reviews form the training set.
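The per-class split can be sketched as below. The paper does not say how the random selection was implemented, so the function name, the fixed seed, and the use of Python's `random.Random` are assumptions made for reproducibility of the illustration.

```python
from __future__ import annotations

import random


def split_reviews(pos: list[str], neg: list[str], n_test: int = 600, seed: int = 0):
    """Randomly hold out n_test reviews per class for testing; the rest are for training.

    Labels follow the Naive Bayes convention of Section II-B: 1 = positive, 0 = negative.
    """
    rng = random.Random(seed)
    pos, neg = pos[:], neg[:]          # shuffle copies, not the caller's lists
    rng.shuffle(pos)
    rng.shuffle(neg)
    test = [(r, 1) for r in pos[:n_test]] + [(r, 0) for r in neg[:n_test]]
    train = [(r, 1) for r in pos[n_test:]] + [(r, 0) for r in neg[n_test:]]
    return train, test
```

With 2000 reviews per class, this yields 1200 test reviews and 2800 training reviews, both balanced between the classes.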

B. Implementation

In this experiment, we implement our three algorithms, character-level NB, character-level SVM, and character-level CNN, to test the effect of character-level features. For comparison, we also implement two word-level algorithms, word-level NB and word-level SVM. For the word-level methods, HanLP¹ is used to segment sentences. All the NBs and SVMs are implemented with Spark MLlib², while the CNN is implemented with Torch7³.

For the character-level methods, the lower bound $l_1$ is set to 2 and the upper bound $l_2$ to 5: most Chinese words are two or three characters long, so we use the minimum of these lengths as the lower bound and their sum as the upper bound. The word-level methods use a bigram model, and Laplace smoothing is applied in both NBs. Both SVMs are optimized by gradient descent for up to 200 iterations. The CNN is likewise trained by backpropagation for 200 iterations. Each of the five algorithms is trained and tested on each of the data sets described above separately.

1. https://github.com/hankcs/HanLP

2. http://spark.apache.org/

3. http://torch.ch/

For the CNN, we build a dictionary of the M distinct characters appearing in the training set, so that a given document can be transformed into raw signals with the following steps:

1) Cut the document to at most 500 characters and discard all characters after that.

2) Use the serial numbers (1 to M) of the characters in the dictionary to turn the document into a sequence of numbers; if a character is not in the dictionary, the number zero is inserted.

3) Pad the tail of the sequence with zeros so that it has a length of 500.

After preprocessing the training set, we use these sequences to train our network model, which is 6 layers deep, with 4 convolutional layers (Table II) and 2 fully-connected layers (Table III).
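The three preprocessing steps can be sketched in Python as follows. The paper does not specify how serial numbers are assigned to characters; sorting by code point is our assumption, and the function names are hypothetical.

```python
from __future__ import annotations


def build_char_dictionary(train_docs: list[str]) -> dict[str, int]:
    """Assign serial numbers 1..M to the M distinct characters of the training set.

    Sorting by code point is an arbitrary choice; 0 stays reserved.
    """
    chars = sorted({ch for doc in train_docs for ch in doc})
    return {ch: i + 1 for i, ch in enumerate(chars)}


def encode(doc: str, char_to_id: dict[str, int], max_len: int = 500) -> list[int]:
    """Steps 1-3: truncate, map characters to serial numbers (unknown -> 0), zero-pad."""
    ids = [char_to_id.get(ch, 0) for ch in doc[:max_len]]
    return ids + [0] * (max_len - len(ids))
```

Because the steps use zero both for unknown characters and for padding, the network receives the same signal for the two cases; the sketch keeps that behavior as written.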

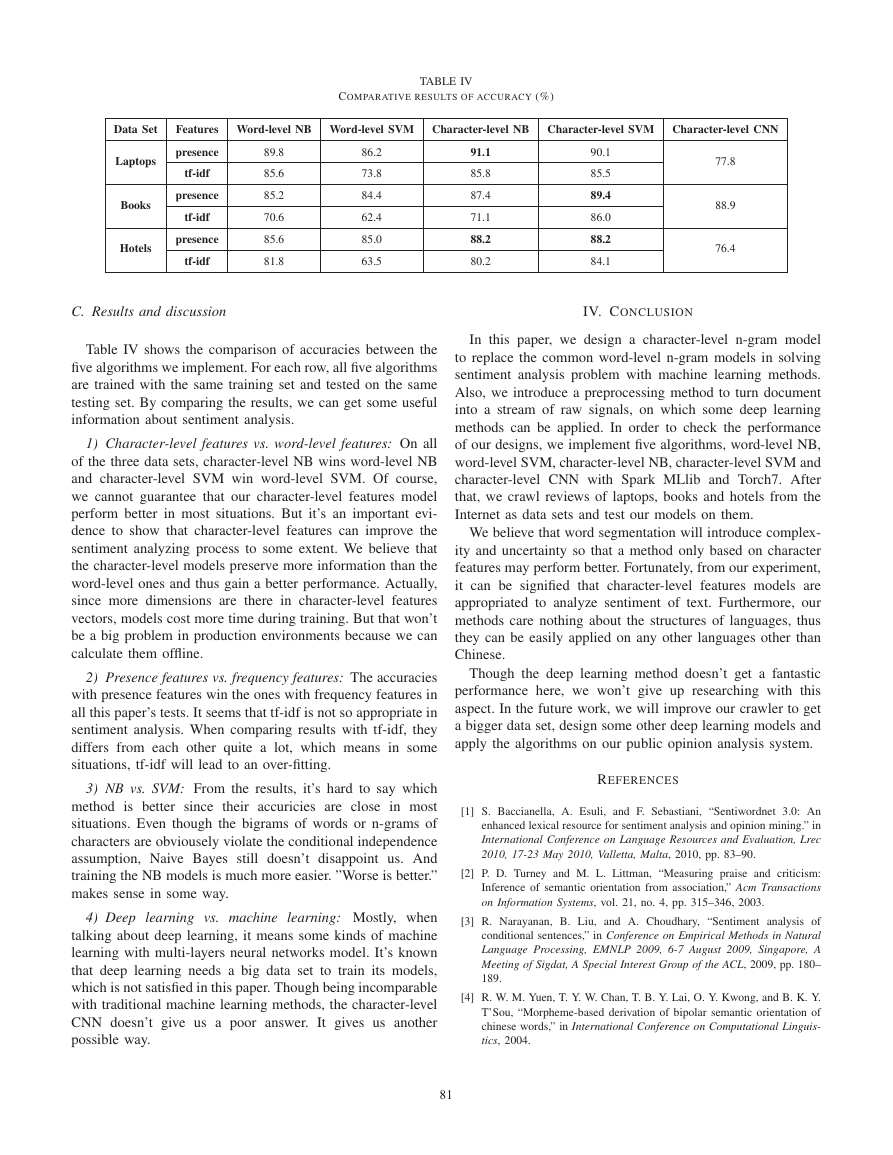

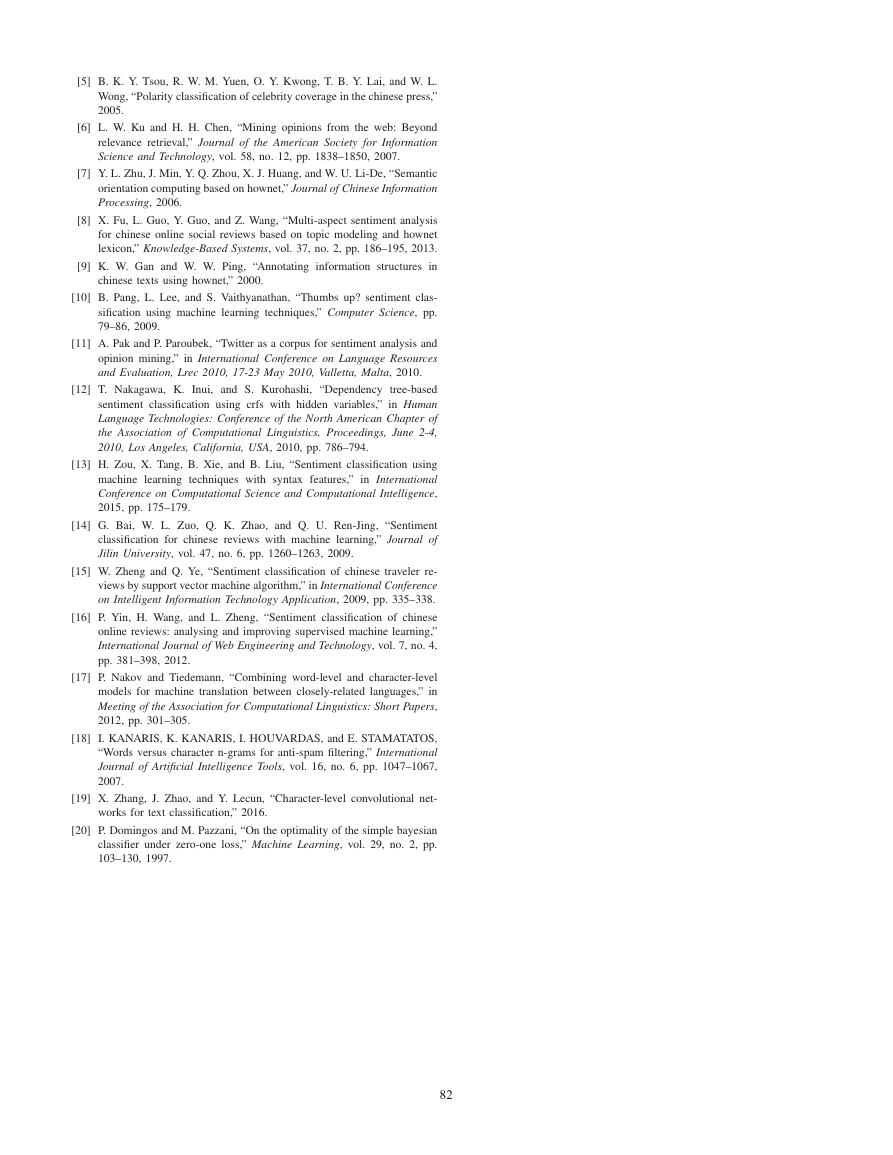

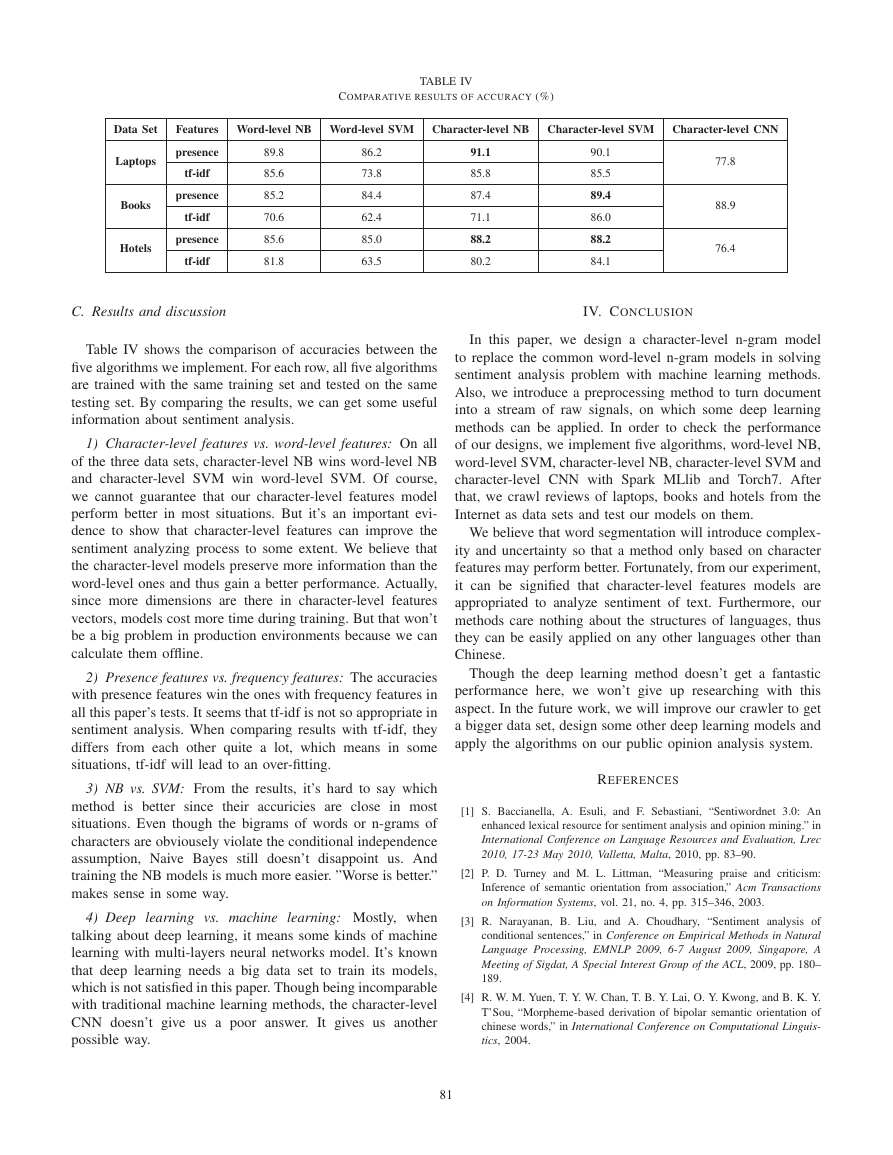

TABLE IV

COMPARATIVE RESULTS OF ACCURACY (%)

Data Set

Laptops

Books

Hotels

Features Word-level NB Word-level SVM Character-level NB

presence

91.1

89.8

86.2

tf-idf

presence

tf-idf

presence

tf-idf

85.6

85.2

70.6

85.6

81.8

73.8

84.4

62.4

85.0

63.5

85.8

87.4

71.1

88.2

80.2

Character-level SVM Character-level CNN

90.1

85.5

89.4

86.0

88.2

84.1

77.8

88.9

76.4

C. Results and discussion

Table IV compares the accuracies of the five algorithms we implement. For each row, all five algorithms are trained on the same training set and tested on the same test set. Comparing the results yields some useful observations about sentiment analysis.

1) Character-level features vs. word-level features: On all three data sets, character-level NB outperforms word-level NB, and character-level SVM outperforms word-level SVM. Of course, we cannot guarantee that our character-level feature models perform better in most situations, but this is important evidence that character-level features can improve sentiment analysis to some extent. We believe that the character-level models preserve more information than the word-level ones and thus perform better. Admittedly, because character-level feature vectors have more dimensions, the models take more time to train. This is not a big problem in production environments, however, because training can be done offline.

2) Presence features vs. frequency features: Presence features achieve higher accuracy than frequency (tf-idf) features in all of our tests, which suggests that tf-idf is less appropriate for sentiment analysis. The results obtained with tf-idf also differ considerably from one another, which suggests that tf-idf may lead to overfitting in some situations.

3) NB vs. SVM: From the results, it is hard to say which method is better, since their accuracies are close in most situations. Even though word bigrams and character n-grams obviously violate the conditional independence assumption, Naive Bayes still performs well, and NB models are much easier to train. In this sense, “worse is better” applies.

4) Deep learning vs. machine learning: Deep learning usually refers to machine learning with multi-layer neural network models. Deep learning is known to need large data sets to train its models, a requirement not met in this paper. Although it cannot match the traditional machine learning methods, the character-level CNN still gives reasonable results and offers another possible approach.

IV. CONCLUSION

In this paper, we design a character-level n-gram model to replace the common word-level n-gram models when solving the sentiment analysis problem with machine learning methods. We also introduce a preprocessing method that turns a document into a stream of raw signals, to which deep learning methods can be applied. To evaluate our designs, we implement five algorithms (word-level NB, word-level SVM, character-level NB, character-level SVM, and character-level CNN) with Spark MLlib and Torch7. We then crawl reviews of laptops, books, and hotels from the Internet as data sets and test our models on them.

We believe that word segmentation introduces complexity and uncertainty, so a method based only on character features may perform better. Our experiments indicate that character-level feature models are appropriate for analyzing the sentiment of text. Furthermore, our methods make no assumptions about the structure of a language, so they can easily be applied to languages other than Chinese.

Although the deep learning method does not perform especially well here, we will continue our research in this direction. In future work, we will improve our crawler to collect a larger data set, design other deep learning models, and apply the algorithms in our public opinion analysis system.

REFERENCES

[1] S. Baccianella, A. Esuli, and F. Sebastiani, “SentiWordNet 3.0: An enhanced lexical resource for sentiment analysis and opinion mining,” in International Conference on Language Resources and Evaluation, LREC 2010, 17-23 May 2010, Valletta, Malta, 2010, pp. 83–90.

[2] P. D. Turney and M. L. Littman, “Measuring praise and criticism: Inference of semantic orientation from association,” ACM Transactions on Information Systems, vol. 21, no. 4, pp. 315–346, 2003.

[3] R. Narayanan, B. Liu, and A. Choudhary, “Sentiment analysis of conditional sentences,” in Conference on Empirical Methods in Natural Language Processing, EMNLP 2009, 6-7 August 2009, Singapore, A Meeting of SIGDAT, a Special Interest Group of the ACL, 2009, pp. 180–189.

[4] R. W. M. Yuen, T. Y. W. Chan, T. B. Y. Lai, O. Y. Kwong, and B. K. Y. T’Sou, “Morpheme-based derivation of bipolar semantic orientation of Chinese words,” in International Conference on Computational Linguistics, 2004.


[5] B. K. Y. Tsou, R. W. M. Yuen, O. Y. Kwong, T. B. Y. Lai, and W. L. Wong, “Polarity classification of celebrity coverage in the Chinese press,” 2005.

[6] L. W. Ku and H. H. Chen, “Mining opinions from the web: Beyond relevance retrieval,” Journal of the American Society for Information Science and Technology, vol. 58, no. 12, pp. 1838–1850, 2007.

[7] Y. L. Zhu, J. Min, Y. Q. Zhou, X. J. Huang, and W. U. Li-De, “Semantic orientation computing based on HowNet,” Journal of Chinese Information Processing, 2006.

[8] X. Fu, L. Guo, Y. Guo, and Z. Wang, “Multi-aspect sentiment analysis for Chinese online social reviews based on topic modeling and HowNet lexicon,” Knowledge-Based Systems, vol. 37, no. 2, pp. 186–195, 2013.

[9] K. W. Gan and W. W. Ping, “Annotating information structures in Chinese texts using HowNet,” 2000.

[10] B. Pang, L. Lee, and S. Vaithyanathan, “Thumbs up? Sentiment classification using machine learning techniques,” Computer Science, pp. 79–86, 2009.

[11] A. Pak and P. Paroubek, “Twitter as a corpus for sentiment analysis and opinion mining,” in International Conference on Language Resources and Evaluation, LREC 2010, 17-23 May 2010, Valletta, Malta, 2010.

[12] T. Nakagawa, K. Inui, and S. Kurohashi, “Dependency tree-based sentiment classification using CRFs with hidden variables,” in Human Language Technologies: Conference of the North American Chapter of the Association of Computational Linguistics, Proceedings, June 2-4, 2010, Los Angeles, California, USA, 2010, pp. 786–794.

[13] H. Zou, X. Tang, B. Xie, and B. Liu, “Sentiment classification using machine learning techniques with syntax features,” in International Conference on Computational Science and Computational Intelligence, 2015, pp. 175–179.

[14] G. Bai, W. L. Zuo, Q. K. Zhao, and Q. U. Ren-Jing, “Sentiment classification for Chinese reviews with machine learning,” Journal of Jilin University, vol. 47, no. 6, pp. 1260–1263, 2009.

[15] W. Zheng and Q. Ye, “Sentiment classification of Chinese traveler reviews by support vector machine algorithm,” in International Conference on Intelligent Information Technology Application, 2009, pp. 335–338.

[16] P. Yin, H. Wang, and L. Zheng, “Sentiment classification of Chinese online reviews: analysing and improving supervised machine learning,” International Journal of Web Engineering and Technology, vol. 7, no. 4, pp. 381–398, 2012.

[17] P. Nakov and Tiedemann, “Combining word-level and character-level models for machine translation between closely-related languages,” in Meeting of the Association for Computational Linguistics: Short Papers, 2012, pp. 301–305.

[18] I. Kanaris, K. Kanaris, I. Houvardas, and E. Stamatatos, “Words versus character n-grams for anti-spam filtering,” International Journal of Artificial Intelligence Tools, vol. 16, no. 6, pp. 1047–1067, 2007.

[19] X. Zhang, J. Zhao, and Y. LeCun, “Character-level convolutional networks for text classification,” 2016.

[20] P. Domingos and M. Pazzani, “On the optimality of the simple Bayesian classifier under zero-one loss,” Machine Learning, vol. 29, no. 2, pp. 103–130, 1997.
