1 Introduction

2 High-Dimensional Space

2.2 The Law of Large Numbers

2.3 The Geometry of High Dimensions

2.4 Properties of the Unit Ball

2.5 Generating Points Uniformly at Random from a Ball

2.6 Gaussians in High Dimension

2.7 Random Projection and Johnson-Lindenstrauss Lemma

2.8 Separating Gaussians

2.9 Fitting a Spherical Gaussian to Data

2.10 Bibliographic Notes

3 Best-Fit Subspaces and Singular Value Decomposition (SVD)

3.1 Introduction

3.2 Preliminaries

3.3 Singular Vectors

3.4 Singular Value Decomposition (SVD)

3.5 Best Rank-k Approximations

3.6 Left Singular Vectors

3.7 Power Method for Singular Value Decomposition

3.8 Singular Vectors and Eigenvectors

3.9 Applications of Singular Value Decomposition

3.9.1 Centering Data

3.9.2 Principal Component Analysis

3.9.3 Clustering a Mixture of Spherical Gaussians

3.9.4 Ranking Documents and Web Pages

3.9.5 An Illustrative Application of SVD

3.9.6 An Application of SVD to a Discrete Optimization Problem

3.10 Bibliographic Notes

4 Random Walks and Markov Chains

5 Machine Learning

5.1 Introduction

5.2 The Perceptron Algorithm

5.3 Kernel Functions and Non-linearly Separable Data

5.4 Generalizing to New Data

5.4.1 Overfitting and Uniform Convergence

5.4.2 Occam’s Razor

5.4.3 Regularization: Penalizing Complexity

5.5 VC-Dimension

5.5.1 Definitions and Key Theorems

5.5.2 VC-Dimension of Some Set Systems

5.5.3 Shatter Function for Set Systems of Bounded VC-Dimension

5.5.4 VC-Dimension of Combinations of Concepts

5.5.5 The Key Theorem

5.6 VC-dimension and Machine Learning

5.7 Other Measures of Complexity

5.8 Deep Learning

5.8.1 Generative Adversarial Networks (GANs)

5.9 Gradient Descent

5.9.1 Stochastic Gradient Descent

5.9.2 Regularizer

5.10 Online Learning

5.10.1 An Example: Learning Disjunctions

5.10.2 The Halving Algorithm

5.10.3 The Perceptron Algorithm

5.10.4 Inseparable Data and Hinge Loss

.10.5 Online to Batch Conversion

5.10.6 Combining (Sleeping) Expert Advice

5.11 Boosting

5.12 Further Current Directions

5.12.1 Semi-Supervised Learning

5.12.2 Active Learning

5.12.3 Multi-Task Learning

5.13 Bibliographic Notes

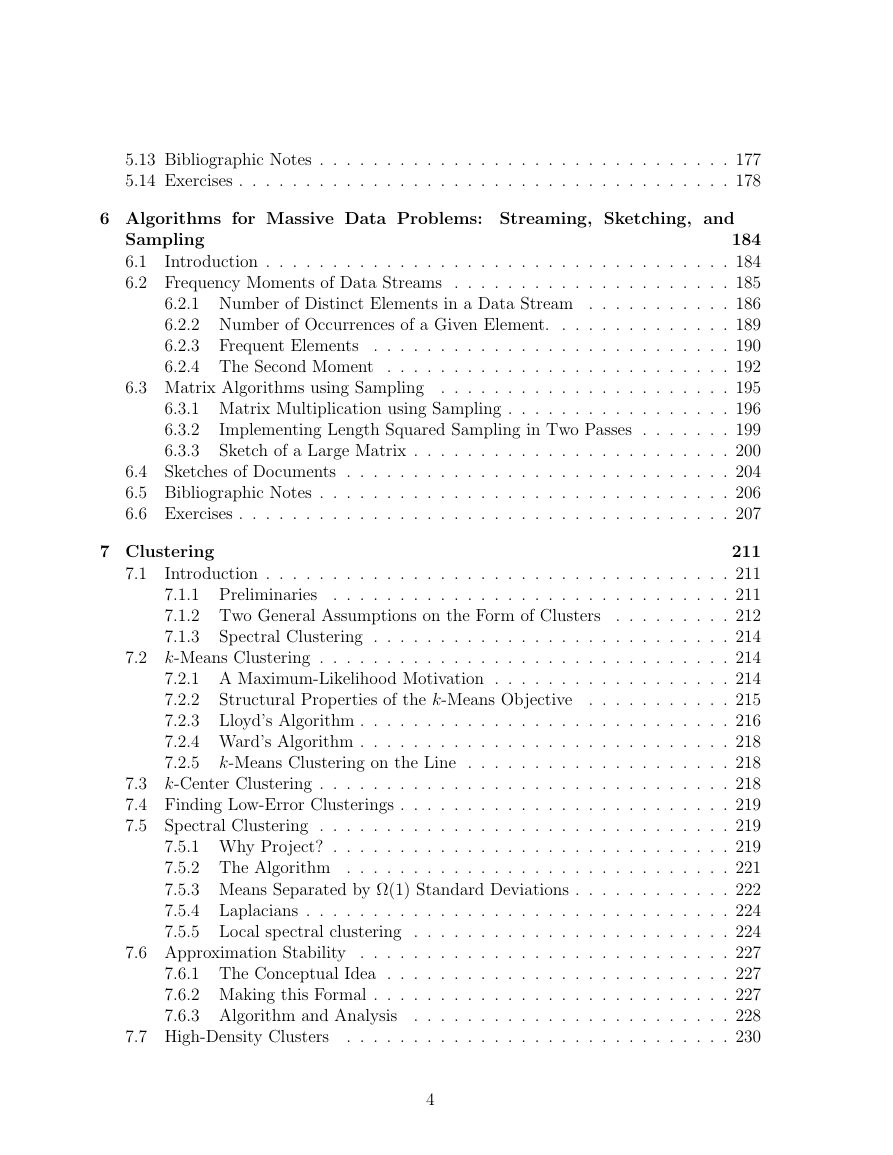

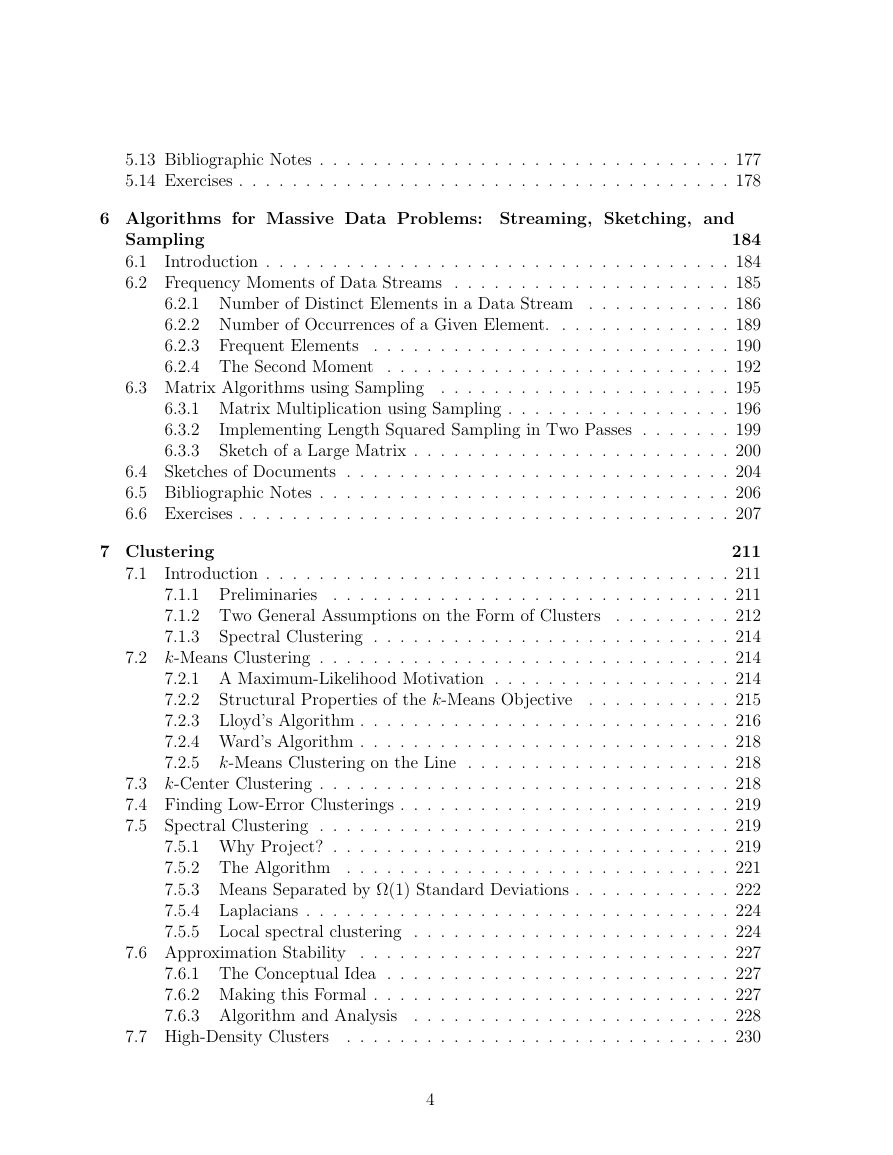

6 Algorithms for Massive Data Problems: Streaming, Sketching, and Sampling

6.1 Introduction

6.2 Frequency Moments of Data Streams

6.3 Matrix Algorithms using Sampling

6.4 Sketches of Documents

6.5 Bibliographic Notes

6.6 Exercises

7 Clustering

7.1 Introduction

7.2 k-Means Clustering

7.3 k-Center Clustering

7.4 Finding Low-Error Clusterings

7.5 Spectral Clustering

7.6 Approximation Stability

7.7 High-Density Clusters

7.8 Kernel Methods

7.9 Recursive Clustering Based on Sparse Cuts

7.10 Dense Submatrices and Communities

7.11 Community Finding and Graph Partitioning

7.12 Spectral Clustering Applied to Social Networks

7.13 Bibliographic Notes

7.14 Exercises

8 Random Graphs

8.1 The G(n; p) Model

8.2 Phase Transitions

8.3 Giant Component

8.4 Cycles and Full Connectivity

8.5 Phase Transitions for Increasing Properties

8.6 Branching Processes

8.7 CNF-SAT

8.8 Non-uniform Models of Random Graphs

8.9 Growth Models

8.10 Small World Graphs

8.11 Bibliographic Notes

9 Topic Models, Non-negative Matrix Factorization,Hidden Markov Models, and Graphical Models

9.1 Topic Models

9.2 An Idealized Model

9.3 Non-negative Matrix Factorization - NMF

9.4 NMF with Anchor Terms

9.5 Hard and Soft Clustering

9.6 The Latent Dirichlet Allocation Model for Topic Modeling

9.7 The Dominant Admixture Model

9.8 Formal Assumptions

9.9 Finding the Term-Topic Matrix

9.10 Hidden Markov Models

9.11 Graphical Models and Belief Propagation

9.12 Bayesian or Belief Networks

9.13 Markov Random Fields

9.14 Factor Graphs

9.15 Tree Algorithms

9.16 Message Passing in General Graphs

9.16.1 Graphs with a Single Cycle

9.16.2 Belief Update in Networks with a Single Loop

9.16.3 Maximum Weight Matching

9.17 Warning Propagation

9.18 Correlation Between Variables

9.19 Bibliographic Notes

10 Other Topics

10.5 Gradient

10.6 Linear Programming

10.7 Integer Optimization

10.8 Semi-Definite Programming

10.9 Bibliographic Notes

10.10 Exercises

11 Wavelets

11.1 Dilation

11.2 The Haar Wavelet

11.3 Wavelet Systems

11.4 Solving the Dilation Equation

11.5 Conditions on the Dilation Equation

11.6 Derivation of the Wavelets from the Scaling Function

11.7 Sufficient Conditions for the Wavelets to be Orthogonal

11.8 Expressing a Function in Terms of Wavelets

11.9 Designing a Wavelet System

11.10 Applications

11.11 Bibliographic Notes

12 Appendix

12.1 Definitions and Notation

12.2 Useful Relations

12.3 Useful Inequalities

Tails of Gaussians

12.4 Probability

12.5 Bounds on Tail Probability

12.6 Applications of the Tail Bound

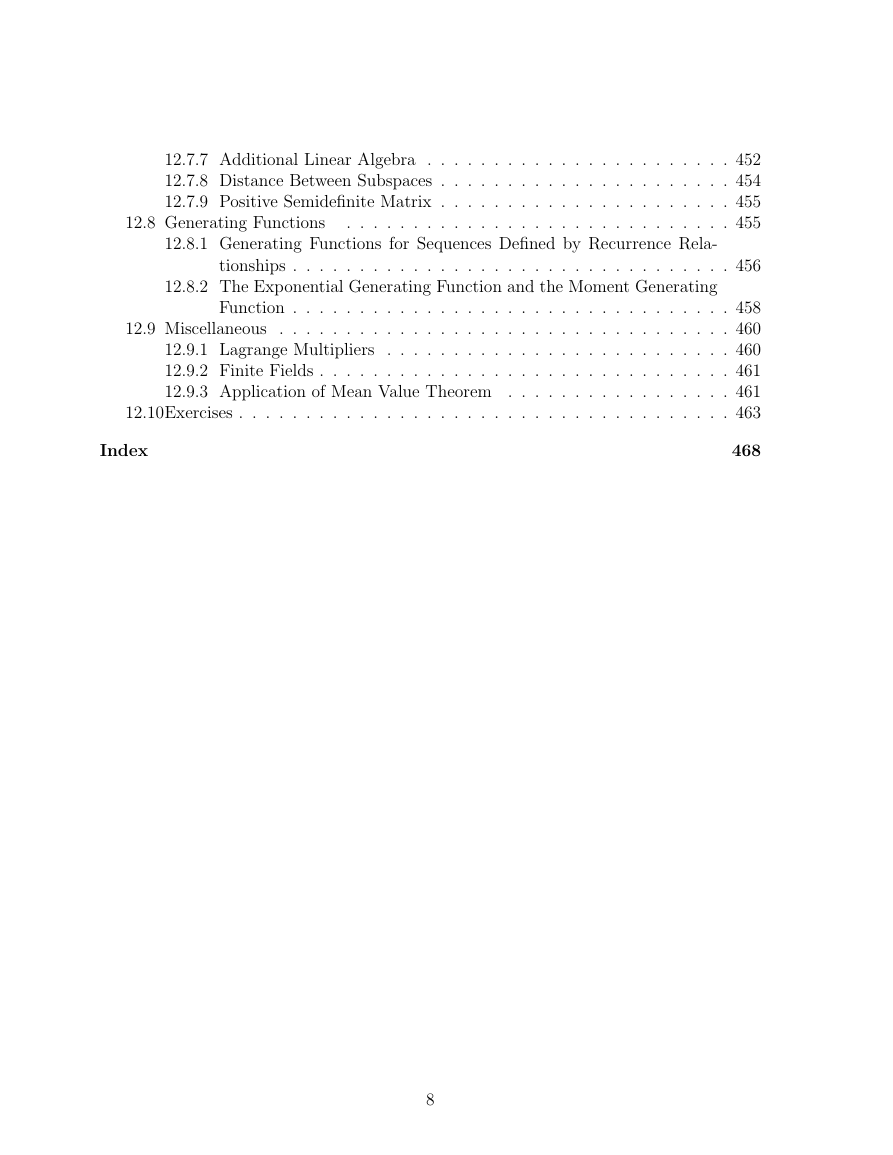

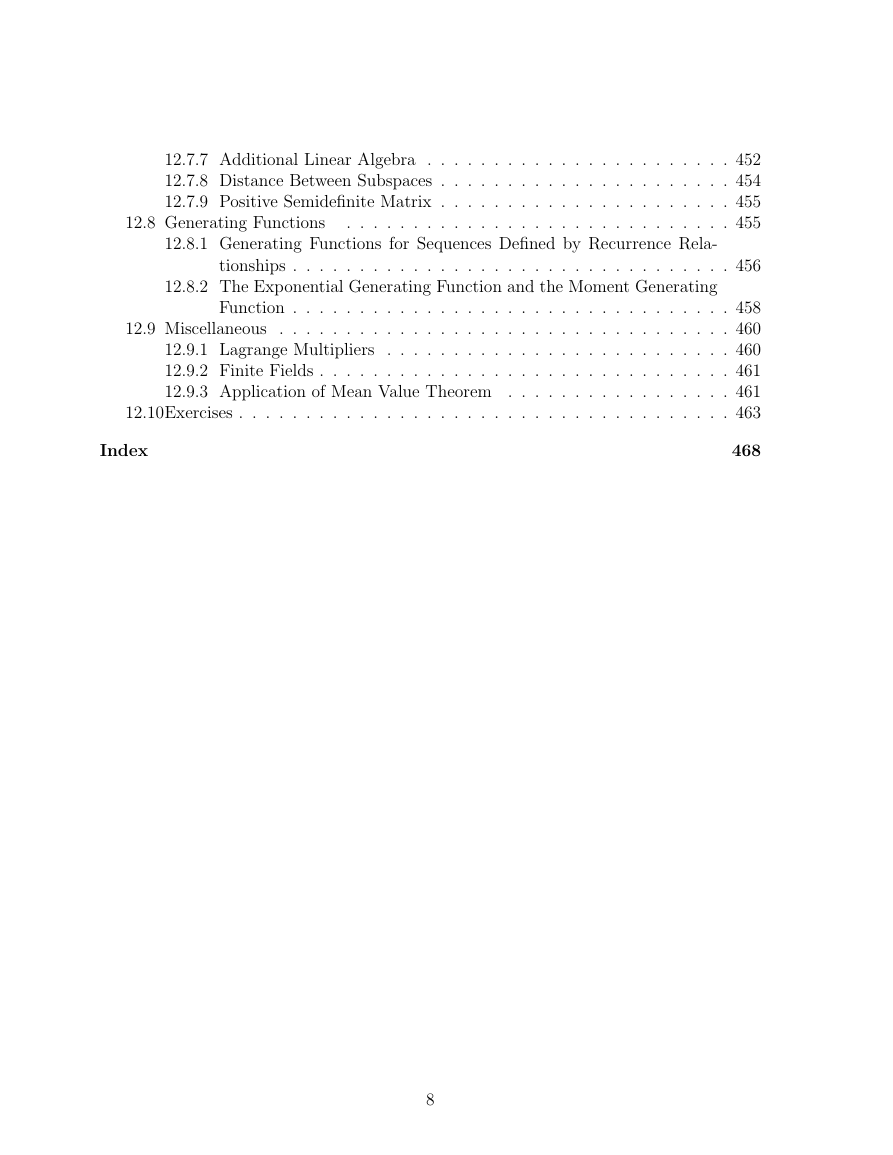

12.7 Eigenvalues and Eigenvectors

12.8 Generating Functions

12.9 Miscellaneous

12.10 Exercises

References

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc