NEON support in the RealView compiler

William Munns

18 June 2007

Introduction

This paper provides a simple introduction to the NEON™ Vector-SIMD architecture. It

continues by looking at the compiler support for SIMD, both through automatic recognition

and through the use of intrinsic functions.

NEON is a hybrid 64/128 bit SIMD architecture extension to the ARM v7-A profile,

targeted at multimedia applications. Positioning NEON within the processor allows it to

share the CPU resources for integer operation, loop control, and caching, significantly

reducing the area and power cost compared with a CPU plus hardware accelerator

combination. SIMD (Single Instruction Multiple Data) is where one instruction acts on

multiple data items, usually carrying out the same operation for all data.

The use of NEON instead of a CPU plus hardware accelerator combination allows savings

to be made in software development time as it creates a much simpler programming model

without forcing the programmer to search for ad-hoc concurrency and scheduling points.

On the ARM Cortex™-A8 the NEON unit is positioned in the pipeline so that loads can

come directly from the L2 cache. This means that a much larger dataset can be held in the

cache than would be allowed when executing ARM or Thumb®-2 code.

The NEON instruction set was designed to be an easy target for a compiler, including low

cost promotion/demotion and structure loads capable of accessing data from their natural

locations rather than forcing alignment to the vector size.

The RealView Development Tools® Suite version 3.1 supports NEON both in the standard

release using intrinsic functions and assembler, as well as through the vectorizing compiler

add-on which can recognise code sequences and automatically generate SIMD code. The

vectorizing compiler greatly reduces porting time, as well as reducing the requirement for

deep architectural knowledge.

© 2007 ARM Limited. All Rights Reserved.

ARM and RealView logo are registered

trademarks of ARM Ltd. All other trademarks

are the property of their respective owners and

are acknowledged


Overview of NEON Vector SIMD

SIMD is the name of the process for operating on multiple data items in parallel using the

same instruction. In the NEON extension, the data is organized into very long registers (64

or 128 bits wide). These registers can hold "vectors" of items which are 8, 16, 32 or 64 bits.

[Figure: a 128 bit Q register viewed as 4 x 32 bit data, 8 x 16 bit data or 16 x 8 bit data]

The traditional advice when optimizing or porting algorithms written in C/C++ is to use the
natural type of the machine for data handling (in the case of ARM, 32 bits). The unwanted
bits can then be discarded by casting and/or shifting before storing to memory. Because
NEON specifies the data width in the instruction, and hence uses the whole register
width for useful information, keeping the natural type for the algorithm is both
possible and preferable. Keeping to the algorithm's natural type reduces the cost of
porting an algorithm from one architecture to another and allows more data items to be
operated on simultaneously.

NEON appears to the programmer to have two banks of registers, 64 bit D registers and

128 bit Q registers. In reality the D and Q registers alias each other, so the 64 bit registers

D0 and D1 map against the same physical bits as the register Q0.
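The aliasing described above can be pictured with a small scalar model in portable C. This is only an illustrative sketch, not NEON code: the type `q_reg_model` and the helpers `write_d` and `read_q_byte` are hypothetical names introduced here, and the union simply lets the same 16 bytes be viewed either as one 128 bit value or as two 64 bit halves.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of one 128 bit Q register and the two 64 bit
   D registers that alias it (for example Q0 with D0 and D1). */
typedef union {
    uint8_t  bytes[16];  /* the 16 physical bytes of the Q register */
    uint64_t d[2];       /* d[0] models the first D register, d[1] the second */
} q_reg_model;

/* Write a 64 bit value through one of the two D views. */
void write_d(q_reg_model *q, int index, uint64_t value)
{
    assert(index == 0 || index == 1);
    q->d[index] = value;
}

/* Read one byte through the Q view. */
uint8_t read_q_byte(const q_reg_model *q, int n)
{
    assert(n >= 0 && n < 16);
    return q->bytes[n];
}
```

Writing through the second D view changes the upper eight bytes seen through the Q view and leaves the lower eight untouched, which is the behaviour the text describes for D1 and Q0.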

When an operation is performed on the registers the instruction specifies the layout of the

data contained in the source and, in certain cases, destination registers.


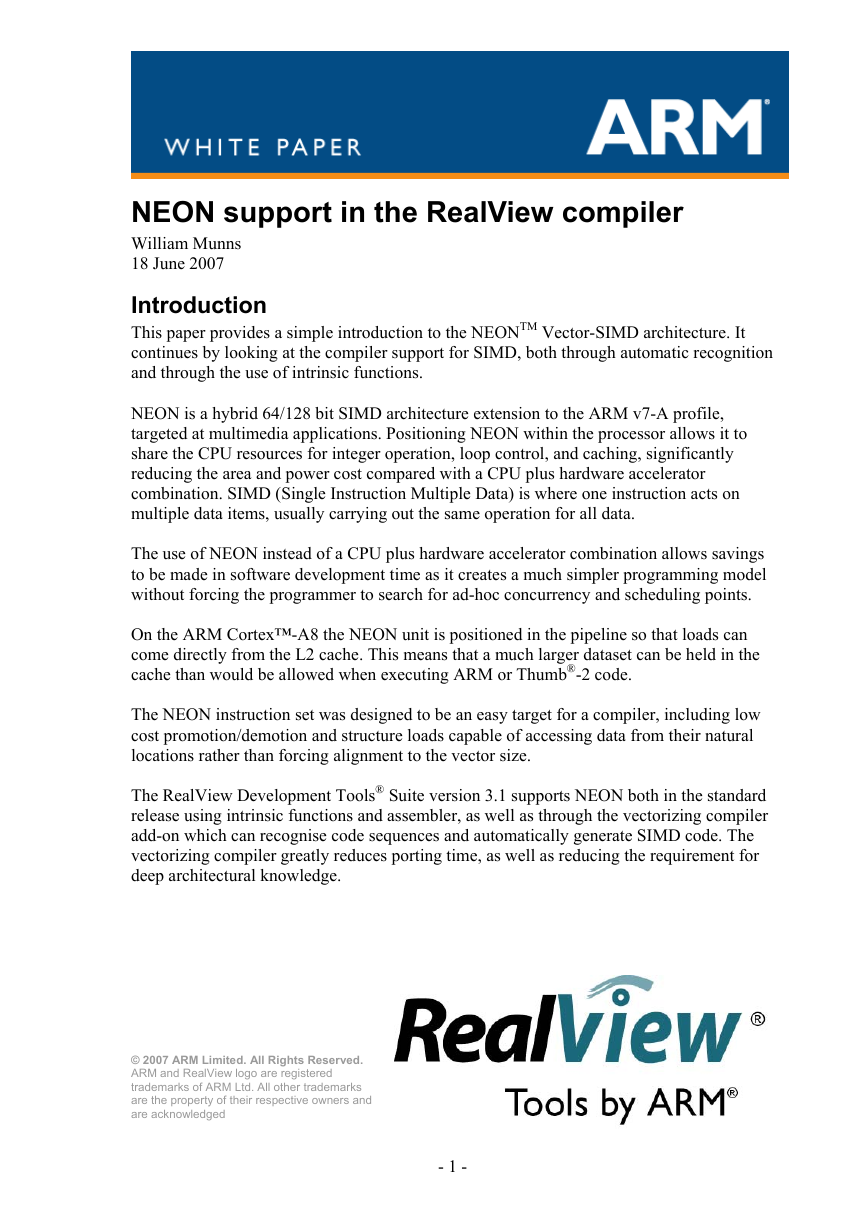

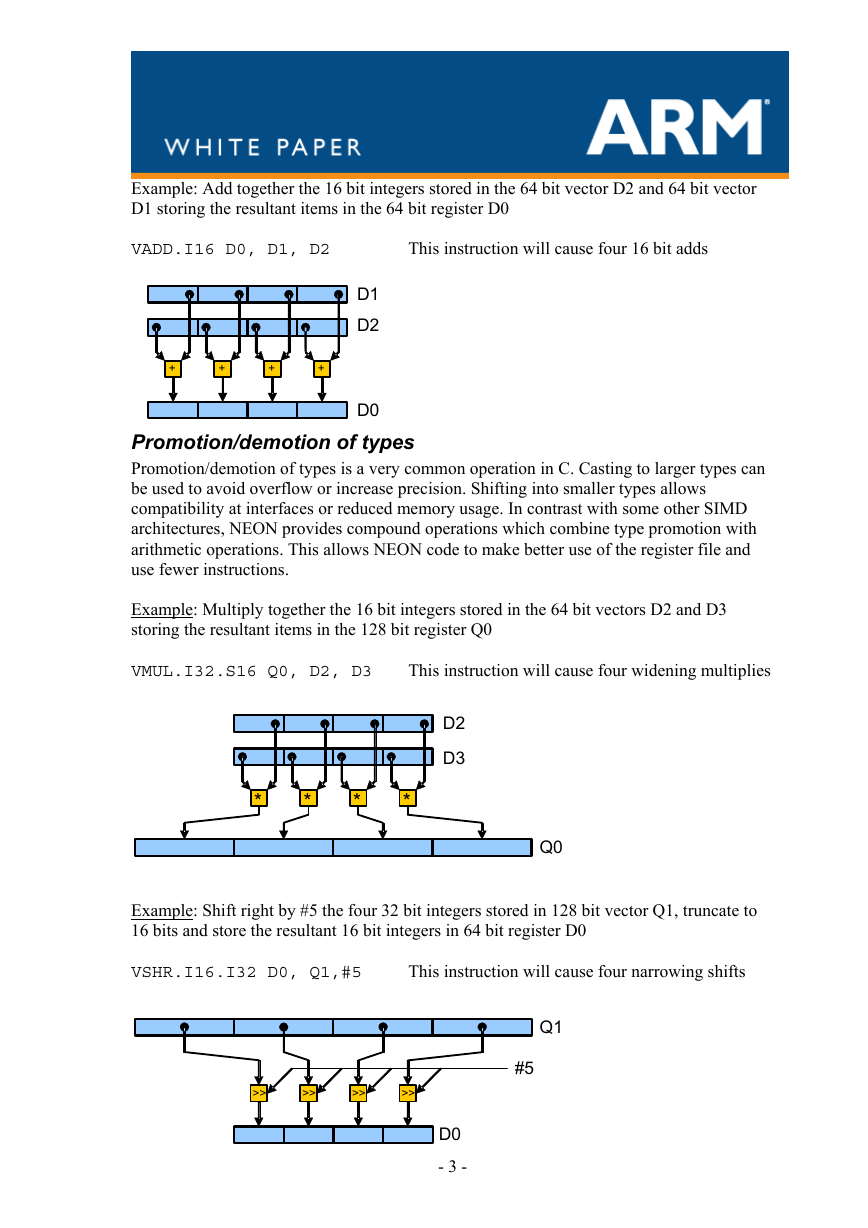

Example: Add together the 16 bit integers stored in the 64 bit vector D2 and 64 bit vector

D1 storing the resultant items in the 64 bit register D0

VADD.I16 D0, D1, D2

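This instruction performs four 16 bit additions in parallel, one per lane.

[Figure: each 16 bit lane of D1 is added to the corresponding lane of D2, and the four results are written to D0]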

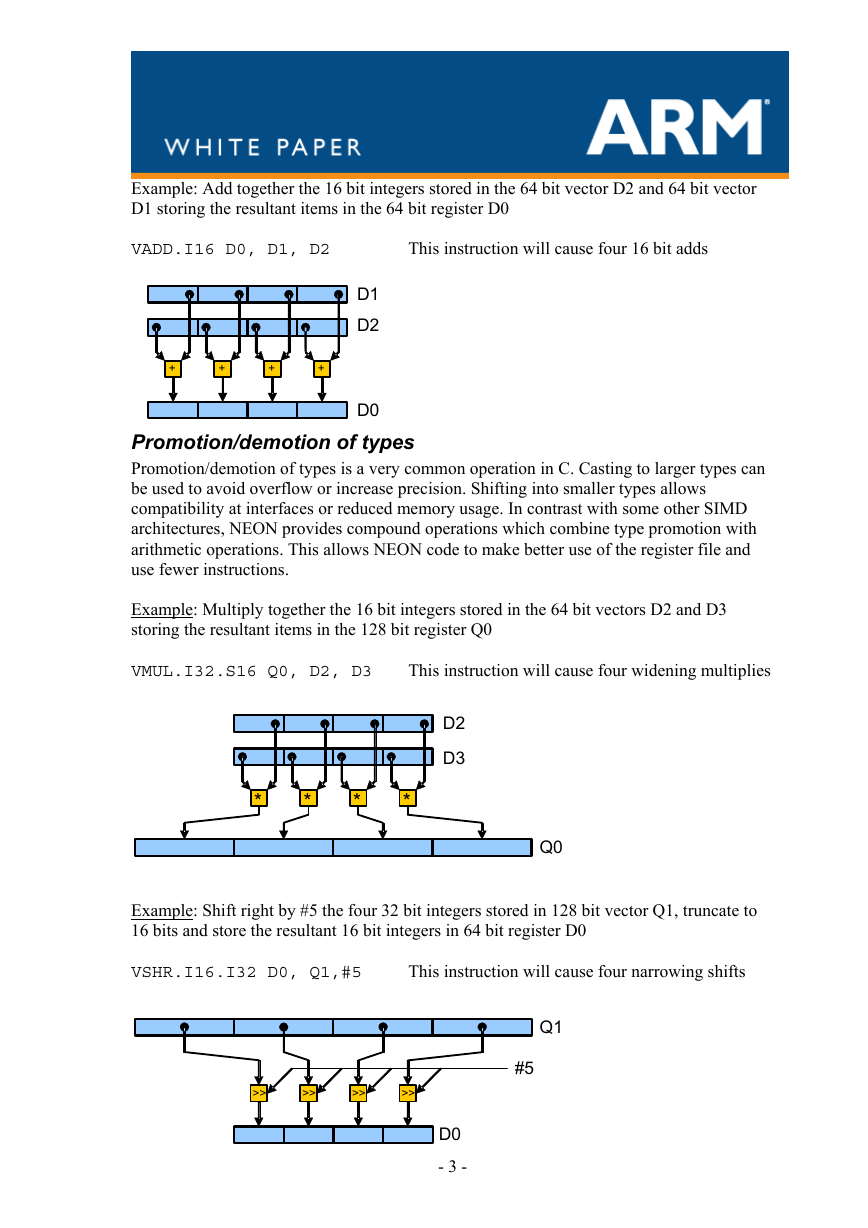
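As a rough scalar model of the VADD.I16 example, the following portable C sketch adds four 16 bit lanes independently. The type `d_u16x4` and the function `model_vadd_i16` are hypothetical names used only for illustration; real code would use the NEON instruction or an intrinsic instead.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of a 64 bit D register holding four 16 bit lanes. */
typedef struct { uint16_t lane[4]; } d_u16x4;

/* Lane-wise 16 bit addition: each lane wraps on its own and never
   carries into its neighbour. */
d_u16x4 model_vadd_i16(d_u16x4 a, d_u16x4 b)
{
    d_u16x4 r;
    assert(sizeof r.lane == 8);  /* four 16 bit lanes fill 64 bits */
    for (int i = 0; i < 4; i++) {
        r.lane[i] = (uint16_t)(a.lane[i] + b.lane[i]);
    }
    return r;
}
```

Note that a lane which overflows simply wraps modulo 65536 in this model; the lanes on either side are unaffected.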

Promotion/demotion of types

Promotion/demotion of types is a very common operation in C. Casting to larger types can

be used to avoid overflow or increase precision. Shifting into smaller types allows

compatibility at interfaces or reduced memory usage. In contrast with some other SIMD

architectures, NEON provides compound operations which combine type promotion with

arithmetic operations. This allows NEON code to make better use of the register file and

use fewer instructions.

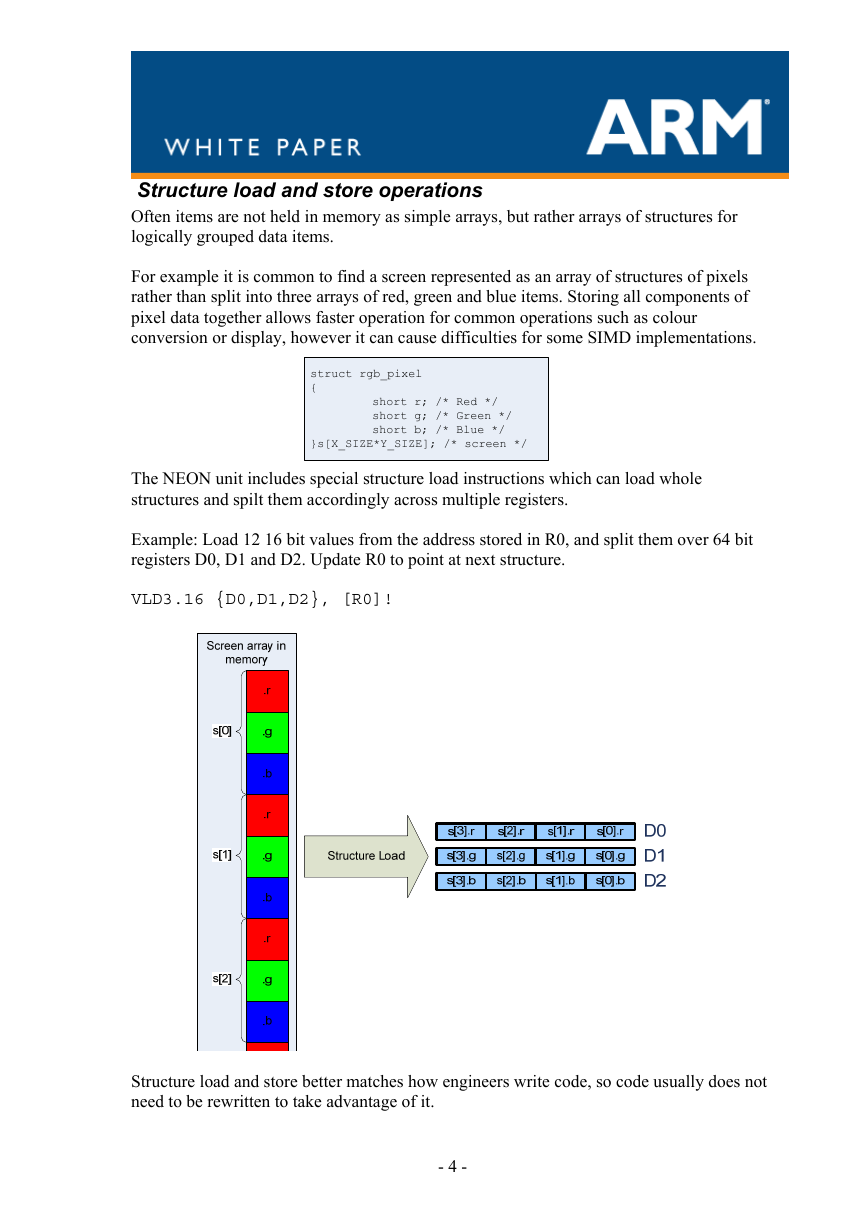

Example: Multiply together the signed 16 bit integers stored in the 64 bit vectors D2 and D3,
storing the resultant 32 bit items in the 128 bit register Q0.

VMULL.S16 Q0, D2, D3

This instruction performs four widening multiplies.

[Figure: each 16 bit lane of D2 is multiplied by the corresponding lane of D3, and the four 32 bit products fill Q0]
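The effect of a widening multiply can be sketched in portable C as follows. This is a scalar model under stated assumptions, not the instruction itself: the types `d_s16x4` and `q_s32x4` and the function `model_widening_mul_s16` are hypothetical names chosen for this illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical models: a D register of four signed 16 bit lanes and
   a Q register of four signed 32 bit lanes. */
typedef struct { int16_t lane[4]; } d_s16x4;
typedef struct { int32_t lane[4]; } q_s32x4;

/* Widening multiply: each pair of 16 bit inputs is promoted to 32 bits
   before multiplying, so the full product is kept. */
q_s32x4 model_widening_mul_s16(d_s16x4 a, d_s16x4 b)
{
    q_s32x4 r;
    assert(sizeof r.lane == 16);  /* four 32 bit lanes fill 128 bits */
    for (int i = 0; i < 4; i++) {
        r.lane[i] = (int32_t)a.lane[i] * (int32_t)b.lane[i];
    }
    return r;
}
```

Without the promotion, a product such as 300 * 300 = 90000 would not fit in a 16 bit lane; combining the promotion with the multiply is what saves the separate conversion step.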

Example: Shift right by #5 the four 32 bit integers stored in the 128 bit vector Q1, truncate to
16 bits and store the resultant 16 bit integers in the 64 bit register D0.

VSHRN.I32 D0, Q1, #5

This instruction performs four narrowing shifts.

[Figure: each 32 bit lane of Q1 is shifted right by #5 and truncated, and the four 16 bit results are written to D0]
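A narrowing shift can be modelled in the same scalar style. The sketch below assumes unsigned lanes to keep the C well defined; the types `q_u32x4` and `d_u16x4` and the function `model_narrowing_shr` are hypothetical names, not part of any NEON header.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical models: a Q register of four 32 bit lanes and a
   D register of four 16 bit lanes. */
typedef struct { uint32_t lane[4]; } q_u32x4;
typedef struct { uint16_t lane[4]; } d_u16x4;

/* Narrowing shift: shift each 32 bit lane right, then keep only the
   low 16 bits of the shifted value. */
d_u16x4 model_narrowing_shr(q_u32x4 q, unsigned shift)
{
    d_u16x4 r;
    assert(shift >= 1 && shift <= 16);
    for (int i = 0; i < 4; i++) {
        r.lane[i] = (uint16_t)(q.lane[i] >> shift);
    }
    return r;
}
```

Calling `model_narrowing_shr(q, 5)` mirrors the example in the text: four shifts and four truncations are expressed as one operation.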


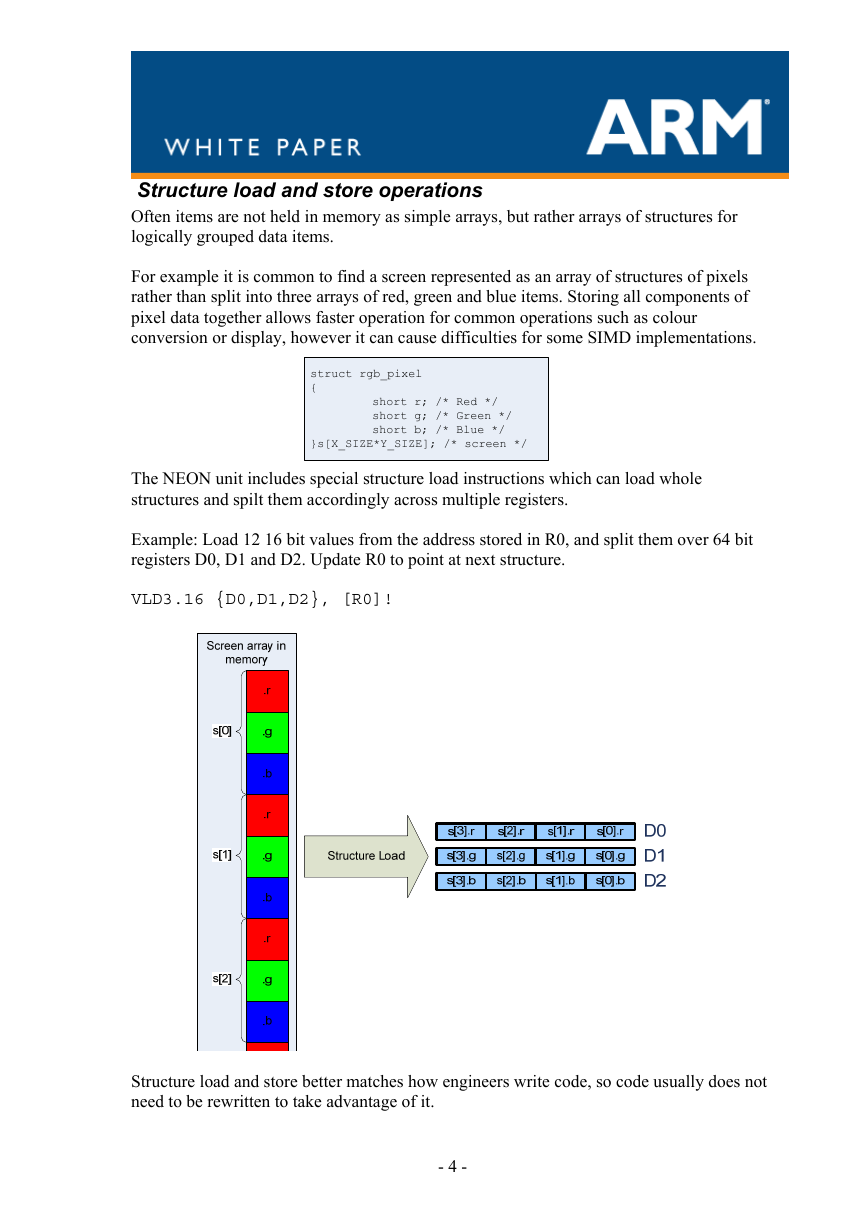

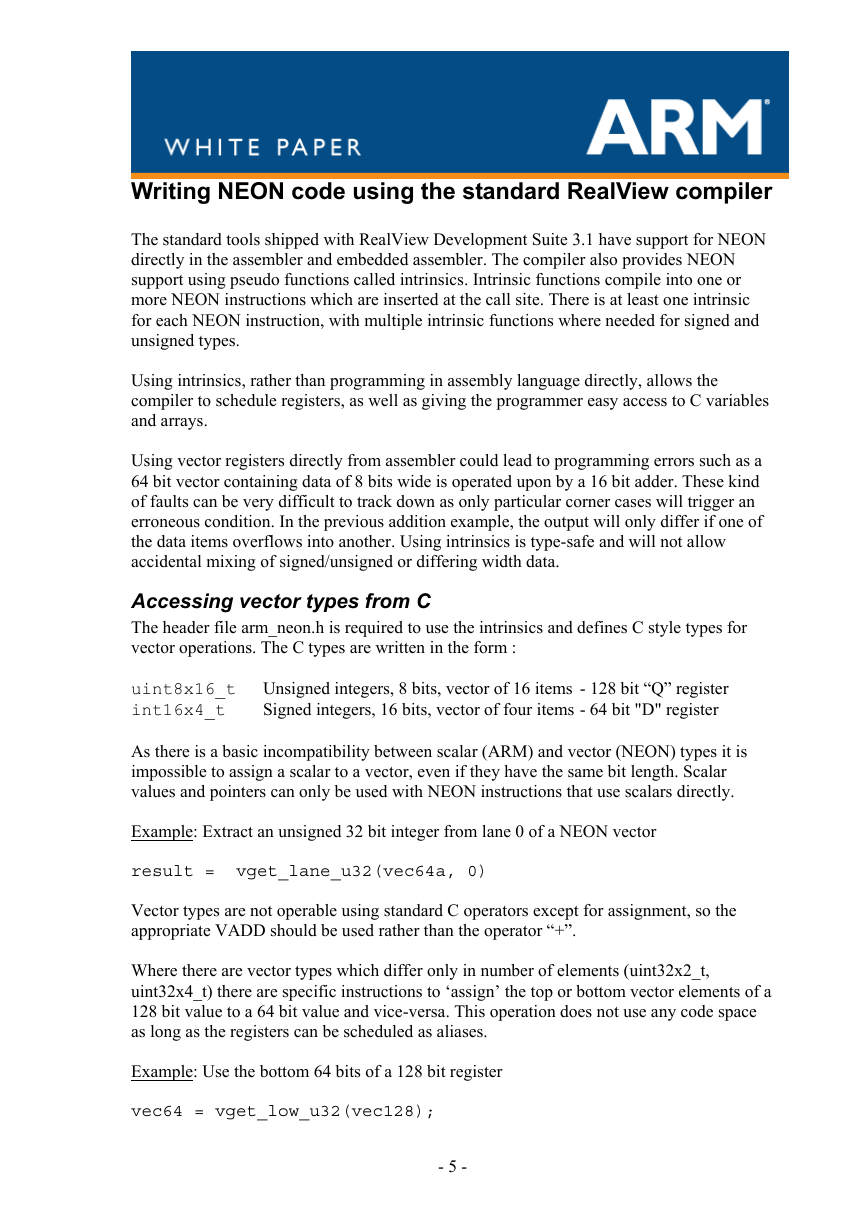

Structure load and store operations

Often items are not held in memory as simple arrays, but rather arrays of structures for

logically grouped data items.

For example, it is common to find a screen represented as an array of structures of pixels
rather than split into three arrays of red, green and blue items. Storing all components of
pixel data together speeds up common operations such as colour conversion or display;
however, it can cause difficulties for some SIMD implementations.

struct rgb_pixel
{
    short r; /* Red */
    short g; /* Green */
    short b; /* Blue */
} s[X_SIZE*Y_SIZE]; /* screen */

The NEON unit includes special structure load instructions which can load whole
structures and split them accordingly across multiple registers.

Example: Load twelve 16 bit values from the address stored in R0, and split them over the 64 bit
registers D0, D1 and D2. Update R0 to point at the next structure.

VLD3.16 {D0,D1,D2}, [R0]!

Structure load and store better matches how engineers write code, so code usually does not

need to be rewritten to take advantage of it.
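To make the de-interleaving concrete, here is a scalar sketch in portable C of what a three-element structure load does with four consecutive pixels. It is an illustration only: `d_s16x4` and `model_vld3_16` are hypothetical names, and the returned pointer stands in for the post-incremented address register.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of a D register holding four 16 bit lanes. */
typedef struct { int16_t lane[4]; } d_s16x4;

/* De-interleave four {r, g, b} structures (twelve 16 bit values) into
   three vectors, and return the address of the next structure. */
const int16_t *model_vld3_16(const int16_t *src,
                             d_s16x4 *r, d_s16x4 *g, d_s16x4 *b)
{
    assert(src != NULL && r != NULL && g != NULL && b != NULL);
    for (int i = 0; i < 4; i++) {
        r->lane[i] = src[3 * i];      /* first member of structure i  */
        g->lane[i] = src[3 * i + 1];  /* second member of structure i */
        b->lane[i] = src[3 * i + 2];  /* third member of structure i  */
    }
    return src + 12;
}
```

After the call, all red values sit in one vector, all green values in another and all blue values in a third, so each colour channel can be processed with ordinary lane-wise operations.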


Writing NEON code using the standard RealView compiler

The standard tools shipped with RealView Development Suite 3.1 have support for NEON

directly in the assembler and embedded assembler. The compiler also provides NEON

support using pseudo functions called intrinsics. Intrinsic functions compile into one or

more NEON instructions which are inserted at the call site. There is at least one intrinsic

for each NEON instruction, with multiple intrinsic functions where needed for signed and

unsigned types.

Using intrinsics, rather than programming in assembly language directly, allows the

compiler to schedule registers, as well as giving the programmer easy access to C variables

and arrays.

Using vector registers directly from assembler can lead to programming errors, such as a
64 bit vector containing 8 bit data being operated upon by a 16 bit adder. These kinds
of faults can be very difficult to track down, as only particular corner cases will trigger an
erroneous condition. In the previous addition example, the output will only differ if one of
the data items overflows into another. Using intrinsics is type-safe and will not allow
accidental mixing of signed/unsigned or differing width data.
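The corner case described above can be reproduced with a small scalar model in portable C. This is a sketch under stated assumptions rather than NEON code: `model_add_lanes` is a hypothetical helper that adds two 64 bit values as independent lanes of a chosen width.

```c
#include <assert.h>
#include <stdint.h>

/* Add two 64 bit values as independent lanes of lane_bits each.
   Carries out of a lane are discarded, never passed to the next lane. */
uint64_t model_add_lanes(uint64_t a, uint64_t b, unsigned lane_bits)
{
    assert(lane_bits == 8 || lane_bits == 16 || lane_bits == 32);
    const uint64_t mask = (1ULL << lane_bits) - 1;
    uint64_t result = 0;
    for (unsigned shift = 0; shift < 64; shift += lane_bits) {
        uint64_t sum = ((a >> shift) & mask) + ((b >> shift) & mask);
        result |= (sum & mask) << shift;
    }
    return result;
}
```

For inputs where no 8 bit item overflows, `model_add_lanes(a, b, 8)` and `model_add_lanes(a, b, 16)` return the same bits, so the mistake goes unnoticed. Adding 0xFF and 0x01 in the lowest byte gives 0x00 with 8 bit lanes but 0x0100 with 16 bit lanes, because the carry leaks into the neighbouring data item.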

Accessing vector types from C

The header file arm_neon.h is required to use the intrinsics and defines C style types for
vector operations. The C types are written in the form:

uint8x16_t   Unsigned integers, 8 bits, vector of 16 items - 128 bit "Q" register
int16x4_t    Signed integers, 16 bits, vector of four items - 64 bit "D" register

As there is a basic incompatibility between scalar (ARM) and vector (NEON) types it is

impossible to assign a scalar to a vector, even if they have the same bit length. Scalar

values and pointers can only be used with NEON instructions that use scalars directly.

Example: Extract an unsigned 32 bit integer from lane 0 of a NEON vector

result = vget_lane_u32(vec64a, 0);

Vector types cannot be operated on with standard C operators, except for assignment, so the
appropriate VADD intrinsic should be used rather than the operator "+".

Where there are vector types which differ only in number of elements (uint32x2_t,

uint32x4_t) there are specific instructions to ‘assign’ the top or bottom vector elements of a

128 bit value to a 64 bit value and vice-versa. This operation does not use any code space

as long as the registers can be scheduled as aliases.

Example: Use the bottom 64 bits of a 128 bit register

vec64 = vget_low_u32(vec128);


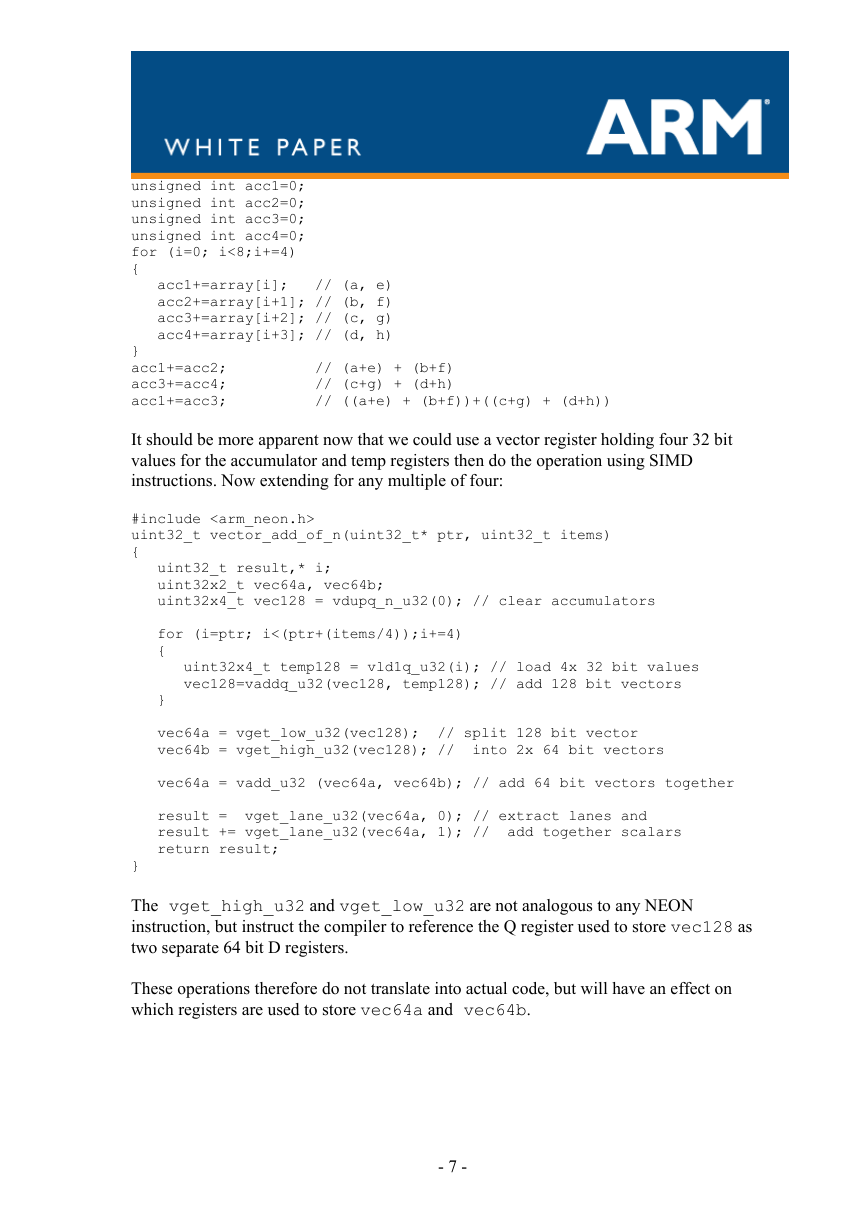

Access to NEON instructions using C

To the programmer intrinsics look like function calls. The function calls are specified to

describe the target NEON instruction as well as information about the source and

destination types.

Example: To add two vectors of 8 bytes, putting the result in a vector of 8 bytes requires

the instruction

VADD.I8 dx, dy, dz

which can be generated by using either of the following intrinsic functions:

int8x8_t vadd_s8(int8x8_t a, int8x8_t b);

uint8x8_t vadd_u8(uint8x8_t a, uint8x8_t b);

The use of separate intrinsics for each type means that it is difficult to accidentally perform

an operation on incompatible types because the compiler will keep track of which types are

held in which registers. The compiler can also reschedule program flow and use alternative

faster instructions; there is no guarantee that the instructions that are generated will match

the instructions implied by the intrinsic. This is especially useful when moving from one

micro-architecture to another.

Programming using NEON intrinsics

The process of writing optimal NEON code directly in the assembler or by using the

intrinsic function interface requires a deep understanding of the data types used as well as

the NEON instructions available.

Possible SIMD operations become more obvious if you look at how an algorithm can be

split into parallel operations.

Operations that are both commutative and associative (add, min, max) are particularly easy from a SIMD point of view.

Example: Add 8 numbers from an array

unsigned int acc=0;

for (i=0; i<8; i+=1)
{
    acc+=array[i]; // a + b + c + d + e + f + g + h
}

could be split into several adds ((a+e) + (b+f))+((c+g) + (d+h))

and recoded in C as:


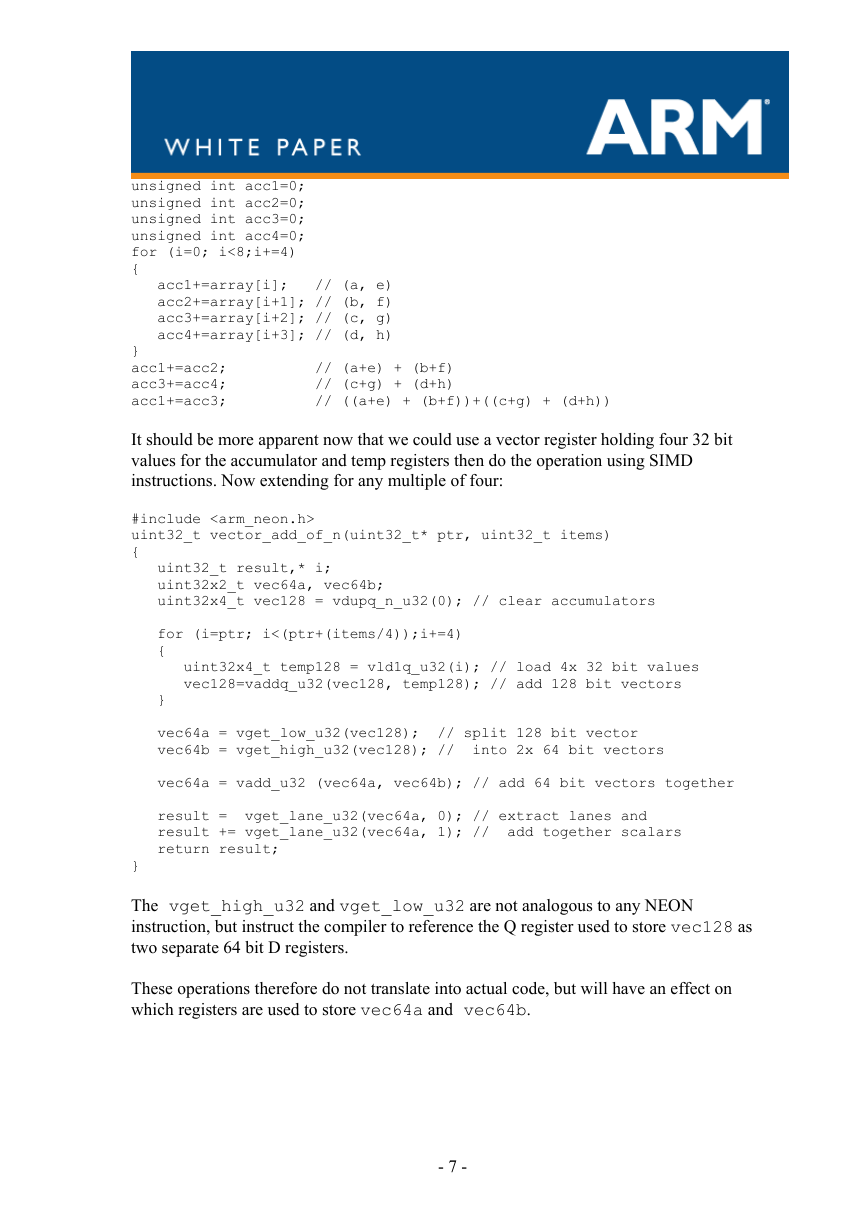

unsigned int acc1=0;
unsigned int acc2=0;
unsigned int acc3=0;
unsigned int acc4=0;

for (i=0; i<8; i+=4)
{
    acc1+=array[i];   // (a, e)
    acc2+=array[i+1]; // (b, f)
    acc3+=array[i+2]; // (c, g)
    acc4+=array[i+3]; // (d, h)
}

acc1+=acc2; // (a+e) + (b+f)
acc3+=acc4; // (c+g) + (d+h)
acc1+=acc3; // ((a+e) + (b+f))+((c+g) + (d+h))

It should be more apparent now that we could use a vector register holding four 32 bit

values for the accumulator and temp registers then do the operation using SIMD

instructions. Now extending for any multiple of four:

#include <stdint.h>
#include <arm_neon.h>

uint32_t vector_add_of_n(uint32_t* ptr, uint32_t items)
{
    uint32_t result, *i;
    uint32x2_t vec64a, vec64b;
    uint32x4_t vec128 = vdupq_n_u32(0);       // clear accumulators

    // items is an element count; round it down to a multiple of four
    for (i = ptr; i < (ptr + (items & ~3u)); i += 4)
    {
        uint32x4_t temp128 = vld1q_u32(i);    // load 4x 32 bit values
        vec128 = vaddq_u32(vec128, temp128);  // add 128 bit vectors
    }

    vec64a = vget_low_u32(vec128);            // split 128 bit vector
    vec64b = vget_high_u32(vec128);           // into 2x 64 bit vectors
    vec64a = vadd_u32(vec64a, vec64b);        // add 64 bit vectors together

    result = vget_lane_u32(vec64a, 0);        // extract lanes and
    result += vget_lane_u32(vec64a, 1);       // add together scalars

    return result;
}
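The function above only handles element counts that are a multiple of four. The following is a minimal sketch of one way to accept any count, by summing the leftover items with scalar code. The name `sum_u32` is hypothetical, and the use of the `__ARM_NEON` macro to detect NEON support is an assumption about the toolchain; when the macro is not defined, a portable four-accumulator loop that mirrors the lane layout is used instead so the example still builds on other targets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#if defined(__ARM_NEON) || defined(__ARM_NEON__)
#include <arm_neon.h>
#endif

/* Sum an array of any length: blocks of four items go through the
   vector path, the remaining zero to three items through scalar code. */
uint32_t sum_u32(const uint32_t *ptr, size_t items)
{
    size_t i = 0;
    uint32_t result;

    assert(ptr != NULL || items == 0);

#if defined(__ARM_NEON) || defined(__ARM_NEON__)
    uint32x4_t acc = vdupq_n_u32(0);               /* clear accumulators */
    for (; i + 4 <= items; i += 4) {
        acc = vaddq_u32(acc, vld1q_u32(ptr + i));  /* load and add 4 items */
    }
    uint32x2_t half = vadd_u32(vget_low_u32(acc), vget_high_u32(acc));
    result = vget_lane_u32(half, 0) + vget_lane_u32(half, 1);
#else
    /* Portable fallback: four scalar accumulators, one per lane. */
    uint32_t acc[4] = {0, 0, 0, 0};
    for (; i + 4 <= items; i += 4) {
        for (int lane = 0; lane < 4; lane++) {
            acc[lane] += ptr[i + lane];
        }
    }
    result = (acc[0] + acc[2]) + (acc[1] + acc[3]);
#endif

    for (; i < items; i++) {                       /* scalar tail */
        result += ptr[i];
    }
    return result;
}
```

Because unsigned addition wraps and is associative, both paths give the same result as a simple left-to-right loop, whatever order the partial sums are combined in.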

The vget_high_u32 and vget_low_u32 intrinsics do not correspond to any NEON
instruction; they instruct the compiler to reference the Q register used to store vec128 as
two separate 64 bit D registers.

These operations therefore do not translate into actual code, but they do affect
which registers are used to store vec64a and vec64b.


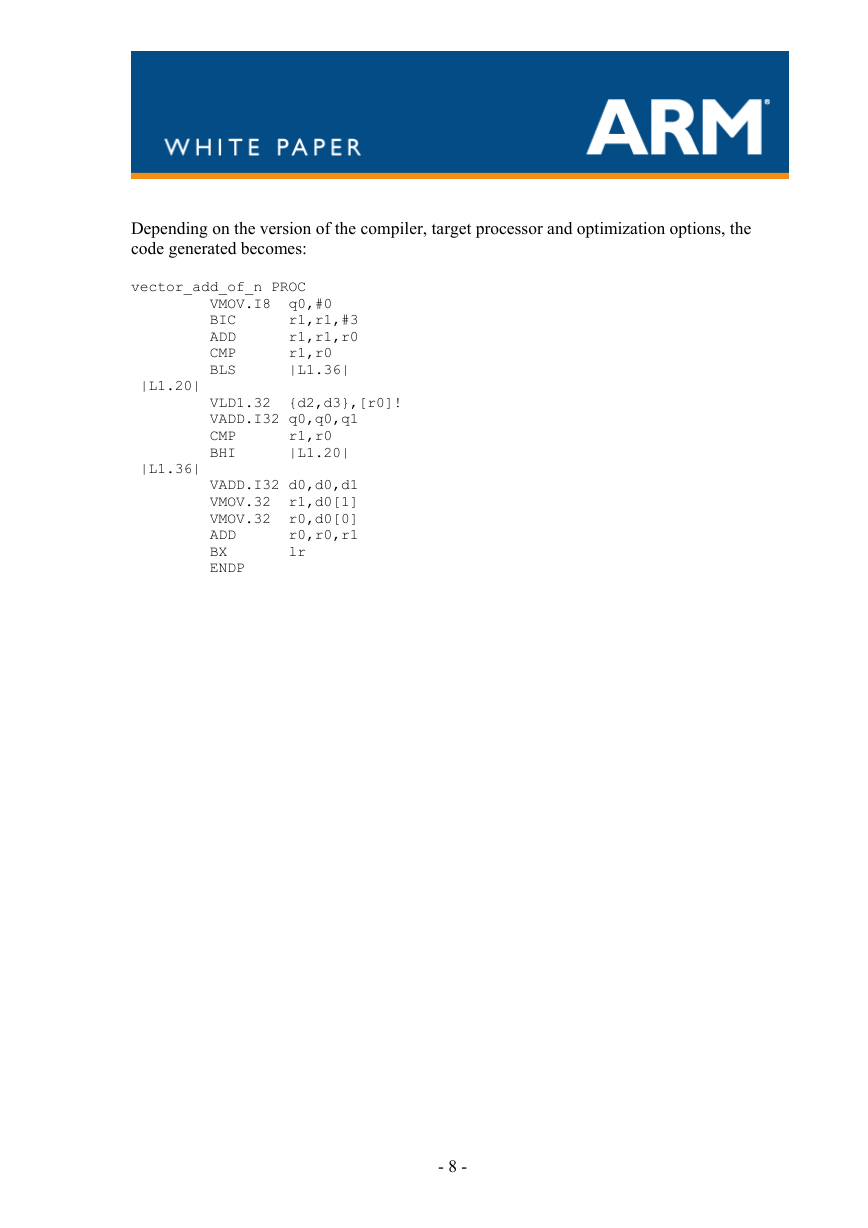

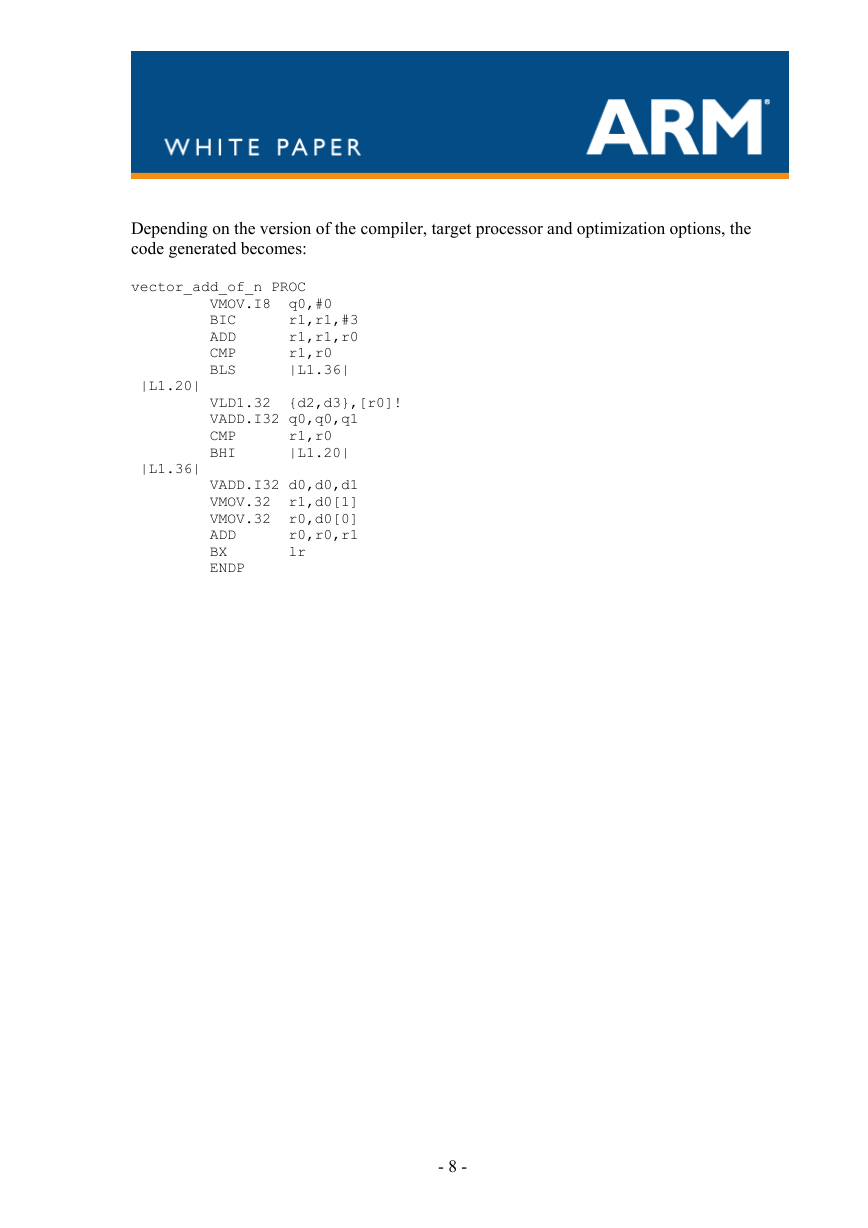

Depending on the version of the compiler, target processor and optimization options, the
generated code is similar to:

vector_add_of_n PROC
        VMOV.I8   q0,#0
        BIC       r1,r1,#3
        ADD       r1,r1,r0
        CMP       r1,r0
        BLS       |L1.36|
|L1.20|
        VLD1.32   {d2,d3},[r0]!
        VADD.I32  q0,q0,q1
        CMP       r1,r0
        BHI       |L1.20|
|L1.36|
        VADD.I32  d0,d0,d1
        VMOV.32   r1,d0[1]
        VMOV.32   r0,d0[0]
        ADD       r0,r0,r1
        BX        lr
        ENDP
