Cover

Title Page

Copyright Page

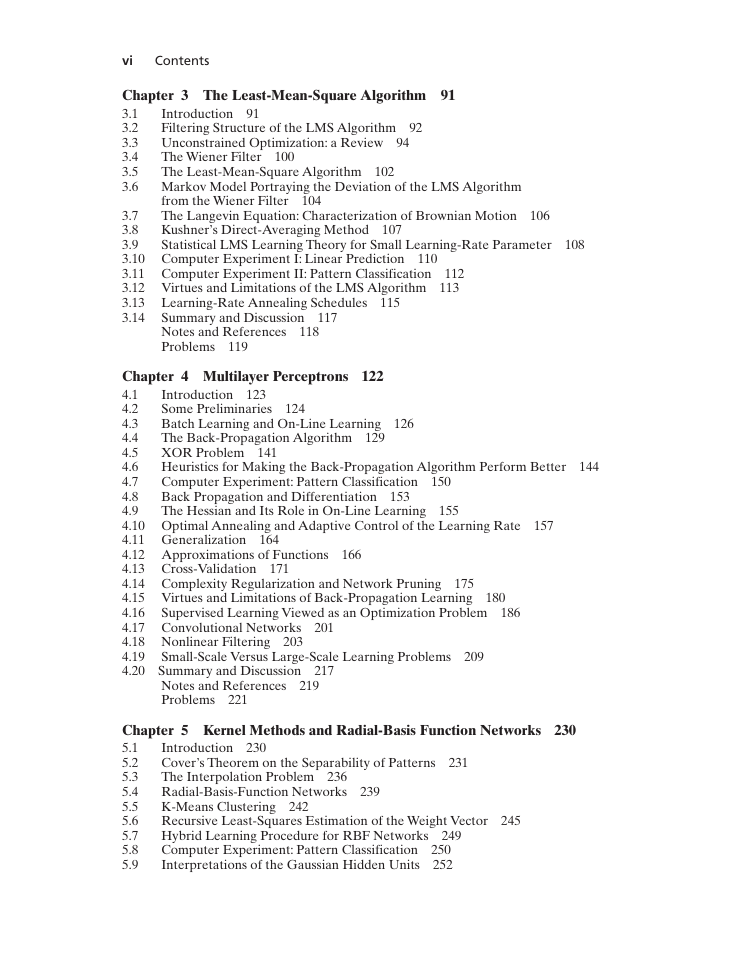

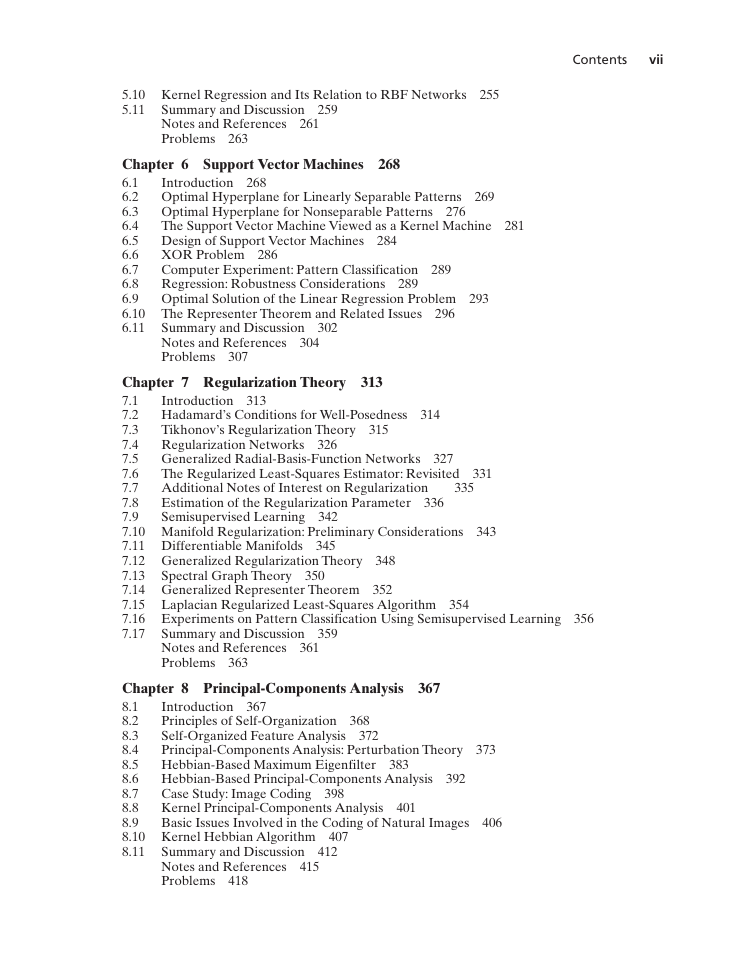

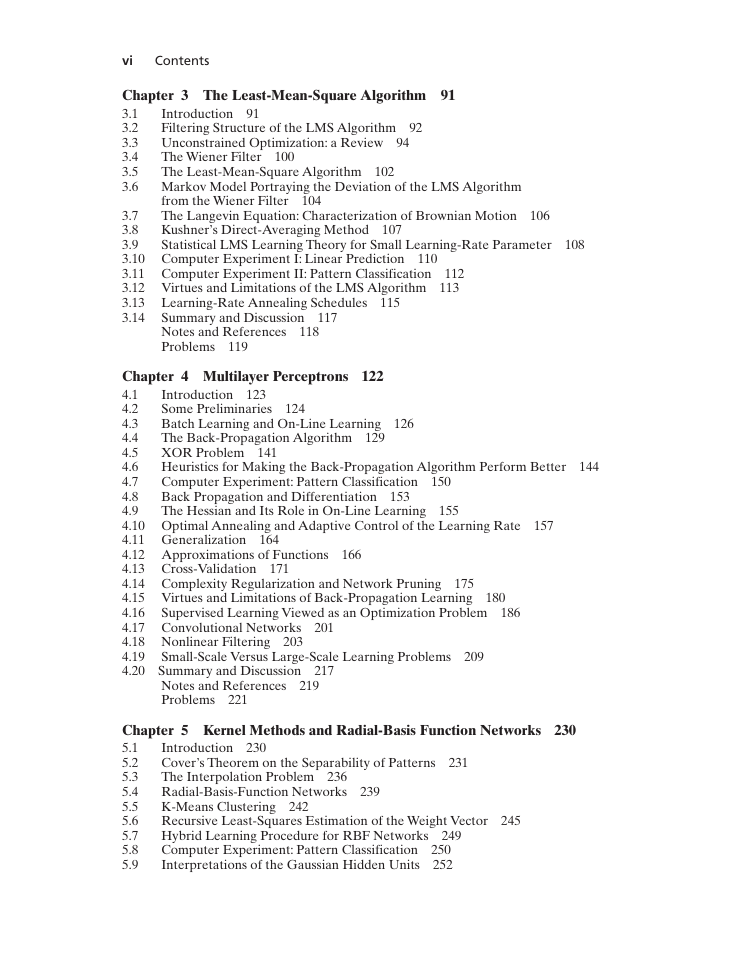

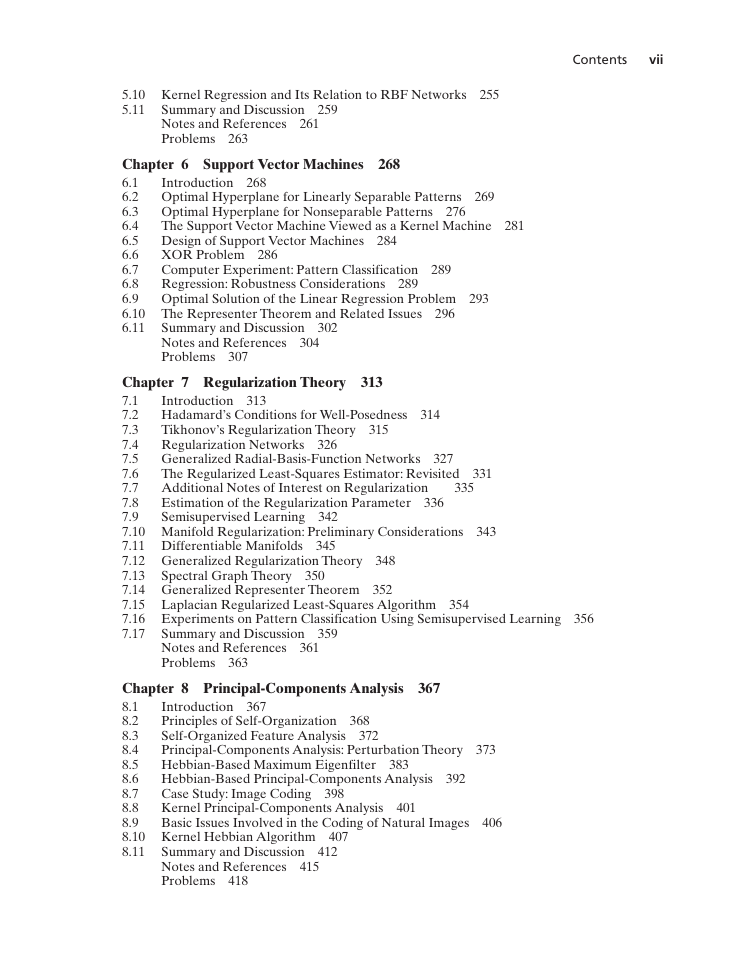

Contents

Preface

Acknowledgments

Glossary

Introduction

1. What is a Neural Network?

2. The Human Brain

3. Models of a Neuron

4. Neural Networks Viewed As Directed Graphs

5. Feedback

6. Network Architectures

7. Knowledge Representation

8. Learning Processes

9. Learning Tasks

10. Concluding Remarks

Notes and References

Chapter 1 Rosenblatt's Perceptron

1.1 Introduction

1.2 Perceptron

1.3 The Perceptron Convergence Theorem

1.4 Relation Between the Perceptron and Bayes Classifier for a Gaussian Environment

1.5 Computer Experiment: Pattern Classification

1.6 The Batch Perceptron Algorithm

1.7 Summary and Discussion

Notes and References

Problems

Chapter 2 Model Building through Regression

2.1 Introduction

2.2 Linear Regression Model: Preliminary Considerations

2.3 Maximum a Posteriori Estimation of the Parameter Vector

2.4 Relationship Between Regularized Least-Squares Estimation and MAP Estimation

2.5 Computer Experiment: Pattern Classification

2.6 The Minimum-Description-Length Principle

2.7 Finite Sample-Size Considerations

2.8 The Instrumental-Variables Method

2.9 Summary and Discussion

Notes and References

Problems

Chapter 3 The Least-Mean-Square Algorithm

3.1 Introduction

3.2 Filtering Structure of the LMS Algorithm

3.3 Unconstrained Optimization: A Review

3.4 The Wiener Filter

3.5 The Least-Mean-Square Algorithm

3.6 Markov Model Portraying the Deviation of the LMS Algorithm from the Wiener Filter

3.7 The Langevin Equation: Characterization of Brownian Motion

3.8 Kushner's Direct-Averaging Method

3.9 Statistical LMS Learning Theory for Small Learning-Rate Parameter

3.10 Computer Experiment I: Linear Prediction

3.11 Computer Experiment II: Pattern Classification

3.12 Virtues and Limitations of the LMS Algorithm

3.13 Learning-Rate Annealing Schedules

3.14 Summary and Discussion

Notes and References

Problems

Chapter 4 Multilayer Perceptrons

4.1 Introduction

4.2 Some Preliminaries

4.3 Batch Learning and On-Line Learning

4.4 The Back-Propagation Algorithm

4.5 XOR Problem

4.6 Heuristics for Making the Back-Propagation Algorithm Perform Better

4.7 Computer Experiment: Pattern Classification

4.8 Back Propagation and Differentiation

4.9 The Hessian and Its Role in On-Line Learning

4.10 Optimal Annealing and Adaptive Control of the Learning Rate

4.11 Generalization

4.12 Approximations of Functions

4.13 Cross-Validation

4.14 Complexity Regularization and Network Pruning

4.15 Virtues and Limitations of Back-Propagation Learning

4.16 Supervised Learning Viewed as an Optimization Problem

4.17 Convolutional Networks

4.18 Nonlinear Filtering

4.19 Small-Scale Versus Large-Scale Learning Problems

4.20 Summary and Discussion

Notes and References

Problems

Chapter 5 Kernel Methods and Radial-Basis Function Networks

5.1 Introduction

5.2 Cover's Theorem on the Separability of Patterns

5.3 The Interpolation Problem

5.4 Radial-Basis-Function Networks

5.5 K-Means Clustering

5.6 Recursive Least-Squares Estimation of the Weight Vector

5.7 Hybrid Learning Procedure for RBF Networks

5.8 Computer Experiment: Pattern Classification

5.9 Interpretations of the Gaussian Hidden Units

5.10 Kernel Regression and Its Relation to RBF Networks

5.11 Summary and Discussion

Notes and References

Problems

Chapter 6 Support Vector Machines

6.1 Introduction

6.2 Optimal Hyperplane for Linearly Separable Patterns

6.3 Optimal Hyperplane for Nonseparable Patterns

6.4 The Support Vector Machine Viewed as a Kernel Machine

6.5 Design of Support Vector Machines

6.6 XOR Problem

6.7 Computer Experiment: Pattern Classification

6.8 Regression: Robustness Considerations

6.9 Optimal Solution of the Linear Regression Problem

6.10 The Representer Theorem and Related Issues

6.11 Summary and Discussion

Notes and References

Problems

Chapter 7 Regularization Theory

7.1 Introduction

7.2 Hadamard's Conditions for Well-Posedness

7.3 Tikhonov's Regularization Theory

7.4 Regularization Networks

7.5 Generalized Radial-Basis-Function Networks

7.6 The Regularized Least-Squares Estimator: Revisited

7.7 Additional Notes of Interest on Regularization

7.8 Estimation of the Regularization Parameter

7.9 Semisupervised Learning

7.10 Manifold Regularization: Preliminary Considerations

7.11 Differentiable Manifolds

7.12 Generalized Regularization Theory

7.13 Spectral Graph Theory

7.14 Generalized Representer Theorem

7.15 Laplacian Regularized Least-Squares Algorithm

7.16 Experiments on Pattern Classification Using Semisupervised Learning

7.17 Summary and Discussion

Notes and References

Problems

Chapter 8 Principal-Components Analysis

8.1 Introduction

8.2 Principles of Self-Organization

8.3 Self-Organized Feature Analysis

8.4 Principal-Components Analysis: Perturbation Theory

8.5 Hebbian-Based Maximum Eigenfilter

8.6 Hebbian-Based Principal-Components Analysis

8.7 Case Study: Image Coding

8.8 Kernel Principal-Components Analysis

8.9 Basic Issues Involved in the Coding of Natural Images

8.10 Kernel Hebbian Algorithm

8.11 Summary and Discussion

Notes and References

Problems

Chapter 9 Self-Organizing Maps

9.1 Introduction

9.2 Two Basic Feature-Mapping Models

9.3 Self-Organizing Map

9.4 Properties of the Feature Map

9.5 Computer Experiments I: Disentangling Lattice Dynamics Using SOM

9.6 Contextual Maps

9.7 Hierarchical Vector Quantization

9.8 Kernel Self-Organizing Map

9.9 Computer Experiment II: Disentangling Lattice Dynamics Using Kernel SOM

9.10 Relationship Between Kernel SOM and Kullback–Leibler Divergence

9.11 Summary and Discussion

Notes and References

Problems

Chapter 10 Information-Theoretic Learning Models

10.1 Introduction

10.2 Entropy

10.3 Maximum-Entropy Principle

10.4 Mutual Information

10.5 Kullback–Leibler Divergence

10.6 Copulas

10.7 Mutual Information as an Objective Function to be Optimized

10.8 Maximum Mutual Information Principle

10.9 Infomax and Redundancy Reduction

10.10 Spatially Coherent Features

10.11 Spatially Incoherent Features

10.12 Independent-Components Analysis

10.13 Sparse Coding of Natural Images and Comparison with ICA Coding

10.14 Natural-Gradient Learning for Independent-Components Analysis

10.15 Maximum-Likelihood Estimation for Independent-Components Analysis

10.16 Maximum-Entropy Learning for Blind Source Separation

10.17 Maximization of Negentropy for Independent-Components Analysis

10.18 Coherent Independent-Components Analysis

10.19 Rate Distortion Theory and Information Bottleneck

10.20 Optimal Manifold Representation of Data

10.21 Computer Experiment: Pattern Classification

10.22 Summary and Discussion

Notes and References

Problems

Chapter 11 Stochastic Methods Rooted in Statistical Mechanics

11.1 Introduction

11.2 Statistical Mechanics

11.3 Markov Chains

11.4 Metropolis Algorithm

11.5 Simulated Annealing

11.6 Gibbs Sampling

11.7 Boltzmann Machine

11.8 Logistic Belief Nets

11.9 Deep Belief Nets

11.10 Deterministic Annealing

11.11 Analogy of Deterministic Annealing with Expectation-Maximization Algorithm

11.12 Summary and Discussion

Notes and References

Problems

Chapter 12 Dynamic Programming

12.1 Introduction

12.2 Markov Decision Process

12.3 Bellman's Optimality Criterion

12.4 Policy Iteration

12.5 Value Iteration

12.6 Approximate Dynamic Programming: Direct Methods

12.7 Temporal-Difference Learning

12.8 Q-Learning

12.9 Approximate Dynamic Programming: Indirect Methods

12.10 Least-Squares Policy Evaluation

12.11 Approximate Policy Iteration

12.12 Summary and Discussion

Notes and References

Problems

Chapter 13 Neurodynamics

13.1 Introduction

13.2 Dynamic Systems

13.3 Stability of Equilibrium States

13.4 Attractors

13.5 Neurodynamic Models

13.6 Manipulation of Attractors as a Recurrent Network Paradigm

13.7 Hopfield Model

13.8 The Cohen–Grossberg Theorem

13.9 Brain-State-In-A-Box Model

13.10 Strange Attractors and Chaos

13.11 Dynamic Reconstruction of a Chaotic Process

13.12 Summary and Discussion

Notes and References

Problems

Chapter 14 Bayesian Filtering for State Estimation of Dynamic Systems

14.1 Introduction

14.2 State-Space Models

14.3 Kalman Filters

14.4 The Divergence-Phenomenon and Square-Root Filtering

14.5 The Extended Kalman Filter

14.6 The Bayesian Filter

14.7 Cubature Kalman Filter: Building on the Kalman Filter

14.8 Particle Filters

14.9 Computer Experiment: Comparative Evaluation of Extended Kalman and Particle Filters

14.10 Kalman Filtering in Modeling of Brain Functions

14.11 Summary and Discussion

Notes and References

Problems

Chapter 15 Dynamically Driven Recurrent Networks

15.1 Introduction

15.2 Recurrent Network Architectures

15.3 Universal Approximation Theorem

15.4 Controllability and Observability

15.5 Computational Power of Recurrent Networks

15.6 Learning Algorithms

15.7 Back Propagation Through Time

15.8 Real-Time Recurrent Learning

15.9 Vanishing Gradients in Recurrent Networks

15.10 Supervised Training Framework for Recurrent Networks Using Nonlinear Sequential State Estimators

15.11 Computer Experiment: Dynamic Reconstruction of Mackey–Glass Attractor

15.12 Adaptivity Considerations

15.13 Case Study: Model Reference Applied to Neurocontrol

15.14 Summary and Discussion

Notes and References

Problems

Bibliography

Index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

Z
