Cover

Artificial Intelligence in the Age of Neural Networks and Brain Computing

Copyright

List of Contributors

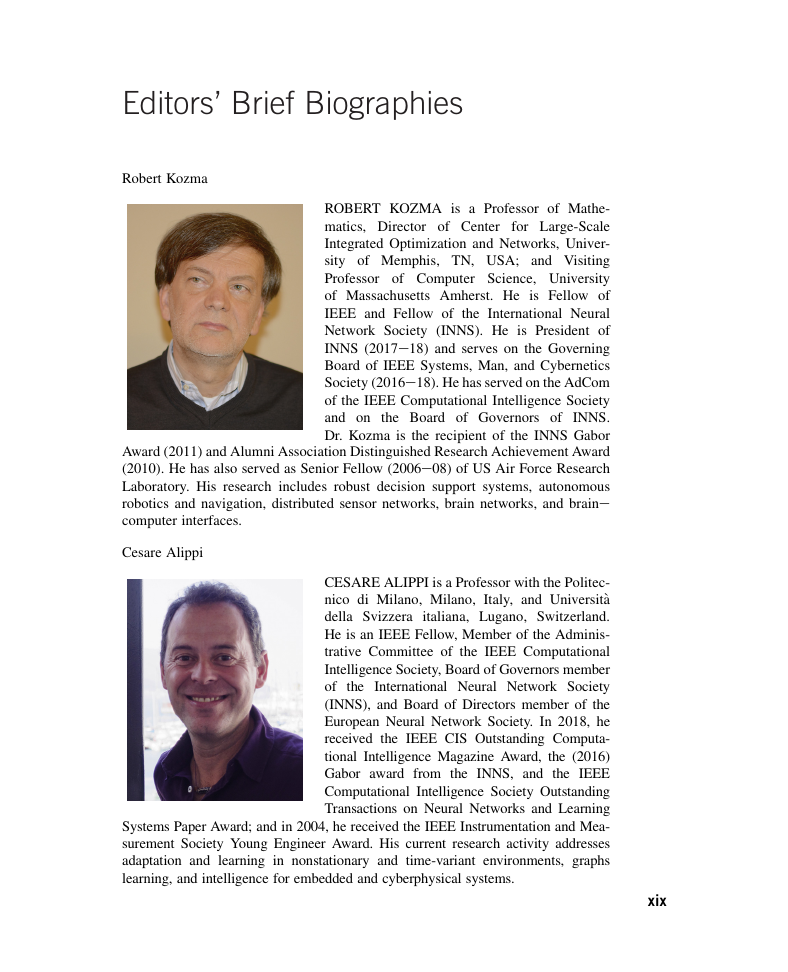

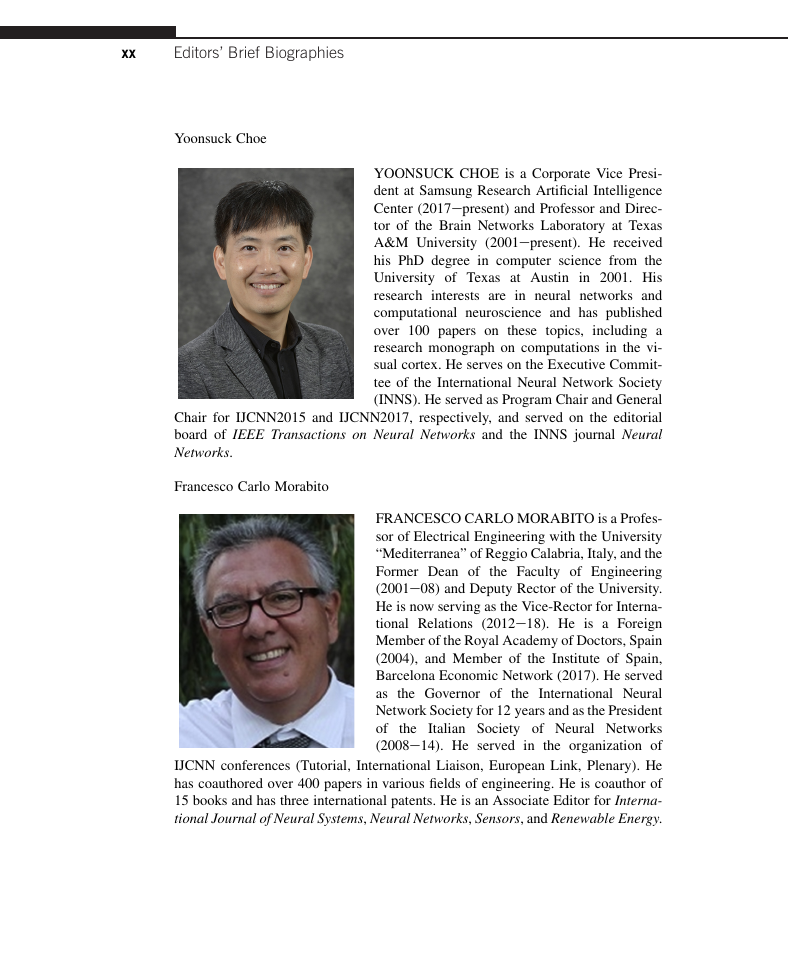

Editors' Brief Biographies

Introduction

1 - Nature's Learning Rule: The Hebbian-LMS Algorithm

1. Introduction

2. ADALINE and the LMS Algorithm, From the 1950s

3. Unsupervised Learning With Adaline, From the 1960s

4. Robert Lucky's Adaptive Equalization, From the 1960s

5. Bootstrap Learning With a Sigmoidal Neuron

6. Bootstrap Learning With a More “Biologically Correct” Sigmoidal Neuron

6.1 Training a Network of Hebbian-LMS Neurons

7. Other Clustering Algorithms

7.1 K-Means Clustering

7.2 Expectation-Maximization Algorithm

7.3 Density-Based Spatial Clustering of Applications With Noise Algorithm

7.4 Comparison Between Clustering Algorithms

8. A General Hebbian-LMS Algorithm

9. The Synapse

10. Postulates of Synaptic Plasticity

11. The Postulates and the Hebbian-LMS Algorithm

12. Nature's Hebbian-LMS Algorithm

13. Conclusion

Appendix: Trainable Neural Network Incorporating Hebbian-LMS Learning

ACKNOWLEDGMENTS

References

2 - A Half Century of Progress Toward a Unified Neural Theory of Mind and Brain With Applications to Autonomous Adaptive Agents ...

1. Towards a Unified Theory of Mind and Brain

2. A Theoretical Method for Linking Brain to Mind: The Method of Minimal Anatomies

3. Revolutionary Brain Paradigms: Complementary Computing and Laminar Computing

4. The What and Where Cortical Streams Are Complementary

5. Adaptive Resonance Theory

6. Vector Associative Maps for Spatial Representation and Action

7. Homologous Laminar Cortical Circuits for All Biological Intelligence: Beyond Bayes

8. Why a Unified Theory Is Possible: Equations, Modules, and Architectures

9. All Conscious States Are Resonant States

10. The Varieties of Brain Resonances and the Conscious Experiences That They Support

11. Why Does Resonance Trigger Consciousness?

12. Towards Autonomous Adaptive Intelligent Agents and Clinical Therapies in Society

References

3 - Third Gen AI as Human Experience Based Expert Systems

1. Introduction

2. Third Gen AI

2.1 Maxwell–Boltzmann Homeostasis [8]

2.2 The Inverse Is Convolution Neural Networks

2.3 Fuzzy Membership Function (FMF and Data Basis)

3. MFE Gradient Descent

3.1 Unsupervised Learning Rule

4. Conclusion

ACKNOWLEDGMENT

References

Further Reading

4 - The Brain-Mind-Computer Trichotomy: Hermeneutic Approach

1. Dichotomies

1.1 The Brain-Mind Problem

1.2 The Brain-Computer Analogy/Disanalogy

1.3 The Computational Theory of Mind

2. Hermeneutics

2.1 Second-Order Cybernetics

2.2 Hermeneutics of the Brain

2.3 The Brain as a Hermeneutic Device

2.4 Neural Hermeneutics

3. Schizophrenia: A Broken Hermeneutic Cycle

3.1 Hermeneutics, Cognitive Science, Schizophrenia

4. Toward the Algorithms of Neural/Mental Hermeneutics

4.1 Understanding Situations: Needs Hermeneutic Interpretation

ACKNOWLEDGMENTS

References

Further Reading

5 - From Synapses to Ephapsis: Embodied Cognition and Wearable Personal Assistants

1. Neural Networks and Neural Fields

2. Ephapsis

3. Embodied Cognition

4. Wearable Personal Assistants

References

6 - Evolving and Spiking Connectionist Systems for Brain-Inspired Artificial Intelligence

1. From Aristotle's Logic to Artificial Neural Networks and Hybrid Systems

1.1 Aristotle's Logic and Rule-Based Systems for Knowledge Representation and Reasoning

1.2 Fuzzy Logic and Fuzzy Rule–Based Systems

1.3 Classical Artificial Neural Networks (ANN)

1.4 Integrating ANN With Rule-Based Systems: Hybrid Connectionist Systems

1.5 Evolutionary Computation (EC): Learning Parameter Values of ANN Through Evolution of Individual Models as Part of Populatio ...

2. Evolving Connectionist Systems (ECOS)

2.1 Principles of ECOS

2.2 ECOS Realizations and AI Applications

3. Spiking Neural Networks (SNN) as Brain-Inspired ANN

3.1 Main Principles, Methods, and Examples of SNN and Evolving SNN (eSNN)

3.2 Applications and Implementations of SNN for AI

4. Brain-Like AI Systems Based on SNN. NeuCube. Deep Learning Algorithms

4.1 Brain-Like AI Systems. NeuCube

4.2 Deep Learning and Deep Knowledge Representation in NeuCube SNN Models: Methods and AI Applications [6]

4.2.1 Supervised Learning for Classification of Learned Patterns in a SNN Model

4.2.2 Semisupervised Learning

5. Conclusion

ACKNOWLEDGMENT

References

7 - Pitfalls and Opportunities in the Development and Evaluation of Artificial Intelligence Systems

1. Introduction

2. AI Development

2.1 Our Data Are Crap

2.2 Our Algorithm Is Crap

3. AI Evaluation

3.1 Use of Data

3.2 Performance Measures

3.3 Decision Thresholds

4. Variability and Bias in Our Performance Estimates

5. Conclusion

ACKNOWLEDGMENT

References

8 - The New AI: Basic Concepts, and Urgent Risks and Opportunities in the Internet of Things

1. Introduction and Overview

1.1 Deep Learning and Neural Networks Before 2009–11

1.2 The Deep Learning Cultural Revolution and New Opportunities

1.3 Need and Opportunity for a Deep Learning Revolution in Neuroscience

1.4 Risks of Human Extinction, Need for New Paradigm for Internet of Things

2. Brief History and Foundations of the Deep Learning Revolution

2.1 Overview of the Current Landscape

2.2 How the Deep Revolution Actually Happened

2.3 Backpropagation: The Foundation Which Made This Possible

2.4 CoNNs, >3 Layers, and Autoencoders: The Three Main Tools of Today's Deep Learning

3. From RNNs to Mouse-Level Computational Intelligence: Next Big Things and Beyond

3.1 Two Types of Recurrent Neural Network

3.2 Deep Versus Broad: A Few Practical Issues

3.3 Roadmap for Mouse-Level Computational Intelligence (MLCI)

3.4 Emerging New Hardware to Enhance Capability by Orders of Magnitude

4. Need for New Directions in Understanding Brain and Mind

4.1 Toward a Cultural Revolution in Hard Neuroscience

4.2 From Mouse Brain to Human Mind: Personal Views of the Larger Picture

5. Information Technology (IT) for Human Survival: An Urgent Unmet Challenge

5.1 Examples of the Threat from Artificial Stupidity

5.2 Cyber and EMP Threats to the Power Grid

5.3 Threats From Underemployment of Humans

5.4 Preliminary Vision of the Overall Problem, and of the Way out

References

9 - Theory of the Brain and Mind: Visions and History

1. Early History

2. Emergence of Some Neural Network Principles

3. Neural Networks Enter Mainstream Science

4. Is Computational Neuroscience Separate From Neural Network Theory?

5. Discussion

References

10 - Computers Versus Brains: Game Is Over or More to Come?

1. Introduction

2. AI Approaches

3. Metastability in Cognition and in Brain Dynamics

4. Multistability in Physics and Biology

5. Pragmatic Implementation of Complementarity for New AI

ACKNOWLEDGMENTS

References

11 - Deep Learning Approaches to Electrophysiological Multivariate Time-Series Analysis

1. Introduction

2. The Neural Network Approach

3. Deep Architectures and Learning

3.1 Deep Belief Networks

3.2 Stacked Autoencoders

3.3 Convolutional Neural Networks

4. Electrophysiological Time-Series

4.1 Multichannel Neurophysiological Measurements of the Activity of the Brain

4.2 Electroencephalography (EEG)

4.3 High-Density Electroencephalography

4.4 Magnetoencephalography

5. Deep Learning Models for EEG Signal Processing

5.1 Stacked Autoencoders

5.2 Summary of the Proposed Method for EEG Classification

5.3 Deep Convolutional Neural Networks

5.4 Other DL Approaches

6. Future Directions of Research

6.1 DL Interpretability

6.2 Advanced Learning Approaches in DL

6.3 Robustness of DL Networks

7. Conclusions

References

Further Reading

12 - Computational Intelligence in the Time of Cyber-Physical Systems and the Internet of Things

1. Introduction

2. System Architecture

3. Energy Harvesting and Management

3.1 Energy Harvesting

3.2 Energy Management and Research Challenges

4. Learning in Nonstationary Environments

4.1 Passive Adaptation Modality

4.2 Active Adaptation Modality

4.3 Research Challenges

5. Model-Free Fault Diagnosis Systems

5.1 Model-Free Fault Diagnosis Systems

5.2 Research Challenges

6. Cybersecurity

6.1 How Can CPS and IoT Be Protected From Cyberattacks?

6.2 Case Study: Darknet Analysis to Capture Malicious Cyberattack Behaviors

7. Conclusions

ACKNOWLEDGMENTS

References

13 - Multiview Learning in Biomedical Applications

1. Introduction

2. Multiview Learning

2.1 Integration Stage

2.2 Type of Data

2.3 Types of Analysis

3. Multiview Learning in Bioinformatics

3.1 Patient Subtyping

3.2 Drug Repositioning

4. Multiview Learning in Neuroinformatics

4.1 Automated Diagnosis Support Tools for Neurodegenerative Disorders

4.2 Multimodal Brain Parcellation

5. Deep Multimodal Feature Learning

5.1 Deep Learning Application to Predict Patient's Survival

5.2 Multimodal Neuroimaging Feature Learning With Deep Learning

6. Conclusions

References

14 - Meaning Versus Information, Prediction Versus Memory, and Question Versus Answer

1. Introduction

2. Meaning Versus Information

3. Prediction Versus Memory

4. Question Versus Answer

5. Discussion

6. Conclusion

ACKNOWLEDGMENTS

References

15 - Evolving Deep Neural Networks

1. Introduction

2. Background and Related Work

3. Evolution of Deep Learning Architectures

3.1 Extending NEAT to Deep Networks

3.2 Cooperative Coevolution of Modules and Blueprints

3.3 Evolving DNNs in the CIFAR-10 Benchmark

4. Evolution of LSTM Architectures

4.1 Extending CoDeepNEAT to LSTMs

4.2 Evolving DNNs in the Language Modeling Benchmark

5. Application Case Study: Image Captioning for the Blind

5.1 Evolving DNNs for Image Captioning

5.2 Building the Application

5.3 Image Captioning Results

6. Discussion and Future Work

7. Conclusion

References

Index

A

B

C

D

E

F

G

H

I

J

K

L

M

N

O

P

Q

R

S

T

U

V

W

X

Back Cover
