PPLS/D: Parallel Pareto Local Search based on

Decomposition

Jialong Shi, Qingfu Zhang, and Jianyong Sun

1

Abstract—Pareto Local Search (PLS)

is a basic building

block in many metaheuristics for Multiobjective Combinatorial

Optimization Problem (MCOP). In this paper, an enhanced

PLS variant called Parallel Pareto Local Search based on

Decomposition (PPLS/D) is proposed. PPLS/D improves the effi-

ciency of PLS using the techniques of parallel computation and

problem decomposition. It decomposes the original search space

into L subregions and executes L parallel processes searching

in these subregions simultaneously. Inside each subregion, the

PPLS/D process is guided by a unique scalar objective function.

PPLS/D differs from the well-known Two Phase Pareto Local

Search (2PPLS) in that it uses the scalar objective function

to guide every move of the PLS procedure in a fine-grained

manner. In the experimental studies, PPLS/D is compared against

the basic PLS and a recently proposed PLS variant on the

multiobjective Unconstrained Binary Quadratic Programming

problems (mUBQPs) and the multiobjective Traveling Salesman

Problems (mTSPs) with at most four objectives. The experimental

results show that, no matter whether the initial solutions are

randomly generated or generated by heuristic methods, PPLS/D

always performs significantly better than the other two PLS

variants.

I. INTRODUCTION

A Multiobjective Combinatorial Optimization Problem

(MCOP) is defined as follows:

maximize / minimize

subject to

F (x) = (f1(x), . . . , fm(x))

x ∈ S

(1)

where the solution space S is a finite set and F : S → Rm

is the objective vector function which contains m objectives.

In the following discussions we focus on the maximization

MCOPs. There exists a trade-off between different objectives.

Usually, no single solution can optimize all the objectives. The

following definitions are used in multiobjective optimization:

• Definition 1 A vector u = (u1, . . . , um) is said to

dominate a vector v = (v1, . . . , vm), if and only if uk ≥

vk, ∀k ∈ {1, . . . , m} ∧ ∃k ∈ {1, . . . , m} : uk > vk,

denoted as u ≻ v.

• Definition 2 If u is not dominated by v and v is not

dominated by u, we say that u and v are non-dominated

to each other, denoted as u ⊀ v or v ⊀ u. A solution set

A is called a non-dominated set if and only if for any

x, y ∈ A, F (x) ⊀ F (y).

Jialong Shi is with the School of Mathematics and Statistics, Xi’an Jiaotong

University, Xi’an, China (e-mail: jialong.shi@xjtu.edu.cn).

Qingfu Zhang is with the Department of Computer Science, City University

of Hong Kong, Hong Kong SAR (e-mail: qingfu.zhang@cityu.edu.hk).

Jianyong Sun is with the School of Mathematics and Statistics, Xi’an

Jiaotong University, Xi’an, China (e-mail: jy.sun@xjtu.edu.cn).

).

• Definition 3 A feasible solution x

∗ ∈ S is called a Pareto

optimal solution, if and only if @y ∈ S such that F (y) ≻

∗

F (x

• Definition 4 For problem (1), the set of all the Pareto

optimal solutions is called the Pareto Set (PS), denoted

as PS = {x ∈ S|@y ∈ S, F (y) ≻ F (x)} and the Pareto

front (PF) is defined as PF = {F (x)|x ∈ PS}.

The PS represents the best trade-off solutions and the PF rep-

resents their objective values. Hence the PS and PF constitute

highly valuable information to the decision maker. The goal

of a multiobjective metaheuristic is to approximate the PS and

PF.

Many state-of-the-art multiobjective metaheuristics use

Pareto Local Search (PLS), which was first proposed in [1],

as a basic building block [2], [4], [14]. PLS can be applied

at the very beginning of a metaheuristic to obtain a set of

high quality solutions as the starting points of the following

optimization procedure. PLS also can be applied in the

middle or final stage of a metaheuristic to further improve a

set of high quality solutions. The main drawback of PLS is

that it requires a long time to reach a good approximation

of the PF. To overcome this drawback, several sequential

speed-up strategies have been proposed [2]–[4].

In this

paper, we propose to use parallel computation and problem

decomposition to speed up PLS. The proposed parallel

PLS variant is called Parallel PLS based on Decomposition

(PPLS/D), which has the following features:

• PPLS/D decomposes the original search space into L

subregions by defining L weight vectors in the objective

space.

• PPLS/D executes L processes simultaneously to exploit

the computational resource of multi-core computers. Each

process maintains a unique archive of solutions and

searches in its own subregion.

• During the search, each PPLS/D process is guided by a u-

nique scalar objective function (generated by Tchebycheff

approach) in a fine-grained manner. PPLS/D is different

from the well-known Two-Phase Pareto Local Search

(2PPLS) [5] which combines a scalar objective heuristic

phase and a PLS phase in a coarse-grained manner. In

PPLS/D, the scalar function influences every move of the

algorithm during the entire search process.

In the experimental studies,

the multiobjective Uncon-

strained Binary Quadratic Programming problem (mUBQP)

and the multiobjective Traveling Salesman Problem (mTSP)

with m = 2, 3 and 4 are selected as the test suites. In addi-

tion, two application scenarios are considered: starting from

�

randomly generated solutions and starting from high quality

solutions. In both scenarios, the performance of PPLS/D with

different process numbers is compared with that of the basic

PLS and a speed-up PLS variant proposed by [2]. The results

show that PPLS/D is significantly faster than the other two PLS

variants on both problems and both scenarios. The influence

of the process number in PPLS/D also is investigated.

Some preliminary work of this paper has been published in

[6]. The work in this paper differs from the work in [6] in the

following aspects.

• The PLS speed-up strategies proposed in [6] can only

handle bi-objective MCOPs. In this paper, the proposed

PPLS/D can handle more than two objectives by using the

region decomposition method proposed by Liu et al. [7].

• In [6], the scalar objective functions that guide the search

are generated by the weighted sum approach, while in

this paper the scalar objective functions are generated

by the Tchebycheff approach. The advantages of using

the Tchebycheff scalar objective function are discussed

in Section III-A.

• In [6], several speed-up strategies are proposed for PLS,

but the details of the strategies are not given. In this

paper, a formal algorithm, PPLS/D, is proposed based

on the strategies proposed in [6] and the aforementioned

improvements. The detailed pseudo-code of PPLS/D is

given in this paper.

• In the experimental studies of [6], the test problem is

the bi-objective mUBQP (maximization problem) and the

algorithms are started from randomly generated solutions.

In this paper, the mUBQP and the mTSP (minimization

problem) with two, three and four objectives are used

as the test suites, and the algorithms are started from

randomly generated solutions and high quality solutions.

• In [6], there is no investigation about how the algorithm

performance changes with different process numbers,

while in this paper we analysis the influence of the

process number.

The rest of this paper is organized as follows. Section II

introduces the recent works on improving the basic PLS

algorithm. In Section III the details of the proposed PPLS/D

are presented. In Section IV the experimental studies have

been conducted to show that PPLS/D can significantly speed

up the basic PLS. Section V concludes this paper.

II. RELATED WORKS

PLS is a problem-independent search method to approxi-

mate the PF. It is an extension of the single objective local

search on MCOPs. Algorithm 1 shows the procedure of the

′, A) means adding

basic PLS [1]. In Algorithm 1, Update(x

′ to the archive A and deleting the solutions in A that are

x

′.

dominated by x

The main drawback of the basic version of PLS (Algo-

rithm 1) is that it needs a large amount of time to find a

good approximation of the PF. Recently, several sequential

PLS variants have been proposed to speed up the basic PLS.

Inja et al. [3] proposed the Queued PLS (QPLS). In QPLS

a queue of high-quality unsuccessful candidate solutions are

2

Algorithm 1 Pareto Local Search

1: input: An initial set of non-dominated solutions A0

2: ∀x ∈ A0, set Explored(x) ← FALSE

3: A ← A0

4: while A0 ̸= ∅ do

x0 ← a randomly selected solution from A0

for each x

′ in the neighborhood of x0 do

if x

′ is not dominated by any solution in A then

A ← Update(x

′

Explored(x

′

) ← FALSE

, A)

5:

6:

7:

8:

9:

10:

11:

12:

end if

end for

Explored(x0) ← TRUE

13: A0 ← {x ∈ A | Explored(x) == FALSE}

14: end while

15: return A

maintained and their neighborhoods are explored. In the work

of Liefooghe et al. [4], the PLS procedure is partitioned into

three algorithmic components. For each algorithmic compo-

nent, several alternatives are proposed. By combining the

alternatives of different algorithmic components, a number of

PLS variants are proposed and tested in [4]. Dubois-Lacoste et

al. [2] improved the basic PLS in a way similar to [4], but the

differences are that Dubois-Lacoste et al. decomposed the PLS

into four algorithmic components and the PLS variants they

proposed can switch strategies during a single run. In addition,

some archive size limiting strategies are proposed in [2] for

the bi-objective optimization case. Shi et al. [6] investigated

several parallel strategies of PLS and tested their performance

on bi-objective problems.

Since Zhang and Li [8] proposed the Multiobjective Evo-

lutionary Algorithm based on Decomposition (MOEA/D), the

decomposition based framework has attracted some research

effort

in evolutionary multiobjective optimization commu-

nity [9]. Liu et al. [7] proposed MOEA/D-M2M which

decomposes the original search space into a number of

subregions by calculating the acute angles to a set of pre-

defined weight vectors. For each subregion, MOEA/D-M2M

maintains a unique sub-population and all sub-populations

evolve in a collaborative way. Recently, several variants of

MOEA/D-M2M [10], [11] have been proposed in which the

subregions are adaptively adjusted during the evolutionary

process. In a parallel NSGA-II variant proposed by Branke

et al. [12], the search space also is decomposed into several

subregions. However in [12] the decomposition is based on

cone separation and it only can be applied to the problems with

not more than three objectives. Derbel et al. [13] combined

the single-objective local search move strategies with the

MOEA/D framework to optimize the bi-objective traveling

salesman problems. Ke et al. [14] proposed the Multiobjective

Memetic Algorithm based on Decomposition (MOMAD),

which is one of the state-of-the-art algorithms for MCOPs.

At each iteration of MOMAD, a PLS process and multiple

scalar objective search processes are executed successively.

There are some studies that try to improve the basic PLS

by involving a higher-level control mechanism. Alsheddy and

�

Tsang [15] propose the Guided Pareto Local Search (GPLS),

in which a penalization mechanism is applied to prevent PLS

from premature. Based on a variable neighborhood search

framework, Geiger [16] proposed the Pareto Iterated Local

Search (PILS) to improve the result quality of PLS. The

Two-Phase Pareto Local Search (2PPLS) is proposed by Lust

and Teghem [5], in which the PLS process starts from the

high-quality solutions generated by a heuristic method. Two

enhanced 2PPLS variants can be found in [17], [18]. In the

work of Drugan and Thierens [19], different neighborhood ex-

ploration strategies and restart strategies of PLS are discussed.

III. PARALLEL PARETO LOCAL SEARCH BASED ON

DECOMPOSITION

In this section we introduce the mechanism of PPLS/D on

a maximization MCOP case. For the minimization MCOPs,

one can first convert

into a maximization problem and

then apply the PPLS/D. The key component of PPLS/D is

its decomposition strategy.

it

A. Decomposition Strategy

The decomposition strategy of PPLS/D is inspired by

MOEA/D [8] and MOEA/D-M2M [7]. In MOEA/D, the origi-

nal MOP is decomposed into a number of scalar subproblems.

In MOEA/D-M2M, the original search space is decomposed

into a number of subregions. PPLS/D integrates these two

decomposition strategies.

By defining multiple weight vectors in the objective space,

PPLS/D first decomposes the original search space into several

subregions. Then each subregion is searched by an assigned

parallel process. Due to the partition of subregions, each

parallel process only needs to approximate a part of the PF.

In addition, inside each subregion, the weight vector defines

a scalar objective function to guide every move of the search

process. Under the guidance of the scaler objective function,

each parallel process can quickly approach its corresponding

PF part.

m

k=1 λℓ

1, . . . , λℓ

m) satisfies

PPLS/D first defines L weight vectors λ1, . . . , λL. Each

weight vector λℓ = (λℓ

k = 1

> 0 for all k ∈ {1, . . . , m}. These weight vectors

and λℓ

k

determine the partition of the subregions and the scalar

objective function inside each subregion. Hence the number

of weight vectors L also is the number of subregions, the

number of scalar objective functions and the number of parallel

processes in PPLS/D. Here L is related to the predefined

parameter H ∈ N+. Given an H value, L =

weight

vectors are generated, in which each individual weight takes a

value from {0/H, 1/H, . . . , H/H}. Fig. 1 shows an example

in which L=15 weight vectors are generated when m=3 and

H=4.

H+m−1

m−1

(

)

∑

For each parallel process ℓ, a subregion Ωℓ is defined in the

objective space:

∗

Ωℓ =

(2)

{u∈Rm | ⟨u−z

where the operator ⟨·,·⟩ calculates the acute angle between

two vectors, λℓ is the weight vector of the process ℓ, z

=

, λj⟩, for any j=1,. . . ,L},

, λℓ⟩≤⟨u−z

∗

∗

3

Fig. 1. L = 15 weight vectors are generated when m = 3 and H = 4

Fig. 2. Relationship between the weight vector ℓ and the subregion Ωℓ in

the two objective case

∗

1 , . . . , z

∗

m) is the reference point. Note here that z

∗ must

(z

be the same in all processes. In this way, the objective space

can be perfectly separated even when the objective number

m is larger than 2. Fig. 2 shows the relationship between the

weight vector λℓ and the subregion Ωℓ when m = 2. For

convenience, in the following when we state that a solution x

is in a subregion Ωℓ, we mean that F (x) ∈ Ωℓ.

Inside the assigned subregion, each process is guided by a

scalar objective function. In PPLS/D, the scalar objective func-

tion of process ℓ is generated by the Tchebycheff approach:

f te(x|λℓ, z

∗

(fk(x) − z

k)},

∗

{ 1

λℓ

k

maximize:

) = min

1≤k≤m

(3)

∗ is the reference point also defined in Equation (2).

where z

Compared to the widely-used weighted sum scalar objective

function, the Tchebycheff scalar objective function can handle

the case that the shape of PF is not convex. In addition,

using the Tchebycheff scalar objective function can keep each

PPLS/D process on the central line of the subregion at the

early stage of the search (as shown in Fig. 8(f) and Fig. 8(c)).

Obviously, each scalar objective function f te represents a

direction to approach the PF. In PPLS/D, f te influences the

search mechanism from the following two aspects:

• At the beginning of each iteration, a new solution is

selected from all the unexplored solutions of the archive.

Then the neighborhood of the selected solution is ex-

plored. In the basic version of PLS,

this solution is

randomly selected, while in PPLD/D, the solution that

has the largest f te value is selected.

• When exploring the neighborhood of the selected so-

lution, the basic PLS evaluates all of the neighboring

solutions and accepts the solutions that are not dominated

by any solution in the archive. PPLS/D only accepts the

00.250.50.75100.250.50.75100.250.50.75000.250.500.750.50.2500.50.250000.750.50.25λ1λ2λ3λ4λ5λ6λ7λ8λ9λ10λ11λ12λ150.25000.25λ13λ14λlΩ�z*f2f1λl-1λl+1�

Algorithm 2 Parallel Pareto Local Search based on

Decomposition (PPLS/D))

1: input: MCOP

Stopping criterion

N (·): neighborhood structure

A0: initial set of non-dominated solutions

L: process number

{λ1,··· , λL}: weight vectors

∗: reference point

z

2: For each process ℓ ∈ {1, . . . , L}, do independently in

parallel:

3: Aℓ,0 ← A0

4: ∀x ∈ Aℓ,0, Explored(x) ← FALSE

5: Aℓ ← Aℓ,0

6: while Aℓ,0 ̸= ∅ and the stopping criterion is not met do

// Initialization

f te(x | λℓ, z

x0 ← arg max

x∈Aℓ,0

SuccessF lag ← FALSE

′ ∈ N (x0) do

for each x

∗

)

if AcceptanceCriterion1(x

Aℓ←Update(x

′

SuccessF lag←TRUE

break

, Aℓ)

end if

end for

if SuccessF lag == FALSE then

′

// 1st round exploration

, ℓ) == TRUE then

7:

8:

9:

10:

11:

12:

13:

14:

15:

16:

17:

18:

19:

20:

21:

22:

23:

29:

30:

31:

32:

33:

34:

35:

′ ∈ N (x0) do

′

for each x

, ℓ) == TRUE then

// 2nd round exploration

′

if AcceptanceCriterion2(x

Aℓ←Update(x

end if

end for

end if

Explored(x0) ← TRUE

, Aℓ)

24: Aℓ,0←{x ∈ Aℓ | Explored(x)==FALSE}

25: end while

26: Aℓ,0 ← Aℓ

// Re-check the archive

27: ∀x ∈ Aℓ,0, Explored(x) ← FALSE

28: while Aℓ,0 ̸= ∅ and the stopping criterion is not met do

′

, ℓ) == TRUE then

∗

)

x0 ← arg max

x∈Aℓ,0

for each x

f te(x | λℓ, z

′ ∈ N (x0) do

if AcceptanceCriterion2(x

Aℓ←Update(x

′

end if

end for

Explored(x0) ← TRUE

, Aℓ)

36: Aℓ,0←{x ∈ Aℓ | Explored(x)==FALSE}

37: end while

38: return non-dominated-sol(∪L

ℓ=1Aℓ)

solutions whose f te value is larger than the largest f te

value in the archive. After finding an acceptable solution,

PPLS/D stops evaluating the rest neighboring solutions

immediately and marks the current solution as explored.

B. Procedure

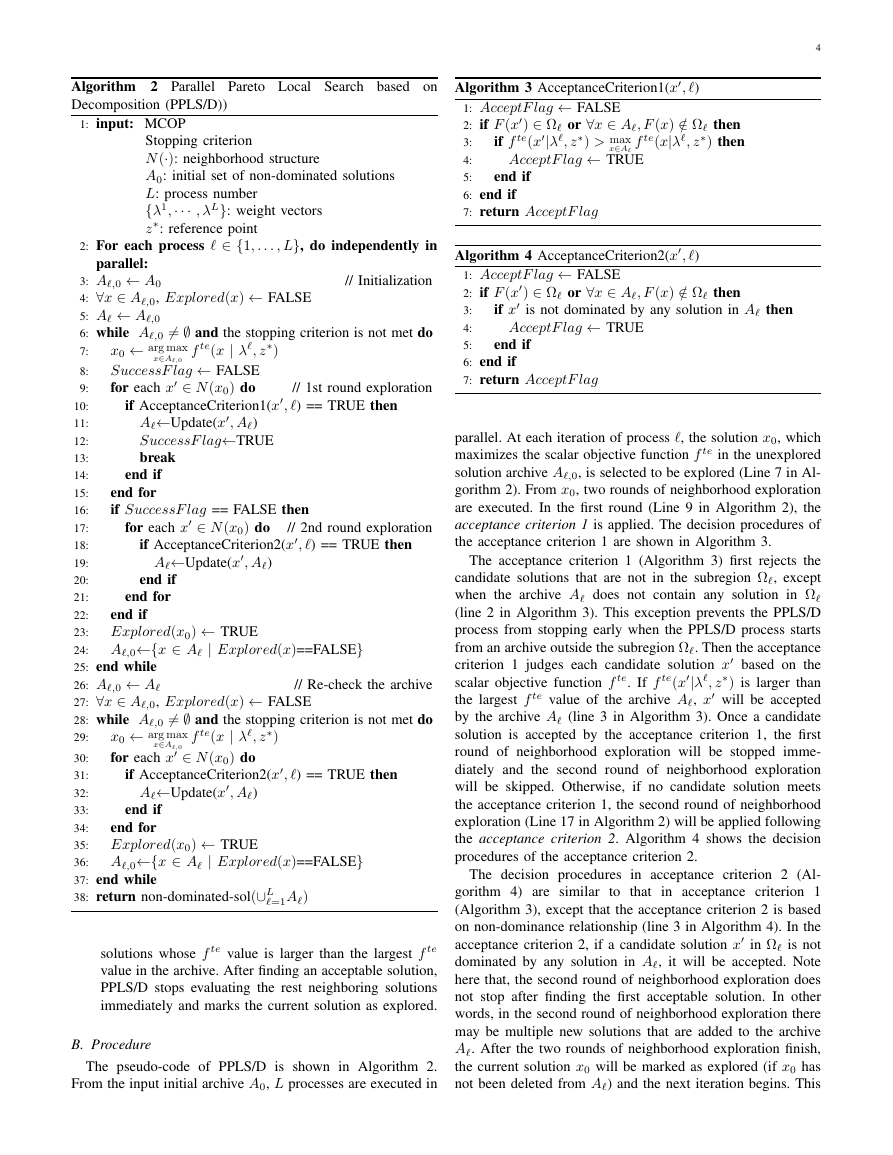

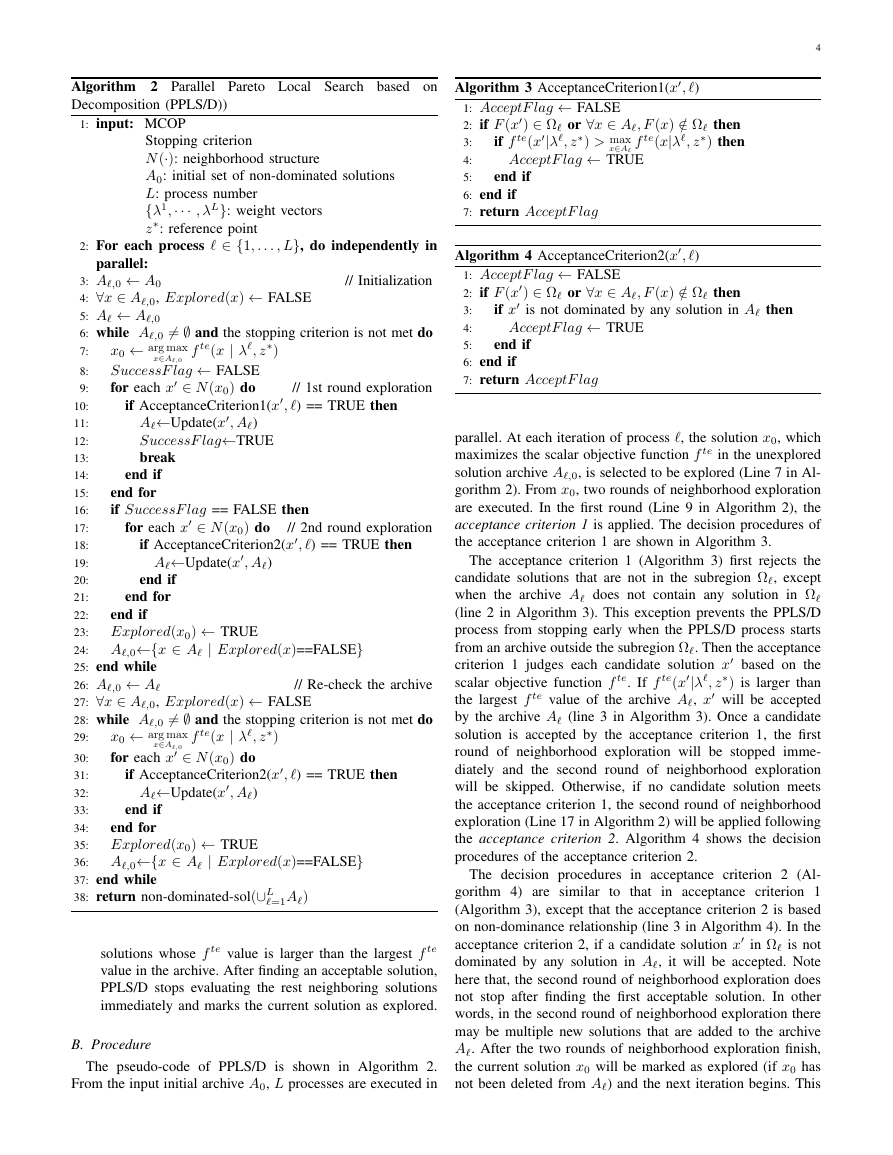

The pseudo-code of PPLS/D is shown in Algorithm 2.

From the input initial archive A0, L processes are executed in

4

, ℓ)

′

Algorithm 3 AcceptanceCriterion1(x

1: AcceptF lag ← FALSE

′

2: if F (x

3:

4:

5:

6: end if

7: return AcceptF lag

) ∈ Ωℓ or ∀x ∈ Aℓ, F (x) /∈ Ωℓ then

) then

) > max

x∈Aℓ

AcceptF lag ← TRUE

f te(x|λℓ, z

′|λℓ, z

if f te(x

end if

∗

∗

, ℓ)

′

Algorithm 4 AcceptanceCriterion2(x

1: AcceptF lag ← FALSE

) ∈ Ωℓ or ∀x ∈ Aℓ, F (x) /∈ Ωℓ then

′

2: if F (x

′ is not dominated by any solution in Aℓ then

if x

3:

AcceptF lag ← TRUE

4:

5:

6: end if

7: return AcceptF lag

end if

parallel. At each iteration of process ℓ, the solution x0, which

maximizes the scalar objective function f te in the unexplored

solution archive Aℓ,0, is selected to be explored (Line 7 in Al-

gorithm 2). From x0, two rounds of neighborhood exploration

are executed. In the first round (Line 9 in Algorithm 2), the

acceptance criterion 1 is applied. The decision procedures of

the acceptance criterion 1 are shown in Algorithm 3.

The acceptance criterion 1 (Algorithm 3) first rejects the

candidate solutions that are not in the subregion Ωℓ, except

when the archive Aℓ does not contain any solution in Ωℓ

(line 2 in Algorithm 3). This exception prevents the PPLS/D

process from stopping early when the PPLS/D process starts

from an archive outside the subregion Ωℓ. Then the acceptance

′ based on the

criterion 1 judges each candidate solution x

′|λℓ, z

∗

scalar objective function f te. If f te(x

) is larger than

′ will be accepted

the largest f te value of the archive Aℓ, x

by the archive Aℓ (line 3 in Algorithm 3). Once a candidate

solution is accepted by the acceptance criterion 1, the first

round of neighborhood exploration will be stopped imme-

diately and the second round of neighborhood exploration

will be skipped. Otherwise, if no candidate solution meets

the acceptance criterion 1, the second round of neighborhood

exploration (Line 17 in Algorithm 2) will be applied following

the acceptance criterion 2. Algorithm 4 shows the decision

procedures of the acceptance criterion 2.

The decision procedures in acceptance criterion 2 (Al-

gorithm 4) are similar to that

in acceptance criterion 1

(Algorithm 3), except that the acceptance criterion 2 is based

on non-dominance relationship (line 3 in Algorithm 4). In the

′ in Ωℓ is not

acceptance criterion 2, if a candidate solution x

dominated by any solution in Aℓ, it will be accepted. Note

here that, the second round of neighborhood exploration does

not stop after finding the first acceptable solution. In other

words, in the second round of neighborhood exploration there

may be multiple new solutions that are added to the archive

Aℓ. After the two rounds of neighborhood exploration finish,

the current solution x0 will be marked as explored (if x0 has

not been deleted from Aℓ) and the next iteration begins. This

�

iterated procedure stops when the given stopping criterion is

met or when all the solutions in the archive are marked as

explored.

In the first round of neighborhood solution, a solution may

be marked as explored before all of its neighboring solutions

are evaluated. Hence, at the final stage of each parallel process

there is a re-check phase (Line 26 in Algorithm 2). The re-

check phase first marks all the solutions in the archive Aℓ as

unexplored. Then it uses the acceptance criterion 2 to check

all the neighboring solutions of all the solution in Aℓ. The

neighboring solutions that satisfy the acceptance criterion 2

will be accepted by the archive Aℓ and the solutions in Aℓ

that are dominated by the newly accepted solutions will be

removed. After the re-check phase, the archive Aℓ becomes

locally optimal, i.e., all the neighboring solutions of all the

solutions in Aℓ are dominated by at least one solution in Aℓ.

After all the L processes are finished, PPLS/D combines the L

archives and removes the solutions in the aggregated archive

that are dominated by the other members. Then the resulting

aggregated archive is the output of PPLS/D.

C. Remarks

As mentioned before, the decomposition strategy of PPLS/D

is based on two aspects. Firstly, the search space is decom-

posed into L subregions {Ω1, . . . , ΩL}, so that each process

only need to focus on a small search region. Secondly, inside

each subregion Ωℓ, the search is guided by the scalar objective

function f te in a fine-grained manner. At each iteration, the

solution in Aℓ,0 with the largest f te value is selected to be

explored, because its neighboring solutions may have large f te

values too. In the main search phase, PPLS/D only accepts

the candidate solution that

improves the current best f te

value. Once an acceptable solution is found and accepted, the

neighborhood exploration stops immediately and the newly

accepted solution will be selected to be explored in the next

iteration because it has the current largest f te value and it

is unexplored. In such a way, the f te value is improved fast

at the early stage. If the algorithm cannot find a candidate

solution that improves the current best f te value, the second

round of neighborhood exploration begins and tries to find

and accept all of the solutions that are non-dominated by the

archive Aℓ in the neighborhood of the current solution. In

such a way, the archive keeps accepting new solutions and

the search continues. After the main search phase, there may

be some solutions in the archive whose neighborhood has not

been fully explored, so PPLS/D conducts the re-check phase

to make sure that the final archive is truly locally optimal.

IV. EXPERIMENTAL STUDIES

In the experimental studies we compare the performance

of PPLS/D against

that of the basic PLS and the PLS

with Anytime Behavior Improvement (PLS-ABI) proposed

in [2]. The test suites are the multiobjective Unconstrained

Binary Quadratic Programming problem (mUBQP) and the

multiobjective Traveling Salesman Problem (mTSP). For both

kinds of problems, the maximum objective number is four.

Fig. 3. The 2-Opt neighborhood move in the TSP

A. Test Problems

The mUBQP problem can be formalized as follows

fk(x) = xT Qkx, k = 1, . . . , m

maximize

subject to x ∈ {0, 1}n,

∑

∑

n

i=1

n

j=1 qk

where a solution x = (x1, . . . , xn) is a vector of n binary

ij] is a n × n matrix for the

(0-1) variables and Qk = [qk

kth objective. Hence the kth objective function is calculated

by fk(x) =

ijxixj. The UBQP is NP-hard and

it represents the problems appearing in a lot of areas, such

as financial analysis [20], social psychology [21], machine

scheduling [22], computer aided design [23] and cellular

radio channel allocation [24]. In this paper, the neighborhood

structure in the mUBQP is taken as the 1-bit-flip, which is

directly related to a Hamming distance of 1.

In the mTSP, G = (V, E) is a fully connected graph where

V is its node set and E the edge set. Each edge e ∈ E is

corresponded to m different costs {ce,1, ce,2, . . . , ce,m} and

ce,k > 0 for all k ∈ {1, . . . , m}. A feasible solution x of the

mTSP is a Hamilton cycle passing through every node in V

exactly once. The mTSP problem can be formalized as follows

5

(4)

∑

minimize

fk(x) =

ce,k, k = 1, . . . , m

e∈x

(5)

subject to x is a Hamilton cycle in G,

The mTSP is one of the most widely used test problems in

the area of multiobjective combinatorial optimization. In this

paper, the neighborhood move in the mTSP is based on the

2-Opt move, in which two edges in the current solution are

replaced by two other edges, as illustrated in Fig. 3.

the mUBQP generator which

In our experiment, three mUBQP instances are generated

using

at

http://mocobench.sourceforge.net and six mTSP instances are

generated by combining the single objective TSP instances

in TSPLIB [25]. Table I shows the information of the test

instances.

available

is

B. Compared Algorithm

Dubois-Lacoste et al. [2] proposed several speed-up strate-

gies to improve the anytime behavior of PLS. An algorithm

with good anytime behavior can return high quality solutions

even if it is stopped early. Besides the basic PLS, we compare

PPLS/D with a sequential PLS variant which we call PLS

with Anytime Behavior Improvement (PLS-ABI). PLS-ABI

applies two sequential speed-up strategies proposed in [2]: the

“≻⊀” strategy and the “1*” strategy. In the “≻⊀” strategy,

the acceptance criterion includes two rounds. In the first round,

only the candidate solutions that dominate the current solution

are accepted. If no solution is accepted in the first round, then

the second round begins and the candidate solutions that are

�

6

TABLE I

TEST INSTANCES

Problem

mUBQP

mTSP

Instance

mubqp 2 200

mubqp 3 200

mubqp 4 200

kroAB100

kroAD100

kroAB150

kroAB200

kroABC100

kroBCDE100

m

2

3

4

2

2

2

2

3

4

n

200

200

200

100

100

150

200

100

100

Remarks

Objective correlation: 0, Q matrix density: 0.8

Objective correlation: 0, Q matrix density: 0.8

Objective correlation: 0, Q matrix density: 0.8

Combination of TSPLIB instances kroA100 and kroB100

Combination of TSPLIB instances kroA100 and kroD100

Combination of TSPLIB instances kroA150 and kroB150

Combination of TSPLIB instances kroA200 and kroB200

Combination of TSPLIB instances kroA100, kroB100 and kroC100

Combination of TSPLIB instances kroB100, kroC100, kroD100 and kroE100

not dominated by any solution in the archive are accepted. In

the “1*”, the first-improvement neighborhood exploration is

applied. After all solutions in the archive have been marked as

explored using the first-improvement rule, the algorithm marks

all solutions in the archive as unexplored and explores them

again using the best-improvement rule. Here we do not use

the “OHI” strategy and the “Dyngrid-HV” strategy proposed

in [2] because they only apply to bi-objective problems.

C. Performance Metric

hypervolume

The

used

indicator

[26]

quality

the

algorithms.

to measure

different

is

as

the metric

archive

the

of

obtained

by

test

For

each

}

and

let F max

instance,

, . . . , f max

} respectively the maximum

F min = {f min

, f min

and minimum objective values ever found during all algorithm

executions. For the mUBQP problem, which is a maximization

problem, the reference point of the hypervolume is

= {f max

, . . . , f min

, f max

2

1

1

2

m

m

F min − 0.1 · (F max − F min).

For convenience, on the mUBQP we normalized the hyper-

volume value by dividing the hypervolume of the maximum

objective vector Fmax. For the mTSP problem, which is a

minimization problem, the reference point is

F max + 0.1 · (F max − F min)

and the final hypervolume is normalized by dividing the

hypervolume of F min.

D. Algorithm Performance from Random Solutions

We argue that PPLS/D is an efficient building block for mul-

tiobjective metaheuristics. From randomly generated solutions,

PPLS/D can be used to quickly obtain a set of high quality

solutions as the initial solutions of a metaheuristic. It can also

be applied in the middle or final stage of a metaheuristic to

further improve a high quality solution population. In this

section, we test the performance of PPLS/D and the compared

algorithms from randomly generated initial solutions.

In our experiment, we set H = 6, 8, 10. For each {m, H}

combination, the corresponding process number L is shown

in Table II. Hence, on each test

instance, five algorithms

are compared, which are PLS, PLS-ABI, PPLS/D(H=6), P-

PLS/D(H=8) and PPLS/D(H=10). Each algorithm is executed

20 runs on each instance and the maximum runtime of each run

is 100s. Each run starts from a randomly generated solution.

∗ is the zero vector

On mUBQP instances the reference point z

∗ is the objective vector of the initial

and on mTSP instance z

solution. All algorithms are implemented in GNU C++. In the

PPLS/D implementation, the parallel processes are executed

in a sequential order and the entire search history is recorded.

After all processes are finished, we integrate the recorded data

to simulate a parallel scenario. The computing platform is

two 6-core 2.00GHz Intel Xeon E5-2620 CPUs (24 Logical

Processors) under Ubuntu system.

SETTINGS OF PARALLEL PROCESS NUMBER L IN PPLS/D

TABLE II

m = 2

m = 3

m = 4

H = 6

L = 7

L = 28

L = 84

H=8

L = 9

L = 45

L = 165

H=10

L = 11

L = 66

L = 286

For each run, we record the entire search history and

calculate the normalized hypervolume indicator at certain time

points. Fig. 4 shows the hypervolume values attained by the

algorithms over time on the test instances. From Fig. 4 we

can see that, on all test instances the hypervolume increment

speed of PPLS/D is much higher than that of PLS and PLS-

ABI. For example, on the instance mubqp 2 200 (Fig. 4(a)),

PPLS/D(H=6) takes 0.04s to reach an average hypervolume

value higher than 0.8, while PLS-ABI needs more than 1s to

reach the same average hypervolume value and PLS needs

more than 1.58s. On mUBQP instances, when the objective

number m increases, the superiority of PPLS/D against PLS

and PLS-ABI increases. The same phenomenon also can be

found on mTSP instances. This means that PPLS/D can handle

high dimensional problems better than PLS and PLS-ABI.

Hence, we conclude that the proposed PPLS/D is much more

efficient than PLS and PLS-ABI when the initial solutions are

randomly generated.

E. Algorithm Performance from High Quality Solutions

In this section, we test the performance of PPLS/D and

the compared algorithms from high quality initial solutions.

The high quality solutions are generated by executing single

objective local search on the Tchebycheff scalar objective

functions (Equation (3)) with different weight vectors. Specif-

ically, we set H = 6 and generate L =

weight

vectors. For each test instance, a random solution is generated

and L different local search procedures start from it. Each

local search procedure is based on the Tchebycheff scalar

H+m−1

m−1

(

)

�

7

(a) mUBQP: mubqp 2 200 (m=2)

(b) mUBQP: mubqp 3 200 (m=3)

(c) mUBQP: mubqp 4 200 (m=4)

(d) mTSP: kroAB100 (m=2)

(e) mTSP: kroAD100 (m=2)

(f) mTSP: kroAB150 (m=2)

(g) mTSP: kroAB200 (m=2)

(h) mTSP: kroABC100 (m=3)

(i) mTSP: kroBCDE100 (m=4)

Fig. 4. The hypervolume attained by PLS, PLS-ABI, PPLS/D(H=6), PPLS/D(H=8) and PPLS/D(H=10) at different time points. Each algorithm is executed

20 runs from different randomly generated solutions.

objective function defined by a unique weight vector. For

the mUBQP instances, the 1-bit-flip first-improvement local

search is applied. For the mTSP instances, the 2-Opt first-

improvement

local search is applied. The L local search

procedures return L high quality solutions. After removing

the solutions that are dominated by other solutions, we get

the initial solution set of the algorithms.

From the high quality solution set, we test the performance

in

of PPLS/D(H=6), PLS and PLS-ABI. Note here that,

this experiment PLS is equivalent

to the well-known 2P-

PLS [5], where PLS is started from locally optimal solutions of

weighted sum problems. Hence this experiment also compares

the proposed PPLS/D against 2PPLS. The other experiment

settings are same as the settings in the previous section.

Fig. 5 shows the hypervolume values attained by the

algorithms over time on the test instances. From Fig. 5 we can

see that when the initial solutions are high quality solutions,

the proposed PPLS/D outperforms PLS and PLS-ABI on

most instances. Considering that in this experiment PLS is

equivalent to the well-known 2PPLS, the results show that

PPLS/D can outperform 2PPLS on the tested mUBQP and

mTSP instances. In addition, when the objective number m

increases, the superiority of PPLS/D against PLS and PLS-ABI

increases. Hence, we conclude that PPLS/D is more efficient

than PLS and PLS-ABI when the initial solutions are high

quality solutions.

F. Comparison against Parallel PLS-ABI

In the previous sections, the proposed PPLS/D is compared

against the sequential PLS and PLS-ABI. In this section, we

compare PPLS/D against the parallel implementation of PLS-

ABI (P-PLS-ABI). In P-PLS-ABI, L PLS-ABI processes are

executed simultaneously with different random seeds. After

the L parallel processes finish, P-PLS-ABI combines the L

sub-archives and deletes the solutions that are duplicated

or dominated by other solutions to get

the final archive.

In the comparison experiment, both PPLS/D and P-PLS-

ABI are implemented in a real parallel scenario using the

widely-used parallelization tool MPI. In other words, each

run of PPLS/D and P-PLS-ABI occupies L cores to execute

L processes simultaneously. Each process is executed on

one unique core. We set

the stopping criterion of each

process to be 106 function evaluations. For the PPLS/D

implementation, we set H = 6, which means that PPLS/D

contains L = 7 parallel processes on two-objective instances,

L = 28 parallel processes on three-objective instances and

L = 84 parallel processes on four-objective instances. The

P-PLS-ABI implementation contains the same number of

parallel processes as the PPLS/D implementation on each

instance. Both PPLS/D and P-PLS-ABI are executed 20 runs

on each instance and each run is started from a randomly

generated solution. The experimental platform is the Tianhe-2

supercomputer which is the 4th ranked supercomputer in the

world [27]. The other experimental settings are same as the

settings in the previous sections.

Fig. 6 shows the hypervolume values attained by PPLS/D

and P-PLS-ABI versus function evaluations. From Fig. 6

we can see that the proposed PPLS/D performs significantly

better than P-PLS-ABI on all of the test instances. Hence,

we conclude that the proposed PPLS/D can outperform both

sequential and parallel implementations of PLS-ABI, which

means that PPLS/D is a highly efficient building block for

multiobjective combinatorial metaheuristics.

0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.9HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.8HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.6HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.91HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.91HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.91HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.91HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.70.80.9HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)0.010.1 1 10 100 Time (s)00.10.20.30.40.50.60.7HypervolumePLSPLS-ABIPPLS/D(H=6)PPLS/D(H=8)PPLS/D(H=10)�

8

(a) mUBQP: mubqp 2 200 (m=2)

(b) mUBQP: mubqp 3 200 (m=3)

(c) mUBQP: mubqp 4 200 (m=4)

(d) mTSP: kroAB100 (m=2)

(e) mTSP: kroAD100 (m=2)

(f) mTSP: kroAB150 (m=2)

(g) mTSP: kroAB200 (m=2)

(h) mTSP: kroABC100 (m=3)

(i) mTSP: kroBCDE100 (m=4)

Fig. 5.

solutions which are generated by executing local search on Tchebycheff aggregated functions (Equation (3)) with different weight vectors.

The hypervolume attained by PLS, PLS-ABI and PPLS/D(H=6) at different time points. Each algorithm is executed 20 runs from high quality

tree becomes very large. As for PPLS/D, we can see that at

the early stage each parallel process leaves a single trajectory

when approaching the PF. Because PPLS/D is guided by the

Tchebycheff scalar functions, we can see that at the early stage

the trajectory of each PPLS/D process is mainly located in the

central line of the subregion. In the middle and final stage, the

trajectory tree of PPLS/D contains fewer branches than PLS

and PLS-ABI but it still can approximate the PF ideally. In

addition, because PPLS/D contains multiple parallel processes

and each process only needs to approximate a part of the PF,

PPLS/D converges much faster than PLS and PLS-ABI. In the

Appendix, we show more examples of the trajectory trees.

H. Effect of Process Number L

In Fig. 4 there is an interesting phenomenon that

the

PPLS/D algorithm with a lower H value has a higher hy-

pervolume value at the early stage. This phenomenon is very

obvious on the mUBQP and mTSP instances with more than

two objectives. For example, on mubqp 4 200 (Fig. 4(c))

PPLS/D(H=6) gets the highest hypervolume among all the

three PPLS/D algorithms at the 0.1s. Considering that a low

H value means a small process number L, this phenomenon

seems to be contrary to the common knowledge that more

parallel processes can bring a higher hypervolume value. A

possible explanation is that a higher parallel process number

means a narrower search region for each process. As illustrated

in Fig. 9, solution x0 is the current solution of a PPLS/D

process running on a bi-objective problem. We assume that

x0 totally has 10 neighboring solutions which are uniformly

distributed around x0 in the objective space. In Fig. 9(a), the

search region is relatively wide and three of x0’s neighboring

solutions are acceptable. Meanwhile in Fig. 9(b), the search

region is relatively narrow and only one neighboring solution

Fig. 7. A example of the trajectory tree diagram

G. Algorithm Behavior Investigation

Shi et al. [6] proposed a diagram called “trajectory tree” to

show the behavior of a PLS process. For a PLS process, the

trajectory tree plots all the solutions that were ever accepted

by the archive and the neighborhood relationship among them

on the objective space. For example, the trajectory tree in

Fig. 7 shows that from the solution x0, three neighboring

solutions x1, x2 and x3 were accepted by the archive. Then

the neighborhood of x2 was explored and x4, x5 and x6 were

accepted by the archive.

Figure 8(a), 8(b) and 8(c) show the trajectory trees of a

PLS run, a PLS-ABI run and a PPLS/D(H=6) run on the

bi-objective mUBQP instance mubqp 2 200. Fig. 8(d), 8(e)

and 8(f) show the trajectory trees of the three algorithms

on the bi-objective mTSP instance kroAB100. We can see

that the trajectory tree of PLS contains many branches at its

early stage. It means that PLS accepts many solutions into its

archive at the early stage. It is unnecessary to accept so many

solutions at the early stage because their quality is relatively

low. Meanwhile PLS-ABI leaves a single trajectory at its early

stage and approaches the PF faster than PLS. However, after

the early stage, the branch number of the PLS-ABI trajectory

0.010.1 1 10 100 Time (s)0.70.720.740.760.780.80.820.840.860.88HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.450.50.550.60.650.70.75HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.250.30.350.40.450.50.55HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.840.850.860.870.880.890.90.910.920.930.94HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.830.840.850.860.870.880.890.90.910.920.93HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.850.860.870.880.890.90.910.920.930.94HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.850.860.870.880.890.90.91HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.640.660.680.70.720.740.760.78HypervolumePLSPLS-ABIPPLS/D(H=6)0.010.1 1 10 100 Time (s)0.450.50.550.6HypervolumePLSPLS-ABIPPLS/D(H=6)x0x1x2x3x4x5x6f2f1�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc