April 2012, 19(2): 107–115

The Journal of China

Universities of Posts and

Telecommunications

www.sciencedirect.com/science/journal/10058885 http://jcupt.xsw.bupt.cn

Simultaneous image classification and annotation based on

probabilistic model

LI Xiao-xu (*), SUN Chao-bo, LU Peng, WANG Xiao-jie, ZHONG Yi-xin

Center for Intelligence Science and Technology, Beijing University of Posts and Telecommunications, Beijing 100876, China

Abstract

The paper proposes a novel probabilistic generative model for simultaneous image classification and annotation. The

model considers the fact that the category information can provide valuable information for image annotation. Once the

category of an image is ascertained, the scope of annotation words can be narrowed, and the probability of generating

irrelevant annotation words can be reduced. To this end, the idea of annotating images according to class is introduced in

the model. Using variational methods, the approximate inference and parameter estimation algorithms of the model are

derived, and efficient approximations for classifying and annotating new images are also given. The power of our model is

demonstrated on two real-world datasets: a 1 600-image LabelMe dataset and a 1 791-image UIUC-Sport dataset. The

experiment results show that the classification performance is on par with several state-of-the-art classification models,

while the annotation performance is better than that of several state-of-the-art annotation models.

Keywords image classification, image annotation, probabilistic model, variational inference

1 Introduction

With the sharp increase in the number of images stored

on the Internet, it has become increasingly important to

organize and index these resources effectively. Therefore,

research on image classification and annotation in

computer vision is essential. Image classification involves

automatically assigning a class label to a digital image; the

label may refer to the objects, events or scenes depicted.

Image annotation refers to annotating an image with text in

the form of captions or keywords that describe pertinent

objects or scenes in the image.

Much work has been done on image classification and

annotation. Existing techniques can be divided into two

classes: generative and discriminative techniques. Among

the generative methods, a family of models based on latent

Dirichlet allocation (LDA) [1] has received significant

attention. These models postulate the existence of a small

set of hidden factors that govern the association between

images and categories (i.e., image classification [2–6]) and

Received date: 12-07-2011

Corresponding author: LI Xiao-xu, E-mail: xiaoxulibupt@gmail.com

DOI: 10.1016/S1005-8885(11)60254-9

between images and annotation words (i.e., image

annotation [3,5,7–8]). For image classification, in Ref. [2]

Li et al. provide the seminal LDA-based work. In that study,

each category is identified with its own Dirichlet prior,

which is optimized to distinguish the categories from each other. In

Ref. [3], Wang et al. construct a module that models the

distribution over categories conditioned on the image

topics, and plug the module into the original LDA model;

the resulting model is called multi-class (MC)

supervised LDA (sLDA) (MC-sLDA). The model has been

reported to have the best performance for modeling

categorized images. Regarding image annotation, in Ref.

[7], Blei et al. propose the classical LDA-based method

known as corr-LDA, which assumes that image and

annotation words share the same latent topic variable and

that annotation words are generated from subsets of

empirical image topics. These assumptions are made so

that the textual modality and the image modality correspond. In Ref.

[8], Putthividhya et al. treat annotation as a classification

problem and propose sLDA-bin, which is based on the

two models sLDA [9] and corr-LDA. In Ref. [10],

Putthividhya et al. capture varying degrees of correlations


and relaxes the constraint in previous models that the

number of image topics must be the same as the number of

annotation topics.

In Ref. [3], Wang et al. consider the two tasks of image

classification and annotation simultaneously, although they

are still treated independently in many cases. The study

treats category and annotation attached to a given image as

a global description and local description, respectively; see

Fig. 1. It proposes the model multi-class sLDA with

annotation by combining a supervised topic model and a

probabilistic model for image annotation so that these two

tasks can be done simultaneously. So far, this model has

achieved the best performance on image classification and

annotation.

Class: forest

Annotations: tree trunk, trunk occluded, ground

grass trees, woman walking, umbrella, dog

(a) An example image from forest class in LabelMe in Ref. [11]

Class: highway

Annotations: sky, sign, ground, trees, road, car, van rear,

streetlight, central reservation, car rear

(b) An example image from highway class in LabelMe in Ref. [11]

Class: croquet

Annotations: tree, plant, athlete, mallet, grass, ball,

wicket

(c) An example image from croquet class in UIUC-Sport in Ref. [5]

Class: badminton

Annotations: badminton racket, playing field,

shuttlecock, athlete

(d) An example image from badminton class in UIUC-Sport in

Ref. [5]

Fig. 1 Example images with class labels and annotations

In this work, we build on several previous studies [3,7],

and we also try to achieve image classification and

annotation simultaneously. We draw on the fact that once

the category of an image is ascertained, the scope of

annotation words for the image can be narrowed.

Meanwhile, the probability of generating irrelevant

annotation words can be reduced. As such, we think that

these two tasks of image classification and image

annotation can not only be performed simultaneously, but also

be implemented in ways that improve one another.

Based on this intuition, we propose a novel probabilistic

generative model for simultaneous image classification

and annotation. The performance of our model is

demonstrated on two real-world datasets, and the results

show that our model provides a competitive classification

performance with several benchmark classification models,

while it shows a better annotation performance than other


benchmark annotation models.

The remaining sections of the paper are organized as

follows. The basic notation, terminology and our model

are introduced in Sect. 2. The framework for parameter

estimation using variational inference is given in Sect. 3.

The classification and annotation performance of our

model based on two real-world image datasets is shown in

Sect. 4. Finally, the conclusions of the study are presented

in Sect. 5.

2 Modeling images, labels and annotations

2.1 Data representation

Suppose that there are D images with class labels and annotations in the dataset A. We adopt a bag-of-words representation for images and annotation text. For images, we extract features from all images, and then we perform clustering. The centers of the clusters are used to construct the image vocabulary, and the length of the vocabulary is denoted as $V_s$ (see Sect. 4 for additional details). An image word $v_m$ is a unit basis vector of size $V_s$. Each image is reduced to a collection of M image words, which is denoted as $V = (v_1, v_2, \ldots, v_M)$. For annotation text, all annotation words construct the textual vocabulary, the length of which is denoted as $V_t$. An annotation word $w_n$ is a unit basis vector of size $V_t$. The annotation text for each image is denoted as $W = (w_1, w_2, \ldots, w_N)$. The class label is represented as a unit basis vector of size J, which is denoted as $c = (c_1, c_2, \ldots, c_J)$. Thus, the dataset A consisting of D image-class-annotation triples is represented as $A = \{(V_d, W_d, c_d),\ d \in \{1, 2, \ldots, D\}\}$. Our task is to find a model that fits the dataset and then to use the model to predict the class label and annotation text for new images.
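The representation above can be illustrated with a small sketch. The vocabulary sizes, word lists and class names below are made-up toy values, not taken from the datasets used in the paper; the helper `one_hot` is a hypothetical name introduced only for this illustration.

```python
def one_hot(index, size):
    """Return a unit basis vector of length `size` with a 1 at position `index`."""
    vec = [0] * size
    vec[index] = 1
    return vec

# Hypothetical toy sizes: V_s image codewords, V_t annotation words, J classes.
V_S, V_T, J = 6, 4, 3
annotation_vocab = ["sky", "tree", "road", "car"]   # made-up textual vocabulary
class_names = ["forest", "highway", "coast"]         # made-up class list

# One triple (V, W, c): M = 5 image words, N = 2 annotation words, one class label.
image_word_ids = [0, 3, 3, 5, 1]                     # codeword index of each patch
V = [one_hot(i, V_S) for i in image_word_ids]
W = [one_hot(annotation_vocab.index(w), V_T) for w in ["sky", "road"]]
c = one_hot(class_names.index("highway"), J)

dataset = [(V, W, c)]                                # the collection A with D = 1
```

In practice the one-hot vectors would usually be stored as integer indices; the explicit vectors are kept here only to mirror the notation of the text.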

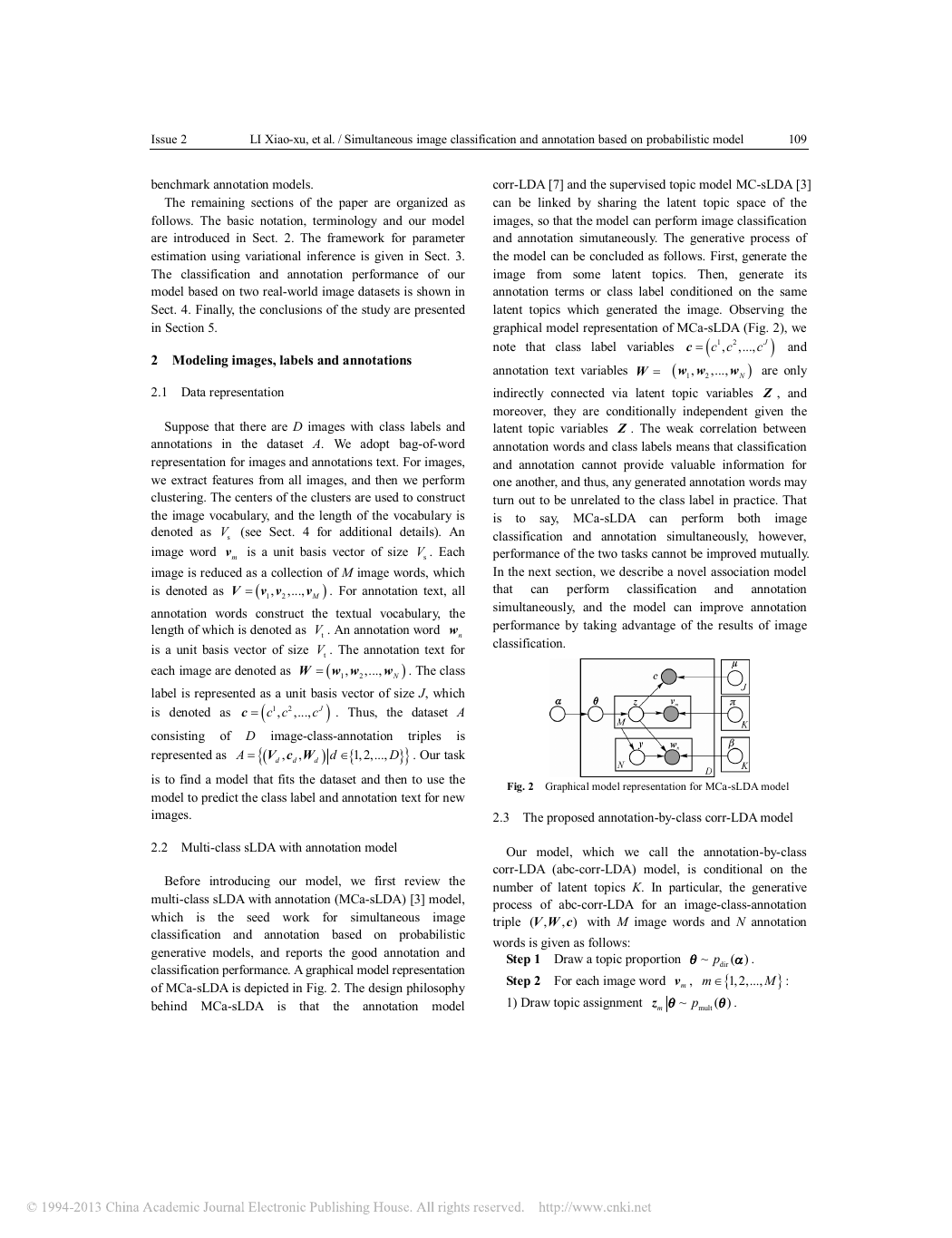

2.2 Multi-class sLDA with annotation model

Before introducing our model, we first review the multi-class sLDA with annotation (MCa-sLDA) [3] model, which is the seminal work on simultaneous image classification and annotation based on probabilistic generative models and reports good annotation and classification performance. A graphical model representation of MCa-sLDA is depicted in Fig. 2. The design philosophy behind MCa-sLDA is that the annotation model corr-LDA [7] and the supervised topic model MC-sLDA [3] can be linked by sharing the latent topic space of the images, so that the model can perform image classification and annotation simultaneously. The generative process of the model can be summarized as follows. First, generate the image from some latent topics. Then, generate its annotation terms or class label conditioned on the same latent topics which generated the image. Observing the graphical model representation of MCa-sLDA (Fig. 2), we note that class label variables $c$ and annotation text variables $w$ are only indirectly connected via latent topic variables $Z$, and moreover, they are conditionally independent given the latent topic variables $Z$. The weak correlation between annotation words and class labels means that classification and annotation cannot provide valuable information for one another, and thus, any generated annotation words may turn out to be unrelated to the class label in practice. That is to say, MCa-sLDA can perform both image classification and annotation simultaneously; however, performance of the two tasks cannot be improved mutually. In the next section, we describe a novel association model that can perform classification and annotation simultaneously, and the model can improve annotation performance by taking advantage of the results of image classification.

Fig. 2 Graphical model representation for MCa-sLDA model

2.3 The proposed annotation-by-class corr-LDA model

Our model, which we call the annotation-by-class corr-LDA (abc-corr-LDA) model, is conditional on the number of latent topics K. In particular, the generative process of abc-corr-LDA for an image-class-annotation triple $(V, W, c)$ with M image words and N annotation words is given as follows:

Step 1 Draw a topic proportion $\theta \sim p_{\mathrm{dir}}(\theta \mid \alpha)$.

Step 2 For each image word $v_m$, $m \in \{1, 2, \ldots, M\}$:

1) Draw topic assignment $z_m \sim p_{\mathrm{mult}}(z_m \mid \theta)$.

2) Draw image word $v_m \sim p_{\mathrm{mult}}(v_m \mid z_m, \pi)$.

Step 3 Draw class label $c \sim p_{\mathrm{softmax}}(c \mid \bar{z}, \mu)$, where $\bar{z} = \frac{1}{M} \sum_{m=1}^{M} z_m$ is the empirical frequencies of the topics. The formulation of the softmax function is:

$$p(c \mid \bar{z}, \mu) = \frac{\exp(\mu_c^{T} \bar{z})}{\sum_{l=1}^{J} \exp(\mu_l^{T} \bar{z})} \qquad (1)$$

Step 4 For each annotation word $w_n$, $n \in \{1, 2, \ldots, N\}$:

1) Draw topic identifier $y_n \sim p_{\mathrm{unif}}(y_n \mid 1, 2, \ldots, M)$.

2) Draw annotation word $w_n \sim p_{\mathrm{mult}}(w_n \mid y_n, z, \beta, c)$.

In the above formulation, ‘$p_{\mathrm{dir}}$’, ‘$p_{\mathrm{softmax}}$’, ‘$p_{\mathrm{mult}}$’ and ‘$p_{\mathrm{unif}}$’ represent Dirichlet, Softmax, Multinomial and Uniform distribution, respectively. Our model specifies a joint distribution over latent variables and observation variables. Let $E = \{V, W, c\}$, $H = \{\theta, z, y\}$ and $O = \{\alpha, \mu, \pi, \beta\}$, then

$$p(E, H \mid O) = p(\theta \mid \alpha) \left( \prod_{m=1}^{M} p(z_m \mid \theta)\, p(v_m \mid z_m, \pi) \right) p(c \mid \bar{z}, \mu) \prod_{n=1}^{N} p(y_n \mid M)\, p(w_n \mid y_n, z, \beta, c) \qquad (2)$$

A graphical representation of the model is depicted

in Fig. 3.

Fig. 3 Graphical model representation for abc-corr-LDA model

In the generative process, Steps 1 and 2 assume that the image words of each triple arise from a set of topics. An image-topic is a distribution over the image vocabulary, and all topics are shared by the entire image collection. Each image has its own proportion of topics $\theta$, which is randomly drawn from a Dirichlet distribution. The two steps describe how to generate the image part of a triple.

Step 3 describes the generative process of the class label, which is drawn from a Softmax regression conditioned on the empirical frequencies of the topics of an image, $\bar{z}$. In the Softmax, each parameter can be thought of as a template of the corresponding category, and the class label of the template most similar to an image will be assigned to the image.
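The softmax of Eq. (1) can be sketched in a few lines. The parameter values and topic assignments below are hypothetical toy numbers, and the function names are introduced only for this illustration; each row of `mu` plays the role of one class template.

```python
import math

def empirical_topic_frequencies(z, num_topics):
    """z holds one topic index per image word; returns the vector z-bar."""
    counts = [0.0] * num_topics
    for k in z:
        counts[k] += 1.0
    return [cnt / len(z) for cnt in counts]

def class_probabilities(mu, z_bar):
    """Eq. (1): softmax over the scores mu_c^T z_bar, one score per class c."""
    scores = [sum(m_k * z_k for m_k, z_k in zip(mu_c, z_bar)) for mu_c in mu]
    top = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Toy example: J = 2 classes, K = 3 topics, M = 4 image words.
mu = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]]     # hypothetical class templates
z_bar = empirical_topic_frequencies([0, 0, 1, 0], num_topics=3)
probs = class_probabilities(mu, z_bar)
```

Here the image is dominated by topic 0, so the class whose template weights topic 0 most heavily receives the larger probability.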

The above three steps introduce the procedures generating image words and class label; we now turn to Step 4, which generates annotation words. In the joint distribution $p(E, H \mid O)$, the part $p(w_n \mid y_n, z, \beta, c)$ shows that a direct dependence is designed between class label c and annotation text w. Given a class c, annotation text will be generated from the corresponding topic of annotation terms. In particular, the class label c must first be chosen, and an identifier of image topic $y_n$ is drawn from some image empirical topic. Finally, an annotation word $w_n$ is drawn from the class-annotation-topic $\beta_{c, z_{y_n}}$.

The generative process of our model can be summarized as follows. First generate an image from some latent topics, and then generate the class label of the image according to the latent topics. Finally, generate the annotation words of the image according to the class label and the latent topics of the image. Compared with MCa-sLDA, our model focuses more on modeling the relationship between class label and annotation so that classification can be used to improve annotation.
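The four generative steps can be sketched as a sampler. This is a minimal illustration under stated assumptions, not the authors' code: all parameter values are toy numbers, topics and words are represented by integer indices rather than unit basis vectors, and the function names are hypothetical.

```python
import math
import random

def sample_dirichlet(alpha, rng):
    """Draw from a Dirichlet distribution by normalizing Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def sample_index(probs, rng):
    """Draw one index from a discrete distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def generate_triple(alpha, pi, mu, beta, M, N, rng):
    """pi[k] is the image-word distribution of topic k;
    beta[c][k] is the annotation-word distribution of topic k under class c."""
    K = len(alpha)
    theta = sample_dirichlet(alpha, rng)                     # Step 1
    z = [sample_index(theta, rng) for _ in range(M)]         # Step 2, 1)
    v = [sample_index(pi[k], rng) for k in z]                # Step 2, 2)
    z_bar = [z.count(k) / M for k in range(K)]
    scores = [sum(m * f for m, f in zip(mu_c, z_bar)) for mu_c in mu]
    c = sample_index(softmax(scores), rng)                   # Step 3, Eq. (1)
    y = [rng.randrange(M) for _ in range(N)]                 # Step 4, 1)
    w = [sample_index(beta[c][z[y_n]], rng) for y_n in y]    # Step 4, 2)
    return v, w, c

rng = random.Random(0)
alpha = [0.5, 0.5]                                           # K = 2 topics
pi = [[0.7, 0.2, 0.1], [0.1, 0.2, 0.7]]                      # V_s = 3 image words
mu = [[3.0, -3.0], [-3.0, 3.0]]                              # J = 2 classes
beta = [[[0.9, 0.1], [0.5, 0.5]], [[0.5, 0.5], [0.1, 0.9]]]  # beta[c][k][word], V_t = 2
v, w, c = generate_triple(alpha, pi, mu, beta, M=8, N=3, rng=rng)
```

The line for Step 4, 2) is where the class enters annotation: the word distribution is looked up by both the class `c` and the topic of the selected image word.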

3 Variational inference and parameter estimation

3.1 Variational inference

The posterior distribution of the latent variables conditioned on an image-class-annotation triple, $p(H \mid V, c, W)$, is intractable to compute. In this study, we use a variational inference method in Ref. [12] to approximate this distribution. We begin by finding the lower bound of the log probability given a triple:

$$L(I; O, E) = E_q[\ln p(E, H \mid O)] - E_q[\ln q(H \mid I)] \qquad (3)$$

where q is a variational distribution over the latent variables and $I = \{\gamma, \phi, \lambda\}$ represents variational parameters. In particular, q is defined as the factorized distribution:

$$q(H \mid I) = q(\theta \mid \gamma) \prod_{m=1}^{M} q(z_m \mid \phi_m) \prod_{n=1}^{N} q(y_n \mid \lambda_n) \qquad (4)$$

where $\gamma$ is a K-dimensional Dirichlet parameter, $\phi_m$ is a K-dimensional multinomial parameter, and $\lambda_n$ is an M-dimensional multinomial parameter.

Given a model $O = \{\alpha, \mu, \pi, \beta\}$ and a triple $E = \{V, W, c\}$, we maximize the lower bound with respect to the variational parameters $I = \{\gamma, \phi, \lambda\}$, which is

equivalent to minimizing the KL-divergence between this

factorized distribution and the true posterior. We use

coordinate ascent, repeatedly optimizing with respect to

each parameter while holding the other parameters fixed.

To update the posterior Dirichlet parameter $\gamma$, the procedure is the same as in Ref. [1]:

$$\gamma_i = \alpha_i + \sum_{m=1}^{M} \phi_{mi} \qquad (5)$$

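To update the parameter $\phi_m$, we adopt similar optimization to Ref. [3], and choose the terms including $\phi_m$ from $L$:

$$L_{[\phi_m]} = \sum_{i=1}^{K} \phi_{mi} \Big( \Psi(\gamma_i) - \Psi\Big( \sum_{j=1}^{K} \gamma_j \Big) \Big) + \sum_{i=1}^{K} \sum_{j=1}^{V_s} \phi_{mi} v_m^{j} \ln \pi_{ij} + \sum_{n=1}^{N} \sum_{l=1}^{J} \sum_{i=1}^{K} \sum_{j=1}^{V_t} c^{l} w_n^{j} \lambda_{nm} \phi_{mi} \ln \beta_{lij} + \frac{1}{M} \mu_c^{T} \phi_m - \ln \Big( \sum_{l=1}^{J} E_q\big[ \exp(\mu_l^{T} \bar{z}) \big] \Big) - \sum_{i=1}^{K} \phi_{mi} \ln \phi_{mi}$$

Maximizing the above equation under the constraint $\sum_{i=1}^{K} \phi_{mi} = 1$ leads to

$$\phi_{mi} \propto \pi_{i v_m} \exp \Big( \Psi(\gamma_i) + \frac{1}{M} \mu_{ci} - \big( a^{T} \phi_m^{\mathrm{old}} \big)^{-1} a_i + \sum_{n=1}^{N} \sum_{l=1}^{J} \sum_{j=1}^{V_t} c^{l} w_n^{j} \lambda_{nm} \ln \beta_{lij} \Big) \qquad (6)$$

where the notation ‘$\propto$’ means ‘is proportional to’, and

$$a_i = \sum_{l=1}^{J} \exp\Big( \frac{1}{M} \mu_{li} \Big) \prod_{f=1, f \neq m}^{M} \Big( \sum_{j=1}^{K} \phi_{fj} \exp\Big( \frac{1}{M} \mu_{lj} \Big) \Big)$$

Note that $\phi_m^{\mathrm{old}}$ is the previous value.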

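The terms, including $\lambda_{nm}$, with the appropriate Lagrange multiplier are:

$$L_{[\lambda_{nm}]} = \sum_{l=1}^{J} \sum_{i=1}^{K} \sum_{j=1}^{V_t} c^{l} w_n^{j} \lambda_{nm} \phi_{mi} \ln \beta_{lij} - \lambda_{nm} \ln \lambda_{nm} + \eta_n \Big( \sum_{m=1}^{M} \lambda_{nm} - 1 \Big)$$

Setting $\partial L_{[\lambda_{nm}]} / \partial \lambda_{nm} = 0$ leads to

$$\lambda_{nm} \propto \exp \Big( \sum_{l=1}^{J} \sum_{i=1}^{K} \sum_{j=1}^{V_t} c^{l} w_n^{j} \phi_{mi} \ln \beta_{lij} \Big) \qquad (7)$$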

Updating γ requires φ, updating φ requires λ and

γ, and updating λ requires φ, which naturally leads to

an inference algorithm. Eqs. (5)–(7) are therefore invoked

repeatedly until the lower bound of the log probability in

Eq. (3) converges.
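Two of the coordinate-ascent updates, Eqs. (5) and (7), can be sketched without any special functions. The sketch below assumes the class label is known (so the sum over classes with the one-hot label selects a single slice `beta_c`) and that annotation words are given as integer indices; the function names and toy values are hypothetical. The update of Eq. (6) is omitted because it additionally needs the digamma function.

```python
import math

def update_gamma(alpha, phi):
    """Eq. (5): gamma_i = alpha_i + sum over image words m of phi[m][i]."""
    K = len(alpha)
    return [alpha[i] + sum(phi_m[i] for phi_m in phi) for i in range(K)]

def update_lambda(phi, beta_c, annotation_ids):
    """Eq. (7) for a known class: lambda[n][m] is proportional to
    exp(sum_i phi[m][i] * ln beta_c[i][w_n]), normalized over m."""
    lam = []
    for w_n in annotation_ids:
        log_weights = [
            sum(p * math.log(beta_c[i][w_n]) for i, p in enumerate(phi_m))
            for phi_m in phi
        ]
        top = max(log_weights)               # stabilize before exponentiating
        weights = [math.exp(lw - top) for lw in log_weights]
        total = sum(weights)
        lam.append([wt / total for wt in weights])
    return lam

# Toy example: K = 2 topics, M = 2 image words, one annotation word (index 0).
alpha = [0.5, 0.5]
phi = [[1.0, 0.0], [0.0, 1.0]]
beta_c = [[0.9, 0.1], [0.2, 0.8]]            # beta for the image's class, beta_c[i][word]
gamma = update_gamma(alpha, phi)
lam = update_lambda(phi, beta_c, annotation_ids=[0])
```

In the toy case each image word is assigned to exactly one topic, so the annotation word is attributed to the image words in proportion to how likely their topics are to emit it.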

3.2 Parameter estimation

We use the variational expectation-maximization (EM) algorithm framework to obtain the approximate maximum likelihood estimation of model parameters $O = \{\alpha, \mu, \pi, \beta\}$. During the E-step, we use variational inference to obtain approximate posterior distributions for each triple, which simplifies optimization in the M-step. During the M-step, we maximize the lower bound on the log probability of the collection A, that is, maximizing $L(A) = \sum_{d=1}^{D} L(I_d; O, E_d)$ with respect to the model parameters $O = \{\alpha, \mu, \pi, \beta\}$. The variational EM algorithm alternates between these two steps until $L(A)$ converges.

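We isolate the terms, including $\pi_{ij}$, from $L(A)$, and add the appropriate Lagrange multipliers, then

$$L_{[\pi_{ij}]} = \sum_{d=1}^{D} \sum_{m=1}^{M_d} \phi_{dmi} v_{dm}^{j} \ln \pi_{ij} + \eta_i \Big( \sum_{j=1}^{V_s} \pi_{ij} - 1 \Big)$$

Letting $\partial L_{[\pi_{ij}]} / \partial \pi_{ij} = 0$ leads to

$$\pi_{ij} \propto \sum_{d=1}^{D} \sum_{m=1}^{M_d} \phi_{dmi} v_{dm}^{j} \qquad (8)$$

The terms, including $\beta_{lij}$, are: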

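$$L_{[\beta_{lij}]} = \sum_{d=1}^{D} \sum_{n=1}^{N_d} \sum_{m=1}^{M_d} c_d^{l} w_{dn}^{j} \phi_{dmi} \lambda_{dnm} \ln \beta_{lij}$$

Maximizing the above equation under the constraint $\sum_{j=1}^{V_t} \beta_{lij} = 1$ leads to

$$\beta_{lij} \propto \sum_{d=1}^{D} \sum_{n=1}^{N_d} \sum_{m=1}^{M_d} c_d^{l} w_{dn}^{j} \phi_{dmi} \lambda_{dnm} \qquad (9)$$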
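Eqs. (8) and (9) amount to accumulating expected counts and normalizing each row. A minimal sketch follows, assuming words and class labels are stored as integer indices; the function names and toy inputs are hypothetical, and the uniform fallback for an empty row is an assumption of this sketch, not something the paper specifies.

```python
def normalize(row):
    total = sum(row)
    if total == 0.0:
        # Assumption of this sketch: fall back to a uniform row if nothing was counted.
        return [1.0 / len(row)] * len(row)
    return [x / total for x in row]

def m_step_pi(images, phis, K, V_s):
    """Eq. (8): pi[i][j] is proportional to the sum of phi[d][m][i]
    over all image words m of all images d whose codeword is j."""
    counts = [[0.0] * V_s for _ in range(K)]
    for image_words, phi in zip(images, phis):
        for v_m, phi_m in zip(image_words, phi):
            for i in range(K):
                counts[i][v_m] += phi_m[i]
    return [normalize(row) for row in counts]

def m_step_beta(labels, annotations, phis, lams, J, K, V_t):
    """Eq. (9): beta[l][i][j] accumulates phi[d][m][i] * lambda[d][n][m]
    for images d of class l and annotation words n equal to word j."""
    counts = [[[0.0] * V_t for _ in range(K)] for _ in range(J)]
    for c_d, words, phi, lam in zip(labels, annotations, phis, lams):
        for w_n, lam_n in zip(words, lam):
            for phi_m, lam_nm in zip(phi, lam_n):
                for i in range(K):
                    counts[c_d][i][w_n] += phi_m[i] * lam_nm
    return [[normalize(row) for row in per_class] for per_class in counts]

# Toy example: one image with two image words and one annotation word.
phis = [[[1.0, 0.0], [0.0, 1.0]]]
pi = m_step_pi(images=[[0, 1]], phis=phis, K=2, V_s=2)
beta = m_step_beta(labels=[0], annotations=[[1]], phis=phis,
                   lams=[[[0.5, 0.5]]], J=1, K=2, V_t=2)
```

Because the class label indexes the first dimension of `beta`, annotation statistics from different classes never mix, which is how the model keeps a separate set of annotation topics per class.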

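Let $\kappa_{cd} = \prod_{m=1}^{M_d} \Big( \sum_{i=1}^{K} \phi_{dmi} \exp\Big( \frac{1}{M_d} \mu_{ci} \Big) \Big)$ for convenience, then

$$L_{[\mu]} = \sum_{d=1}^{D} \sum_{m=1}^{M_d} \sum_{i=1}^{K} \frac{1}{M_d} \phi_{dmi} \mu_{c_d i} - \sum_{d=1}^{D} \ln \Big( \sum_{c=1}^{J} \kappa_{cd} \Big) \qquad (10)$$

where the subscript $c_d$ denotes the index of the class of image d.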

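Taking the derivative with respect to $\mu_{ci}$ yields:

$$\frac{\partial L_{[\mu]}}{\partial \mu_{ci}} = \sum_{d=1}^{D} c_d^{c} \frac{1}{M_d} \sum_{m=1}^{M_d} \phi_{dmi} - \sum_{d=1}^{D} \frac{\kappa_{cd}}{\sum_{l=1}^{J} \kappa_{ld}} \sum_{m=1}^{M_d} \frac{\frac{1}{M_d} \phi_{dmi} \exp\big( \frac{1}{M_d} \mu_{ci} \big)}{\sum_{j=1}^{K} \phi_{dmj} \exp\big( \frac{1}{M_d} \mu_{cj} \big)} \qquad (11)$$

where $c_d^{c}$ equals 1 if image d belongs to class c and 0 otherwise, and $\kappa_{cd}$ is the quantity defined before Eq. (10).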

This is not a closed-form solution. Therefore, we adopt the conjugate gradient method to optimize $\mu_{ci}$ [13].
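Before handing a gradient to a conjugate gradient routine, it is common to check it against finite differences. The sketch below does this for the contribution of a single image to the objective of Eq. (10) and its derivative; it is an illustrative reading of those equations with hypothetical function names and toy values, not the authors' implementation, and the optimizer itself is not shown.

```python
import math

def kappa(mu_c, phi):
    """Product over image words of sum_i phi[m][i] * exp(mu_c[i] / M)."""
    M = len(phi)
    prod = 1.0
    for phi_m in phi:
        prod *= sum(p * math.exp(m / M) for p, m in zip(phi_m, mu_c))
    return prod

def objective(mu, phi, label):
    """Single-image part of Eq. (10): linear term minus log of the summed kappas."""
    M = len(phi)
    linear = sum(p * m for phi_m in phi for p, m in zip(phi_m, mu[label])) / M
    return linear - math.log(sum(kappa(mu_c, phi) for mu_c in mu))

def gradient(mu, phi, label):
    """Single-image part of the derivative with respect to every mu[c][i]."""
    M, K = len(phi), len(phi[0])
    kappas = [kappa(mu_c, phi) for mu_c in mu]
    total = sum(kappas)
    grad = []
    for c, mu_c in enumerate(mu):
        row = []
        for i in range(K):
            observed = sum(phi_m[i] for phi_m in phi) / M if c == label else 0.0
            ratio = sum(
                phi_m[i] * math.exp(mu_c[i] / M)
                / sum(p * math.exp(m / M) for p, m in zip(phi_m, mu_c))
                for phi_m in phi
            ) / M
            row.append(observed - kappas[c] / total * ratio)
        grad.append(row)
    return grad

# Toy example: J = 2 classes, K = 2 topics, M = 3 image words, true class 1.
phi = [[0.7, 0.3], [0.2, 0.8], [0.5, 0.5]]
mu = [[0.4, -1.0], [1.5, 0.3]]
grad = gradient(mu, phi, label=1)
```

Summing `objective` and `gradient` over all training images gives the quantities a generic conjugate gradient optimizer would need.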

3.3 Image classification and annotation

In the previous sections, we introduced our model and provided a scheme to estimate the model’s parameters. In this section, we introduce the procedures used to predict class label and annotations. In the test sets, the images are not labeled or annotated. For every image in the test sets, we first use the LDA [1] inference step to solve for $q(\theta, z \mid \gamma, \phi)$. This is equivalent to removing the terms, including $\lambda$, from Eq. (4), and removing the terms, including $\mu$, from Eq. (6).

For classification, we classify the images by using the empirical frequencies of the image-topics $\bar{z}$. The class label with the maximum probability is assigned to the given image, which is equivalent to choosing a class label which maximizes the expectation of $\mu_c^{T} \bar{z}$. Let $\bar{\phi} = \frac{1}{M} \sum_{m=1}^{M} \phi_m$, which results in the following formulation:

$$c^{*} = \arg\max_{c \in \{1, 2, \ldots, J\}} E_q\big[ \mu_c^{T} \bar{z} \big] = \arg\max_{c \in \{1, 2, \ldots, J\}} \mu_c^{T} \bar{\phi} \qquad (12)$$

For annotation, we use the following formulation:

$$p(w \mid v, c) \approx \sum_{m=1}^{M} \sum_{z_m} p(w \mid z_m, \beta, c)\, q(z_m \mid \phi_m) \qquad (13)$$

Note that the class label $c$ is obtained from the procedure used to predict classification.
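The two prediction rules can be sketched as follows, assuming the variational parameters `phi` of a test image have already been inferred. The function names, vocabulary and parameter values are hypothetical, and the annotation score is computed only up to a constant factor, which does not change the ranking of words.

```python
def mean_phi(phi):
    M, K = len(phi), len(phi[0])
    return [sum(phi_m[i] for phi_m in phi) / M for i in range(K)]

def predict_class(mu, phi):
    """Eq. (12): choose the class c that maximizes mu_c^T phi-bar."""
    phi_bar = mean_phi(phi)
    scores = [sum(m * p for m, p in zip(mu_c, phi_bar)) for mu_c in mu]
    return max(range(len(mu)), key=scores.__getitem__)

def annotation_scores(beta_c, phi):
    """Eq. (13) up to normalization: sum over image words and topics of
    phi[m][i] * beta_c[i][w], where beta_c belongs to the predicted class."""
    V_t = len(beta_c[0])
    return [
        sum(phi_m[i] * beta_c[i][w] for phi_m in phi for i in range(len(phi_m)))
        for w in range(V_t)
    ]

def top_words(scores, vocab, n):
    order = sorted(range(len(scores)), key=lambda w: scores[w], reverse=True)
    return [vocab[w] for w in order[:n]]

# Toy example: J = 2 classes, K = 2 topics, V_t = 3 annotation words.
vocab = ["sky", "water", "road"]
mu = [[1.0, 0.0], [0.0, 1.0]]
beta = [
    [[0.6, 0.3, 0.1], [0.1, 0.1, 0.8]],      # beta[0][topic][word]
    [[0.2, 0.2, 0.6], [0.3, 0.3, 0.4]],      # beta[1][topic][word]
]
phi = [[0.9, 0.1], [0.8, 0.2]]
c_star = predict_class(mu, phi)
words = top_words(annotation_scores(beta[c_star], phi), vocab, n=2)
```

The annotation step only consults `beta[c_star]`, so words that are improbable under the predicted class are ranked low regardless of the image topics.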

4 Experiments

We test our model on a 1 600-image subset of the

LabelMe dataset from Ref. [11] and a 1 791-image subset

of the UIUC-Sport dataset from Ref. [5]. The LabelMe

data contains eight classes: ‘street’, ‘tall building’,

‘highway’, ‘open country’, ‘inside city’, ‘forest’, ‘coast’

and ‘mountain’. Each class contains 200 images. The

UIUC-Sport data contains eight classes: ‘badminton’,

‘polo’, ‘croquet’, ‘bocce’, ‘rock climbing’, ‘sailing’,

‘rowing’ and ‘snowboarding’. The number of images in

each class varies from 137 (bocce) to 329 (croquet), and

the total number of images in the dataset is 1 791.

For the LabelMe data, the preprocessing steps are:

1) Apply grid-sampling technique (the grid size is

5 × 5). Extract a 16 × 16 patch from each of the grid

centers, and then represent each patch using a

128-dimensional SIFT [14] region descriptor.

2) Run the

k-means algorithm [15] over these

descriptors, and set the number of the centers as 240. Then

all of the centers constitute a codebook of images.

Construct the annotation vocabulary using all of the

different annotation words.

3) Finally, remove the annotation terms that occurred

fewer than three times for the two data sets, and evenly split

each class to create training and testing sets.

For the UIUC-Sport data, we extract 2 500 patches uniformly for each image in this dataset. The size of each patch is 32 × 32. The other steps are the same as for the LabelMe data. Note that all testing is on images that are not labeled or annotated.
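Once the codebook has been learned, each patch descriptor is mapped to its nearest cluster center to obtain an image word. The sketch below illustrates only this assignment step, using made-up 2-dimensional descriptors and three centers in place of 128-dimensional SIFT descriptors and 240 centers; the function names are hypothetical and the k-means training itself is not shown.

```python
def nearest_center(descriptor, centers):
    """Index of the codebook center closest to the descriptor (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(centers)), key=lambda k: sq_dist(descriptor, centers[k]))

def quantize_image(descriptors, centers):
    """Turn the patch descriptors of one image into a list of image-word indices."""
    return [nearest_center(d, centers) for d in descriptors]

# Toy codebook with three centers and four patch descriptors.
centers = [[0.0, 0.0], [10.0, 10.0], [0.0, 10.0]]
descriptors = [[1.0, 1.0], [9.0, 8.0], [0.0, 9.0], [2.0, 0.0]]
image_words = quantize_image(descriptors, centers)
```

The resulting index list is the bag-of-words form of the image used by the model.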

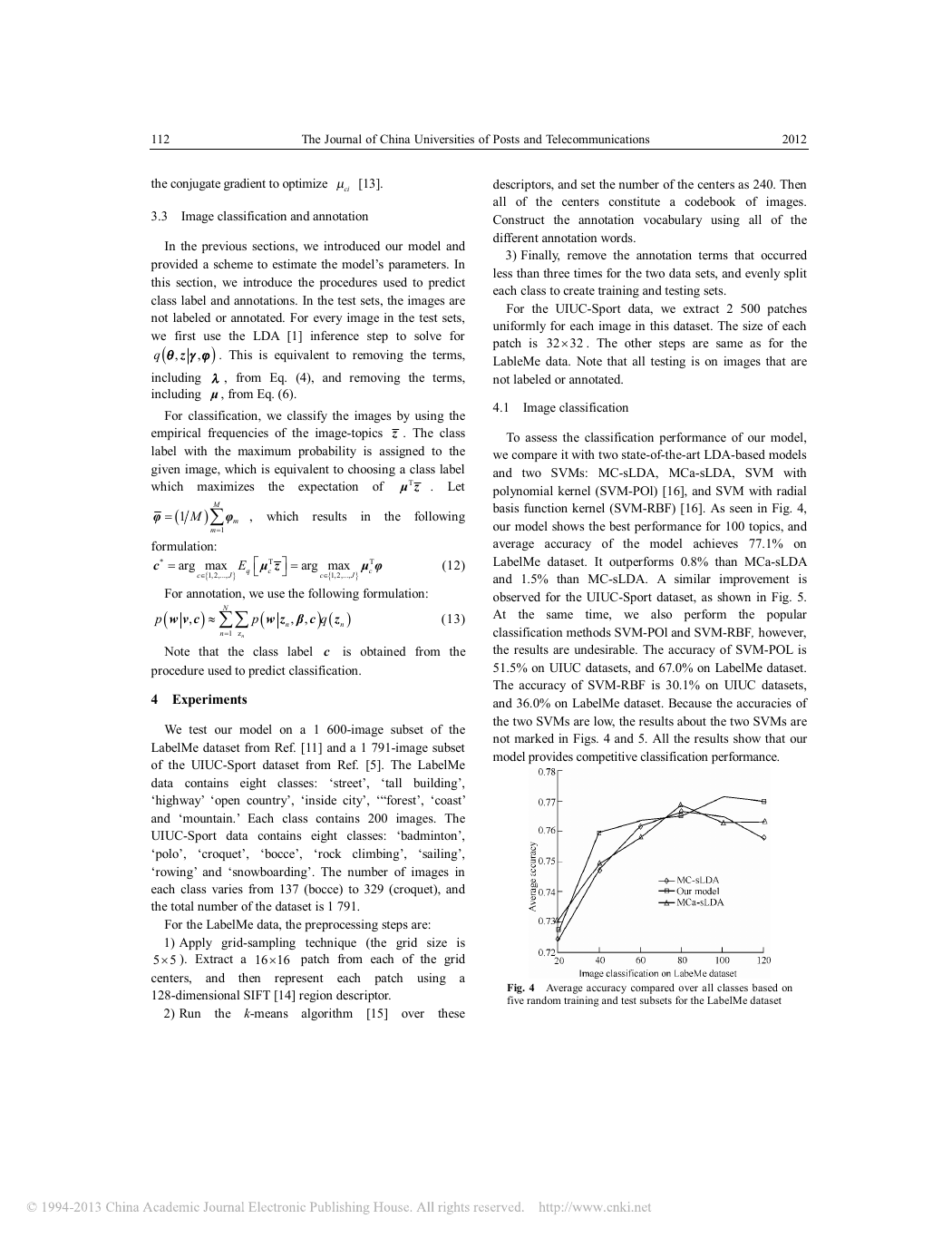

4.1 Image classification

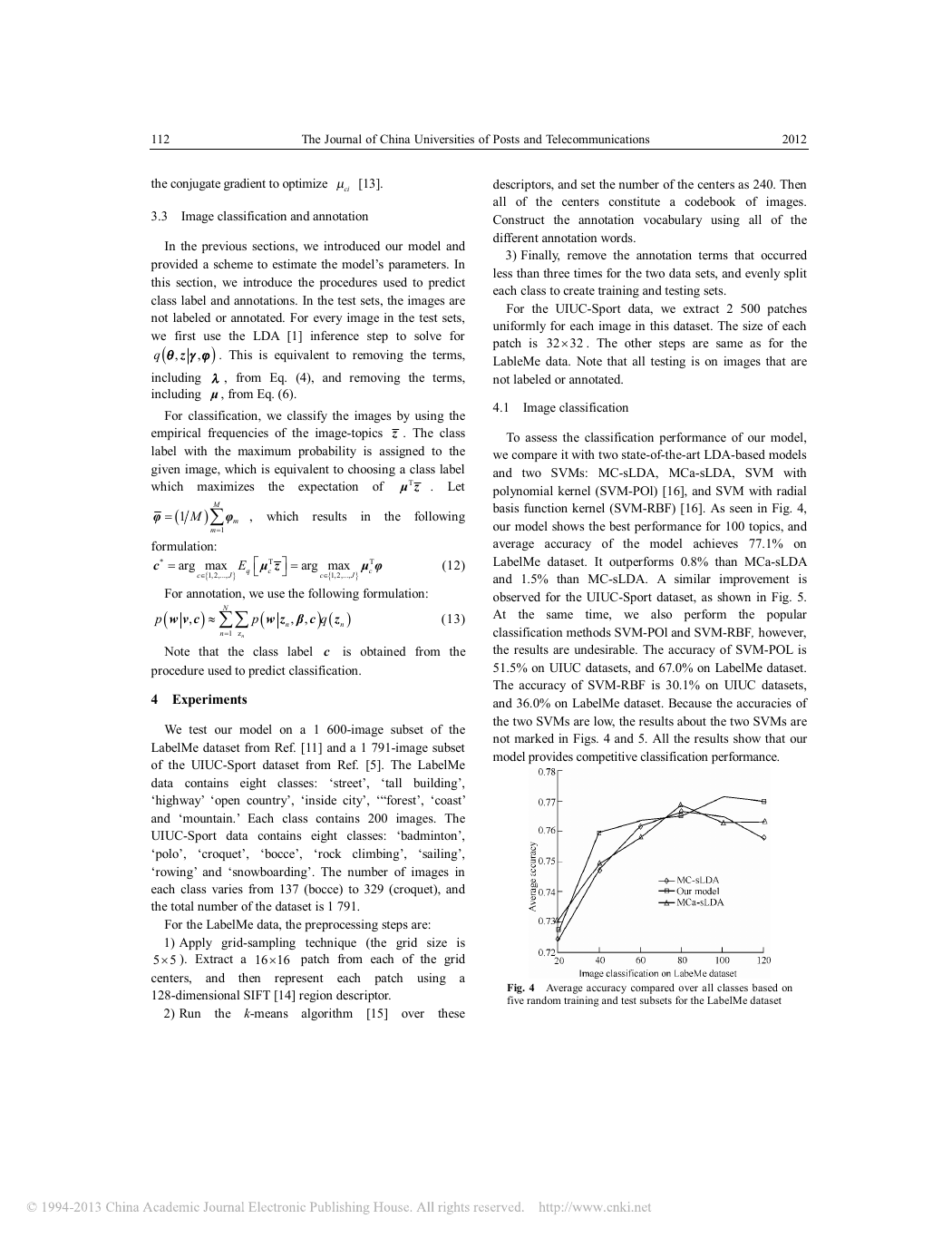

To assess the classification performance of our model, we compare it with two state-of-the-art LDA-based models and two SVMs: MC-sLDA, MCa-sLDA, SVM with polynomial kernel (SVM-POL) [16], and SVM with radial basis function kernel (SVM-RBF) [16]. As seen in Fig. 4, our model shows the best performance for 100 topics, where its average accuracy reaches 77.1% on the LabelMe dataset. It outperforms MCa-sLDA by 0.8% and MC-sLDA by 1.5%. A similar improvement is observed for the UIUC-Sport dataset, as shown in Fig. 5. We also run the popular classification methods SVM-POL and SVM-RBF; however, their results are poor. The accuracy of SVM-POL is 51.5% on the UIUC-Sport dataset and 67.0% on the LabelMe dataset. The accuracy of SVM-RBF is 30.1% on the UIUC-Sport dataset and 36.0% on the LabelMe dataset. Because the accuracies of the two SVMs are low, their results are not plotted in Figs. 4 and 5. All the results show that our model provides competitive classification performance.

Fig. 4 Average accuracy compared over all classes based on

five random training and test subsets for the LabelMe dataset

�

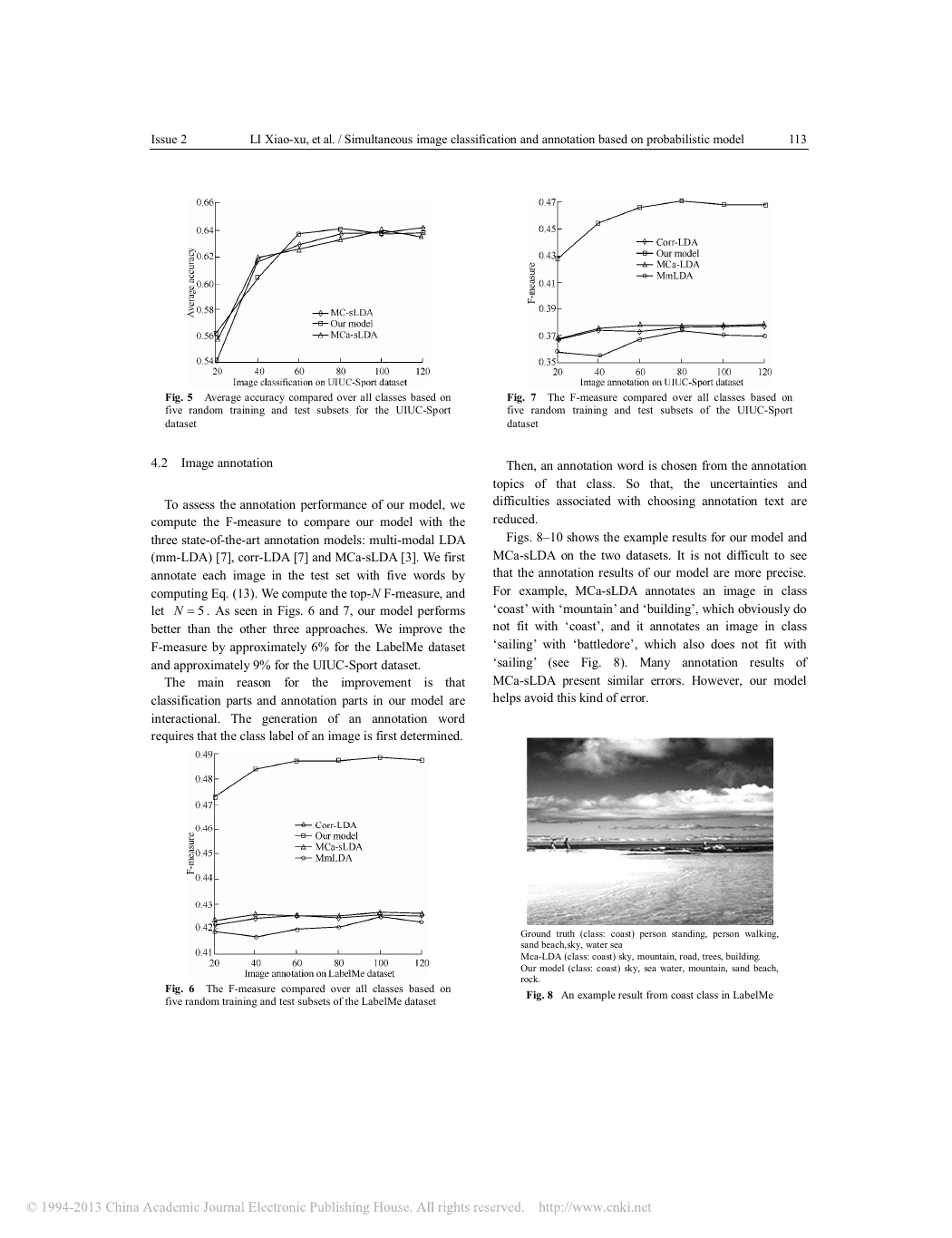

Issue 2 LI Xiao-xu, et al. / Simultaneous image classification and annotation based on probabilistic model 113

Fig. 5 Average accuracy compared over all classes based on

five random training and test subsets for the UIUC-Sport

dataset

4.2 Image annotation

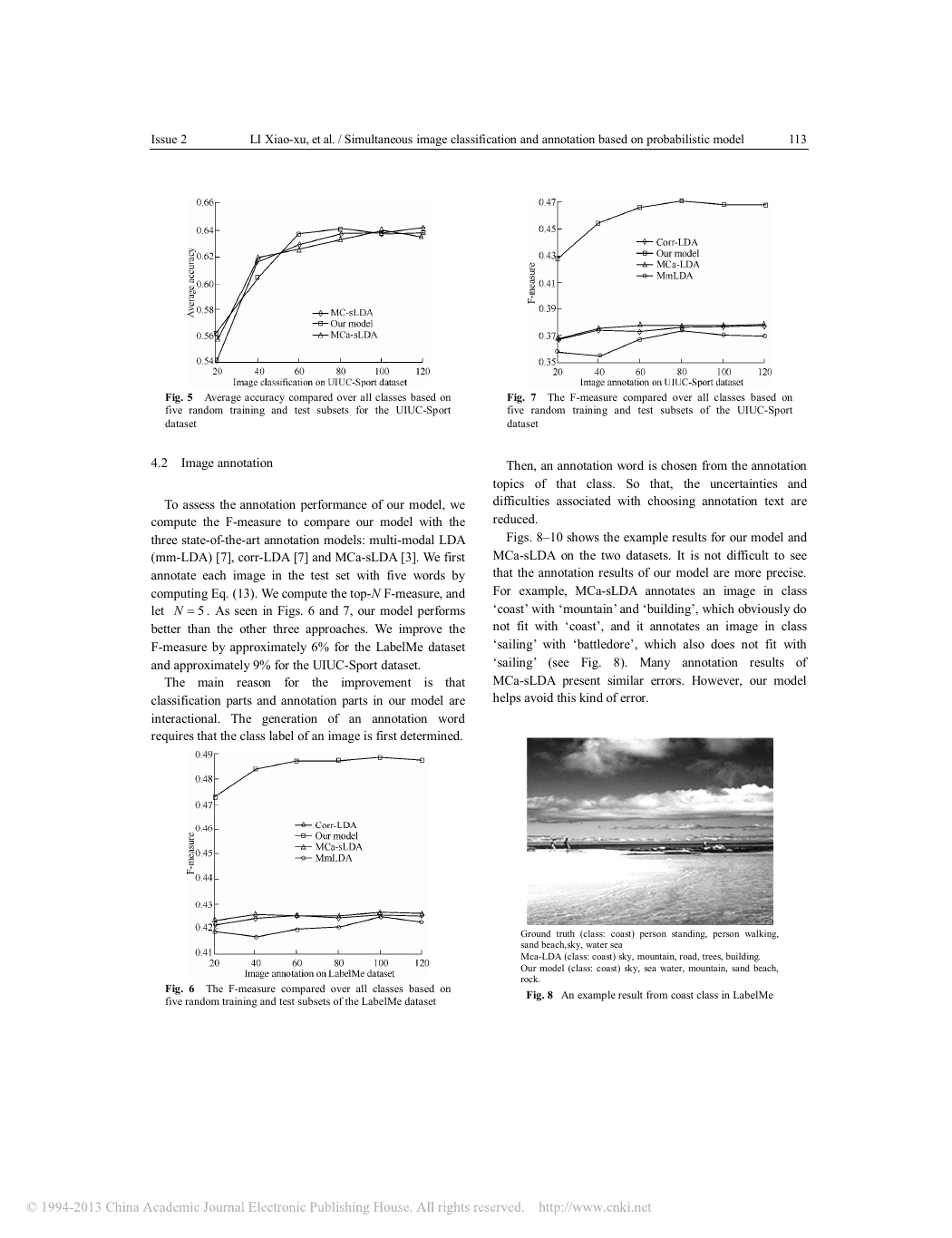

To assess the annotation performance of our model, we

compute the F-measure to compare our model with the

three state-of-the-art annotation models: multi-modal LDA

(mm-LDA) [7], corr-LDA [7] and MCa-sLDA [3]. We first

annotate each image in the test set with five words by

computing Eq. (13). We compute the top-N F-measure, and let $N = 5$. As seen in Figs. 6 and 7, our model performs better than the other three approaches. We improve the F-measure by approximately 6% for the LabelMe dataset and approximately 9% for the UIUC-Sport dataset.
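For a single image, a top-N F-measure can be computed from the predicted and ground-truth word sets as the harmonic mean of precision and recall. The sketch below shows one common way to do this with made-up word lists; how the scores are averaged over images and classes in the reported figures is not spelled out in the text, so that part is left out.

```python
def f_measure(predicted, ground_truth):
    """Harmonic mean of precision and recall for one image's annotation words."""
    predicted_set, truth_set = set(predicted), set(ground_truth)
    hits = len(predicted_set & truth_set)
    if hits == 0:
        return 0.0
    precision = hits / len(predicted_set)
    recall = hits / len(truth_set)
    return 2 * precision * recall / (precision + recall)

# Hypothetical example with N = 5 predicted words.
truth = ["sky", "sea", "sand", "person", "rock"]
predicted = ["sky", "sea", "mountain", "sand", "tree"]
score = f_measure(predicted, truth)
```

Three of the five predicted words are correct and three of the five true words are recovered, so precision and recall both equal 0.6 in this toy case.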

The main reason for the improvement is that the classification and annotation parts of our model interact. The generation of an annotation word requires that the class label of an image is first determined. Then, an annotation word is chosen from the annotation topics of that class. As a result, the uncertainties and difficulties associated with choosing annotation text are reduced.

Fig. 7 The F-measure compared over all classes based on five random training and test subsets of the UIUC-Sport dataset

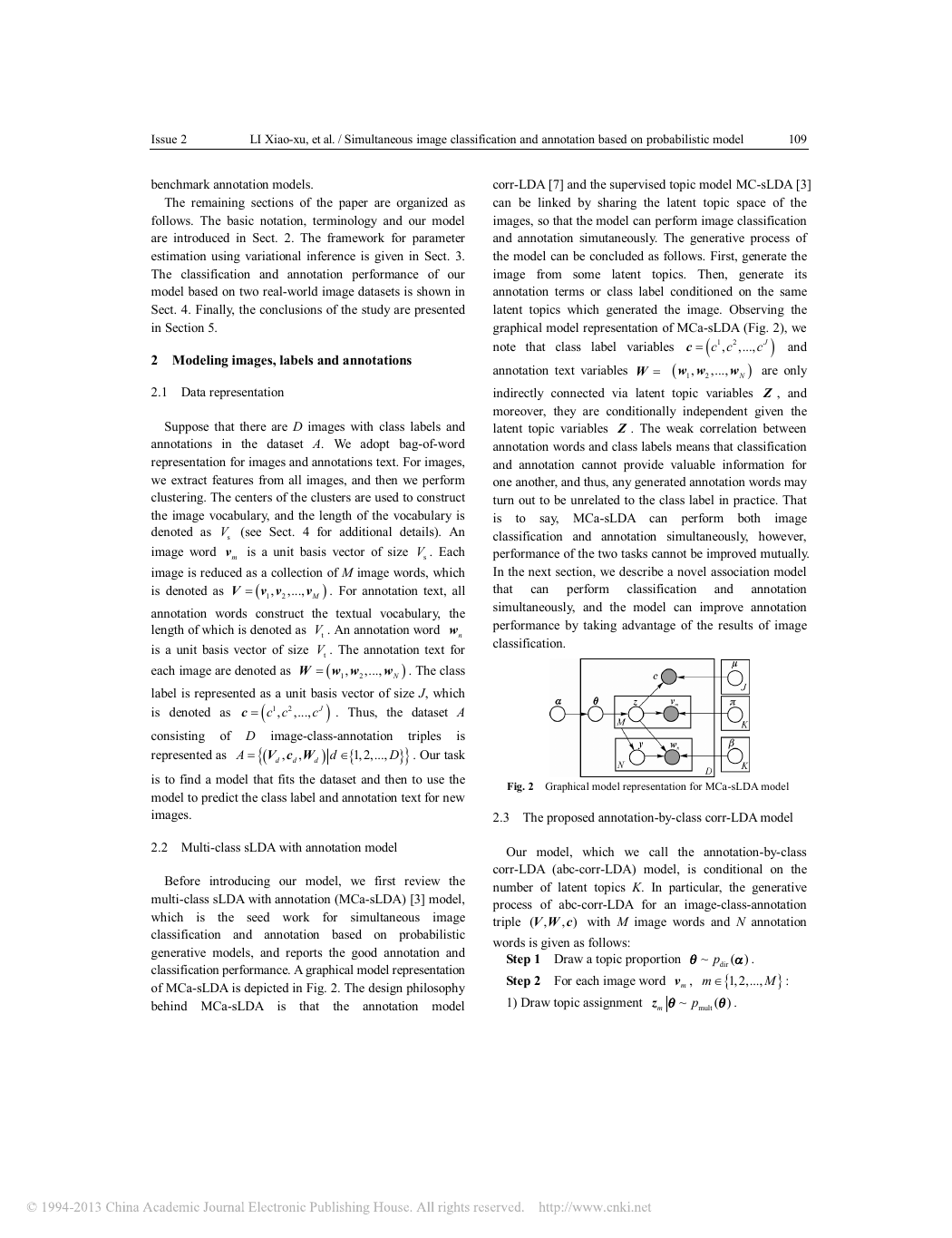

Figs. 8–10 show example results for our model and MCa-sLDA on the two datasets. It is not difficult to see that the annotation results of our model are more precise. For example, MCa-sLDA annotates an image in class ‘coast’ with ‘mountain’ and ‘building’, which obviously do not fit with ‘coast’ (see Fig. 8), and it annotates an image in class ‘sailing’ with ‘battledore’, which also does not fit with ‘sailing’ (see Fig. 10). Many annotation results of MCa-sLDA present similar errors. However, our model helps avoid this kind of error.

Fig. 6 The F-measure compared over all classes based on

five random training and test subsets of the LabelMe dataset

Ground truth (class: coast) person standing, person walking, sand beach, sky, water sea.

MCa-sLDA (class: coast) sky, mountain, road, trees, building.

Our model (class: coast) sky, sea water, mountain, sand beach, rock.

Fig. 8 An example result from coast class in LabelMe


Ground truth (class: rowing) athlete, bank, boat, lake, oar, post, railing, spectator, tree, wall.

MCa-sLDA (class: rowing) athlete, sky, tree, water, rowboat.

Our model (class: rowing) athlete, oar, rowboat, water, lake.

Fig. 9 An example result from rowing class in UIUC

Ground truth (class: sailing) athlete, bank, floater, sailing boat, sky, water.

MCa-sLDA (class: sailing) athlete, sky, sailing boat, water, battledore.

Our model (class: sailing) sky, water, athlete, sailing boat, floater.

Fig. 10 An example result from sailing class in UIUC

5 Conclusions

This paper proposes a novel probabilistic model called annotation-by-class corr-LDA for simultaneous image classification and annotation. The approximate inference and parameter estimation algorithms of the model are derived, and efficient approximations for classifying and annotating new images are also given. Based on previous work, our model introduces the insight that once a category is assigned to an image, the scope of annotation can be reduced. As such, the model can reduce the probability of generating unrelated annotation words, so that it can improve the annotation performance based on category information. The performance of the model is demonstrated on two real-world datasets. The classification performance is on par with several state-of-the-art classification models, while the annotation performance is superior to several state-of-the-art annotation models. The results also show the appropriateness of our approach.

Based on the proposed model’s generative process, its predicting procedure and the experiment results, we confirm that classification provides valuable information for annotation, but annotation only has a small effect on classification. In future work, we plan to study how to utilize annotation procedures to improve classification performance so as to iteratively enhance performance of both classification and annotation processes.

Acknowledgements

This work was supported by the Major Research Plan of the National Natural Science Foundation of China (90920006).

References

1. Blei D M, Ng A Y, Jordan M I. Latent Dirichlet allocation. Journal of Machine Learning Research, 2003, 3(4/5): 993-1022

2. Li F F, Perona P. A Bayesian hierarchical model for learning natural scene categories. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’05): Vol 2, Jun 20-25, 2005, San Diego, CA, USA. Los Alamitos, CA, USA: IEEE Computer Society, 2005: 524-531

3. Wang C, Blei D M, Li F F. Simultaneous image classification and annotation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’09), Jun 20-25, 2009, Miami, FL, USA. Los Alamitos, CA, USA: IEEE Computer Society, 2009: 1903-1910

4. Cao L L, Li F F. Spatially coherent latent topic model for concurrent segmentation and classification of object and scenes. Proceedings of the 11th IEEE International Conference on Computer Vision (ICCV’07), Oct 14-21, 2007, Rio de Janeiro, Brasil. Piscataway, NJ, USA: IEEE, 2007: 8p

5. Li L J, Li F F. What, where and who? Classifying event by scene and object recognition. Proceedings of the 11th IEEE International Conference on Computer Vision (ICCV’07), Oct 14-21, 2007, Rio de Janeiro, Brasil. Piscataway, NJ, USA: IEEE, 2007: 8p

6. Quelhas P, Monay F, Odobez J M, et al. Modeling scenes with local descriptors and latent aspects. Proceedings of the 10th IEEE International Conference on Computer Vision (ICCV’05): Vol 1, Oct 17-21, 2005, Beijing, China. Piscataway, NJ, USA: IEEE, 2005: 883-890

7. Blei D M, Jordan M I. Modeling annotated data. Proceedings of 26th International Conference on Research and Development in Information Retrieval (SIGIR’03), Jul 28-Aug 1, 2003, Toronto, Canada. New York, NY, USA: ACM, 2003: 127-134

8. Putthividhya D, Attias H T, Nagarajan S S. Supervised topic model for automatic image annotation. Proceedings of the 35th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP’10), Mar 14-19, 2010, Dallas, TX, USA. Piscataway, NJ, USA: IEEE, 2010: 1894-1897

9. Blei D M, McAuliffe J D. Supervised topic models. Proceedings of the 21st Annual Conference on Neural Information Processing Systems (NIPS’07), Dec 7-8, 2007, Whistler, Canada. Cambridge, MA, USA: MIT Press, 2007: 121-128

10. Putthividhya D, Attias H T, Nagarajan S S. Topic regression multi-modal latent Dirichlet allocation for image annotation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’10), Jun 13-18, 2010, San Francisco, CA, USA. Los Alamitos, CA, USA: IEEE Computer Society, 2010: 3408-3415
