564

IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS, VOL. 47, NO. 4, AUGUST 2017

Toward an Enhanced Human–Machine Interface for Upper-Limb Prosthesis Control With Combined EMG and NIRS Signals

Weichao Guo, Student Member, IEEE, Xinjun Sheng, Member, IEEE, Honghai Liu, Senior Member, IEEE, and Xiangyang Zhu, Member, IEEE

Abstract—Advanced myoelectric prosthetic hands are currently limited by the lack of sufficient signal sources on the residual muscles after amputation and by inadequate real-time control performance. This paper presents a novel human–machine interface for prosthetic manipulation that combines the advantages of surface electromyography (EMG) and near-infrared spectroscopy (NIRS) to overcome the limitations of myoelectric control. Experiments with 13 able-bodied and three amputee subjects were carried out to evaluate both the offline classification accuracy (CA) and the online performance of a forearm motion recognition system based on three types of sensor information (EMG-only, NIRS-only, and hybrid EMG-NIRS). The experimental results showed that both the offline CA and the real-time performance of controlling a virtual prosthetic hand were significantly (p < 0.05) improved by combining EMG and NIRS. These findings suggest that fusing EMG and NIRS is a feasible way to improve the control of upper-limb prostheses without increasing the number of sensor nodes or the complexity of signal processing. The outcomes of this study have great potential to promote the development of dexterous prosthetic hands for transradial amputees.

Index Terms—Near-infrared spectroscopy (NIRS), pattern recognition, prosthesis control, sensor fusion, surface electromyography (EMG).

I. INTRODUCTION

UPPER-LIMB deficiency severely affects the ability of transradial amputees to perform activities of daily living (ADL). To improve their independence and quality of life, surface electromyography (EMG)-based prosthesis control has been widely investigated for several decades [1], [2]. It is grounded on the assumption that features extracted from the EMG signals of muscle contractions can be mapped to intended hand or wrist motions. Once the user's motor intention has been identified, a control command is sent to a prosthetic hand to perform the desired action. Because this control approach is intuitive, it has attracted the interest of many researchers [3]–[18]. It is now accepted that, with a proper combination of EMG features and classifiers, an offline classification accuracy (CA) above 95% can be attained for more than ten wrist and hand motions [18]–[20].

Manuscript received March 16, 2016; revised July 9, 2016 and September 20, 2016; accepted November 30, 2016. Date of publication January 4, 2017; date of current version July 13, 2017. This work was supported by the National Natural Science Foundation of China under Grant 51375296, Grant 51620105002, and Grant 51421092. This paper was recommended by Associate Editor K. Li. (Corresponding authors: Xiangyang Zhu and Xinjun Sheng.)

W. Guo, X. Sheng, and X. Zhu are with the State Key Laboratory of Mechanical Systems and Vibration, School of Mechanical Engineering, Shanghai Jiao Tong University, Shanghai 200240, China (e-mail: guoweichao90@gmail.com; xjsheng@sjtu.edu.cn; mexyzhu@sjtu.edu.cn).

H. Liu is with the State Key Laboratory of Mechanical Systems and Vibration, School of Mechanical Engineering, Shanghai Jiao Tong University, Shanghai 200240, China, and also with the School of Computing, University of Portsmouth, Portsmouth, PO1 3HE, U.K. (e-mail: honghai.liu@port.ac.uk).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/THMS.2016.2641389

Despite the promising offline CA, it remains unclear whether excellent offline performance transfers to equivalent online control performance [15]–[17], [20]. For example, using the Motion Test protocol [11], the average offline CA over five subjects was 94% for the intact arm, whereas the online motion completion rate (CR) was only 81.2% [15]. Similarly, the experimental results in [17] showed that although the offline CA could be as high as 92.1%, the real-time accuracy (RA) was below 67.4% and the online CR was below 87.3%. There is thus a performance gap between offline evaluation and online testing. Given the inadequate performance of EMG alone, other ways of improving real-time prosthesis control would be of great practical significance.

Control performance could potentially be improved by adding more EMG sensor nodes [8], [18], but this is impractical for amputees because of the lack of sufficient remnant muscles [9], [21]: the residual forearm of an amputee often provides too little surface area to accommodate many sensor channels. Moreover, adding sensor nodes also increases the complexity, weight, and cost of a prosthesis [15]. To be clinically relevant, an ideal upper-limb prosthesis control interface should rely on a minimal number of sensor channels and limited computational complexity [22]. Alternatively, combining EMG with other, complementary sensor modalities could improve prosthetic hand control [23]–[28]. By fusing artificial vision and myoelectric information, the hybrid systems of [24] and [25] offered simple and effective control of a dexterous prosthetic hand, albeit at the extra expense of an additional vision device. The combination of EMG and speech signals has also been shown to be a feasible way to enhance prosthesis control performance [26]; however, the user's speech signal is easily disturbed by ambient noise or by other people's speech commands.

2168-2291 © 2017 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See http://www.ieee.org/publications standards/publications/rights/index.html for more information.


GUO et al.: TOWARD AN ENHANCED HUMAN–MACHINE INTERFACE FOR UPPER-LIMB PROSTHESIS CONTROL


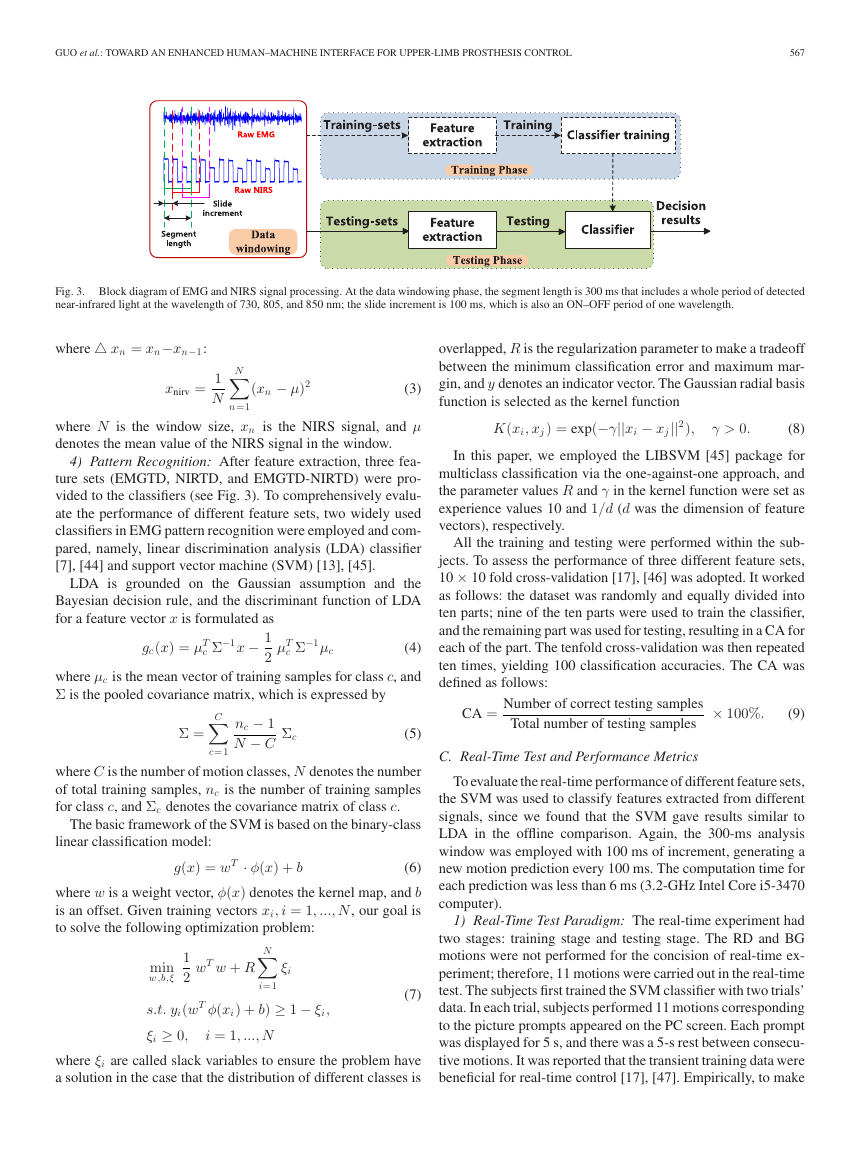

TABLE I
PROS AND CONS OF DIFFERENT BIOSENSORS

| Biosensors | Advantages | Disadvantages |
|---|---|---|
| EMG | good temporal resolution | sensitive to crosstalk and electronic interference |
| US | high spatial resolution | low device applicability |
| MMG | robust to sensor–skin interface | susceptible to movement artifact or ambient noise |
| NIRS | high spatial resolution | sensitive to muscle fatigue and optical noise |

Combined EMG and kinematic signals have also been shown to provide adequate prosthesis control [27]; note, however, that kinematic signals are susceptible to movement artifacts. Combining the advantages of electroencephalography (EEG) and EMG is a promising approach for biorobotics applications [28], such as the control of prostheses and exoskeletons. Fusion of EMG and EEG signals has been proposed as a hybrid brain–computer interface for disabled users, achieving good control performance even under muscular fatigue [29]. However, EEG decoding is still imperfect because of difficulties in signal acquisition, low data transfer rate, low accuracy, and low user adaptability [28], [30]–[32]; hybrid EMG-EEG signals therefore remain inadequate for upper-limb prosthesis control. In short, the above-mentioned sensor fusion approaches have difficulty meeting practical requirements because of their inherent drawbacks.

It seems necessary to combine EMG with other biosensors

to fulfill the requirements. Sonomyography, also known as ul-

trasound (US) imaging, was adopted by researchers to visual-

ize muscle structures [33]. Akhlaghi et al. [34] demonstrated

that the translation of morphological changes of forearm mus-

cles could be used to control a prosthetic hand. However, the

cumbersome US devices (especially probes) were difficult to

integrate with prostheses. Mechanomyography (MMG) signals

were the mechanical oscillations generated by contracting mus-

cles in form of low-frequency vibration or sound, which could

be measured by low-mass accelerometers or microphones [35].

Alves and Chau [36] presented that different types of muscle

activations were manifested as discernable MMG patterns show-

ing a CA of 90 ± 4% to identify 7 ± 1 hand motions, which was

feasible for prosthesis control. Nevertheless, MMG signals were

susceptible to movement artifact or ambient noise that limited

the practical application. Near-infrared spectroscopy (NIRS) al-

lowed monitoring of muscle oxygenation and perfusion during

muscle contraction and could be potentially used for prosthesis

control [37]. Although NIRS signal was sensitive to muscle fa-

tigue and optical noise, it offered a very good spatial resolution

[38] that could provide supplementary for myoelectric control.

Table I outlines the advantages and disadvantages of aforemen-

tioned biosensors. The authors have recently developed a hybrid

EMG/NIRS sensor system [39], [40] that could be small enough

to be integrated to the prostheses. Combining the advantages of

EMG and NIRS to obtain a more advanced performance would

be of great importance to fulfill the requirements of adequate

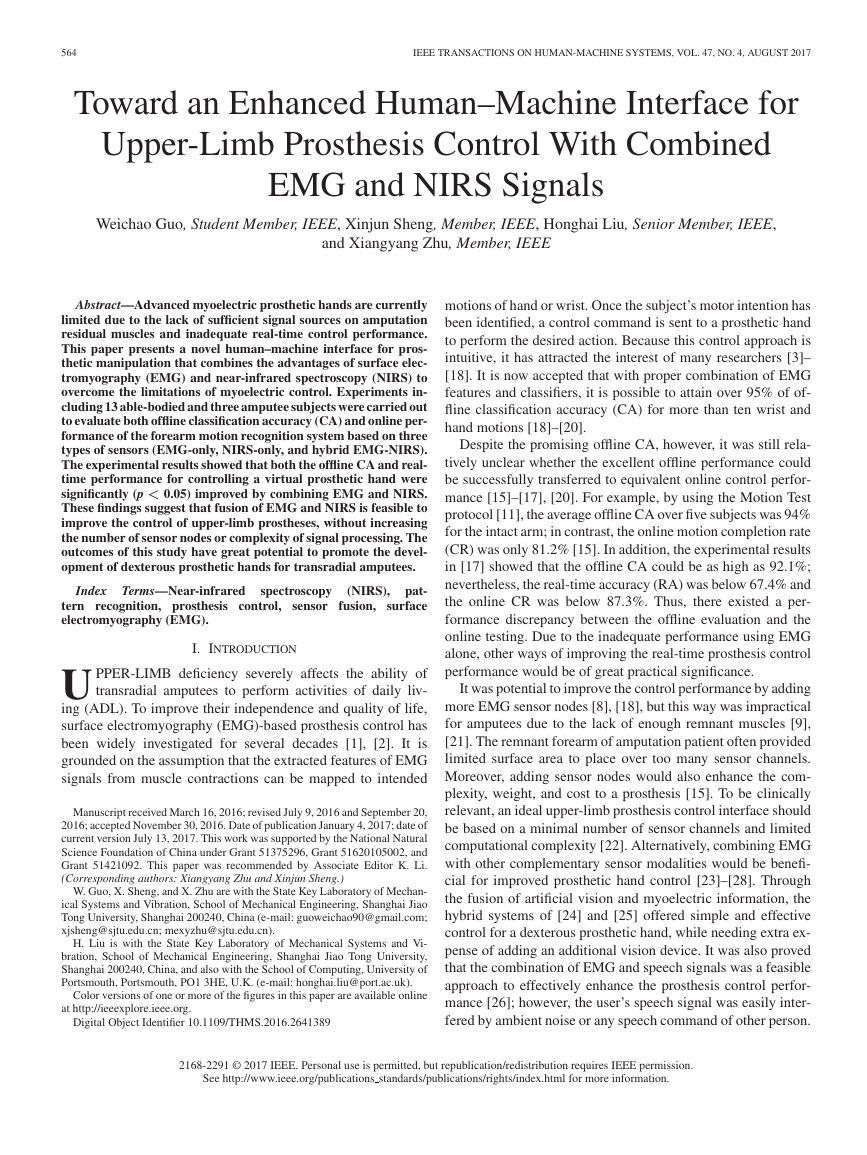

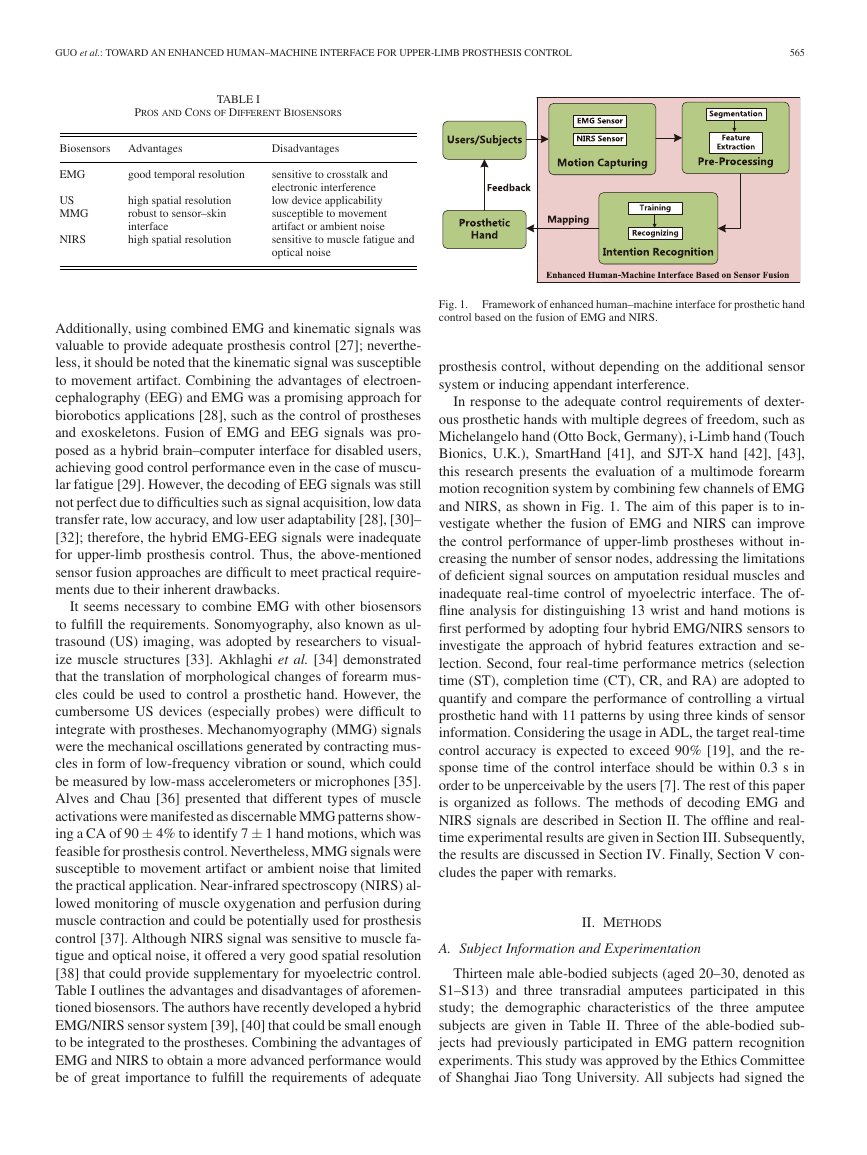

Fig. 1.

control based on the fusion of EMG and NIRS.

Framework of enhanced human–machine interface for prosthetic hand

prosthesis control, without depending on the additional sensor

system or inducing appendant interference.

To meet the control requirements of dexterous prosthetic hands with multiple degrees of freedom, such as the Michelangelo hand (Otto Bock, Germany), the i-Limb hand (Touch Bionics, U.K.), SmartHand [41], and the SJT-X hand [42], [43], this research evaluates a multimode forearm motion recognition system that combines a few channels of EMG and NIRS, as shown in Fig. 1. The aim of this paper is to investigate whether the fusion of EMG and NIRS can improve the control performance of upper-limb prostheses without increasing the number of sensor nodes, thereby addressing the limited signal sources available on residual muscles and the inadequate real-time control of myoelectric interfaces. First, an offline analysis distinguishing 13 wrist and hand motions is performed with four hybrid EMG/NIRS sensors to investigate hybrid feature extraction and selection. Second, four real-time performance metrics (selection time (ST), completion time (CT), CR, and RA) are adopted to quantify and compare the performance of controlling a virtual prosthetic hand with 11 patterns using the three kinds of sensor information. For use in ADL, the real-time control accuracy is expected to exceed 90% [19], and the response time of the control interface should be within 0.3 s so as to be imperceptible to users [7]. The rest of this paper is organized as follows. The methods of decoding EMG and NIRS signals are described in Section II. The offline and real-time experimental results are given in Section III. The results are discussed in Section IV. Finally, Section V concludes the paper.

II. METHODS

A. Subject Information and Experimentation

Thirteen male able-bodied subjects (aged 20–30, denoted as

S1–S13) and three transradial amputees participated in this

study; the demographic characteristics of the three amputee

subjects are given in Table II. Three of the able-bodied sub-

jects had previously participated in EMG pattern recognition

experiments. This study was approved by the Ethics Committee

of Shanghai Jiao Tong University. All subjects had signed the

�

566

IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS, VOL. 47, NO. 4, AUGUST 2017

DEMOGRAPHIC CHARACTERISTICS OF THE THREE AMPUTEE SUBJECTS

TABLE II

Subject ID

Gender

Age (years)

Affected side

Residual stump length

Cause of amputation

Time since amputation

Prosthesis usage

A1

A2

A3

Male

Male

Male

37

38

62

Right

Right

Left

23 cm

16 cm

16 cm

Tumor

Traumatic

Traumatic

9 years

10 years

9 years

All day, cosmetic

Half day, myoelectric

Half day, cosmetic

nm), and the near-infrared LEDs were switched ON and OFF

sequentially driven by a pulse train so that the detected signals

at each wavelength could be separated. The pulse frequency was

10 Hz, and the duty ratio was 50% [39].

2) Data Acquisition: During the experiments, the subjects

were required to naturally drop down their arms toward the

ground, and the following 13 types of contractions were per-

formed for ten steady-state repetitions: wrist flexion (WF), wrist

extension (WE), radial deviation (RD), ulnar deviation (UD),

pronation (PN), supination (SN), fist (FS), hand open (HO), in-

dex point (IP), fine pinch (FP), tripod grasp (TG), ball grasp

(BG), and rest. These motions were selected as they were fre-

quently encountered in ADL [9]. Each contraction was held for

5 s during a repetition to generate sufficient data for further of-

fline analysis including feature extraction and pattern classifica-

tion, which was widely adopted in similar studies [8], [13], [14].

Self-evaluated moderate contraction was used for all subjects,

and there was a 2-min break between two adjacent repetitions

to avoid muscle fatigue.

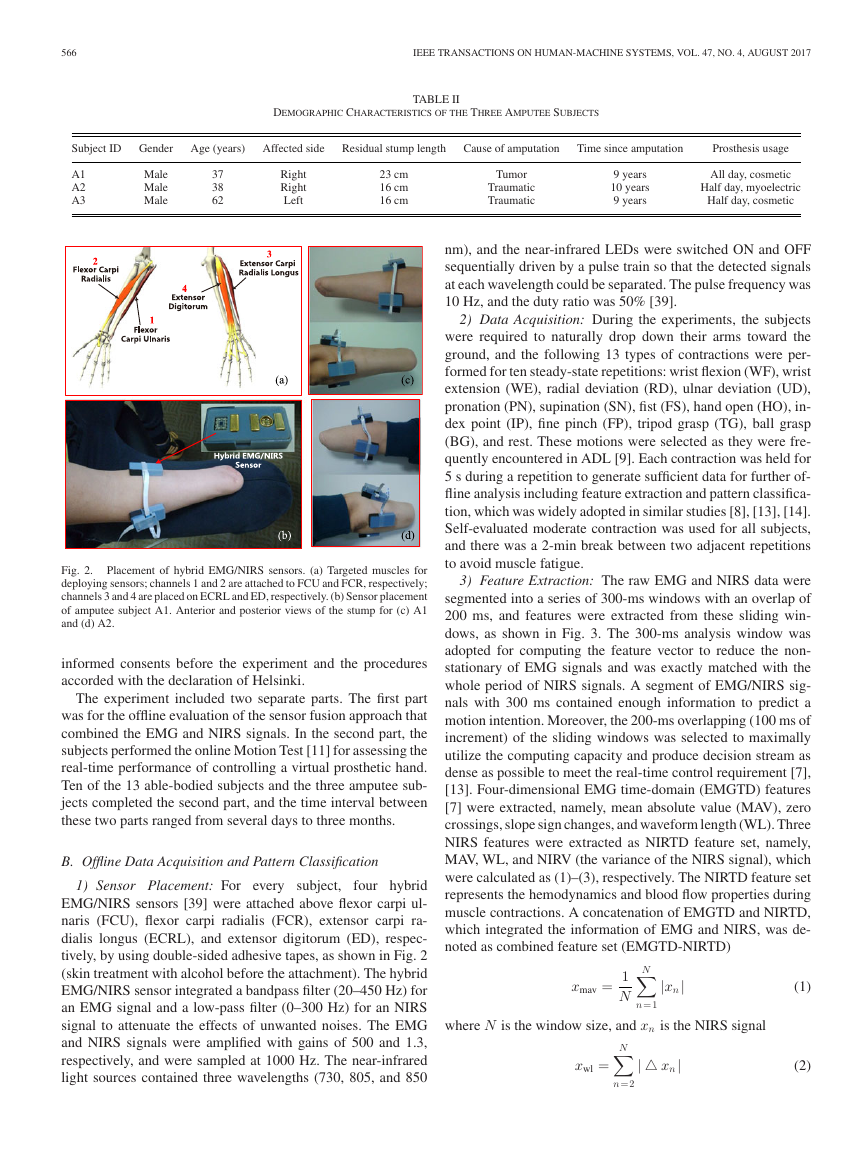

3) Feature Extraction: The raw EMG and NIRS data were

segmented into a series of 300-ms windows with an overlap of

200 ms, and features were extracted from these sliding win-

dows, as shown in Fig. 3. The 300-ms analysis window was

adopted for computing the feature vector to reduce the non-

stationary of EMG signals and was exactly matched with the

whole period of NIRS signals. A segment of EMG/NIRS sig-

nals with 300 ms contained enough information to predict a

motion intention. Moreover, the 200-ms overlapping (100 ms of

increment) of the sliding windows was selected to maximally

utilize the computing capacity and produce decision stream as

dense as possible to meet the real-time control requirement [7],

[13]. Four-dimensional EMG time-domain (EMGTD) features

[7] were extracted, namely, mean absolute value (MAV), zero

crossings, slope sign changes, and waveform length (WL). Three

NIRS features were extracted as NIRTD feature set, namely,

MAV, WL, and NIRV (the variance of the NIRS signal), which

were calculated as (1)–(3), respectively. The NIRTD feature set

represents the hemodynamics and blood flow properties during

muscle contractions. A concatenation of EMGTD and NIRTD,

which integrated the information of EMG and NIRS, was de-

noted as combined feature set (EMGTD-NIRTD)

xmav =

1

N

N

n=1

|xn|

where N is the window size, and xn is the NIRS signal

xwl =

N

n=2

| xn|

(1)

(2)

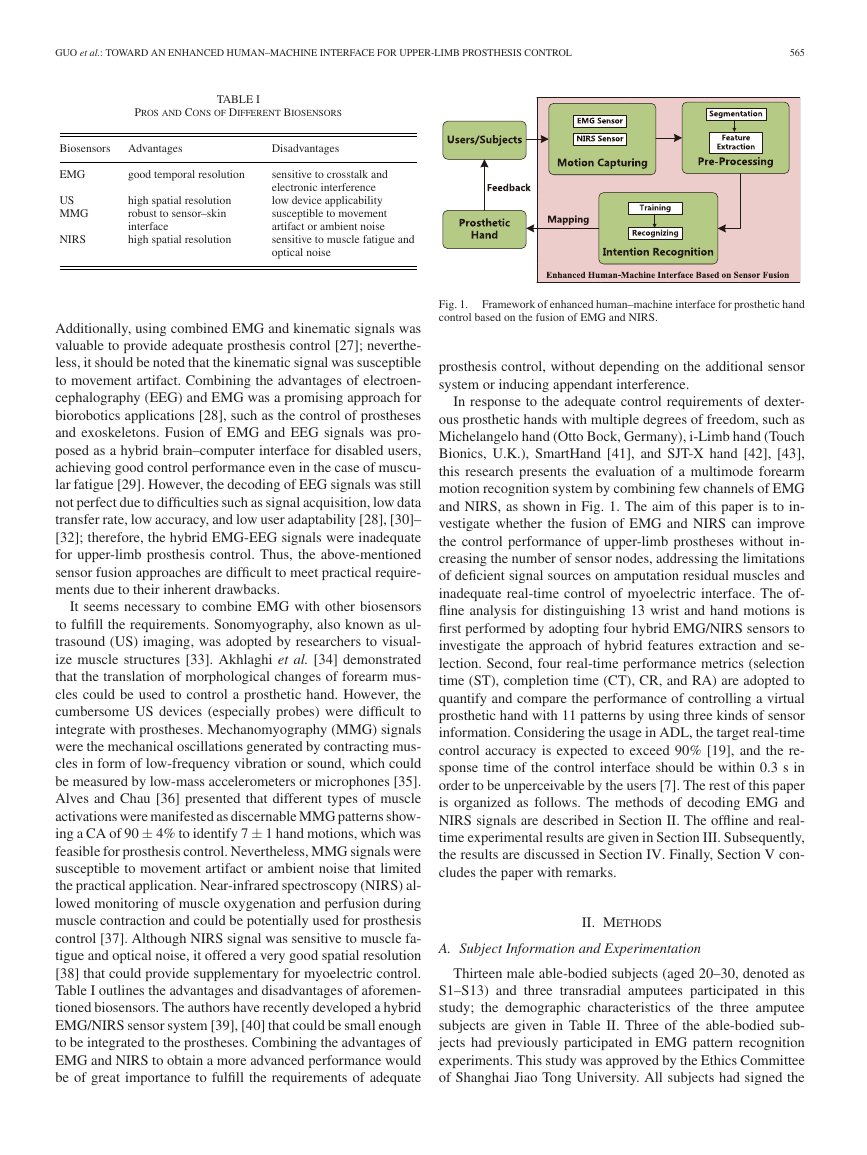

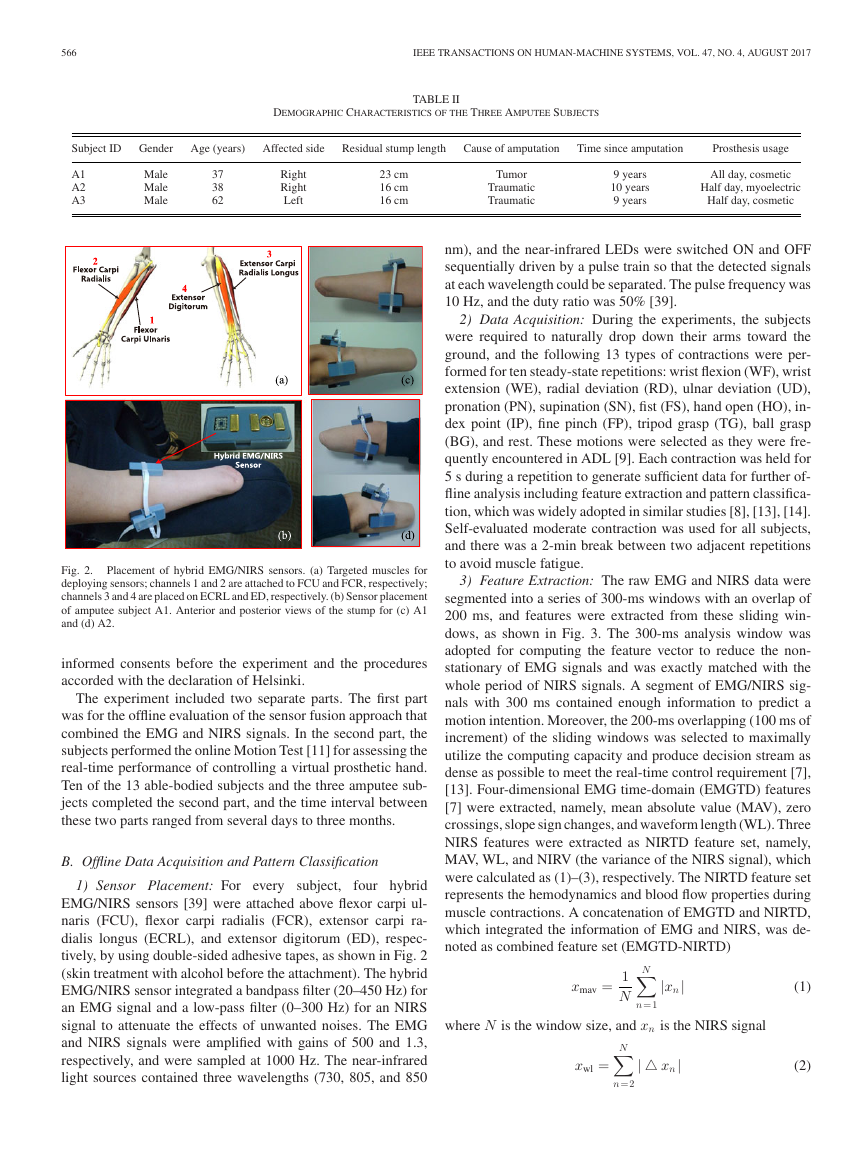

Fig. 2.

Placement of hybrid EMG/NIRS sensors. (a) Targeted muscles for

deploying sensors; channels 1 and 2 are attached to FCU and FCR, respectively;

channels 3 and 4 are placed on ECRL and ED, respectively. (b) Sensor placement

of amputee subject A1. Anterior and posterior views of the stump for (c) A1

and (d) A2.

informed consents before the experiment and the procedures

accorded with the declaration of Helsinki.

The experiment included two separate parts. The first part

was for the offline evaluation of the sensor fusion approach that

combined the EMG and NIRS signals. In the second part, the

subjects performed the online Motion Test [11] for assessing the

real-time performance of controlling a virtual prosthetic hand.

Ten of the 13 able-bodied subjects and the three amputee sub-

jects completed the second part, and the time interval between

these two parts ranged from several days to three months.

B. Offline Data Acquisition and Pattern Classification

1) Sensor Placement: For every subject,

four hybrid

EMG/NIRS sensors [39] were attached above flexor carpi ul-

naris (FCU), flexor carpi radialis (FCR), extensor carpi ra-

dialis longus (ECRL), and extensor digitorum (ED), respec-

tively, by using double-sided adhesive tapes, as shown in Fig. 2

(skin treatment with alcohol before the attachment). The hybrid

EMG/NIRS sensor integrated a bandpass filter (20–450 Hz) for

an EMG signal and a low-pass filter (0–300 Hz) for an NIRS

signal to attenuate the effects of unwanted noises. The EMG

and NIRS signals were amplified with gains of 500 and 1.3,

respectively, and were sampled at 1000 Hz. The near-infrared

light sources contained three wavelengths (730, 805, and 850

�

GUO et al.: TOWARD AN ENHANCED HUMAN–MACHINE INTERFACE FOR UPPER-LIMB PROSTHESIS CONTROL

567

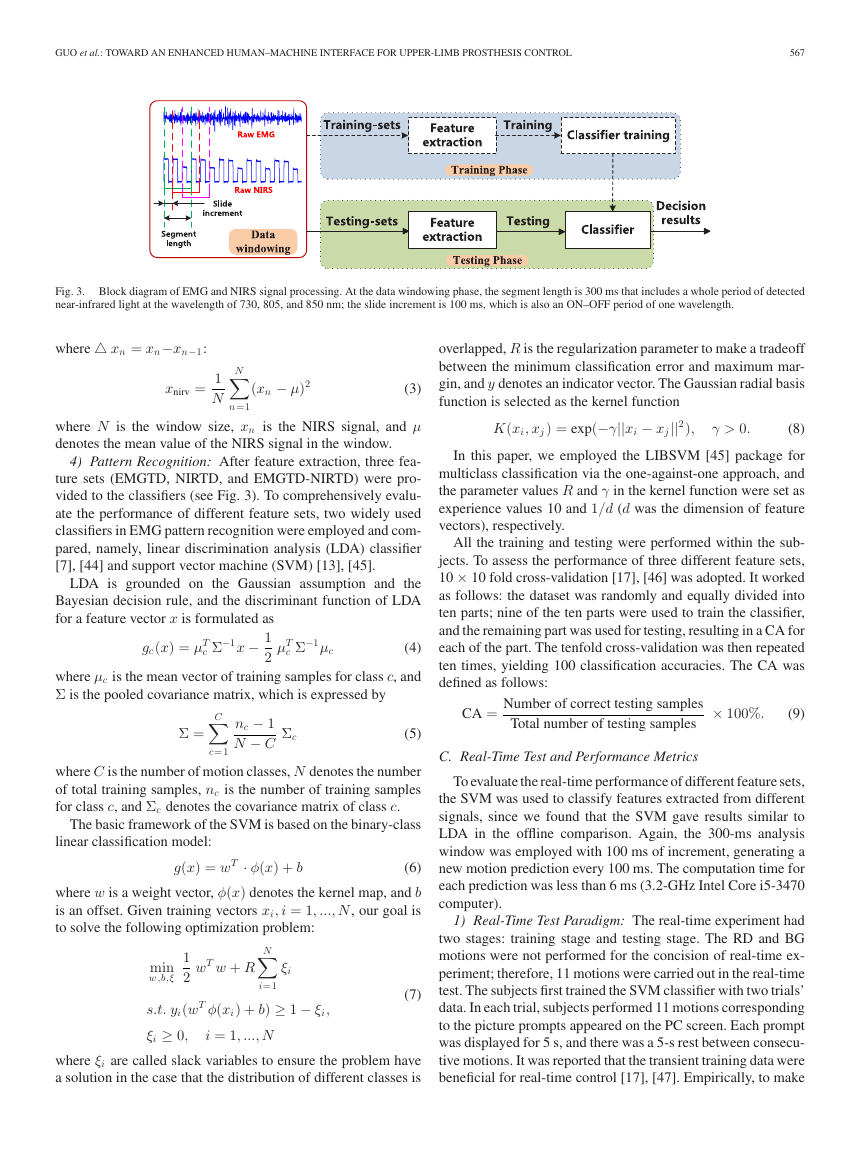

Fig. 3. Block diagram of EMG and NIRS signal processing. At the data windowing phase, the segment length is 300 ms that includes a whole period of detected

near-infrared light at the wavelength of 730, 805, and 850 nm; the slide increment is 100 ms, which is also an ON–OFF period of one wavelength.

where xn = xn−xn−1:

xnirv =

1

N

N

n=1

(xn − μ)2

(3)

where N is the window size, xn is the NIRS signal, and μ

denotes the mean value of the NIRS signal in the window.
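The windowing and feature definitions above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. It assumes 1000-Hz sampling (so the 300-ms window is 300 samples and the 100-ms increment is 100 samples), treats each sensor's NIRS output as one multiplexed signal, and uses common time-domain definitions of zero crossings and slope sign changes with a hypothetical noise threshold `eps`, since the text does not state thresholds. The helper names (`sliding_windows`, `combined_features`, etc.) are hypothetical. Under these assumptions, four sensors give a combined vector of 4 × 4 + 4 × 3 = 28 entries.

```python
from statistics import fmean
from typing import Iterator, List, Sequence

WIN = 300   # 300-ms window at an assumed 1000 Hz -> 300 samples
STEP = 100  # 100-ms increment (200-ms overlap) -> 100 samples


def sliding_windows(x: Sequence[float], win: int = WIN, step: int = STEP) -> Iterator[Sequence[float]]:
    """Yield successive windows of `win` samples, advancing by `step` samples."""
    for start in range(0, len(x) - win + 1, step):
        yield x[start:start + win]


def mav(w: Sequence[float]) -> float:
    """Mean absolute value, Eq. (1)."""
    return fmean(abs(v) for v in w)


def wl(w: Sequence[float]) -> float:
    """Waveform length, Eq. (2): sum of |x_n - x_{n-1}| for n = 2..N."""
    return sum(abs(w[n] - w[n - 1]) for n in range(1, len(w)))


def nirv(w: Sequence[float]) -> float:
    """Variance with 1/N normalization, Eq. (3)."""
    mu = fmean(w)
    return fmean((v - mu) ** 2 for v in w)


def zc(w: Sequence[float], eps: float = 0.0) -> int:
    """Zero crossings; `eps` is a hypothetical amplitude threshold."""
    return sum(1 for a, b in zip(w, w[1:]) if a * b < 0 and abs(a - b) >= eps)


def ssc(w: Sequence[float], eps: float = 0.0) -> int:
    """Slope sign changes (local maxima/minima); `eps` is a hypothetical threshold."""
    return sum(1 for a, b, c in zip(w, w[1:], w[2:]) if (b - a) * (b - c) > eps)


def combined_features(emg_wins: List[Sequence[float]], nirs_wins: List[Sequence[float]]) -> List[float]:
    """EMGTD of every EMG channel followed by NIRTD of every NIRS channel."""
    feats: List[float] = []
    for w in emg_wins:  # EMGTD: MAV, ZC, SSC, WL
        feats += [mav(w), float(zc(w)), float(ssc(w)), wl(w)]
    for w in nirs_wins:  # NIRTD: MAV, WL, NIRV
        feats += [mav(w), wl(w), nirv(w)]
    return feats
```

For a 1-s recording (1000 samples), `sliding_windows` yields (1000 − 300)/100 + 1 = 8 windows, i.e., one new feature vector per 100 ms once the first window is full.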

4) Pattern Recognition: After feature extraction, the three feature sets (EMGTD, NIRTD, and EMGTD-NIRTD) were fed to the classifiers (see Fig. 3). To evaluate the performance of the different feature sets comprehensively, two classifiers widely used in EMG pattern recognition were employed and compared: the linear discriminant analysis (LDA) classifier [7], [44] and the support vector machine (SVM) [13], [45].

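LDA is grounded on the Gaussian assumption and the Bayesian decision rule; the discriminant function of LDA for a feature vector $\mathbf{x}$ is formulated as

$$g_c(\mathbf{x}) = \boldsymbol{\mu}_c^{T}\boldsymbol{\Sigma}^{-1}\mathbf{x} - \frac{1}{2}\boldsymbol{\mu}_c^{T}\boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_c \qquad (4)$$

where $\boldsymbol{\mu}_c$ is the mean vector of the training samples for class $c$, and $\boldsymbol{\Sigma}$ is the pooled covariance matrix, expressed by

$$\boldsymbol{\Sigma} = \sum_{c=1}^{C}\frac{n_c - 1}{N - C}\,\boldsymbol{\Sigma}_c \qquad (5)$$

where $C$ is the number of motion classes, $N$ denotes the total number of training samples, $n_c$ is the number of training samples for class $c$, and $\boldsymbol{\Sigma}_c$ denotes the covariance matrix of class $c$.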
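A dependency-free Python sketch of (4) and (5) follows, to make the computation concrete. It is an illustration under stated assumptions, not the classifier used in the study: equal class priors are assumed (so no log-prior term appears, matching (4)), the class `LDA` and helper `solve` are hypothetical names, and no regularization is applied, so a singular pooled covariance raises an error.

```python
from typing import Dict, Hashable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("pooled covariance matrix is singular")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for k in range(col, n + 1):
                    m[r][k] -= f * m[col][k]
    return [m[i][n] / m[i][i] for i in range(n)]


class LDA:
    """Equal-prior LDA following Eqs. (4) and (5)."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[Hashable]) -> "LDA":
        classes = list(dict.fromkeys(y))  # unique labels in first-seen order
        n_total, n_classes, d = len(X), len(classes), len(X[0])
        scatter = [[0.0] * d for _ in range(d)]  # accumulates (n_c - 1) * Sigma_c
        means: Dict[Hashable, List[float]] = {}
        for c in classes:
            rows = [x for x, label in zip(X, y) if label == c]
            mu = [sum(col) / len(rows) for col in zip(*rows)]
            means[c] = mu
            for x in rows:
                diff = [xi - mi for xi, mi in zip(x, mu)]
                for i in range(d):
                    for j in range(d):
                        scatter[i][j] += diff[i] * diff[j]
        # Eq. (5): sum_c (n_c - 1)/(N - C) * Sigma_c == within-class scatter / (N - C)
        sigma = [[s / (n_total - n_classes) for s in row] for row in scatter]
        self.weights: Dict[Hashable, List[float]] = {}
        self.bias: Dict[Hashable, float] = {}
        for c, mu in means.items():
            w = solve(sigma, mu)  # Sigma^{-1} mu_c
            self.weights[c] = w
            self.bias[c] = -0.5 * sum(m * wi for m, wi in zip(mu, w))
        return self

    def decision(self, x: Sequence[float]) -> Dict[Hashable, float]:
        # Eq. (4): g_c(x) = mu_c^T Sigma^{-1} x - 0.5 mu_c^T Sigma^{-1} mu_c (Sigma is symmetric)
        return {c: sum(wi * xi for wi, xi in zip(w, x)) + self.bias[c]
                for c, w in self.weights.items()}

    def predict(self, x: Sequence[float]) -> Hashable:
        g = self.decision(x)
        return max(g, key=g.get)
```

Because $\boldsymbol{\Sigma}$ is shared by all classes, each $g_c$ is linear in $\mathbf{x}$, which is what keeps LDA cheap enough for per-window real-time use.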

The basic framework of the SVM is the binary linear classification model

$$g(\mathbf{x}) = \mathbf{w}^{T}\phi(\mathbf{x}) + b \qquad (6)$$

where $\mathbf{w}$ is a weight vector, $\phi(\mathbf{x})$ denotes the feature map induced by the kernel, and $b$ is an offset. Given training vectors $\mathbf{x}_i$, $i = 1, \ldots, N$, the goal is to solve the following optimization problem:

$$\begin{aligned}\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\quad & \frac{1}{2}\mathbf{w}^{T}\mathbf{w} + R\sum_{i=1}^{N}\xi_i\\ \text{s.t.}\quad & y_i\left(\mathbf{w}^{T}\phi(\mathbf{x}_i) + b\right) \ge 1 - \xi_i,\\ & \xi_i \ge 0,\quad i = 1, \ldots, N\end{aligned} \qquad (7)$$

where the slack variables $\xi_i$ ensure that the problem has a solution when the class distributions overlap, $R$ is the regularization parameter that trades off minimum classification error against maximum margin, and $\mathbf{y} \in \{+1, -1\}^{N}$ is the label (indicator) vector. The Gaussian radial basis function is selected as the kernel function:

$$K(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma\,\|\mathbf{x}_i - \mathbf{x}_j\|^{2}\right), \quad \gamma > 0. \qquad (8)$$

In this paper, we employed the LIBSVM [45] package for multiclass classification via the one-against-one approach; the regularization parameter $R$ and the kernel parameter $\gamma$ were set to the empirical values 10 and $1/d$, respectively, where $d$ is the dimension of the feature vectors.
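The sketch below makes the kernel of (8), the choice $\gamma = 1/d$, and the one-against-one scheme concrete. It is illustrative only: solving (7) for each binary SVM is left to a library such as LIBSVM, and the pairwise decision is passed in as a hypothetical callable `binary(a, b, x)`. The helper names are hypothetical, and ties in the vote are broken here in favor of the class listed first, which is a choice of this sketch. If scikit-learn is available, `sklearn.svm.SVC(C=10, kernel="rbf", gamma="auto")` is a LIBSVM-backed configuration with one-vs-one multiclass handling where `gamma="auto"` means 1/n_features, so it should correspond to $R = 10$ and $\gamma = 1/d$.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Callable, Hashable, Sequence, TypeVar

T = TypeVar("T")


def rbf_kernel(xi: Sequence[float], xj: Sequence[float], gamma: float) -> float:
    """Gaussian RBF kernel of Eq. (8): exp(-gamma * ||xi - xj||^2)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    sq_dist = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return math.exp(-gamma * sq_dist)


def default_gamma(d: int) -> float:
    """gamma = 1/d, where d is the feature-vector dimension."""
    return 1.0 / d


def n_binary_svms(n_classes: int) -> int:
    """One-against-one trains one binary SVM per unordered pair of classes."""
    return n_classes * (n_classes - 1) // 2


def one_vs_one_predict(
    x: T,
    classes: Sequence[Hashable],
    binary: Callable[[Hashable, Hashable, T], Hashable],
) -> Hashable:
    """Majority vote over all pairwise decisions; ties go to the class listed first."""
    votes = Counter(binary(a, b, x) for a, b in combinations(classes, 2))
    return max(classes, key=lambda c: votes[c])
```

With the 13 offline motions, one-against-one trains 13 × 12 / 2 = 78 binary SVMs; with the 11 real-time motions it trains 55.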

All training and testing were performed within subjects. To assess the performance of the three feature sets, 10 × 10-fold cross-validation [17], [46] was adopted. It worked as follows: the dataset was randomly divided into ten equal parts; nine parts were used to train the classifier, and the remaining part was used for testing, giving one CA per part. This tenfold cross-validation was repeated ten times, yielding 100 classification accuracies. The CA was defined as

$$\mathrm{CA} = \frac{\text{Number of correct testing samples}}{\text{Total number of testing samples}} \times 100\%. \qquad (9)$$
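The 10 × 10-fold procedure and (9) can be sketched as follows. This is an illustration under assumptions, not the study's code: samples are referred to by index, the split is random at the level of individual samples as described above, and `evaluate(train_idx, test_idx)` is a hypothetical caller-supplied function that trains a classifier on the first index list and returns `(n_correct, n_test)` on the second.

```python
import random
from typing import Callable, List, Tuple


def ten_by_ten_cv(
    n_samples: int,
    evaluate: Callable[[List[int], List[int]], Tuple[int, int]],
    repeats: int = 10,
    folds: int = 10,
    seed: int = 0,
) -> List[float]:
    """Return repeats * folds classification accuracies in percent, Eq. (9)."""
    rng = random.Random(seed)
    idx = list(range(n_samples))
    accs: List[float] = []
    for _ in range(repeats):
        rng.shuffle(idx)  # new random partition for every repetition
        parts = [idx[k::folds] for k in range(folds)]  # ten near-equal parts
        for k in range(folds):
            test = parts[k]
            train = [i for j, part in enumerate(parts) if j != k for i in part]
            correct, total = evaluate(train, test)
            accs.append(100.0 * correct / total)
    return accs
```

Averaging the returned list gives the per-subject CA; with the defaults it always contains 10 × 10 = 100 values.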

C. Real-Time Test and Performance Metrics

To evaluate the real-time performance of the different feature sets, the SVM was used to classify the features extracted from each type of signal, because the SVM gave results similar to those of LDA in the offline comparison. Again, the 300-ms analysis window was employed with a 100-ms increment, generating a new motion prediction every 100 ms. The computation time for each prediction was less than 6 ms (3.2-GHz Intel Core i5-3470 computer).

1) Real-Time Test Paradigm: The real-time experiment had

two stages: training stage and testing stage. The RD and BG

motions were not performed for the concision of real-time ex-

periment; therefore, 11 motions were carried out in the real-time

test. The subjects first trained the SVM classifier with two trials’

data. In each trial, subjects performed 11 motions corresponding

to the picture prompts appeared on the PC screen. Each prompt

was displayed for 5 s, and there was a 5-s rest between consecu-

tive motions. It was reported that the transient training data were

beneficial for real-time control [17], [47]. Empirically, to make

�

568

IEEE TRANSACTIONS ON HUMAN-MACHINE SYSTEMS, VOL. 47, NO. 4, AUGUST 2017

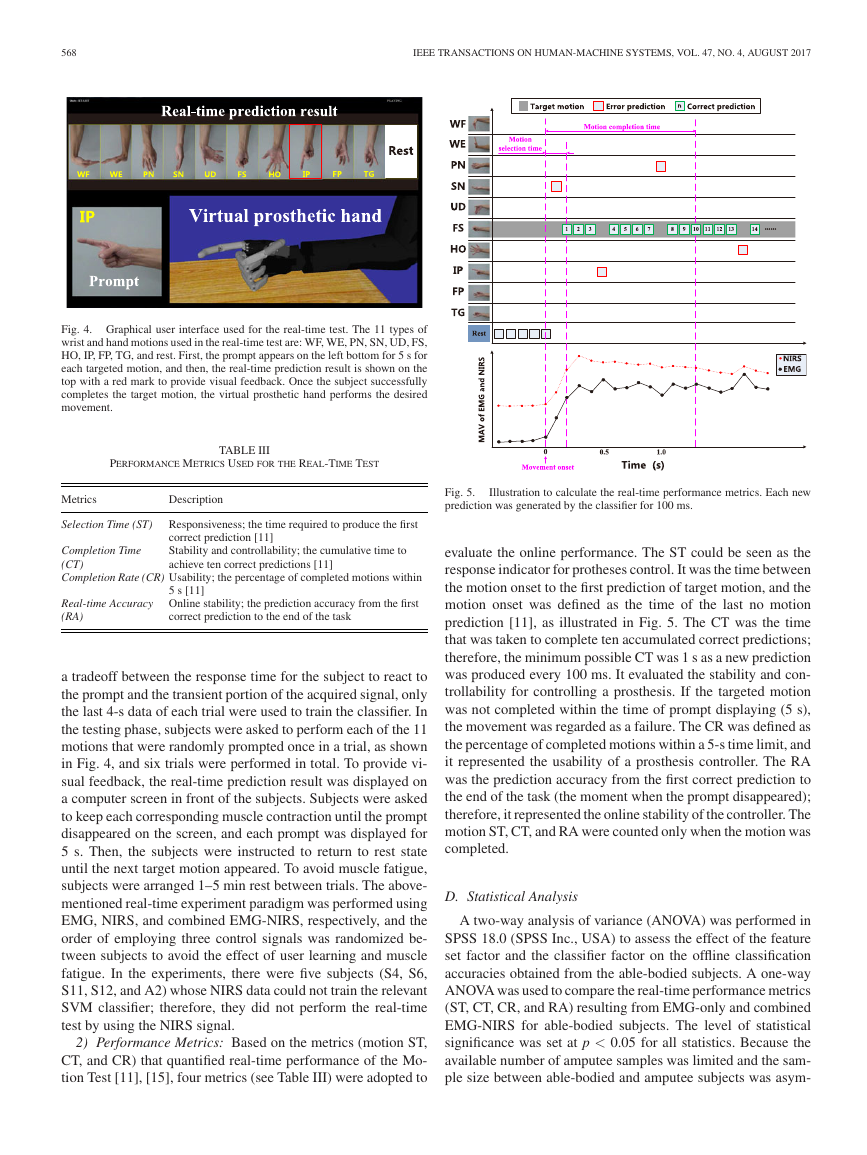

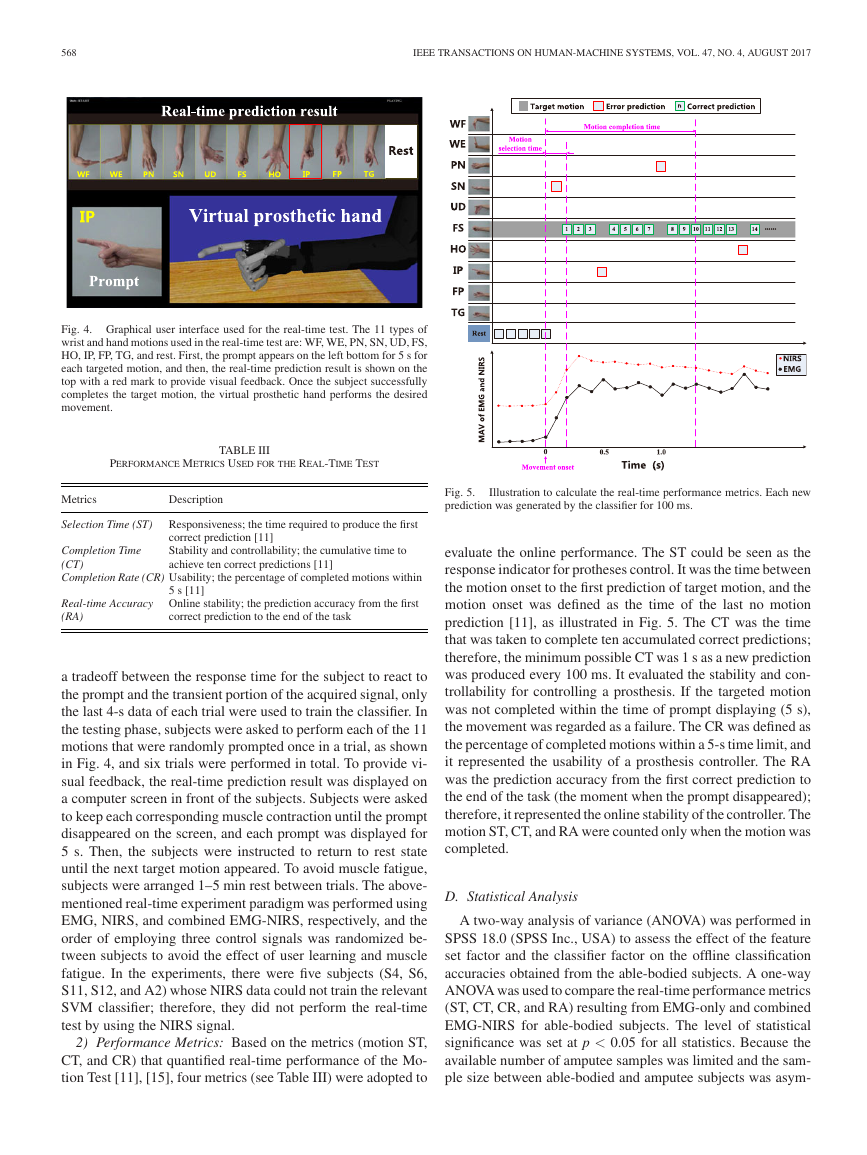

Fig. 4. Graphical user interface used for the real-time test. The 11 types of

wrist and hand motions used in the real-time test are: WF, WE, PN, SN, UD, FS,

HO, IP, FP, TG, and rest. First, the prompt appears on the left bottom for 5 s for

each targeted motion, and then, the real-time prediction result is shown on the

top with a red mark to provide visual feedback. Once the subject successfully

completes the target motion, the virtual prosthetic hand performs the desired

movement.

PERFORMANCE METRICS USED FOR THE REAL-TIME TEST

TABLE III

Metrics

Description

Fig. 5.

prediction was generated by the classifier for 100 ms.

Illustration to calculate the real-time performance metrics. Each new

Selection Time (ST)

Responsiveness; the time required to produce the first

correct prediction [11]

Stability and controllability; the cumulative time to

achieve ten correct predictions [11]

Completion Time

(CT)

Completion Rate (CR) Usability; the percentage of completed motions within

Real-time Accuracy

(RA)

5 s [11]

Online stability; the prediction accuracy from the first

correct prediction to the end of the task

a tradeoff between the response time for the subject to react to

the prompt and the transient portion of the acquired signal, only

the last 4-s data of each trial were used to train the classifier. In

the testing phase, subjects were asked to perform each of the 11

motions that were randomly prompted once in a trial, as shown

in Fig. 4, and six trials were performed in total. To provide vi-

sual feedback, the real-time prediction result was displayed on

a computer screen in front of the subjects. Subjects were asked

to keep each corresponding muscle contraction until the prompt

disappeared on the screen, and each prompt was displayed for

5 s. Then, the subjects were instructed to return to rest state

until the next target motion appeared. To avoid muscle fatigue,

subjects were arranged 1–5 min rest between trials. The above-

mentioned real-time experiment paradigm was performed using

EMG, NIRS, and combined EMG-NIRS, respectively, and the

order of employing three control signals was randomized be-

tween subjects to avoid the effect of user learning and muscle

fatigue. In the experiments, there were five subjects (S4, S6,

S11, S12, and A2) whose NIRS data could not train the relevant

SVM classifier; therefore, they did not perform the real-time

test by using the NIRS signal.

2) Performance Metrics: Building on the metrics that quantify real-time performance in the Motion Test [11], [15] (motion ST, CT, and CR), four metrics (see Table III) were adopted to evaluate online performance. The ST can be seen as the response indicator for prosthesis control. It is the time from the motion onset to the first prediction of the target motion, where the motion onset is defined as the time of the last no-motion prediction [11], as illustrated in Fig. 5. The CT is the time taken to accumulate ten correct predictions; since a new prediction is produced every 100 ms, the minimum possible CT is 1 s. It evaluates the stability and controllability of prosthesis control. If the target motion was not completed while the prompt was displayed (5 s), the movement was regarded as a failure. The CR is defined as the percentage of motions completed within the 5-s time limit, and it represents the usability of a prosthesis controller. The RA is the prediction accuracy from the first correct prediction to the end of the task (the moment the prompt disappears); it therefore represents the online stability of the controller. Motion ST, CT, and RA were computed only for completed motions.
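The metric definitions above can be computed from a stream of 100-ms predictions as sketched below. This is a hedged illustration, not the Motion Test software: it assumes `preds` holds every prediction made while one 5-s prompt was shown (50 labels), that the no-motion class is labeled `"rest"`, and that the onset is the last rest prediction before the first non-rest prediction (index −1 if the stream starts with movement). The names `MotionScore`, `score_motion`, and `completion_rate` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

STEP_S = 0.1    # a new prediction every 100 ms
N_CORRECT = 10  # a motion is completed after ten correct predictions
REST = "rest"   # assumed label of the no-motion class


@dataclass
class MotionScore:
    completed: bool
    st: Optional[float] = None  # selection time (s)
    ct: Optional[float] = None  # completion time (s)
    ra: Optional[float] = None  # real-time accuracy (fraction)


def score_motion(preds: List[str], target: str) -> MotionScore:
    """Score one prompted motion from its 100-ms prediction stream."""
    active = [k for k, p in enumerate(preds) if p != REST]
    correct = [k for k, p in enumerate(preds) if p == target]
    if not active or len(correct) < N_CORRECT:
        return MotionScore(completed=False)  # failure: not completed within the prompt
    onset = active[0] - 1  # index of the last rest prediction before movement
    first = correct[0]
    st = round((first - onset) * STEP_S, 3)
    ct = round((correct[N_CORRECT - 1] - onset) * STEP_S, 3)
    tail = preds[first:]  # from the first correct prediction to the end of the prompt
    ra = sum(p == target for p in tail) / len(tail)
    return MotionScore(True, st, ct, ra)


def completion_rate(scores: List[MotionScore]) -> float:
    """CR: fraction of prompted motions that were completed."""
    return sum(s.completed for s in scores) / len(scores)
```

A stream of five rest predictions followed by 45 correct ones gives ST = 0.1 s and CT = 1.0 s, the minimum CT stated above.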

D. Statistical Analysis

A two-way analysis of variance (ANOVA) was performed in SPSS 18.0 (SPSS Inc., USA) to assess the effects of the feature set and classifier factors on the offline classification accuracies of the able-bodied subjects. A one-way ANOVA was used to compare the real-time performance metrics (ST, CT, CR, and RA) obtained with EMG-only and combined EMG-NIRS for the able-bodied subjects. The level of statistical significance was set at p < 0.05 for all tests. Because the number of amputee subjects was limited and the sample sizes of the able-bodied and amputee groups were unbalanced, quantitative comparisons between the two populations were not performed.
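For readers reproducing the comparison, the one-way ANOVA F statistic can be sketched as below. This is a generic textbook computation, not the SPSS procedure used in the study, and `one_way_anova_f` is a hypothetical helper. The p-value would then be the upper tail of the F distribution with the returned degrees of freedom (for example, `scipy.stats.f.sf(F, df_between, df_within)` if SciPy is available). With exactly two groups, F equals the square of the pooled-variance two-sample t statistic.

```python
from statistics import fmean
from typing import Sequence, Tuple


def one_way_anova_f(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = fmean(v for g in groups for v in g)
    means = [fmean(g) for g in groups]
    # Between-group and within-group sums of squares
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```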

III. RESULTS

A. Offline CA

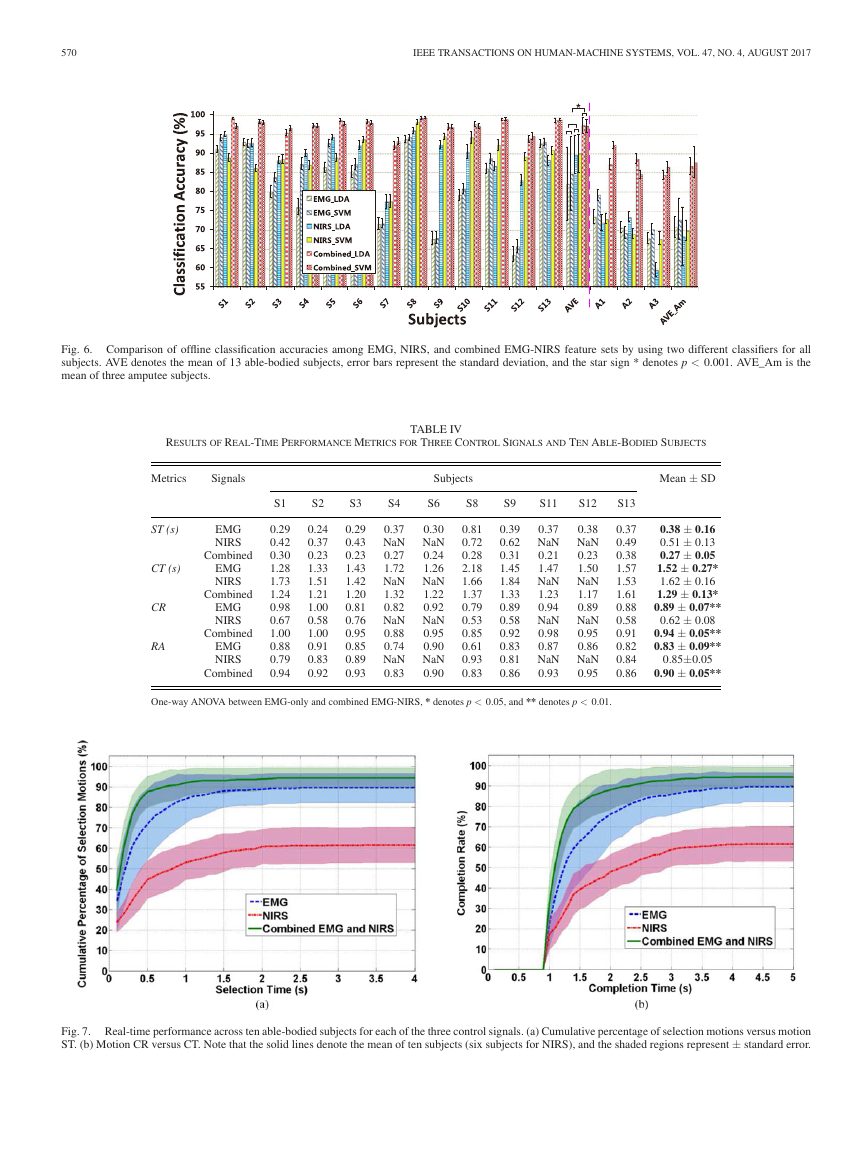

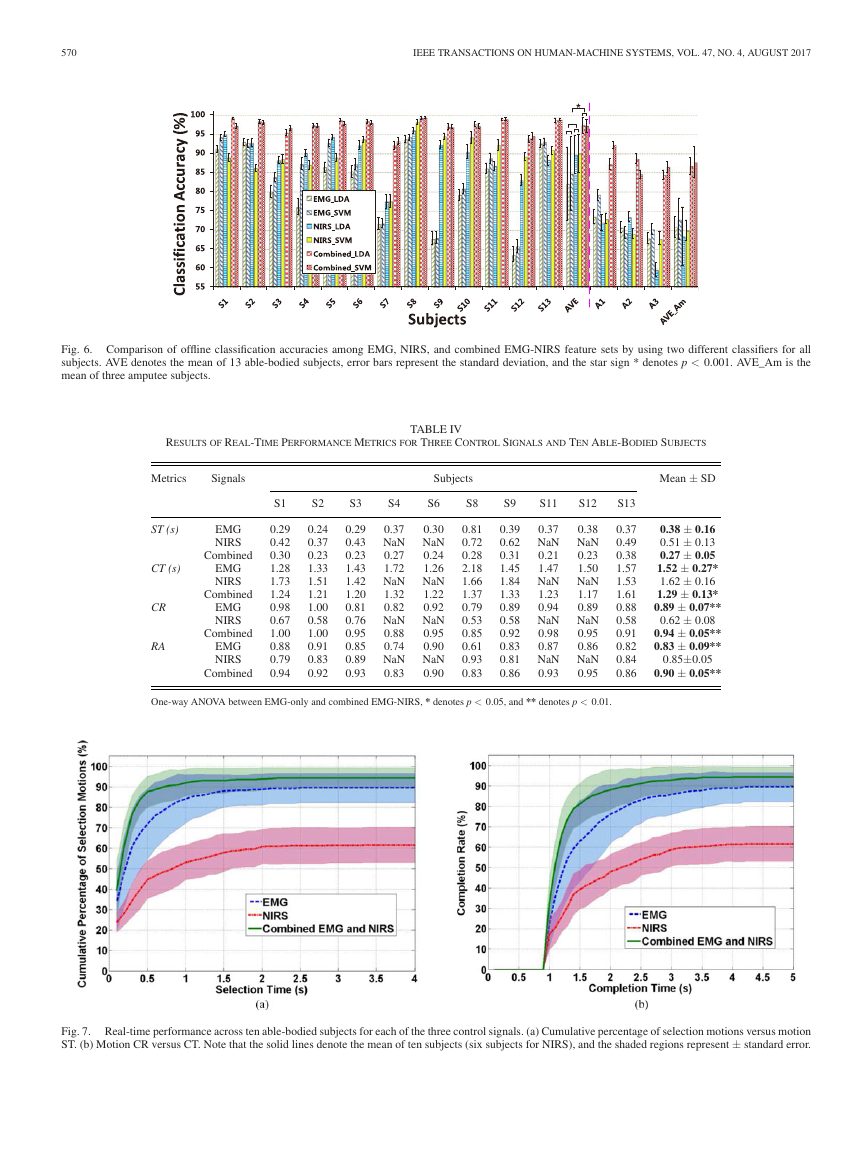

The offline classification results are shown in Fig. 6. For able-bodied subjects, the CA was markedly improved with the combined EMG-NIRS feature set (>97%) compared with the EMG or NIRS feature set alone (<90%). The two-way ANOVA showed that accuracy was significantly improved (p < 0.001) when NIRS information (NIRTD) was combined with the EMG features (EMGTD), whereas the classifier factor (LDA or SVM) had no significant effect on the CA (p > 0.7). Thus, the choice of classifier did not significantly affect the classification results, while combining EMG and NIRS features significantly improved performance. For the three amputee subjects, combining EMG and NIRS improved the average accuracy from 70.7% (EMG-only) to 86.7% (an increase of 16.0 percentage points) with LDA, and from 72.8% (EMG-only) to 87.7% (an increase of 14.9 percentage points) with SVM. Although no statistical tests were carried out for the amputee subjects because of the limited sample size, their classification results showed the same trend as those of the able-bodied subjects, suggesting some consistency in performance between the two populations. Specifically, it was the combined EMG-NIRS feature set, rather than the classifier, that affected classification performance.

B. Real-Time Performance

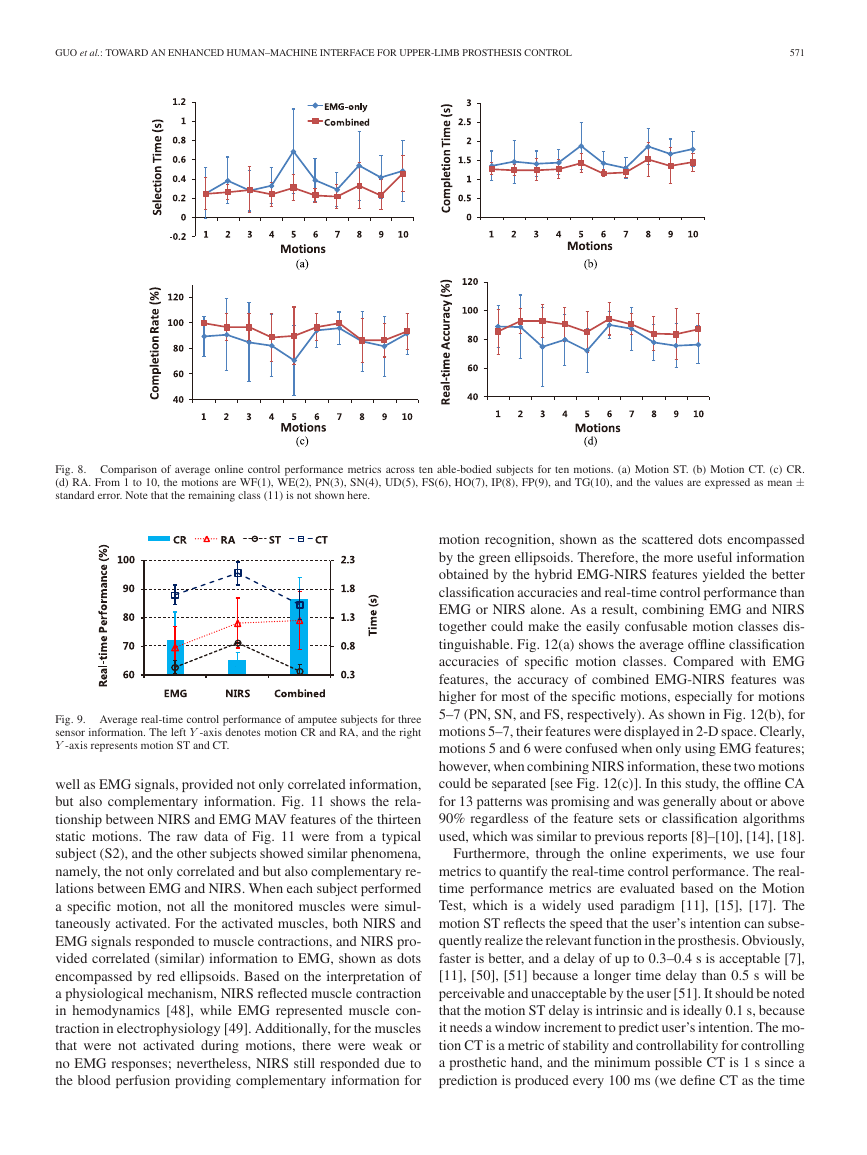

For able-bodied subjects, Table IV lists the four real-time performance metrics for EMG-only, NIRS-only, and combined EMG-NIRS. Fig. 7 displays the real-time performance in terms of the speed and robustness of virtual prosthetic hand control.

1) Motion ST: The motion ST of combined EMG-NIRS was lower than that of EMG-only (0.27 ± 0.05 s versus 0.38 ± 0.16 s, p = 0.0503). Although this difference did not reach statistical significance (p > 0.05), the p-value close to 0.05 suggests a trend toward faster selection with EMG-NIRS. The ST with NIRS alone (0.51 ± 0.13 s) was longer than with the other two control signals. Moreover, the cumulative percentage of selected motions versus motion ST also showed that motions were selected more quickly with the combined control signals than with EMG-only or NIRS-only [see Fig. 7(a)].

2) Motion CT: The average time to complete a wrist or hand motion was 1.52 ± 0.27 s for EMG-only and 1.29 ± 0.13 s for combined EMG-NIRS signals; the motion CT decreased significantly (p = 0.0164) when NIRS information was combined with EMG. On average, NIRS alone took the longest to complete a motion (1.62 ± 0.16 s). Furthermore, the curve of motion CR versus CT indicated that motions were completed faster with the combined control signals than with EMG alone [see Fig. 7(b)].

3) Motion CR: The motion CR with combined EMG-NIRS signals was significantly higher than with EMG-only (0.94 ± 0.05 versus 0.89 ± 0.07, p = 0.004). Moreover, as shown in Fig. 7(b), more motions could be completed within the same time limit when NIRS was combined with EMG, while the fewest motions were completed with NIRS alone.

4) Real-Time Accuracy: The RA was only counted if the motion was successfully completed within 5 s. As presented in Table IV, the RA was significantly improved with combined EMG-NIRS compared with EMG-only (0.90 ± 0.05 versus 0.83 ± 0.09, p = 0.007). The average RA of NIRS-only (0.85 ± 0.05) was higher than that of EMG-only.

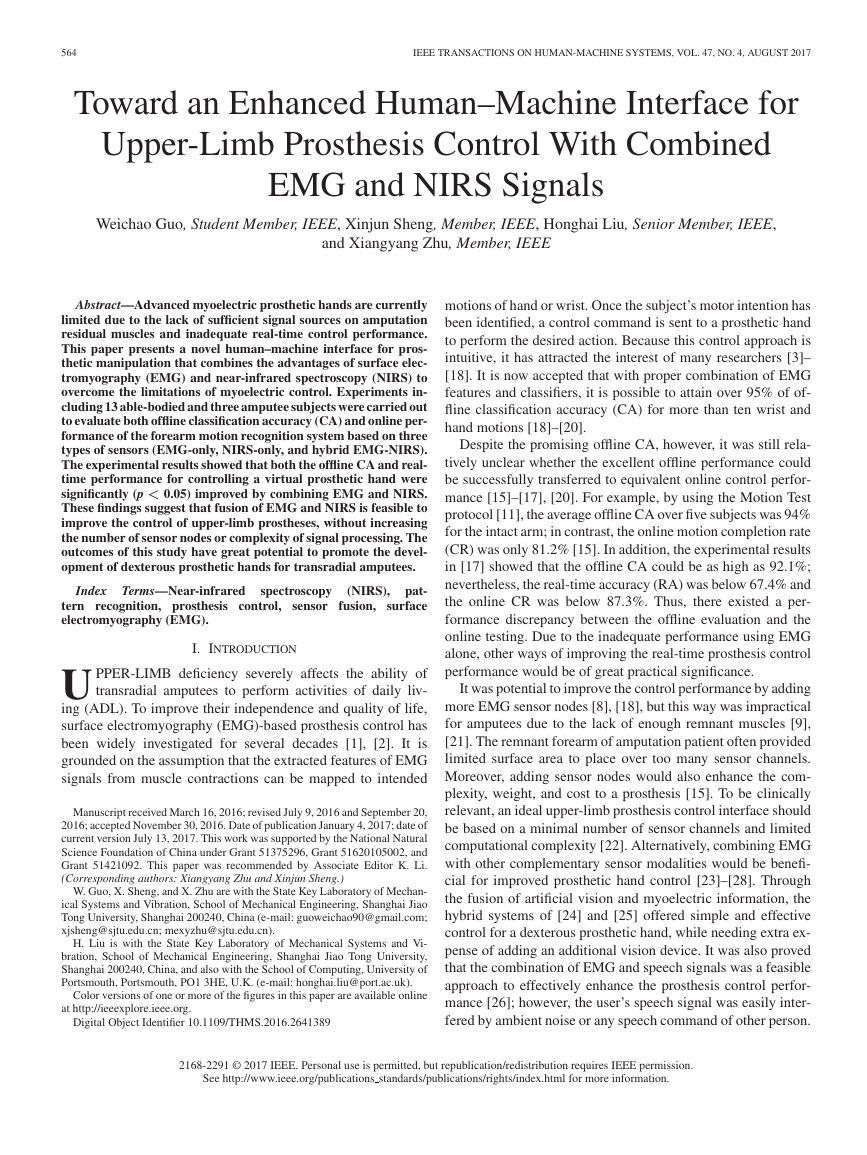

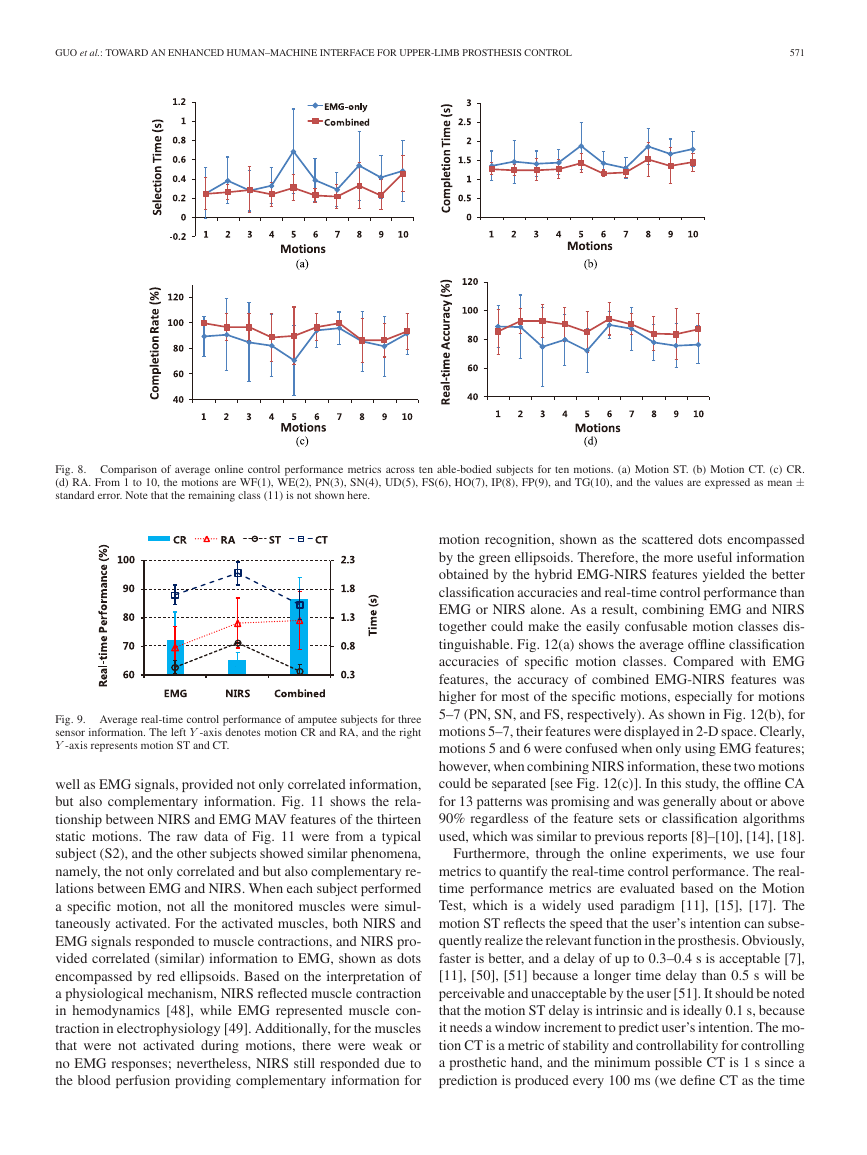

The average real-time performance parameters with EMG-only and combined EMG-NIRS for each of the ten motions (excluding the rest class) are illustrated in Fig. 8. The combined signals outperformed EMG for almost all motions with respect to motion ST, CT, CR, and RA. Taking UD (motion 5) as an example, the online performance was poor with EMG-only, and the improvement was remarkable when NIRS information was fused.

Fig. 9 reports the four real-time performance metrics for the three amputee subjects. Because of the limited sample size, no statistical test was performed to confirm the significance of the differences between control signals. Nevertheless, the results appeared consistent with those of the able-bodied subjects: real-time control performance improved with the fusion of EMG and NIRS compared with EMG or NIRS alone. Specifically, with the combination of EMG and NIRS, the average CR of the three amputee subjects increased from 72.2% (EMG-only) or 65.3% (NIRS-only) to 86.4%, and the CT decreased from 1.7 s (EMG-only) or 2.1 s (NIRS-only) to 1.53 s. The ST and RA of the combined signals were 0.37 s and 79%, respectively, outperforming those of EMG (0.43 s, 69.5%) and NIRS (0.86 s, 78%). Fig. 10 presents the real-time control speed and robustness with the different sensor information for the three amputee subjects. Combined EMG and NIRS showed the best behavior in terms of response speed and control efficiency.

We further examined whether offline CA was highly correlated with real-time control performance. Consistent with the literature [15]–[17], no high correlation was found between offline accuracy and the real-time performance metrics. However, both offline accuracy and real-time performance were significantly improved by combining EMG and NIRS.

IV. DISCUSSION

This study presented a hybrid EMG-NIRS control interface for improving the control of upper-limb prostheses and overcoming the drawbacks of myoelectric approaches. Combining EMG and NIRS significantly enhanced offline CA and substantially improved real-time performance.

Offline CA, a commonly reported indicator for evaluating pattern recognition approaches, showed that combining EMG and NIRS was superior to EMG alone, as shown in Fig. 6. As mentioned in our previous work [39], NIRS, as


Fig. 6. Comparison of offline classification accuracies among EMG, NIRS, and combined EMG-NIRS feature sets using two different classifiers for all subjects. AVE denotes the mean of the 13 able-bodied subjects, AVE_Am the mean of the three amputee subjects, error bars represent the standard deviation, and * denotes p < 0.001.

TABLE IV
RESULTS OF REAL-TIME PERFORMANCE METRICS FOR THREE CONTROL SIGNALS AND TEN ABLE-BODIED SUBJECTS

Metric   Signal     S1    S2    S3    S4    S6    S8    S9    S11   S12   S13   Mean ± SD
ST (s)   EMG        0.29  0.24  0.29  0.37  0.30  0.81  0.39  0.37  0.38  0.37  0.38 ± 0.16
         NIRS       0.42  0.37  0.43  NaN   NaN   0.72  0.62  NaN   NaN   0.49  0.51 ± 0.13
         Combined   0.30  0.23  0.23  0.27  0.24  0.28  0.31  0.21  0.23  0.38  0.27 ± 0.05
CT (s)   EMG        1.28  1.33  1.43  1.72  1.26  2.18  1.45  1.47  1.50  1.57  1.52 ± 0.27*
         NIRS       1.73  1.51  1.42  NaN   NaN   1.66  1.84  NaN   NaN   1.53  1.62 ± 0.16
         Combined   1.24  1.21  1.20  1.32  1.22  1.37  1.33  1.23  1.17  1.61  1.29 ± 0.13*
CR       EMG        0.98  1.00  0.81  0.82  0.92  0.79  0.89  0.94  0.89  0.88  0.89 ± 0.07**
         NIRS       0.67  0.58  0.76  NaN   NaN   0.53  0.58  NaN   NaN   0.58  0.62 ± 0.08
         Combined   1.00  1.00  0.95  0.88  0.95  0.85  0.92  0.98  0.95  0.91  0.94 ± 0.05**
RA       EMG        0.88  0.91  0.85  0.74  0.90  0.61  0.83  0.87  0.86  0.82  0.83 ± 0.09**
         NIRS       0.79  0.83  0.89  NaN   NaN   0.93  0.81  NaN   NaN   0.84  0.85 ± 0.05
         Combined   0.94  0.92  0.93  0.83  0.90  0.83  0.86  0.93  0.95  0.86  0.90 ± 0.05**

One-way ANOVA between EMG-only and combined EMG-NIRS: * denotes p < 0.05, and ** denotes p < 0.01. NaN: not available (NIRS-only results were obtained for six subjects).

Fig. 7. Real-time performance across ten able-bodied subjects for each of the three control signals. (a) Cumulative percentage of selected motions versus motion ST. (b) Motion CR versus CT. Solid lines denote the mean of ten subjects (six subjects for NIRS), and shaded regions represent ± standard error.


Fig. 8. Comparison of average online control performance metrics across ten able-bodied subjects for ten motions. (a) Motion ST. (b) Motion CT. (c) CR. (d) RA. From 1 to 10, the motions are WF(1), WE(2), PN(3), SN(4), UD(5), FS(6), HO(7), IP(8), FP(9), and TG(10), and the values are expressed as mean ± standard error. Note that the remaining class (11) is not shown here.

Fig. 9. Average real-time control performance of the amputee subjects for the three types of sensor information. The left Y-axis denotes motion CR and RA, and the right Y-axis represents motion ST and CT.

well as EMG signals, provided not only correlated but also complementary information. Fig. 11 shows the relationship between the NIRS and EMG MAV features of the 13 static motions. The data in Fig. 11 are from a representative subject (S2); the other subjects showed similar behavior, namely, both correlated and complementary relations between EMG and NIRS. When a subject performed a specific motion, not all monitored muscles were activated simultaneously. For the activated muscles, both NIRS and EMG signals responded to muscle contraction, and NIRS provided information correlated with (similar to) that of EMG, shown as the dots enclosed by the red ellipsoids. Physiologically, NIRS reflects muscle contraction through hemodynamics [48], whereas EMG reflects it through electrophysiology [49]. In addition, muscles that were not activated during a motion showed weak or no EMG responses, yet NIRS still responded because of blood perfusion, providing complementary information for motion recognition, shown as the scattered dots enclosed by the green ellipsoids. Therefore, the additional information captured by the hybrid EMG-NIRS features yielded higher classification accuracies and better real-time control performance than EMG or NIRS alone. As a result, combining EMG and NIRS could make easily confused motion classes distinguishable. Fig. 12(a) shows the average offline classification accuracies of specific motion classes. Compared with EMG features, the combined EMG-NIRS features achieved higher accuracy for most motions, especially motions 5–7 (PN, SN, and FS, respectively). Fig. 12(b) displays the features of motions 5–7 in 2-D space. Clearly, motions 5 and 6 were confused when only EMG features were used; however, when NIRS information was added, these two motions could be separated [see Fig. 12(c)]. In this study, the offline CA for 13 patterns was generally around or above 90% regardless of the feature set or classification algorithm used, similar to previous reports [8]–[10], [14], [18].

Furthermore, four metrics were used in the online experiments to quantify real-time control performance. These metrics were evaluated with the Motion Test, a widely used paradigm [11], [15], [17]. The motion ST reflects how quickly the user's intention is translated into the corresponding prosthesis function. Shorter is better: a delay of up to 0.3–0.4 s is considered acceptable [7], [11], [50], [51], whereas a delay longer than 0.5 s is perceptible and unacceptable to the user [51]. Note that the motion ST includes an intrinsic delay, ideally 0.1 s, because one window increment is required before the user's intention can be predicted. The motion CT measures the stability and controllability of prosthetic hand control; its minimum possible value is 1 s because a prediction is produced every 100 ms (we define CT as the time
