Wuhan University of Science and Technology Undergraduate Thesis: Foreign Literature Translation

Scale-up x Scale-out: A Case Study using Nutch/Lucene

Maged Michael, José E. Moreira, Doron Shiloach, Robert W. Wisniewski

IBM Thomas J. Watson Research Center

Yorktown Heights, NY 10598-0218

Scale-up x Scale-out: A Case Study using Nutch/Lucene (Translation)

Abstract

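For the past several years, scale-up solutions in the form of large SMPs have represented the mainstream of commercial computing. The major server vendors continue to offer ever larger and more powerful machines. More recently, scale-out solutions, in the form of clusters of smaller machines, have gained wider acceptance in commercial computing. Scale-out solutions are particularly effective for high-throughput, web-centric applications. In this paper, we investigate how two competing approaches to parallelism, scale-up and scale-out, behave in an emerging search application. Our conclusions show that a scale-out strategy can be the key to good performance even on a scale-up machine. Furthermore, scale-out solutions offer better price/performance, although at the cost of increased management complexity.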

1 Introduction

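During the last 10 years of commercial computing, we have witnessed the complete replacement of uniprocessor computing systems by multiprocessor ones. The revolution that began in the early to mid-eighties in scientific and technical computing finally caught up with the bulk of the marketplace in the mid-nineties.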

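We can classify the different approaches to employing multiprocessor systems for computing (both commercial and technical/scientific) into two large groups: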

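·Scale-up: The deployment of applications on large shared-memory servers (large SMPs).

·Scale-out: The deployment of applications on multiple small interconnected servers (clusters).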

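During the first phase of the multiprocessor revolution in commercial computing, the dominance of scale-up was clear. SMPs of increasing size, with processors of increasing clock rate, could offer ever more computing power to meet the needs of even the largest corporations. SMPs currently represent the mainstream of commercial computing. Companies like IBM, HP and Sun invest heavily in building bigger and better SMPs with each generation.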

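More recently, interest in scale-out for commercial computing has grown. For many of the new web-based enterprises (e.g., Google, Yahoo, eBay, Amazon), a scale-out approach is the only way to deliver the necessary computational power. In addition, computer manufacturers have made scale-out solutions easier to deploy with rack-optimized and blade servers. (Scale-out has been the only viable option for large-scale technical/scientific computing for several years, as the evolution of the TOP500 systems shows.)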

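In this paper, we study the behavior of an emerging commercial application, search of unstructured data, on two distinct systems: one is a modern scale-up system based on the POWER5 multi-core/multi-threaded processor; the other is a typical scale-out system based on IBM BladeCenter. The two systems were configured to have approximately the same list price (about $200,000), which allows a fair comparison of performance and price/performance.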

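One of the more important conclusions of our work is that a “pure” scale-up approach is not very effective at using all the processors in a large SMP. In pure scale-up, we run just one instance of our application in the SMP, and that instance uses all the resources (processors) available. We were more successful in exploiting the POWER5 SMP with a “scale-out-in-a-box” approach, in which multiple instances of the application run concurrently within a single operating system. This latter approach delivered significant performance gains while maintaining the single system image that is one of the great advantages of large SMPs.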

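Another conclusion of our work is that a scale-out system can achieve about four times the performance of a similarly priced scale-up system. For our application, performance is measured in queries per second. The scale-out system requires multiple system images, so the gain in performance comes at a cost in convenience and management. Depending on the situation, that cost may or may not be worth the improvement in performance.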
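As a rough worked check of the price/performance claim, assume (the notation here is ours, not the paper's) that Q denotes throughput in queries per second and C the list price. With both systems at roughly the same price and the scale-out system at about four times the throughput:

```latex
\frac{Q_{\text{out}} / C_{\text{out}}}{Q_{\text{up}} / C_{\text{up}}}
  \approx \frac{4\,Q_{\text{up}} / C}{Q_{\text{up}} / C} = 4,
\qquad C_{\text{out}} \approx C_{\text{up}} \approx C \approx \$200{,}000 .
```

Under these assumptions the throughput ratio and the price/performance ratio coincide at about 4, because the price term cancels.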

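The rest of this paper is organized as follows. Section 2 describes the configuration of the scale-up and scale-out systems used in our study. Section 3 presents the Nutch/Lucene workload that ran on our systems. Section 4 presents our conclusions.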

2 Scale-up and scale-out systems

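In the IBM product line, Systems z, p, and i are all based on SMPs of different sizes that span a wide spectrum of computational capabilities. As an example of a state-of-the-art scale-up system, we adopted the POWER5 p5 575 machine. This 8- or 16-way system has been very attractive to customers because of its low cost, high performance and small form factor (2U, or 3.5 inches high, in a 24-inch rack). A picture of a POWER5 p5 575 is shown in Figure 1.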

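The particular p5 575 that we used for our scale-up measurements has 16 POWER5 processors in 8 dual-core modules and 32 GiB (1 GiB = 1,073,741,824 bytes) of main memory. Each core is dual-threaded, so the machine appears to the operating system as a 32-way SMP. The processor speed is 1.5 GHz. The p5 575 connects to the outside world through two Gigabit/s Ethernet interfaces. It also has its own dedicated DS4100 storage controller. (See below for a description of the DS4100.)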

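Scale-out systems come in many different shapes and forms, but they generally consist of multiple interconnected nodes, each running its own self-contained operating system. We chose BladeCenter as our scale-out platform, a natural choice given the scale-out orientation of this platform.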

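The first form of scale-out system to become popular in commercial computing was the rack-mounted cluster. The IBM BladeCenter solution (and similar systems from companies such as HP and Dell) represents the next step after rack-mounted clusters in scale-out systems for commercial computing. The blade servers used in BladeCenter are similar in capability to the densest rack-mounted cluster servers: 4-processor configurations, 16-32 GiB of maximum memory, built-in Ethernet, and expansion cards for Fiber Channel, Infiniband, Myrinet, or 10 Gbit/s Ethernet. Double-wide blades with up to 8-processor configurations and additional memory are also offered.

The BladeCenter-H chassis is the newest BladeCenter chassis from IBM. Like the previous BladeCenter-1 chassis, it has 14 blade slots for blade servers. It also has space for up to two management modules, four switch modules, four bridge modules, and four high-speed switch modules. (Switch modules 3 and 4 and bridge modules 3 and 4 share the same slots in the chassis.) We populated each of our chassis with two 1-Gbit/s Ethernet switch modules and two Fiber Channel switch modules.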

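Three different kinds of blades were used in our cluster: JS21 (PowerPC processors), HS21 (Intel Woodcrest processors), and LS21 (AMD Opteron processors). Each blade (JS21, HS21, or LS21) has both a local disk drive (73 GB of capacity) and a dual Fiber Channel network adapter. The Fiber Channel adapter connects the blade to the two Fiber Channel switches plugged into each chassis. Approximately half of the cluster (4 chassis) is composed of JS21 blades. These are quad-processor (dual-socket, dual-core) PowerPC 970 blades running at 2.5 GHz. Each blade has 8 GiB of memory. For the experiments reported in this paper, we focus on these JS21 blades.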

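The DS4100 storage subsystem consists of dual storage controllers, each with a 2 Gb/s Fiber Channel interface, and space for 14 SATA drives in the main drawer. Although each DS4100 is paired with a specific BladeCenter-H chassis, any blade in the cluster can see any of the LUNs in the storage system, thanks to the Fiber Channel network we implemented.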

3 The Nutch/Lucene workload

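Nutch/Lucene is a framework for implementing search applications. It is representative of a growing class of applications based on search of unstructured data (web pages). We are all used to search engines like Google and Yahoo that operate on the open Internet. However, search is also an important operation within intranets, the internal networks of companies. Nutch/Lucene is implemented entirely in Java, and its code is open source. As a typical search framework, Nutch/Lucene has three major components: (1) crawling, (2) indexing, and (3) query. In this paper, we present our results for the query component. For completeness, we briefly describe the other components.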

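Crawling is the operation that navigates web pages and retrieves their information, populating the set of documents that will be searched. In search terminology, this set of documents is called the corpus. Crawling can be performed on internal networks (intranets) as well as external networks (the Internet). Crawling, particularly on the Internet, is a complex operation. Whether intentionally or unintentionally, many web sites are difficult to crawl. The performance of crawling is usually limited by the bandwidth of the network between the system doing the crawling and the system being crawled.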

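The Nutch/Lucene search framework includes a parallel indexing operation written using the MapReduce programming model. MapReduce provides a convenient way of addressing an important (though limited) class of real-life commercial applications by hiding parallelism and fault-tolerance issues from programmers, letting them focus on the problem domain. MapReduce was published by Google in 2004 and quickly became a de facto standard for this kind of workload. A parallel indexing operation in the MapReduce model works as follows. First, the data to be indexed is partitioned into segments of approximately equal size. Each segment is then processed by a mapper task that generates the (key, value) pairs for that segment, where key is an indexing term and value is the set of documents that contain that term (and the location of the term in each document). This corresponds to the map phase in MapReduce. In the next phase, the reduce phase, each reducer task collects all the pairs for a given key, producing a single index table for that key. Once all the keys have been processed, we have the complete index for the entire data set.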
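The partition, map and reduce steps described above can be illustrated with a small single-process sketch. This is not the Nutch/Lucene implementation: the function names (`partition`, `map_segment`, `reduce_pairs`, `build_index`), the whitespace tokenizer and the round-robin partitioning are all assumptions made for illustration, and a real MapReduce run would distribute the mapper and reducer tasks across nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Posting = Tuple[str, int]  # (document id, word position); a hypothetical layout


def partition(docs: Dict[str, str], n_segments: int) -> List[Dict[str, str]]:
    """Split the corpus into n_segments parts of roughly equal size (round-robin)."""
    segments: List[Dict[str, str]] = [{} for _ in range(n_segments)]
    for i, (doc_id, text) in enumerate(sorted(docs.items())):
        segments[i % n_segments][doc_id] = text
    return segments


def map_segment(segment: Dict[str, str]) -> List[Tuple[str, Posting]]:
    """Map phase: emit one (term, (doc_id, position)) pair per word in the segment."""
    pairs = []
    for doc_id, text in segment.items():
        for pos, term in enumerate(text.lower().split()):
            pairs.append((term, (doc_id, pos)))
    return pairs


def reduce_pairs(pairs: Iterable[Tuple[str, Posting]]) -> Dict[str, List[Posting]]:
    """Reduce phase: collect all postings for each term into one sorted list."""
    grouped = defaultdict(list)
    for term, posting in pairs:
        grouped[term].append(posting)
    return {term: sorted(postings) for term, postings in grouped.items()}


def build_index(docs: Dict[str, str], n_segments: int = 2) -> Dict[str, List[Posting]]:
    """Run the phases in sequence; each map_segment call could run on its own node."""
    mapped: List[Tuple[str, Posting]] = []
    for segment in partition(docs, n_segments):
        mapped.extend(map_segment(segment))
    return reduce_pairs(mapped)


# Toy corpus, invented for this example.
docs = {"d1": "scale out search", "d2": "scale up search engine"}
index = build_index(docs)
```

Here `index["scale"]` lists both documents with the position of the term in each, which mirrors the "set of documents that contain that term (and the location of the term)" described in the text.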

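In most search applications, query represents the vast majority of the computational effort. To perform a query, a set of index terms is presented to a query engine, which then retrieves the documents that best match that set of terms. The overall architecture of the Nutch/Lucene parallel query engine is shown in Figure 3. The query engine consists of one or more front-ends and one or more back-ends. Each back-end is associated with a segment of the complete data set. The driver represents external users and is also the point at which query performance is measured, in queries per second (qps). A query operation works as follows. The driver submits a particular query (a set of index terms) to one of the front-ends. The front-end then distributes the query to all the back-ends. Each back-end performs the query against its own data segment and returns a list of the top documents (typically 10) that best match the query. Each returned document is associated with a score that quantifies how good the match is. The front-end collects the responses from all the back-ends to produce a single list of the top documents (typically the 10 best matches overall). Once the front-end has that list, it contacts the back-ends to retrieve snippets of text around the index terms. Only snippets for the overall top documents are retrieved. The front-end contacts the back-ends one at a time, retrieving each snippet from the back-end that holds the corresponding document in its data segment.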
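The query flow above (distribute to all back-ends, merge the per-segment top lists, then fetch snippets only for the overall winners) can be sketched as follows. Everything here is a hypothetical illustration rather than the Nutch/Lucene API: the `BackEnd` class, the `front_end_query` function and the term-count score are assumptions, and the real engine uses Lucene's own scoring and network calls between processes.

```python
import heapq
from typing import Dict, List, Tuple

Hit = Tuple[float, str, int]  # (score, document id, back-end id)


class BackEnd:
    """Hypothetical back-end holding one segment of the corpus."""

    def __init__(self, backend_id: int, docs: Dict[str, str]) -> None:
        self.backend_id = backend_id
        self.docs = docs

    def search(self, terms: List[str], k: int) -> List[Hit]:
        """Return the top-k hits in this segment (toy score: term occurrences)."""
        hits = []
        for doc_id, text in self.docs.items():
            words = text.lower().split()
            score = float(sum(words.count(t) for t in terms))
            if score > 0:
                hits.append((score, doc_id, self.backend_id))
        return heapq.nlargest(k, hits)

    def snippet(self, doc_id: str, terms: List[str], radius: int = 2) -> str:
        """Return a few words around the first matching term."""
        words = self.docs[doc_id].split()
        for i, word in enumerate(words):
            if word.lower() in terms:
                return " ".join(words[max(0, i - radius): i + radius + 1])
        return ""


def front_end_query(backends: List[BackEnd], terms: List[str], k: int = 10):
    """Scatter the query, merge the results, then fetch snippets for the top k."""
    by_id = {b.backend_id: b for b in backends}
    candidates: List[Hit] = []
    for backend in backends:  # distribute the query to all back-ends
        candidates.extend(backend.search(terms, k))
    top = heapq.nlargest(k, candidates)  # single overall top-k list
    # Contact one back-end at a time, only for documents in the overall top list.
    return [(doc_id, score, by_id[bid].snippet(doc_id, terms))
            for score, doc_id, bid in top]


# Toy segments, invented for this example.
backends = [
    BackEnd(0, {"a": "scale out search", "b": "blade server"}),
    BackEnd(1, {"c": "search search engine"}),
]
results = front_end_query(backends, ["search"], k=2)
```

Each back-end only ever ranks its own segment, so the per-query work divides across back-ends while the front-end does a cheap merge; that is the property the paper relies on when it scales the query component out.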

4 Conclusions

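The first conclusion of our work is that scale-out solutions have an indisputable performance and price/performance advantage over scale-up for search workloads. The highly parallel nature of this workload, combined with fairly predictable behavior in terms of processor, network and storage scalability, makes search a perfect candidate for scale-out.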

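Furthermore, even within a scale-up system, adopting a “scale-out-in-a-box” approach used the processors more efficiently than pure scale-up. This is not too different from what has been observed in large shared-memory systems for scientific and technical computing. On those machines, it is often more effective to run an MPI (scale-out) application within the machine than to rely on shared-memory (scale-up) programming.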

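Scale-out systems are still at a significant disadvantage relative to scale-up when it comes to systems management. Under the traditional assumption that management cost is proportional to the number of system images, it is clear that a scale-out solution will have a higher management cost than a scale-up one.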


Original foreign-language text:

Abstract

Scale-up solutions in the form of large SMPs have represented the mainstream of commercial

computing for the past several years. The major server vendors continue to provide increasingly

larger and more powerful machines. More recently, scale-out solutions, in the form of clusters of

smaller machines, have gained increased acceptance for commercial computing. Scale-out

solutions are particularly effective in high-throughput web-centric applications. In this paper, we

investigate the behavior of two competing approaches to parallelism, scale-up and scale-out, in an

emerging search application. Our conclusions show that a scale-out strategy can be the key to

good performance even on a scale-up machine. Furthermore, scale-out solutions offer better

price/performance, although at an increase in management complexity.

1 Introduction

During the last 10 years of commercial computing, we have witnessed the complete

replacement of uniprocessor computing systems by multiprocessor ones. The revolution that

started in the early to mid-eighties in scientific and technical computing finally caught up with the

bulk of the marketplace in the mid-nineties.

We can classify the different approaches to employ multiprocessor systems for computing

(both commercial and technical/scientific) into two large groups:

·Scale-up: The deployment of applications on large shared-memory servers (large SMPs).

·Scale-out: The deployment of applications on multiple small interconnected servers (clusters).

During the first phase of the multiprocessor revolution in commercial computing, the

dominance of scale-up was clear. SMPs of increasing size, with processors of increasing clock rate,

could offer ever more computing power to handle the needs of even the largest corporations. SMPs

currently represent the mainstream of commercial computing. Companies like IBM, HP and Sun

invest heavily in building bigger and better SMPs with each generation.

More recently, there has been an increase in interest in scale-out for commercial computing.

For many of the new web-based enterprises (e.g., Google, Yahoo, eBay, Amazon), a scale-out

approach is the only way to deliver the necessary computational power. Also, computer

manufacturers have made it easier to deploy scale-out solutions with rack-optimized and bladed

servers. (Scale-out has been the only viable alternative for large-scale technical/scientific

computing for several years, as we observe in the evolution of the TOP500 systems.)

In this paper, we study the behavior of an emerging commercial application, search of

unstructured data, in two distinct systems: One is a modern scale-up system based on the

POWER5 multi-core/multi-threaded processor [8],[9]. The other is a typical scale-out

system based on IBM BladeCenter [3]. The systems were configured to have approximately the

same list price (approximately $200,000), allowing a fair performance and price-performance

comparison.

One of the more important conclusions of our work is that a “pure” scale-up approach is not

very effective in using all the processors in a large SMP. In pure scale-up, we run just one instance

of our application in the SMP, and that instance uses all the resources (processors) available. We

were more successful in exploiting the POWER5 SMP with a “scale-out-in-a-box” approach. In

that case, multiple instances of the application run concurrently, within a single operating system.

This latter approach resulted in significant gains in performance while maintaining the single

system image that is one of the great advantages of large SMPs.

Another conclusion of our work is that a scale-out system can achieve about four times the

performance of a similarly priced scale-up system. In the case of our application, this performance

is measured in terms of queries per second. The scale-out system requires the use of multiple

system images, so the gain in performance comes at a convenience and management cost.

Depending on the situation, that may be worth the improvement in performance or not.

The rest of this paper is organized as follows. Section 2 describes the configuration of the

scale-out and scale-up systems we used in our study. Section 3 presents the Nutch/Lucene

workload that ran in our systems. Section 4 reports our experimental findings. Finally, Section 5

presents our conclusions.

2 Scale-up and scale-out systems

In the IBM product line, Systems z, p, and i are allbased on SMPs of different sizes that span a

widespectrum of computational capabilities. As an example of a state-of-the art scale-up system

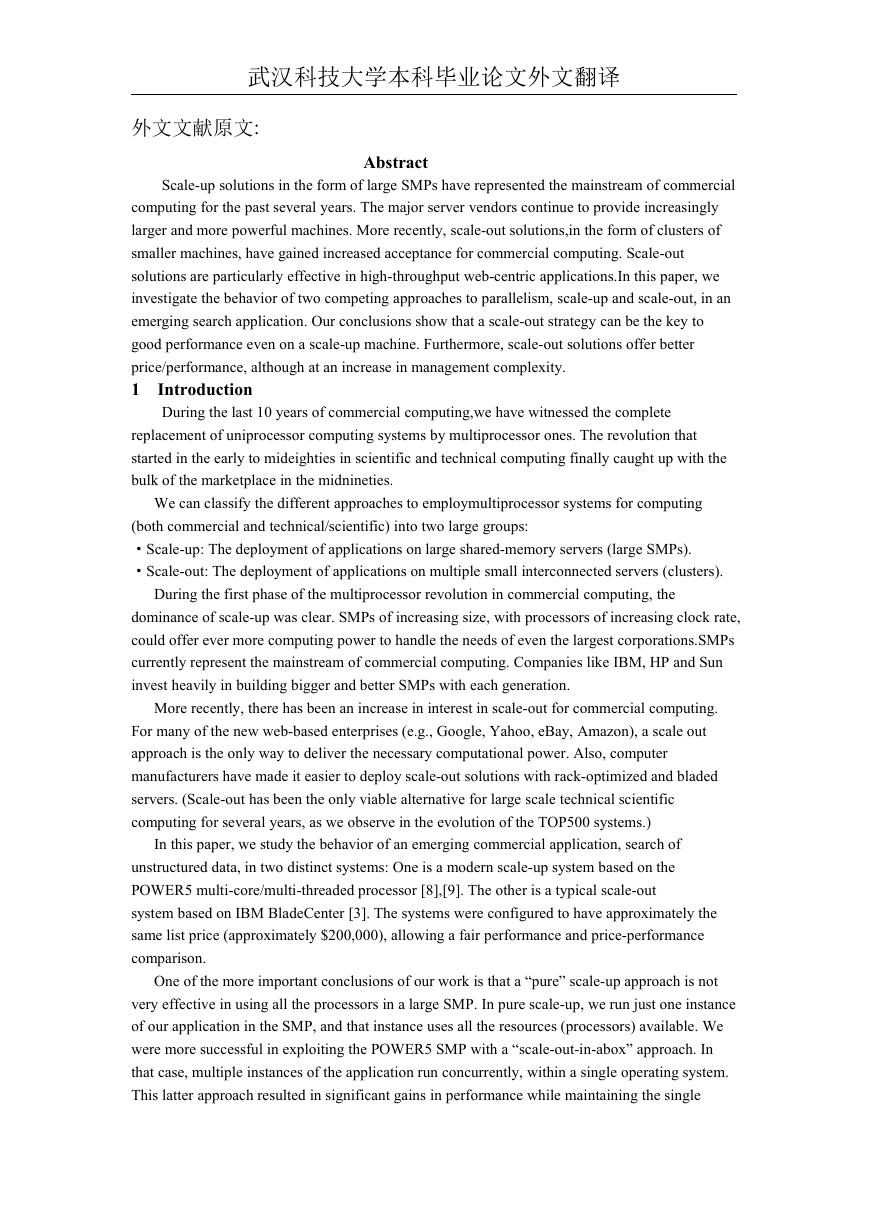

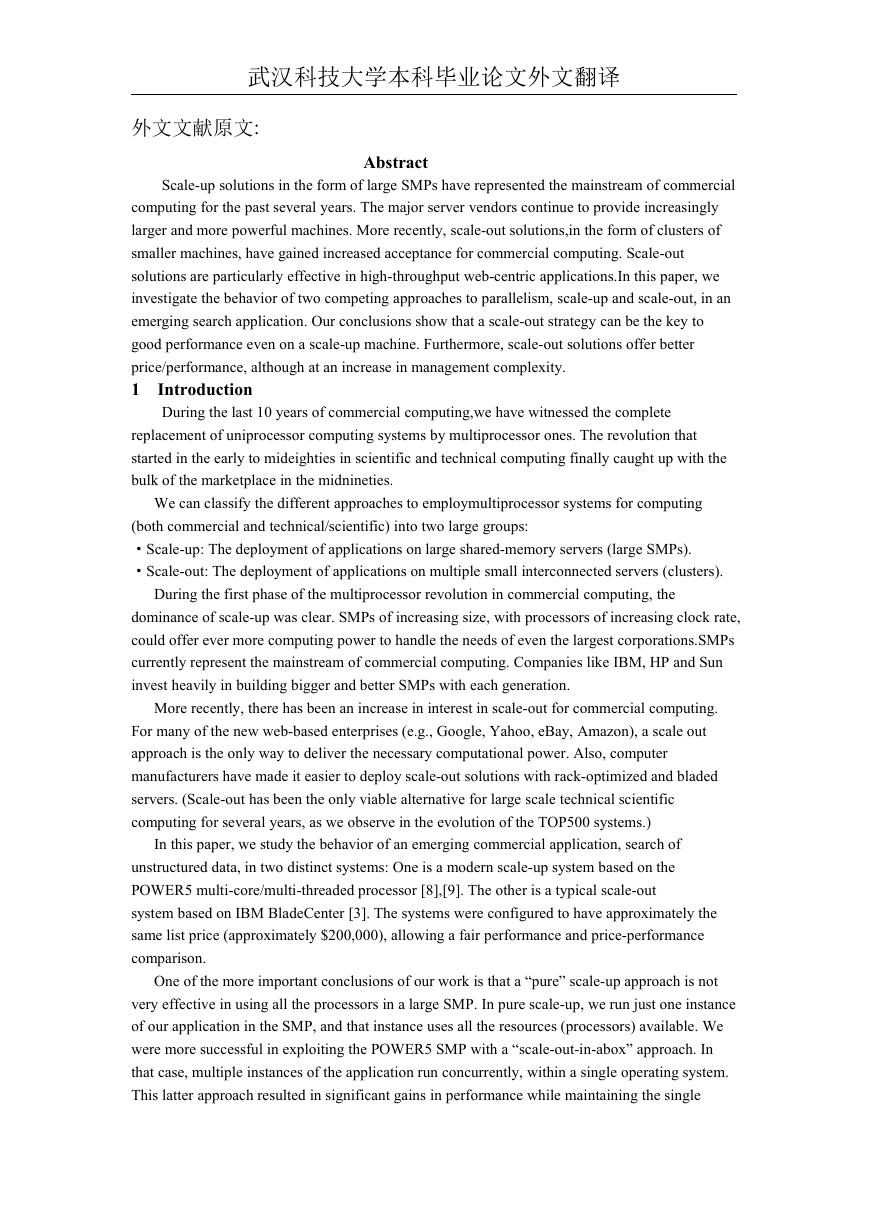

we adopted the POWER5 p5 575 machine [7]. This 8- or 16-way system has been very attractive

to customers due to its low-cost, high-performance and small form factor (2U or 3.5-inch high in a

24-inch rack). A picture of a POWER5 p5 575 is shown in Figure 1.

The particular p5 575 that we used for our scale-up measurements has 16 POWER5 processors in 8 dual-core modules and 32 GiB (1 GiB = 1,073,741,824 bytes) of main memory. Each core is dual-threaded, so to the operating system the machine appears as a 32-way SMP. The processor speed is 1.5 GHz. The p5 575 connects to the outside world through two (2) Gigabit/s Ethernet interfaces. It also has its own dedicated DS4100 storage controller. (See below for a description of the DS4100.)

Scale-out systems come in many

different shapes and forms, but they

generally consist of multiple interconnected nodes with a self-contained operating system in each

node. We chose BladeCenter as our platform for scale-out. This was a natural choice given

the scale-out orientation of this platform.

The first form of scale-out systems to become popular in commercial computing was the

rack-mounted cluster. The IBM BladeCenter solution (and similar systems from companies such

as HP and Dell) represents the next step after rack-mounted clusters in scale-out systems for

commercial computing. The blade servers used in BladeCenter are similar in capability to the

densest rack-mounted cluster servers: 4-processor configurations, 16-32 GiB of maximum

memory, built-in Ethernet, and expansion cards for either Fiber Channel, Infiniband, Myrinet, or

10 Gbit/s Ethernet. Also offered are double-wide blades with up to 8-processor configurations and

additional memory.

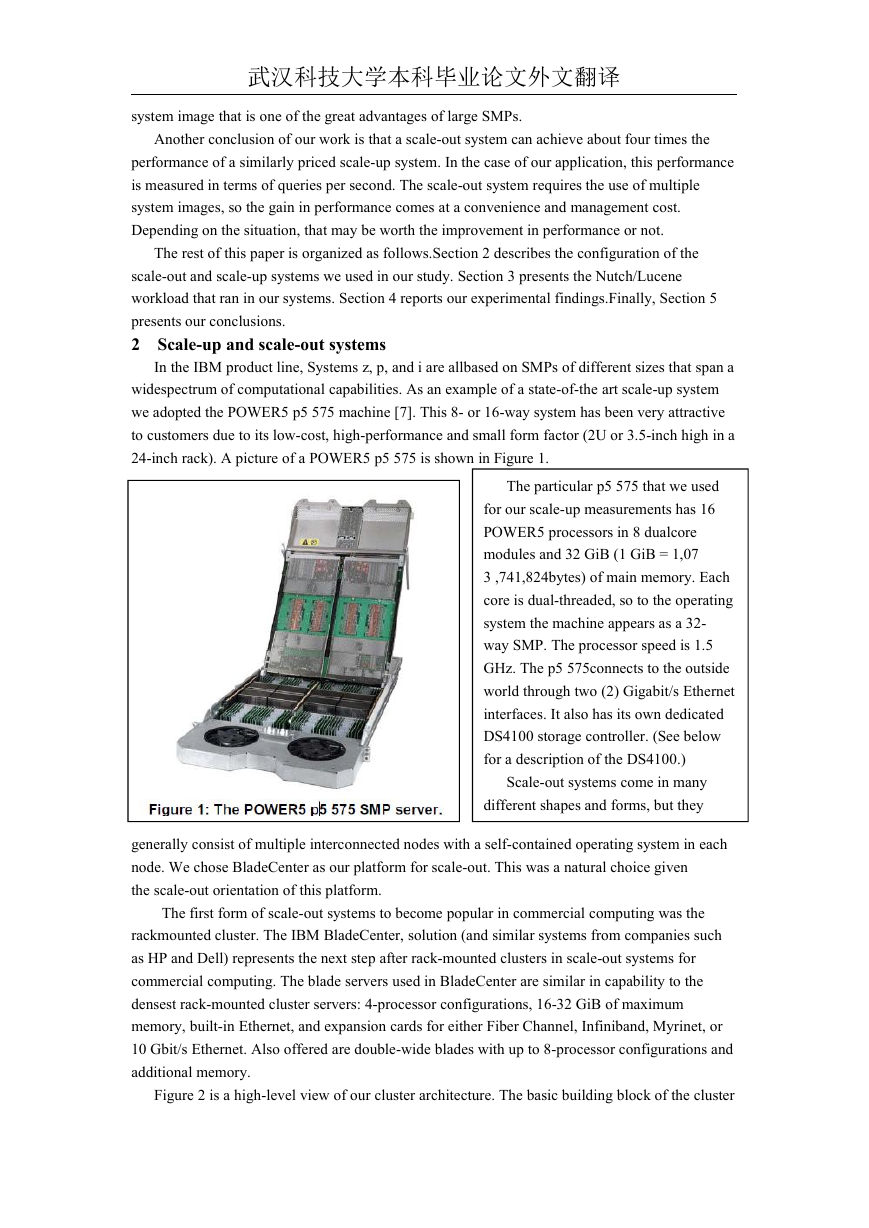

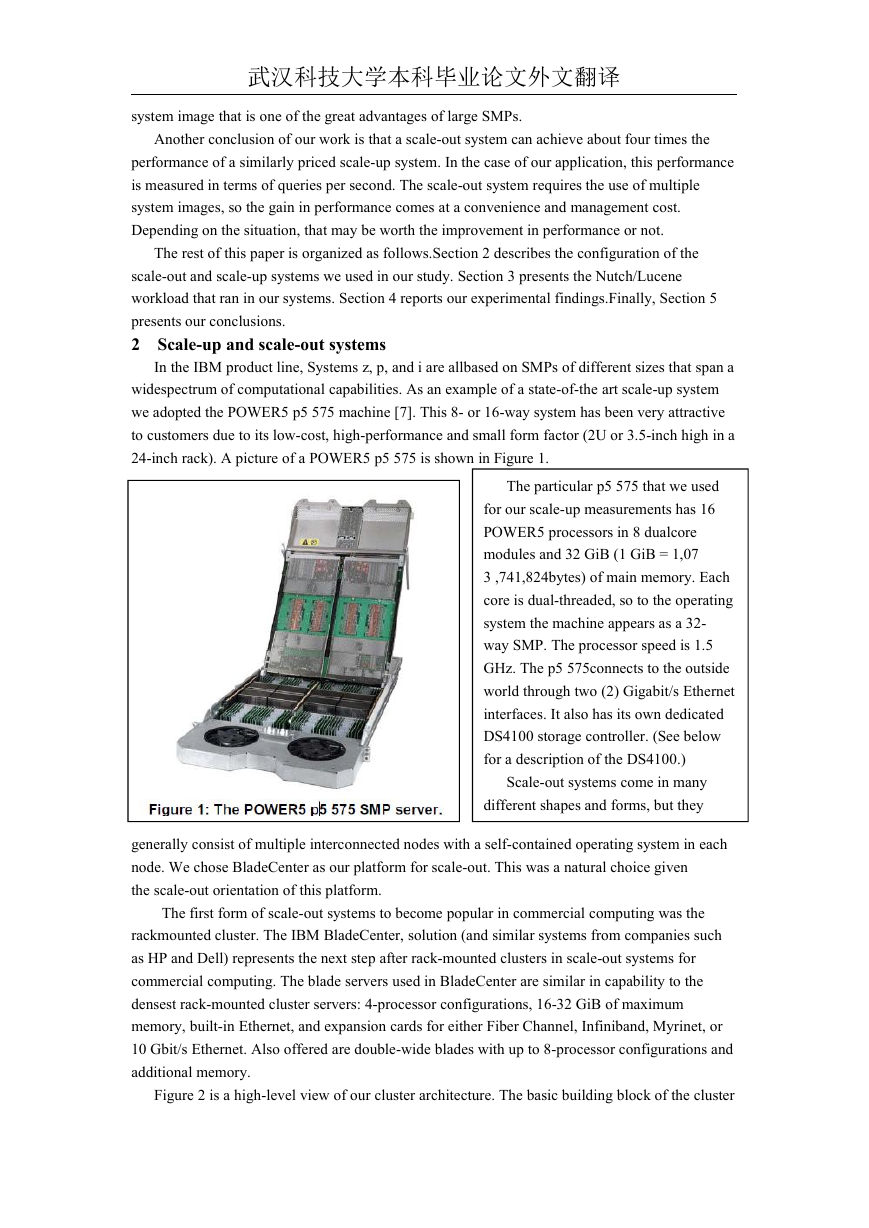

Figure 2 is a high-level view of our cluster architecture. The basic building block of the cluster

is a BladeCenter-H (BC-H) chassis. We couple each BC-H chassis with one DS4100 storage

controller through a 2-Gbit/s Fiber Channel link. The chassis themselves are interconnected

through two nearest-neighbor networks. One of the networks is a 4-Gbit/s Fiber Channel network

and the other is a 1-Gbit/s Ethernet network. The cluster consists of 8 chassis of blades (112

blades in total) and eight DS4100 storage subsystems.

The BladeCenter-H chassis is the newest BladeCenter chassis from IBM. As with the previous

BladeCenter-1 chassis, it has 14 blade slots for blade servers. It also has space for up to two

management modules, four switch modules, four bridge modules, and four high-speed switch

modules. (Switch modules 3 and 4 and bridge modules 3 and 4 share the same slots in the chassis.)

We have populated each of our chassis with two 1-Gbit/s Ethernet switch modules and two Fiber

Channel switch modules.

Three different kinds of blades were used in our cluster: JS21 (PowerPC processors), HS21

(Intel Woodcrest processors), and LS21 (AMD Opteron processors). Each blade (JS21, HS21, or

LS21) has both a local disk drive (73 GB of capacity) and a dual Fiber Channel network adapter.

The Fiber Channel adapter is used to connect the blades to two Fiber Channel switches that are

plugged in each chassis. Approximately half of the cluster (4 chassis) is composed of JS21 blades.

These are quad-processor (dual-socket, dual-core) PowerPC 970 blades, running at 2.5 GHz. Each

blade has 8 GiB of memory. For the experiments reported in this paper, we focus on these JS21

blades.

The DS4100 storage subsystem consists of dual storage controllers, each with a 2 Gb/s Fiber

Channel interface, and space for 14 SATA drives in the main drawer. Although each DS4100 is

paired with a specific BladeCenter-H chassis, any blade in the cluster can see any of the LUNs in

the storage system, thanks to the Fiber Channel network we implement.

3 The Nutch/Lucene workload

Nutch/Lucene [4] is a framework for implementing search applications. It is representative of

a growing class of applications that are based on search of unstructured data (web pages). We are all

used to search engines like Google and Yahoo that operate on the open Internet. However, search

is also an important operation within Intranets, the internal networks of companies. Nutch/Lucene

is all implemented in Java and its code is open source. Nutch/Lucene, as a typical search framework,

has three major components: (1) crawling, (2) indexing, and (3) query. In this paper, we

present our results for the query component. For completeness, we briefly describe the other

components.

Crawling is the operation that navigates and retrieves the information in web pages, populating

the set of documents that will be searched. This set of documents is called the corpus, in search

terminology. Crawling can be performed on internal networks (Intranet) as well as external

networks (Internet). Crawling, particularly in the Internet, is a complex operation. Either

intentionally or unintentionally, many web sites are difficult to crawl. The performance of

crawling is usually limited by the bandwidth of the network between the system doing the

crawling and the system being crawled.

The Nutch/Lucene search framework includes a parallel indexing operation written using the

MapReduce programming model [2]. MapReduce provides a convenient way of addressing an

important (though limited) class of real-life commercial applications by hiding parallelism and

fault-tolerance issues from the programmers, letting them focus on the problem domain.

MapReduce was published by Google in 2004 and quickly became a de-facto standard for this

kind of workload. Parallel indexing operations in the MapReduce model work as follows. First,

the data to be indexed is partitioned into segments of approximately equal size. Each segment is

then processed by a mapper task that generates the (key, value) pairs for that segment, where key

is an indexing term and value is the set of documents that contain that term (and the location of the

term in the document). This corresponds to the map phase, in MapReduce. In the next phase, the

reduce phase, each reducer task collects all the pairs for a given key, thus producing a single index

table for that key. Once all the keys are processed, we have the complete index for the entire

data set.

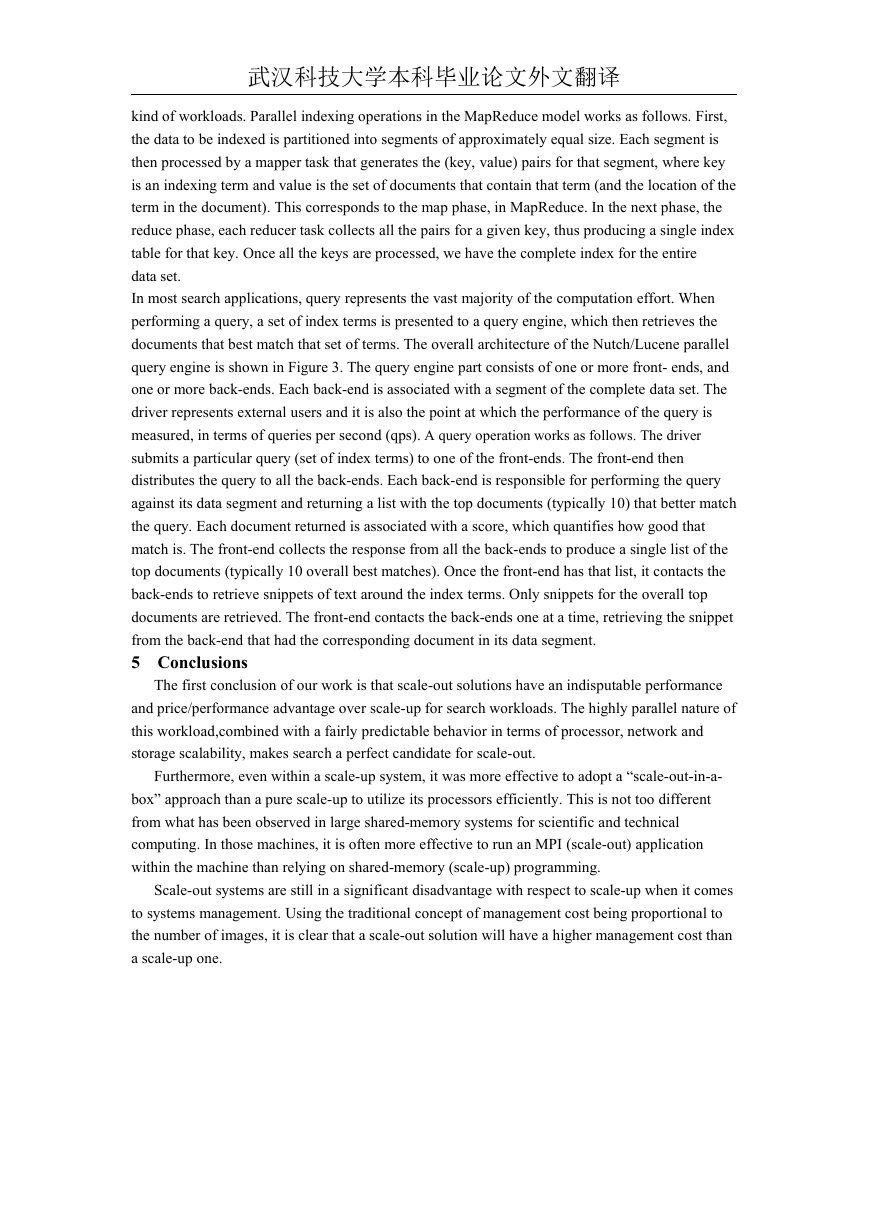

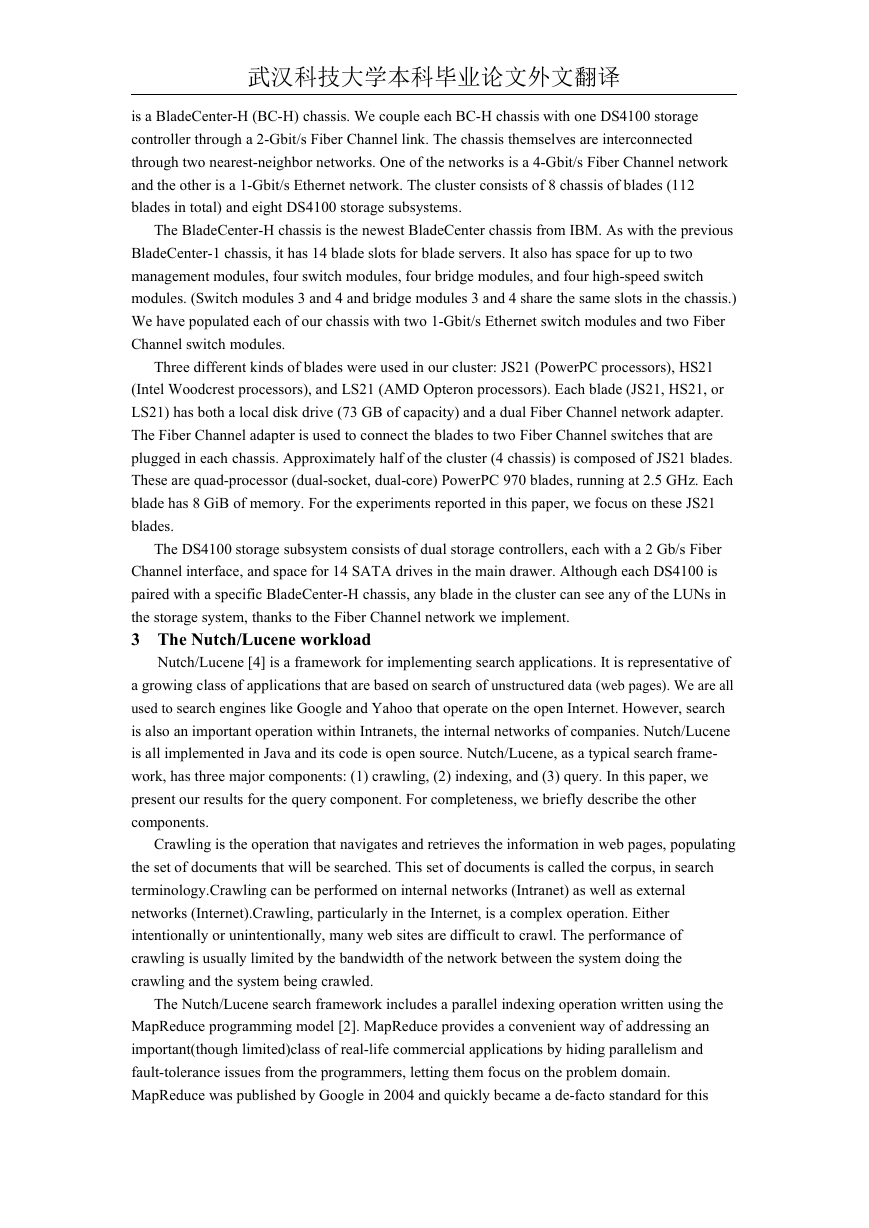

In most search applications, query represents the vast majority of the computation effort. When

performing a query, a set of index terms is presented to a query engine, which then retrieves the

documents that best match that set of terms. The overall architecture of the Nutch/Lucene parallel

query engine is shown in Figure 3. The query engine part consists of one or more front-ends, and

one or more back-ends. Each back-end is associated with a segment of the complete data set. The

driver represents external users and it is also the point at which the performance of the query is

measured, in terms of queries per second (qps). A query operation works as follows. The driver

submits a particular query (set of index terms) to one of the front-ends. The front-end then

distributes the query to all the back-ends. Each back-end is responsible for performing the query

against its data segment and returning a list with the top documents (typically 10) that best match

the query. Each document returned is associated with a score, which quantifies how good that

match is. The front-end collects the response from all the back-ends to produce a single list of the

top documents (typically 10 overall best matches). Once the front-end has that list, it contacts the

back-ends to retrieve snippets of text around the index terms. Only snippets for the overall top

documents are retrieved. The front-end contacts the back-ends one at a time, retrieving the snippet

from the back-end that had the corresponding document in its data segment.

5 Conclusions

The first conclusion of our work is that scale-out solutions have an indisputable performance

and price/performance advantage over scale-up for search workloads. The highly parallel nature of

this workload, combined with a fairly predictable behavior in terms of processor, network and

storage scalability, makes search a perfect candidate for scale-out.

Furthermore, even within a scale-up system, it was more effective to adopt a “scale-out-in-a-

box” approach than a pure scale-up to utilize its processors efficiently. This is not too different

from what has been observed in large shared-memory systems for scientific and technical

computing. In those machines, it is often more effective to run an MPI (scale-out) application

within the machine than to rely on shared-memory (scale-up) programming.

Scale-out systems are still at a significant disadvantage with respect to scale-up when it comes

to systems management. Using the traditional concept of management cost being proportional to

the number of images, it is clear that a scale-out solution will have a higher management cost than

a scale-up one.
