May 2, 2018

Rancher 2.0: Technical Architecture


Contents

Background

Kubernetes is Everywhere

Rancher 2.0: Built on Kubernetes

Rancher Kubernetes Engine (RKE)

Unified Cluster Management

Application Workload Management

High-level Architecture

Rancher Server Components

Rancher API Server

Cluster Controller and Agents

Authentication Proxy

Rancher API Server

Authentication and Authorization

User and group management

Projects

Role management

Authentication provider integration

Cross-cluster management

Upgrade

High Availability

Scalability

Scalability of Kubernetes Clusters

Scalability of Rancher Server

Appendix A: How Rancher API v3/Cluster objects are implemented

Step 1. Define the object and generate the schema/controller interfaces

Step 2: Add custom logic to API validation

Step 3: Define object management logic using custom controller

3.1 Object lifecycle management

3.2 Generic controller

3.3 Object conditions management


Background

We developed the Rancher container management platform to address the need to manage containers in production. Container technologies are developing quickly and, as a result, the Rancher architecture continues to evolve.

When Rancher 1.0 shipped in early 2016, it included an easy-to-use container orchestration framework called Cattle. It also supported a variety of industry-standard container orchestrators, including Swarm, Mesos, and Kubernetes. Early Rancher users loved the idea of adopting a management platform that gave them a choice of container orchestration frameworks.

In the last year, however, the growth of Kubernetes has far outpaced that of other orchestrators. Rancher users are increasingly demanding a better user experience and more functionality on top of Kubernetes. We have, therefore, decided to re-architect Rancher 2.0 to focus solely on Kubernetes.

Kubernetes is Everywhere

When we started to build Kubernetes support into Rancher in 2015, the biggest challenge we faced was how to install and set up Kubernetes clusters. Off-the-shelf Kubernetes scripts and tools were difficult to use and unreliable. Rancher made it easy to set up a Kubernetes cluster with the click of a button. Better yet, Rancher enabled you to set up Kubernetes clusters on any infrastructure, including public cloud, vSphere clusters, and bare metal servers. As a result, Rancher quickly became one of the most popular ways to launch Kubernetes clusters.

In early 2016, numerous off-the-shelf and third-party installers for Kubernetes became available. The challenge was no longer how to install and configure Kubernetes, but how to operate and upgrade Kubernetes clusters on an ongoing basis. Rancher made it easy to operate and upgrade Kubernetes clusters and their associated etcd databases.

By the end of 2016, we started to notice that the value of Kubernetes operations software was rapidly diminishing. Two factors contributed to this trend. First, open-source tools, such as Kubernetes Operations (kops), had reached a level of maturity that made it easy for many organizations to operate Kubernetes on AWS. Second, Kubernetes-as-a-service started to gain popularity. A Google Cloud user, for example, no longer needed to set up and operate their own clusters. They could use Google Container Engine (GKE) instead.

The popularity of Kubernetes continued to grow in 2017, and the momentum is not slowing. Amazon Web Services (AWS) announced Elastic Container Service for Kubernetes (EKS) in November 2017. Kubernetes-as-a-service is available from all major cloud providers. Unless they use VMware clusters or bare metal servers, DevOps teams will no longer need to operate Kubernetes clusters themselves. The only remaining challenge will be how to manage and utilize Kubernetes clusters, which are available everywhere.

Rancher 2.0: Built on Kubernetes

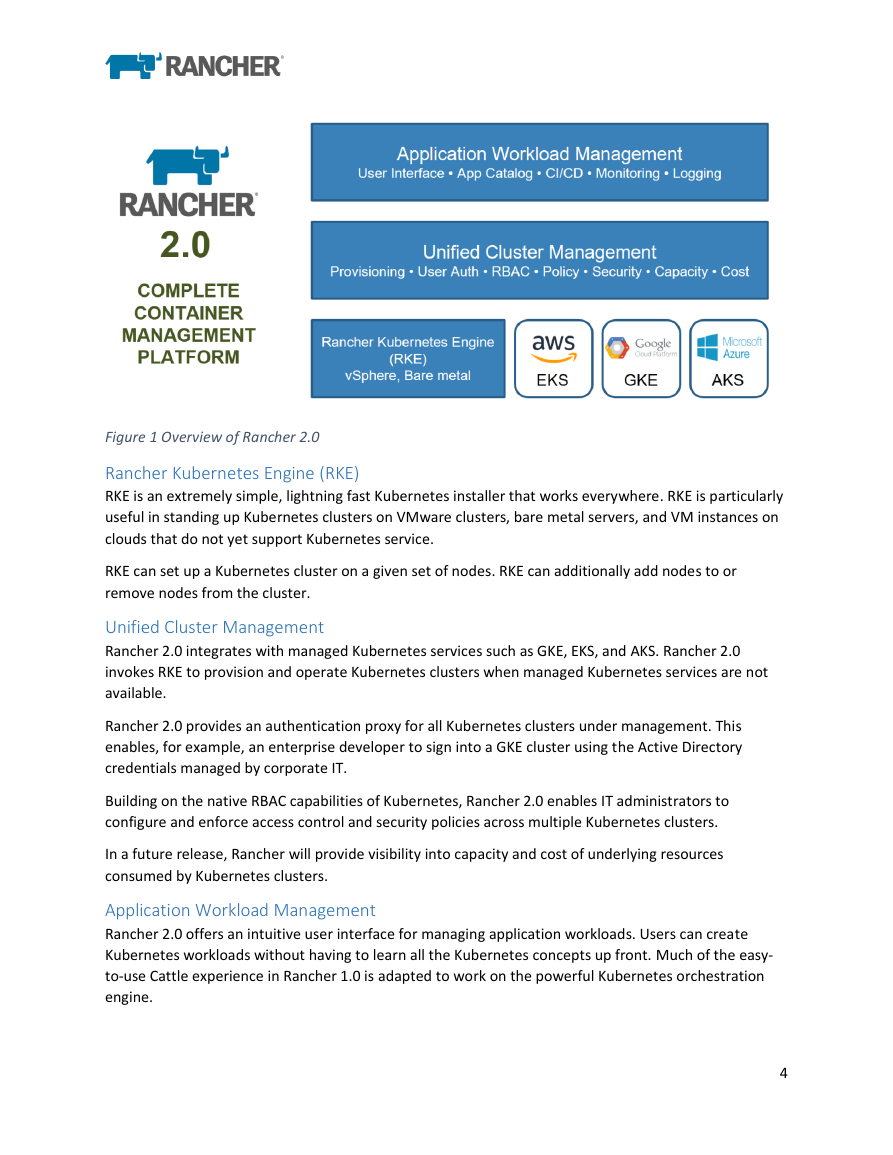

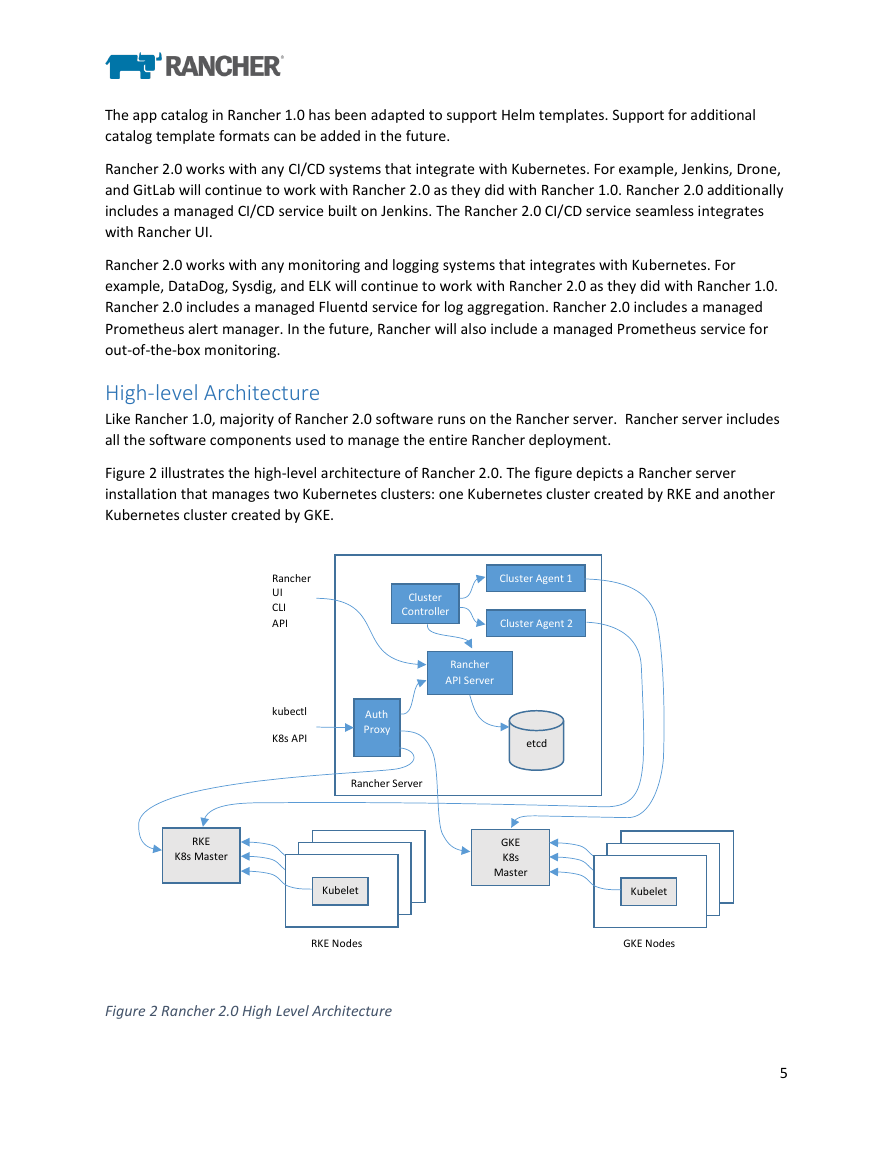

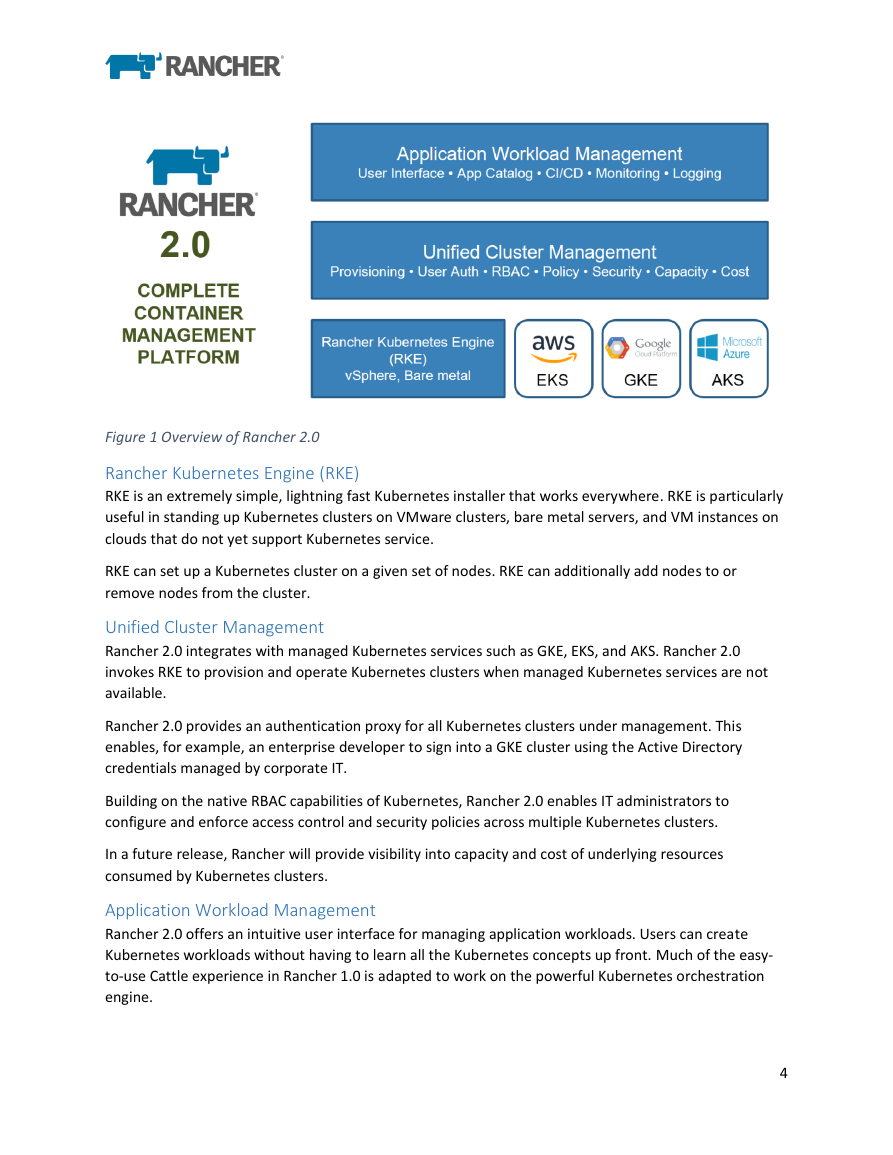

Rancher 2.0 is a complete container management platform built on Kubernetes. As illustrated in Figure 1, Rancher 2.0 contains three major components.


Figure 1 Overview of Rancher 2.0

Rancher Kubernetes Engine (RKE)

RKE is an extremely simple, lightning-fast Kubernetes installer that works everywhere. RKE is particularly useful for standing up Kubernetes clusters on VMware clusters, bare metal servers, and VM instances on clouds that do not yet offer a managed Kubernetes service.

RKE can set up a Kubernetes cluster on a given set of nodes. It can also add nodes to or remove nodes from an existing cluster.

Unified Cluster Management

Rancher 2.0 integrates with managed Kubernetes services such as GKE, EKS, and AKS. When a managed Kubernetes service is not available, Rancher 2.0 invokes RKE to provision and operate Kubernetes clusters.

Rancher 2.0 provides an authentication proxy for all Kubernetes clusters under management. This enables, for example, an enterprise developer to sign into a GKE cluster using the Active Directory credentials managed by corporate IT.

Building on the native RBAC capabilities of Kubernetes, Rancher 2.0 enables IT administrators to configure and enforce access control and security policies across multiple Kubernetes clusters.

In a future release, Rancher will provide visibility into the capacity and cost of the underlying resources consumed by Kubernetes clusters.

Application Workload Management

Rancher 2.0 offers an intuitive user interface for managing application workloads. Users can create Kubernetes workloads without having to learn all the Kubernetes concepts up front. Much of the easy-to-use Cattle experience in Rancher 1.0 is adapted to work on the powerful Kubernetes orchestration engine.


The app catalog in Rancher 1.0 has been adapted to support Helm templates. Support for additional catalog template formats can be added in the future.

Rancher 2.0 works with any CI/CD system that integrates with Kubernetes. For example, Jenkins, Drone, and GitLab will continue to work with Rancher 2.0 as they did with Rancher 1.0. Rancher 2.0 additionally includes a managed CI/CD service built on Jenkins. The Rancher 2.0 CI/CD service integrates seamlessly with the Rancher UI.

Rancher 2.0 works with any monitoring and logging system that integrates with Kubernetes. For example, Datadog, Sysdig, and ELK will continue to work with Rancher 2.0 as they did with Rancher 1.0. Rancher 2.0 includes a managed Fluentd service for log aggregation and a managed Prometheus Alertmanager. In the future, Rancher will also include a managed Prometheus service for out-of-the-box monitoring.

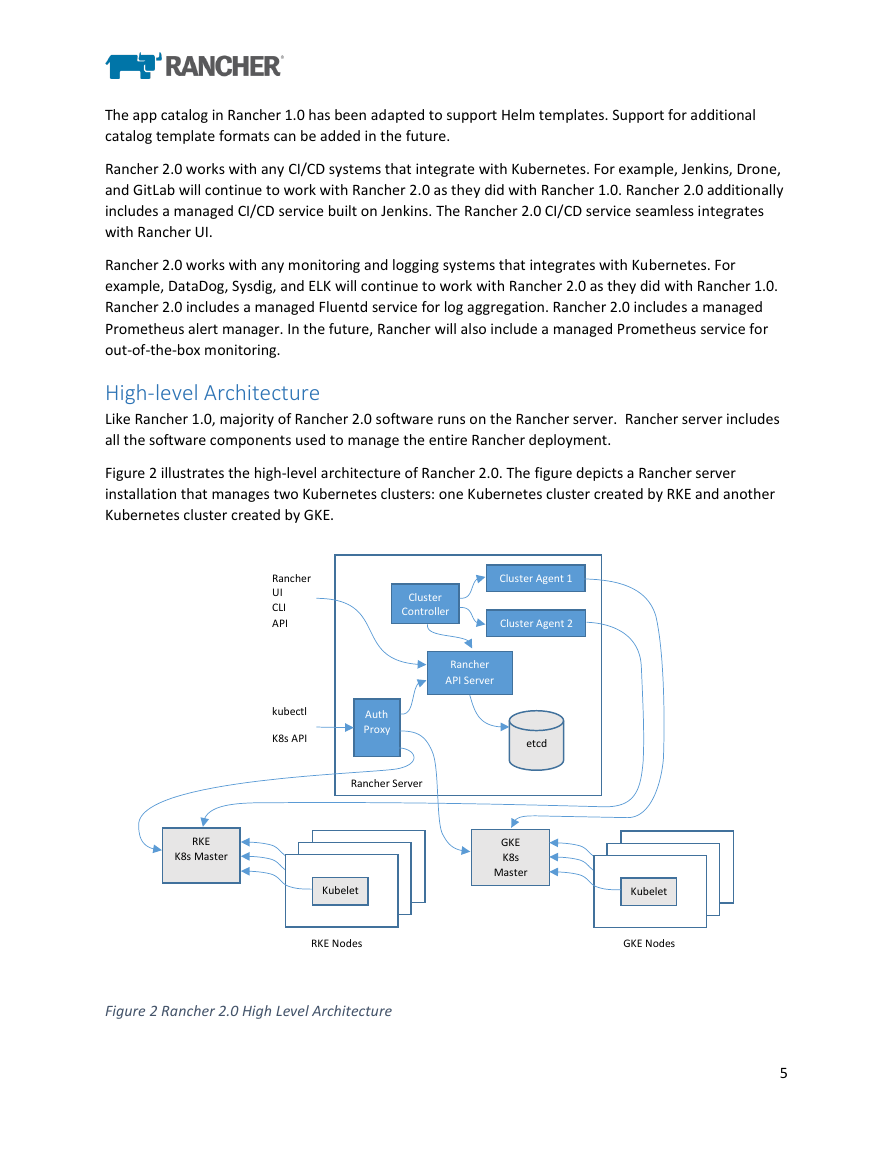

High-level Architecture

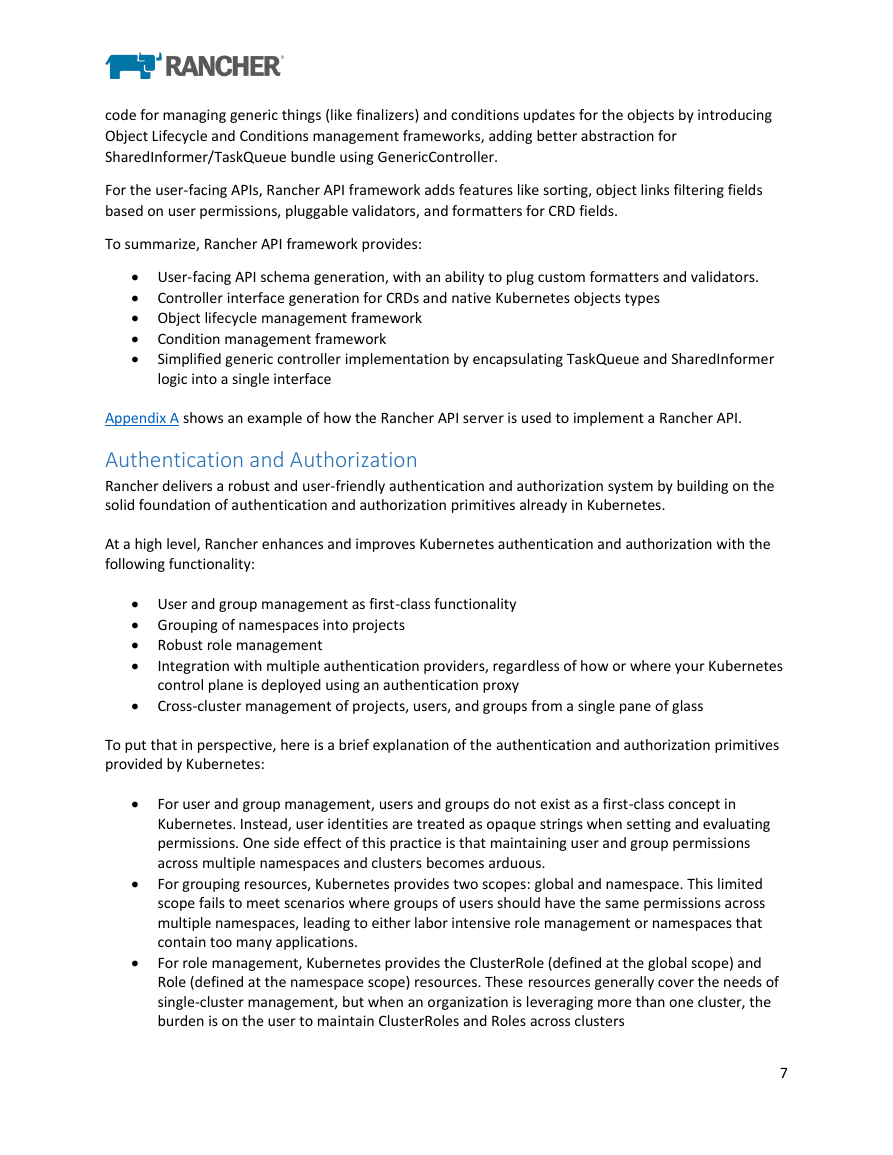

As in Rancher 1.0, the majority of Rancher 2.0 software runs on the Rancher server. The Rancher server includes all the software components used to manage the entire Rancher deployment.

Figure 2 illustrates the high-level architecture of Rancher 2.0. The figure depicts a Rancher server installation that manages two Kubernetes clusters: one Kubernetes cluster created by RKE and another Kubernetes cluster created by GKE.

Figure 2 Rancher 2.0 High Level Architecture

[Diagram labels: Rancher UI, CLI, API; kubectl, K8s API; Rancher Server (Auth Proxy, Rancher API Server, etcd, Cluster Controller, Cluster Agent 1, Cluster Agent 2); RKE K8s Master with Kubelet on RKE Nodes; GKE K8s Master with Kubelet on GKE Nodes]


Rancher Server Components

This section describes the functionality of each Rancher server component.

Rancher API Server

The Rancher API server is built on top of an embedded Kubernetes API server and etcd database. It implements the following functionality:

1. User management. The Rancher API server manages user identities that correspond to external authentication providers like Active Directory or GitHub.

2. Authorization. The Rancher API server manages access control and security policies.

3. Projects. A project is a grouping of multiple namespaces within a cluster.

4. Nodes. The Rancher API server tracks the identities of all the nodes in all clusters.

Cluster Controller and Agents

The cluster controller and cluster agents implement the business logic required to manage Kubernetes clusters. All the logic that is global to the entire Rancher installation is implemented by the cluster controller. A separate cluster agent instance implements the logic required for each corresponding cluster.

Cluster agents perform the following activities:

1. Manage workloads. This includes, for example, creating pods and deployments in each cluster.

2. Apply the roles and bindings that are defined in global policies to every cluster.

3. Propagate information from the cluster to the Rancher server: events, stats, node info, and health.

The cluster controller performs the following activities:

1. Configures access control policies for clusters and projects.

2. Provisions clusters by invoking the necessary Docker machine drivers and Kubernetes engines like RKE and GKE.

Authentication Proxy

The authentication proxy handles all Kubernetes API calls. It integrates with authentication services like local authentication, Active Directory, and GitHub. On every Kubernetes API call, the authentication proxy authenticates the caller and sets the proper Kubernetes impersonation headers before forwarding the call to Kubernetes masters. Rancher communicates with Kubernetes clusters using a service account.
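
The header-setting step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Rancher's implementation: the `Identity` type, the `build_upstream_headers` helper, and the token value are hypothetical. The `Impersonate-User` and `Impersonate-Group` header names are the ones Kubernetes defines for user impersonation.

```python
from dataclasses import dataclass, field


@dataclass
class Identity:
    """Hypothetical result of authenticating a caller against a provider."""
    username: str
    groups: list = field(default_factory=list)


def build_upstream_headers(identity, service_account_token):
    """Return (name, value) header pairs for the call forwarded to a Kubernetes master.

    The proxy presents its own service account token and asks Kubernetes
    to evaluate permissions as the authenticated end user.
    """
    headers = [
        ("Authorization", f"Bearer {service_account_token}"),
        ("Impersonate-User", identity.username),
    ]
    # Kubernetes accepts one Impersonate-Group header per group.
    headers.extend(("Impersonate-Group", group) for group in identity.groups)
    return headers


# Hypothetical caller and placeholder token, for illustration only.
caller = Identity("alice", ["dev-team", "sre"])
for name, value in build_upstream_headers(caller, "example-token"):
    print(f"{name}: {value}")
```

For impersonation to be accepted, the service account that the proxy uses must itself be granted the `impersonate` verb in the target cluster.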

Rancher API Server

The Rancher API server is built on top of an embedded Kubernetes API server and etcd database. All Rancher-specific resources created using the Rancher API are translated into Custom Resource Definition (CRD) objects, whose lifecycles are managed by one or more Rancher controllers.

When it comes to Kubernetes resource management, the Kubernetes open-source community follows several code patterns for controller development in the Go programming language, most of them involving the client-go library (https://github.com/kubernetes/client-go). The library has useful utilities like Informer/SharedInformer/TaskQueue that make it easy to watch and react to resource changes, as well as to maintain an in-memory cache that minimizes the number of direct calls to the API server. The Rancher API framework extends client-go so that users do not have to write custom code for generic tasks such as managing finalizers and updating object conditions. It does so by introducing the Object Lifecycle and Conditions management frameworks, and it adds a better abstraction over the SharedInformer/TaskQueue bundle through GenericController.

For the user-facing APIs, the Rancher API framework adds features like sorting, object links, filtering of fields based on user permissions, pluggable validators, and formatters for CRD fields.

To summarize, the Rancher API framework provides:

• User-facing API schema generation, with the ability to plug in custom formatters and validators

• Controller interface generation for CRDs and native Kubernetes object types

• Object lifecycle management framework

• Condition management framework

• Simplified generic controller implementation by encapsulating TaskQueue and SharedInformer logic into a single interface
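
To make the informer and work-queue pattern concrete, here is a toy sketch in Python. It is not client-go and not Rancher's GenericController. The `ToyController` class, its method names, and the event strings are all hypothetical and only illustrate the idea: watch events update an in-memory cache, changed keys are queued once, and a handler later receives the latest cached state.

```python
from collections import deque


class ToyController:
    """Hypothetical stand-in for an informer cache plus a de-duplicating work queue."""

    def __init__(self, handler):
        self.handler = handler   # called as handler(key, obj); obj is None after deletion
        self.cache = {}          # in-memory copy of watched objects, keyed by name
        self.queue = deque()     # keys waiting to be processed
        self.pending = set()     # keys currently sitting in the queue

    def on_event(self, event_type, key, obj=None):
        """Record a watch event in the cache and enqueue the key at most once."""
        if event_type == "DELETED":
            self.cache.pop(key, None)
        else:
            self.cache[key] = obj
        if key not in self.pending:
            self.pending.add(key)
            self.queue.append(key)

    def run_once(self):
        """Drain the queue, handing the latest cached state to the handler."""
        while self.queue:
            key = self.queue.popleft()
            self.pending.discard(key)
            self.handler(key, self.cache.get(key))


seen = []
controller = ToyController(lambda key, obj: seen.append((key, obj)))
controller.on_event("ADDED", "cluster-1", {"state": "provisioning"})
controller.on_event("MODIFIED", "cluster-1", {"state": "active"})
controller.on_event("DELETED", "cluster-2")
controller.run_once()
print(seen)
```

Because the handler reads from the cache rather than from the event, the two events for `cluster-1` collapse into a single call that sees only the most recent state. This is the property that keeps the number of direct API server calls low.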

Appendix A shows an example of how the Rancher API server is used to implement a Rancher API.

Authentication and Authorization

Rancher delivers a robust and user-friendly authentication and authorization system by building on the solid foundation of authentication and authorization primitives already in Kubernetes.

At a high level, Rancher enhances Kubernetes authentication and authorization with the following functionality:

• User and group management as first-class functionality

• Grouping of namespaces into projects

• Robust role management

• Integration with multiple authentication providers through an authentication proxy, regardless of how or where your Kubernetes control plane is deployed

• Cross-cluster management of projects, users, and groups from a single pane of glass

To put that in perspective, here is a brief explanation of the authentication and authorization primitives provided by Kubernetes:

• For user and group management, users and groups do not exist as a first-class concept in Kubernetes. Instead, user identities are treated as opaque strings when setting and evaluating permissions. One side effect of this practice is that maintaining user and group permissions across multiple namespaces and clusters becomes arduous.

• For grouping resources, Kubernetes provides two scopes: global and namespace. These two scopes do not cover scenarios where groups of users should have the same permissions across multiple namespaces, which leads to either labor-intensive role management or namespaces that contain too many applications.

• For role management, Kubernetes provides the ClusterRole (defined at the global scope) and Role (defined at the namespace scope) resources. These resources generally cover the needs of single-cluster management, but when an organization uses more than one cluster, the burden is on the user to maintain ClusterRoles and Roles across clusters.


• For integration with authentication providers, Kubernetes has an excellent plugin mechanism that allows teams deploying their own clusters to integrate with many providers. However, this feature is unavailable to those wishing to use cloud-based Kubernetes offerings such as GKE.

• Cross-cluster management of resources is still at a nascent stage in native Kubernetes, and the long-term direction the technology will take is still unknown.

The following sections describe each of Rancher’s authentication and authorization features in greater detail.

User and group management

Through the Rancher API and UI, users can be created, viewed, updated, and deleted. Users can be associated with external authentication provider identities (like GitHub or LDAP) and with a local username and password. This provides greater flexibility and guards against downtime at the authentication provider. Similarly, groups can be created, viewed, updated, and deleted through the Rancher API and UI. A group's membership can be a mix of explicitly defined users and group identities from the authentication provider. For example, you can easily map an LDAP group to a Rancher group.
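
The mixed membership model described above can be illustrated with a short Python sketch. The data layout, the group and user names, and the `resolve_members` helper are hypothetical. They show only how explicitly listed users and provider-owned groups could be combined into one effective membership.

```python
def resolve_members(group, provider_groups):
    """Return the set of usernames that belong to a Rancher-style group.

    `group` lists explicit users plus the names of groups owned by the
    authentication provider; `provider_groups` maps those names to users.
    """
    members = set(group.get("users", []))
    for name in group.get("provider_groups", []):
        members.update(provider_groups.get(name, []))
    return members


# Hypothetical LDAP groups as reported by the authentication provider.
ldap_groups = {"cn=platform": ["carol", "dave"], "cn=qa": ["erin"]}

# A hypothetical group mixing one explicit user with one mapped LDAP group.
platform_admins = {"users": ["alice"], "provider_groups": ["cn=platform"]}

print(sorted(resolve_members(platform_admins, ldap_groups)))
```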

Projects

Using the Rancher API or UI, users can create projects and assign users or groups to them. Users can be assigned different roles within a project and across multiple projects. For example, a developer can be given full create/read/update/delete privileges in a "dev" project but just read-only access in the staging and production projects. Furthermore, with Rancher, namespaces are scoped to projects, so users can only create, modify, or delete namespaces in the projects they are members of. This authorization model greatly enhances the multi-tenant self-service functionality of Kubernetes.
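
The developer example above can be expressed as a small Python sketch. The project names, namespace names, verb sets, and the `is_allowed` helper are assumptions made for illustration. They show how a per-project role could decide what a user may do in any namespace that belongs to that project.

```python
READ_ONLY = {"get", "list", "watch"}
FULL = READ_ONLY | {"create", "update", "delete"}

# Hypothetical projects: each groups namespaces and assigns a role per user.
projects = {
    "dev": {"namespaces": {"dev-web", "dev-db"}, "roles": {"alice": FULL}},
    "production": {"namespaces": {"prod-web"}, "roles": {"alice": READ_ONLY}},
}


def is_allowed(user, verb, namespace):
    """Allow the verb if the user's role in the namespace's project includes it."""
    for project in projects.values():
        if namespace in project["namespaces"]:
            return verb in project["roles"].get(user, set())
    return False  # the namespace belongs to no project


print(is_allowed("alice", "delete", "dev-db"))    # full access in "dev"
print(is_allowed("alice", "delete", "prod-web"))  # read-only in "production"
```

Because the role is attached to the project rather than to each namespace, adding a namespace to "dev" would extend the same permissions to it with no further role changes.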

Role management

The primary value that Rancher offers with role management is the ability to manage roles (and to whom they are assigned) from a single global interface that spans clusters, projects, and namespaces. To achieve this global role management, Rancher has introduced the concept of "role templates" that are synced across all clusters.
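
One plausible way to picture this syncing is sketched below in Python. The template layout, the cluster names, and the `render_cluster_role` and `sync` helpers are hypothetical, and each cluster is simulated by a plain dictionary. Only the rendered manifest fields (`apiVersion`, `kind`, `metadata`, `rules`) follow the standard Kubernetes ClusterRole shape.

```python
import copy

# Hypothetical role template defined once, at the global level.
role_template = {
    "name": "read-only",
    "rules": [
        {"apiGroups": [""], "resources": ["pods", "services"],
         "verbs": ["get", "list", "watch"]},
    ],
}


def render_cluster_role(template):
    """Render a template as a Kubernetes ClusterRole manifest (a plain dict)."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": template["name"]},
        "rules": copy.deepcopy(template["rules"]),
    }


def sync(template, clusters):
    """Write the rendered role into each cluster's simulated role store."""
    for roles in clusters.values():
        roles[template["name"]] = render_cluster_role(template)


# Each cluster is simulated as a mapping from role name to manifest.
clusters = {"rke-cluster": {}, "gke-cluster": {}}
sync(role_template, clusters)
print(sorted(clusters["gke-cluster"]))
```

Editing the template and running `sync` again would overwrite the role in every cluster, which is the single point of maintenance that the text describes.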

Authentication provider integration

As stated previously, while Kubernetes has a good authentication provider plugin framework, that functionality simply isn't available when using Kubernetes from a cloud provider. To overcome this shortcoming, Rancher provides an authentication proxy that sits in front of Kubernetes. This means that regardless of where or how your clusters are deployed, the same authentication provider can be used.

Cross-cluster management

Kubernetes is largely focused on single-cluster deployments at this point. In contrast, Rancher assumes multi-cluster deployments from the start. As a result, all authentication and authorization features are designed to span large multi-cluster deployments. This increased scope greatly reduces the burden of onboarding and managing new users and teams.
