RESEARCH ON DATA MIGRATION OPTIMIZATION OF CEPH

MANQI HUANG, LAN LUO, YOU LI, LIANG LIANG

Chengdu University of Electronic Science and Technology University, Chengdu 611730, China

E-MAIL: qiluoli@126.com

Abstract:

Ceph distributed storage benefits from excellent performance and scalability, but it also faces a problem: adding or removing devices triggers unnecessary data transfer, which increases system resource consumption. This paper studies how Ceph is implemented in terms of its placement algorithm and logical layout, analyzes its shortcomings in data transfer and resource consumption, and proposes a method for handling the failed node when a cluster storage device fails. By setting cluster flags during data transfer, an optimized data migration scheme for production environments is realized, and the utilization of system resources is improved. Experimental results show that the scheme reduces the transfer volume by about 30% to 40%, effectively lowers the resource consumption of Ceph distributed storage, and prevents invalid and excessive data transfer.

Keywords:

Distributed storage; Data migration; Ceph storage; Logical layout

1. Introduction

With the rise of cloud computing, the network era has developed tremendously: global data volume has grown explosively, and the demands on big data storage have changed accordingly. Data volumes have grown from the PB level to the ZB level and are still growing. The development of big data has also driven the rapid development of computing, network, and storage technologies. Enterprises treat in-depth data analysis as a driver of profit growth, and the demands of big data analytics are shaping the development of data storage infrastructure [1]. In storage, Ceph is one of the recognized open source solutions, and it follows the idea of SDS (Software-Defined Storage). Ceph pools the resources of multiple machines and provides unified, large-capacity, high-performance, and highly reliable file services to meet the needs of large-scale applications, and its architecture can easily be extended to the PB level [2].

978-1-5386-1010-7/17/$31.00 ©2017 IEEE

Optimization techniques for Ceph distributed storage have also received attention. Reference [3] proposes an adaptive disk speed reduction algorithm for Ceph OSDs (object storage devices). The algorithm targets each individual OSD, reducing the speed of the corresponding disk under low load so that it enters an energy-saving state. Because it saves energy only on the OSD disks, its effect on the energy consumption of the whole system is very limited. Another approach builds on the characteristics of the CRUSH algorithm and introduces a Power Group (PG) bucket into the CRUSH Map to re-divide the set of fault domains. That is, data replicas are first distributed among different power groups before they are placed in different fault domains. The nodes of one power group are in the same energy consumption state, and the number of power groups equals the number of replicas [4]. However, these methods cannot reduce the number of placement groups transferred during migration.

This paper optimizes data migration in the Ceph storage system, one of the most popular open source distributed cloud storage systems, and compares the optimized procedure with the original one in tests to verify the effectiveness of the optimization. The proposed method reduces the excessive data migration load in Ceph storage, avoids data loss caused by node failure, and improves the availability of a Ceph object storage cluster.

2. Ceph systems and related algorithms

In a Ceph cluster, the location of data is not obtained from a lookup table or an index. Instead, it is computed by CRUSH (Controlled Replication Under Scalable Hashing). A simple hash distribution algorithm cannot cope effectively with changes in the number of devices, because such changes lead to a great deal of data migration [5].
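The cost of a simple hash distribution can be illustrated with a minimal Python sketch. It assumes objects are placed with a plain `hash % n` rule and counts how many objects change device when a tenth device is added to nine. The function names and the SHA-256 stand-in hash are assumptions for illustration; they are not Ceph code.

```python
import hashlib


def stable_hash(key: str) -> int:
    """Deterministic 64-bit hash (a stand-in, not the hash Ceph uses)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


def moved_fraction(num_keys: int, old_devices: int, new_devices: int) -> float:
    """Fraction of keys whose device changes under plain `hash % n` placement."""
    moved = 0
    for i in range(num_keys):
        h = stable_hash(f"obj-{i}")
        if h % old_devices != h % new_devices:
            moved += 1
    return moved / num_keys


if __name__ == "__main__":
    # Growing from 9 to 10 devices relocates most objects under this rule.
    print(f"{moved_fraction(10_000, 9, 10):.1%} of objects move")
```

Under this rule roughly nine objects in ten are relocated, whereas an ideal placement would move only about one tenth of the data onto the new device. This gap is what CRUSH is designed to avoid.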


2.1. Introduction to CRUSH algorithm

Ceph uses the CRUSH algorithm to distribute object replicas efficiently across a hierarchical storage cluster. CRUSH implements a pseudo-random (deterministic) function that takes an object id or object group id as its parameter and returns a set of storage devices (the OSDs that store the object replicas). The CRUSH algorithm requires a Cluster Map, which describes the hierarchical structure of the storage cluster, and a replica distribution policy (rule) [6].

Thus, the CRUSH algorithm determines the distribution of all data in the cluster. How uniformly data is stored across the nodes, and how little data has to be migrated, are important criteria for evaluating a distributed storage system.

2.2. Influence factors of CRUSH algorithm

There are two factors that affect the results of the

CRUSH algorithm. One is the structure of Cluster Map, and

the other is CRUSH Rule.

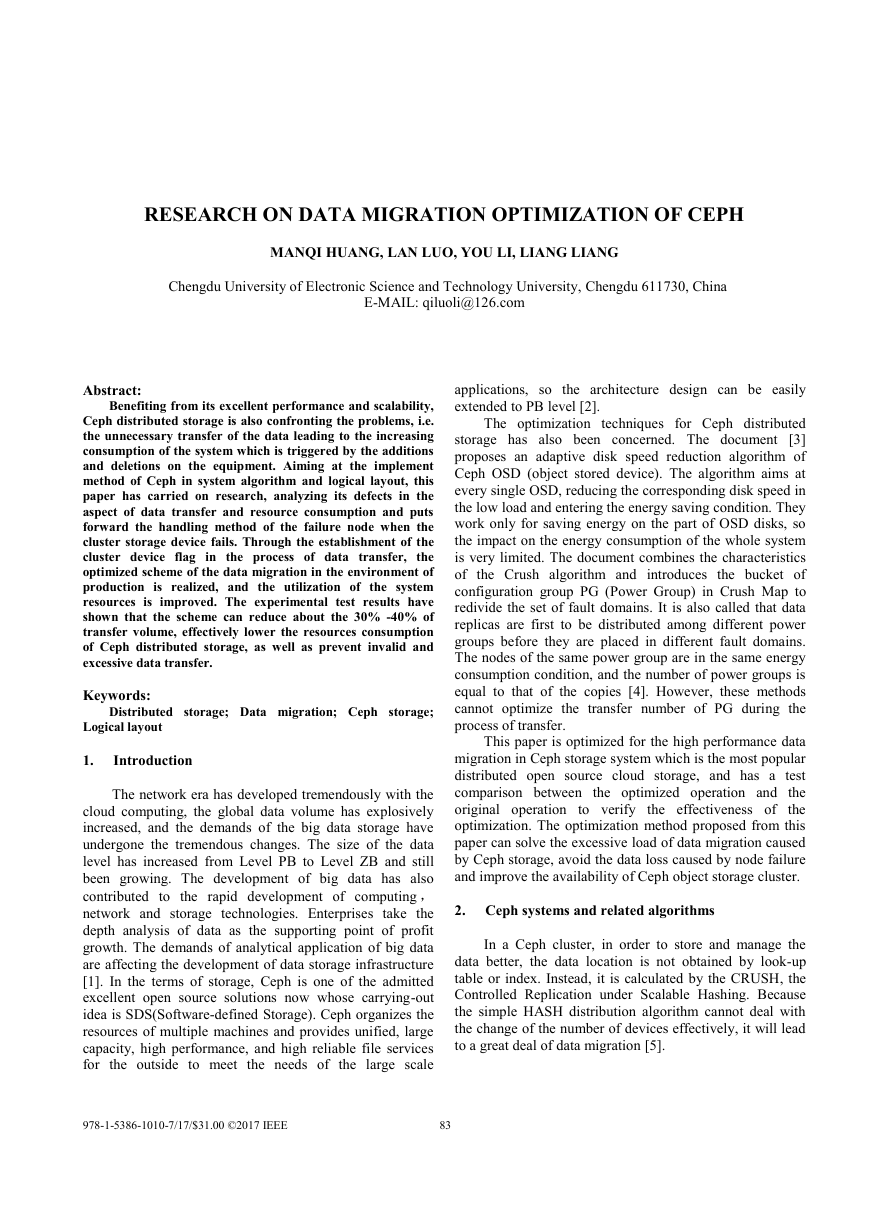

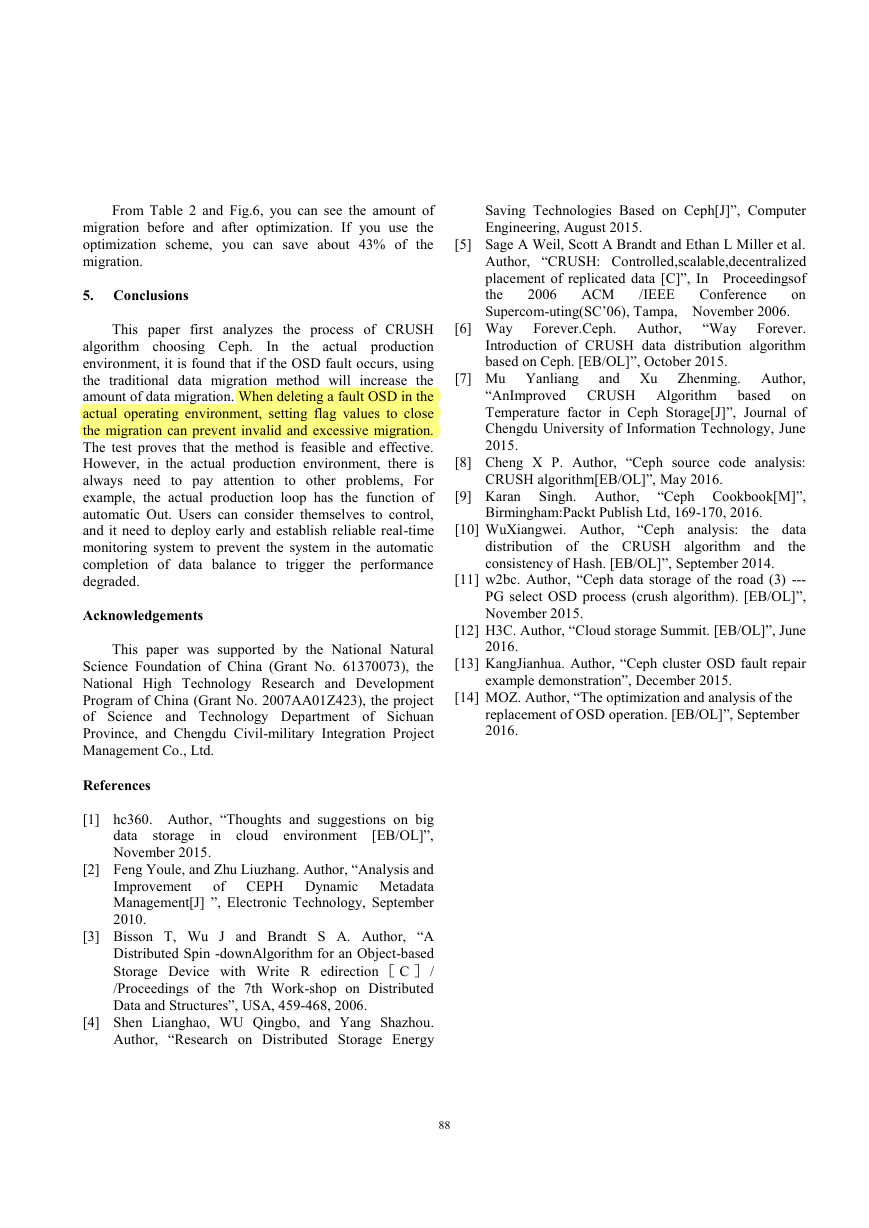

The Cluster Map manages all the OSDs in the current Ceph cluster and defines the range within which the CRUSH algorithm selects OSDs. The Cluster Map is a tree. Its leaf nodes represent devices (OSDs); the other nodes are called buckets, which are virtual nodes that can be abstracted from the physical structure. The tree has a single root node, and the intermediate buckets can represent data centers, machine rooms, racks, and hosts. Each bucket has a weight equal to the sum of the weights of all its child nodes. The weight of a leaf node is determined by the capacity of the OSD; generally, 1 TB corresponds to a weight of 1. This weight also plays an important role in the CRUSH algorithm. The data layout of OSDs selected through CRUSH is shown in Fig.1 [7].

Fig.1 Cluster Map structure

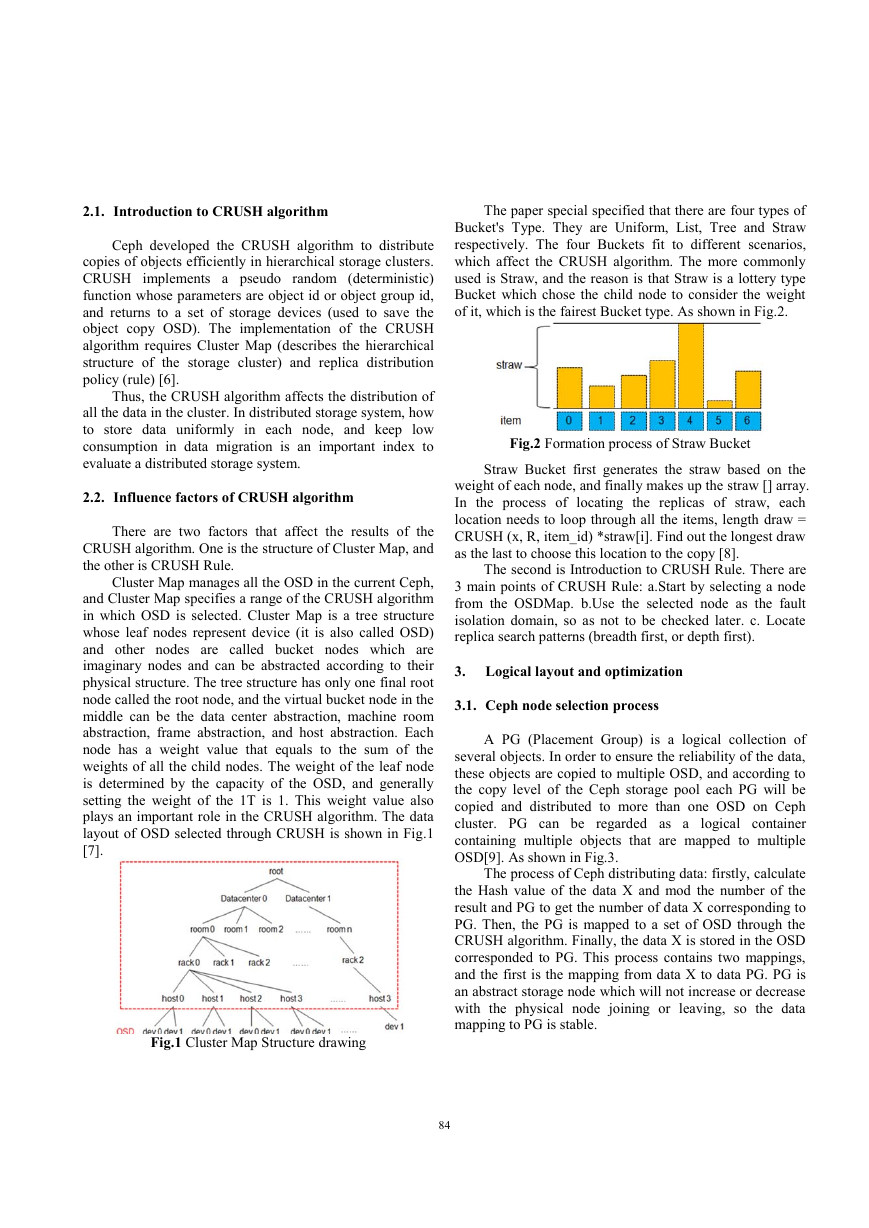

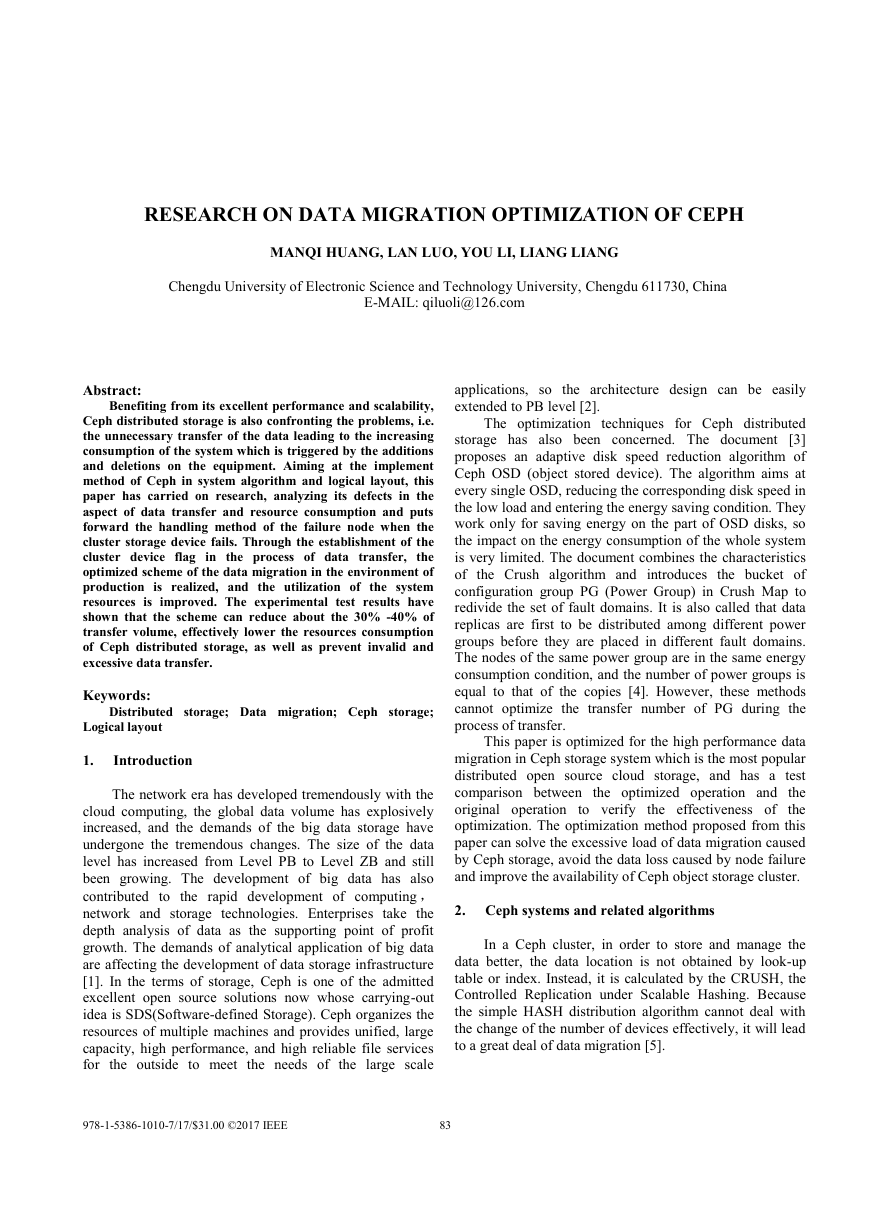
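The weight rule of the Cluster Map (a leaf weight follows OSD capacity, a bucket weight is the sum of its children) can be sketched as a small tree structure. The `Node` class and the two-host layout below are hypothetical and only illustrate the rule; they do not reproduce Ceph's data structures.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A Cluster Map node: a leaf OSD or a bucket (host, rack, root, ...)."""
    name: str
    capacity_tb: float = 0.0  # only used for leaf OSDs
    children: List["Node"] = field(default_factory=list)

    def weight(self) -> float:
        # Leaf: the weight follows the capacity (1 TB -> weight 1).
        if not self.children:
            return self.capacity_tb
        # Bucket: the weight is the sum of the weights of all child nodes.
        return sum(child.weight() for child in self.children)


# Hypothetical two-host cluster, for illustration only.
host0 = Node("host0", children=[Node("osd.0", 1.0), Node("osd.1", 1.0)])
host1 = Node("host1", children=[Node("osd.2", 2.0), Node("osd.3", 1.0)])
root = Node("default", children=[host0, host1])

print(host0.weight(), host1.weight(), root.weight())  # 2.0 3.0 5.0
```

Removing an OSD with a non-zero weight changes the weight of every bucket above it, which is why the order of removal steps matters for migration later in the paper.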

The CRUSH paper specifies four bucket types: Uniform, List, Tree, and Straw. The four types suit different scenarios and affect the behavior of the CRUSH algorithm. Straw is the most commonly used, because it is a lottery-style bucket that takes the weight of each child node into account when choosing among them, which makes it the fairest bucket type, as shown in Fig.2.

Fig.2 Formation process of Straw Bucket

A Straw bucket first generates a straw length from the weight of each child node, and these lengths make up the straw[] array. When locating a replica, the bucket loops over all of its items and computes a draw for each one: draw = CRUSH(x, r, item_id) * straw[i]. The item with the longest draw is chosen as the location of the replica [8].
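The draw described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `crush_hash` is a SHA-256 stand-in for the hash used by CRUSH, and the straw lengths are hypothetical values rather than lengths derived from real weights.

```python
import hashlib
from typing import Dict


def crush_hash(x: int, r: int, item_id: int) -> float:
    """Stand-in for CRUSH's hash: a deterministic value between 0 and 1."""
    digest = hashlib.sha256(f"{x}:{r}:{item_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def straw_choose(x: int, r: int, straw: Dict[int, float]) -> int:
    """Return the item whose draw = crush_hash(x, r, item) * straw[item] is longest."""
    return max(straw, key=lambda item: crush_hash(x, r, item) * straw[item])


# Hypothetical straw lengths; in Ceph they are derived from the item weights.
straw = {0: 1.0, 1: 1.0, 2: 4.0}
wins = {item: 0 for item in straw}
for x in range(2000):
    wins[straw_choose(x, 0, straw)] += 1
print(wins)  # the item with the longest straw wins most often
```

Because every item draws independently, changing one item's straw only moves data to or from that item, which is the property that keeps migration low.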

The second factor is the CRUSH Rule. A CRUSH Rule has three main points:

a. The node in the OSDMap from which selection starts.

b. The fault isolation domain: a node that has already been selected in this domain is not selected again later.

c. The search mode used to locate replicas (breadth first or depth first).

3. Logical layout and optimization

3.1. Ceph node selection process

A PG (Placement Group) is a logical collection of objects. To ensure data reliability, each PG is replicated and distributed to more than one OSD in the Ceph cluster, according to the replication level of the Ceph storage pool. A PG can therefore be regarded as a logical container that holds multiple objects and is mapped to multiple OSDs [9], as shown in Fig.3.

Ceph distributes data as follows. First, it computes the hash of data item X and takes the result modulo the number of PGs to obtain the PG to which X belongs. Then, the PG is mapped to a set of OSDs by the CRUSH algorithm. Finally, X is stored on the OSDs that correspond to that PG. This process contains two mappings. The first is the mapping from data X to a PG. A PG is an abstract storage node that does not increase or decrease as physical nodes join or leave, so the mapping from data to PG is stable.
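The first mapping, from a data item to a PG, can be sketched as follows. The hash is a SHA-256 stand-in (the hash function Ceph actually uses differs), `object_to_pg` is a hypothetical name, and the PG count of 664 is taken from the test environment described later.

```python
import hashlib

PG_NUM = 664  # number of PGs used in the tests of this paper


def object_to_pg(object_name: str, pg_num: int = PG_NUM) -> int:
    """First mapping: hash the object name and take it modulo the PG count."""
    digest = hashlib.sha256(object_name.encode()).digest()
    return int.from_bytes(digest[:4], "big") % pg_num


pg = object_to_pg("X")
print(pg)  # a PG number in [0, 664); the value depends on the stand-in hash
```

The result depends only on the object name and the PG count, not on the number of OSDs, which is why this mapping stays stable when devices are added or removed.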


Fig.3 The logical diagram of PG

In the mapping (osd0, osd1, osd2, …, osdn) = CRUSH(x), the PG plays two roles. The first is to partition the data: every PG manages a data range of the same size, so the data is distributed evenly over the PGs. The second is to act as a token that determines the location of the partition [10].

When OSDs are selected for a PG, the rule first specifies the node in the Cluster Map from which the search starts; the entry point defaults to default, which is the root node. The isolation domain is the host node, which means that two child nodes cannot be selected under the same host [11]. On the way from default to a host, each bucket selects its next child node according to its bucket type, and the descent continues until a host is chosen; an OSD is then selected under that host, as shown in Fig.4.

The selection process for mapping a PG (x0) is as follows:

1. rep=0, r=0: c(root, x0, 0) = host0; c(host0, x0, 0) = OSD.0, ok

2. rep=1, r=1: c(root, x0, 1) = host2; c(host2, x0, 1) = OSD.8, ok

3. rep=2, r=2: c(root, x0, 2) = host1; c(host1, x0, 2) = OSD.3, ok

Eventually, the PG is mapped to [0, 8, 3].
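The descent from the root to a host and then to an OSD, with the host as isolation domain, can be sketched as below. This is a simplified illustration, not Ceph's implementation: the hash is a SHA-256 stand-in, all items have equal weight, the three-host layout is assumed from the OSD numbers in the example, and `map_pg` and `max_tries` are hypothetical names.

```python
import hashlib
from typing import Dict, List

# Assumed layout: three hosts with three OSDs each.
HOSTS: Dict[str, List[str]] = {
    "host0": ["OSD.0", "OSD.1", "OSD.2"],
    "host1": ["OSD.3", "OSD.4", "OSD.5"],
    "host2": ["OSD.6", "OSD.7", "OSD.8"],
}


def draw(x: int, r: int, name: str) -> int:
    """Deterministic pseudo-random draw for (x, r, item); stand-in hash."""
    digest = hashlib.sha256(f"{x}:{r}:{name}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def choose(x: int, r: int, candidates: List[str]) -> str:
    """Pick the candidate with the largest draw (all weights equal here)."""
    return max(candidates, key=lambda name: draw(x, r, name))


def map_pg(x: int, replicas: int, hosts: Dict[str, List[str]],
           max_tries: int = 50) -> List[str]:
    """Select one OSD per host until `replicas` OSDs have been chosen."""
    used_hosts: List[str] = []
    osds: List[str] = []
    for r in range(max_tries):
        if len(osds) == replicas:
            break
        host = choose(x, r, sorted(hosts))
        if host in used_hosts:
            continue  # isolation domain: never two OSDs under the same host
        used_hosts.append(host)
        osds.append(choose(x, r, hosts[host]))
    return osds


print(map_pg(0, 3, HOSTS))  # three OSDs, each under a different host
```

When a selected host is rejected, the sketch simply retries with the next value of r, which mirrors the "drop this node and select again" behavior discussed in the next subsection.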

3.2. Problems arise

During operation, the Ceph OSD daemon checks the heartbeat of each OSD and reports to the Ceph Monitor. If the OSD of a node fails, the Monitor sets the state of that OSD to Down.

When the data on OSD.0 is corrupted, we would expect only the data of OSD.0 to be migrated. However, because Ceph keeps three replicas for redundancy, when OSD.0 is Down an additional OSD has to be selected on host0, and the data is then copied from one of the remaining replicas (OSD.3, OSD.8). In addition, the data of other PGs on OSD.3 and OSD.8 may also migrate, which increases the migration volume by an additional 30% to 70%. In the extreme case, when OSD.0 is Down and OSD.8 serves as Primary, two new OSDs are selected, which causes a large data load. If no suitable OSD is found, CRUSH drops this node and selects again, because Choose does not take the following steps into account when it selects a bucket and commits to its choice immediately. This calls for optimizing the node replacement procedure.

Previously, a faulty node in a Ceph cluster was replaced as follows.

Fig.4 Ceph structure diagram


a) Stop the OSD process: /etc/init.d/ceph stop osd.0

b) Mark the node status as Out: ceph osd out osd.0

This tells the Monitor that the node is out of service and that its data needs to be restored on other OSDs.

c) Remove the node from CRUSH: ceph osd crush remove osd.0

This makes the cluster recalculate CRUSH once; otherwise the CRUSH weight of the node would still count towards the CRUSH weight of its host.

d) Delete the node: ceph osd rm osd.0

This removes the record of the node from the cluster.

e) Delete the node's authentication (otherwise its number stays occupied): ceph auth del osd.0

This removes the information about the node from authentication.

The above operations trigger two migrations, one after the OSD is marked Out and the other after the CRUSH remove, and two migrations are costly for the cluster.

3.3. Configuration optimization

a) Set several flags on the cluster to prevent migration.

norebalance: with this flag set, the Ceph cluster does not perform any rebalancing.

nobackfill: with this flag set, the Ceph cluster does not perform any data backfill.

norecover: with this flag set, the Ceph cluster does not perform any data recovery [9].

Remark: these flags are removed again in a later step.

b) Use CRUSH reweight to set the weight of the specified OSD to 0.

Stop the OSD process to tell the cluster that this OSD no longer maps data and no longer serves requests. Because the OSD has no weight, it does not affect the overall distribution, and no migration occurs.

c) Use CRUSH remove on the specified OSD.

Delete the specified OSD from CRUSH. Because its weight is already 0, removing it has no impact on the host's weight, so no migration occurs.

Delete the node: ceph osd rm osd.0

This deletes the record of the node from the cluster.

d) Add the new OSD.

e) Remove the flags.

Because the intermediate states are only marked and no data migration occurs, data is migrated only after the flags are lifted [14].

4. Application and test

The basic environment consists of 3 nodes. Each node has 3 OSDs (50 GB each). The number of replicas is set to 3, and the number of PGs is set to 664.

4.1. Application test

4.1.1. Original method

set noout
# ceph osd set noout
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > pg1.txt

stop osd process
# /etc/init.d/ceph stop osd.4

out osd
# ceph osd out 4

wait rebalance
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > pg2.txt
# diff -y -W 100 pg1.txt pg2.txt --suppress-common-lines
# diff -y -W 100 pg1.txt pg2.txt --suppress-common-lines|wc -l
531

remove crush and osd
# ceph osd crush remove osd.4
# ceph auth del osd.1
# ceph osd rm 1

wait rebalance
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > pg3.txt
# diff -y -W 100 pg2.txt pg3.txt --suppress-common-lines|wc -l
90

add osd
# ceph-deploy osd prepare --zap-disk Ceph1:/dev/vdd
# ceph-deploy osd activate-all

wait rebalance
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > pg4.txt
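The measurement used in these steps (dump the PG mapping before and after an operation, then count the lines that differ) can be sketched in Python. The sketch assumes that each line of the dump files holds a PG id followed by its OSD set, as produced by the `awk` filter above; the sample mappings and the function names are hypothetical.

```python
from typing import Dict


def parse_pg_dump(text: str) -> Dict[str, str]:
    """Parse lines of the form '<pgid> <osd set>' into a dictionary."""
    mapping: Dict[str, str] = {}
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2:
            mapping[parts[0]] = parts[1].strip()
    return mapping


def count_moved(before: Dict[str, str], after: Dict[str, str]) -> int:
    """Count PGs whose OSD set changed (or disappeared) between two dumps."""
    return sum(1 for pg, osds in before.items() if after.get(pg) != osds)


# Hypothetical sample data, for illustration only.
before = parse_pg_dump("0.0 [0,8,3]\n0.1 [1,4,7]\n0.2 [2,5,6]\n")
after = parse_pg_dump("0.0 [4,8,3]\n0.1 [1,4,7]\n0.2 [2,5,8]\n")
print(count_moved(before, after))  # 2
```

Counting per PG rather than per byte keeps the comparison between the two replacement procedures simple, at the cost of ignoring how much data each PG holds.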


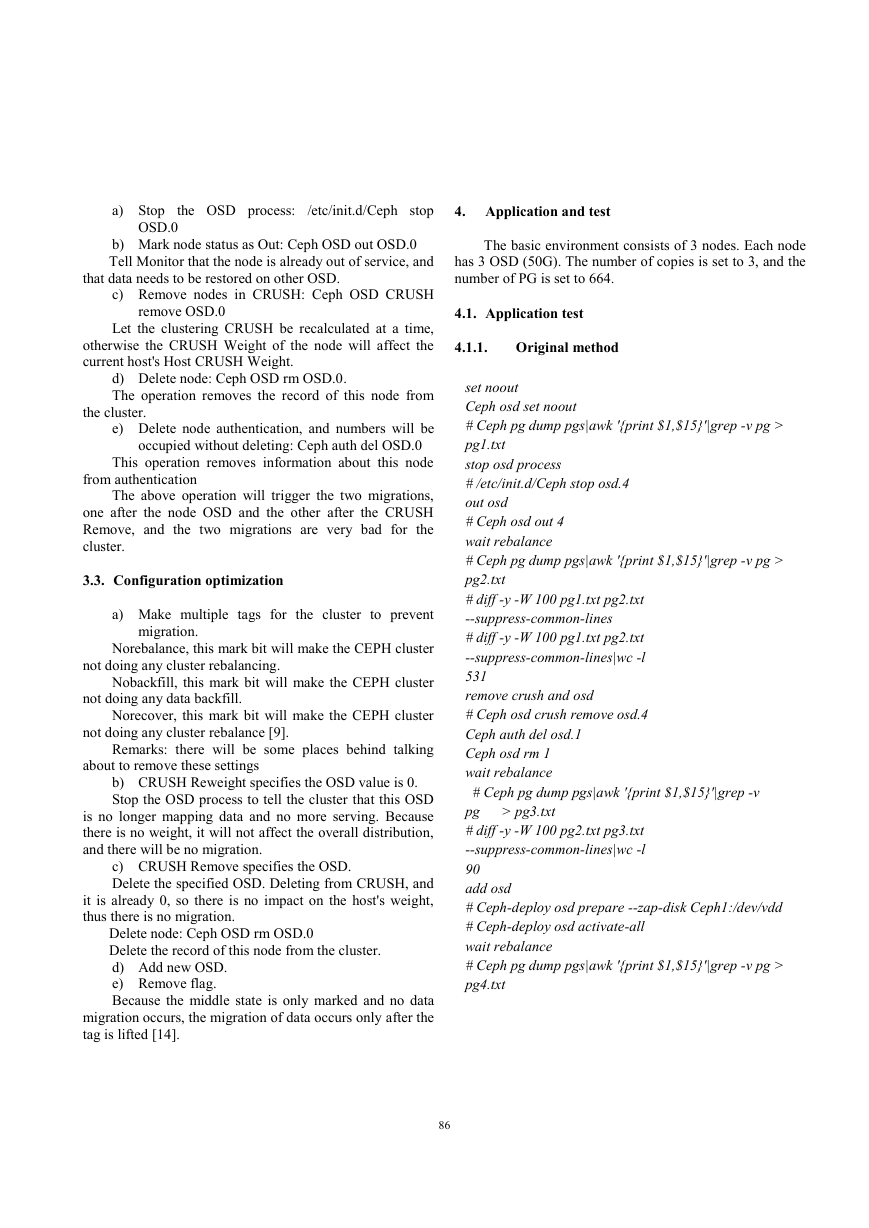

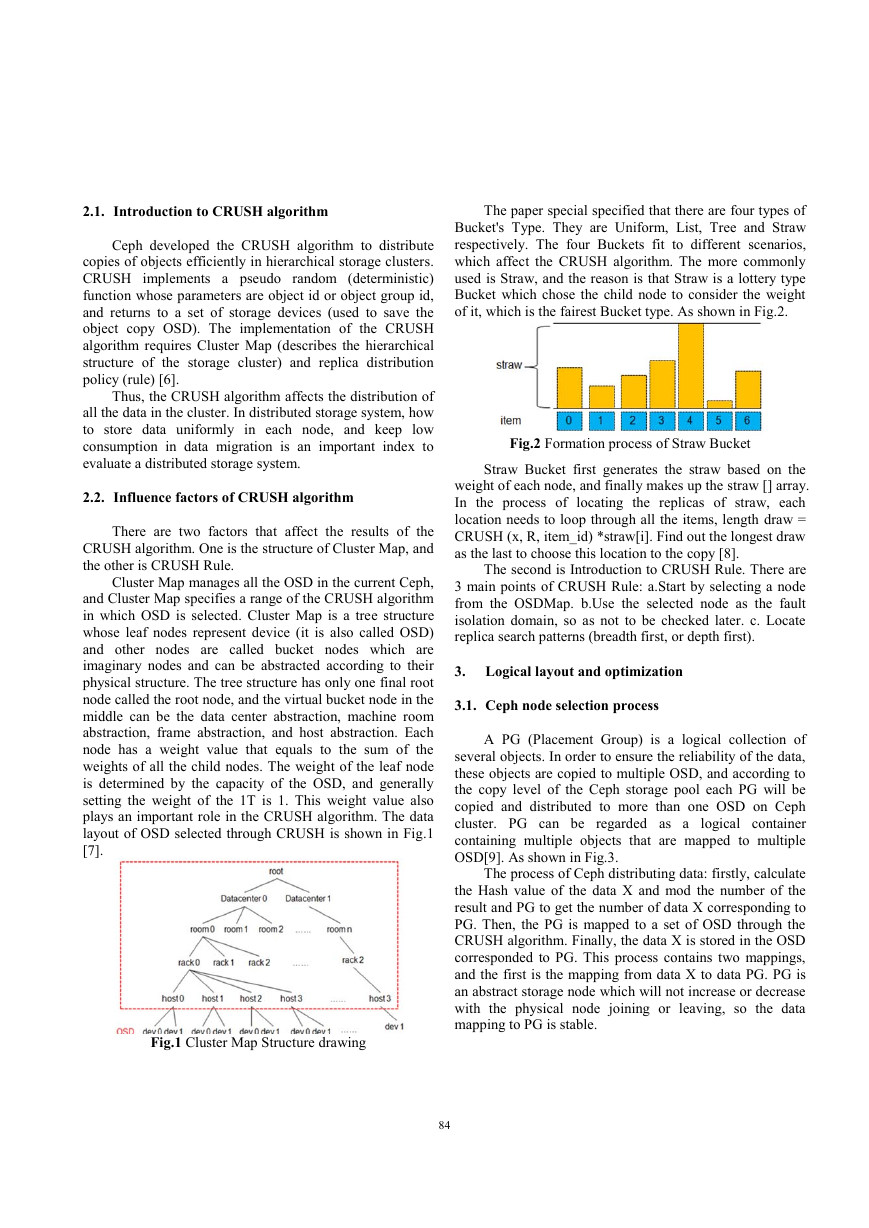

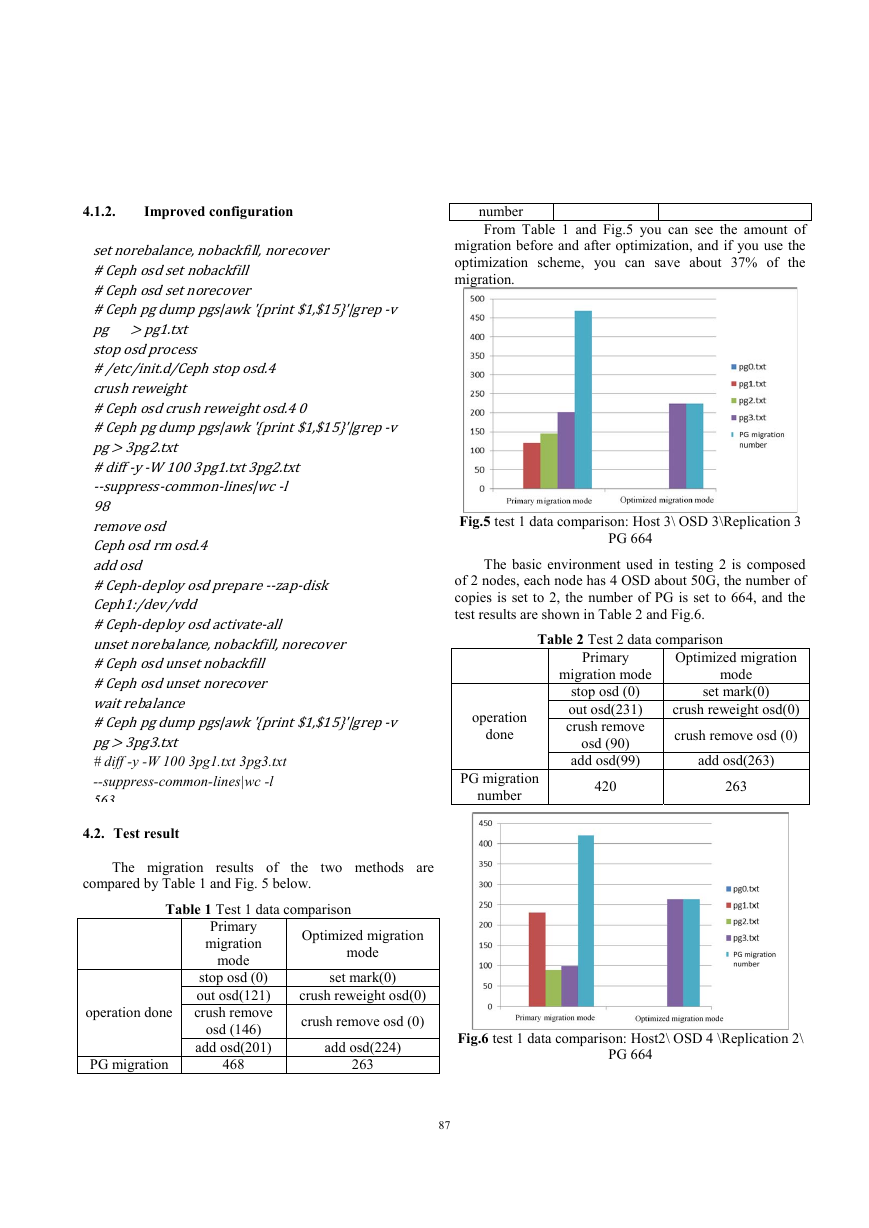

Table 1 and Fig.5 show the amount of migration before and after optimization. The optimized scheme saves about 37% of the migration.
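The savings quoted for the two tests can be checked against the total PG migration numbers reported in the comparison tables. The helper below is a minimal sketch with a hypothetical name; it is applied to the two pairs of totals that appear in the tables (420 versus 263, and 468 versus 263).

```python
def migration_saving(original_total: int, optimized_total: int) -> float:
    """Relative reduction in migrated PGs achieved by the optimized procedure."""
    return (original_total - optimized_total) / original_total


# Totals of the original method are the sums of its per-step migrations.
assert 0 + 231 + 90 + 99 == 420
assert 0 + 121 + 146 + 201 == 468

print(round(migration_saving(420, 263) * 100, 1))  # 37.4
print(round(migration_saving(468, 263) * 100, 1))  # 43.8
```

These values correspond to the savings of about 37% and about 43% stated in the text.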

Fig.5 Test 1 data comparison: Host 3\ OSD 3\ Replication 3\ PG 664

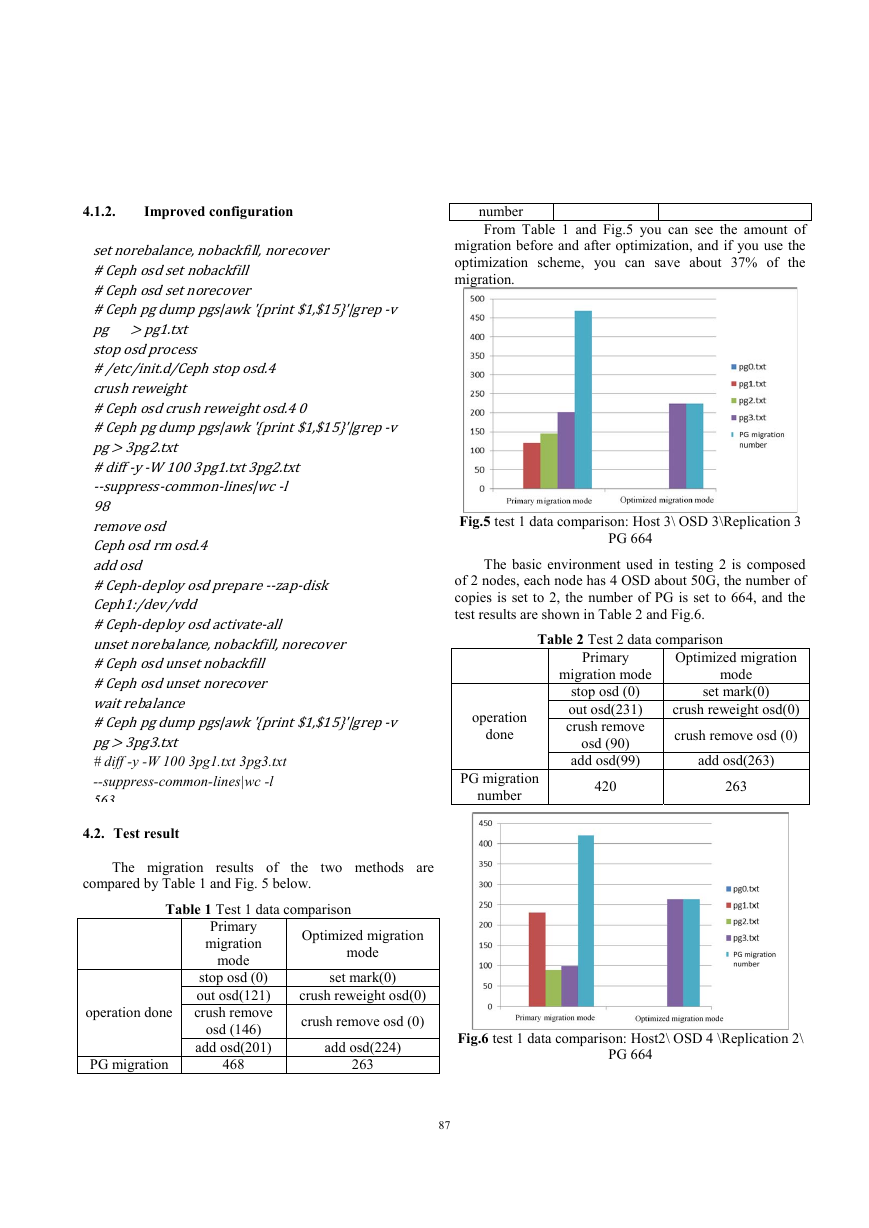

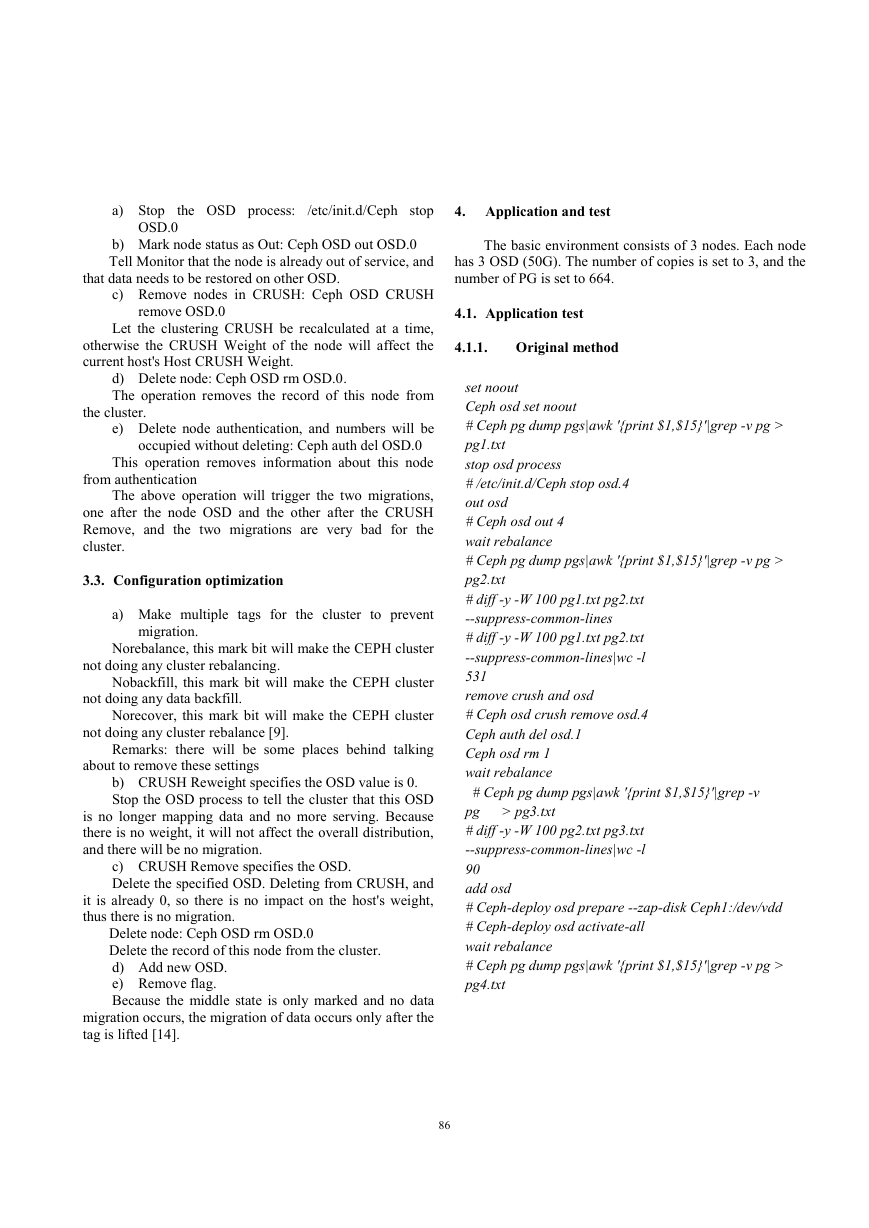

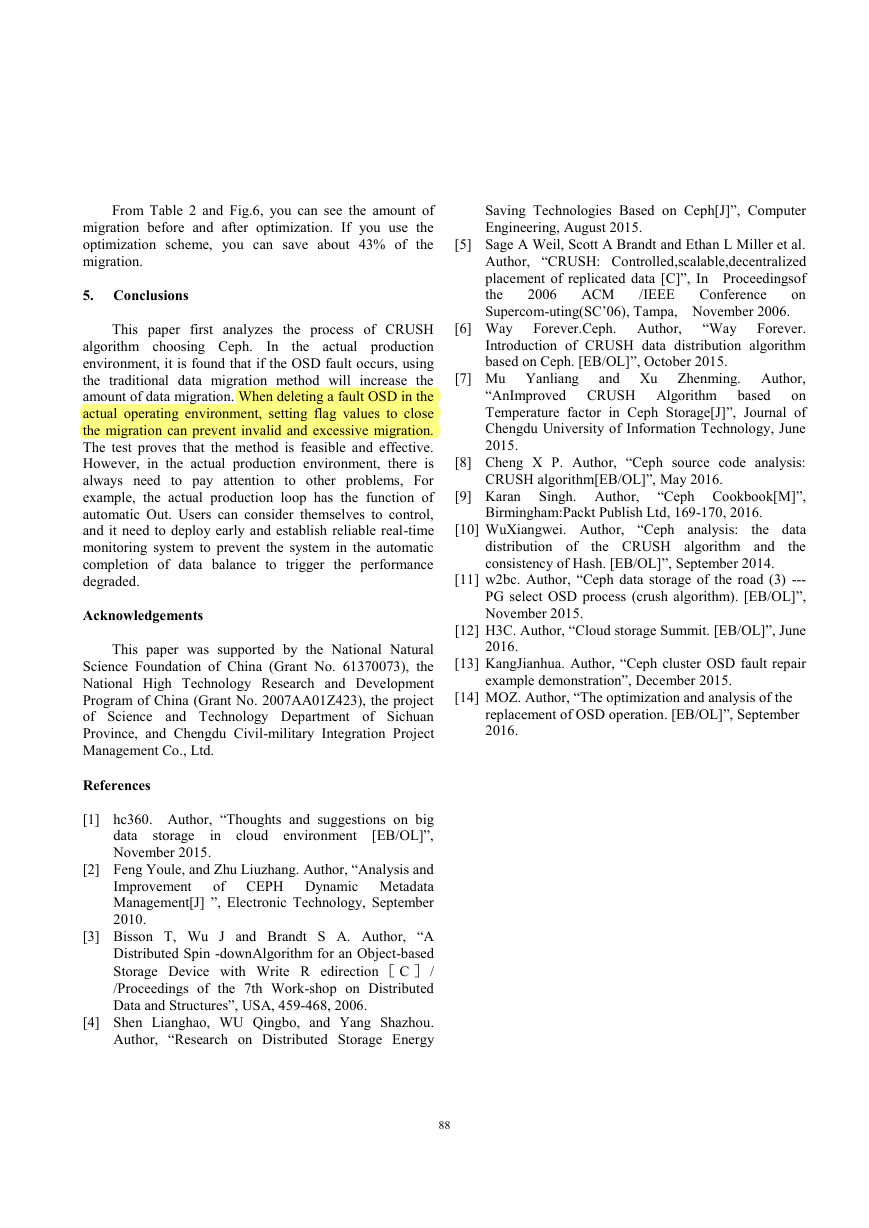

The basic environment used in test 2 consists of 2 nodes, each with 4 OSDs of about 50 GB. The number of replicas is set to 2, and the number of PGs is set to 664. The test results are shown in Table 2 and Fig.6.

Table 2 Test 2 data comparison

| | Primary migration mode | Optimized migration mode |
|---|---|---|
| Operation done | stop osd (0), out osd (231), crush remove osd (90), add osd (99) | set mark (0), crush reweight osd (0), crush remove osd (0), add osd (263) |
| PG migration number | 420 | 263 |

4.1.2. Improved configuration

set norebalance, nobackfill, norecover
# ceph osd set norebalance
# ceph osd set nobackfill
# ceph osd set norecover
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > 3pg1.txt

stop osd process
# /etc/init.d/ceph stop osd.4

crush reweight
# ceph osd crush reweight osd.4 0
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > 3pg2.txt
# diff -y -W 100 3pg1.txt 3pg2.txt --suppress-common-lines|wc -l
98

remove osd
# ceph osd rm osd.4

add osd
# ceph-deploy osd prepare --zap-disk Ceph1:/dev/vdd
# ceph-deploy osd activate-all

unset norebalance, nobackfill, norecover
# ceph osd unset norebalance
# ceph osd unset nobackfill
# ceph osd unset norecover

wait rebalance
# ceph pg dump pgs|awk '{print $1,$15}'|grep -v pg > 3pg3.txt
# diff -y -W 100 3pg1.txt 3pg3.txt --suppress-common-lines|wc -l
563
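The order of the optimized procedure can be captured in a small dry-run planner that only builds the list of commands and executes nothing. This is a sketch under stated assumptions: the function name and its parameters are hypothetical, the commands are those quoted in this paper, and the commands that add the replacement OSD are supplied by the caller because they depend on the deployment tool.

```python
from typing import List

FLAGS = ("norebalance", "nobackfill", "norecover")


def optimized_replace_plan(osd_id: int, add_commands: List[str]) -> List[str]:
    """Return the ordered commands for replacing one OSD with a single migration."""
    osd = f"osd.{osd_id}"
    plan = [f"ceph osd set {flag}" for flag in FLAGS]  # block all migration
    plan += [
        f"/etc/init.d/ceph stop {osd}",       # stop the OSD process
        f"ceph osd crush reweight {osd} 0",   # weight 0 before removal
        f"ceph osd crush remove {osd}",       # host weight is unchanged now
        f"ceph osd rm {osd}",                 # delete the node record
    ]
    plan += add_commands                       # add the replacement OSD
    plan += [f"ceph osd unset {flag}" for flag in FLAGS]  # migration starts here
    return plan


for command in optimized_replace_plan(4, ["ceph-deploy osd activate-all"]):
    print(command)
```

Printing the plan first makes it easy to review the sequence before running it by hand on a production cluster.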

4.2. Test result

The migration results of the two methods are compared in Table 1 and Fig.5 below.

Table 1 Test 1 data comparison

| | Primary migration mode | Optimized migration mode |
|---|---|---|
| Operation done | stop osd (0), out osd (121), crush remove osd (146), add osd (201) | set mark (0), crush reweight osd (0), crush remove osd (0), add osd (224) |
| PG migration number | 468 | 263 |


Fig.6 Test 2 data comparison: Host 2\ OSD 4\ Replication 2\ PG 664

Table 2 and Fig.6 show the amount of migration before and after optimization. The optimized scheme saves about 43% of the migration.

5. Conclusions

This paper first analyzes how the CRUSH algorithm selects OSDs in Ceph. In a real production environment, when an OSD fails, the traditional replacement method increases the amount of data migration. When a failed OSD is deleted in a real operating environment, setting flags to disable migration prevents invalid and excessive migration. The tests show that the method is feasible and effective. However, other issues also need attention in a production environment. For example, production clusters have an automatic Out function. Users may want to control it themselves, and a reliable real-time monitoring system should be deployed early to prevent automatic data rebalancing from degrading performance.

Acknowledgements

This paper was supported by the National Natural Science Foundation of China (Grant No. 61370073), the National High Technology Research and Development Program of China (Grant No. 2007AA01Z423), the project of Science and Technology Department of Sichuan Province, and Chengdu Civil-military Integration Project Management Co., Ltd.

References

[1] hc360, “Thoughts and suggestions on big data storage in cloud environment [EB/OL]”, November 2015.

[2] Feng Youle and Zhu Liuzhang, “Analysis and Improvement of CEPH Dynamic Metadata Management [J]”, Electronic Technology, September 2010.

[3] Bisson T, Wu J and Brandt S A, “A Distributed Spin-down Algorithm for an Object-based Storage Device with Write Redirection [C]”, Proceedings of the 7th Workshop on Distributed Data and Structures, USA, 459-468, 2006.

[4] Shen Lianghao, Wu Qingbo, and Yang Shazhou, “Research on Distributed Storage Energy Saving Technologies Based on Ceph [J]”, Computer Engineering, August 2015.

[5] Sage A Weil, Scott A Brandt, Ethan L Miller et al., “CRUSH: Controlled, scalable, decentralized placement of replicated data [C]”, In Proceedings of the 2006 ACM/IEEE Conference on Supercomputing (SC'06), Tampa, November 2006.

[6] Way Forever, “Way Forever. Ceph. Introduction of CRUSH data distribution algorithm based on Ceph. [EB/OL]”, October 2015.

[7] Mu Yanliang and Xu Zhenming, “An Improved CRUSH Algorithm based on Temperature factor in Ceph Storage [J]”, Journal of Chengdu University of Information Technology, June 2015.

[8] Cheng X P, “Ceph source code analysis: CRUSH algorithm [EB/OL]”, May 2016.

[9] Karan Singh, “Ceph Cookbook [M]”, Birmingham: Packt Publishing Ltd, 169-170, 2016.

[10] Wu Xiangwei, “Ceph analysis: the data distribution of the CRUSH algorithm and the consistency of Hash. [EB/OL]”, September 2014.

[11] w2bc, “Ceph data storage of the road (3) --- PG select OSD process (crush algorithm). [EB/OL]”, November 2015.

[12] H3C, “Cloud storage Summit. [EB/OL]”, June 2016.

[13] Kang Jianhua, “Ceph cluster OSD fault repair example demonstration”, December 2015.

[14] MOZ, “The optimization and analysis of the replacement of OSD operation. [EB/OL]”, September 2016.
