DNNDK User Guide

Revision History

Table of Contents

Ch. 1: Quick Start

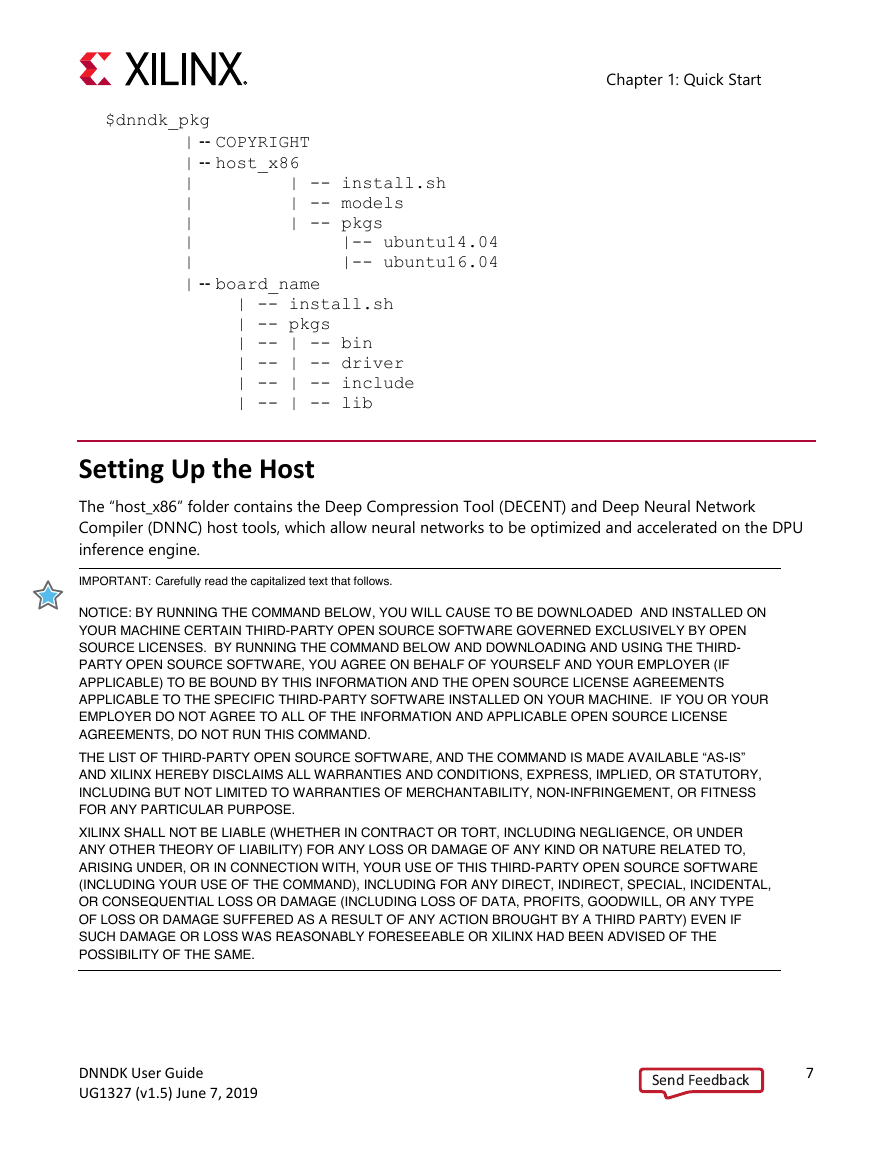

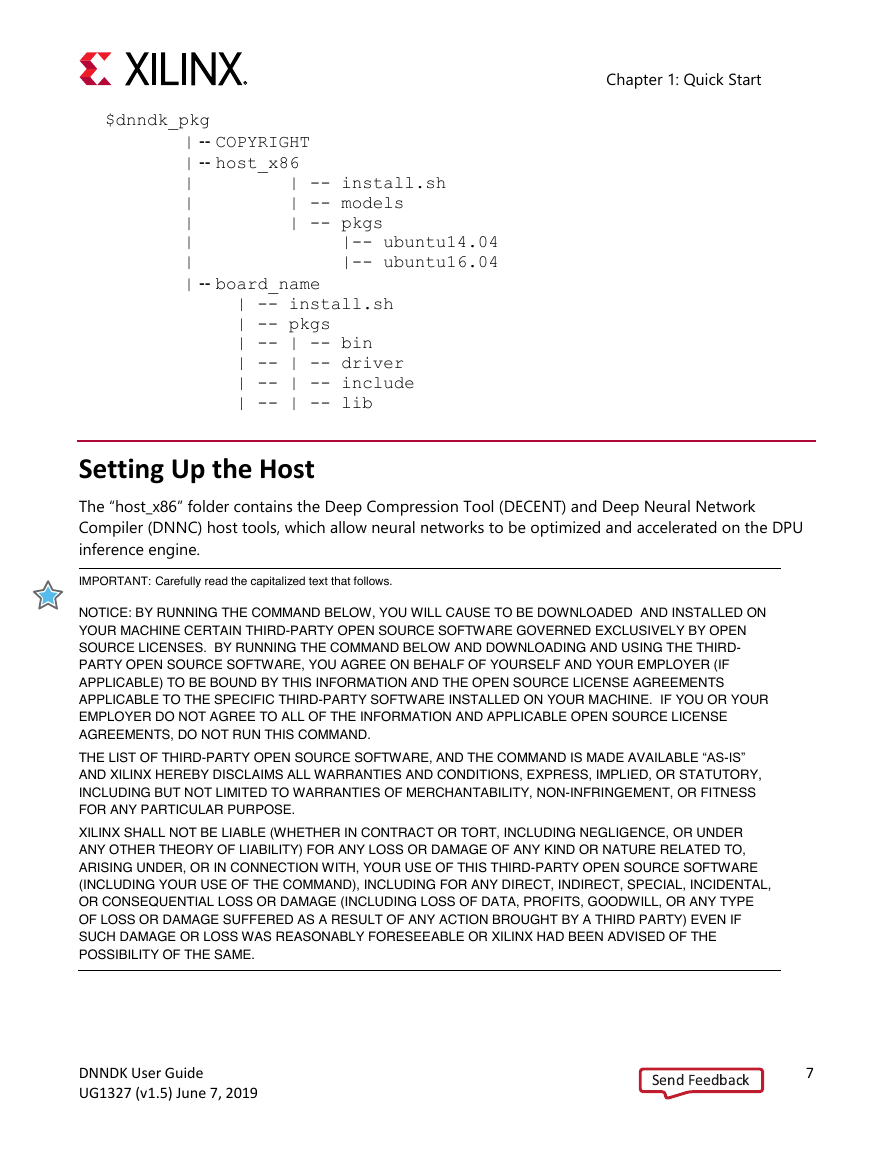

Downloading DNNDK

Setting Up the Host

Installing the GPU Platform Software

Caffe Version: Installing Dependent Libraries

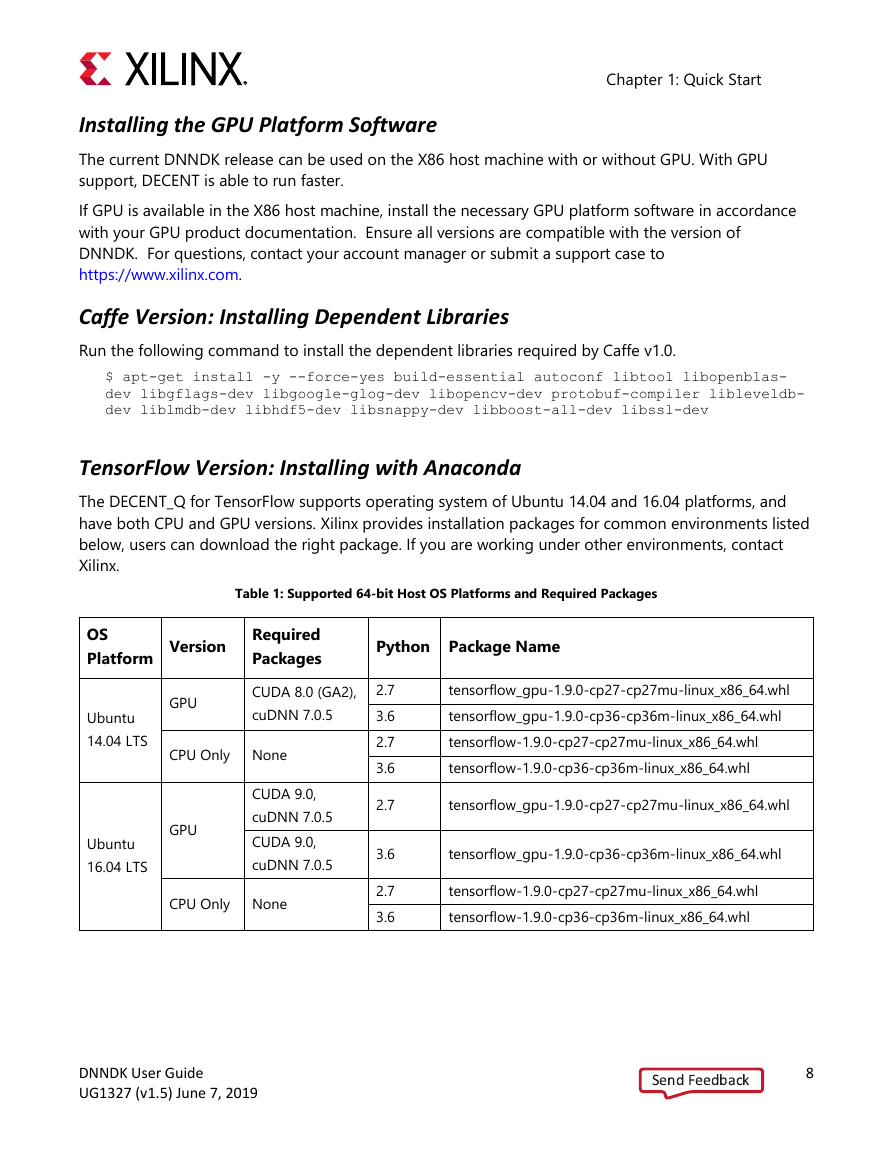

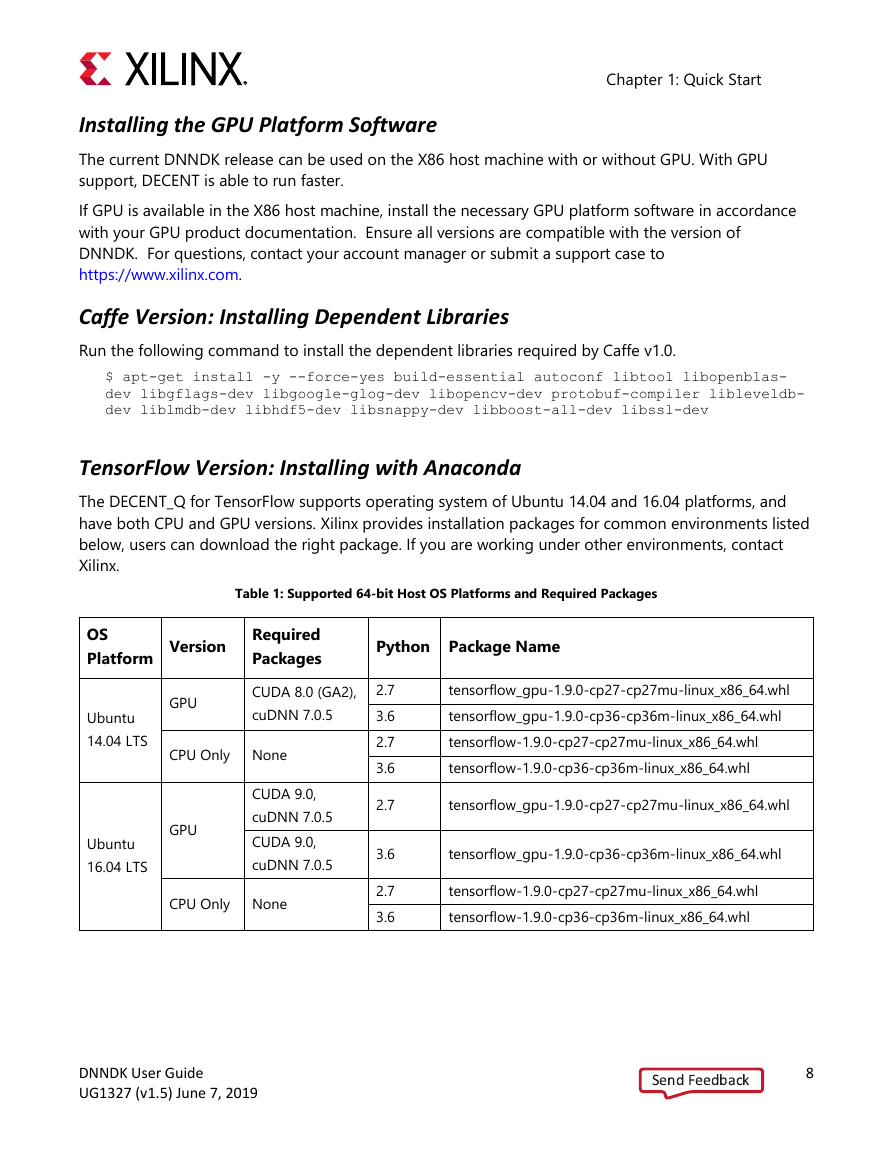

TensorFlow Version: Installing with Anaconda

Installing the DNNDK Host Tools

Setting Up the Evaluation Board

Supported Evaluation Boards

Setting Up the Ultra96 Board

Setting Up the ZCU102 Evaluation Board

Setting Up the ZCU104 Evaluation Board

Flashing the OS Image to the SD Card

Booting the Evaluation Board

Accessing the Evaluation Board

Configuring UART

Using the Ethernet Interface

Using the ZCU102 as a Standalone Embedded System

Copying DNNDK Tools to the Evaluation Board

Support

Ch. 2: Copyright and Version

Copyright

Version

Host Package

Ultra96 Board Version Information

ZCU102 Board Version Information

ZCU104 Board Version Information

Target Package

Ultra96 Component Versions

ZCU102 Component Versions

ZCU104 Component Versions

Ch. 3: Upgrading and Porting

Since v3.0

Toolchain Changes

DECENT

DNNC

Example Changes

Since v2.08

Toolchain Changes

DECENT

DNNC

Exception Handling Changes

API Changes

Example Changes

Since v2.07

Toolchain Changes

DNNC

Since v2.06

Toolchain Changes

DECENT

DNNC

DExplorer

Changes in API

Example Changes

Since v1.10

Toolchain Changes

DECENT

DNNC

DExplorer

DSight

Changes in API

New API

Changed API

Upgrading from Previous Versions

From v1.10 to v2.06

From v1.07 to v1.10

Ch. 4: DNNDK

Overview

Deep-Learning Processor Unit

DNNDK Framework

DECENT

DNNC

N2Cube

DNNAS

Profiler

Ch. 5: Network Deployment Overview

Overview

Network Compression (Caffe Version)

Network Compression (TensorFlow Version)

Network Compilation

Compiling Caffe ResNet-50

Compiling TensorFlow ResNet-50

Output Kernels

Programming with DNNDK

Compiling the Hybrid Executable

Running the Application

Ch. 6: Network Compression

DECENT Overview

DECENT Working Flow

DECENT (Caffe Version) Usage

DECENT (Caffe Version) Working Flow

Prepare the Neural Network Model

Run DECENT

Output

DECENT (TensorFlow Version) Usage

Preparing the Neural Network Model

How to Get the Frozen Graph

How to Get the Calibration Dataset and Input Function

Pre-Defined Input Function

Run DECENT_Q

Output

Ch. 7: Network Compilation

DNNC Overview

Using DNNC

DNNC Options

DNNC Compilation Mode

Compiling ResNet-50

Ch. 8: Programming with DNNDK

Programming Model

DPU Kernel

DPU Task

DPU Node

DPU Tensor

Programming Interface

Ch. 9: Hybrid Compilation

Ch. 10: Running

Ch. 11: Utilities

DExplorer

Check DNNDK version

Check DPU status

Configure DPU Running Mode

Normal Mode

Profile Mode

Debug Mode

DPU Signature

DSight

Ch. 12: Programming APIs

Library libn2cube

Overview

APIs

dpuOpen()

dpuClose()

dpuLoadKernel()

dpuDestroyKernel()

dpuCreateTask()

dpuDestroyTask()

dpuRunTask()

dpuEnableTaskProfile()

dpuEnableTaskDump()

dpuGetTaskProfile()

dpuGetNodeProfile()

dpuGetInputTensorCnt()

dpuGetInputTensor()

dpuGetInputTensorAddress()

dpuGetInputTensorSize()

dpuGetInputTensorScale()

dpuGetInputTensorHeight()

dpuGetInputTensorWidth()

dpuGetInputTensorChannel()

dpuGetOutputTensorCnt()

dpuGetOutputTensor()

dpuGetOutputTensorAddress()

dpuGetOutputTensorSize()

dpuGetOutputTensorScale()

dpuGetOutputTensorHeight()

dpuGetOutputTensorWidth()

dpuGetOutputTensorChannel()

dpuGetTensorSize()

dpuGetTensorScale()

dpuGetTensorHeight()

dpuGetTensorWidth()

dpuGetTensorChannel()

dpuSetInputTensorInCHWInt8()

dpuSetInputTensorInCHWFP32()

dpuSetInputTensorInHWCInt8()

dpuSetInputTensorInHWCFP32()

dpuGetOutputTensorInCHWInt8()

dpuGetOutputTensorInCHWFP32()

dpuGetOutputTensorInHWCInt8()

dpuGetOutputTensorInHWCFP32()

dpuRunSoftmax()

dpuSetExceptionMode()

dpuGetExceptionMode()

dpuGetExceptionMessage()

Library libdputils

Overview

APIs

dpuSetInputImage()

dpuSetInputImage2()

dpuSetInputImageWithScale()

Appx. A: Legal Notices

Please Read: Important Legal Notices
