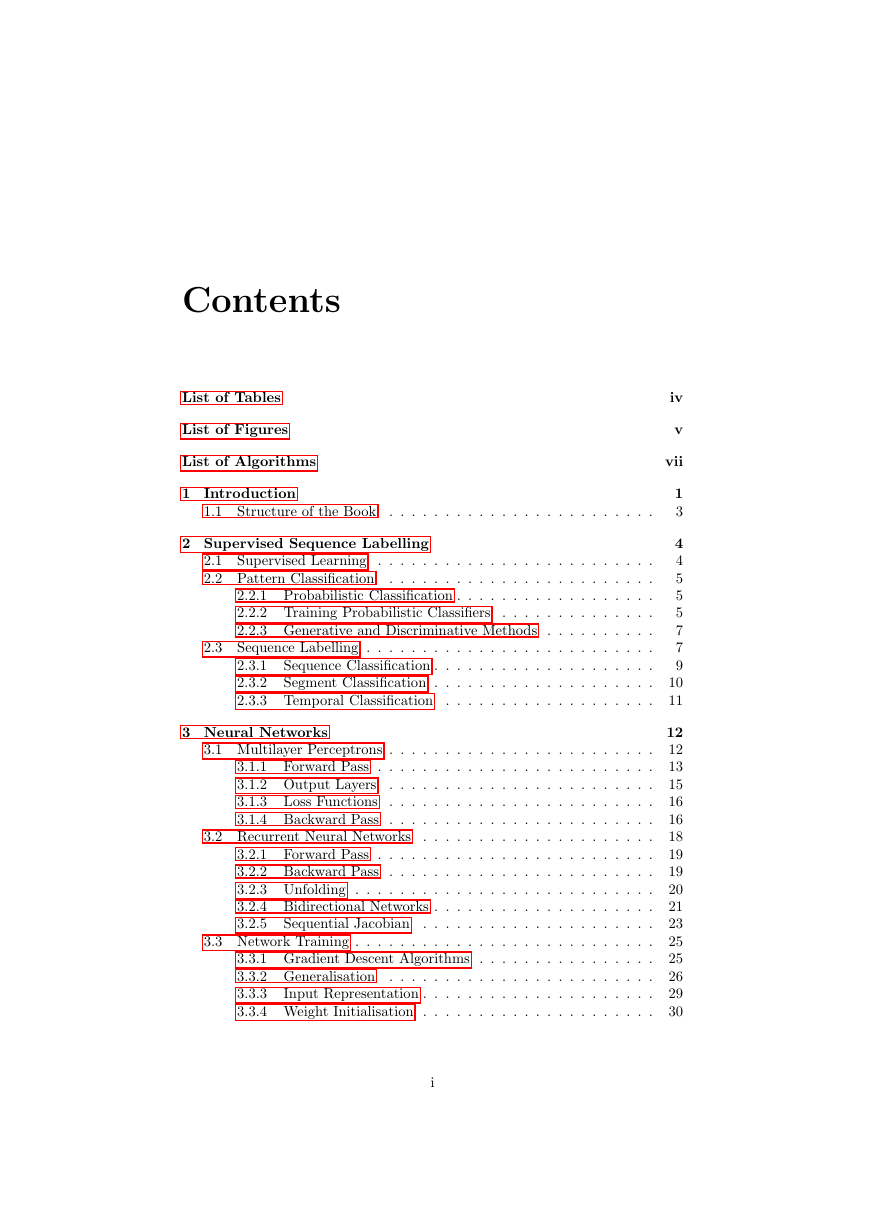

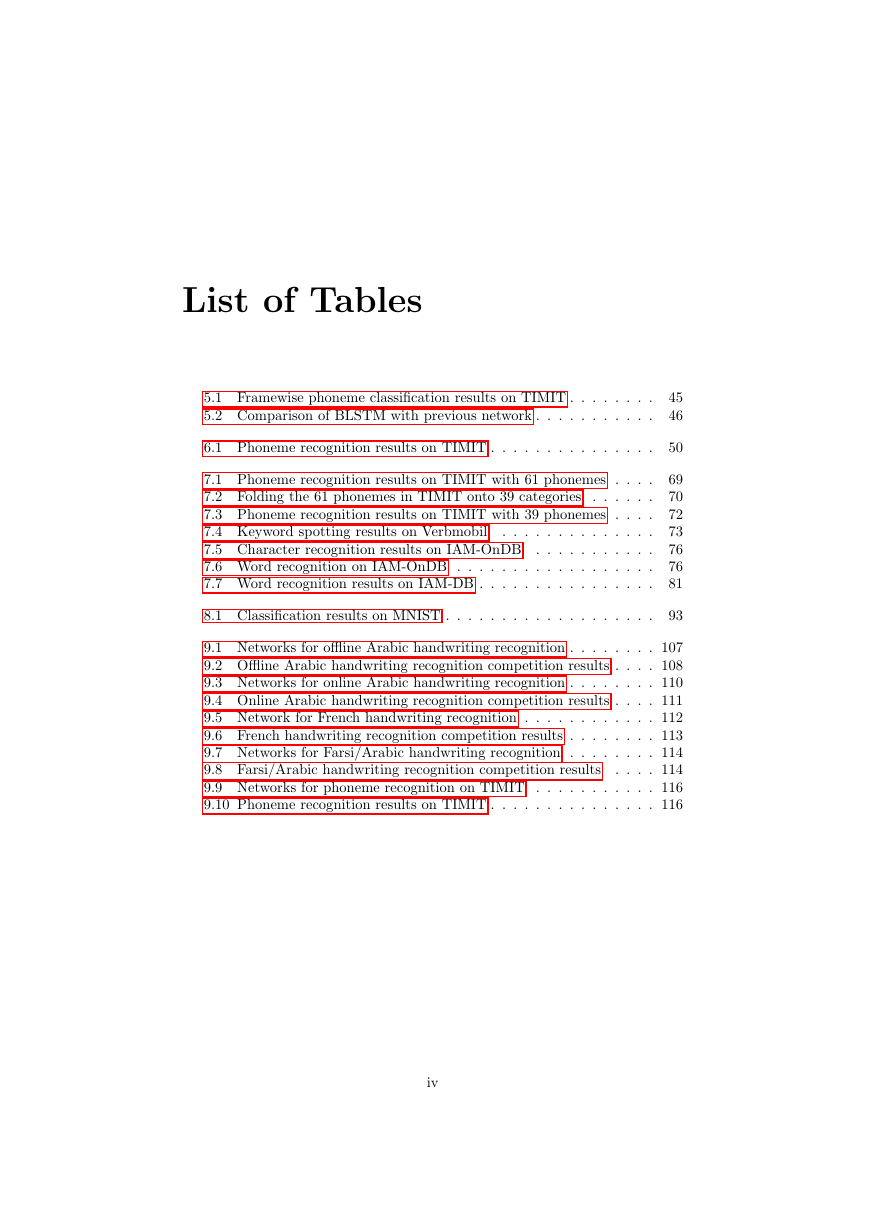

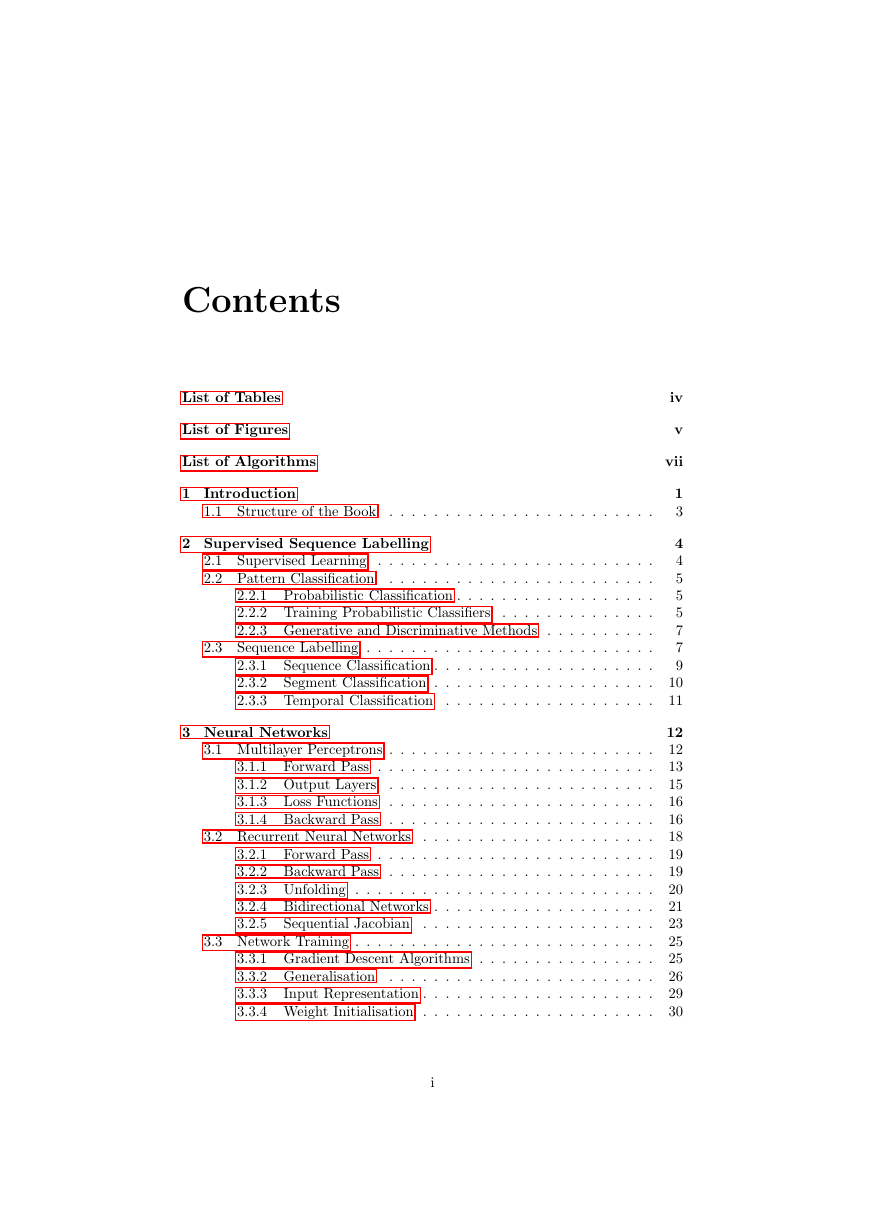

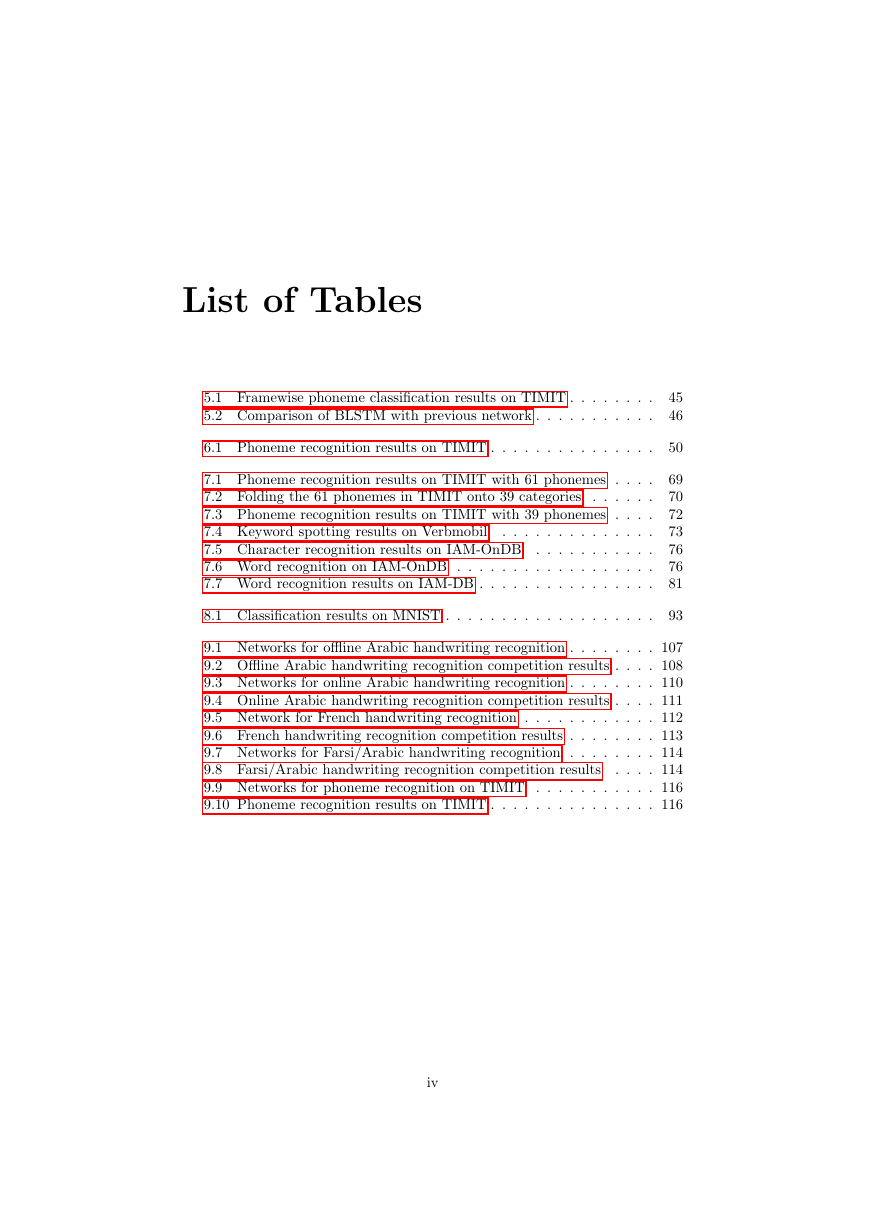

List of Tables

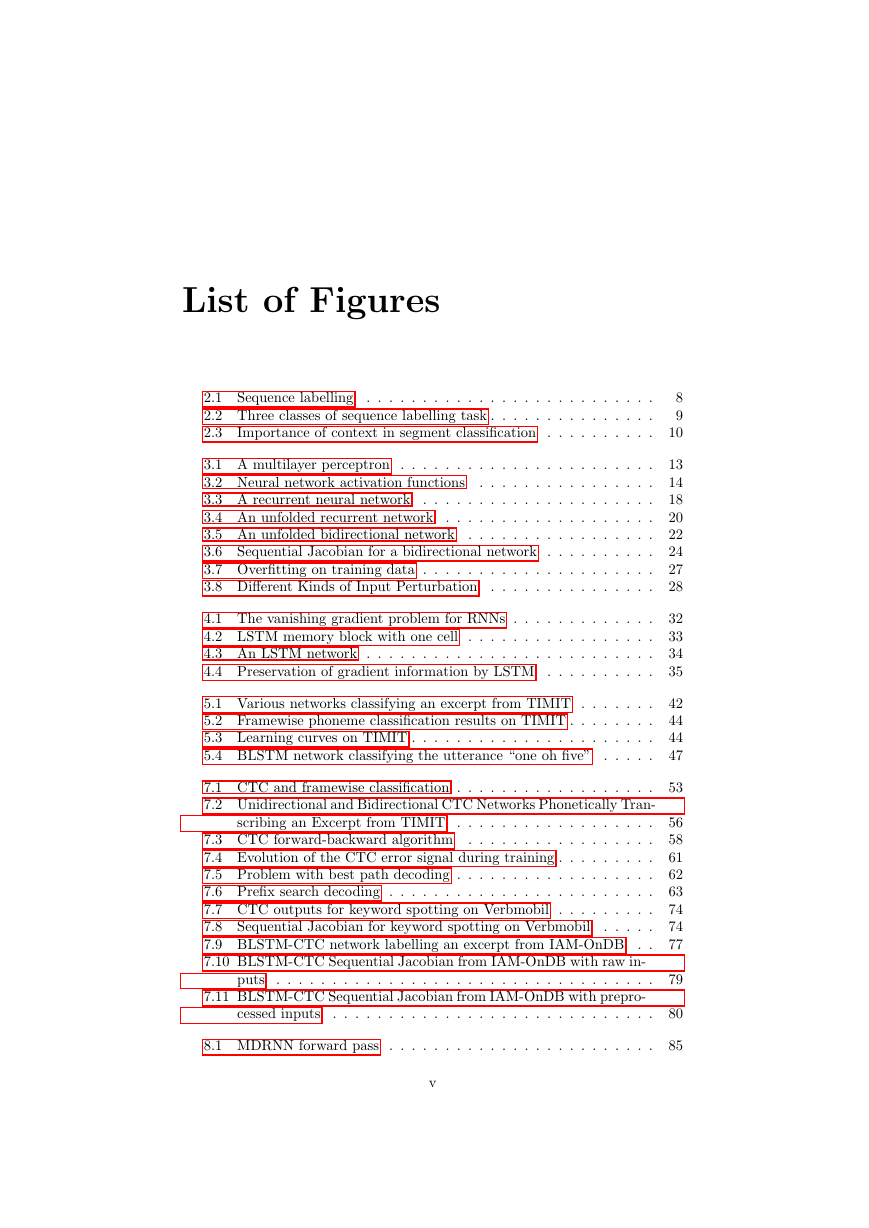

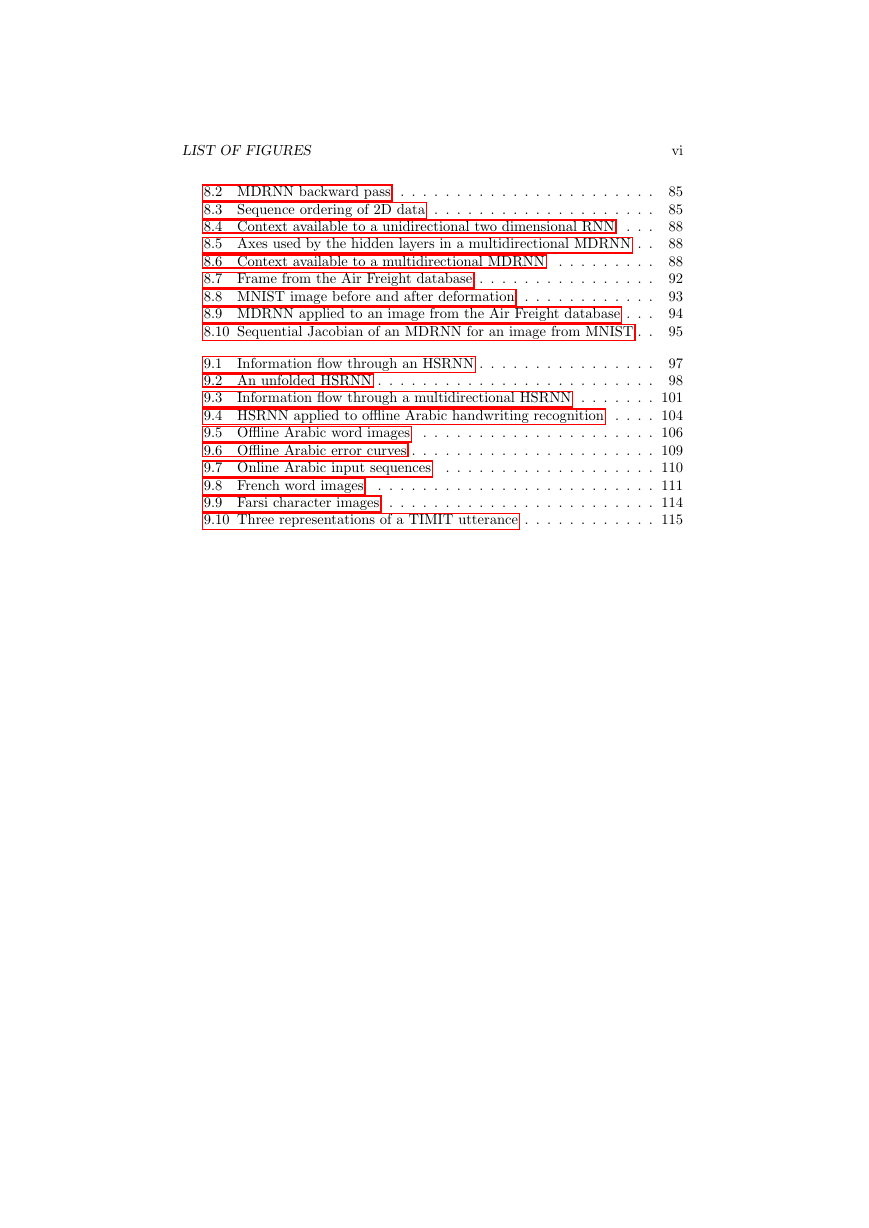

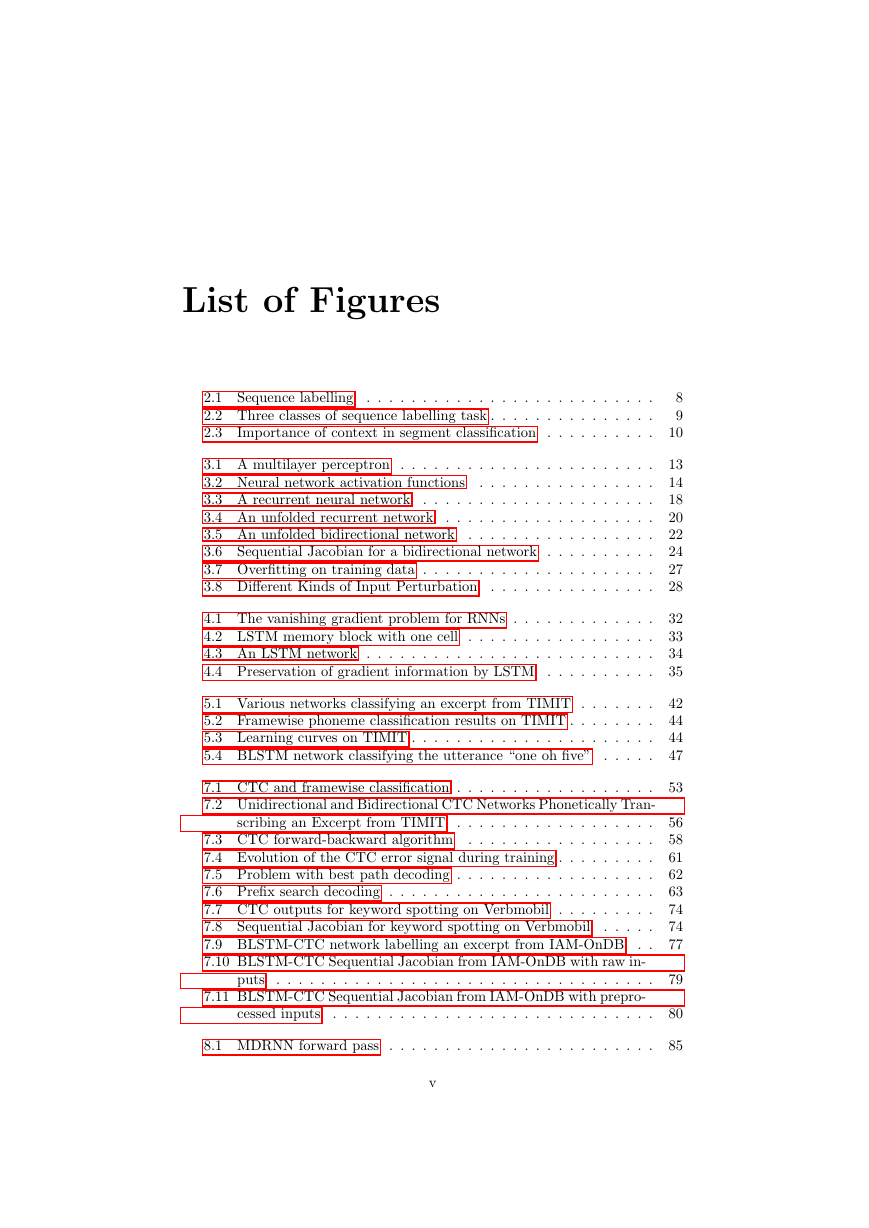

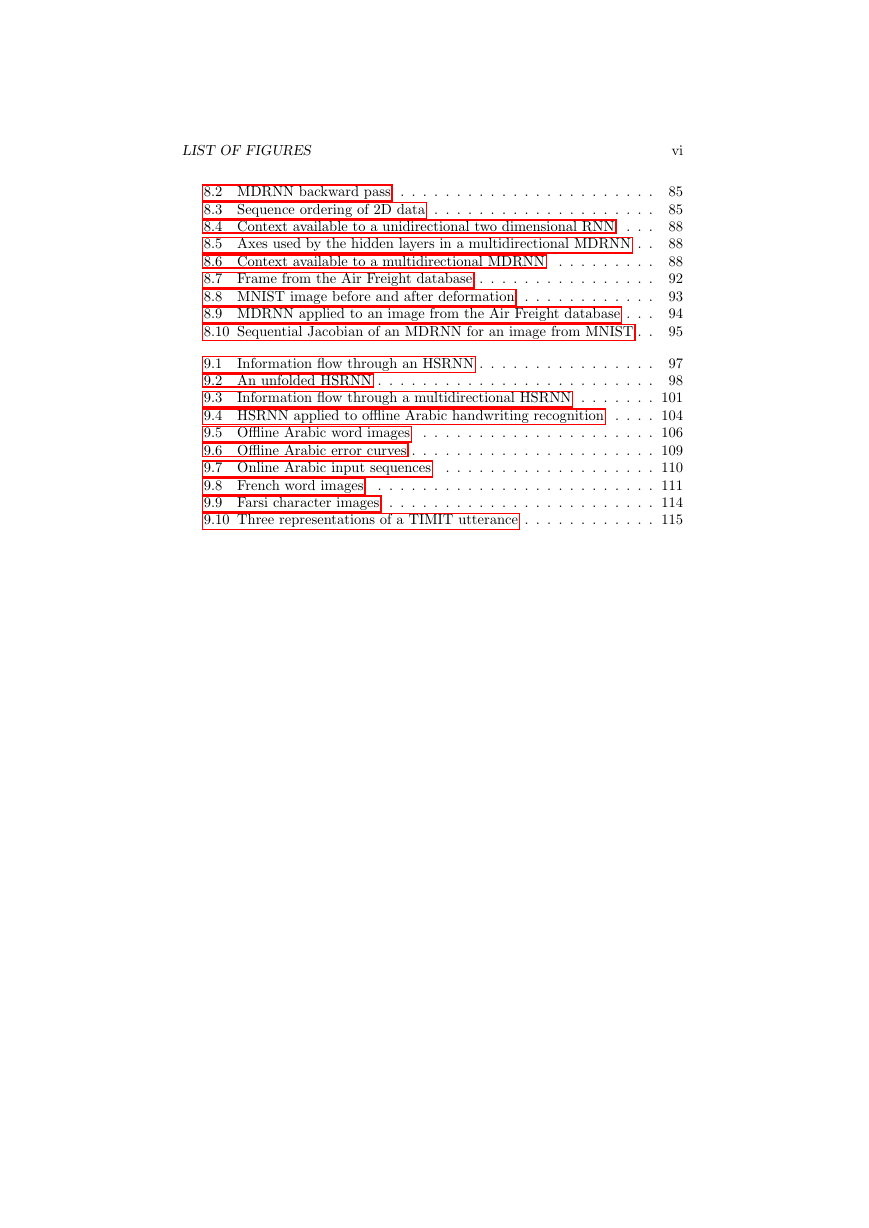

List of Figures

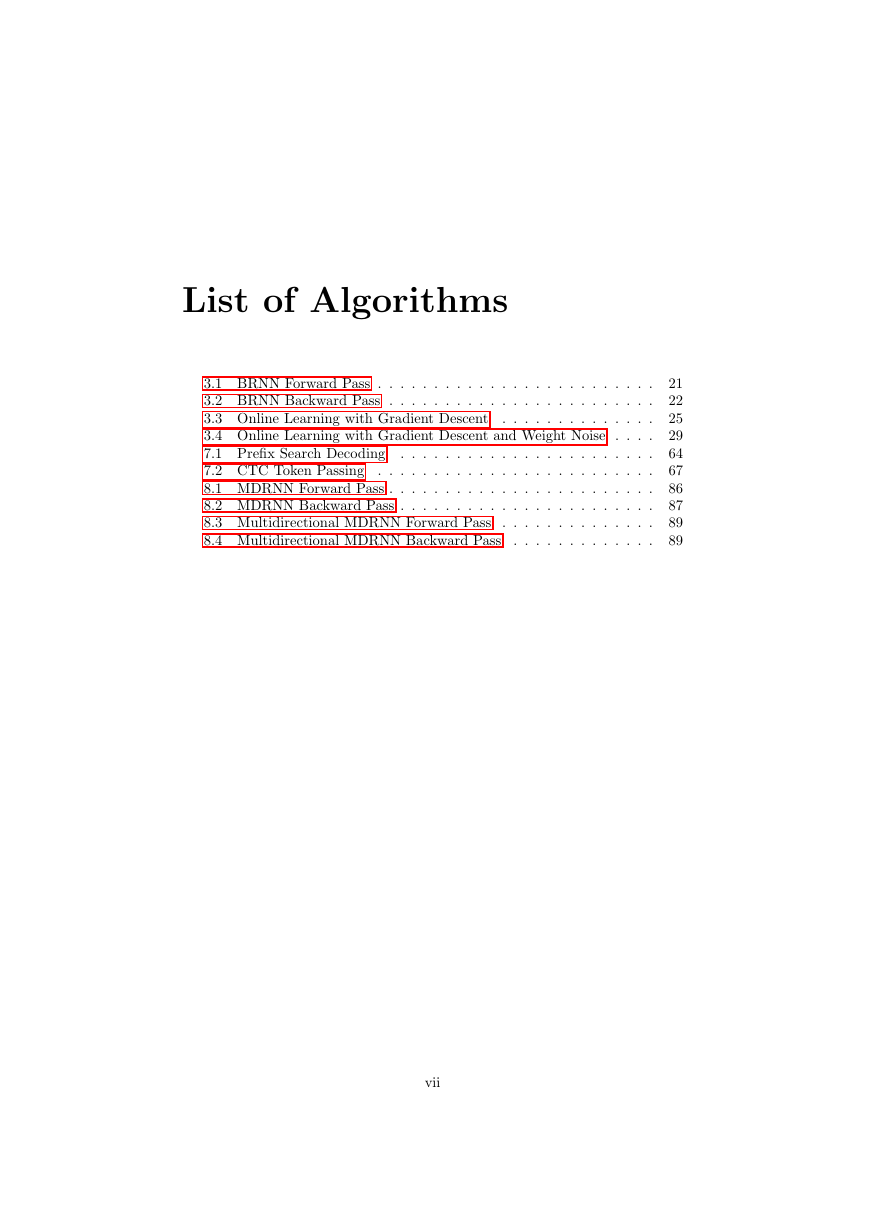

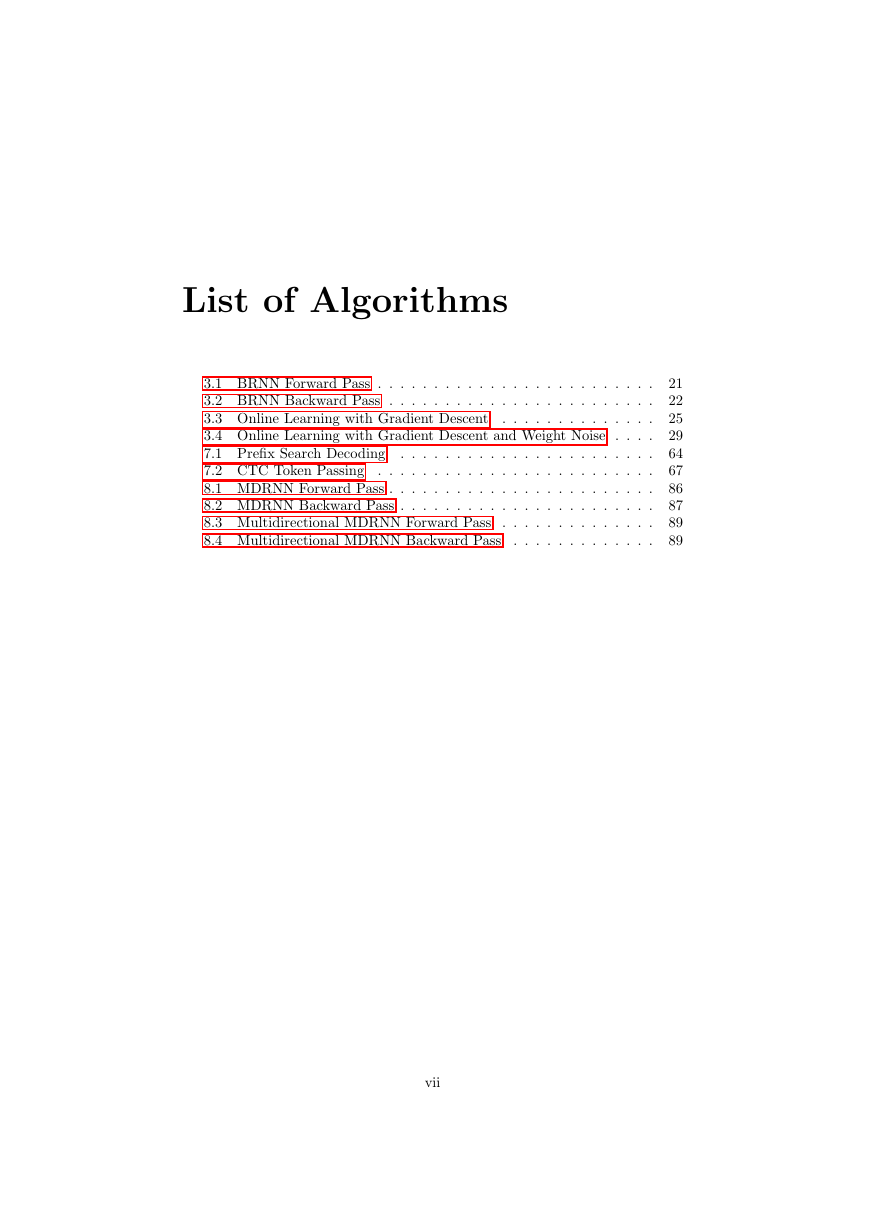

List of Algorithms

Introduction

Structure of the Book

Supervised Sequence Labelling

Supervised Learning

Pattern Classification

Probabilistic Classification

Training Probabilistic Classifiers

Generative and Discriminative Methods

Sequence Labelling

Sequence Classification

Segment Classification

Temporal Classification

Neural Networks

Multilayer Perceptrons

Forward Pass

Output Layers

Loss Functions

Backward Pass

Recurrent Neural Networks

Forward Pass

Backward Pass

Unfolding

Bidirectional Networks

Sequential Jacobian

Network Training

Gradient Descent Algorithms

Generalisation

Input Representation

Weight Initialisation

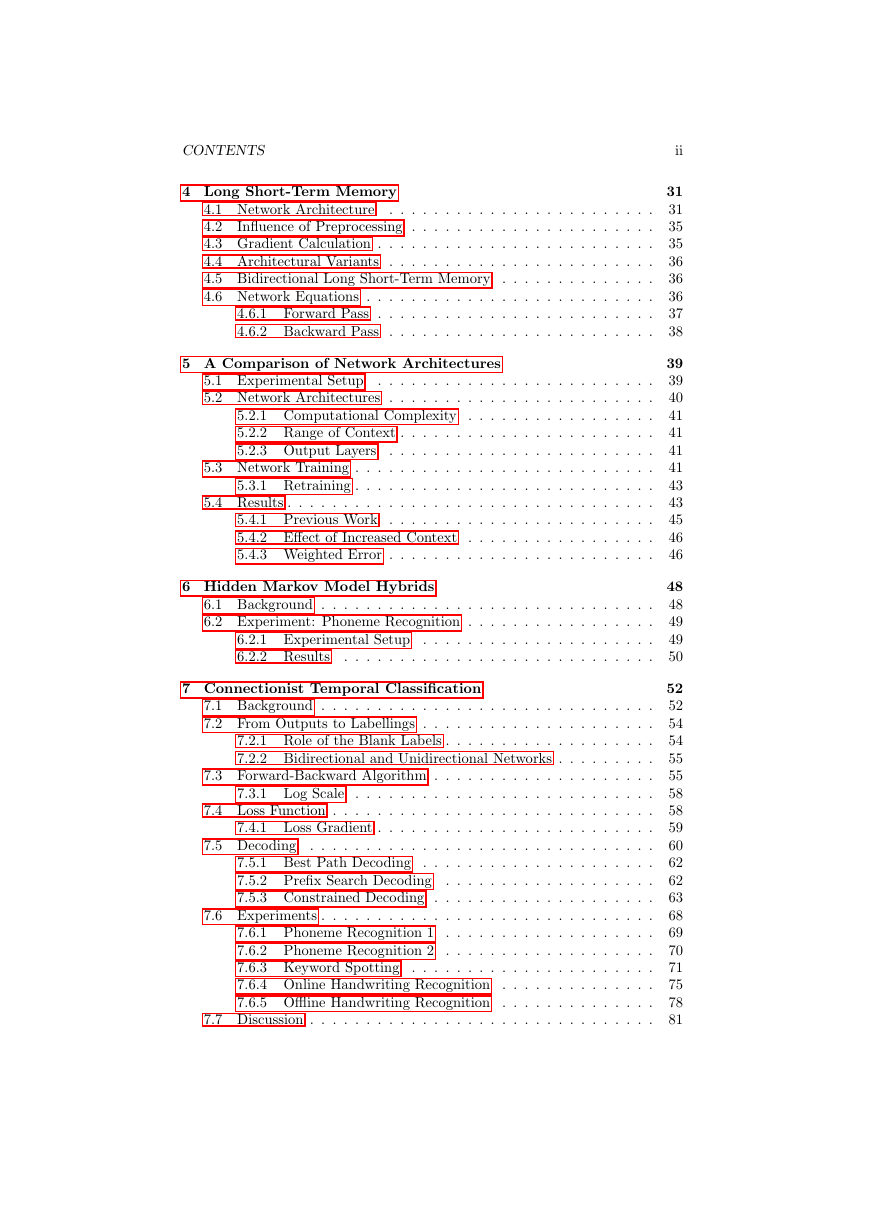

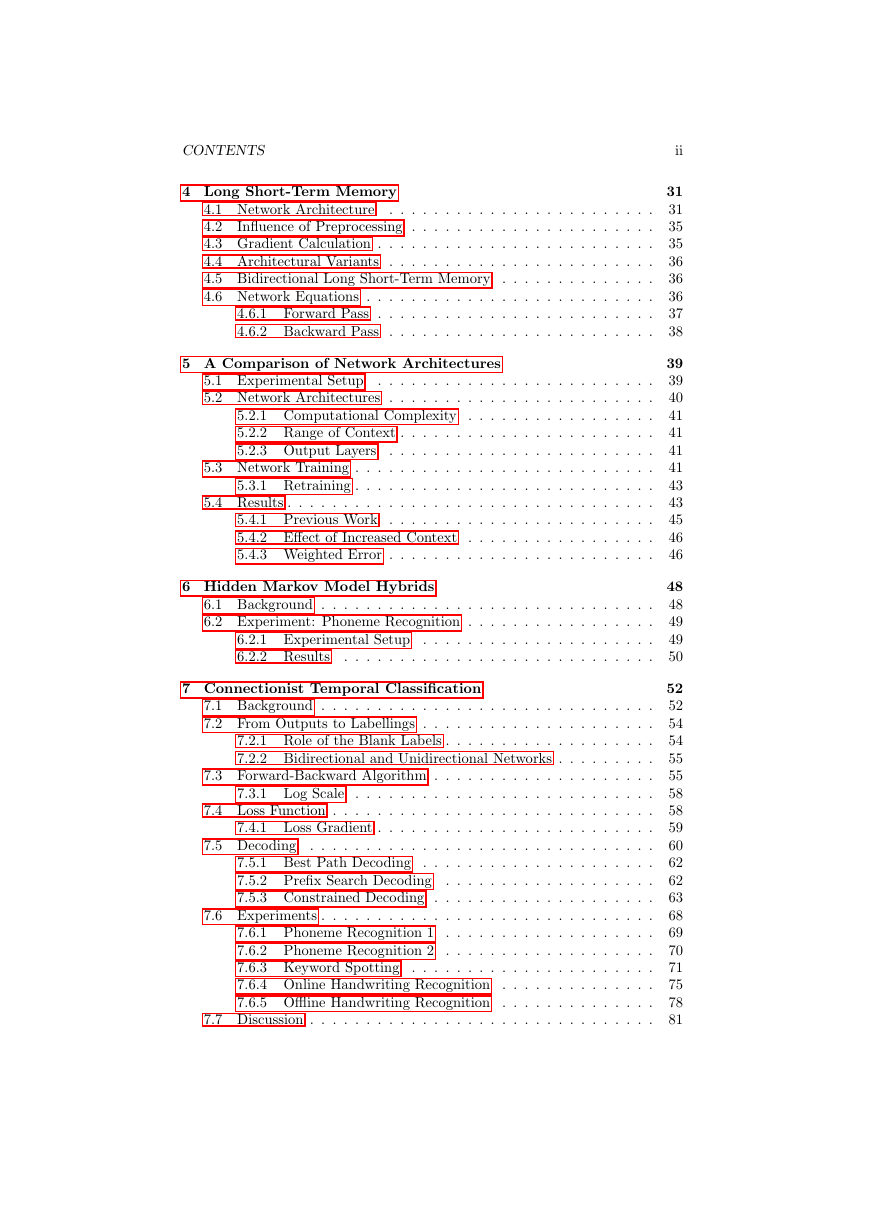

Long Short-Term Memory

Network Architecture

Influence of Preprocessing

Gradient Calculation

Architectural Variants

Bidirectional Long Short-Term Memory

Network Equations

Forward Pass

Backward Pass

A Comparison of Network Architectures

Experimental Setup

Network Architectures

Computational Complexity

Range of Context

Output Layers

Network Training

Retraining

Results

Previous Work

Effect of Increased Context

Weighted Error

Hidden Markov Model Hybrids

Background

Experiment: Phoneme Recognition

Experimental Setup

Results

Connectionist Temporal Classification

Background

From Outputs to Labellings

Role of the Blank Labels

Bidirectional and Unidirectional Networks

Forward-Backward Algorithm

Log Scale

Loss Function

Loss Gradient

Decoding

Best Path Decoding

Prefix Search Decoding

Constrained Decoding

Experiments

Phoneme Recognition 1

Phoneme Recognition 2

Keyword Spotting

Online Handwriting Recognition

Offline Handwriting Recognition

Discussion

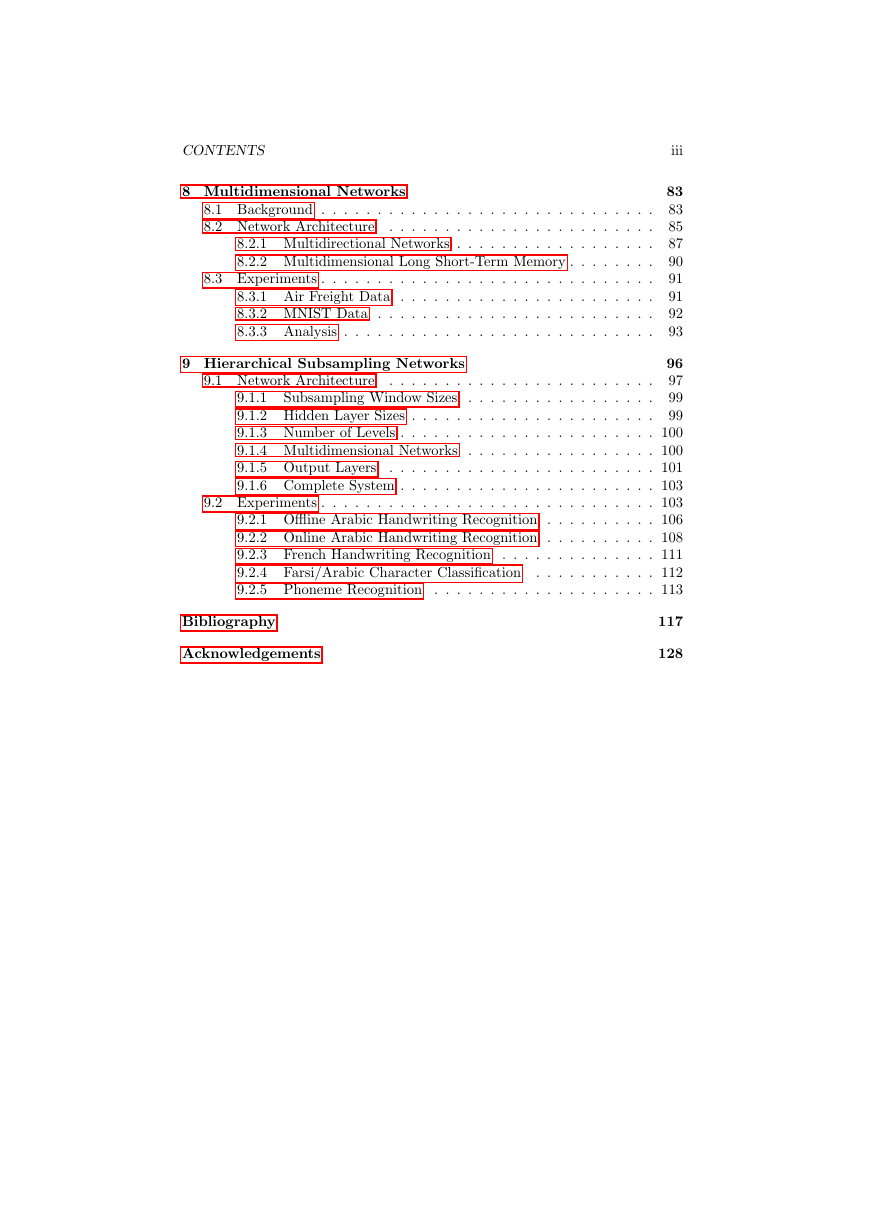

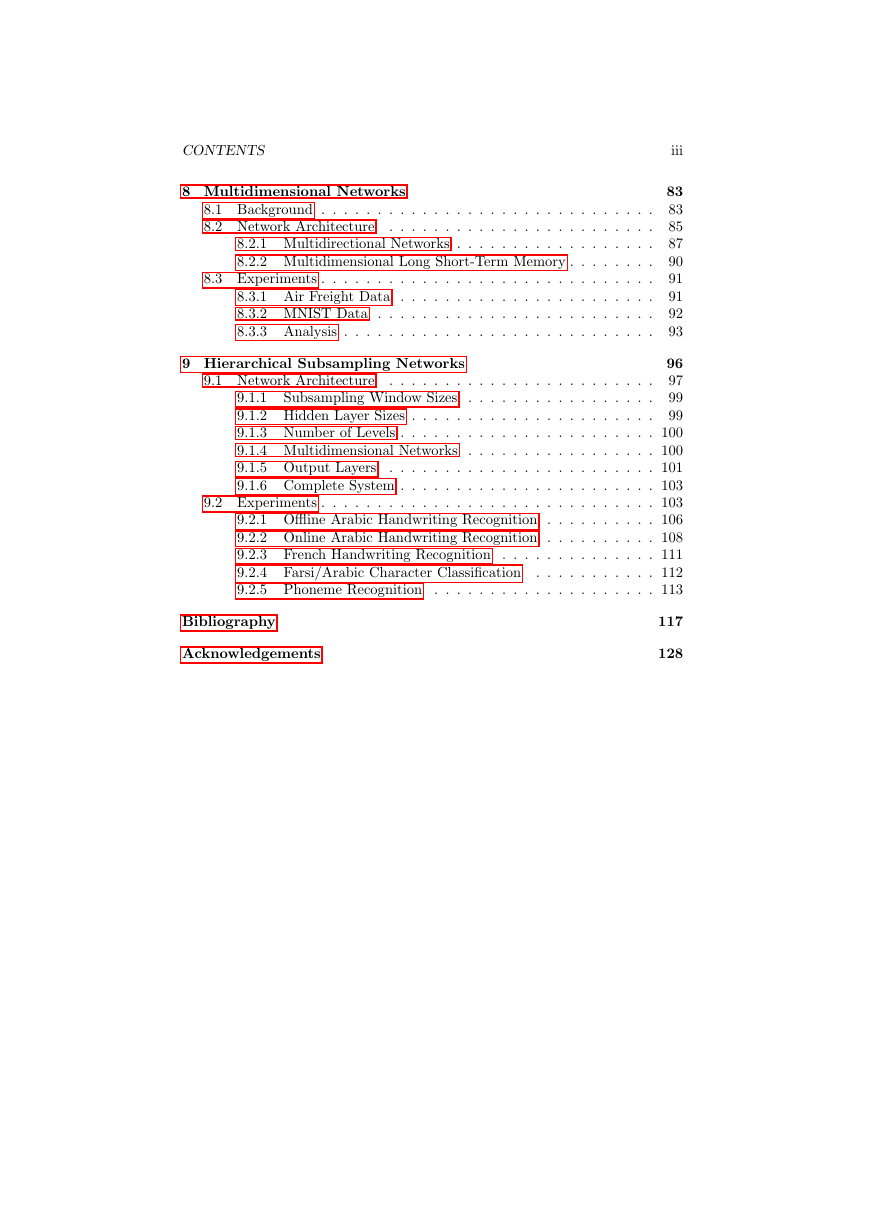

Multidimensional Networks

Background

Network Architecture

Multidirectional Networks

Multidimensional Long Short-Term Memory

Experiments

Air Freight Data

MNIST Data

Analysis

Hierarchical Subsampling Networks

Network Architecture

Subsampling Window Sizes

Hidden Layer Sizes

Number of Levels

Multidimensional Networks

Output Layers

Complete System

Experiments

Offline Arabic Handwriting Recognition

Online Arabic Handwriting Recognition

French Handwriting Recognition

Farsi/Arabic Character Classification

Phoneme Recognition

Bibliography

Acknowledgements
