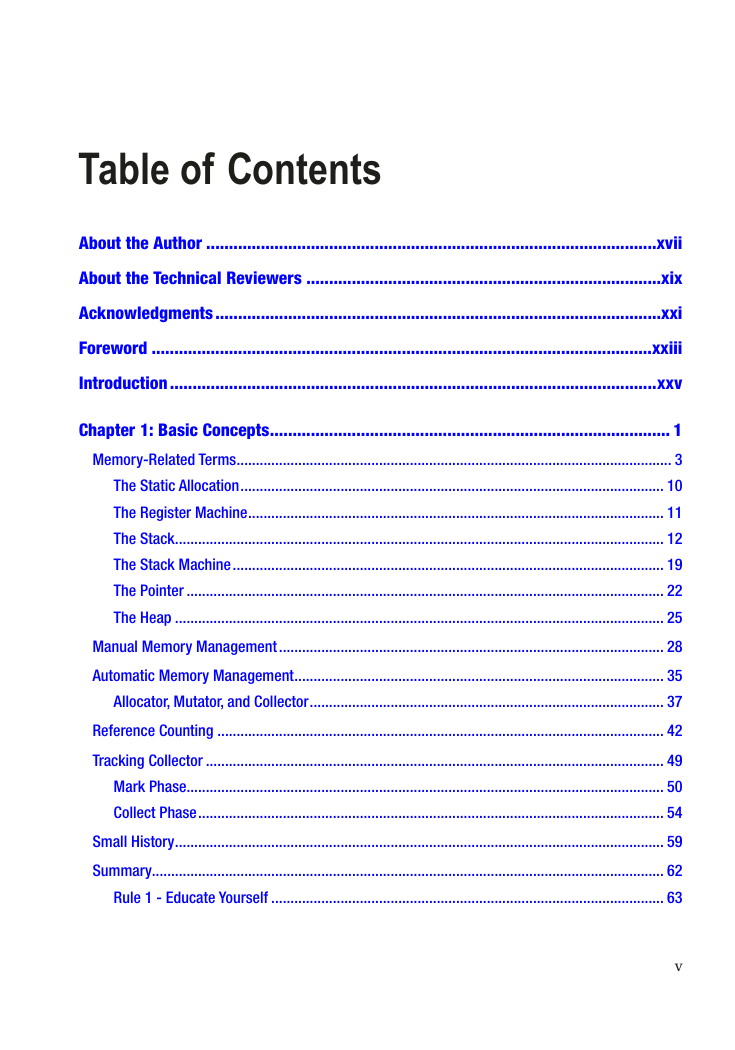

Table of Contents

About the Author

About the Technical Reviewers

Acknowledgments

Foreword

Introduction

Chapter 1: Basic Concepts

Memory-Related Terms

The Static Allocation

The Register Machine

The Stack

The Stack Machine

The Pointer

The Heap

Manual Memory Management

Automatic Memory Management

Allocator, Mutator, and Collector

The Mutator

The Allocator

The Collector

Reference Counting

Tracking Collector

Mark Phase

Conservative Garbage Collector

Precise Garbage Collector

Collect Phase

Sweep

Compact

Small History

Summary

Rule 1 - Educate Yourself

Chapter 2: Low-Level Memory Management

Hardware

Memory

CPU

CPU Cache

Cache Hit and Miss

Data Locality

Cache Implementation

Data Alignment

Non-temporal Access

Prefetching

Hierarchical Cache

Multicore Hierarchical Cache

Operating System

Virtual Memory

Large Pages

Virtual Memory Fragmentation

General Memory Layout

Windows Memory Management

Windows Memory Layout

Linux Memory Management

Linux Memory Layout

Operating System Influence

NUMA and CPU Groups

Summary

Rule 2 - Random Access Should Be Avoided, Sequential Access Should Be Encouraged

Rule 3 - Improve Spatial and Temporal Data Locality

Rule 4 - Consume More Advanced Possibilities

Chapter 3: Memory Measurements

Measure Early

Overhead and Invasiveness

Sampling vs. Tracing

Call Tree

Objects Graphs

Statistics

Latency vs. Throughput

Memory Dumps, Tracing, Live Debugging

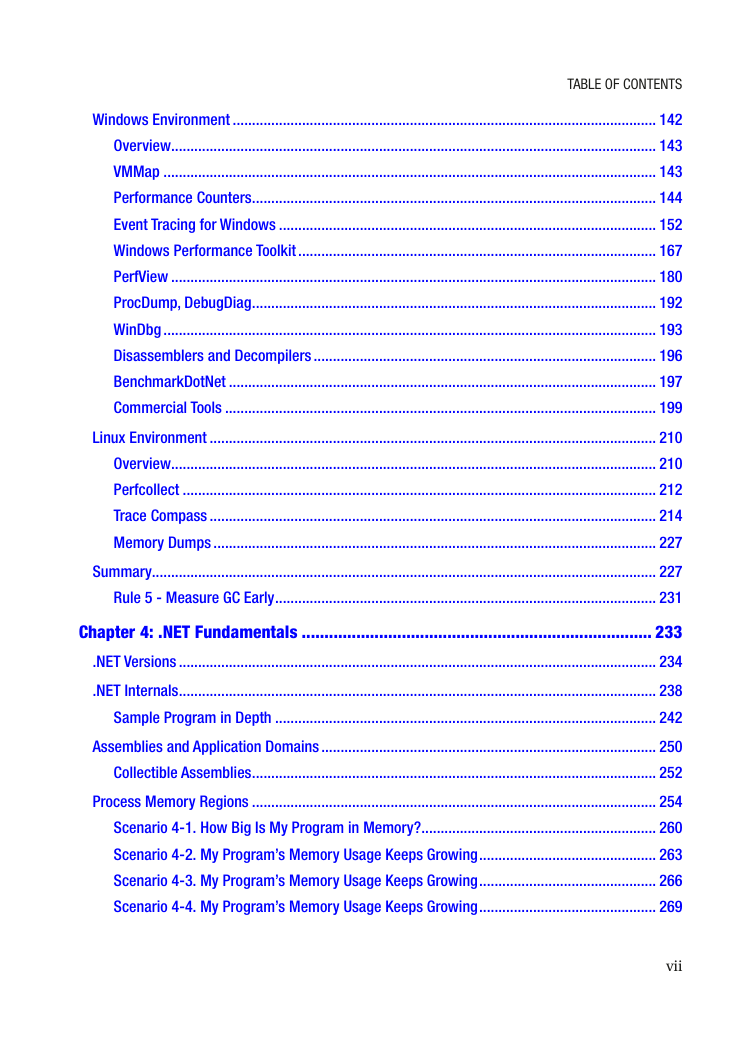

Windows Environment

Overview

VMMap

Performance Counters

Event Tracing for Windows

Windows Performance Toolkit

Windows Performance Recorder

Windows Performance Analyzer

Opening File and Configuration

Generic Events

Region of Interests

Flame Charts

Stack Tags

Custom Graphs

Profiles

PerfView

Data Collection

Data Analysis

Memory Snapshots

ProcDump, DebugDiag

WinDbg

Disassemblers and Decompilers

BenchmarkDotNet

Commercial Tools

Visual Studio

Scitech .NET Memory Profiler

JetBrains DotMemory

RedGate ANTS Memory Profiler

Intel VTune Amplifier and AMD CodeAnalyst Performance Analyzer

Dynatrace and AppDynamics

Linux Environment

Overview

Perfcollect

Trace Compass

Opening File

CoreCLR.GC.collections

CoreCLR.threads.state

CoreCLR.GC.generations.ranges

The Final Results

Memory Dumps

Summary

Rule 5 - Measure GC Early

Chapter 4: .NET Fundamentals

.NET Versions

.NET Internals

Sample Program in Depth

Assemblies and Application Domains

Collectible Assemblies

Process Memory Regions

Scenario 4-1. How Big Is My Program in Memory?

Scenario 4-2. My Program’s Memory Usage Keeps Growing

Scenario 4-3. My Program’s Memory Usage Keeps Growing

Scenario 4-4. My Program’s Memory Usage Keeps Growing

Type System

Type Categories

Type Storage

Value Types

Value Types Storage

Structs

Structs in General

Structs Storage

Reference Types

Classes

Strings

String Interning

Scenario 4-5. My Program’s Memory Usage Is Too Big

Boxing and Unboxing

Passing by Reference

Pass-by-Reference Value-Type Instance

Pass-by-Reference Reference-Type Instance

Types Data Locality

Static Data

Static Fields

Static Data Internals

Summary

Structs

Classes

Rule 6 - Measure Your Program

Rule 7 - Do Not Assume There Is No Memory Leak

Rule 8 - Consider Using Struct

Rule 9 - Consider Using String Interning

Rule 10 - Avoid Boxing

Chapter 5: Memory Partitioning

Partitioning Strategies

Size Partitioning

Small Object Heap

Large Object Heap

Large Object Heap - Arrays of Doubles

Large Object Heap - Internal CLR Data

LargeHeapHandleTable

Lifetime Partitioning

Scenario 5-1. Is My Program Healthy? Generation Sizes in Time

Remembered Sets

Card Tables

Card Bundles

Physical Partitioning

Scenario 5-2. nopCommerce Memory Leak?

Scenario 5-3. Large Object Heap Waste?

Segments and Heap Anatomy

Segments Reuse

Summary

Rule 11 - Monitor Generation Sizes

Rule 12 - Avoid Unnecessary Heap References

Rule 13 - Monitor Segments Usage

Chapter 6: Memory Allocation

Allocation Introduction

Bump Pointer Allocation

Free-List Allocation

Creating New Object

Small Object Heap Allocation

Large Object Heap Allocation

Heap Balancing

OutOfMemoryException

Scenario 6-1. Out of Memory

Stack Allocation

Avoiding Allocations

Explicit Allocations of Reference Types

General Case - Consider Using Struct

Tuples - Use ValueTuple Instead

Small Temporary Local Data - Consider Using stackalloc

Creating Arrays - Use ArrayPool

Creating Streams - Use RecyclableMemoryStream

Creating a Lot of Objects - Use Object Pool

Async Methods Returning Task - Use ValueTask

Hidden Allocations

Delegate Allocation

Boxing

Closures

Yield Return

Parameters Array

String Concatenation

Various Hidden Allocations Inside Libraries

System.Generics Collections

LINQ - Delegates

LINQ - Anonymous Types Creation

LINQ - Enumerables

Scenario 6-2. Investigating Allocations

Scenario 6-3. Azure Functions

Summary

Rule 14 - Avoid Allocations on the Heap in Performance Critical Code Paths

Rule 15 - Avoid Excessive LOH Allocations

Rule 16 - Promote Allocations on the Stack When Appropriate

Chapter 7: Garbage Collection - Introduction

High-Level View

GC Process in Example

GC Process Steps

Scenario 7-1. Analyzing the GC Usage

Profiling the GC

Garbage Collection Performance Tuning Data

Static Data

Dynamic Data

Scenario 7-2. Understanding the Allocation Budget

Collection Triggers

Allocation Trigger

Explicit Trigger

Scenario 7-3. Analyzing the Explicit GC Calls

Low Memory Level System Trigger

Various Internal Triggers

EE Suspension

Scenario 7-4. Analyzing GC Suspension Times

Generation to Condemn

Scenario 7-5. Condemned Generations Analysis

Summary

Chapter 8: Garbage Collection - Mark Phase

Object Traversal and Marking

Local Variable Roots

Local Variables Storage

Stack Roots

Lexical Scope

Live Stack Roots vs. Lexical Scope

Live Stack Roots with Eager Root Collection

GC Info

Pinned Local Variables

Stack Root Scanning

Finalization Roots

GC Internal Roots

GC Handle Roots

Handling Memory Leaks

Scenario 8-1. nopCommerce Memory Leak?

Scenario 8-2. Identifying the Most Popular Roots

Summary

Chapter 9: Garbage Collection - Plan Phase

Small Object Heap

Plugs and Gaps

Scenario 9-1. Memory Dump with Invalid Structures

Brick Table

Pinning

Scenario 9-2. Investigating Pinning

Generation Boundaries

Demotion

Large Object Heap

Plugs and Gaps

Decide on Compaction

Summary

Chapter 10: Garbage Collection - Sweep and Compact

Sweep Phase

Small Object Heap

Large Object Heap

Compact Phase

Small Object Heap

Getting a New Ephemeral Segment if Necessary

Relocate References

Compact Objects

Fix Generation Boundaries

Delete/Decommit Segments if Necessary

Creating Free-List Items

Age roots

Large Object Heap

Scenario 10-1. Large Object Heap Fragmentation

Summary

Rule 17 - Watch Runtime Suspensions

Rule 18 - Avoid Mid-Life Crisis

Rule 19 - Avoid Old Generation and LOH Fragmentation

Rule 20 - Avoid Explicit GC

Rule 21 - Avoid Memory Leaks

Rule 22 - Avoid Pinning

Chapter 11: GC Flavors

Modes Overview

Workstation vs. Server Mode

Workstation Mode

Server Mode

Non-Concurrent vs. Concurrent Mode

Non-Concurrent Mode

Concurrent Mode

Modes Configuration

.NET Framework

.NET Core

GC Pause and Overhead

Modes Descriptions

Workstation Non-Concurrent

Workstation Concurrent (Before 4.0)

Background Workstation

Concurrent Mark

Concurrent Sweep

Server Non-Concurrent

Background Server

Latency Modes

Batch Mode

Interactive

Low Latency

Sustained Low Latency

No GC Region

Latency Optimization Goals

Choosing GC Flavor

Scenario 8-1. Checking GC Settings

Scenario 8-2. Benchmarking Different GC Modes

Summary

Rule 23 - Choose GC Mode Consciously

Rule 24 - Remember About Latency Modes

Chapter 12: Object Lifetime

Object vs. Resource Life Cycle

Finalization

Introduction

Eager Root Collection Problem

Critical Finalizers

Finalization Internals

Finalization Overhead

Scenario 12-1. Finalization Memory Leak

Resurrection

Disposable Objects

Safe Handles

Weak References

Caching

Weak Event Pattern

Scenario 9-2. Memory Leak Because of Events

Summary

Rule 25 - Avoid Finalizers

Rule 26 - Prefer Explicit Cleanup

Chapter 13: Miscellaneous Topics

Dependent Handles

Thread Local Storage

Thread Static Fields

Thread Data Slots

Thread Local Storage Internals

Usage Scenarios

Managed Pointers

Ref Locals

Ref Returns

Readonly Ref Variables and in Parameters

Ref Types Internals

Managed Pointer Into Stack-Allocated Object

Managed Pointer Into Heap-Allocated Object

Managed Pointers in C# - ref Variables

More on Structs...

Readonly Structs

Ref Structs (byref-like types)

Fixed Size Buffers

Object/Struct Layout

Unmanaged Constraint

Blittable Types

Summary

Chapter 14: Advanced Techniques

Span and Memory

Span

Usage Examples

Span Internals

“Slow Span”

“Fast Span”

Memory

IMemoryOwner

Memory Internals

Span and Memory Guidelines

Unsafe

Unsafe Internals

Data-Oriented Design

Tactical Design

Design Types to Fit as Much Relevant Data as Possible in the First Cache Line

Design Data to Fit into Higher Cache Levels

Design Data That Allows Easy Parallelization

Avoid Non-sequential, Especially Random Memory Access

Strategic Design

Moving from Array-of-Structures to Structure-of-Arrays

Entity Component System

More on Future...

Nullable Reference Types

Pipelines

Summary

Chapter 15: Programmatical APIs

GC API

Collection Data and Statistics

GC.MaxGeneration

GC.CollectionCount(Int32)

GC.GetGeneration

GC.GetTotalMemory

GC.GetAllocatedBytesForCurrentThread

GC.KeepAlive

GCSettings.LargeObjectHeapCompactionMode

GCSettings.LatencyMode

GCSettings.IsServerGC

GC Notifications

Controlling Unmanaged Memory Pressure

Explicit Collection

No-GC Regions

Finalization Management

Memory Usage

Internal Calls in the GC Class

CLR Hosting

ClrMD

TraceEvent Library

Custom GC

Summary

Index
