Cover

Book Information

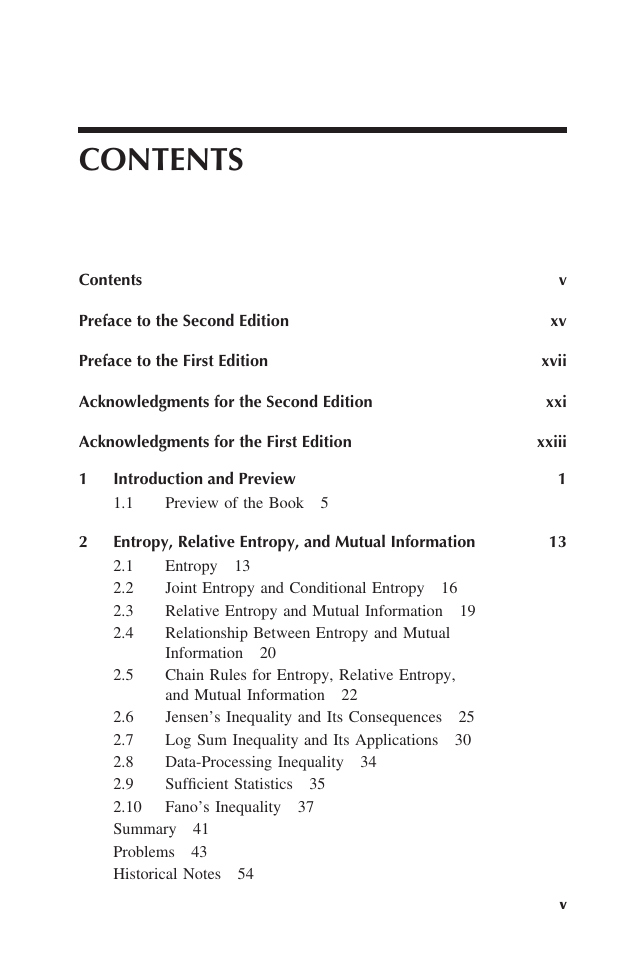

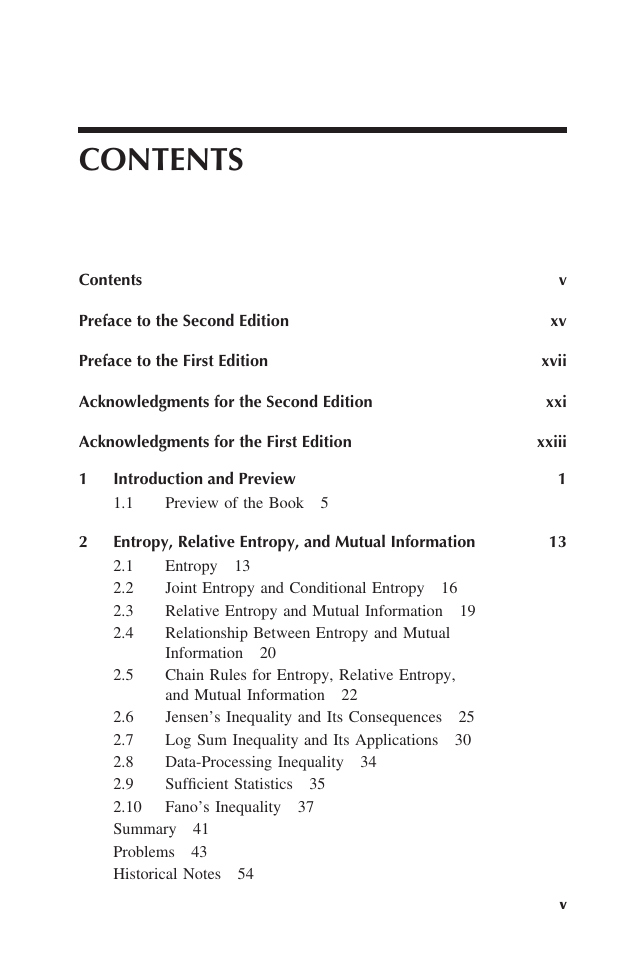

Contents

Preface to the Second Edition

Preface to the First Edition

Chapter 1 Introduction and Preview

Chapter 2 Entropy, Relative Entropy, and Mutual Information

2.1 Entropy

2.2 Joint Entropy and Conditional Entropy

2.3 Relative Entropy and Mutual Information

2.4 Relationship between Entropy and Mutual Information

2.5 Chain Rules for Entropy, Relative Entropy, and Mutual Information

2.6 Jensen's Inequality and Its Consequences

2.7 Log Sum Inequality and Its Applications

2.8 Data-Processing Inequality

2.9 Sufficient Statistics

2.10 Fano's Inequality

SUMMARY

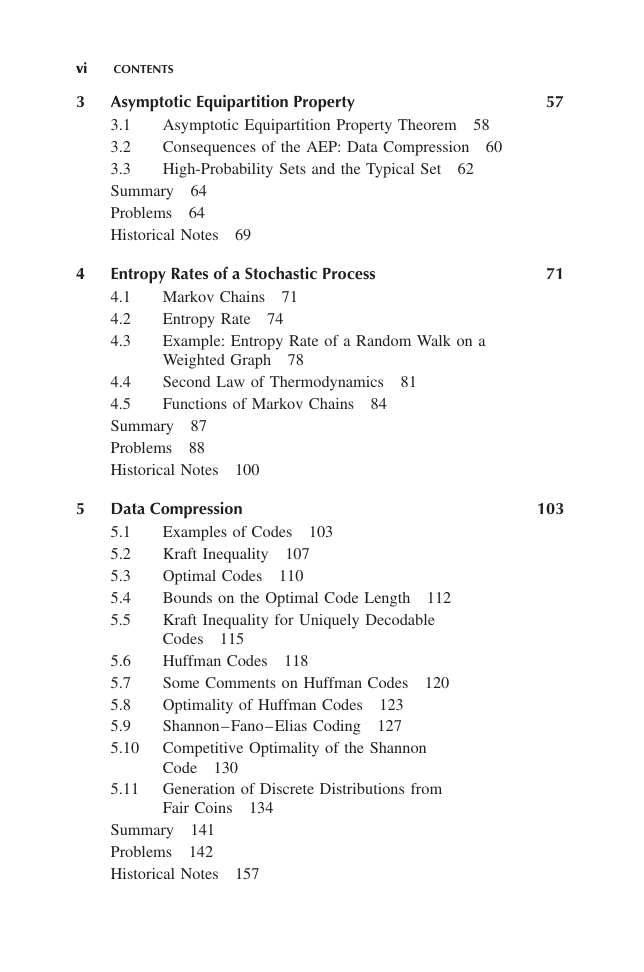

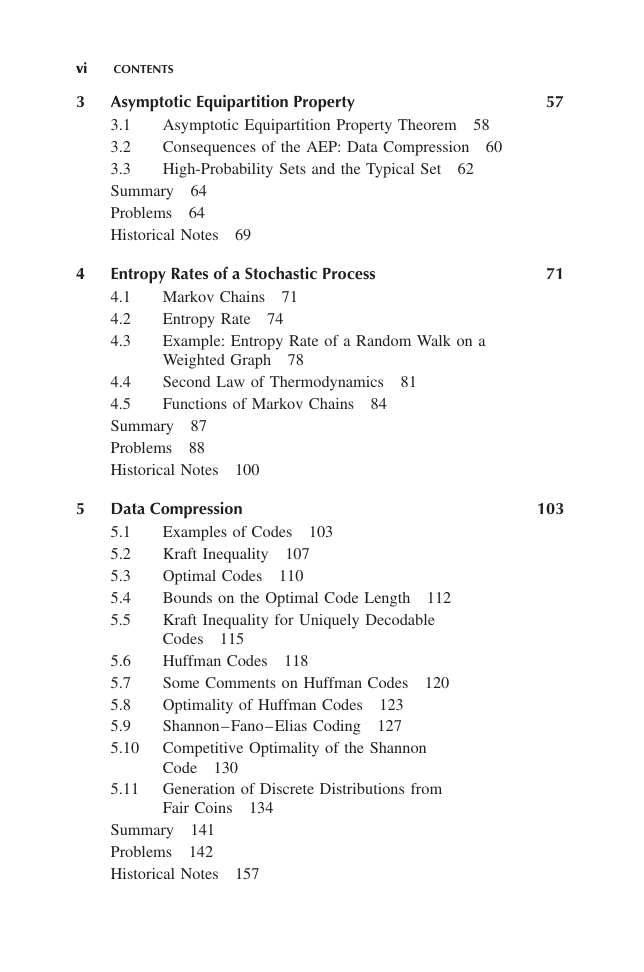

Chapter 3 Asymptotic Equipartition Property

3.1 Asymptotic Equipartition Property Theorem

3.2 CONSEQUENCES OF THE AEP: DATA COMPRESSION

3.3 HIGH-PROBABILITY SETS AND THE TYPICAL SET

SUMMARY

Chapter 4 ENTROPY RATES OF A STOCHASTIC PROCESS

4.1 MARKOV CHAINS

4.2 ENTROPY RATE

4.3 EXAMPLE: ENTROPY RATE OF A RANDOM WALK ON A WEIGHTED GRAPH

4.4 SECOND LAW OF THERMODYNAMICS

4.5 FUNCTIONS OF MARKOV CHAINS

SUMMARY

Chapter 5 DATA COMPRESSION

5.1 EXAMPLES OF CODES

5.2 KRAFT INEQUALITY

5.3 OPTIMAL CODES

5.4 BOUNDS ON THE OPTIMAL CODE LENGTH

5.5 KRAFT INEQUALITY FOR UNIQUELY DECODABLE CODES

5.6 HUFFMAN CODES

5.7 SOME COMMENTS ON HUFFMAN CODES

5.8 OPTIMALITY OF HUFFMAN CODES

5.9 SHANNON–FANO–ELIAS CODING

5.10 COMPETITIVE OPTIMALITY OF THE SHANNON CODE

5.11 GENERATION OF DISCRETE DISTRIBUTIONS FROM FAIR COINS

SUMMARY

Chapter 6 GAMBLING AND DATA COMPRESSION

6.1 THE HORSE RACE

6.2 GAMBLING AND SIDE INFORMATION

6.3 DEPENDENT HORSE RACES AND ENTROPY RATE

6.4 THE ENTROPY OF ENGLISH

6.5 DATA COMPRESSION AND GAMBLING

6.6 GAMBLING ESTIMATE OF THE ENTROPY OF ENGLISH

SUMMARY

Chapter 7 CHANNEL CAPACITY

7.1 EXAMPLES OF CHANNEL CAPACITY

7.2 SYMMETRIC CHANNELS

7.3 PROPERTIES OF CHANNEL CAPACITY

7.4 PREVIEW OF THE CHANNEL CODING THEOREM

7.5 DEFINITIONS

7.6 JOINTLY TYPICAL SEQUENCES

7.7 CHANNEL CODING THEOREM

7.8 ZERO-ERROR CODES

7.9 FANO’S INEQUALITY AND THE CONVERSE TO THE CODING THEOREM

7.10 EQUALITY IN THE CONVERSE TO THE CHANNEL CODING THEOREM

7.11 HAMMING CODES

7.12 FEEDBACK CAPACITY

7.13 SOURCE–CHANNEL SEPARATION THEOREM

SUMMARY

Chapter 8 DIFFERENTIAL ENTROPY

8.1 DEFINITIONS

8.2 AEP FOR CONTINUOUS RANDOM VARIABLES

8.3 RELATION OF DIFFERENTIAL ENTROPY TO DISCRETE ENTROPY

8.4 JOINT AND CONDITIONAL DIFFERENTIAL ENTROPY

8.5 RELATIVE ENTROPY AND MUTUAL INFORMATION

8.6 PROPERTIES OF DIFFERENTIAL ENTROPY, RELATIVE ENTROPY, AND MUTUAL INFORMATION

SUMMARY

Chapter 9 GAUSSIAN CHANNEL

9.1 GAUSSIAN CHANNEL: DEFINITIONS

9.2 CONVERSE TO THE CODING THEOREM FOR GAUSSIAN CHANNELS

9.3 BANDLIMITED CHANNELS

9.4 PARALLEL GAUSSIAN CHANNELS

9.5 CHANNELS WITH COLORED GAUSSIAN NOISE

9.6 GAUSSIAN CHANNELS WITH FEEDBACK

SUMMARY

Chapter 10 RATE DISTORTION THEORY

10.1 QUANTIZATION

10.2 DEFINITIONS
