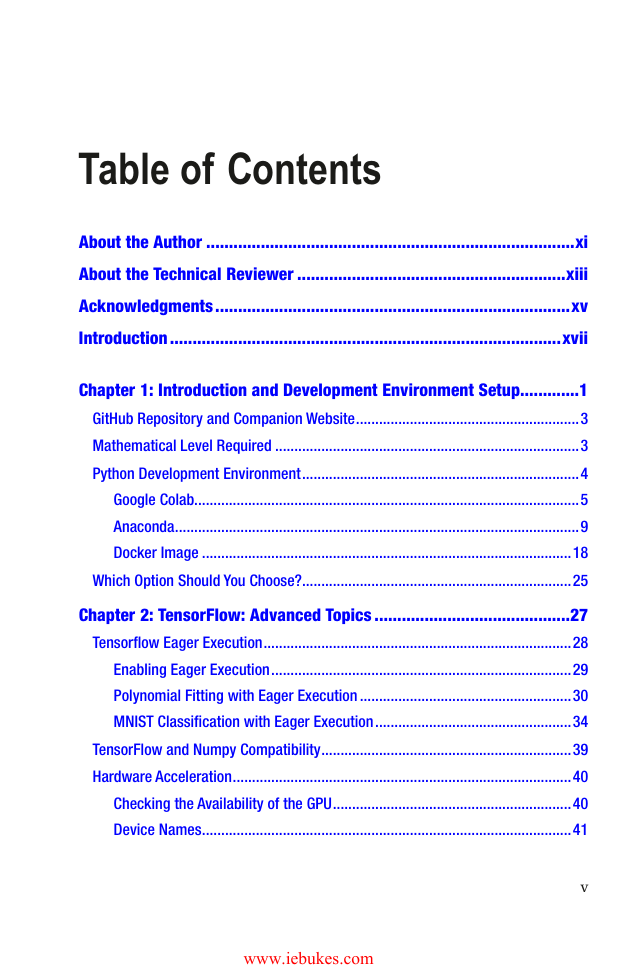

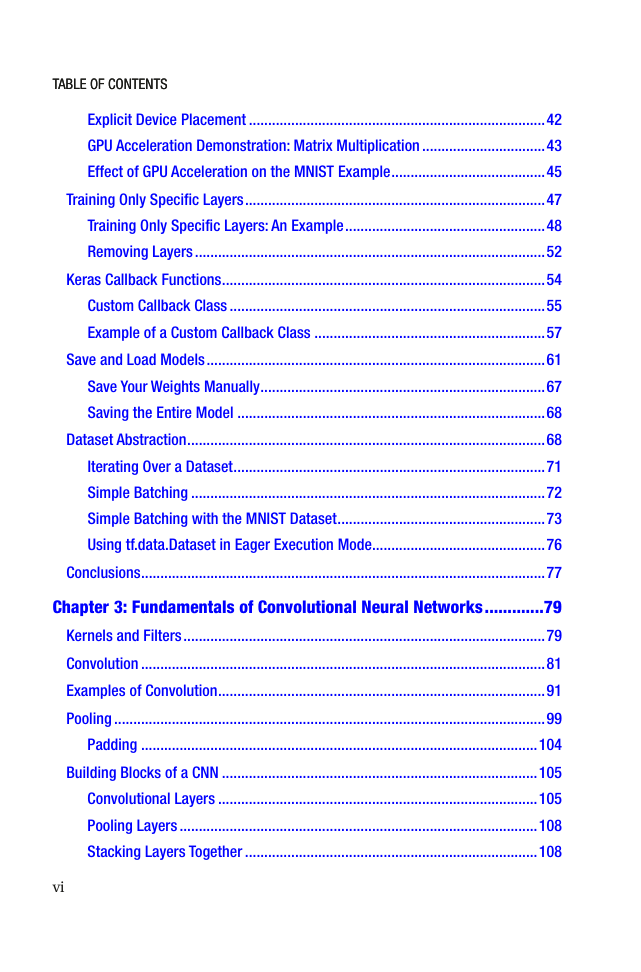

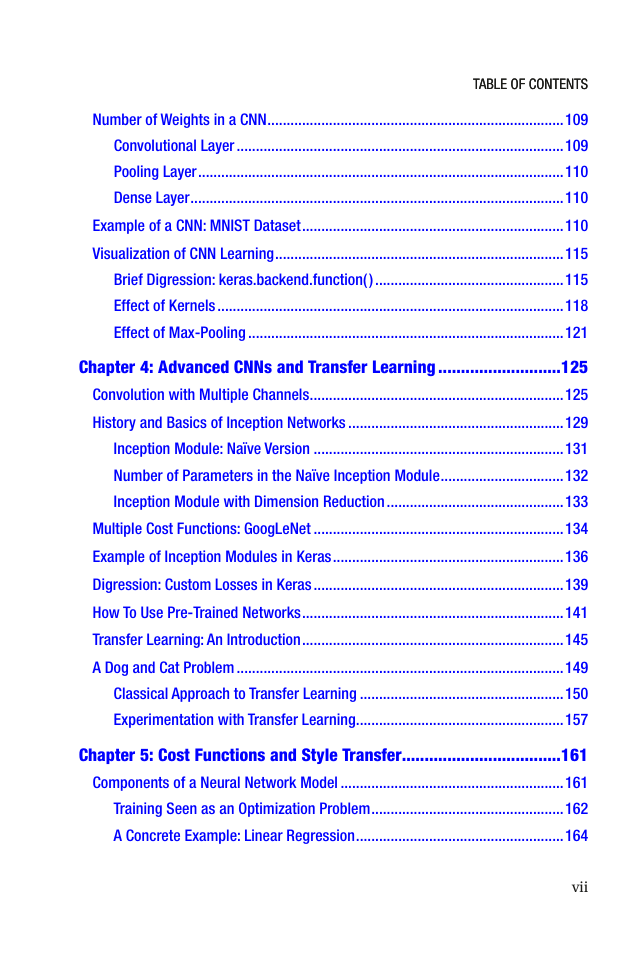

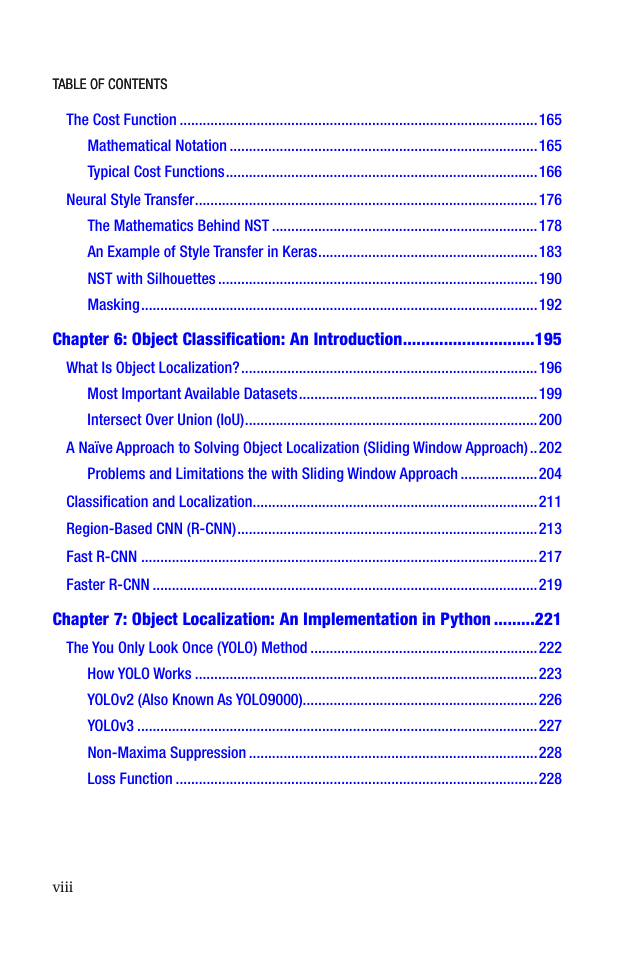

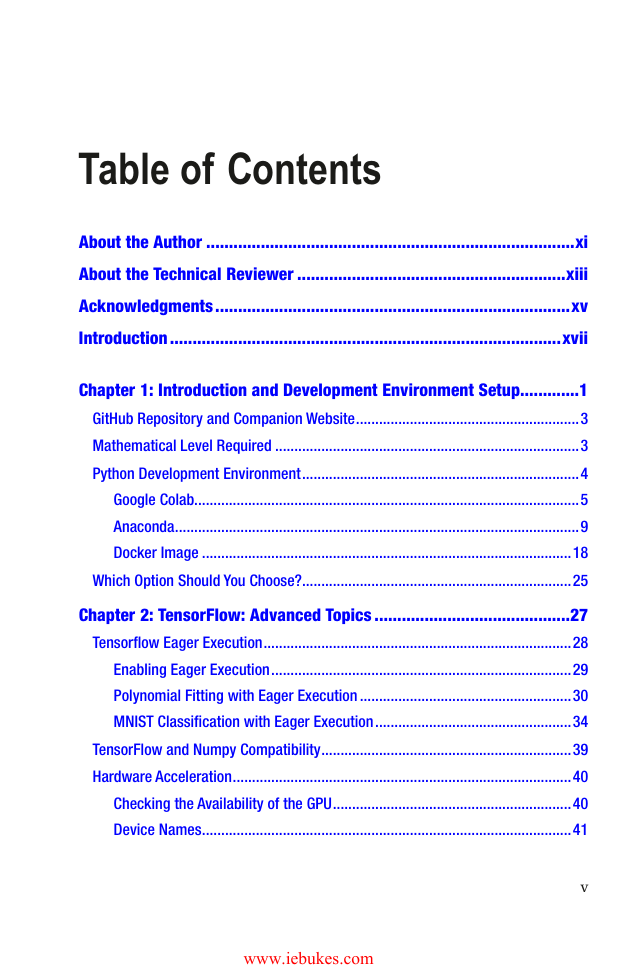

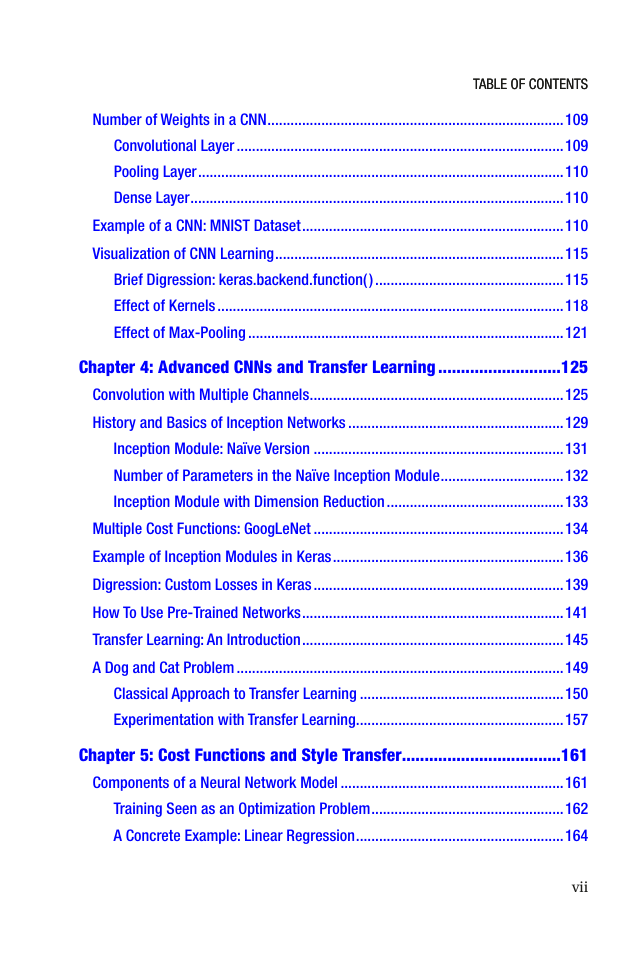

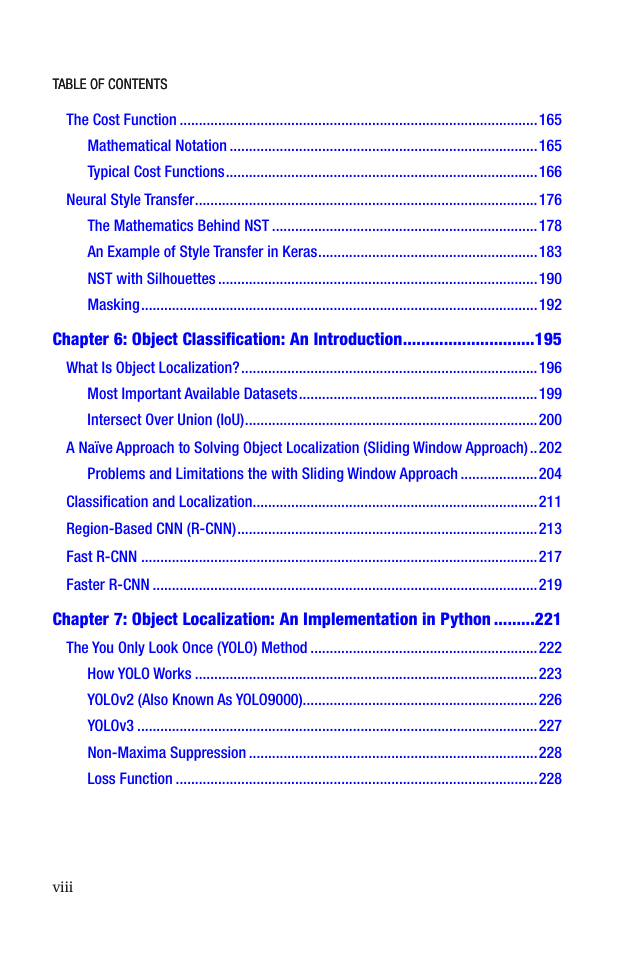

Table of Contents

About the Author

About the Technical Reviewer

Acknowledgments

Introduction

Chapter 1: Introduction and Development Environment Setup

GitHub Repository and Companion Website

Mathematical Level Required

Python Development Environment

Google Colab

Benefits and Drawbacks to Google Colab

Anaconda

Installing TensorFlow the Anaconda Way

Local Jupyter Notebooks

Benefits and Drawbacks to Anaconda

Docker Image

Benefits and Drawbacks to a Docker Image

Which Option Should You Choose?

Chapter 2: TensorFlow: Advanced Topics

TensorFlow Eager Execution

Enabling Eager Execution

Polynomial Fitting with Eager Execution

MNIST Classification with Eager Execution

TensorFlow and NumPy Compatibility

Hardware Acceleration

Checking the Availability of the GPU

Device Names

Explicit Device Placement

GPU Acceleration Demonstration: Matrix Multiplication

Effect of GPU Acceleration on the MNIST Example

Training Only Specific Layers

Training Only Specific Layers: An Example

Removing Layers

Keras Callback Functions

Custom Callback Class

Example of a Custom Callback Class

Save and Load Models

Save Your Weights Manually

Saving the Entire Model

Dataset Abstraction

Iterating Over a Dataset

Simple Batching

Simple Batching with the MNIST Dataset

Using tf.data.Dataset in Eager Execution Mode

Conclusions

Chapter 3: Fundamentals of Convolutional Neural Networks

Kernels and Filters

Convolution

Examples of Convolution

Pooling

Padding

Building Blocks of a CNN

Convolutional Layers

Pooling Layers

Stacking Layers Together

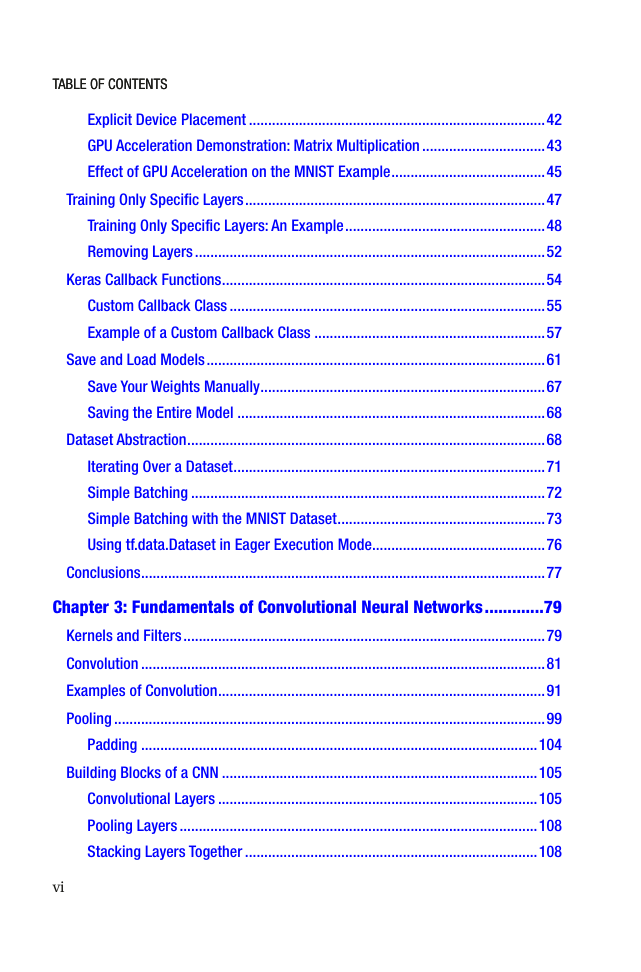

Number of Weights in a CNN

Convolutional Layer

Pooling Layer

Dense Layer

Example of a CNN: MNIST Dataset

Visualization of CNN Learning

Brief Digression: keras.backend.function()

Effect of Kernels

Effect of Max-Pooling

Chapter 4: Advanced CNNs and Transfer Learning

Convolution with Multiple Channels

History and Basics of Inception Networks

Inception Module: Naïve Version

Number of Parameters in the Naïve Inception Module

Inception Module with Dimension Reduction

Multiple Cost Functions: GoogLeNet

Example of Inception Modules in Keras

Digression: Custom Losses in Keras

How To Use Pre-Trained Networks

Transfer Learning: An Introduction

A Dog and Cat Problem

Classical Approach to Transfer Learning

Experimentation with Transfer Learning

Chapter 5: Cost Functions and Style Transfer

Components of a Neural Network Model

Training Seen as an Optimization Problem

A Concrete Example: Linear Regression

The Cost Function

Mathematical Notation

Typical Cost Functions

Mean Square Error

Intuitive Explanation

MSE as the Second Moment of a Moment-Generating Function

Cross-Entropy

Self-Information or Surprisal of an Event

Surprisal Associated with an Event X

Cross-Entropy

Cross-Entropy for Binary Classification

Cost Functions: A Final Word

Neural Style Transfer

The Mathematics Behind NST

An Example of Style Transfer in Keras

NST with Silhouettes

Masking

Chapter 6: Object Classification: An Introduction

What Is Object Localization?

Most Important Available Datasets

Intersection Over Union (IoU)

A Naïve Approach to Solving Object Localization (Sliding Window Approach)

Problems and Limitations with the Sliding Window Approach

Classification and Localization

Region-Based CNN (R-CNN)

Fast R-CNN

Faster R-CNN

Chapter 7: Object Localization: An Implementation in Python

The You Only Look Once (YOLO) Method

How YOLO Works

Dividing the Image Into Cells

YOLOv2 (Also Known As YOLO9000)

YOLOv3

Non-Maxima Suppression

Loss Function

Classification Loss

Localization Loss

Confidence Loss

Total Loss Function

YOLO Implementation in Python and OpenCV

Darknet Implementation of YOLO

Testing Object Detection with Darknet

Training a YOLO Model for Your Specific Images

Concluding Remarks

Chapter 8: Histology Tissue Classification

Data Analysis and Preparation

Model Building

Data Augmentation

Horizontal and Vertical Shifts

Flipping Images Vertically

Randomly Rotating Images

Zooming into Images

Putting It All Together

VGG16 with Data Augmentation

The fit() Function

The fit_generator() Function

The train_on_batch() Function

Training the Network

And Now Have Fun…

Index