Architectural Support for Server-Side PHP Processing

Dibakar Gope David J. Schlais Mikko H. Lipasti

Department of Electrical and Computer Engineering

University of Wisconsin - Madison

Madison, WI, USA

gope@wisc.edu,schlais2@wisc.edu,mikko@engr.wisc.edu

ABSTRACT

PHP is the dominant server-side scripting language used to im-

plement dynamic web content. Just-in-time compilation, as imple-

mented in Facebook’s state-of-the-art HipHopVM, helps mitigate

the poor performance of PHP, but substantial overheads remain,

especially for realistic, large-scale PHP applications. This paper ana-

lyzes such applications and shows that there is little opportunity for

conventional microarchitectural enhancements. Furthermore, prior

approaches for function-level hardware acceleration present many

challenges due to the extremely flat distribution of execution time

across a large number of functions in these complex applications.

In-depth analysis reveals a more promising alternative: targeted ac-

celeration of four fine-grained PHP activities: hash table accesses,

heap management, string manipulation, and regular expression han-

dling. We highlight a set of guiding principles and then propose and

evaluate inexpensive hardware accelerators for these activities that

accrue substantial performance and energy gains across dozens of

functions. Our results reflect an average 17.93% improvement in

performance and 21.01% reduction in energy while executing these

complex PHP workloads on a state-of-the-art software and hardware

platform.

CCS CONCEPTS

• Computer systems organization → Cloud computing; Special

purpose systems;

KEYWORDS

PHP, dynamic languages, domain-specific accelerators

ACM Reference format:

Dibakar Gope David J. Schlais Mikko H. Lipasti Department of Electri-

cal and Computer Engineering University of Wisconsin - Madison Madison,

WI, USA . 2017. Architectural Support for Server-Side PHP Processing. In

Proceedings of ISCA ’17, Toronto, ON, Canada, June 24-28, 2017, 14 pages.

https://doi.org/10.1145/3079856.3080234

1 INTRODUCTION

In recent years, the importance and quantity of code written in dy-

namic scripting languages such as PHP, Python, Ruby and Javascript

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for profit or commercial advantage and that copies bear this notice and the full citation

on the first page. Copyrights for components of this work owned by others than ACM

must be honored. Abstracting with credit is permitted. To copy otherwise, or republish,

to post on servers or to redistribute to lists, requires prior specific permission and/or a

fee. Request permissions from permissions@acm.org.

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

© 2017 Association for Computing Machinery.

ACM ISBN 978-1-4503-4892-8/17/06. . . $15.00

https://doi.org/10.1145/3079856.3080234

has grown considerably. Among all scripting languages used for

server-side web development, PHP is the most commonly used [70,

72], representing about 82.3% [13] of all web applications. In par-

ticular, server-side PHP web applications have created an ecosystem

of their own in the world of web development. They are used to

build and maintain websites and web applications of all sizes and

complexity, ranging from small websites to complex large scale en-

terprise management systems. PHP powers many of the most popular

web applications, such as Facebook and Wikipedia. Despite their

considerable increase in popularity, their performance is still the

main impediment for deploying large applications. Since these PHP

applications run on live datacenters hosting millions of such web

applications, even small improvements in performance or utilization

will translate into immense cost savings.

The desire to reduce datacenter load inspired the design of the

HipHop JIT compiler (HHVM) [19, 72], which translates PHP to

native code. HHVM demonstrates a significant performance increase

for a set of micro-benchmark suites. However, runtime character-

istics of popular real-world PHP web applications (e-commerce

platforms, social networking sites, online news forums, banking

servers, etc.) are found to be dramatically different than the de-

facto benchmark suites SPECWeb2005 [10], bench.php [18], and

the computer language benchmarks [1] used so far for evaluating the

performance of web servers. These micro-benchmark suites have

been the primary focus for most architectural optimizations of web

servers [20]. Furthermore, these micro-benchmarks spend most of

their time in JIT-generated compiled code, contrary to the real-world

PHP applications that tend to spend most of their time in various

library routines of the VM.

Figure 1 depicts the distribution of CPU cycles spent in the hottest

leaf functions of a few real-world PHP applications compared to

SPECWeb2005’s banking and e-commerce workloads. Clearly, the

SPECWeb2005 workloads contain significant hotspots – with very

few functions responsible for about 90% of their execution time.

However, the PHP web applications exhibit significant diversity,

having very flat execution profiles – the hottest single function (JIT

compiled code) is responsible for only 10− 12% of cycles, and they

take about 100 functions to account for about 65% of cycles. This

tail-heavy behavior presents few obvious or compelling opportunities

for microarchitectural optimizations.

In order to understand the microarchitectural implications (perfor-

mance and energy-efficiency bottlenecks) of these workloads with

hundreds of leaf functions, we undertook a detailed architectural

characterization of these applications. Despite our best effort, we

could not find any obvious target or easy opportunity for architectural

optimization.

507�

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

D. Gope et al.

Heap Management. A significant fraction of execution time in

these applications come from memory allocation and deallocation,

despite significant efforts to optimize them in software [37, 51].

Current software implementations mostly rely on recursive data

structures and interact with the operating system, which makes them

non-trivial to implement in hardware. However, these applications

exhibit an unique memory usage pattern that allows us to develop

a heap manager with its most frequently accessed components in

hardware. It can respond to most of the memory allocation and

deallocation requests from this small and simple hardware structure.

String Functions. These PHP applications exercise a variety of

string copying, matching, and modifying functions to turn large

volumes of unstructured textual data (such as social media updates,

web documents, blog posts, news articles, and system logs) into

appropriate HTML format. Prior works [68] have realized the po-

tential of hardware specialization for string matching, but do not

support all the necessary string functions frequently used in these

PHP applications. Nevertheless, designing separate accelerators to

support all the necessary string functions will deter their commercial

deployment. Surprisingly, all the necessary string functions can be

supported with a few common sub-operations. We propose a string

accelerator that supports these string functions by accelerating the

common sub-operations rather than accelerating each function. It

processes multiple bytes per cycle for concurrency to outperform

the currently optimal software with SSE extensions.

Regular Expressions. These PHP applications also use regular

expressions (regexps) to dynamically generate HTML content from

the unstructured textual data described above. Software-based reg-

exp engines [8, 57] or recent hardware regexp accelerators [34, 39,

58, 66] are overly generic in nature for these PHP applications as

they do not take into consideration the inherent characteristics of

the regular expressions in them. We introduce two novel techniques

– Content Sifting and Content Reuse – to accelerate the execution

of regexp processing in these PHP applications and achieve high

performance and energy efficiency. These two techniques signifi-

cantly reduce the repetitive processing of textual data during regular

expression matching. They essentially exploit the content locality

across a particular or a series of consecutive regular expressions in

these PHP applications.

The remainder of this paper is organized as follows. Section 2

performs an in-depth microarchitectural analysis of the PHP applica-

tions. Failing to find any clear microarchitectural opportunities shifts

our focus towards designing specialized hardware. We discover the

potential candidates for specialization in Section 3. In Section 4

we design specialized hardware accelerators for them. Section 5

presents results. Section 6 discusses related work and Section 7

concludes the paper.

2 MICROARCHITECTURAL ANALYSIS

We begin by performing an in-depth architectural characterization of

the PHP applications to identify performance and energy-efficiency

bottlenecks (if any) in them. We use gem5 [4] for our architectural

simulation. Surprisingly, the most significant bottlenecks lie in the

processor front-end, with poor branch predictor and branch target

buffer performance. Neither increased memory bandwidth, nor larger

Figure 1: Distribution of CPU cycles of SPECWeb2005 work-

loads and few real-world PHP web applications over leaf func-

tions (details in evaluation section).

As the processor industry continues to lean towards customization

and heterogeneity [41, 54, 65, 67] to improve performance and en-

ergy efficiency, we seek to embrace domain-specific specializations

for these real-world PHP applications. We note function level special-

ization is not a viable solution for these applications given their very

flat execution profiles. However, a closer look into the leaf functions’

overall distribution reveals that many leaf functions suffer from ei-

ther the abstraction overheads of scripting languages (such as type

checking [22], hash table accesses for user-defined types [31, 32],

etc.) or the associated overhead of garbage collected languages [25].

These observations guide us to apply several hardware and software

optimization techniques from prior works [22, 31, 32, 40] together to

these PHP applications in order to minimize those overheads. After

applying these optimizations, a considerable fraction of their exe-

cution time falls into four major categories of activities – hash map

access, heap management, string manipulation, and regular expres-

sion processing. These four categories show the potential to improve

the performance and energy efficiency of many leaf functions in

their overall distribution. This motivates us to develop specialized

hardware to accelerate these four major activities.

Hash Table Access. Unlike micro-benchmarks that mostly ac-

cesses hash maps with static literal names, these real-world applica-

tions often tend to exercise hash maps in their execution environment

with dynamic key names. These accesses cannot be converted to

regular offset accesses by software methods [31, 32, 40]. Programma-

bility and flexibility in writing code are two of several reasons to

use dynamic key names. In order to reduce the overhead and inher-

ent sources of energy inefficiency from hash map accesses in these

PHP applications, we propose to deploy a hash table in hardware.

This hash table processes both GET and SET requests entirely in

hardware to satisfy the unique access pattern of these PHP applica-

tions, contrary to prior works deploying a hash table that supports

only GET requests in a memcached environment [55]. Furthermore,

supporting such a hash table in the PHP environment presents a new

set of challenges in order to support a rich set of PHP features com-

municating with hash maps that these real-world PHP applications

tend to exercise often.

02040608010016111621263136414651566166717681869196Distribution of cycles (CDF %)# Leaf functionsWordpressDrupalMediawikiSPECWeb(Banking)SPECWeb(E-commerce)Hottest: JIT-compiled code: ~10%508�

Architectural Support for Server-Side PHP Processing

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

(a) Sensitivity to BTB and ICache sizes, normalized

to 4K BTB 32KB ICache. Other PHP applications

show similar behavior.

(b) Cache analysis

(c) In-order vs. out-of-order behavior

Figure 2: Microarchitectural characterization of the PHP applications.

instruction or data caches show significant opportunity for improving

performance.

Branch predictor bottlenecks. We experimented with the state-

of-the-art TAGE branch predictor [63]1 with 32KB storage budget.

The branch mispredictions per kilo-instructions (MPKI) for the three

PHP applications considered in this work are 17.26, 14.48, and

15.14. Compared to the 2.9 MPKI average for the SPEC CPU2006

benchmarks, these applications clearly suffer from high mispre-

diction rates. The poor predictor performance is primarily due to

the presence of large number of data-dependent branches in the

PHP applications. The outcomes of data-dependent branches depend

solely on unpredictable data that the PHP applications require to

process during most of their execution time. Prior work on predicting

data-dependent branches [35] may improve the MPKI of the PHP

applications.

Branch target buffer bottlenecks. Real-world PHP applica-

tions suffer significantly from the poor performance of branch target

buffers (BTBs) in server cores. We simulate a BTB that resembles

the BTB found in modern Intel server cores with 4K entries and

2-way set associativity. Such behavior stems from the large number

of branches in the PHP applications. Around 12% of all dynamic

instructions are branches in the SPEC CPU2006 workloads [73],

whereas in the PHP applications about 22% of all instructions are

branches, thus adding more pressure on BTB. Figure 2(a) shows the

execution time of one of the PHP applications, as the BTB size is

progressively increased from 4K entries to 64K entries for different

sizes of I-cache. However even with 64K entries, the PHP appli-

cation obtains a modest BTB hit rate of 95.85%. Deploying such

large BTBs is not currently feasible in server cores due to power

constraints.

Cache analysis. Figure 2(b) presents the cache performance.

L1 instruction and data cache behavior are more typical of SPEC

CPU-like workloads contrary to the instruction cache behavior ob-

served in prior works with other server-side applications (servers-

side Javascript applications [73] or memcached workloads [55]).

Note that we simulate an aggressive memory system with prefetchers

at every cache level. Although there are hundreds of leaf functions

in the execution time distribution of these PHP applications, they are

1The branch prediction accuracy observed on Intel server processors and TAGE are in

the same range [60]

compact enough that can be effectively cached in the L1. Besides,

the numerous data structures in these PHP applications do not appear

to stress the data cache heavily. The L2 cache has very low MPKI,

as the L1 filters out most of the cache references. Figure 2(a) shows

the potential of minor performance gain with very large instruction

caches.

In-order vs. out-of-order. Figure 2c shows the impact four

different architectures (2-wide in-order, 2-wide out-of-order (OoO),

4-wide OoO, and 8-wide OoO) had on workload execution time.

Changing from in-order to OoO cores shows a significant increase

in performance. We also note that the 4-wide OoO shows fairly

significant performance gains over the 2-wide OoO architecture,

hinting that some ILP exists in these workloads. However, increasing

to an 8-wide OoO machine shows very little (< 3%) performance

increase, hinting that ILP cannot be exploited for large performance

benefits beyond 4-wide OoO cores.

Overall, our analysis suggests that PHP applications require far

more BTB capacity and much larger caches than server cores cur-

rently provide to obtain even minor performance benefit. In short,

our analysis does not present any obvious potential target for mi-

croarchitectural optimizations.

3 MITIGATE ABSTRACTION OVERHEAD

As microarchitectural analysis fails to reveal any clear opportuni-

ties for improvement, we shift our focus towards augmenting the

base processor with specialized hardware accelerators. However,

function-level acceleration is not an appealing solution for these ap-

plications given their very flat execution profiles. Nevertheless, it is

commonly known that PHP-like scripting languages suffer from the

high abstraction overheads of managed languages. Overhead exam-

ples include dynamic type checks for primitive types, and hash table

accesses for user-defined types. Furthermore PHP, like all garbage

collected languages, suffers from the overhead of reference counting.

While there are many research proposals [22, 31, 32, 40] from the

academic community in mitigating each of these abstraction over-

heads separately, most of them have not been adopted so far by the

industry into commercial server processors. Considering the fact that

these abstraction overheads constitute a significant source of perfor-

mance and energy overhead in these PHP applications (as our results

indicate), we believe the industry is more likely to embrace these

0.850.870.890.910.930.950.970.9932KB64KB128KB512KBNormalized execution timeICache sizeWordPress4K BTB8K BTB16K BTB32K BTB64K BTB0510152025ICacheMPKIDCacheMPKIL2 MPKIMisses per kilo-instructions (MPKI)WordPressDrupalMediaWiki00.20.40.60.81WordPressDruaplMediaWikiNormalized Execution TimeInOrder(2 wide)OoO(2 wide)OoO(4 wide)OoO(8 wide)509�

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

D. Gope et al.

subsystem performs the required type check for a variable before

returning its value.

Reference Counting. Reference counting constitutes a major

source of overhead in these PHP applications as it is spread across

compiled code and many library functions. We adopted a hardware

proposal from prior work that introduces minimal changes to the

cache subsystem to mitigate this overhead [46].

In addition to applying the above four optimizations, we tuned

the applications to reduce their overhead from expensive memory

allocation and deallocation calls to the kernel.

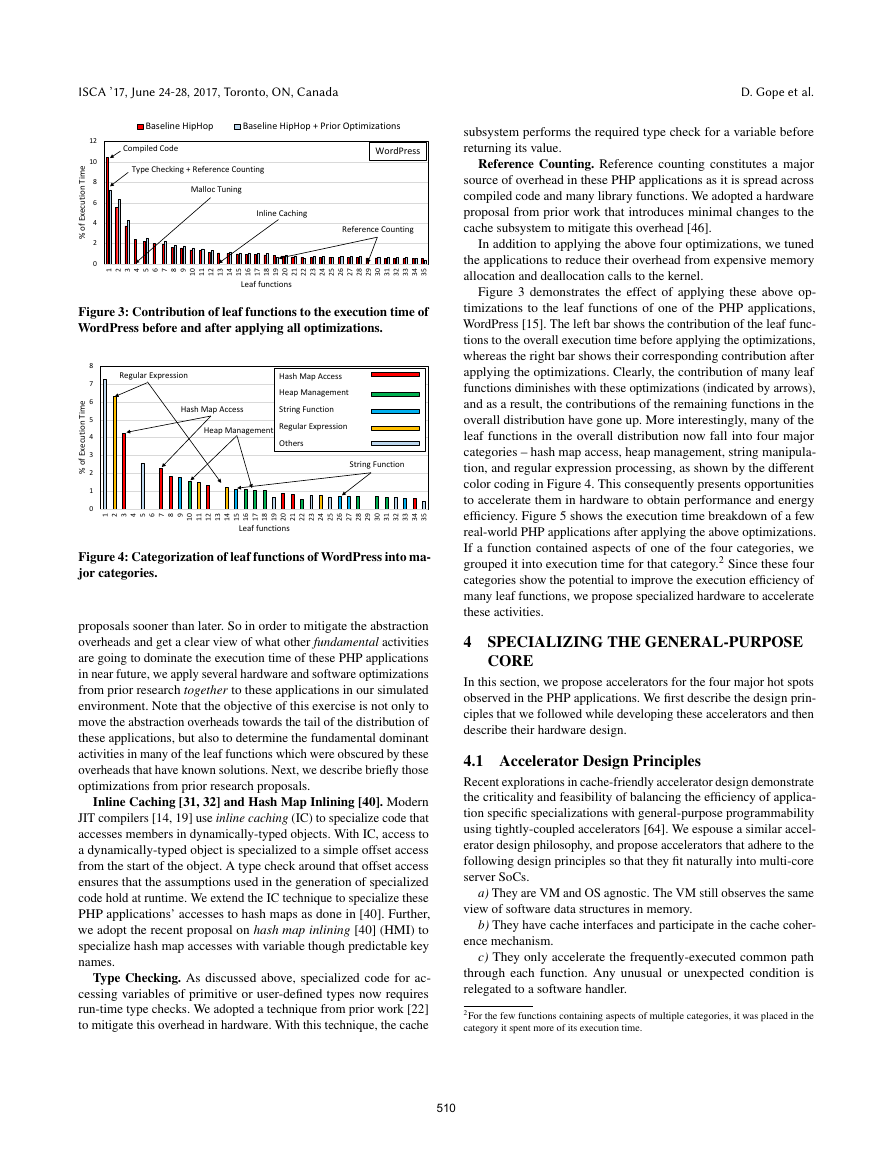

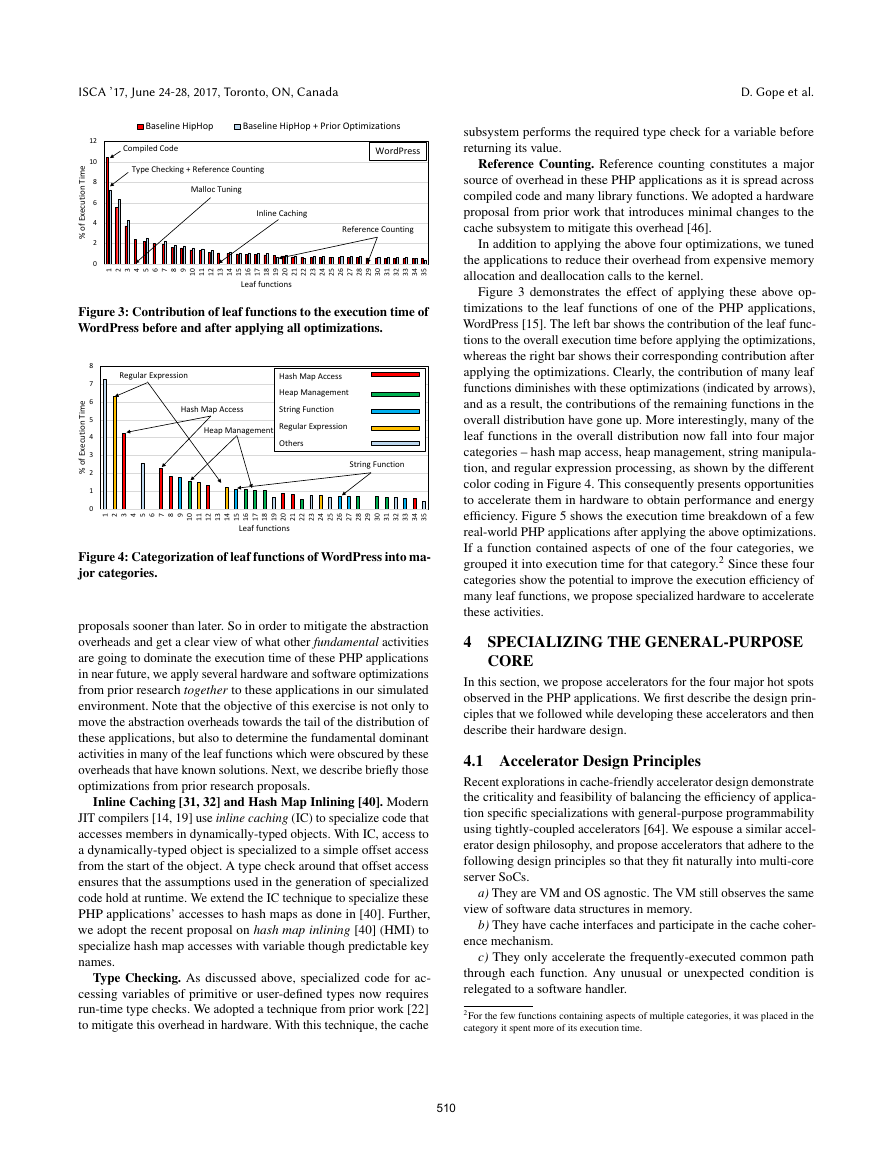

Figure 3 demonstrates the effect of applying these above op-

timizations to the leaf functions of one of the PHP applications,

WordPress [15]. The left bar shows the contribution of the leaf func-

tions to the overall execution time before applying the optimizations,

whereas the right bar shows their corresponding contribution after

applying the optimizations. Clearly, the contribution of many leaf

functions diminishes with these optimizations (indicated by arrows),

and as a result, the contributions of the remaining functions in the

overall distribution have gone up. More interestingly, many of the

leaf functions in the overall distribution now fall into four major

categories – hash map access, heap management, string manipula-

tion, and regular expression processing, as shown by the different

color coding in Figure 4. This consequently presents opportunities

to accelerate them in hardware to obtain performance and energy

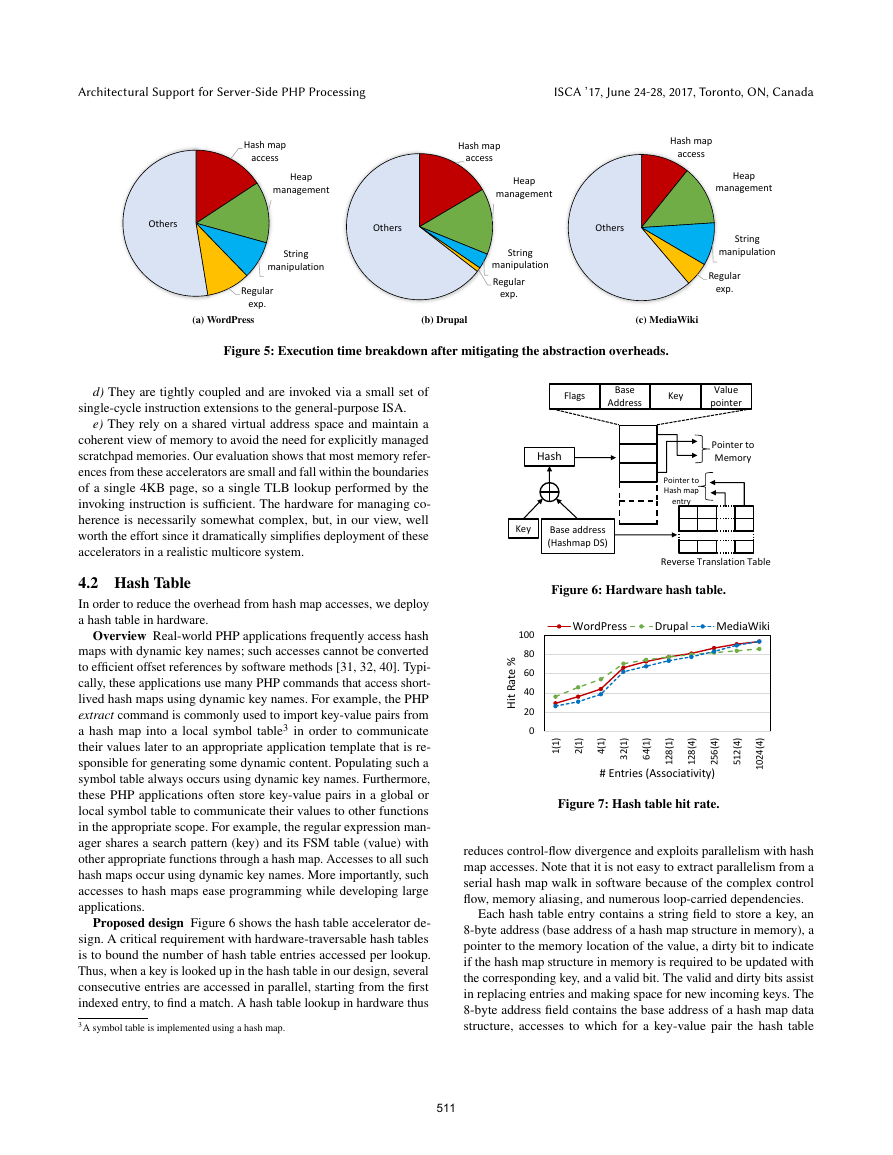

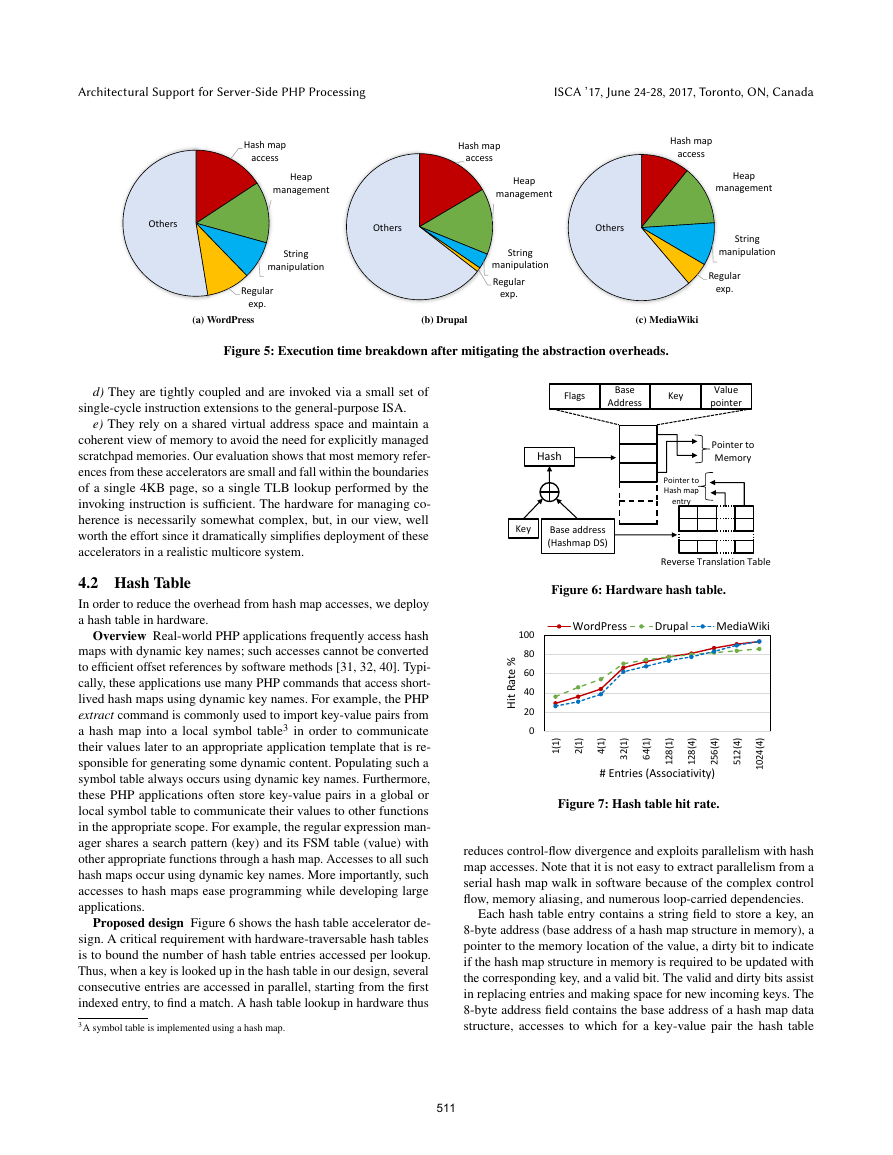

efficiency. Figure 5 shows the execution time breakdown of a few

real-world PHP applications after applying the above optimizations.

If a function contained aspects of one of the four categories, we

grouped it into execution time for that category.2 Since these four

categories show the potential to improve the execution efficiency of

many leaf functions, we propose specialized hardware to accelerate

these activities.

4 SPECIALIZING THE GENERAL-PURPOSE

CORE

In this section, we propose accelerators for the four major hot spots

observed in the PHP applications. We first describe the design prin-

ciples that we followed while developing these accelerators and then

describe their hardware design.

4.1 Accelerator Design Principles

Recent explorations in cache-friendly accelerator design demonstrate

the criticality and feasibility of balancing the efficiency of applica-

tion specific specializations with general-purpose programmability

using tightly-coupled accelerators [64]. We espouse a similar accel-

erator design philosophy, and propose accelerators that adhere to the

following design principles so that they fit naturally into multi-core

server SoCs.

a) They are VM and OS agnostic. The VM still observes the same

view of software data structures in memory.

b) They have cache interfaces and participate in the cache coher-

ence mechanism.

c) They only accelerate the frequently-executed common path

through each function. Any unusual or unexpected condition is

relegated to a software handler.

2For the few functions containing aspects of multiple categories, it was placed in the

category it spent more of its execution time.

Figure 3: Contribution of leaf functions to the execution time of

WordPress before and after applying all optimizations.

Figure 4: Categorization of leaf functions of WordPress into ma-

jor categories.

proposals sooner than later. So in order to mitigate the abstraction

overheads and get a clear view of what other fundamental activities

are going to dominate the execution time of these PHP applications

in near future, we apply several hardware and software optimizations

from prior research together to these applications in our simulated

environment. Note that the objective of this exercise is not only to

move the abstraction overheads towards the tail of the distribution of

these applications, but also to determine the fundamental dominant

activities in many of the leaf functions which were obscured by these

overheads that have known solutions. Next, we describe briefly those

optimizations from prior research proposals.

Inline Caching [31, 32] and Hash Map Inlining [40]. Modern

JIT compilers [14, 19] use inline caching (IC) to specialize code that

accesses members in dynamically-typed objects. With IC, access to

a dynamically-typed object is specialized to a simple offset access

from the start of the object. A type check around that offset access

ensures that the assumptions used in the generation of specialized

code hold at runtime. We extend the IC technique to specialize these

PHP applications’ accesses to hash maps as done in [40]. Further,

we adopt the recent proposal on hash map inlining [40] (HMI) to

specialize hash map accesses with variable though predictable key

names.

Type Checking. As discussed above, specialized code for ac-

cessing variables of primitive or user-defined types now requires

run-time type checks. We adopted a technique from prior work [22]

to mitigate this overhead in hardware. With this technique, the cache

0246810121234567891011121314151617181920212223242526272829303132333435% of Execution TimeLeaf functionsBaseline HipHopBaseline HipHop + Prior OptimizationsCompiledCodeType Checking + ReferenceCountingInlineCachingMallocTuningReferenceCountingWordPress0123456781234567891011121314151617181920212223242526272829303132333435% of Execution TimeLeaf functionsRegular ExpressionString FunctionHeap ManagementHash Map AccessHeap ManagementString FunctionRegular ExpressionOthersHash Map Access510�

Architectural Support for Server-Side PHP Processing

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

(a) WordPress

(b) Drupal

(c) MediaWiki

Figure 5: Execution time breakdown after mitigating the abstraction overheads.

d) They are tightly coupled and are invoked via a small set of

single-cycle instruction extensions to the general-purpose ISA.

e) They rely on a shared virtual address space and maintain a

coherent view of memory to avoid the need for explicitly managed

scratchpad memories. Our evaluation shows that most memory refer-

ences from these accelerators are small and fall within the boundaries

of a single 4KB page, so a single TLB lookup performed by the

invoking instruction is sufficient. The hardware for managing co-

herence is necessarily somewhat complex, but, in our view, well

worth the effort since it dramatically simplifies deployment of these

accelerators in a realistic multicore system.

4.2 Hash Table

In order to reduce the overhead from hash map accesses, we deploy

a hash table in hardware.

Overview Real-world PHP applications frequently access hash

maps with dynamic key names; such accesses cannot be converted

to efficient offset references by software methods [31, 32, 40]. Typi-

cally, these applications use many PHP commands that access short-

lived hash maps using dynamic key names. For example, the PHP

extract command is commonly used to import key-value pairs from

a hash map into a local symbol table3 in order to communicate

their values later to an appropriate application template that is re-

sponsible for generating some dynamic content. Populating such a

symbol table always occurs using dynamic key names. Furthermore,

these PHP applications often store key-value pairs in a global or

local symbol table to communicate their values to other functions

in the appropriate scope. For example, the regular expression man-

ager shares a search pattern (key) and its FSM table (value) with

other appropriate functions through a hash map. Accesses to all such

hash maps occur using dynamic key names. More importantly, such

accesses to hash maps ease programming while developing large

applications.

Proposed design Figure 6 shows the hash table accelerator de-

sign. A critical requirement with hardware-traversable hash tables

is to bound the number of hash table entries accessed per lookup.

Thus, when a key is looked up in the hash table in our design, several

consecutive entries are accessed in parallel, starting from the first

indexed entry, to find a match. A hash table lookup in hardware thus

3A symbol table is implemented using a hash map.

Figure 6: Hardware hash table.

Figure 7: Hash table hit rate.

reduces control-flow divergence and exploits parallelism with hash

map accesses. Note that it is not easy to extract parallelism from a

serial hash map walk in software because of the complex control

flow, memory aliasing, and numerous loop-carried dependencies.

Each hash table entry contains a string field to store a key, an

8-byte address (base address of a hash map structure in memory), a

pointer to the memory location of the value, a dirty bit to indicate

if the hash map structure in memory is required to be updated with

the corresponding key, and a valid bit. The valid and dirty bits assist

in replacing entries and making space for new incoming keys. The

8-byte address field contains the base address of a hash map data

structure, accesses to which for a key-value pair the hash table

Hash map accessHeap managementString manipulationRegular exp.OthersHash map accessHeap managementString manipulationRegular exp.OthersHash map accessHeap managementString manipulationRegular exp.OthersHashKeyBase address (HashmapDS)Base AddressKeyValue pointerFlagsPointer to MemoryPointer to Hash map entryReverse Translation Table0204060801001(1)2(1)4(1)32(1)64(1)128(1)128(4)256(4)512(4)1024(4)Hit Rate %# Entries (Associativity)WordPressDrupalMediaWiki511�

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

D. Gope et al.

attempts to provide a fast lookup. Thus, in response to a request, the

hash table performs a hash on the combined value of the key and

the base address of the requested hash map to index into an entry

and begin the lookup process. Starting with the entry, when a hash

table lookup is performed, each entry has its key and 8-byte base

address compared with the key and the base address of the request.

Upon a match, the hash table updates the entry’s last-access time

stamp (for LRU replacement) and sends the response to the request.

If no match is found, control falls back to the software to perform

the regular hash map access in memory.

Design considerations The HHVM JIT compiler [19] already

uses an efficient hash computation function that can operate on

variable length strings (in our case the combination of a hash map

base address and a key in it). However, we used a simplified hash

function for the hash table without compromising its hit rate. This is

because the HHVM hash function is overly complex to map into an

efficient hardware module and it requires many processor cycles to

compute a hash. Our design leverages several inherent characteristics

of these PHP applications.

First, in contrast to the most large-scale memcached deploy-

ments [23, 55] where the GET requests vastly outnumber the SET

and other request types, these PHP applications observe relatively

higher percentage of SET requests (ranging from 15− 25%) when

generating dynamic contents for web pages. As a result, a hash table

deployed for such applications should respond to both GET and SET

requests in order to take full advantage of the hardware hash table

and offload most operations associated with hash map accesses from

the core.

Second, the majority (about 95%) of the hash map keys accessed

in these PHP applications are at most 24 bytes in length. As a result,

we store the keys in the hash table itself, unlike the hash table

designed for memcached deployments [55]. Storing the keys directly

in the hash table eases the traversal of the hash table in hardware.

We next discuss typical operations offloaded from a traditional

software hash map to our hardware hash table. Note that the alloca-

tion or the deallocation of a hash map structure in memory is still

handled by software.

GET: A GET request attempts to retrieve a key-value pair of

the requested hash map from the hash table without any software

interaction. Upon a match, the hash table updates the entry’s last-

access time stamp. If the key is not found for the requested hash map,

control transfers to the software to retrieve the key-value pair from

memory and places it into the hash table. In order to make space for

the retrieved key, if an invalid entry is not found, then a clean entry

(dirty bit not set) is given more priority for replacement. This avoids

any costly software involvement associated with replacing a dirty

entry from the hardware hash table. The hash map of a dirty entry

is not up-to-date in memory with the key-value pair and therefore

requires software intervention in our design to be updated when the

entry is evicted. If none is found, the LRU dirty entry is replaced

with incurring the associated software cost.

SET: A SET request attempts to insert a key-value pair of the

requested hash map into the hash table without any software interac-

tion. Upon finding a match, SET simply updates the value pointer

and the entry’s last-access time stamp. If the key is not found, then

the key-value is inserted into the hash table and the entry’s dirty bit

is set. If an eviction is necessary (due to hash table overflow), the

same replacement policy as described for GET is followed. Note

that a SET operation silently updates the hash table in our design

without updating the memory. Figure 7 demonstrates the hit rate of

such a hash table. Even a hash table with only 256 entries observes a

high hit rate of about 80%. Since SET operations never miss in our

design, a hash table with very few entries (1, 2 or 4) shows such a

decent hit rate. Having support of a SET operation in hardware helps

in serving a major fraction of the short-lived hash map accesses from

the hash table.

Free: In response to deallocating a hash map from the memory,

the hardware hash table would (in a naive implementation) need to

scan the entire table to determine the set of entries belonging to the

requested hash map. The reverse translation table (RTT) in Figure 6

assists the hash table during this seemingly expensive operation.

The RTT is indexed by the base address of a requested hash map.

Each RTT entry stores back pointers to the set of hash table entries

containing key-value pairs of a hash map. Each RTT entry also has a

write pointer. The write pointer assists in adding these back-pointers

associated with different key-value pairs of a hash map into the RTT

entry in the same order as they are inserted into the hash table. As

a result, a SET operation adds a back pointer in a RTT entry using

the associated write pointer and increments it to point to the next

available position in the RTT entry. Consequently, each entry in the

RTT is implemented using a circular buffer. When an entry is evicted

from the hash table, its back pointer in the RTT is invalidated. Hence

in response to a Free request, the RTT invalidates the hash table

entries of the requested hash map. This way, short-lived hash maps

mostly stay in the hash table throughout their lifetime without ever

being written back to the memory.

Replacement: Replacement is handled by software as discussed

above.

foreach: The foreach array iterator in PHP iterates over the key-

value pairs of a hash map in their order of insertion. The RTT assists

in performing this operation. It captures the order of insertion and

later updates the memory of the hash map using it in response to a

foreach command. Even if an inserted key-value pair is evicted from

the hash table and re-inserted later, the RTT can still guarantee the

required insertion order invariant.

Ensure coherence Each hash map in the hardware hash table

has an equivalent software hash map laid out in the conventional

address space. The two are kept coherent by enforcing a writeback

policy from the hardware hash map, and by requiring inclusion of the

software equivalent at the L2 level. When a hash map is first inserted

into the hardware hash table, the accelerator acquires exclusive

coherence permission to the entire address range (depending on the

size of the hash map this could be several cache lines). If remote

coherence requests arrive for any hardware hash map entries, they

are forwarded via the RTT to the accelerator, which flushes out any

entries corresponding to the address range of the hash map (the same

thing happens for L2 evictions to enforce inclusion). In practice, the

hash maps we target are small (requiring a handful of cache lines),

process private, and exhibit a lot of temporal locality (small hash

maps are freed and reallocated from the process-local heap), so there

is virtually no coherence activity due to the hash map accelerator.

The software hash map stores each key/value pair in a table ordered

512�

Architectural Support for Server-Side PHP Processing

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

(a) Cumulative memory usage with different alloca-

tion slabs.

(b) Memory usage of WordPress.

(c) Memory usage of MediaWiki.

Figure 8: Memory usage pattern of the PHP applications.

based on insertion, and also stores a pointer to that table in a hash

table for fast lookup. The hardware hash table only writes back to

the former table while flushing out entries, and marks a flag in the

software hash map to indicate that the hash table of the software

hash map is now stale. Subsequent software accesses to the software

hash map check this flag and reconstruct the hash table if the flag is

set. This is exceedingly rare in practice (triggered only by process

migration), so the reconstruction mechanism is necessary only for

correctness.

4.3 Heap Manager

Memory allocations and deallocations are ubiquitous in real-world

PHP applications. They consume a substantial fraction of the execu-

tion time and are spread across many leaf functions. To handle dy-

namic memory management, the VM typically uses the well-known

slab allocation technique. In slab allocation, the VM allocates a

large chunk of memory and breaks it up into smaller segments of a

fixed size according to the slab class’s size and stores the pointer to

those segments in the associated free list.

Overview We propose a heap manager with its most frequently

accessed components in hardware to improve its performance and

energy-efficiency. Our hardware heap manager is motivated by the

unique memory usage pattern of these PHP applications.

First, a majority of the allocation and deallocation requests re-

trieve at most 128 bytes, which reflects heavy usage of small memory

objects. Figure 8a shows the cumulative distribution of memory us-

age with different memory allocation slabs.

Second, these applications exhibit strong memory reuse. Such

strong memory reuse is due to the following reasons: (a) these

applications insert many HTML tags while generating dynamic

contents for web pages. HTML tags are the keywords within a

web page that define how the browser must format and display the

content. Generating and formatting these HTML tags often require

retrieving many attribute values from the database, storing them in

string objects and later concatenating those values to form the overall

formatted tag. Once a HTML tag is produced with all its required

attributes, the memory associated with these strings are recycled. (b)

These applications typically process large volumes of textual data,

URL etc. Such processing commonly parses the content through

many string functions and regular expressions with frequent memory

allocations and deallocations to hold the string contents. To illustrate

Figure 9: Hardware heap manager.

this, Figure 8b and Figure 8c show memory usage pattern of two

workloads during the course of their execution. We see that memory

usage stays flat for the four smallest slabs of 0−32, 32−64, 64−96,

and 96− 128 bytes, demonstrating their strong memory reuse for

the slabs that dominate the total memory usage.

Proposed design We deploy a heap manager with only its size

class table and a few free lists in hardware to capture the heavy

memory usage of small objects and their strong memory reuse. Fig-

ure 9 shows the high-level block diagram. The comparator limits

the maximum size of a memory allocation request that the hardware

heap manager can satisfy. The size class table chooses an appropri-

ate free list for an incoming request depending on its request size.

Typically, a memory allocation request accesses the size class table

and retrieves an available memory block from the chosen hardware

free list without any software involvement. The free list for each size

class has head and tail pointers to orchestrate allocation and deallo-

cation of memory blocks. The core uses the head pointer for push

and pop requests, and the prefetcher pushes to the location of the tail

pointer. Upon finding a miss in the hardware free list, control trans-

fers to software to satisfy the memory allocation request. At the start

of a program, upon finding a miss for an available memory block in

the hardware free list, the runtime retrieves memory blocks from the

software heap manager and places it into the appropriate hardware

free list. A prefetcher ensures that the free lists stay populated with

available memory blocks so that a request for memory allocation

can hide the latency of software involvement whenever possible. We

use a pointer-based prefetcher to prefetch the next available memory

blocks from the software heap manager structure.

0204060801000-32 B32-64 B64-96 B96-128 B128-256 B256-512 B> 512 BCDF %Allocation SlabsWordPressDrupalMediaWiki050100150200250300Memory Usage (Bytes)ThousandsProgram Execution0-32 B32-64 B64-96 B96-128 B128-256 B256-512 B> 512 BSmaller Slabs05001000150020002500300035004000Memory Usage (Bytes)ThousandsProgram Execution0-32 B32-64 B64-96 B96-128 B128-256 B256-512 B> 512 BSmaller SlabsPrefetcherComparator>>Size Class TableSet zero flagFreelist513�

ISCA ’17, June 24-28, 2017, Toronto, ON, Canada

D. Gope et al.

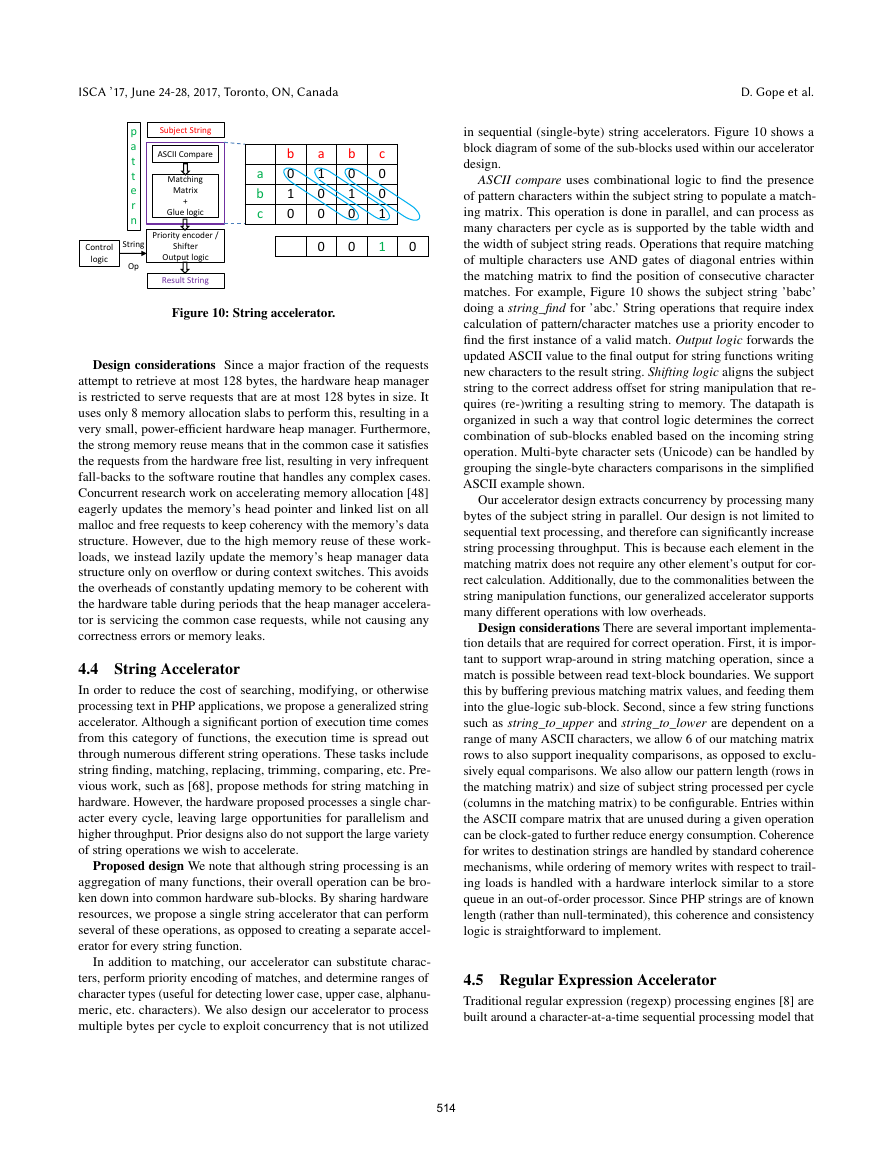

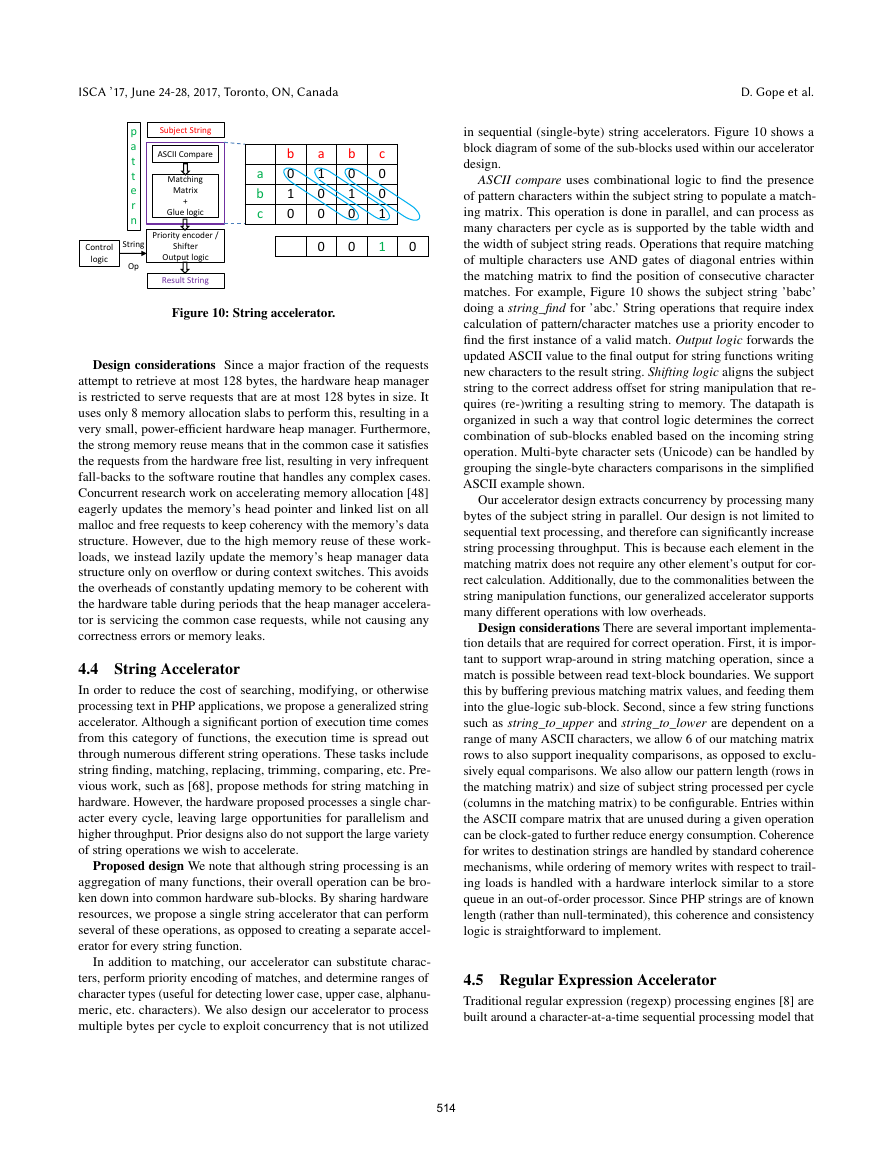

in sequential (single-byte) string accelerators. Figure 10 shows a

block diagram of some of the sub-blocks used within our accelerator

design.

ASCII compare uses combinational logic to find the presence

of pattern characters within the subject string to populate a match-

ing matrix. This operation is done in parallel, and can process as

many characters per cycle as is supported by the table width and

the width of subject string reads. Operations that require matching

of multiple characters use AND gates of diagonal entries within

the matching matrix to find the position of consecutive character

matches. For example, Figure 10 shows the subject string ’babc’

doing a string_find for ’abc.’ String operations that require index

calculation of pattern/character matches use a priority encoder to

find the first instance of a valid match. Output logic forwards the

updated ASCII value to the final output for string functions writing

new characters to the result string. Shifting logic aligns the subject

string to the correct address offset for string manipulation that re-

quires (re-)writing a resulting string to memory. The datapath is

organized in such a way that control logic determines the correct

combination of sub-blocks enabled based on the incoming string

operation. Multi-byte character sets (Unicode) can be handled by

grouping the single-byte characters comparisons in the simplified

ASCII example shown.

Our accelerator design extracts concurrency by processing many

bytes of the subject string in parallel. Our design is not limited to

sequential text processing, and therefore can significantly increase

string processing throughput. This is because each element in the

matching matrix does not require any other element’s output for cor-

rect calculation. Additionally, due to the commonalities between the

string manipulation functions, our generalized accelerator supports

many different operations with low overheads.

Design considerations There are several important implementa-

tion details that are required for correct operation. First, it is impor-

tant to support wrap-around in string matching operation, since a

match is possible between read text-block boundaries. We support

this by buffering previous matching matrix values, and feeding them

into the glue-logic sub-block. Second, since a few string functions

such as string_to_upper and string_to_lower are dependent on a

range of many ASCII characters, we allow 6 of our matching matrix

rows to also support inequality comparisons, as opposed to exclu-

sively equal comparisons. We also allow our pattern length (rows in

the matching matrix) and size of subject string processed per cycle

(columns in the matching matrix) to be configurable. Entries within

the ASCII compare matrix that are unused during a given operation

can be clock-gated to further reduce energy consumption. Coherence

for writes to destination strings are handled by standard coherence

mechanisms, while ordering of memory writes with respect to trail-

ing loads is handled with a hardware interlock similar to a store

queue in an out-of-order processor. Since PHP strings are of known

length (rather than null-terminated), this coherence and consistency

logic is straightforward to implement.

4.5 Regular Expression Accelerator

Traditional regular expression (regexp) processing engines [8] are

built around a character-at-a-time sequential processing model that

Figure 10: String accelerator.

Design considerations Since a major fraction of the requests

attempt to retrieve at most 128 bytes, the hardware heap manager

is restricted to serve requests that are at most 128 bytes in size. It

uses only 8 memory allocation slabs to perform this, resulting in a

very small, power-efficient hardware heap manager. Furthermore,

the strong memory reuse means that in the common case it satisfies

the requests from the hardware free list, resulting in very infrequent

fall-backs to the software routine that handles any complex cases.

Concurrent research work on accelerating memory allocation [48]

eagerly updates the memory’s head pointer and linked list on all

malloc and free requests to keep coherency with the memory’s data

structure. However, due to the high memory reuse of these work-

loads, we instead lazily update the memory’s heap manager data

structure only on overflow or during context switches. This avoids

the overheads of constantly updating memory to be coherent with

the hardware table during periods that the heap manager accelera-

tor is servicing the common case requests, while not causing any

correctness errors or memory leaks.

4.4 String Accelerator

In order to reduce the cost of searching, modifying, or otherwise

processing text in PHP applications, we propose a generalized string

accelerator. Although a significant portion of execution time comes

from this category of functions, the execution time is spread out

through numerous different string operations. These tasks include

string finding, matching, replacing, trimming, comparing, etc. Pre-

vious work, such as [68], propose methods for string matching in

hardware. However, the hardware proposed processes a single char-

acter every cycle, leaving large opportunities for parallelism and

higher throughput. Prior designs also do not support the large variety

of string operations we wish to accelerate.

Proposed design We note that although string processing is an

aggregation of many functions, their overall operation can be bro-

ken down into common hardware sub-blocks. By sharing hardware

resources, we propose a single string accelerator that can perform

several of these operations, as opposed to creating a separate accel-

erator for every string function.

In addition to matching, our accelerator can substitute charac-

ters, perform priority encoding of matches, and determine ranges of

character types (useful for detecting lower case, upper case, alphanu-

meric, etc. characters). We also design our accelerator to process

multiple bytes per cycle to exploit concurrency that is not utilized

babca0100b1010c00010010StringOpControl logicpatternSubject StringASCII CompareMatchingMatrix+Glue logicResult StringPriority encoder / Shifter Output logic514�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc