C H A P T E R

Parallel Computation

Parallelism takes many forms and appears in many guises. It is exhibited at the CPU level when

microinstructions are executed simultaneously. It is also present when an arithmetic or logic

operation is realized by a circuit of small depth, as with carry-save addition. And it is present

when multiple computers are connected together in a network. Parallelism can be available but

go unused, either because an application was not designed to exploit parallelism or because a

problem is inherently serial.

In this chapter we examine a number of explicitly parallel models of computation, includ-

ing shared and distributed memory models and, in particular, linear and multidimensional

arrays, hypercube-based machines, and the PRAM model. We give a broad introduction to

a large and representative set of models, describing a handful of good parallel programming

techniques and showing through analysis the limits on parallelization. Because of the limited

use so far of parallel algorithms and machines, the wide range of hardware and software models

developed by the research community has not yet been fully digested by the computer industry.

Parallelism in logic and algebraic circuits is also examined in Chapters 2 and 6. The block

I/O model, which characterizes parallelism at the disk level, is presented in Section 11.6 and

the classification of problems by their execution time on parallel machines is discussed in Sec-

tion 8.15.2.

281

�

282

Chapter 7 Parallel Computation

Models of Computation

7.1 Parallel Computational Models

A parallel computer is any computer that can perform more than one operation at time.

By this definition almost every computer is a parallel computer. For example, in the pursuit

of speed, computer architects regularly perform multiple operations in each CPU cycle: they

execute several microinstructions per cycle and overlap input and output operations (I/O) (see

Chapter 11) with arithmetic and logical operations. Architects also design parallel computers

that are either several CPU and memory units attached to a common bus or a collection of

computers connected together via a network. Clearly parallelism is common in computer

science today.

However, several decades of research have shown that exploiting large-scale parallelism is

very hard. Standard algorithmic techniques and their corresponding data structures do not

parallelize well, necessitating the development of new methods. In addition, when parallelism

is sought through the undisciplined coordination of a large number of tasks, the sheer number

of simultaneous activities to which one human mind must attend can be so large that it is

often difficult to insure correctness of a program design. The problems of parallelism are

indeed daunting.

Small illustrations of this point are seen in Section 2.7.1, which presents an O(log n)-step,

O(n)-gate addition circuit that is considerably more complex than the ripple adder given in

Section 2.7. Similarly, the fast matrix inversion straight-line algorithm of Section 6.5.5 is more

complex than other such algorithms (see Section 6.5).

In this chapter we examine forms of parallelism that are more coarse-grained than is typ-

ically found in circuits. We assume that a parallel computer consists of multiple processors

and memories but that each processor is primarily serial. That is, although a processor may

realize its instructions with parallel circuits, it typically executes only one or a small number of

instructions simultaneously. Thus, most of the parallelism exhibited by our parallel computer

is due to parallel execution by its processors.

We also describe a few programming styles that encourage a parallel style of programming

and offer promise for user acceptance. Finally, we present various methods of analysis that

have proven useful in either determining the parallel time needed for a problem or classifying

a problem according to its need for parallel time.

Given the doubling of CPU speed every two or three years, one may ask whether we can’t

just wait until CPU performance catches up with demand. Unfortunately, the appetite for

speed grows faster than increases in CPU speed alone can meet. Today many problems, es-

pecially those involving simulation of physical systems, require teraflop computers (those per-

forming 1012 floating-point operations per second (FLOPS)) but it is predicted that petaflop

computers (performing 1015 FLOPS) are needed. Achieving such high levels of performance

with a handful of CPUs may require CPU performance beyond what is physically possible at

reasonable prices.

7.2 Memoryless Parallel Computers

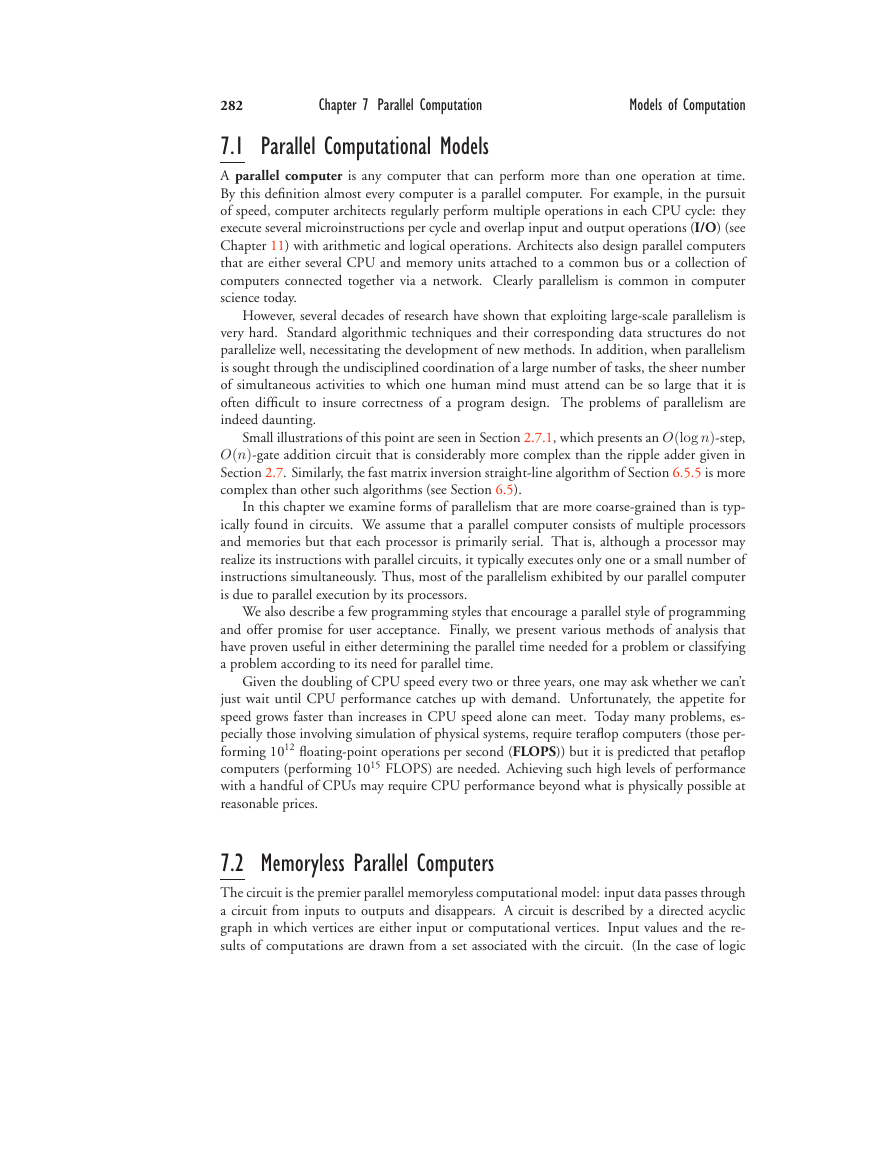

The circuit is the premier parallel memoryless computational model: input data passes through

a circuit from inputs to outputs and disappears. A circuit is described by a directed acyclic

graph in which vertices are either input or computational vertices. Input values and the re-

sults of computations are drawn from a set associated with the circuit. (In the case of logic

�

c!John E Savage

7.3 Parallel Computers with Memory

283

9

f+, ω0

10

11

f+, ω2

f+, ω1

f+, ω3

12

cj+1

sj

gj

pj

vj

uj

cj

5

f+, ω0

6

f+, ω2

7

f+, ω2

f+, ω0

1

2

3

a0

a2

a1

a3

8

4

(a)

(b)

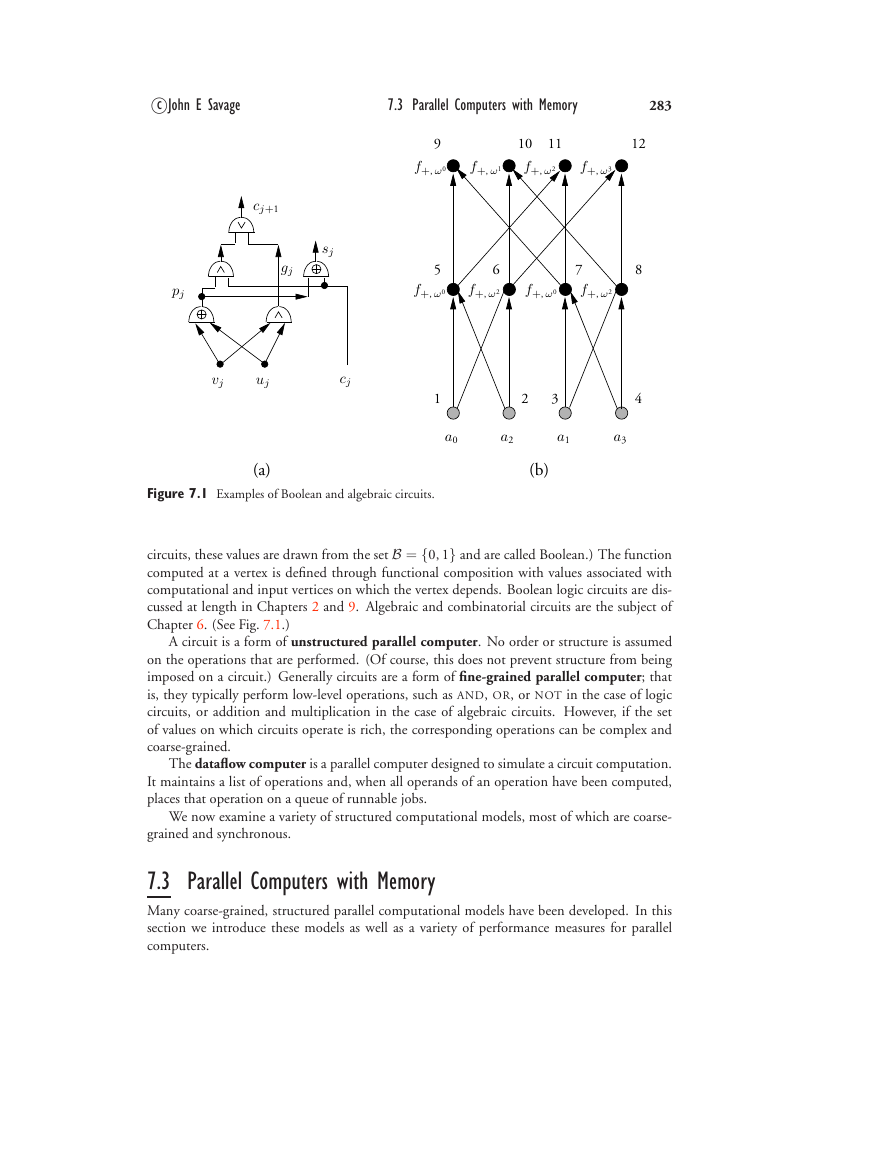

Figure 7.1 Examples of Boolean and algebraic circuits.

circuits, these values are drawn from the set B = {0, 1} and are called Boolean.) The function

computed at a vertex is defined through functional composition with values associated with

computational and input vertices on which the vertex depends. Boolean logic circuits are dis-

cussed at length in Chapters 2 and 9. Algebraic and combinatorial circuits are the subject of

Chapter 6. (See Fig. 7.1.)

A circuit is a form of unstructured parallel computer. No order or structure is assumed

on the operations that are performed. (Of course, this does not prevent structure from being

imposed on a circuit.) Generally circuits are a form of fine-grained parallel computer; that

is, they typically perform low-level operations, such as AND, OR, or NOT in the case of logic

circuits, or addition and multiplication in the case of algebraic circuits. However, if the set

of values on which circuits operate is rich, the corresponding operations can be complex and

coarse-grained.

The dataflow computer is a parallel computer designed to simulate a circuit computation.

It maintains a list of operations and, when all operands of an operation have been computed,

places that operation on a queue of runnable jobs.

We now examine a variety of structured computational models, most of which are coarse-

grained and synchronous.

7.3 Parallel Computers with Memory

Many coarse-grained, structured parallel computational models have been developed. In this

section we introduce these models as well as a variety of performance measures for parallel

computers.

�

284

Chapter 7 Parallel Computation

Models of Computation

There are many ways to characterize parallel computers. A fine-grained parallel computer

is one in which the focus is on its constituent components, which themselves consist of low-

level entities such as logic gates and binary memory cells. A coarse-grained parallel computer

is one in which we ignore the low-level components of the computer and focus instead on its

functioning at a high level. A complex circuit, such as a carry-lookahead adder, whose details

are ignored is a single coarse-grained unit, whereas one whose details are studied explicitly is

fine-grained. CPUs and large memory units are generally viewed as coarse-grained.

A parallel computer is a collection of interconnected processors (CPUs or memories). The

processors and the media used to connect them constitute a network. If the processors are

in close physical proximity and can communicate quickly, we often say that they are tightly

coupled and call the machine a parallel computer rather than a computer network. How-

ever, when the processors are not in close proximity or when their operating systems require a

large amount of time to exchange messages, we say that they are loosely coupled and call the

machine a computer network.

Unless a problem is trivially parallel, it must be possible to exchange messages between

processors. A variety of low-level mechanisms are generally available for this purpose. The use

of software for the exchange of potentially long messages is called message passing. In a tightly

coupled parallel computer, messages are prepared, sent, and received quickly relative to the

clock speed of its processors, but in a loosely coupled parallel computer, the time required for

these steps is much larger. The time Tm to transmit a message from one processor to another

is generally assumed to be of the form Tm = α + lβ, where l is the length of the message in

words, α (latency) is the time to set up a communication channel, and β (bandwidth) is the

time to send and receive one word. Both α and β are constant multiples of the duration of

the CPU clock cycle of the processors. Thus, α + β is the time to prepare, send, and receive

a single-word message. In a tightly coupled machine α and β are small, whereas in a loosely

coupled machine α is large.

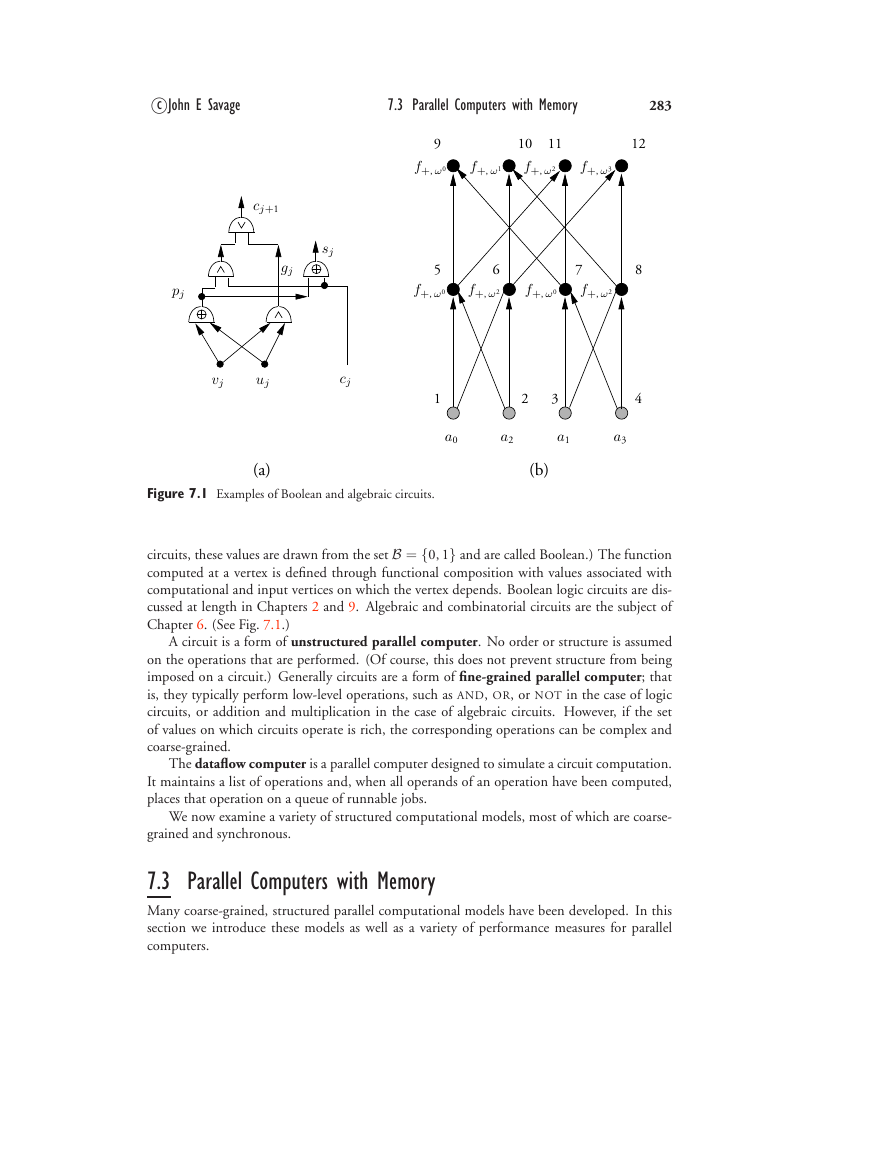

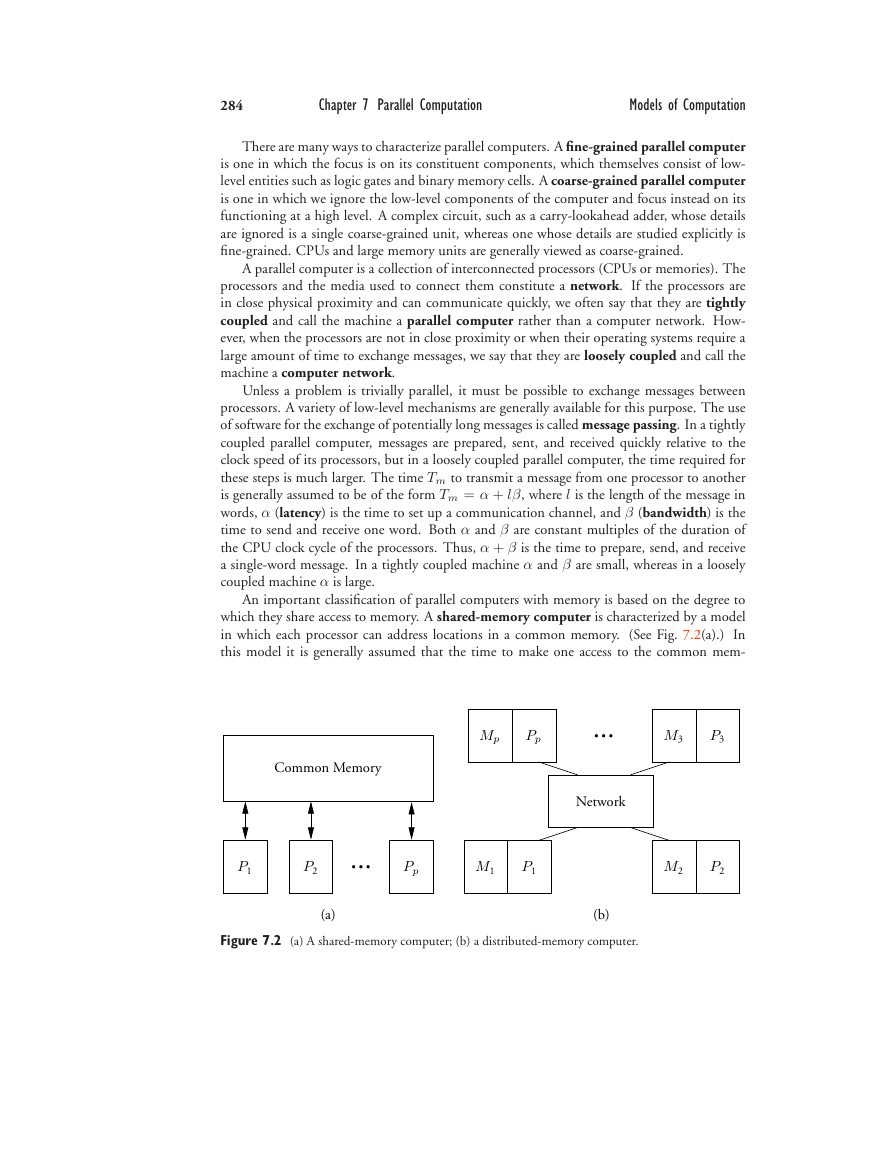

An important classification of parallel computers with memory is based on the degree to

which they share access to memory. A shared-memory computer is characterized by a model

in which each processor can address locations in a common memory. (See Fig. 7.2(a).) In

this model it is generally assumed that the time to make one access to the common mem-

Mp

Pp

...

M3

P3

Common Memory

Network

P1

P2

...

Pp

M1

P1

M2

P2

(a)

(b)

Figure 7.2 (a) A shared-memory computer; (b) a distributed-memory computer.

�

c!John E Savage

7.3 Parallel Computers with Memory

285

ory is relatively close to the time for a processor to access one of its registers. Processors in a

shared-memory computer can communicate with one another via the common memory. The

distributed-memory computer is characterized by a model in which processors can commu-

nicate with other processors only by sending messages. (See Fig. 7.2(b).) In this model it is

generally assumed that processors also have local memories and that the time to send a message

from one processor to another can be large relative to the time to access a local memory. A third

type of computer, a cross between the first two, is the distributed shared-memory computer.

It is realized on a distributed-memory computer on which the time to process messages is large

relative to the time to access a local memory, but a layer of software gives the programmer the

illusion of a shared-memory computer. Such a model is useful when programs can be executed

primarily from local memories and only occasionally must access remote memories.

Parallel computers are synchronous if all processors perform operations in lockstep and

asynchronous otherwise. A synchronous parallel machine may alternate between executing

instructions and reading from local or common memory.

(See the PRAM model of Sec-

tion 7.9, which is a synchronous, shared-memory model.) Although a synchronous parallel

computational model is useful in conveying concepts, in many situations, as with loosely cou-

pled distributed computers, it is not a realistic one. In other situations, such as in the design

of VLSI chips, it is realistic. (See, for example, the discussion of systolic arrays in Section 7.5.)

7.3.1 Flynn’s Taxonomy

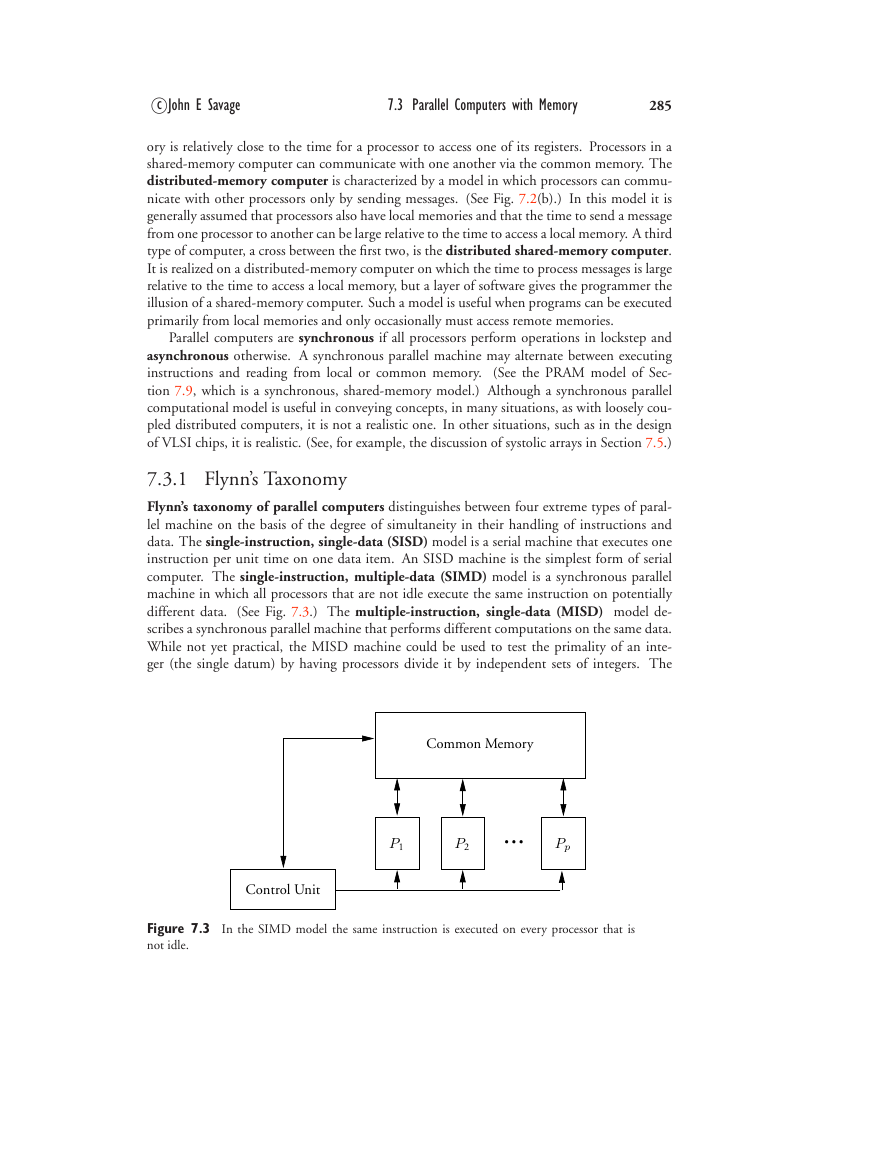

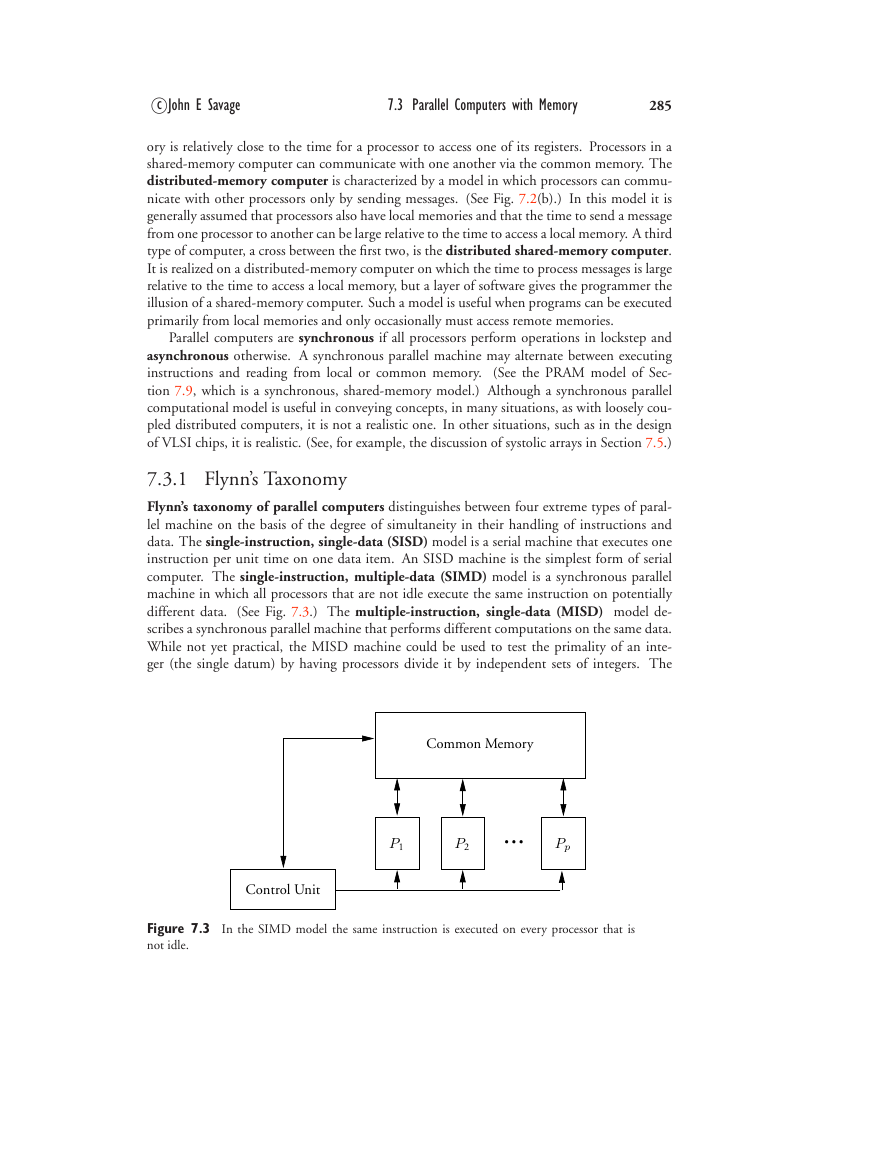

Flynn’s taxonomy of parallel computers distinguishes between four extreme types of paral-

lel machine on the basis of the degree of simultaneity in their handling of instructions and

data. The single-instruction, single-data (SISD) model is a serial machine that executes one

instruction per unit time on one data item. An SISD machine is the simplest form of serial

computer. The single-instruction, multiple-data (SIMD) model is a synchronous parallel

machine in which all processors that are not idle execute the same instruction on potentially

different data.

(See Fig. 7.3.) The multiple-instruction, single-data (MISD) model de-

scribes a synchronous parallel machine that performs different computations on the same data.

While not yet practical, the MISD machine could be used to test the primality of an inte-

ger (the single datum) by having processors divide it by independent sets of integers. The

Common Memory

P1

P2

...

Pp

Control Unit

Figure 7.3 In the SIMD model the same instruction is executed on every processor that is

not idle.

�

286

Chapter 7 Parallel Computation

Models of Computation

multiple-instruction, multiple-data (MIMD) model describes a parallel machine that runs

a potentially different program on potentially different data on each processor but can send

messages among processors.

The SIMD machine is generally designed to have a single instruction decoder unit that

controls the action of each processor, as suggested in Fig. 7.3. SIMD machines have not been a

commercial success because they require specialized processors rather than today’s commodity

processors that benefit from economies of scale. As a result, most parallel machines today are

MIMD. Nonetheless, the SIMD style of programming remains appealing because programs

having a single thread of control are much easier to code and debug. In addition, a MIMD

model, the more common parallel model in use today, can be programmed in a SIMD style.

While the MIMD model is often assumed to be much more powerful than the SIMD

one, we now show that the former can be converted to the latter with at most a constant

factor slowdown in execution time. Let K be the maximum number of different instructions

executable by a MIMD machine and index them with integers in the set {1, 2, 3, . . . , K}.

Slow down the computation of each machine by a factor K as follows: 1) identify time intervals

of length K, 2) on the kth step of the jth interval, execute the kth instruction of a processor if

this is the instruction that it would have performed on the jth step of the original computation.

Otherwise, let the processor be idle by executing its NOOP instruction. This construction

executes the instructions of a MIMD computation in a SIMD fashion (all processors either

are idle or execute the instruction with the same index) with a slowdown by a factor K in

execution time.

Although for most machines this simulation is impractical, it does demonstrate that in the

best case a SIMD program is at worst a constant factor slower than the corresponding MIMD

program for the same problem. It offers hope that the much simpler SIMD programming style

can be made close in performance to the more difficult MIMD style.

7.3.2 The Data-Parallel Model

The data-parallel model captures the essential features of the SIMD style. It has a single

thread of control in which serial and parallel operations are intermixed. The parallel opera-

tions possible typically include vector and shifting operations (see Section 2.5.1), prefix and

segmented prefix computations (see Sections 2.6), and data-movement operations such as are

realized by a permutation network (see Section 7.8.1). They also include conditional vector

operations, vector operations that are performed on those vector components for which the

corresponding component of an auxiliary flag vector has value 1 (others have value 0).

Figure 7.4 shows a data-parallel program for radix sort. This program sorts n d-bit inte-

gers, {x[n], . . . , x[1]}, represented in binary. The program makes d passes over the integers.

On each pass the program reorders the integers, placing those whose jth least significant bit

(lsb) is 1 ahead of those for which it is 0. This reordering is stable; that is, the previous or-

dering among integers with the same jth lsb is retained. After the jth pass, the n integers are

sorted according to their j least significant bits, so that after d passes the list is fully sorted.

The prefix function P (n)

+ computes the running sum of the jth lsb on the jth pass. Thus, for

k such that x[k]j = 1 (0), bk (ck) is the number of integers with index k or higher whose

jth lsb is 1 (0). The value of ak = bkx[k]j + (ck + b1)x[k]j is bk or ck + b1, depending on

whether the lsb of x[k] is 1 or 0, respectively. That is, ak is the index of the location in which

the kth integer is placed after the jth pass.

�

7.3 Parallel Computers with Memory

c!John E Savage

{ x[n]j is the jth least significant bit of the nth integer. }

{ After the jth pass, the integers are sorted by their j least significant bits. }

{ Upon completion, the kth location contains the kth largest integer. }

for j := 0 to d − 1

begin

287

(bn, . . . , b1) := P (n)

+ (x[n]j, . . . , x[1]j);

{ bk is the number of 1’s among x[n]j, . . . , x[k]j. }

{ b1 is the number of integers whose jth bit is 1. }

+ (x[n]j, . . . , x[1]j);

{ ck is the number of 0’s among x[n]j, . . ., x[k]j. }

(cn, . . . , c1) := P (n)

(an, . . . , a1) :=!bnx[n]j + (cn + b1)x[n]j, . . . , b1x[1]j + (c1 + b1)x[1]j";

{ ak = bkx[k]j + (ck + b1)x[k]j is the rank of the kth key. }

(x[n + 1 − an], x[n + 1 − an−1], . . . , x[n + 1 − a1]) := (x[n], x[n − 1], . . . , x[1])

{ This operation permutes the integers. }

end

Figure 7.4 A data-parallel radix sorting program to sort n d-bit binary integers that makes two

uses of the prefix function P (n)

+ .

The data-parallel model is often implemented using the single-program multiple-data

(SPMD) model. This model allows copies of one program to run on multiple processors with

potentially different data without requiring that the copies run in synchrony. It also allows

the copies to synchronize themselves periodically for the transfer of data. A convenient ab-

straction often used in the data-parallel model that translates nicely to the SPMD model is the

assumption that a collection of virtual processors is available, one per vector component. An

operating system then maps these virtual processors to physical ones. This method is effective

when there are many more virtual processors than real ones so that the time for interprocessor

communication is amortized.

7.3.3 Networked Computers

A networked computer consists of a collection of processors with direct connections between

them. In this context a processor is a CPU with memory or a sequential machine designed

to route messages between processors. The graph of a network has a vertex associated with

each processor and an edge between two connected processors. Properties of the graph of a

network, such as its size (number of vertices), its diameter (the largest number of edges on

the shortest path between two vertices), and its bisection width (the smallest number of edges

between a subgraph and its complement, both of which have about the same size) characterize

its computational performance. Since a transmission over an edge of a network introduces

delay, the diameter of a network graph is a crude measure of the worst-case time to transmit

�

288

Chapter 7 Parallel Computation

Models of Computation

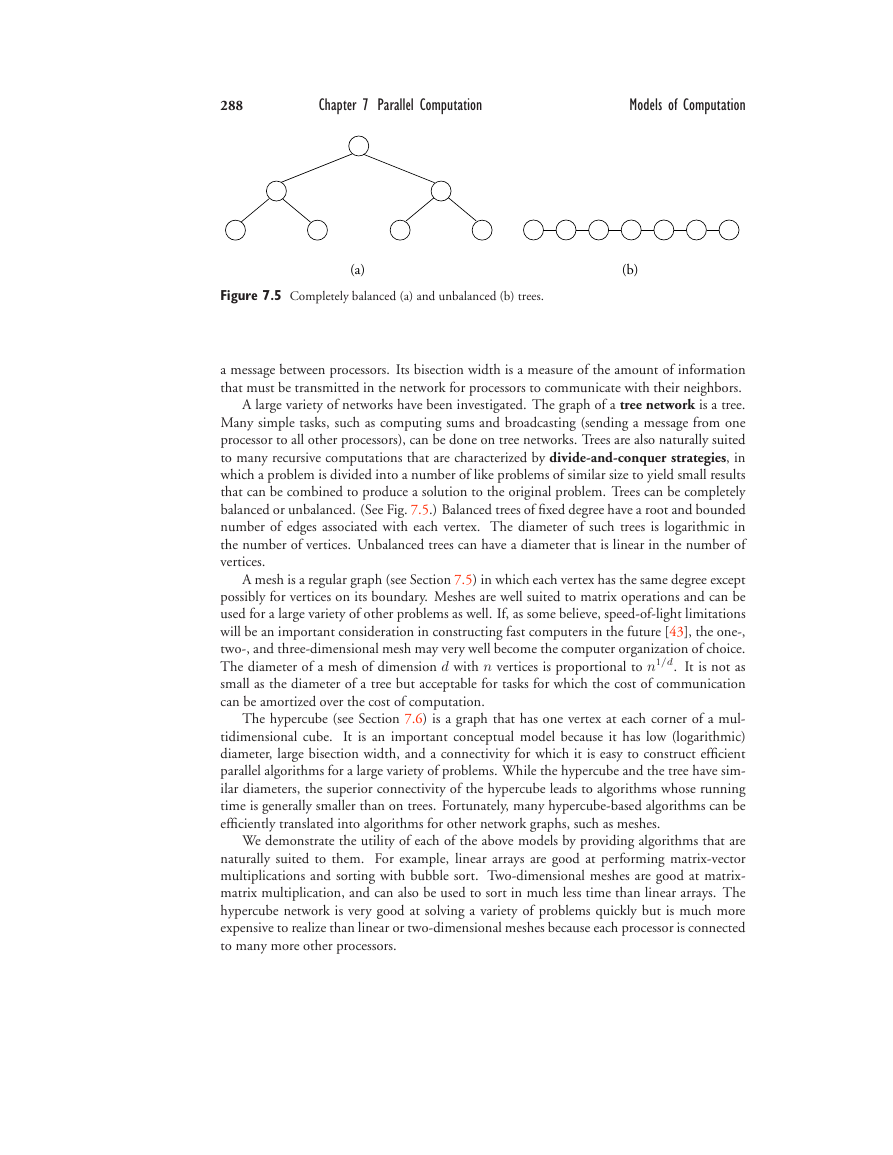

(a)

(b)

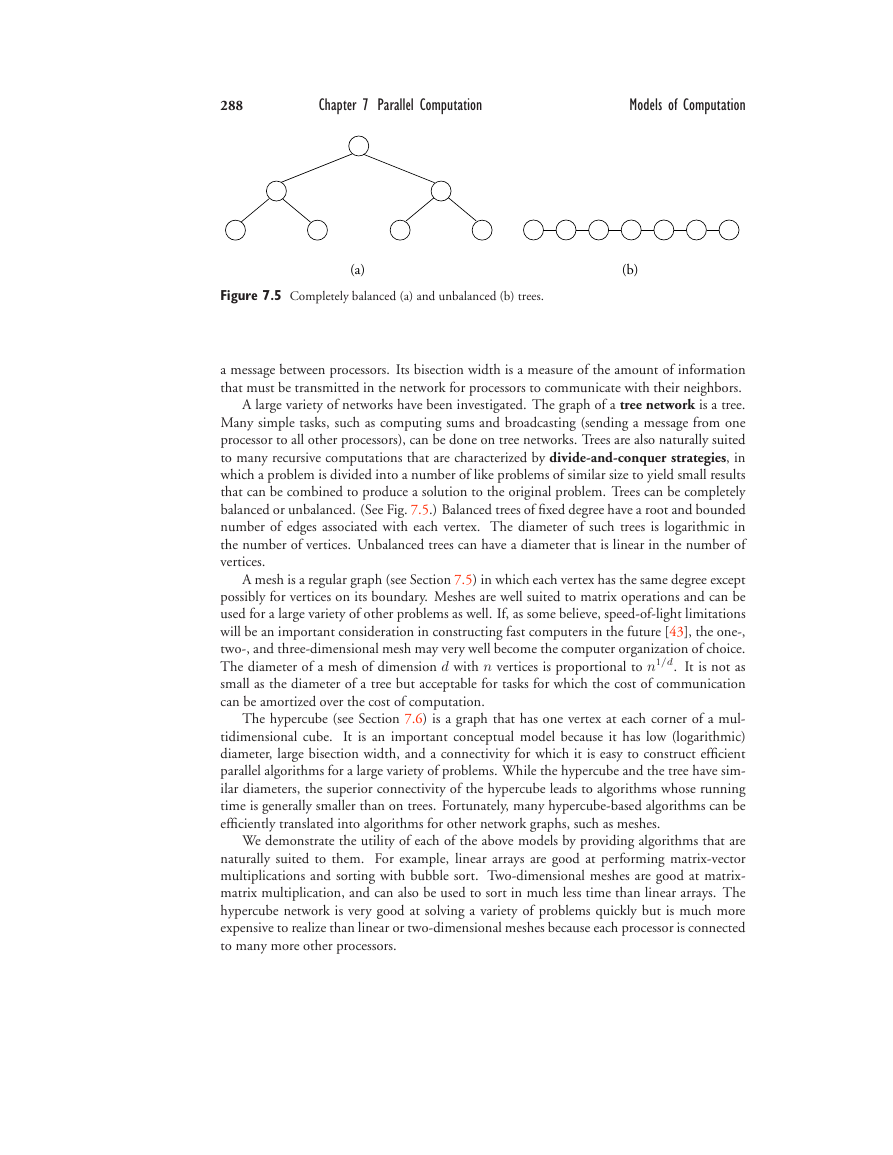

Figure 7.5 Completely balanced (a) and unbalanced (b) trees.

a message between processors. Its bisection width is a measure of the amount of information

that must be transmitted in the network for processors to communicate with their neighbors.

A large variety of networks have been investigated. The graph of a tree network is a tree.

Many simple tasks, such as computing sums and broadcasting (sending a message from one

processor to all other processors), can be done on tree networks. Trees are also naturally suited

to many recursive computations that are characterized by divide-and-conquer strategies, in

which a problem is divided into a number of like problems of similar size to yield small results

that can be combined to produce a solution to the original problem. Trees can be completely

balanced or unbalanced. (See Fig. 7.5.) Balanced trees of fixed degree have a root and bounded

number of edges associated with each vertex. The diameter of such trees is logarithmic in

the number of vertices. Unbalanced trees can have a diameter that is linear in the number of

vertices.

A mesh is a regular graph (see Section 7.5) in which each vertex has the same degree except

possibly for vertices on its boundary. Meshes are well suited to matrix operations and can be

used for a large variety of other problems as well. If, as some believe, speed-of-light limitations

will be an important consideration in constructing fast computers in the future [43], the one-,

two-, and three-dimensional mesh may very well become the computer organization of choice.

The diameter of a mesh of dimension d with n vertices is proportional to n1/d. It is not as

small as the diameter of a tree but acceptable for tasks for which the cost of communication

can be amortized over the cost of computation.

The hypercube (see Section 7.6) is a graph that has one vertex at each corner of a mul-

tidimensional cube.

It is an important conceptual model because it has low (logarithmic)

diameter, large bisection width, and a connectivity for which it is easy to construct efficient

parallel algorithms for a large variety of problems. While the hypercube and the tree have sim-

ilar diameters, the superior connectivity of the hypercube leads to algorithms whose running

time is generally smaller than on trees. Fortunately, many hypercube-based algorithms can be

efficiently translated into algorithms for other network graphs, such as meshes.

We demonstrate the utility of each of the above models by providing algorithms that are

naturally suited to them. For example, linear arrays are good at performing matrix-vector

multiplications and sorting with bubble sort. Two-dimensional meshes are good at matrix-

matrix multiplication, and can also be used to sort in much less time than linear arrays. The

hypercube network is very good at solving a variety of problems quickly but is much more

expensive to realize than linear or two-dimensional meshes because each processor is connected

to many more other processors.

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc