I Background

Introduction

The scarcity of labels

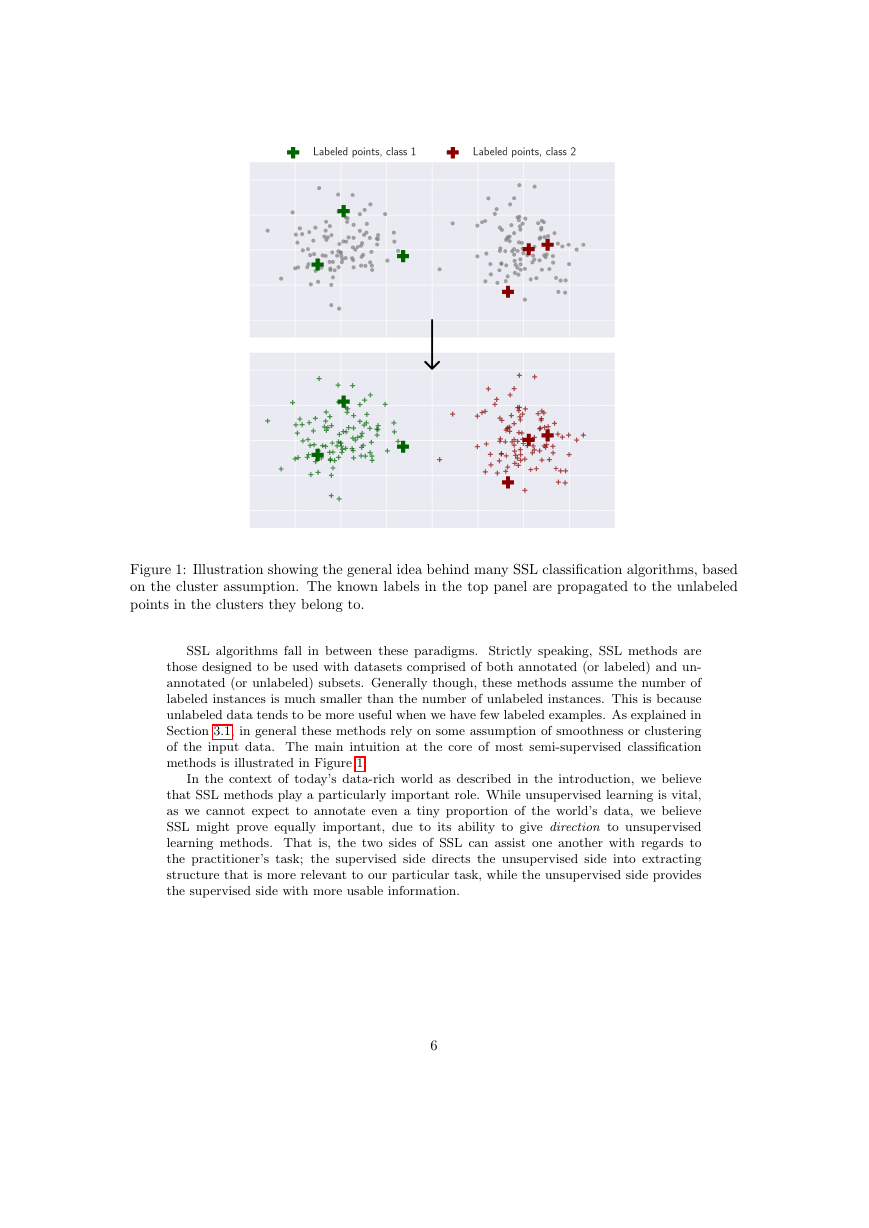

Semi-supervised learning

Overview and contributions

Overview

Contributions

Assumptions and approaches that enable semi-supervised learning

Assumptions required for semi-supervised learning

Smoothness assumption

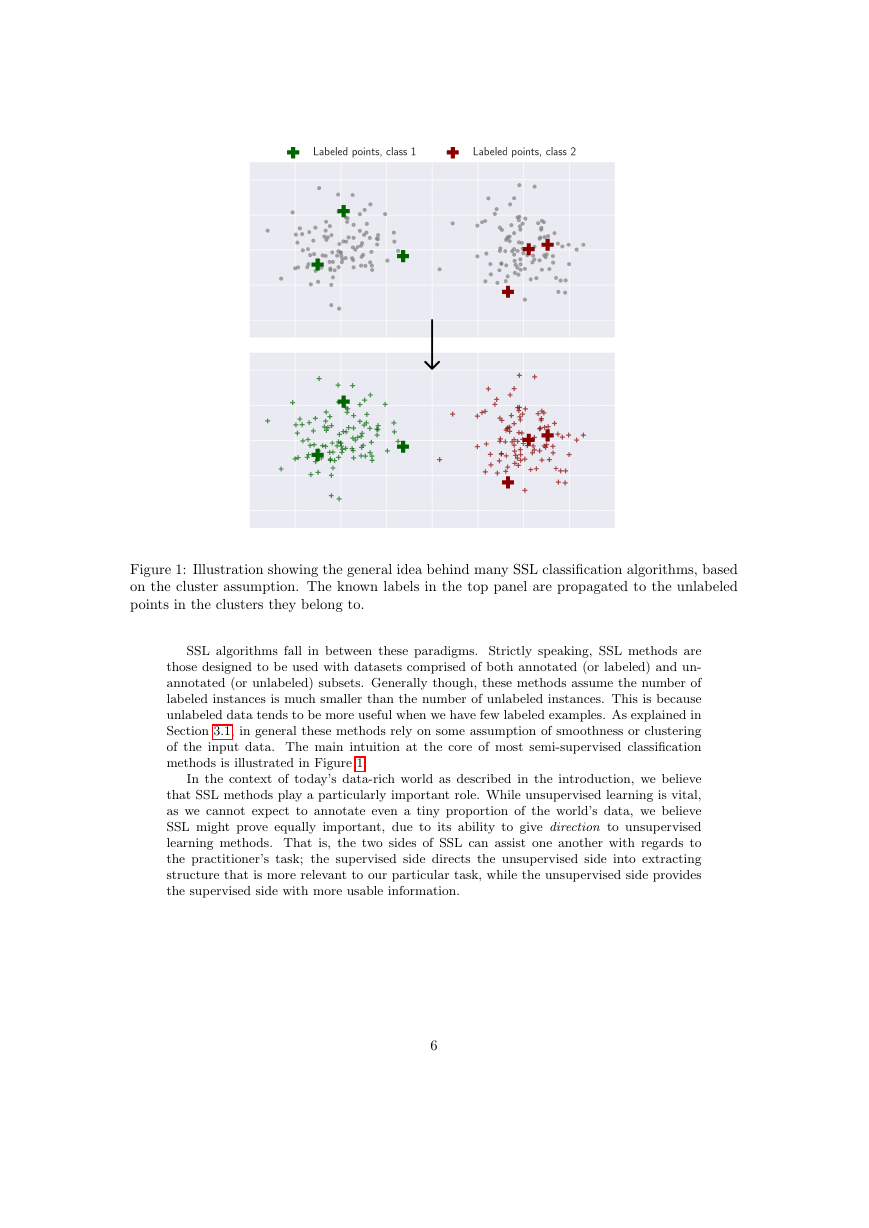

Cluster assumption

Low-density separation

Existence of a discoverable manifold

Classes of semi-supervised learning algorithms

Methods based on generative models

Methods based on low-density separation

Graph-based methods

Methods based on a change of representation

II Related Work

Review of Semi-Supervised Learning Literature

Semi-supervised learning before the deep learning era

Semi-supervised deep learning

Autoencoder-based approaches

Regularisation and data augmentation-based approaches

Other approaches

Semi-supervised generative adversarial networks

Generative adversarial networks

Approaches by which generative adversarial networks can be used for semi-supervised learning

Model focus: Good Semi-Supervised Learning that Requires a Bad GAN

Shannon's entropy and its relation to decision boundaries

CatGAN

Improved GAN

The Improved GAN SSL Model

BadGAN

Implications of the BadGAN model

III Analysis and experiments

Enforcing low-density separation

Approaches to low-density separation based on entropy

Synthetic experiments used in this section

Using entropy to incorporate the low-density separation assumption into our model

Taking advantage of a known prior class distribution

Generating data in low-density regions of the input space

Viewing entropy maximisation as minimising a Kullback-Leibler divergence

Theoretical evaluation of different entropy-related loss functions

Similarity of Improved GAN and CatGAN approaches

The CatGAN and Reverse KL approaches may be 'forgetful'

Another approach: removing the (K+1)th class's constraint in the Improved GAN formulation

Summary

Experiments with alternative loss functions on synthetic datasets

Research questions and hypotheses

Which loss function formulation is best?

Can the PixelCNN++ model be replaced by some other density estimate?

Discriminator from a pre-trained generative adversarial network

Pre-trained denoising autoencoder

Do the generated examples actually contribute to feature learning?

Is VAT or InfoReg really complementary to BadGAN?

Experiments

PI-MNIST-100

Experimental setup

Effectiveness of different proxies for entropy

Potential for replacing PixelCNN++ model with a DAE or discriminator from a GAN

Extent to which generated images contribute to feature learning

Complementarity of VAT and BadGAN

SVHN-1k

Experimental setup

Effectiveness of different proxies for entropy

Potential for replacing PixelCNN++ model with a DAE or discriminator from a GAN

Hypotheses as to why our BadGAN implementation does not perform well on SVHN-1k

Experiments summary

Conclusions, practical recommendations and suggestions for future work

IV Appendices

Information regularisation for neural networks

Derivation

Intuition and experiments on synthetic datasets

FastInfoReg: overcoming InfoReg's speed issues

Performance on PI-MNIST-100

Viewing entropy minimisation as a KL divergence minimisation problem

References
