Learning Detection with Diverse Proposals

Samaneh Azadi1, Jiashi Feng2, and Trevor Darrell1

1University of California, Berkeley, 2National University of Singapore

{sazadi,trevor}@eecs.berkeley.edu

elefjia@nus.edu.sg

Abstract

To predict a set of diverse and informative proposals with

enriched representations, this paper introduces a differen-

tiable Determinantal Point Process (DPP) layer that is able

to augment the object detection architectures. Most modern

object detection architectures, such as Faster R-CNN, learn

to localize objects by minimizing deviations from the ground

truth, but ignore correlation “between” multiple proposals

and object categories. Non-Maximum Suppression (NMS) as

a widely used proposal pruning scheme ignores label- and

instance-level relations between object candidates resulting

in multi-labeled detections. In the multi-class case, NMS

selects boxes with the largest prediction scores ignoring the

semantic relation between the categories of potential selections.

In contrast, our trainable DPP layer, allowing for Learning

Detection with Diverse Proposals (LDDP), considers both

label-level contextual information and spatial layout rela-

tionships between proposals without increasing the number

of parameters of the network, and thus improves location

and category specifications of final detected bounding boxes

substantially during both training and inference schemes.

Furthermore, we show that LDDP keeps its superiority over Faster R-CNN even if the number of proposals generated by LDDP is only ∼30% of the number generated by Faster R-CNN.

1. Introduction

Image classification [9] and object detection [17, 7] have

been improved significantly by development of deep convo-

lutional networks [9, 11]. However, object detection is still

more challenging than image classification as it aims at both

localizing and classifying objects. Accurate localization of

objects in each image requires both well-processed “can-

didate” object locations and “selected refined” boxes with

precise locations. Looking at the object detection problem as

an extractive image summarization and representation task,

the set of all predicted bounding boxes per image should be

as informative and non-repetitive as possible.

Figure 1: Potential proposals as output from the region pro-

posal network. There are many overlapping boxes on each

object of the image whose prediction scores and location

offsets are updated similarly in the Faster R-CNN network:

the deviation of “all” proposals from their corresponding

ground truth should be minimized. However, the overlap-

ping correlation between these proposals is ignored while

training the model. We increase the probability of selecting

the most representative boxes, shown in red, resulting in

more diverse final detections.

The Region-based Convolutional Network methods such

as Fast and Faster R-CNN [7, 6, 16] proposed an efficient

approach for object proposal classification and localization

with a multi-task loss function during training. The training

process in such methods contains a fine-tuning stage, which

jointly optimizes a softmax classifier and a bounding-box

regressor. Such a bounding box regressor tries to minimize

the distance between the candidate object proposals with

their corresponding ground-truth boxes for each category of

objects. However, it does not consider relation “between”

boxes in terms of location and context while learning a rep-

resentation model. In this paper, we propose a new loss layer

added to the other two softmax classifier and bounding-box

regressor layers (all included in the multi-task loss for train-

ing the deep model) which formulates the discriminative

contextual information as well as mutual relation between

boxes into a Determinantal Point Process (DPP) [10] loss

function. This DPP loss finds a subset of diverse bound-

ing boxes using the outputs of the other two loss functions

(namely, the probability of each proposal to belong to each

object category as well as the location information of the

proposals) and will reinforce them in finding more accurate

object instances in the end, as illustrated in Figure 1. We

employ our DPP loss to maximize the likelihood of an accu-

rate selection given the pool of overlapping background and

non-background boxes over multiple categories.

Inference in state-of-the-art detection methods [18, 6,

16, 14] is generally based on Non-Maximum Suppression

(NMS), which considers only the overlap between candidate

boxes per class label and ignores their semantic relationship.

We propose a DPP inference scheme to select a set of non-

repetitive high-quality boxes per image taking into account

spatial layout, category-level analogy between proposals, as

well as their quality score obtained from the trained deep model. We call our proposed model the “Learning Detection with Diverse Proposals Network” (LDDP-Net).

Our proposed loss function for representation enhance-

ment and more accurate inference can be applied on any

deep network architecture for object detection. In our exper-

iments below we focus on the Faster R-CNN model to show

the significant performance improvement added by our DPP

model. We demonstrate the effect of our proposed DPP loss

layer in accurate object localization during training as well

as inference on the benchmark detection data sets PASCAL

VOC and MS COCO based on average precision and average

recall detection metrics.

To sum up, we make the following contributions in this work:

• We propose to explicitly pursue diversity on generated

object proposals and introduce the strategy of learning

detection with diverse proposals.

• We introduce a DPP layer that is able to maximize diversity in an end-to-end trainable way. In addition, it is compatible with many existing state-of-the-art detection architectures and is thus able to augment them effectively.

• Experiments on Pascal VOC and MS COCO data sets

clearly demonstrate the superiority of diverse proposals

and effectiveness of our proposed method on producing

diverse detections.

LDDP code is available at https://github.com/azadis/LDDP.

2. End-to-End LDDP Model

Faster R-CNN [16] as a unified deep convolutional frame-

work for generating and refining region proposals alternates

between fine-tuning for proposals using a fully convolutional

Region Proposal Network (RPN) and fine-tuning for object

detection by Fast R-CNN model. Keeping the object pro-

posals generated from RPN fixed, they will be mapped into

convolutional features through several convolutional and

max-pooling layers. An RoI pooling layer converts the fea-

tures inside each region of interest (RoI) into a fixed-length

feature vector afterwards, which will be fed into a sequence

of fully connected layers.

The loss function on the top layer of detection model is

a multi-task loss dealing with both classification and local-

ization of object proposals: the softmax loss layer outputs a

discrete probability distribution over the K + 1 categories

of objects in addition to the background for each object

proposal, and the bounding box regressor layer determines

location offsets per object proposal for all categories.

Applying a diversity-ignited model can reinforce the net-

work to limit the boxes around each object while they have

minimum overlap with other object bounding boxes in the

image. It will also “select” boxes in such a way to make

their collection as informative as possible given the mini-

mum possible number of proposals. We define such a model

through a DPP loss layer added to the other two loss func-

tions introduced in the Faster R-CNN architecture.

On the other hand, inference in Region-based CNN mod-

els as well as other state-of-the-art networks is done through

NMS which selects boxes with highest detection scores for

each category. Giving a priority to the detection scores,

it might finally end up in selecting overlapping detection

boxes and miss the best possible set of non-overlapping ones

with acceptable score. Besides, NMS neglects the semantic

relations between categories as its selection is done category-

wisely. We address all such problems through a probabilistic

DPP inference scheme which jointly considers all spatial

layout, contextual information, and semantic similarities be-

tween object candidates and selects the best probable ones.

In the following section, we define our learn-able DPP loss

layer, show how to back-propagate through this layer while

training the model (summarized in Alg. 1), and then clar-

ify how to infer the best non-overlapping boxes from the

predicted proposals.

2.1. Learning with Diverse Proposals

Determinantal Point Processes (DPPs) are natural models

for diverse subset selection problems [10]. In the selection

problem, there is a trade-off between two influential metrics:

The selected subset of items, or in other words their summary,

should be “representative” and cover significant amount of

information from the whole set. Besides, the information

should be passed “efficiently” through this selection; the

selection should be diverse and non-repetitive. We briefly

explain a determinantal point process here and refer the

readers to [10] for an in-depth discussion.

Algorithm 1 LDDP Learning

Input: Set X : {i : i ∈ mini-batch}; {b_i^c, t_i^c : ∀i ∈ X, c = 0, · · · , K}: box probability and offset values
Output: Loss L, ∂L/∂b_i^c : ∀i ∈ X

  X_s ← subset of X including non-background proposals with high overlap with gt boxes
  Φ_i ← IoU_{i,gt_i}  ∀i ∈ X_s
  Y ← Apply Alg. 2 on X_s
  B ← background proposals as defined in § 2.1.2
  Φ_i ← Eq. (3), (4)  ∀i ∈ X
  L ← Eq. (1), (2)
  ∂L/∂b_i^c ← Eq. (5), (6)  ∀i ∈ X, c ∈ {0, · · · , K}
  return L, ∂L/∂b_i^c

2.1.1 Determinantal Point Process

A point process P on a discrete set X = {x1, · · · , xN } is

a probability measure on the set of all subsets of X. P is

called a determinantal point process (DPP) if:

$$
P_L(\mathbf{Y} = Y) = \frac{\det(L_Y)}{\det(L + I)}
$$

where I is an identity matrix, L is the kernel matrix, and Y

is a random subset of X . The positive semi-definite kernel

matrix L indexed by elements of Y models diversity among

items: the marginal probability of inclusion of each single

item is proportional to the diagonal values of the kernel

matrix L, while correlation between each pair of items is

proportional to the off-diagonal values of the kernel. As a

result, subsets with higher diversity measured by the kernel

have higher likelihood.
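The effect of the kernel on subset probabilities can be checked numerically. The following is a minimal sketch, not the authors' code: the 3×3 kernel is a made-up toy in which items 0 and 1 are near-duplicates and item 2 is unrelated, and the helper names `det`, `submatrix` and `dpp_probability` are hypothetical.

```python
from itertools import combinations


def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    n = len(m)
    if n == 0:
        return 1.0  # determinant of the empty matrix, used for the empty subset
    a = [list(map(float, row)) for row in m]
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result


def submatrix(L, idx):
    """Restrict the kernel L to the rows and columns listed in idx."""
    return [[L[i][j] for j in idx] for i in idx]


def dpp_probability(L, subset):
    """P(Y = subset) = det(L_Y) / det(L + I)."""
    n = len(L)
    L_plus_I = [[L[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
                for i in range(n)]
    return det(submatrix(L, sorted(subset))) / det(L_plus_I)


# Toy kernel (assumed values): items 0 and 1 are highly similar, item 2 is distinct.
L = [[1.0, 0.9, 0.0],
     [0.9, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

p_similar = dpp_probability(L, {0, 1})   # two near-duplicate items
p_diverse = dpp_probability(L, {0, 2})   # two unrelated items
total = sum(dpp_probability(L, set(s))
            for k in range(len(L) + 1)
            for s in combinations(range(len(L)), k))
```

In this toy case the diverse pair receives a higher probability than the near-duplicate pair, and the probabilities of all subsets sum to one, which is why det(L + I) acts as the normalizer.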

In the object detection scenario, items are indeed the

set of proposals in the image produced by the region pro-

posal network. Given the bounding box probability scores

of softmax loss layer and location offsets from the bound-

ing box regressor layer for the given set of proposals for

image i, X^i, we seek a precise and diverse set of boxes, Y^i. In other words, the probability of selecting informative, non-redundant boxes given the whole set of background and non-background proposals should be maximized during training. Simultaneously, the probability of selecting background boxes, denoted by B^i, should be minimized. We employ a learnable determinantal point process layer by maximizing the log-likelihood of the training set and minimizing the log-likelihood of selecting background proposals:

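$$
\mathcal{L}(\alpha) = \log \prod_i \frac{P_\alpha(Y^i \mid X^i)}{P_\alpha(B^i \mid X^i)}
= \sum_i \Big[ \log P_\alpha(Y^i \mid X^i) - \log P_\alpha(B^i \mid X^i) \Big]
\tag{1}
$$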

where α refers to the parameters of the deep network.

Algorithm 2 LDDP Inference

Input: Set X: set of proposals and their prediction scores and box offsets; T: fixed threshold
Output: Set Y: non-overlapping representative proposals

  Y ← ∅, Y′ ← X
  while len(Y) < #Dets and Y′ ≠ ∅ do
    cost(i) ← max_j S_ij
    k ← arg max_{i ∈ Y′} P_α(Y ∪ i | X)
    if cost(k) < T then
      Y ← Y ∪ k
    end if
    Y′ ← Y′ \ k
  end while
  return Y
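The greedy selection of Algorithm 2 can be sketched in a few lines of Python. This is an illustrative reading of the pseudocode, not the released implementation, and it rests on two assumptions. First, cost(k) is taken as the largest similarity S_kj between candidate k and the boxes already selected. Second, since det(L + I) is constant, maximizing P_α(Y ∪ i | X) is done by maximizing det(L_{Y ∪ i}). The function names and the toy scores are hypothetical.

```python
import math


def det(m):
    """Laplace expansion; adequate only for the small toy matrices used here."""
    if not m:
        return 1.0
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def greedy_dpp_inference(phi, S, max_dets, threshold):
    """Greedy MAP selection with kernel L_ij = sqrt(phi_i) * S_ij * sqrt(phi_j)."""
    n = len(phi)
    L = [[math.sqrt(phi[i]) * S[i][j] * math.sqrt(phi[j]) for j in range(n)]
         for i in range(n)]
    selected = []                 # Y
    remaining = set(range(n))     # Y'
    while len(selected) < max_dets and remaining:
        def gain(i):
            idx = selected + [i]
            return det([[L[a][b] for b in idx] for a in idx])
        # Candidate that maximizes det(L_{Y ∪ i}); ties go to the lowest index.
        k = max(sorted(remaining), key=gain)
        # Assumed cost: highest similarity to any box that is already selected.
        cost = max((S[k][j] for j in selected), default=0.0)
        if cost < threshold:
            selected.append(k)
        remaining.discard(k)
    return selected


# Toy example (assumed values): boxes 0 and 1 overlap heavily, box 2 is isolated.
S = [[1.0, 0.8, 0.0],
     [0.8, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
phi = [0.9, 0.8, 0.6]
picked = greedy_dpp_inference(phi, S, max_dets=3, threshold=0.5)
```

Here box 1 has a higher score than box 2 but is dropped because it is too similar to the already selected box 0, so the result keeps boxes 0 and 2.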

For simplicity, we assume that number of images per

iteration is one and thus, remove index i from our notations.

We follow the same mini-batch setting as in Faster R-CNN

network [16] where m is the number of object proposals in

each iteration or the size of mini-batch.

Given a list of object proposals as output of the RPN

network, X, a posterior probability Pα(Y |X) modeled as a

determinantal point process would imply which boxes should

be selected with a high probability:

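$$
\begin{aligned}
P_\alpha(\mathbf{Y} = Y \mid X) &= \frac{\det(L_Y)}{\det(L + I)}, \\
L_{i,j} &= \Phi_i^{1/2}\, S_{ij}\, \Phi_j^{1/2}, \\
S_{ij} &= \mathrm{IoU}_{ij} \times \mathrm{sim}_{ij}, \\
\mathrm{IoU}_{ij} &= \frac{A_i \cap A_j}{A_i \cup A_j}, \\
\mathrm{sim}_{ij} &= \frac{2\, IC(lcs(C_i, C_j))}{IC(C_i) + IC(C_j)}.
\end{aligned}
\tag{2}
$$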

The above distribution model considers relation among dif-

ferent proposals (indexed by i, j) through their similarity

encoded by Sij (which is the product of their spatial overlap

rate and category similarity) as well as their individual qual-

ity score Φi. We now proceed to explain each quantity in the

distribution model.

We set S = S + εI with a small ε > 0 to make sure that the ensemble matrix L is positive semi-definite, which is important for a proper DPP model definition. Here, det(L + I)

is a normalizing factor, and IoUij is the Intersection-over-

Union associated with each pair of proposals i and j, where

Ai is the area covered by the proposal i. Motivated by

[13, 15], we consider the semantic relation among propos-

als, simij , as the semantic similarity between the labels of

each pair of proposals (i, j). Here lcs(Ci, Cj) refers to the

lowest common subsumer of the category labels Ci and Cj

in the WordNet hierarchy. The information content of class

C is computed as IC(C) = − log P (C) where P (C) is the

probability of occurrence of an instance with label C among

all images. Posterior probability of selecting background

boxes, Pα(B|X), follows the same determinantal point pro-

cess formulation as in Eq. (2). The difference between these

two posterior probabilities lies in how we measure quality

of proposals Φ.
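The quantities in Eq. (2) can be assembled as in the sketch below. It is a hedged illustration only. Boxes are assumed to be axis-aligned `(x1, y1, x2, y2)` tuples, the class probabilities and the probabilities of the lowest common subsumers are invented toy numbers rather than WordNet statistics, and the helper names `iou`, `semantic_similarity` and `build_kernel` are hypothetical.

```python
import math


def iou(box_a, box_b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def semantic_similarity(label_a, label_b, class_prob, lcs_prob):
    """sim = 2 * IC(lcs) / (IC(a) + IC(b)) with IC(C) = -log P(C)."""
    if label_a == label_b:
        return 1.0  # the lowest common subsumer of a label with itself is the label
    key = tuple(sorted((label_a, label_b)))
    ic_lcs = -math.log(lcs_prob[key])
    ic_a = -math.log(class_prob[label_a])
    ic_b = -math.log(class_prob[label_b])
    return 2.0 * ic_lcs / (ic_a + ic_b)


def build_kernel(boxes, labels, phi, class_prob, lcs_prob, eps=1e-6):
    """L_ij = sqrt(phi_i) * S_ij * sqrt(phi_j), with S = IoU * sim + eps * I."""
    n = len(boxes)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            s = iou(boxes[i], boxes[j]) * semantic_similarity(
                labels[i], labels[j], class_prob, lcs_prob)
            if i == j:
                s += eps  # small diagonal term, as in S = S + eps * I
            L[i][j] = math.sqrt(phi[i]) * s * math.sqrt(phi[j])
    return L


# Toy inputs (all numbers are assumptions for illustration).
boxes = [(0, 0, 2, 2), (1, 0, 3, 2), (10, 10, 12, 12)]
labels = ["dog", "cat", "car"]
phi = [0.9, 0.8, 0.7]
class_prob = {"dog": 0.1, "cat": 0.1, "car": 0.2}
lcs_prob = {("cat", "dog"): 0.25, ("car", "dog"): 0.9, ("car", "cat"): 0.9}
L = build_kernel(boxes, labels, phi, class_prob, lcs_prob)
```

With these toy numbers, the overlapping dog and cat boxes get a nonzero off-diagonal entry, while the distant car box is uncorrelated with both.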

2.1.2 Model Description

In general, the classification score over K + 1 categories as

well as overlap with the bounding box target can be used

to define the quality score Φi for proposal i. The classifica-

tion scores are computed in the fully-connected layer before

“softmax” layer in the Faster R-CNN architecture, and lo-

cation of each bounding box is given by the inner product

layer feeding into the “bounding box regressor”. The ex-

act definition of Φi for different proposals in the two terms

of log-likelihood function Eq. (1) varies according to the

general goal of increasing scores of high-quality boxes in

their ground-truth label and increasing scores of background

boxes in the background category.

One should note that Y is an ideal extractive summary

of input which is the list of proposals in each mini-batch,

X (e.g. m = 128) [10]. Thus, we apply a maximum a

posteriori (MAP) DPP inference, Alg. 2, in each iteration of

the training algorithm to determine the set of representative

boxes Y from the set of m proposals. If ground truth boxes

exist among the proposals in each mini-batch, they will be

automatically selected as the set Y through MAP. However,

if they don’t exist among the proposals, MAP will select the

best summarizing ones. To make sure that selected boxes,

Y , are accurate and close to ground-truth boxes, we only

select from a “subset” of proposals in the mini-batch with

high overlap with their bounding box targets. Also, we

define the quality of boxes only based on their overlap with

their bounding box target in this step of applying MAP, as

summarized in Alg. 1. Thus, by selecting Y as the set of

best representations of ground-truth boxes in X, maximizing

P (Y |X) results in maximizing selection of ground-truth

boxes, which corresponds to maximizing the probability of

training data.

On the other hand, to specify the set of more probable

background proposals as B, given the set of proposals in

each mini-batch X, we define B as the set of all proposals in

X − Y except those that have high overlap with ground-truth

and their associated predicted label matches their ground-

truth label. As mentioned before, the goal here is to minimize

the probability of selecting “background proposals” as the

representative boxes in each image.
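The definition of B can be written as a simple filter over the mini-batch. The sketch below is an assumption-laden illustration: the paper does not state the overlap value that counts as "high", so the `overlap_threshold` of 0.5 is a placeholder, and the tuple layout and function name are hypothetical.

```python
def background_set(proposals, selected, overlap_threshold=0.5):
    """Return B: proposals in X - Y, except accurate ones.

    proposals: dict mapping proposal id -> (iou_with_gt, predicted_label, gt_label)
    selected:  set of proposal ids chosen as Y
    A proposal is treated as accurate when it overlaps its ground truth strongly
    and its predicted label matches the ground-truth label.
    """
    B = set()
    for pid, (iou_gt, predicted, gt) in proposals.items():
        if pid in selected:
            continue  # Y is never part of B
        accurate = iou_gt >= overlap_threshold and predicted == gt
        if not accurate:
            B.add(pid)
    return B


# Toy mini-batch (assumed values); label 0 is the background category.
proposals = {
    "a": (0.9, 3, 3),   # selected as representative
    "b": (0.8, 3, 3),   # accurate duplicate of "a": excluded from B
    "c": (0.7, 5, 3),   # good overlap but wrong label: in B
    "d": (0.1, 0, 0),   # low overlap: in B
}
B = background_set(proposals, selected={"a"})
```

In this example B contains "c" and "d" only, so the accurate but unselected box "b" is not pushed toward the background category.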

To complete the DPP model description, we define the

quality scores of the proposals as follows.

For the first term log Pα(Y |X), we define the quality of

boxes as:

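$$
\Phi_i =
\begin{cases}
\mathrm{IoU}_{i,gt_i} \times \exp\{W_{gt}^T f_i\}, & \text{if } i \in Y \\
\mathrm{IoU}_{i,gt_i} \times \sum_{c \neq 0} \exp\{W_c^T f_i\}, & \text{if } i \notin Y
\end{cases}
\tag{3}
$$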

where W_c^T f_i is the output of the inner product layer before the softmax loss layer, W_c is the weight vector learned for category c, and f_i is the fc7 feature learned for proposal i. Moreover, W_gt denotes the weight vector for the ground-truth label of proposal i, and c = 0 denotes the background category. Note that the goal is to increase the score of boxes in Y in their ground-truth label and the score of other boxes in the background category. The derivatives of the log-likelihood function with respect to W_c^T f_i in Eq. (5), (6) clarify the above definitions.

The second term log Pα(B|X) is designed for minimiz-

ing the probability of selection of background boxes which

results in a boost in the scores of such proposals in their back-

ground category. We thus define the quality of proposals for

this term as:

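$$
\Phi_i =
\begin{cases}
\mathrm{IoU}_{i,gt_i} \times \sum_{c \neq 0} \exp\{W_c^T f_i\}, & \text{if } i \in B \\
\mathrm{IoU}_{i,gt_i} \times \exp\{W_{gt}^T f_i\}, & \text{if } i \notin B
\end{cases}
\tag{4}
$$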

The effect of involving IoUi,gti in quality scores during train-

ing appears in computing the gradient, Eq. (5),(6), where a

larger gradient would be passed for boxes with higher over-

lap with their bbox target. It means more accurate boxes

will move toward being selected (achieve higher softmax

prediction scores) faster than others.
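The two quality definitions in Eq. (3) and Eq. (4) mirror each other, which a short sketch makes explicit. This is an illustration under assumptions: `logits[c]` stands in for W_c^T f_i, index 0 is the background category, and the function names are hypothetical.

```python
import math


def foreground_mass(logits):
    """Sum of exp(logit) over all non-background categories (c != 0)."""
    return sum(math.exp(b) for c, b in enumerate(logits) if c != 0)


def quality_first_term(iou_gt, logits, gt_label, in_Y):
    """Quality used in log P(Y|X), following Eq. (3)."""
    if in_Y:
        return iou_gt * math.exp(logits[gt_label])
    return iou_gt * foreground_mass(logits)


def quality_second_term(iou_gt, logits, gt_label, in_B):
    """Quality used in log P(B|X), following Eq. (4)."""
    if in_B:
        return iou_gt * foreground_mass(logits)
    return iou_gt * math.exp(logits[gt_label])


# Toy proposal (assumed values): three categories, ground-truth label 2.
logits = [0.0, 1.0, 2.0]
q_selected = quality_first_term(0.5, logits, gt_label=2, in_Y=True)
q_unselected = quality_first_term(0.5, logits, gt_label=2, in_Y=False)
q_background = quality_second_term(0.5, logits, gt_label=2, in_B=True)
```

Because IoU with the ground truth multiplies every score, a proposal with higher overlap contributes a proportionally larger quality, which is the source of the larger gradient mentioned above.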

To avoid degrading the relatively accurate bounding boxes

which are not selected in Y , in Eq. (1), during the learning

process, we exclude from X all the boxes that have high

overlap with boxes in Y and their label matches their ground-

truth category (in both Pα(Y i|X i), Pα(Bi|X i)).

2.1.3 LDDP Back-Propagation

We modify the negative log-likelihood function in Eq. (1)

such that the two terms get balanced according to the number

of selected proposals in Y, B. This DPP loss function, de-

pends on the inputs of both softmax loss and bounding box

regressor in the deep network. Since the ensemble matrix

L, Eq. (2), and the consequent log-likelihood function in

Eq. (1) are functions of the parameters of deep network, we

incorporate the gradient of our DPP loss layer into the back-

propagation scheme of the detection network. We assume

the location coordinates of proposals are fixed and only con-

sider the outputs of the fully-connected layer before softmax

loss as the parameters of our loss layer.

Based on the definitions of Φ_i in Eq. (3) and the model presented in Eq. (2), the gradient of p_1 = log P_α(Y|X) with respect to b_i^c = W_c^T f_i, the output of the inner product layer, is as follows:

$$
-\frac{\partial p_1}{\partial b_i^c} =
\begin{cases}
K_{ii} - 1, & \text{if } i \in Y,\ c = gt \\
K_{ii}\, \dfrac{\exp\{b_i^c\}}{\sum_{c' \neq 0} \exp\{b_i^{c'}\}}, & \text{if } i \notin Y,\ c \neq 0 \\
0, & \text{otherwise}
\end{cases}
\tag{5}
$$

where K_ii = L_ii / det(L + I). Therefore, minimizing the negative log-likelihood increases the scores of representative boxes in their ground-truth label and the scores of background boxes in the background label. Similarly, according to the Φ_i defined in Eq. (4), the gradient of p_2 = log P_α(B|X) w.r.t. b_i^c is:

$$
\frac{\partial p_2}{\partial b_i^c} =
\begin{cases}
-K_{ii}, & \text{if } i \notin B,\ c = gt \\
-(K_{ii} - 1)\, \dfrac{\exp\{b_i^c\}}{\sum_{c' \neq 0} \exp\{b_i^{c'}\}}, & \text{if } i \in B,\ c \neq 0 \\
0, & \text{otherwise}
\end{cases}
\tag{6}
$$

Consequently, the gradient of the above log-likelihood function with respect to b_i^c is added to the gradients of the other two loss functions in the backward pass during end-to-end training of the network parameters. The proof of the above gradient derivations is provided in the supplemental.

2.2. Inference with Diverse Proposals

Given the prediction scores and bounding box offsets from the learned network, we model the selection problem for unseen images as a maximum a posteriori (MAP) inference scheme where the probability of inclusion of each candidate box depends on the determinant of a kernel matrix. We define this kernel or similarity matrix such that it captures all spatial and contextual information between boxes at once. We use the same kernel matrix L as in Eq. (2), where X is the list of all candidate proposals per image over all K non-background categories with a score above a specific threshold (e.g. 0.05).

We capture the quality of boxes, Φ, by their per-class prediction scores:

$$
\Phi_i = \frac{\exp\{W_c^T f_i\}}{\sum_{c'} \exp\{W_{c'}^T f_i\}} \quad \text{for } c \in \{0, \cdots, K\}
$$

Similarly, we employ the spatial information by IoU and the semantic similarity between box labels by sim_ij as shown in Eq. (2).

Thus, our kernel definition allows selection of a set of candidate boxes which have the minimum possible overlap as well as the highest detection scores. In other words, boxes with less location- and label-level similarity and higher detection scores are more likely to be selected. To figure out which boxes should be selected, similar to [10], we use a greedy optimization algorithm, Alg. 2, which keeps the box with the highest probability found by Eq. (2) at each iteration.

3. Related Work

Several works [2, 15, 12] have proposed a replacement

for the conventional non-maximum suppression to optimally

select among detection proposals. Desai et al. [2] proposed

a unified model for multi-class object detection that both

learns optimal NMS parameters and infers boxes by cap-

turing different structured contextual interactions between

object and their categories. In contrast to [2], the contex-

tual information used in our model captures fixed label-level

semantic similarities based on WordNet hierarchy as well

as learned proposal probability scores. We also use IoU to

capture spatial layout within a similarity kernel, while there

is no strong notion of quality or overlap among boxes in [2]

and only a thresholded value is used in a (0/1) loss function.

Unlike this method, the learnable deep features in our full

end-to-end framework improve bounding box locations and

category specifications through our proposed differentiable

loss function.

Mrowca et al. [15] proposed a large-scale multi-class

affinity propagation clustering (MAPC) [5] to improve both

the localization and categorization of selected detected pro-

posals, which simultaneously optimizes across all categories

and all proposed locations in the image. Similar to our se-

mantic similarity metric, they use WordNet relationships to

capture highly related fine-grained categories in a large-scale

detection setting. Lee et al. [12] use individualness to mea-

sure quality and similarity scores in a determinantal point

process inference scheme focusing on the “binary” pedes-

trian detection problem. However, these methods are only

applied for the inference paradigm and can neither improve

proposal representations nor impose diversity among object

bounding boxes while training the model.

4. Experiments

We demonstrate the significant improvement obtained

from our model on the representation of detected bounding

boxes via a set of quantitative and qualitative experiments

on Pascal VOC2007 and MS COCO benchmark data sets

as explained in the following sections. We use the Caffe

deep learning framework [8] in all our experiments. We use

one image per iteration with mini-batch of 128 proposals.

We replaced the semantic similarity matrix in Eq. (2) with

its fourth power during LDDP inference as we observed

improvement on detection performance on the validation

sets.

Our baseline in all experiments is the state-of-the-art ob-

ject detection Faster R-CNN approach used as our training

model. Moreover, final detections are inferred by the NMS

scheme applied on top of the deep trained network, denoted

as our inference baseline. We use two different NMS IoU

threshold values for within class and across class suppres-

sions: First, proposals labeled similarly are suppressed by

7153

�

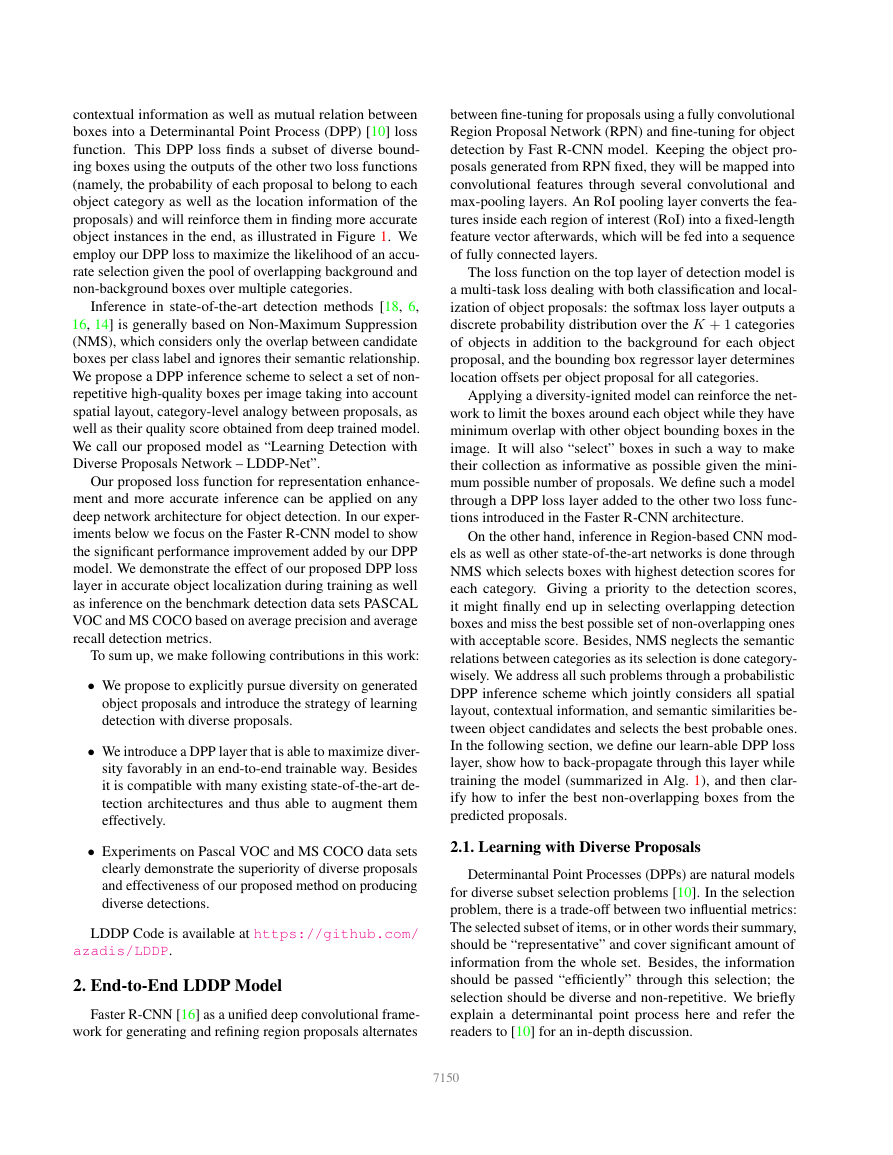

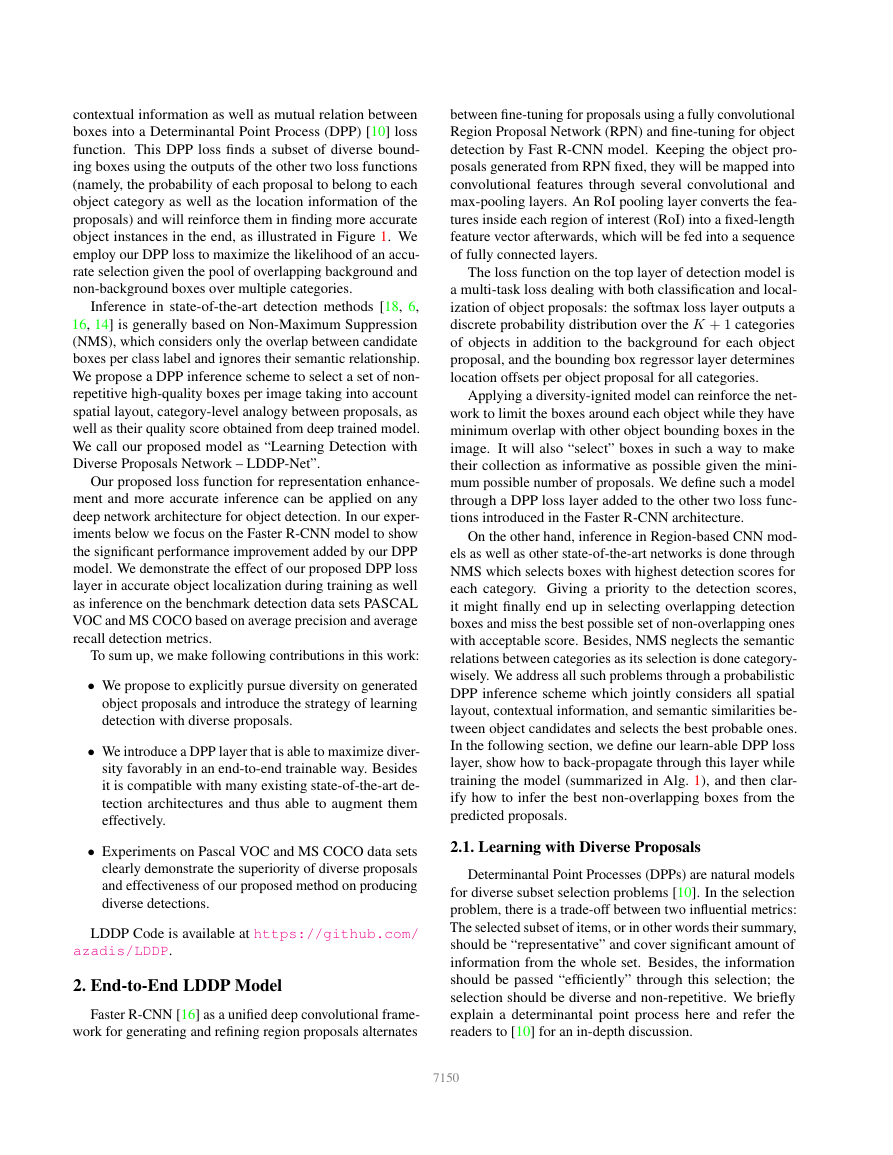

Table 1: VOC2007 test detection average precision(%) (trained on VOC2007 trainval) at IoU thresholds o.5 and 0.7. All

methods use ZF deep convolutional network. Each pair (x, y) indicates method x used for learning and y for inference. In

both tables, the two top rows use NMS and the two bottom rows show LDDP used for inference. Here “FrRCNN” refers to

“Faster RCNN”

Method

.

5 (FrRCNN, NMS)

0

U

o

I

(LDDP, NMS)

(FrRCNN, LDDP)

(LDDP, LDDP)

@

aero bike bird boat bottle bus

63.7 70.1 55.5 45.4 37.1 66.3 75.5 71.5 39.3 66.3 60.2 61.5 76.7 69.7

39.4 63.5 61.8 65.7 74.9 70.9

65.6 72.7 56.3 44.6 36.9 67.8 75.5 73

66.1 70.4 56.5 45.8 37.1 67.8 75.5 71.1 40

68.9 59.9 65.8 78.7 71.6

70.1 75.4 74.9 39.4 66.6 61.5 68.5 77.4 71.5

66

chair cow table dog

73.2 56.9 45.6 37

car

cat

mAP

71.5 34.2 53.3 55.9 69.9 65.3 60.45

71.2 37.4 55.7 55.8 71.4 62.6 61.14

71.4 34

71

37.7 58.8 56.6 71.9 64.1 62.21

59.7 56.4 72.3 64.9 61.7

horse mbike persn plant sheep sofa train tv

.

7 (FrRCNN, NMS)

0

U

o

I

(LDDP, NMS)

(FrRCNN, LDDP)

(LDDP, LDDP)

@

36.0 45.8 25.5 18.0 14.8 46.6 55.2 41.9 17.1 30.8 38.8 30.9 48.1 45.1

37.6 44.8 22.8 19.7 16.2 50.8 56.5 45.2 19.2 36.7 39.2 35.6 48.9 45.4

36.4 45.7 26.9 19.3 15.3 47.7 55.0 41.1 17.2 31.5 38.7 34.4 48.1 49.1

38.2 45.2 25.2 20.9 16.4 50.7 56.8 45.1 19.5 37.2 39.8 35.7 49.6 46.3

35.2 13.8 30.7 30.3 43.8 44.2 34.6

36.9 13.7 35.5 35.1 42.6 40.8 36.2

35.3 13.9 31.1 32.3 44.1 45.6 35.4

37

14.5 36.4 35.2 44.7 40.9 36.8

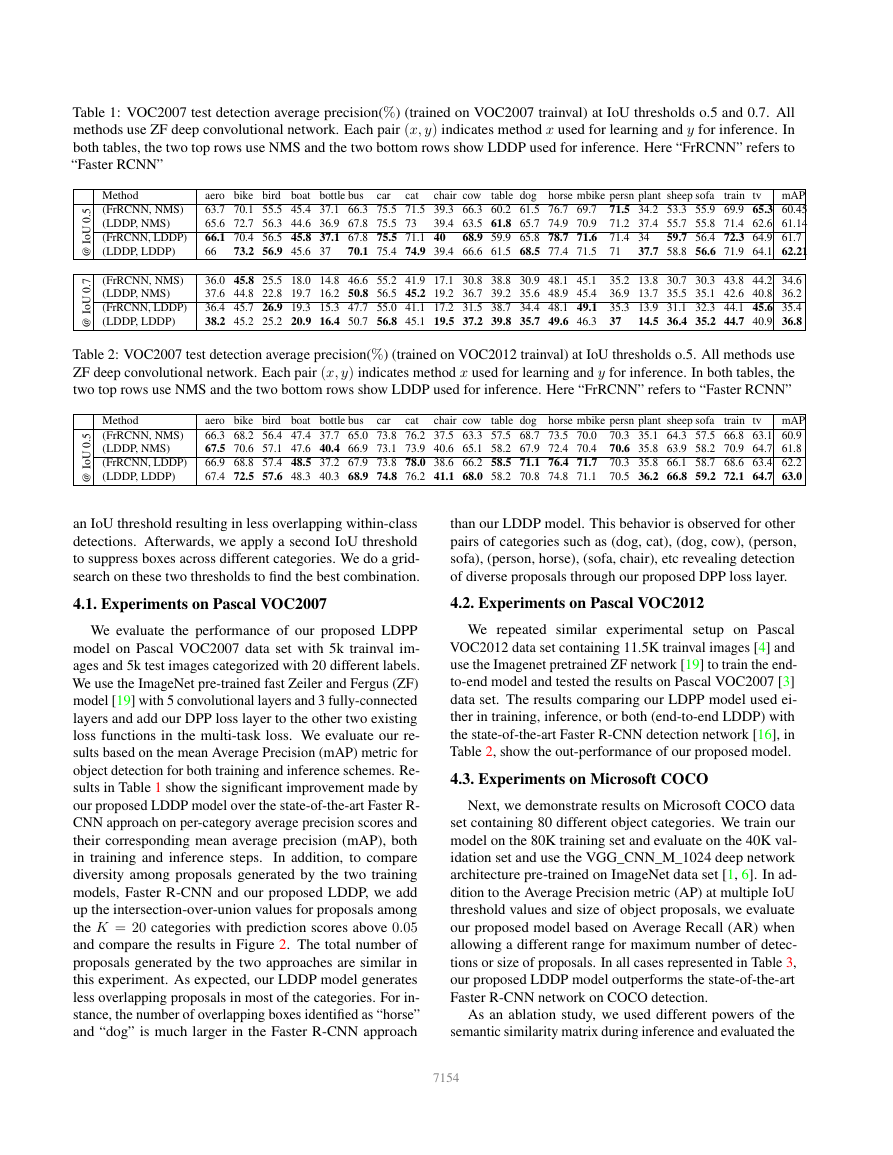

Table 2: VOC2007 test detection average precision(%) (trained on VOC2012 trainval) at IoU thresholds o.5. All methods use

ZF deep convolutional network. Each pair (x, y) indicates method x used for learning and y for inference. In both tables, the

two top rows use NMS and the two bottom rows show LDDP used for inference. Here “FrRCNN” refers to “Faster RCNN”

Method

.

5 (FrRCNN, NMS)

0

U

o

I

(LDDP, NMS)

(FrRCNN, LDDP)

(LDDP, LDDP)

@

aero bike bird boat bottle bus

66.3 68.2 56.4 47.4 37.7 65.0 73.8 76.2 37.5 63.3 57.5 68.7 73.5 70.0

67.5 70.6 57.1 47.6 40.4 66.9 73.1 73.9 40.6 65.1 58.2 67.9 72.4 70.4

66.9 68.8 57.4 48.5 37.2 67.9 73.8 78.0 38.6 66.2 58.5 71.1 76.4 71.7

67.4 72.5 57.6 48.3 40.3 68.9 74.8 76.2 41.1 68.0 58.2 70.8 74.8 71.1

chair cow table dog

car

cat

mAP

70.3 35.1 64.3 57.5 66.8 63.1 60.9

70.6 35.8 63.9 58.2 70.9 64.7 61.8

70.3 35.8 66.1 58.7 68.6 63.4 62.2

70.5 36.2 66.8 59.2 72.1 64.7 63.0

horse mbike persn plant sheep sofa train tv

an IoU threshold resulting in less overlapping within-class

detections. Afterwards, we apply a second IoU threshold

to suppress boxes across different categories. We do a grid-

search on these two thresholds to find the best combination.

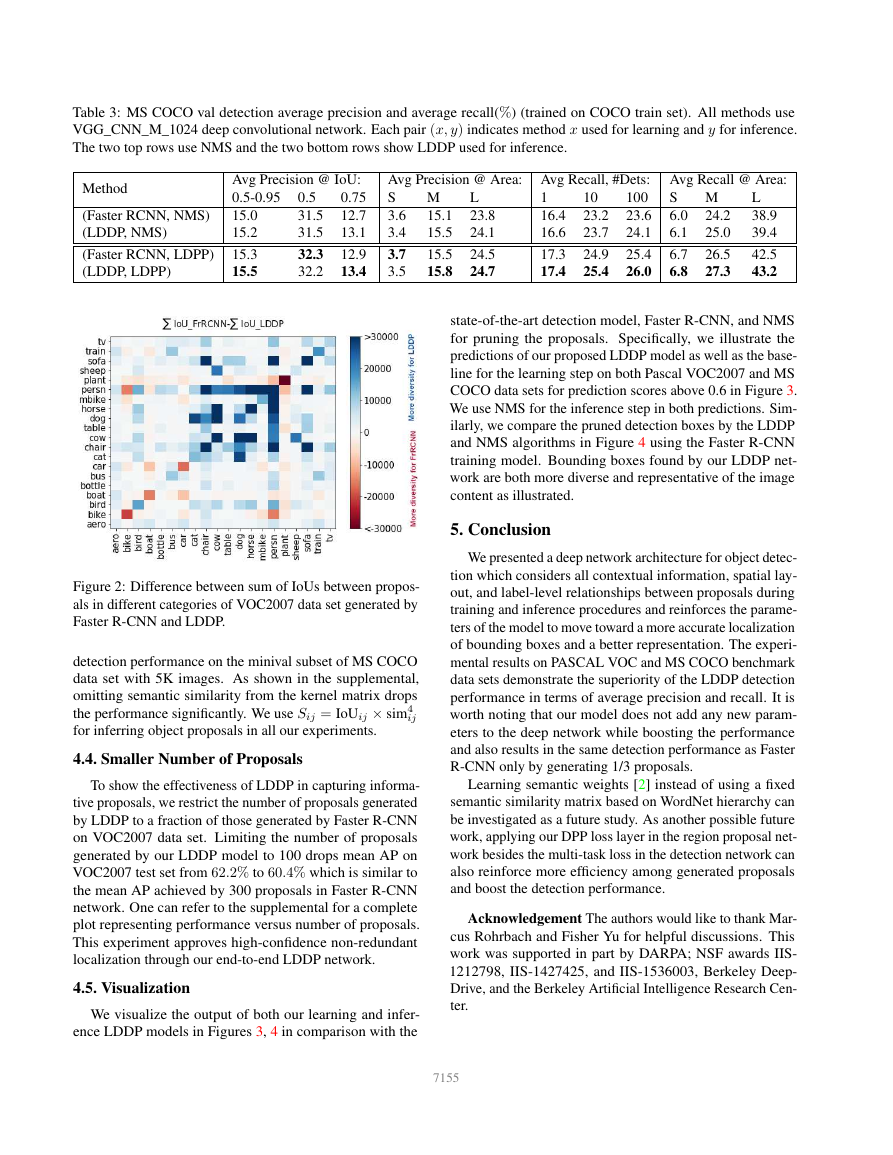

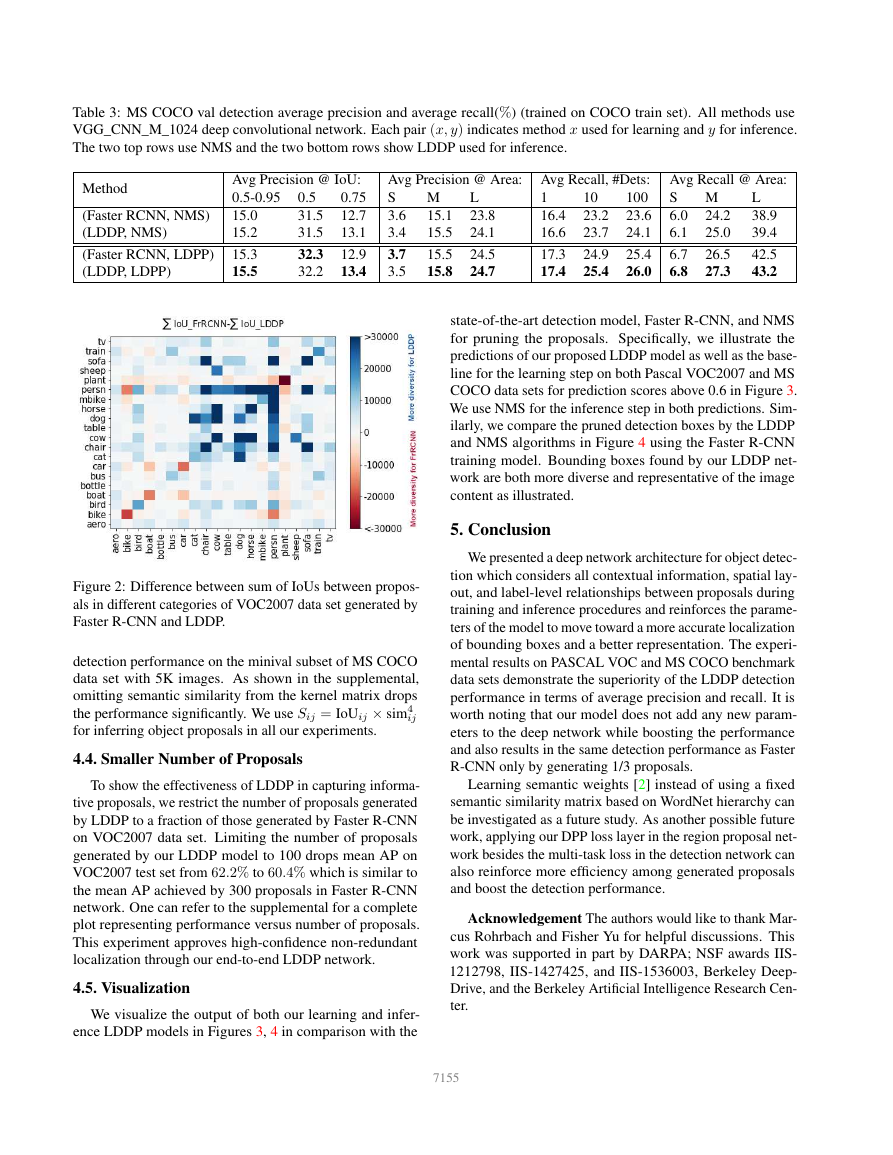

than our LDDP model. This behavior is observed for other

pairs of categories such as (dog, cat), (dog, cow), (person,

sofa), (person, horse), (sofa, chair), etc revealing detection

of diverse proposals through our proposed DPP loss layer.

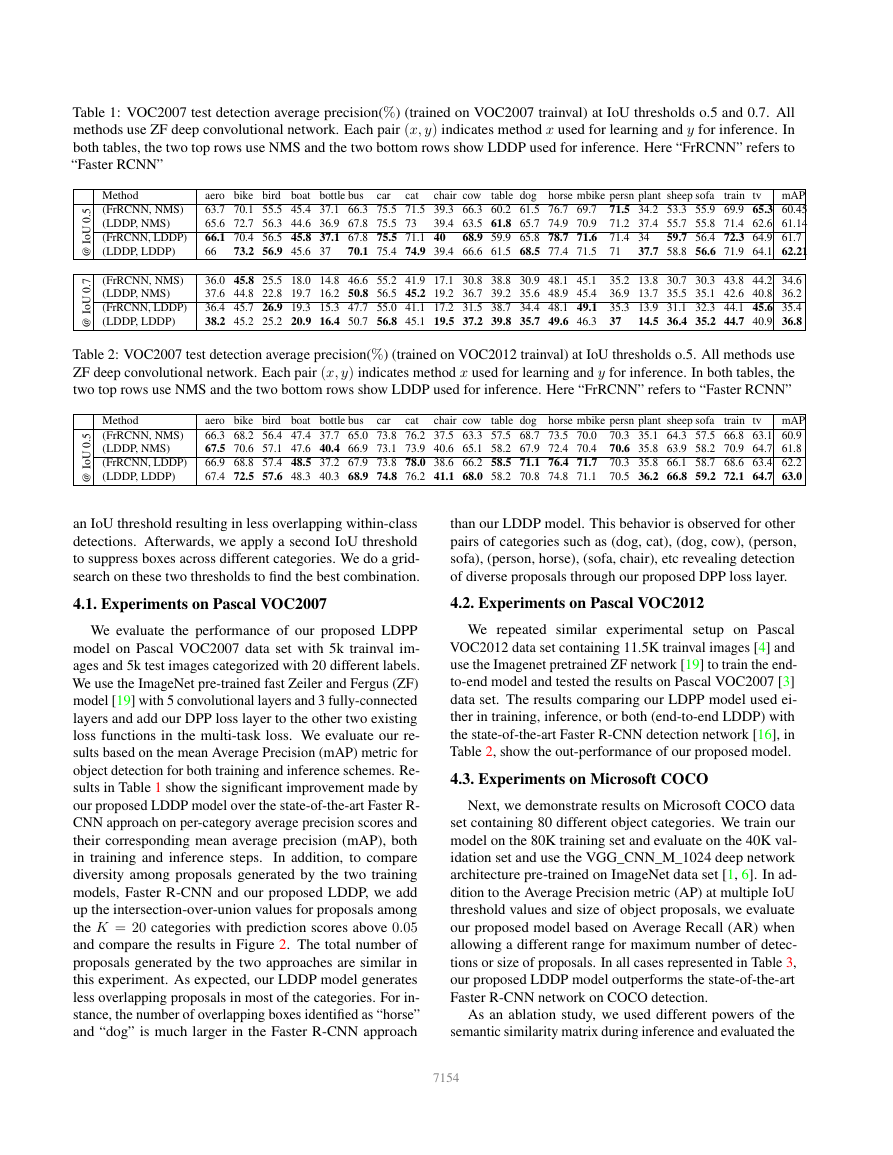

4.1. Experiments on Pascal VOC2007

4.2. Experiments on Pascal VOC2012

We evaluate the performance of our proposed LDPP

model on Pascal VOC2007 data set with 5k trainval im-

ages and 5k test images categorized with 20 different labels.

We use the ImageNet pre-trained fast Zeiler and Fergus (ZF)

model [19] with 5 convolutional layers and 3 fully-connected

layers and add our DPP loss layer to the other two existing

loss functions in the multi-task loss. We evaluate our re-

sults based on the mean Average Precision (mAP) metric for

object detection for both training and inference schemes. Re-

sults in Table 1 show the significant improvement made by

our proposed LDDP model over the state-of-the-art Faster R-

CNN approach on per-category average precision scores and

their corresponding mean average precision (mAP), both

in training and inference steps.

In addition, to compare

diversity among proposals generated by the two training

models, Faster R-CNN and our proposed LDDP, we add

up the intersection-over-union values for proposals among

the K = 20 categories with prediction scores above 0.05

and compare the results in Figure 2. The total number of

proposals generated by the two approaches are similar in

this experiment. As expected, our LDDP model generates

less overlapping proposals in most of the categories. For in-

stance, the number of overlapping boxes identified as “horse”

and “dog” is much larger in the Faster R-CNN approach

We repeated similar experimental setup on Pascal

VOC2012 data set containing 11.5K trainval images [4] and

use the Imagenet pretrained ZF network [19] to train the end-

to-end model and tested the results on Pascal VOC2007 [3]

data set. The results comparing our LDPP model used ei-

ther in training, inference, or both (end-to-end LDDP) with

the state-of-the-art Faster R-CNN detection network [16], in

Table 2, show the out-performance of our proposed model.

4.3. Experiments on Microsoft COCO

Next, we demonstrate results on Microsoft COCO data

set containing 80 different object categories. We train our

model on the 80K training set and evaluate on the 40K val-

idation set and use the VGG_CNN_M_1024 deep network

architecture pre-trained on ImageNet data set [1, 6]. In ad-

dition to the Average Precision metric (AP) at multiple IoU

threshold values and size of object proposals, we evaluate

our proposed model based on Average Recall (AR) when

allowing a different range for maximum number of detec-

tions or size of proposals. In all cases represented in Table 3,

our proposed LDDP model outperforms the state-of-the-art

Faster R-CNN network on COCO detection.

Table 3: MS COCO val detection average precision and average recall (%) (trained on COCO train set). All methods use the VGG_CNN_M_1024 deep convolutional network. Each pair (x, y) indicates method x used for learning and y for inference. The two top rows use NMS and the two bottom rows show LDDP used for inference.

| Method | AP @ IoU 0.5-0.95 | AP @ IoU 0.5 | AP @ IoU 0.75 | AP @ Area S | AP @ Area M | AP @ Area L | AR, #Dets 1 | AR, #Dets 10 | AR, #Dets 100 | AR @ Area S | AR @ Area M | AR @ Area L |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (Faster RCNN, NMS) | 15.0 | 31.5 | 12.7 | 3.6 | 15.1 | 23.8 | 16.4 | 23.2 | 23.6 | 6.0 | 24.2 | 38.9 |
| (LDDP, NMS) | 15.2 | 31.5 | 13.1 | 3.4 | 15.5 | 24.1 | 16.6 | 23.7 | 24.1 | 6.1 | 25.0 | 39.4 |
| (Faster RCNN, LDDP) | 15.3 | 32.3 | 12.9 | 3.7 | 15.5 | 24.5 | 17.3 | 24.9 | 25.4 | 6.7 | 26.5 | 42.5 |
| (LDDP, LDDP) | 15.5 | 32.2 | 13.4 | 3.5 | 15.8 | 24.7 | 17.4 | 25.4 | 26.0 | 6.8 | 27.3 | 43.2 |

Figure 2: Difference between sum of IoUs between proposals in different categories of VOC2007 data set generated by Faster R-CNN and LDDP.

As an ablation study, we used different powers of the semantic similarity matrix during inference and evaluated the detection performance on the minival subset of the MS COCO data set with 5K images. As shown in the supplemental, omitting semantic similarity from the kernel matrix drops the performance significantly. We use S_ij = IoU_ij × sim_ij^4 for inferring object proposals in all our experiments.

4.4. Smaller Number of Proposals

To show the effectiveness of LDDP in capturing informative proposals, we restrict the number of proposals generated by LDDP to a fraction of those generated by Faster R-CNN on the VOC2007 data set. Limiting the number of proposals generated by our LDDP model to 100 drops mean AP on the VOC2007 test set from 62.2% to 60.4%, which is similar to the mean AP achieved by 300 proposals in the Faster R-CNN network. One can refer to the supplemental for a complete plot of performance versus number of proposals. This experiment confirms high-confidence, non-redundant localization through our end-to-end LDDP network.

4.5. Visualization

We visualize the output of both our learning and inference LDDP models in Figures 3 and 4 in comparison with the state-of-the-art detection model, Faster R-CNN, and NMS for pruning the proposals. Specifically, we illustrate the predictions of our proposed LDDP model as well as the baseline for the learning step on both Pascal VOC2007 and MS COCO data sets for prediction scores above 0.6 in Figure 3. We use NMS for the inference step in both predictions. Similarly, we compare the pruned detection boxes by the LDDP and NMS algorithms in Figure 4 using the Faster R-CNN training model. Bounding boxes found by our LDDP network are both more diverse and more representative of the image content, as illustrated.

5. Conclusion

We presented a deep network architecture for object detection which considers contextual information, spatial layout, and label-level relationships between proposals during training and inference, and drives the parameters of the model toward more accurate localization of bounding boxes and a better representation. The experimental results on the PASCAL VOC and MS COCO benchmark data sets demonstrate the superiority of the LDDP detection performance in terms of average precision and recall. It is worth noting that our model does not add any new parameters to the deep network while boosting the performance, and that it matches the detection performance of Faster R-CNN while generating only one third as many proposals.

Learning semantic weights [2] instead of using a fixed semantic similarity matrix based on the WordNet hierarchy can be investigated as a future study. As another possible direction, applying our DPP loss layer in the region proposal network, in addition to the multi-task loss in the detection network, may also make the generated proposals more efficient and boost the detection performance.

Acknowledgement The authors would like to thank Marcus Rohrbach and Fisher Yu for helpful discussions. This work was supported in part by DARPA; NSF awards IIS-1212798, IIS-1427425, and IIS-1536003, Berkeley DeepDrive, and the Berkeley Artificial Intelligence Research Center.

Figure 3: Example images from Pascal VOC2007 and MS COCO data sets illustrating LDDP and Faster R-CNN networks

used for learning. A score threshold of 0.6 is used to display images. NMS is used for pruning proposals.

Figure 4: Example images from Pascal VOC2007 and MS COCO data sets illustrating LDDP inference and NMS applied on

top of Faster R-CNN predicted detections. A score threshold of 0.6 is used to display images.
