Distributed RPC Framework

The distributed RPC framework provides mechanisms for multi-machine model training through a set of primitives to allow for remote communication, and a higher-level API to automatically differentiate models split across several machines.

Distributed RPC Framework

Design Notes

The distributed autograd design note covers the design of the RPC-based distributed autograd framework that is useful for applications such as model parallel training.

Distributed Autograd Design

The RRef design note covers the design of the RRef (Remote REFerence) protocol used to refer to values on remote workers by the framework.

Remote Reference Protocol

Tutorials

The RPC tutorial introduces users to the RPC framework and provides two example applications using torch.distributed.rpc APIs.

Getting started with Distributed RPC Framework

�

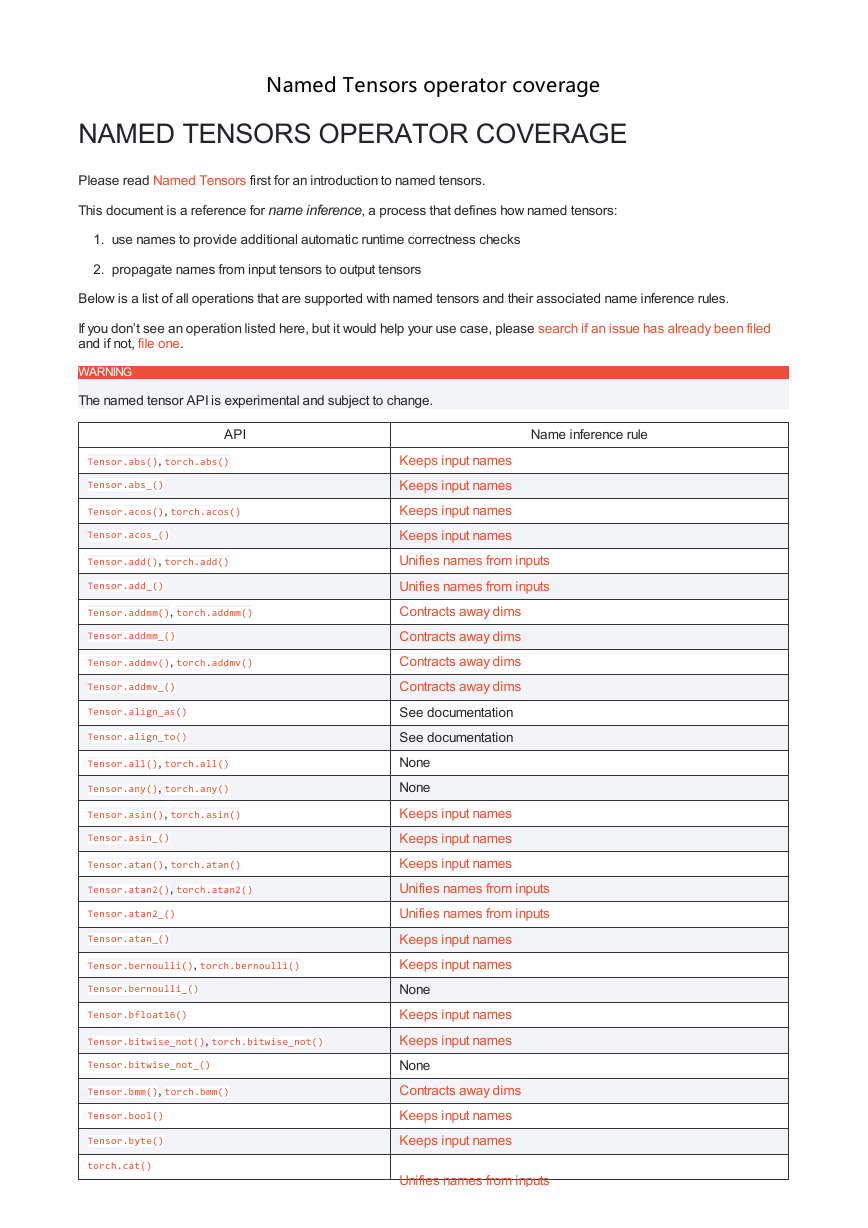

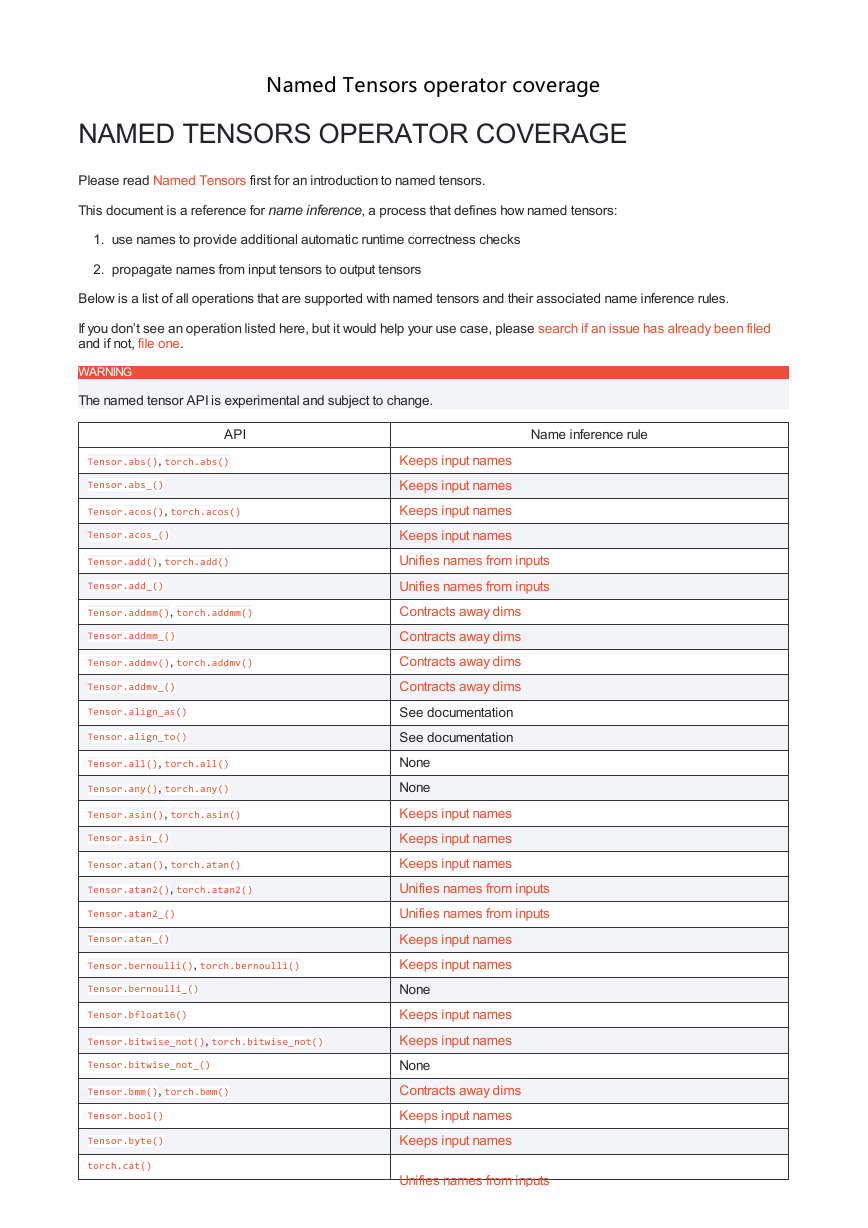

Named Tensors operator coverage

Please read Named Tensors first for an introduction to named tensors.

This document is a reference for name inference, a process that defines how named tensors:

1. use names to provide additional automatic runtime correctness checks

2. propagate names from input tensors to output tensors

Below is a list of all operations that are supported with named tensors and their associated name inference rules.

If you don’t see an operation listed here, but it would help your use case, please search for an existing issue and, if none has been filed, file one.

WARNING

The named tensor API is experimental and subject to change.

| API | Name inference rule |
| --- | --- |
| Tensor.abs(), torch.abs() | Keeps input names |
| Tensor.abs_() | Keeps input names |
| Tensor.acos(), torch.acos() | Keeps input names |
| Tensor.acos_() | Keeps input names |
| Tensor.add(), torch.add() | Unifies names from inputs |
| Tensor.add_() | Unifies names from inputs |
| Tensor.addmm(), torch.addmm() | Contracts away dims |
| Tensor.addmm_() | Contracts away dims |
| Tensor.addmv(), torch.addmv() | Contracts away dims |
| Tensor.addmv_() | Contracts away dims |
| Tensor.align_as() | See documentation |
| Tensor.align_to() | See documentation |
| Tensor.all(), torch.all() | None |
| Tensor.any(), torch.any() | None |
| Tensor.asin(), torch.asin() | Keeps input names |
| Tensor.asin_() | Keeps input names |
| Tensor.atan(), torch.atan() | Keeps input names |
| Tensor.atan2(), torch.atan2() | Unifies names from inputs |
| Tensor.atan2_() | Unifies names from inputs |
| Tensor.atan_() | Keeps input names |
| Tensor.bernoulli(), torch.bernoulli() | Keeps input names |
| Tensor.bernoulli_() | None |
| Tensor.bfloat16() | Keeps input names |
| Tensor.bitwise_not(), torch.bitwise_not() | Keeps input names |
| Tensor.bitwise_not_() | None |
| Tensor.bmm(), torch.bmm() | Contracts away dims |
| Tensor.bool() | Keeps input names |
| Tensor.byte() | Keeps input names |
| torch.cat() | Unifies names from inputs |

�

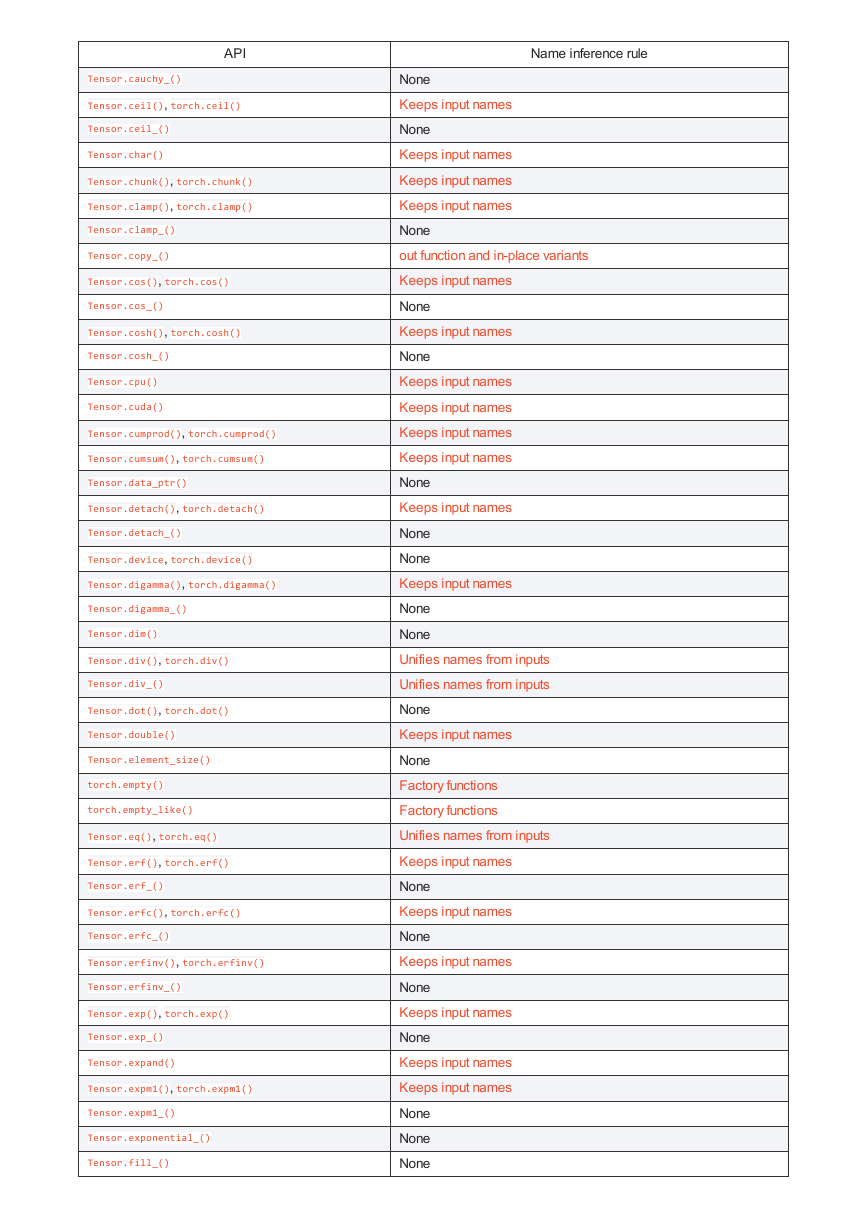

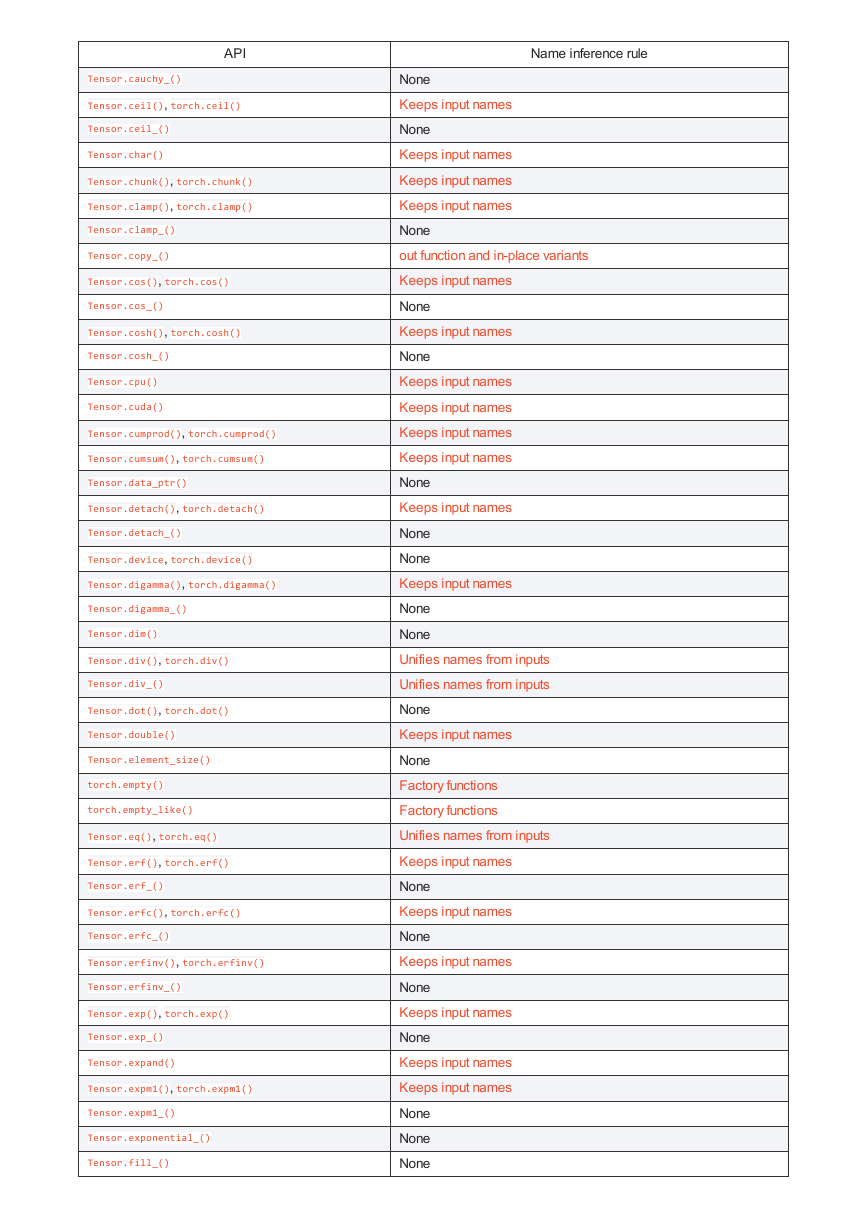

| API | Name inference rule |
| --- | --- |
| Tensor.cauchy_() | None |
| Tensor.ceil(), torch.ceil() | Keeps input names |
| Tensor.ceil_() | None |
| Tensor.char() | Keeps input names |
| Tensor.chunk(), torch.chunk() | Keeps input names |
| Tensor.clamp(), torch.clamp() | Keeps input names |
| Tensor.clamp_() | None |
| Tensor.copy_() | out function and in-place variants |
| Tensor.cos(), torch.cos() | Keeps input names |
| Tensor.cos_() | None |
| Tensor.cosh(), torch.cosh() | Keeps input names |
| Tensor.cosh_() | None |
| Tensor.cpu() | Keeps input names |
| Tensor.cuda() | Keeps input names |
| Tensor.cumprod(), torch.cumprod() | Keeps input names |
| Tensor.cumsum(), torch.cumsum() | Keeps input names |
| Tensor.data_ptr() | None |
| Tensor.detach(), torch.detach() | Keeps input names |
| Tensor.detach_() | None |
| Tensor.device, torch.device() | None |
| Tensor.digamma(), torch.digamma() | Keeps input names |
| Tensor.digamma_() | None |
| Tensor.dim() | None |
| Tensor.div(), torch.div() | Unifies names from inputs |
| Tensor.div_() | Unifies names from inputs |
| Tensor.dot(), torch.dot() | None |
| Tensor.double() | Keeps input names |
| Tensor.element_size() | None |
| torch.empty() | Factory functions |
| torch.empty_like() | Factory functions |
| Tensor.eq(), torch.eq() | Unifies names from inputs |
| Tensor.erf(), torch.erf() | Keeps input names |
| Tensor.erf_() | None |
| Tensor.erfc(), torch.erfc() | Keeps input names |
| Tensor.erfc_() | None |
| Tensor.erfinv(), torch.erfinv() | Keeps input names |
| Tensor.erfinv_() | None |
| Tensor.exp(), torch.exp() | Keeps input names |
| Tensor.exp_() | None |
| Tensor.expand() | Keeps input names |
| Tensor.expm1(), torch.expm1() | Keeps input names |
| Tensor.expm1_() | None |
| Tensor.exponential_() | None |
| Tensor.fill_() | None |

�

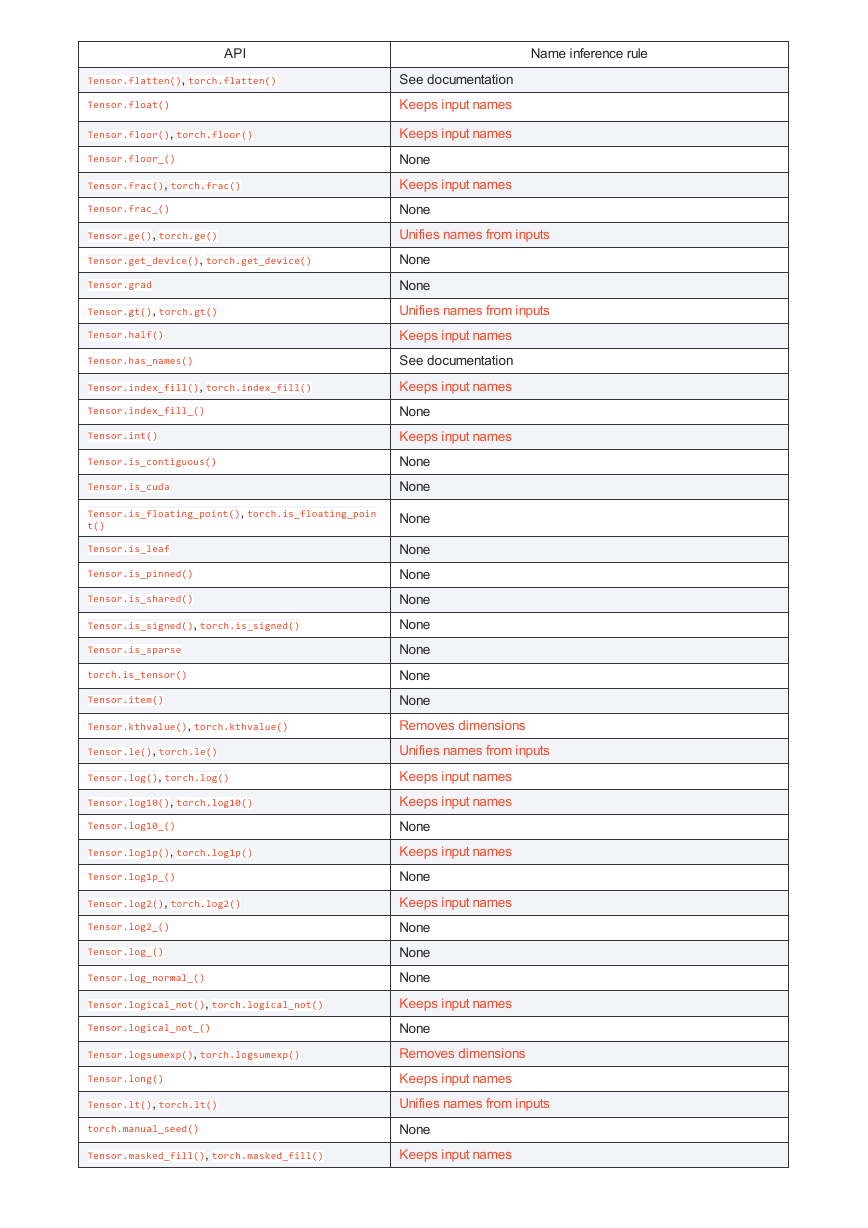

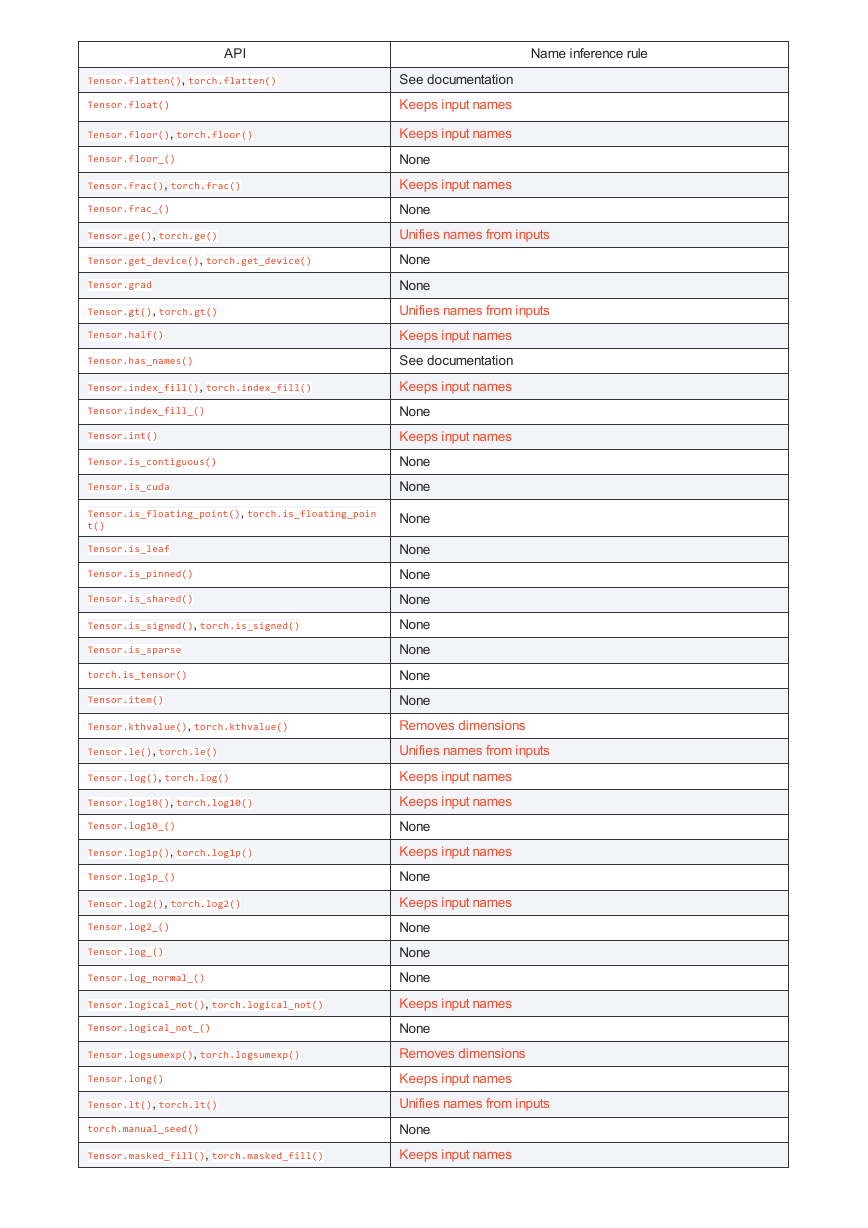

| API | Name inference rule |
| --- | --- |
| Tensor.flatten(), torch.flatten() | See documentation |
| Tensor.float() | Keeps input names |
| Tensor.floor(), torch.floor() | Keeps input names |
| Tensor.floor_() | None |
| Tensor.frac(), torch.frac() | Keeps input names |
| Tensor.frac_() | None |
| Tensor.ge(), torch.ge() | Unifies names from inputs |
| Tensor.get_device(), torch.get_device() | None |
| Tensor.grad | None |
| Tensor.gt(), torch.gt() | Unifies names from inputs |
| Tensor.half() | Keeps input names |
| Tensor.has_names() | See documentation |
| Tensor.index_fill(), torch.index_fill() | Keeps input names |
| Tensor.index_fill_() | None |
| Tensor.int() | Keeps input names |
| Tensor.is_contiguous() | None |
| Tensor.is_cuda | None |
| Tensor.is_floating_point(), torch.is_floating_point() | None |
| Tensor.is_leaf | None |
| Tensor.is_pinned() | None |
| Tensor.is_shared() | None |
| Tensor.is_signed(), torch.is_signed() | None |
| Tensor.is_sparse | None |
| torch.is_tensor() | None |
| Tensor.item() | None |
| Tensor.kthvalue(), torch.kthvalue() | Removes dimensions |
| Tensor.le(), torch.le() | Unifies names from inputs |
| Tensor.log(), torch.log() | Keeps input names |
| Tensor.log10(), torch.log10() | Keeps input names |
| Tensor.log10_() | None |
| Tensor.log1p(), torch.log1p() | Keeps input names |
| Tensor.log1p_() | None |
| Tensor.log2(), torch.log2() | Keeps input names |
| Tensor.log2_() | None |
| Tensor.log_() | None |
| Tensor.log_normal_() | None |
| Tensor.logical_not(), torch.logical_not() | Keeps input names |
| Tensor.logical_not_() | None |
| Tensor.logsumexp(), torch.logsumexp() | Removes dimensions |
| Tensor.long() | Keeps input names |
| Tensor.lt(), torch.lt() | Unifies names from inputs |
| torch.manual_seed() | None |
| Tensor.masked_fill(), torch.masked_fill() | Keeps input names |

�

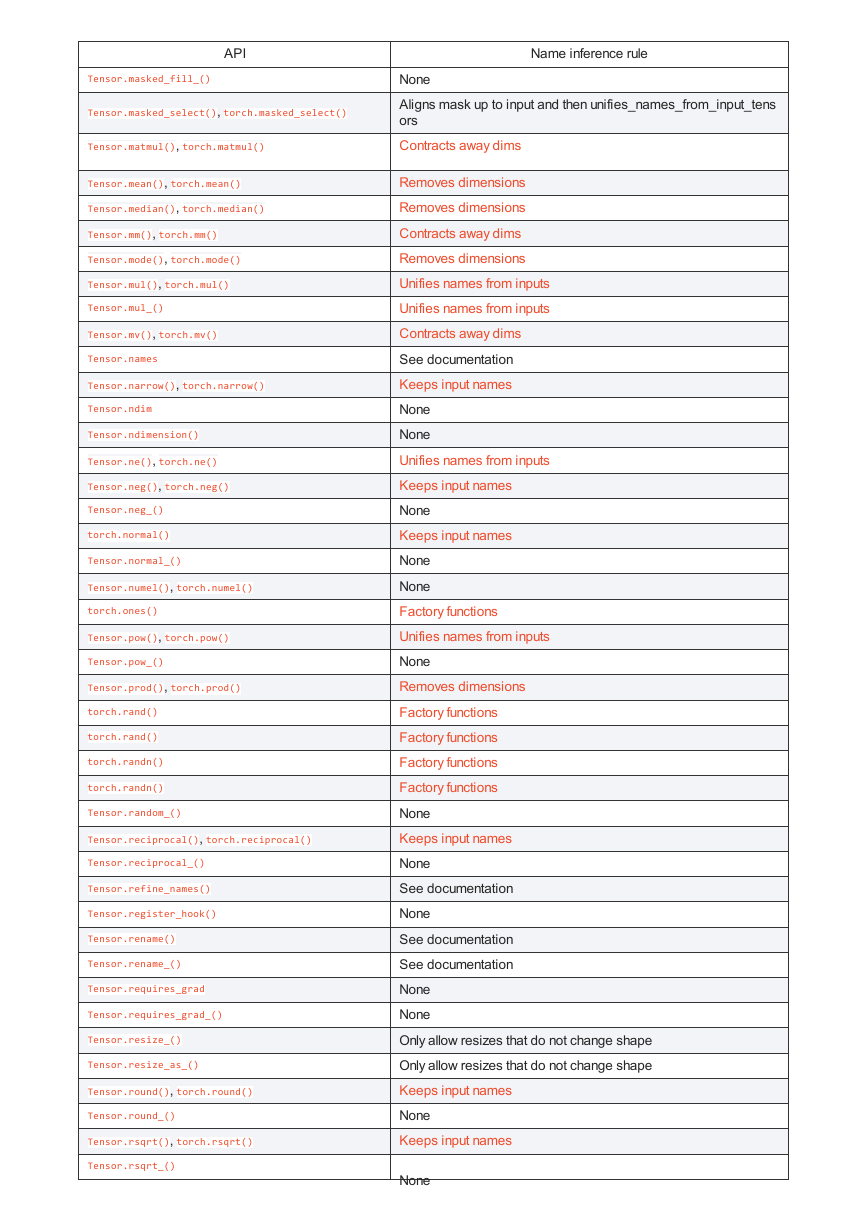

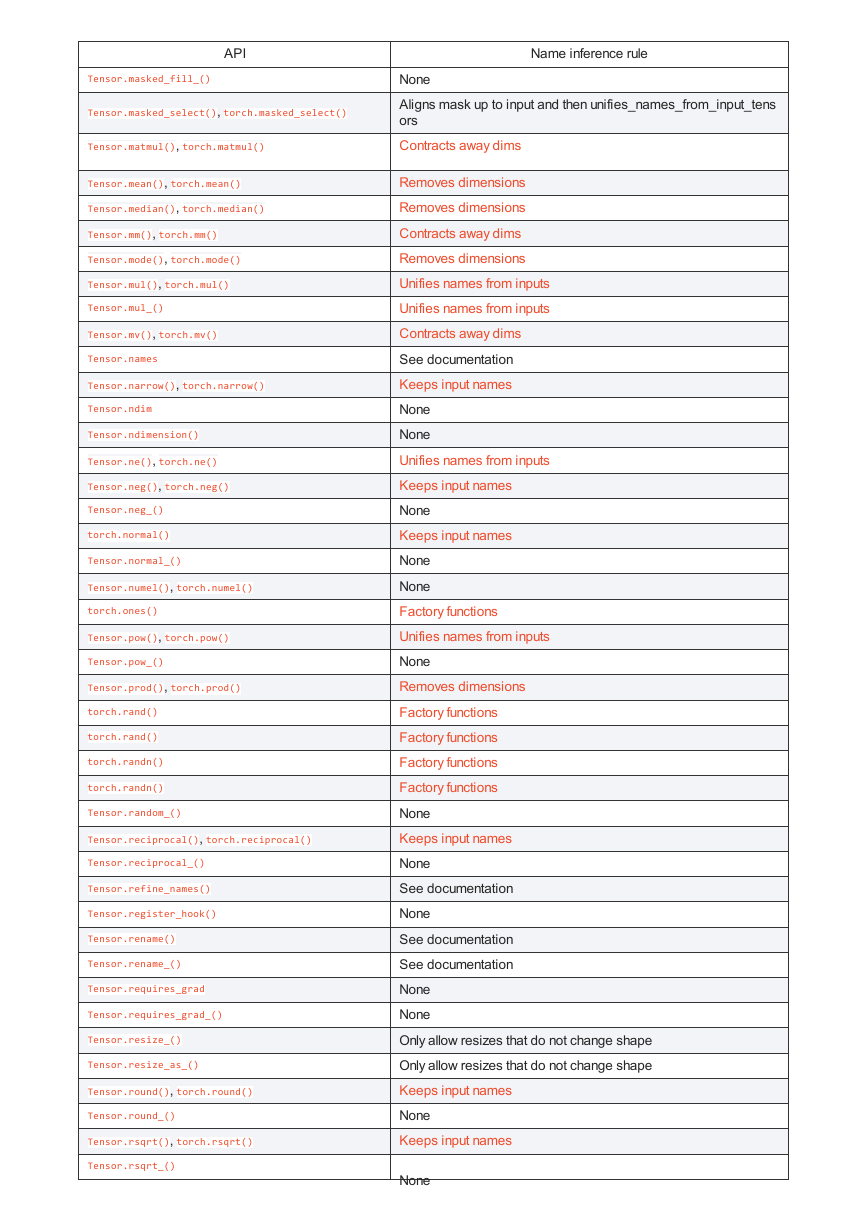

| API | Name inference rule |
| --- | --- |
| Tensor.masked_fill_() | None |
| Tensor.masked_select(), torch.masked_select() | Aligns mask up to input and then unifies_names_from_input_tensors |
| Tensor.matmul(), torch.matmul() | Contracts away dims |
| Tensor.mean(), torch.mean() | Removes dimensions |
| Tensor.median(), torch.median() | Removes dimensions |
| Tensor.mm(), torch.mm() | Contracts away dims |
| Tensor.mode(), torch.mode() | Removes dimensions |
| Tensor.mul(), torch.mul() | Unifies names from inputs |
| Tensor.mul_() | Unifies names from inputs |
| Tensor.mv(), torch.mv() | Contracts away dims |
| Tensor.names | See documentation |
| Tensor.narrow(), torch.narrow() | Keeps input names |
| Tensor.ndim | None |
| Tensor.ndimension() | None |
| Tensor.ne(), torch.ne() | Unifies names from inputs |
| Tensor.neg(), torch.neg() | Keeps input names |
| Tensor.neg_() | None |
| torch.normal() | Keeps input names |
| Tensor.normal_() | None |
| Tensor.numel(), torch.numel() | None |
| torch.ones() | Factory functions |
| Tensor.pow(), torch.pow() | Unifies names from inputs |
| Tensor.pow_() | None |
| Tensor.prod(), torch.prod() | Removes dimensions |
| torch.rand() | Factory functions |
| torch.randn() | Factory functions |
| Tensor.random_() | None |
| Tensor.reciprocal(), torch.reciprocal() | Keeps input names |
| Tensor.reciprocal_() | None |
| Tensor.refine_names() | See documentation |
| Tensor.register_hook() | None |
| Tensor.rename() | See documentation |
| Tensor.rename_() | See documentation |
| Tensor.requires_grad | None |
| Tensor.requires_grad_() | None |
| Tensor.resize_() | Only allow resizes that do not change shape |
| Tensor.resize_as_() | Only allow resizes that do not change shape |
| Tensor.round(), torch.round() | Keeps input names |
| Tensor.round_() | None |
| Tensor.rsqrt(), torch.rsqrt() | Keeps input names |
| Tensor.rsqrt_() | None |

�

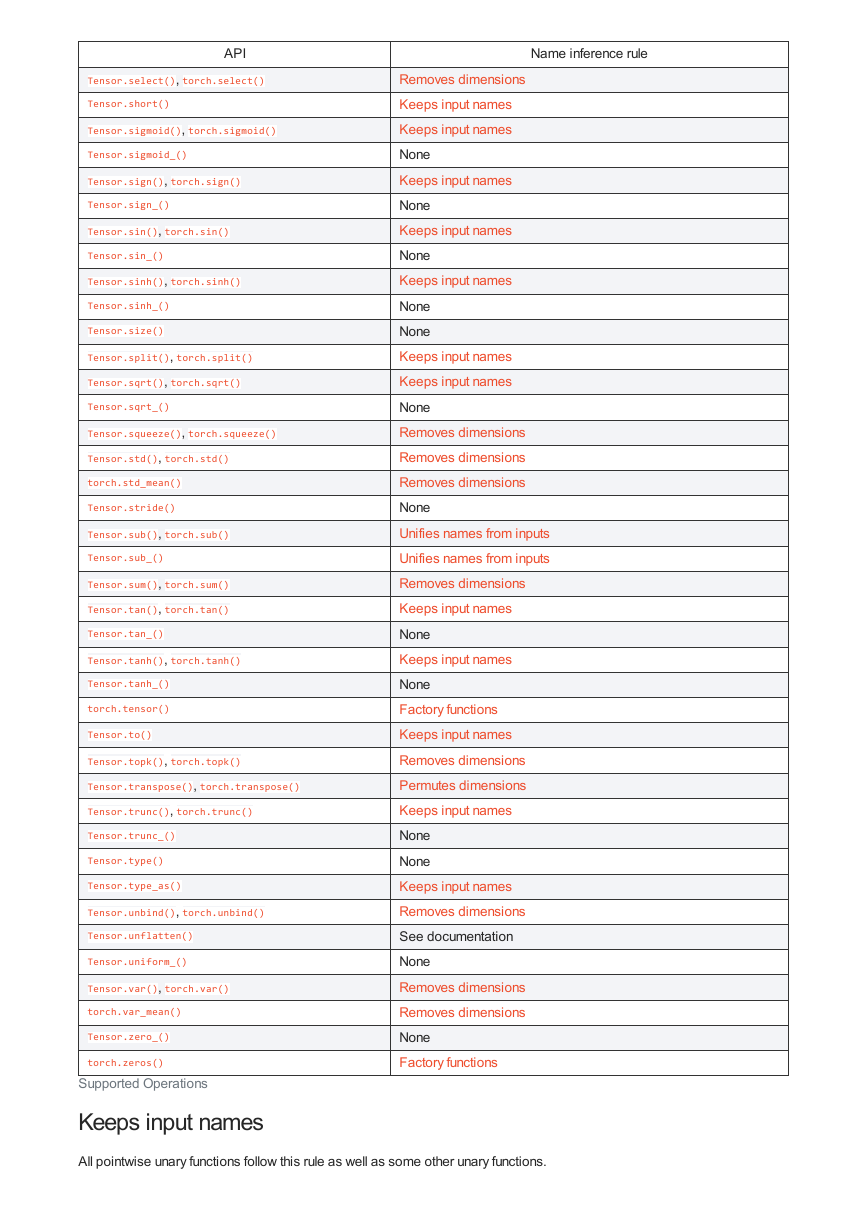

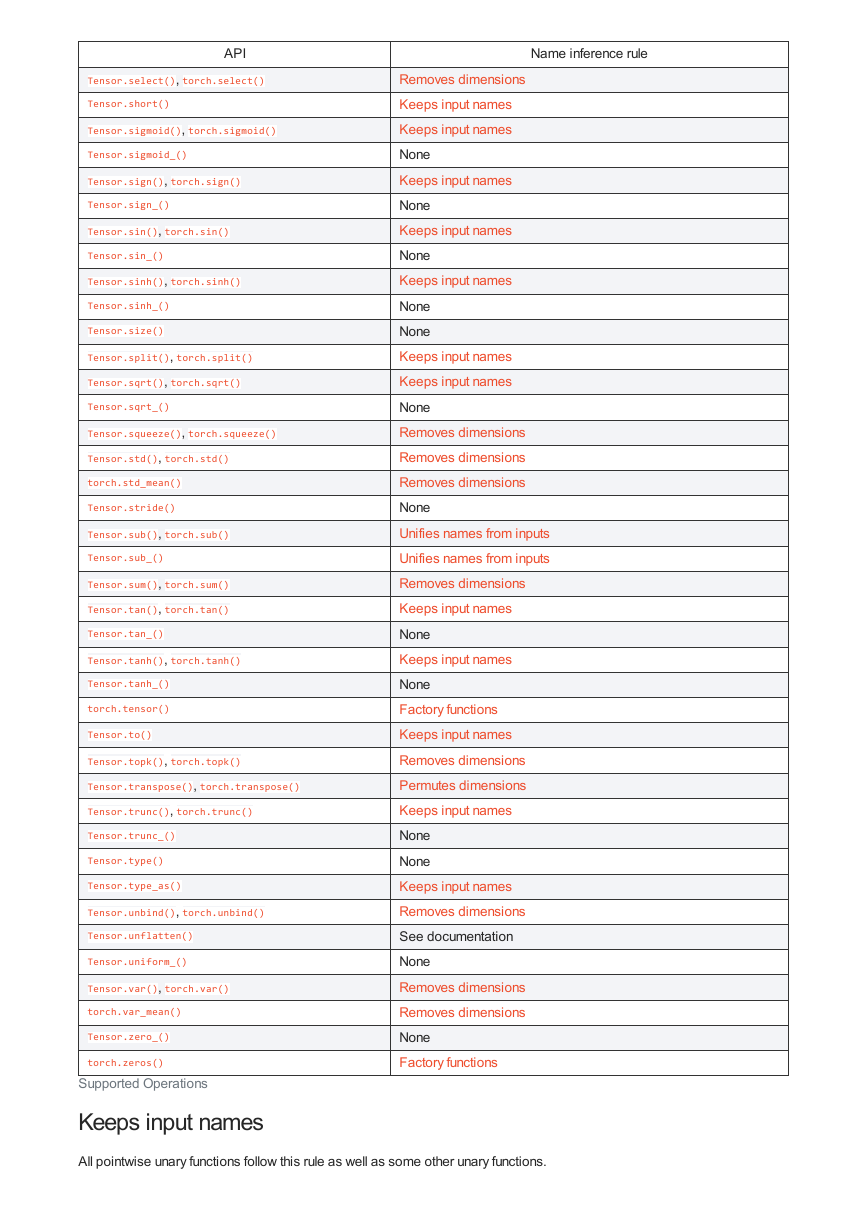

| API | Name inference rule |
| --- | --- |
| Tensor.select(), torch.select() | Removes dimensions |
| Tensor.short() | Keeps input names |
| Tensor.sigmoid(), torch.sigmoid() | Keeps input names |
| Tensor.sigmoid_() | None |
| Tensor.sign(), torch.sign() | Keeps input names |
| Tensor.sign_() | None |
| Tensor.sin(), torch.sin() | Keeps input names |
| Tensor.sin_() | None |
| Tensor.sinh(), torch.sinh() | Keeps input names |
| Tensor.sinh_() | None |
| Tensor.size() | None |
| Tensor.split(), torch.split() | Keeps input names |
| Tensor.sqrt(), torch.sqrt() | Keeps input names |
| Tensor.sqrt_() | None |
| Tensor.squeeze(), torch.squeeze() | Removes dimensions |
| Tensor.std(), torch.std() | Removes dimensions |
| torch.std_mean() | Removes dimensions |
| Tensor.stride() | None |
| Tensor.sub(), torch.sub() | Unifies names from inputs |
| Tensor.sub_() | Unifies names from inputs |
| Tensor.sum(), torch.sum() | Removes dimensions |
| Tensor.tan(), torch.tan() | Keeps input names |
| Tensor.tan_() | None |
| Tensor.tanh(), torch.tanh() | Keeps input names |
| Tensor.tanh_() | None |
| torch.tensor() | Factory functions |
| Tensor.to() | Keeps input names |
| Tensor.topk(), torch.topk() | Removes dimensions |
| Tensor.transpose(), torch.transpose() | Permutes dimensions |
| Tensor.trunc(), torch.trunc() | Keeps input names |
| Tensor.trunc_() | None |
| Tensor.type() | None |
| Tensor.type_as() | Keeps input names |
| Tensor.unbind(), torch.unbind() | Removes dimensions |
| Tensor.unflatten() | See documentation |
| Tensor.uniform_() | None |
| Tensor.var(), torch.var() | Removes dimensions |
| torch.var_mean() | Removes dimensions |
| Tensor.zero_() | None |
| torch.zeros() | Factory functions |

Supported Operations

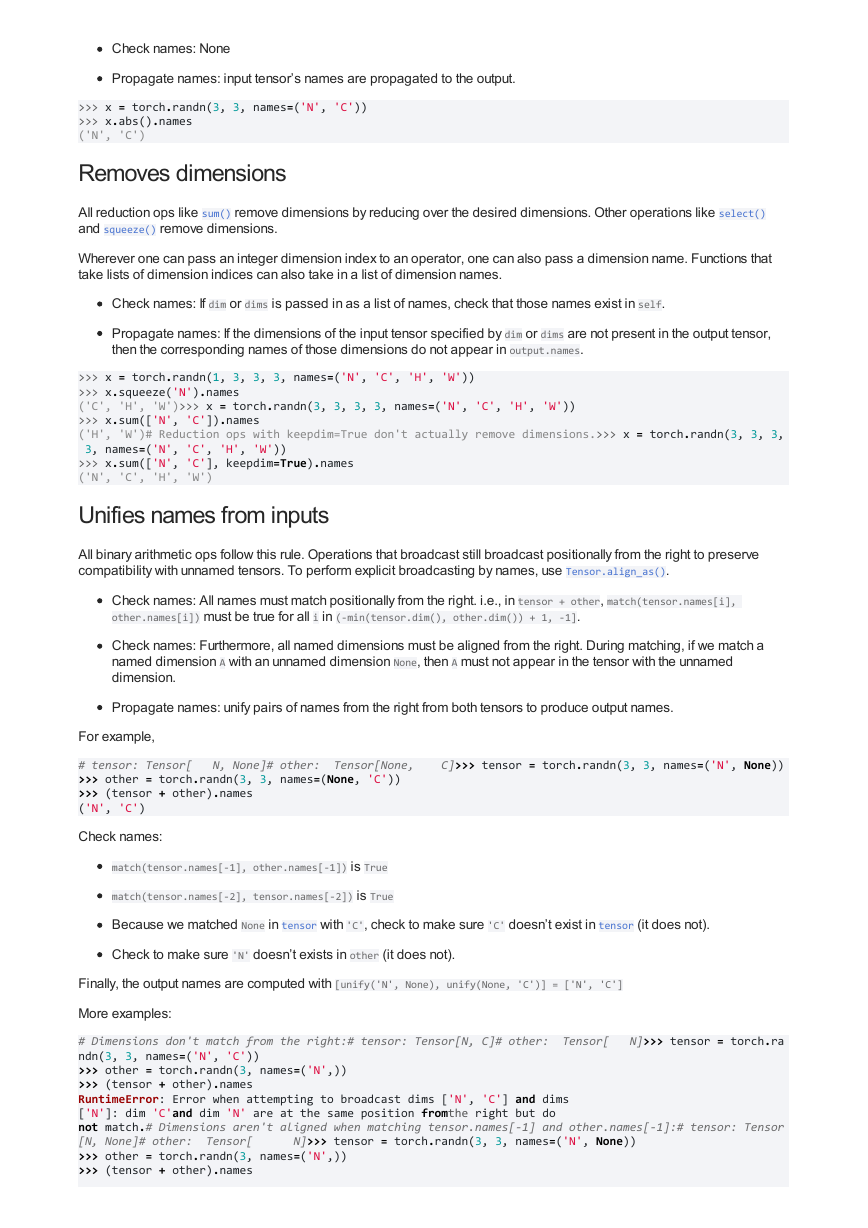

Keeps input names

All pointwise unary functions follow this rule as well as some other unary functions.

�

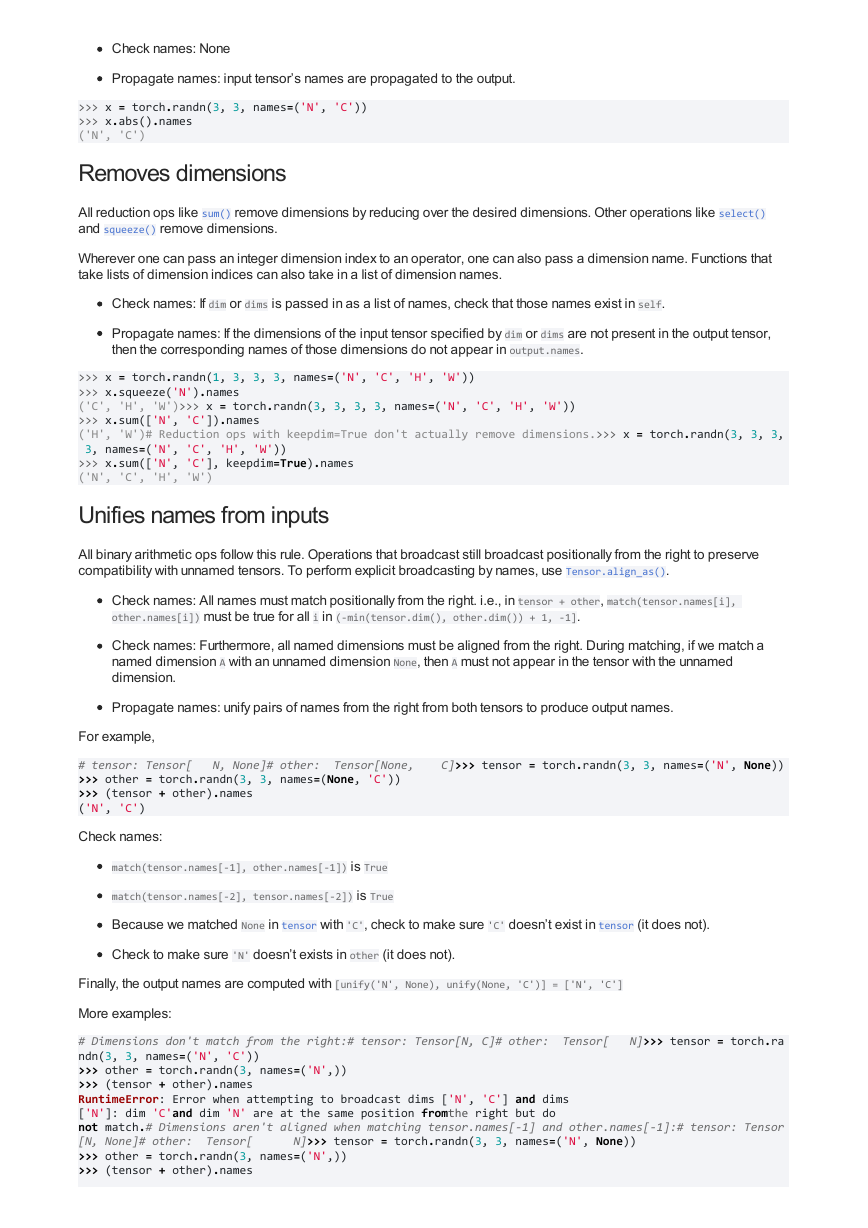

Check names: None

Propagate names: input tensor’s names are propagated to the output.

>>> x = torch.randn(3, 3, names=('N', 'C'))

>>> x.abs().names

('N', 'C')

Removes dimensions

All reduction ops like sum() remove dimensions by reducing over the desired dimensions. Other operations, like select() and squeeze(), also remove dimensions.

Wherever one can pass an integer dimension index to an operator, one can also pass a dimension name. Functions that take lists of dimension indices can also take in a list of dimension names.

Check names: If dim or dims is passed in as a list of names, check that those names exist in self.

Propagate names: If the dimensions of the input tensor specified by dim or dims are not present in the output tensor, then the corresponding names of those dimensions do not appear in output.names.

>>> x = torch.randn(1, 3, 3, 3, names=('N', 'C', 'H', 'W'))

>>> x.squeeze('N').names

('C', 'H', 'W')

>>> x = torch.randn(3, 3, 3, 3, names=('N', 'C', 'H', 'W'))

>>> x.sum(['N', 'C']).names

('H', 'W')

>>> # Reduction ops with keepdim=True don't actually remove dimensions.

>>> x = torch.randn(3, 3, 3, 3, names=('N', 'C', 'H', 'W'))

>>> x.sum(['N', 'C'], keepdim=True).names

('N', 'C', 'H', 'W')
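
The propagation rule for dimension-removing ops can be modeled without PyTorch. The following is a minimal sketch in plain Python, not PyTorch's implementation; the helper names `resolve_dim` and `reduced_names` are hypothetical and exist only for illustration, and `ValueError` stands in for whatever error PyTorch raises.

```python
from typing import Optional, Sequence, Tuple, Union

Name = Optional[str]
Dim = Union[int, str]


def resolve_dim(names: Sequence[Name], dim: Dim) -> int:
    """Turn a dimension name or a (possibly negative) index into a non-negative index."""
    if isinstance(dim, str):
        if dim not in names:
            # "Check names": a name passed as dim must exist in the tensor.
            raise ValueError(f"name {dim!r} not found in {tuple(names)}")
        return list(names).index(dim)
    return dim % len(names)


def reduced_names(
    names: Sequence[Name], dims: Sequence[Dim], keepdim: bool = False
) -> Tuple[Name, ...]:
    """Names of the output of a reduction over `dims` (hypothetical helper)."""
    removed = {resolve_dim(names, d) for d in dims}
    if keepdim:
        # The reduced dimensions are kept, so every name survives.
        return tuple(names)
    return tuple(n for i, n in enumerate(names) if i not in removed)


print(reduced_names(('N', 'C', 'H', 'W'), ['N', 'C']))                # ('H', 'W')
print(reduced_names(('N', 'C', 'H', 'W'), ['N', 'C'], keepdim=True))  # ('N', 'C', 'H', 'W')
print(reduced_names(('N', 'C', 'H', 'W'), [0]))                       # ('C', 'H', 'W')
```

Names and integer indices go through the same lookup, which mirrors the statement that a dimension name is accepted wherever an index is.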

Unifies names from inputs

All binary arithmetic ops follow this rule. Operations that broadcast still broadcast positionally from the right to preserve compatibility with unnamed tensors. To perform explicit broadcasting by names, use Tensor.align_as().

Check names: All names must match positionally from the right. That is, in tensor + other, match(tensor.names[i], other.names[i]) must be true for every i from -min(tensor.dim(), other.dim()) to -1, inclusive.

Check names: Furthermore, all named dimensions must be aligned from the right. During matching, if we match a named dimension A with an unnamed dimension None, then A must not appear in the tensor with the unnamed dimension.

Propagate names: unify pairs of names from the right from both tensors to produce output names.

For example,

>>> # tensor: Tensor[   N, None]

>>> # other:  Tensor[None,    C]

>>> tensor = torch.randn(3, 3, names=('N', None))

>>> other = torch.randn(3, 3, names=(None, 'C'))

>>> (tensor + other).names

('N', 'C')

Check names:

match(tensor.names[-1], other.names[-1]) is True

match(tensor.names[-2], other.names[-2]) is True

Because we matched None in tensor with 'C', check to make sure 'C' doesn’t exist in tensor (it does not).

Check to make sure 'N' doesn’t exist in other (it does not).

Finally, the output names are computed with [unify('N', None), unify(None, 'C')] = ['N', 'C']
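
The checks and the propagation step can be sketched in plain Python. This is an illustrative model under stated assumptions, not PyTorch's implementation: `match` and `unify` follow the description in the text, `unify_from_right` is a hypothetical helper name, missing leading dimensions of the shorter tensor are treated as unnamed, and `ValueError` stands in for the `RuntimeError` that PyTorch raises.

```python
from typing import Optional, Sequence, Tuple

Name = Optional[str]


def match(a: Name, b: Name) -> bool:
    """Two names match if they are equal or if either one is None (unnamed)."""
    return a is None or b is None or a == b


def unify(a: Name, b: Name) -> Name:
    """Return the more specific of two matching names."""
    if not match(a, b):
        raise ValueError(f"names {a!r} and {b!r} do not match")
    return a if a is not None else b


def unify_from_right(x: Sequence[Name], y: Sequence[Name]) -> Tuple[Name, ...]:
    """Output names of a broadcasting binary op on tensors named x and y."""
    longer, shorter = (tuple(x), tuple(y)) if len(x) >= len(y) else (tuple(y), tuple(x))
    # Pad the shorter list with None on the left so positions line up from the right.
    padded = (None,) * (len(longer) - len(shorter)) + shorter
    out = []
    for a, b in zip(reversed(longer), reversed(padded)):
        out.append(unify(a, b))
        # Alignment check: a name matched against None must not appear
        # anywhere in the tensor that supplied the None.
        if a is not None and b is None and a in padded:
            raise ValueError(f"misaligned dim {a!r}")
        if b is not None and a is None and b in longer:
            raise ValueError(f"misaligned dim {b!r}")
    return tuple(reversed(out))


print(unify_from_right(('N', None), (None, 'C')))  # ('N', 'C')
```

Walking the names from the right reports a positional mismatch before a misalignment, which is the order the two checks are described in.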

More examples:

>>> # Dimensions don't match from the right:

>>> # tensor: Tensor[N, C]

>>> # other:  Tensor[   N]

>>> tensor = torch.randn(3, 3, names=('N', 'C'))

>>> other = torch.randn(3, names=('N',))

>>> (tensor + other).names

RuntimeError: Error when attempting to broadcast dims ['N', 'C'] and dims ['N']: dim 'C' and dim 'N' are at the same position from the right but do not match.

>>> # Dimensions aren't aligned when matching tensor.names[-1] and other.names[-1]:

>>> # tensor: Tensor[N, None]

>>> # other:  Tensor[      N]

>>> tensor = torch.randn(3, 3, names=('N', None))

>>> other = torch.randn(3, names=('N',))

>>> (tensor + other).names

RuntimeError: Misaligned dims when attempting to broadcast dims ['N'] and dims ['N', None]: dim 'N' appears in a different position from the right across both lists.

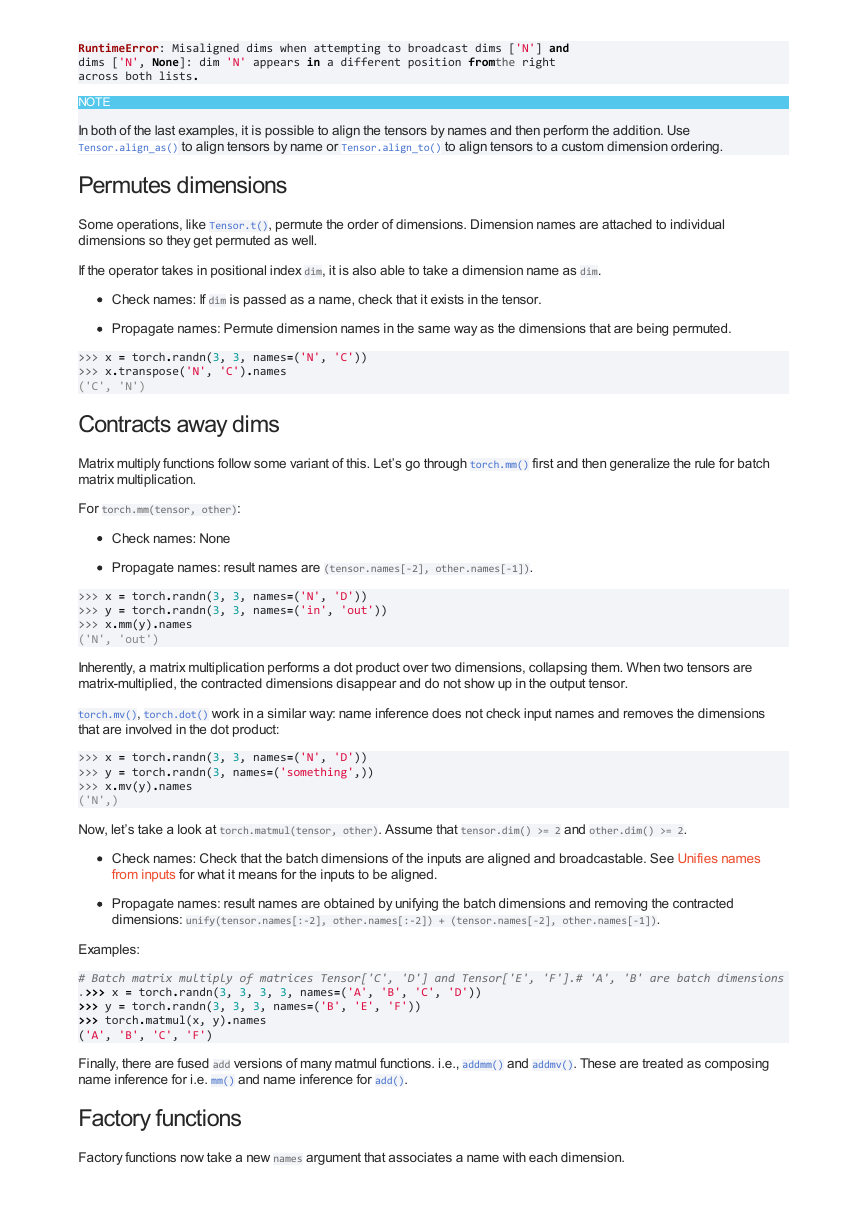

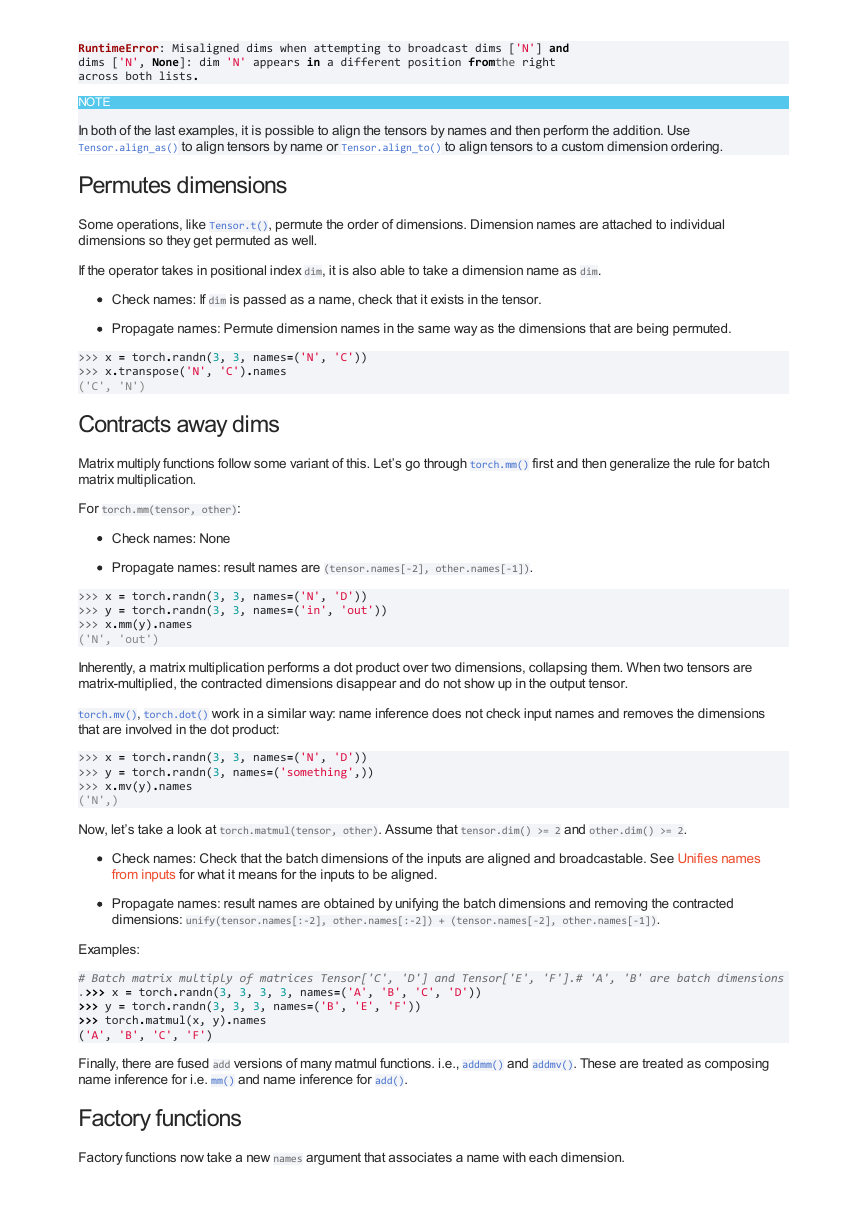

NOTE

In both of the last examples, it is possible to align the tensors by names and then perform the addition. Use Tensor.align_as() to align tensors by name or Tensor.align_to() to align tensors to a custom dimension ordering.
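
As a hedged illustration of the note, the first failing example (names ('N', 'C') plus names ('N',)) can be made to work by aligning `other` to `tensor` before adding. This sketch assumes a PyTorch build in which the experimental named tensor API is available; the variable name `aligned` is chosen here for illustration.

```python
import torch

tensor = torch.randn(3, 3, names=('N', 'C'))
other = torch.randn(3, names=('N',))

# align_as reorders the dims of `other` to follow tensor.names and adds a
# size-one dim for every name that `other` does not have ('C' here).
aligned = other.align_as(tensor)
print(aligned.names)   # ('N', 'C')
print(aligned.shape)   # torch.Size([3, 1])

# The names now match positionally from the right, so the addition succeeds.
print((tensor + aligned).names)  # ('N', 'C')
```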

Permutes dimensions

Some operations, like Tensor.t(), permute the order of dimensions. Dimension names are attached to individual dimensions so they get permuted as well.

If the operator takes in positional index dim, it is also able to take a dimension name as dim.

Check names: If dim is passed as a name, check that it exists in the tensor.

Propagate names: Permute dimension names in the same way as the dimensions that are being permuted.

>>> x = torch.randn(3, 3, names=('N', 'C'))

>>> x.transpose('N', 'C').names

('C', 'N')
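
The same behaviour can be modeled in plain Python. This is a minimal sketch rather than PyTorch's implementation; `transposed_names` is a hypothetical helper that swaps two entries of a names tuple, accepting either a name or an index for each dimension.

```python
from typing import Optional, Sequence, Tuple, Union

Name = Optional[str]
Dim = Union[int, str]


def transposed_names(names: Sequence[Name], dim0: Dim, dim1: Dim) -> Tuple[Name, ...]:
    """Swap the names at dim0 and dim1, mirroring how transpose swaps the dims."""

    def index_of(dim: Dim) -> int:
        if isinstance(dim, str):
            if dim not in names:
                # "Check names": a name passed as dim must exist in the tensor.
                raise ValueError(f"name {dim!r} not found in {tuple(names)}")
            return list(names).index(dim)
        return dim % len(names)

    i, j = index_of(dim0), index_of(dim1)
    out = list(names)
    out[i], out[j] = out[j], out[i]
    return tuple(out)


print(transposed_names(('N', 'C'), 'N', 'C'))         # ('C', 'N')
print(transposed_names(('N', 'C', 'H', 'W'), 1, -1))  # ('N', 'W', 'H', 'C')
```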

Contracts away dims

Matrix multiply functions follow some variant of this. Let’s go through torch.mm() first and then generalize the rule for batch matrix multiplication.

For torch.mm(tensor, other):

Check names: None

Propagate names: result names are (tensor.names[-2], other.names[-1]).

>>> x = torch.randn(3, 3, names=('N', 'D'))

>>> y = torch.randn(3, 3, names=('in', 'out'))

>>> x.mm(y).names

('N', 'out')

Inherently, a matrix multiplication performs a dot product over two dimensions, collapsing them. When two tensors are matrix-multiplied, the contracted dimensions disappear and do not show up in the output tensor.

torch.mv() and torch.dot() work in a similar way: name inference does not check input names and removes the dimensions that are involved in the dot product:

>>> x = torch.randn(3, 3, names=('N', 'D'))

>>> y = torch.randn(3, names=('something',))

>>> x.mv(y).names

('N',)

Now, let’s take a look at torch.matmul(tensor, other). Assume that tensor.dim() >= 2 and other.dim() >= 2.

Check names: Check that the batch dimensions of the inputs are aligned and broadcastable. See Unifies names from inputs for what it means for the inputs to be aligned.

Propagate names: result names are obtained by unifying the batch dimensions and removing the contracted dimensions: unify(tensor.names[:-2], other.names[:-2]) + (tensor.names[-2], other.names[-1]).

Examples:

>>> # Batch matrix multiply of matrices Tensor['C', 'D'] and Tensor['E', 'F'].

>>> # 'A', 'B' are batch dimensions.

>>> x = torch.randn(3, 3, 3, 3, names=('A', 'B', 'C', 'D'))

>>> y = torch.randn(3, 3, 3, names=('B', 'E', 'F'))

>>> torch.matmul(x, y).names

('A', 'B', 'C', 'F')
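
The propagation formula can be checked with a small plain-Python model. This is a sketch under stated assumptions, not PyTorch's implementation: `unify_batch` and `matmul_names` are hypothetical helper names, both inputs are assumed to have at least two dimensions, and the alignment check on the batch dimensions is omitted for brevity.

```python
from typing import Optional, Sequence, Tuple

Name = Optional[str]


def unify(a: Name, b: Name) -> Name:
    """Return the more specific of two names; None matches anything."""
    if a is not None and b is not None and a != b:
        raise ValueError(f"names {a!r} and {b!r} do not match")
    return a if a is not None else b


def unify_batch(x: Sequence[Name], y: Sequence[Name]) -> Tuple[Name, ...]:
    """Unify two lists of batch names, lined up from the right."""
    n = max(len(x), len(y))
    px = (None,) * (n - len(x)) + tuple(x)
    py = (None,) * (n - len(y)) + tuple(y)
    return tuple(unify(a, b) for a, b in zip(px, py))


def matmul_names(tensor: Sequence[Name], other: Sequence[Name]) -> Tuple[Name, ...]:
    """unify(tensor[:-2], other[:-2]) + (tensor[-2], other[-1])."""
    if len(tensor) < 2 or len(other) < 2:
        raise ValueError("this sketch assumes both inputs have at least 2 dims")
    # The contracted dims tensor[-1] and other[-2] do not appear in the result.
    return unify_batch(tensor[:-2], other[:-2]) + (tensor[-2], other[-1])


print(matmul_names(('A', 'B', 'C', 'D'), ('B', 'E', 'F')))  # ('A', 'B', 'C', 'F')
print(matmul_names(('N', 'D'), ('in', 'out')))              # ('N', 'out')
```

With no batch dimensions the formula reduces to the torch.mm() rule, which is why the second call reproduces the earlier mm() result.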

Finally, there are fused add versions of many matmul functions, e.g., addmm() and addmv(). These are treated as composing the name inference for the multiplication (e.g., mm()) with the name inference for add().

Factory functions

Factory functions now take a new names argument that associates a name with each dimension.
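
A short example of the names argument, assuming a PyTorch build in which the experimental named tensor API is available. The shapes and names below are chosen only for illustration.

```python
import torch

# `names` assigns one name per dimension, in order.
x = torch.zeros(2, 3, names=('N', 'C'))
print(x.names)  # ('N', 'C')
print(x.shape)  # torch.Size([2, 3])

# A None entry leaves that dimension unnamed.
y = torch.ones(2, 3, names=('N', None))
print(y.names)  # ('N', None)
```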
