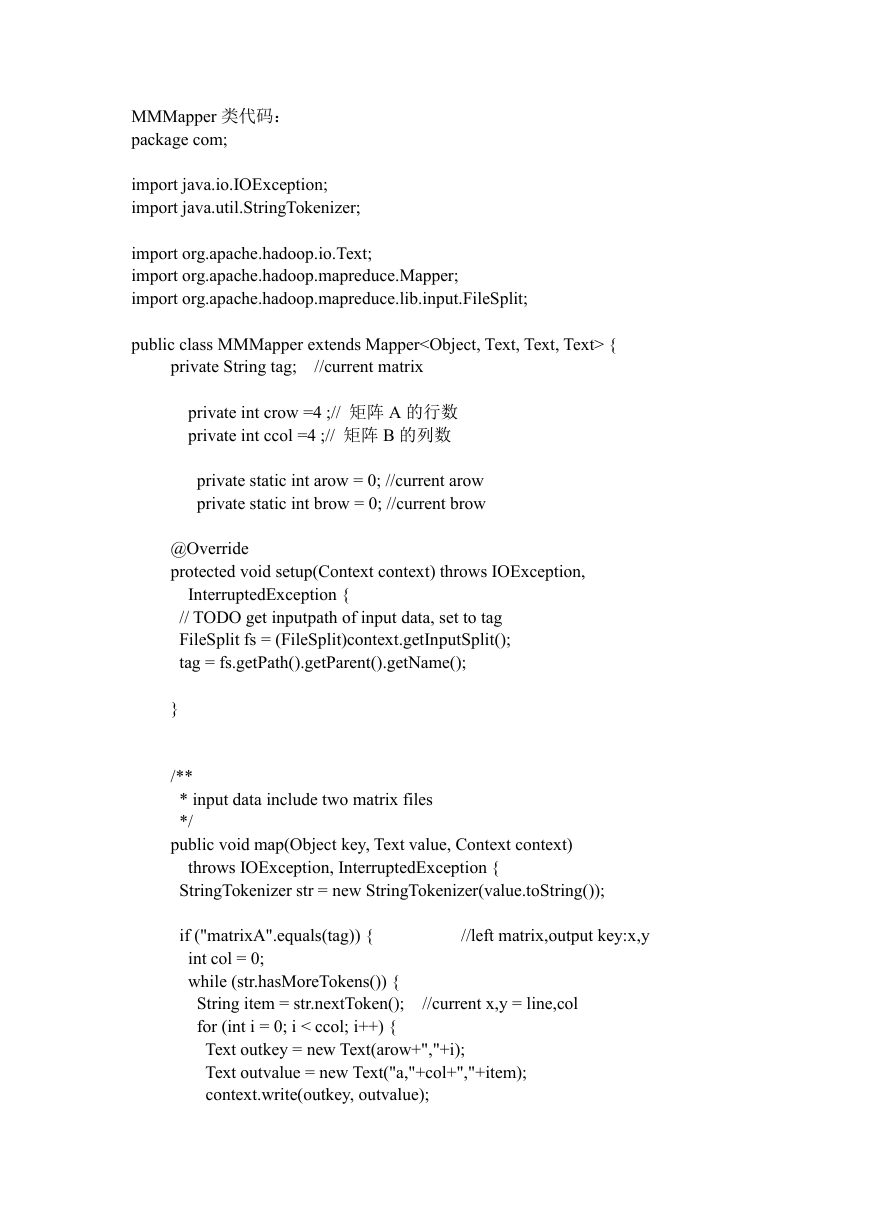

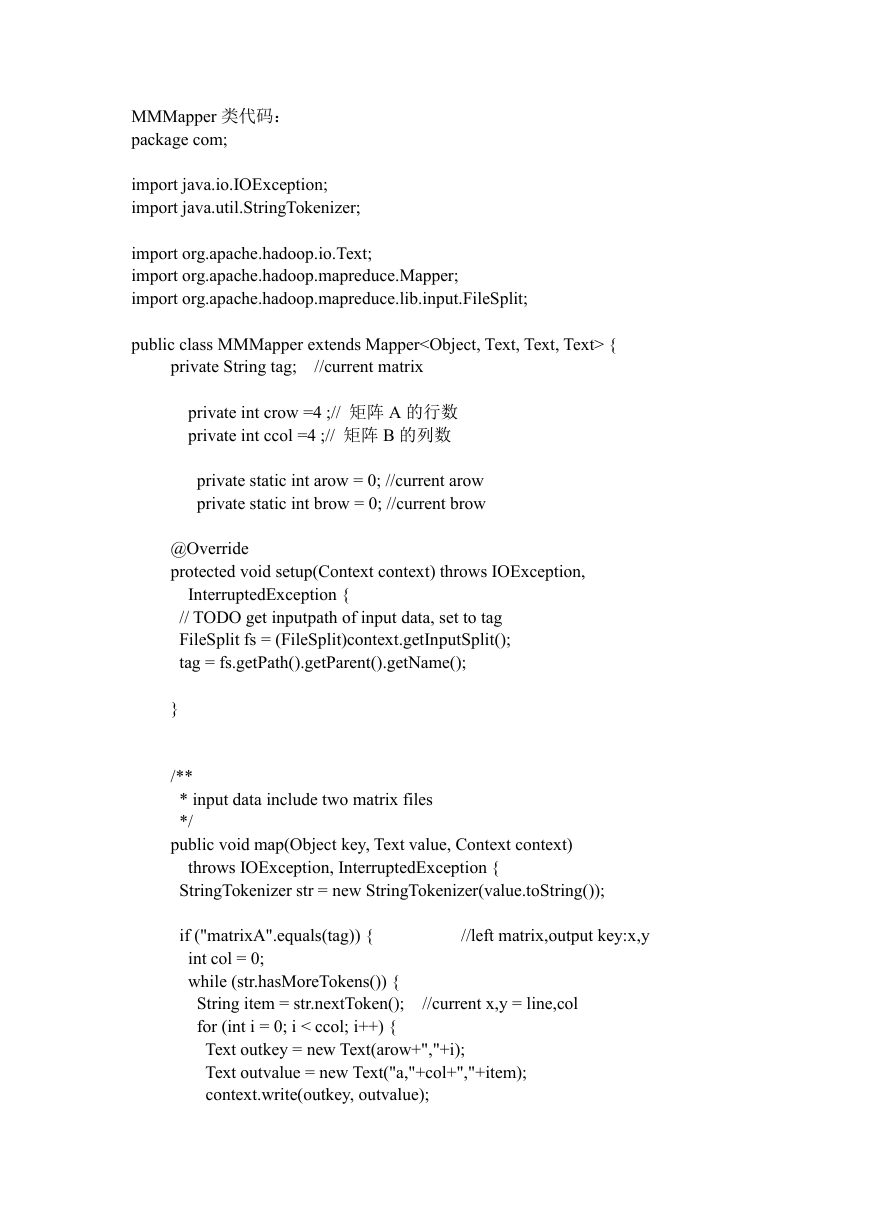

MMMapper class code:

package com;

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class MMMapper extends Mapper<Object, Text, Text, Text> {

private String tag;

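// dimensions of the result matrix

private int crow = 4; // number of rows in matrix A

private int ccol = 4; // number of columns in matrix B

// row counters; they assume each matrix file is read in order by a single map task

private static int arow = 0; // index of the current row of A

private static int brow = 0; // index of the current row of B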

@Override

protected void setup(Context context) throws IOException,

InterruptedException {

// TODO get inputpath of input data, set to tag

FileSplit fs = (FileSplit)context.getInputSplit();

tag = fs.getPath().getParent().getName();

}

/**

* input data include two matrix files

*/

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

StringTokenizer str = new StringTokenizer(value.toString());

//left matrix,output key:x,y

if ("matrixA".equals(tag)) {

int col = 0;

while (str.hasMoreTokens()) {

String item = str.nextToken();

for (int i = 0; i < ccol; i++) {

Text outkey = new Text(arow+","+i);

Text outvalue = new Text("a,"+col+","+item);

context.write(outkey, outvalue);

//current x,y = line,col


System.out.println(outkey+" | "+outvalue);

}

col++;

}

arow++;

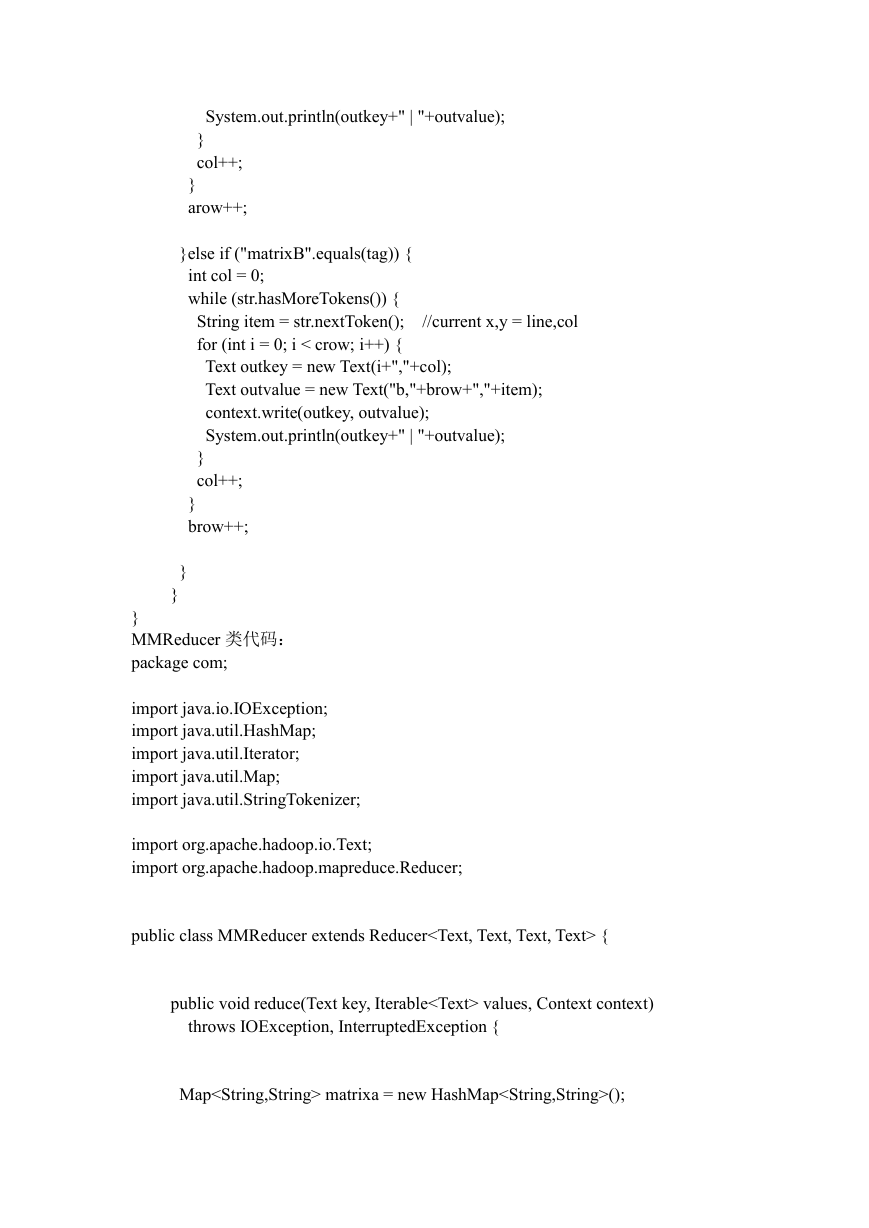

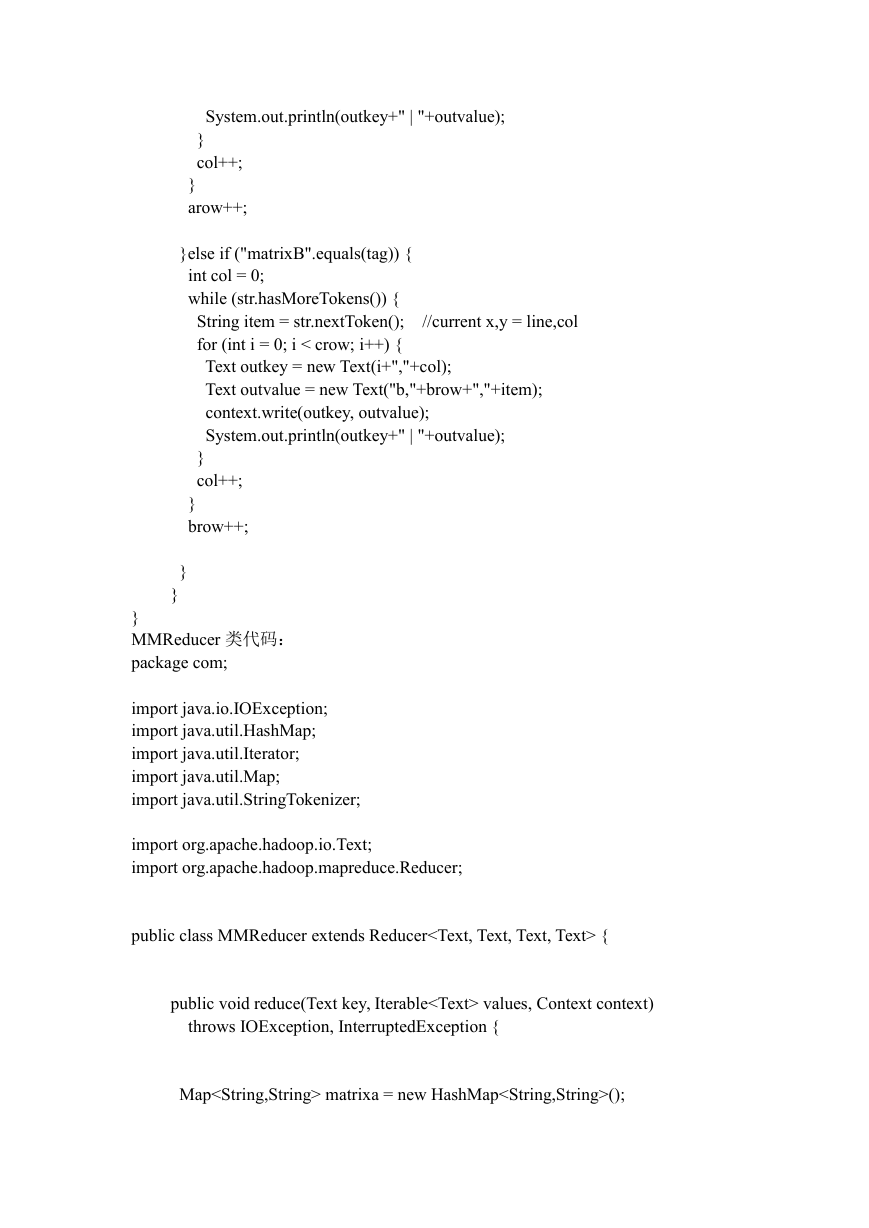

}else if ("matrixB".equals(tag)) {

int col = 0;

while (str.hasMoreTokens()) {

String item = str.nextToken();

for (int i = 0; i < crow; i++) {

Text outkey = new Text(i+","+col);

Text outvalue = new Text("b,"+brow+","+item);

context.write(outkey, outvalue);

System.out.println(outkey+" | "+outvalue);

//current x,y = line,col

}

col++;

}

brow++;

}

}

}
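The emission scheme of the mapper can be tried out without a cluster. The following is a minimal sketch in plain Java, not the Hadoop job itself: the class name `MapEmitSketch` and its two helper methods are hypothetical and only mirror the `outkey | outvalue` lines that the mapper prints. Each element of row `r` of A is sent to every cell `(r, j)` of the result, and each element of row `r` of B is sent to every cell `(i, col)`.

```java
import java.util.ArrayList;
import java.util.List;

public class MapEmitSketch {

    // Records emitted for one row of matrix A (hypothetical helper).
    // Each element goes to every result cell in the same row.
    static List<String> emitForRowOfA(int rowIndex, String line, int colsOfB) {
        List<String> out = new ArrayList<>();
        String[] items = line.trim().split("\\s+");
        for (int col = 0; col < items.length; col++) {
            for (int j = 0; j < colsOfB; j++) {
                out.add(rowIndex + "," + j + " | a," + col + "," + items[col]);
            }
        }
        return out;
    }

    // Records emitted for one row of matrix B (hypothetical helper).
    // Each element goes to every result cell in the same column.
    static List<String> emitForRowOfB(int rowIndex, String line, int rowsOfA) {
        List<String> out = new ArrayList<>();
        String[] items = line.trim().split("\\s+");
        for (int col = 0; col < items.length; col++) {
            for (int i = 0; i < rowsOfA; i++) {
                out.add(i + "," + col + " | b," + rowIndex + "," + items[col]);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Illustrative 2x2 case: first row of A is "1 2", first row of B is "5 6".
        for (String r : emitForRowOfA(0, "1 2", 2)) {
            System.out.println(r);
        }
        for (String r : emitForRowOfB(0, "5 6", 2)) {
            System.out.println(r);
        }
    }
}
```

In both record types the second field of the value is the shared inner index (the column of A, the row of B), which is what lets the reducer pair the two factors of each product.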

MMReducer class code:

package com;

import java.io.IOException;

import java.util.HashMap;

import java.util.Iterator;

import java.util.Map;

import java.util.StringTokenizer;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

public class MMReducer extends Reducer<Text, Text, Text, Text> {

@Override

public void reduce(Text key, Iterable<Text> values, Context context)

throws IOException, InterruptedException {

// inner index -> element value, one map per source matrix

Map<String, String> matrixa = new HashMap<String, String>();

Map<String, String> matrixb = new HashMap<String, String>();

// values example : b,0,2 or a,0,4

for (Text val : values) {

StringTokenizer str = new StringTokenizer(val.toString(),",");

String sourceMatrix = str.nextToken();

if ("a".equals(sourceMatrix)) {

matrixa.put(str.nextToken(), str.nextToken()); //(0,4)

}

if ("b".equals(sourceMatrix)) {

matrixb.put(str.nextToken(), str.nextToken()); //(0,2)

}

}

// dot product over the shared inner index

int result = 0;

Iterator<String> iter = matrixa.keySet().iterator();

while (iter.hasNext()) {

String mapkey = iter.next();

result += Integer.parseInt(matrixa.get(mapkey)) * Integer.parseInt(matrixb.get(mapkey));

}

context.write(key, new Text(String.valueOf(result)));

}

}
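The reduce step for a single output cell can likewise be checked in isolation. This is a hedged sketch in plain Java rather than the Hadoop reducer: the class `ReduceCellSketch` and the method `reduceCell` are hypothetical names, and the input strings follow the `a,index,value` / `b,index,value` format emitted by the mapper. One deliberate difference, labeled in the code, is that an index missing from B is skipped instead of causing a parse failure.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class ReduceCellSketch {

    // Computes one cell of the product from the values grouped under its key.
    static int reduceCell(List<String> values) {
        Map<String, String> a = new HashMap<>();
        Map<String, String> b = new HashMap<>();
        for (String v : values) {
            String[] parts = v.split(",");
            if ("a".equals(parts[0])) {
                a.put(parts[1], parts[2]);
            } else if ("b".equals(parts[0])) {
                b.put(parts[1], parts[2]);
            }
        }
        int result = 0;
        for (Map.Entry<String, String> e : a.entrySet()) {
            String other = b.get(e.getKey());
            if (other == null) {
                continue; // assumption of this sketch: a missing entry counts as zero
            }
            result += Integer.parseInt(e.getValue()) * Integer.parseInt(other);
        }
        return result;
    }

    public static void main(String[] args) {
        // Row (1, 2) of A times column (5, 7) of B; arrival order does not matter.
        List<String> values = Arrays.asList("a,0,1", "b,1,7", "a,1,2", "b,0,5");
        System.out.println(reduceCell(values)); // prints 19
    }
}
```

Because the values are keyed by inner index before multiplying, the result does not depend on the order in which the shuffle delivers them.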

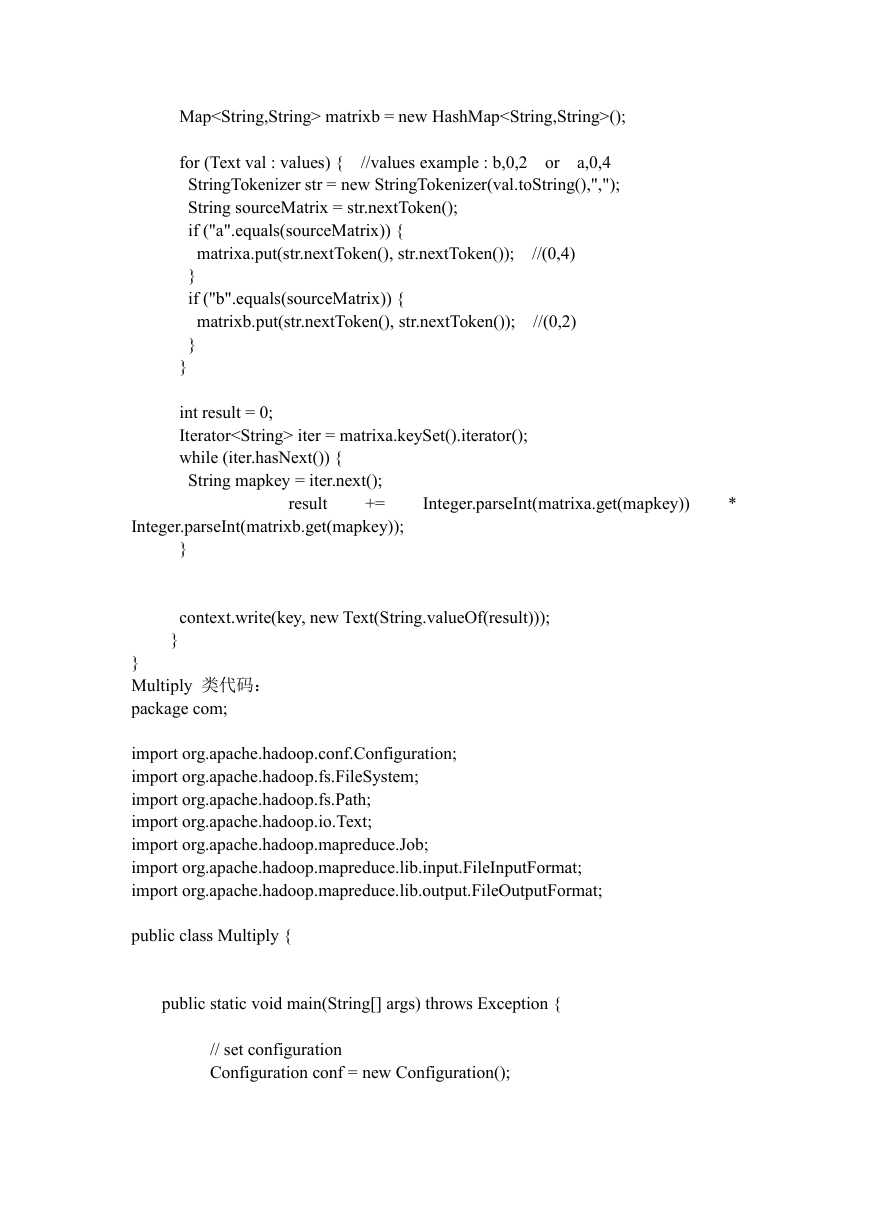

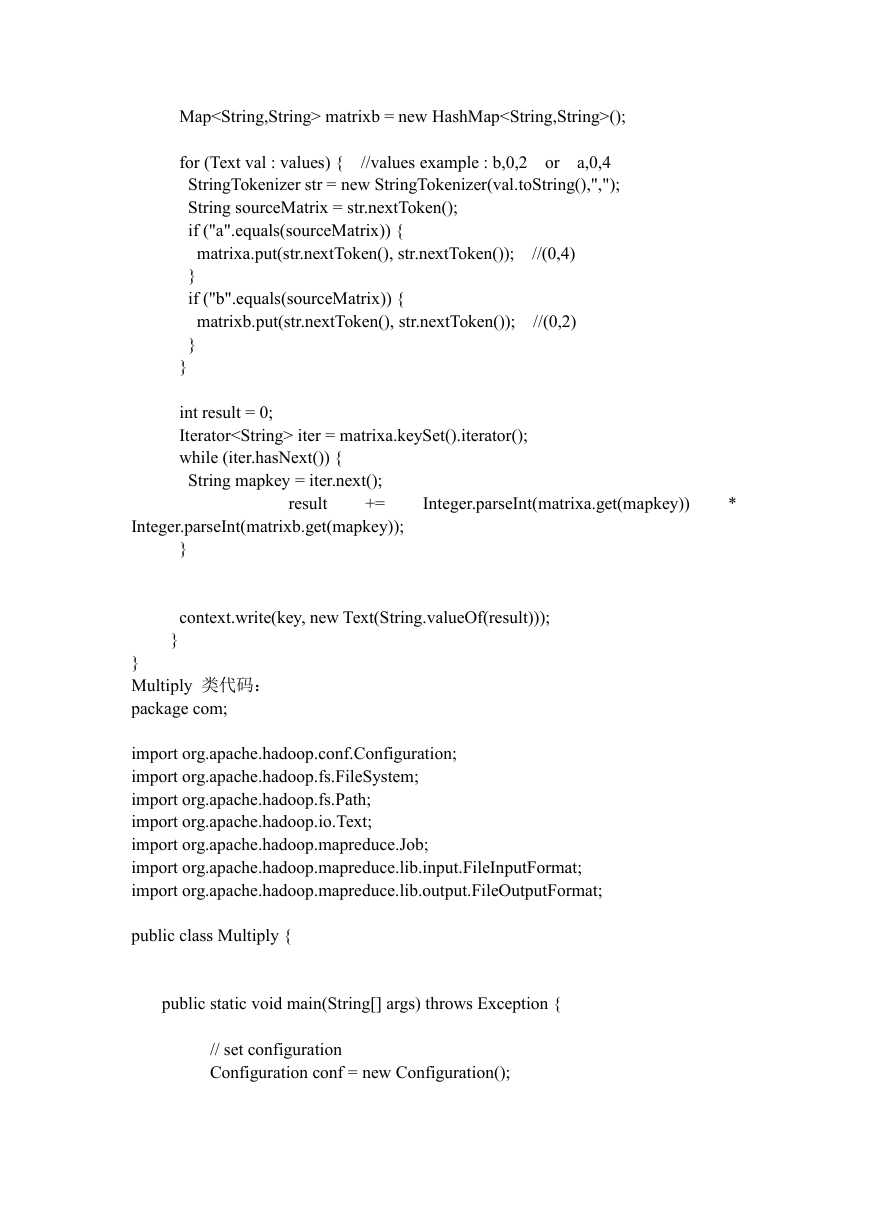

Multiply class code:

package com;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Multiply {

public static void main(String[] args) throws Exception {

// set configuration

Configuration conf = new Configuration();


// create job

Job job = new Job(conf,"MatrixMultiply");

job.setJarByClass(Multiply.class);

// specify Mapper & Reducer

job.setMapperClass(MMMapper.class);

job.setReducerClass(MMReducer.class);

// specify output types of mapper and reducer

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

// specify input and output DIRECTORIES

Path inPathA = new Path(args[0]);

Path inPathB = new Path(args[1]);

Path outPath = new Path(args[2]);

FileInputFormat.addInputPath(job, inPathA);

FileInputFormat.addInputPath(job, inPathB);

FileOutputFormat.setOutputPath(job,outPath);

// delete output directory

try{

FileSystem hdfs = outPath.getFileSystem(conf);

if(hdfs.exists(outPath))

hdfs.delete(outPath, true); // recursive delete of the old output directory

hdfs.close();

} catch (Exception e){

e.printStackTrace();

return ;

}

// run the job

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

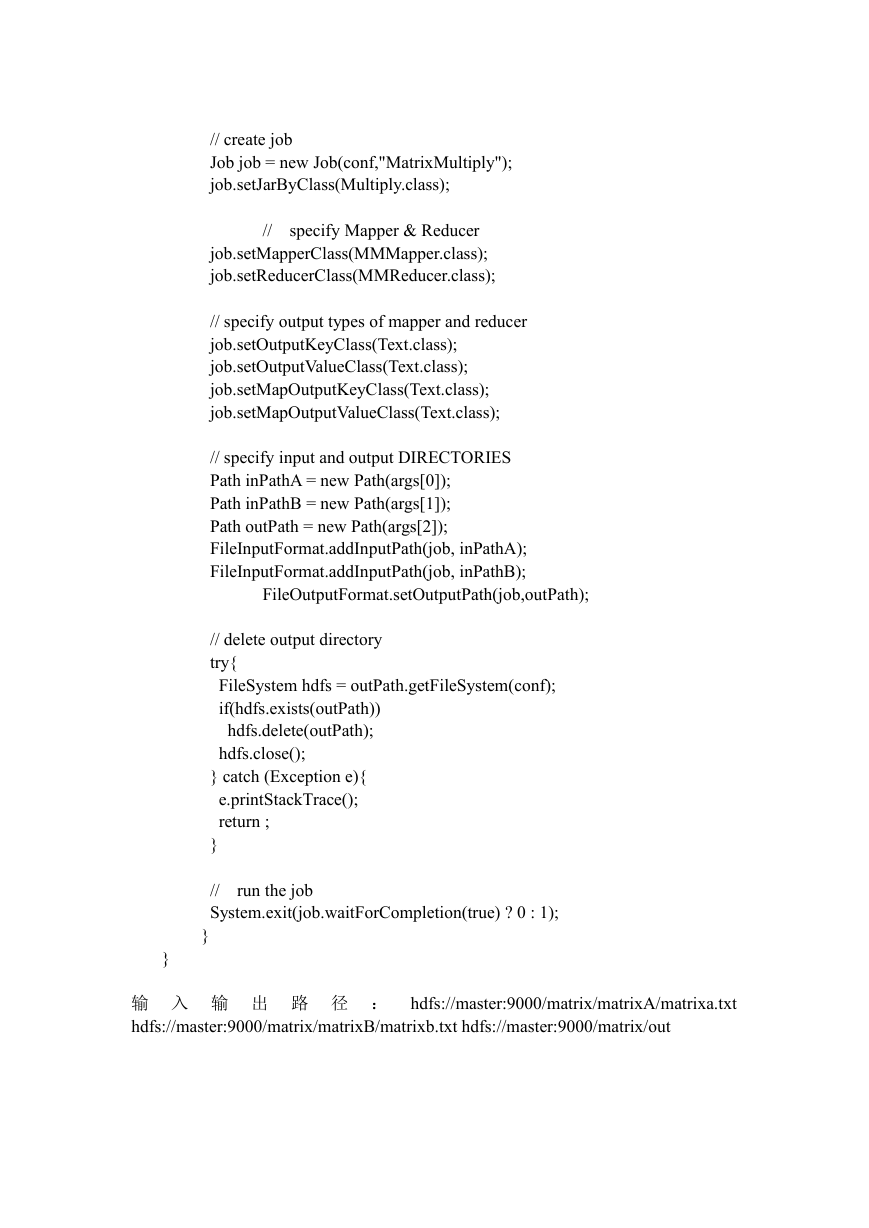

Input and output paths (passed as the three program arguments, in this order): hdfs://master:9000/matrix/matrixA/matrixa.txt hdfs://master:9000/matrix/matrixB/matrixb.txt hdfs://master:9000/matrix/out
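The directory names in these paths matter, because the mapper's `setup` method uses the name of the input file's parent directory as its `tag` and compares it with `"matrixA"` and `"matrixB"`. The sketch below illustrates that lookup with plain `java.net.URI` string handling; it is an analogue of `fs.getPath().getParent().getName()`, not the Hadoop `Path` API, and the class and method names are hypothetical.

```java
import java.net.URI;

public class TagFromPathSketch {

    // Returns the name of the directory that directly contains the file.
    static String parentDirName(String uri) {
        String path = URI.create(uri).getPath();
        String[] segments = path.split("/");
        return segments.length >= 2 ? segments[segments.length - 2] : "";
    }

    public static void main(String[] args) {
        System.out.println(parentDirName("hdfs://master:9000/matrix/matrixA/matrixa.txt")); // matrixA
        System.out.println(parentDirName("hdfs://master:9000/matrix/matrixB/matrixb.txt")); // matrixB
    }
}
```

If the input files were placed in directories with other names, neither branch of the mapper would match and no records would be emitted.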
