CS 229 – Machine Learning

https://stanford.edu/~shervine

VIP Refresher: Linear Algebra and Calculus

Afshine Amidi and Shervine Amidi

August 8, 2018

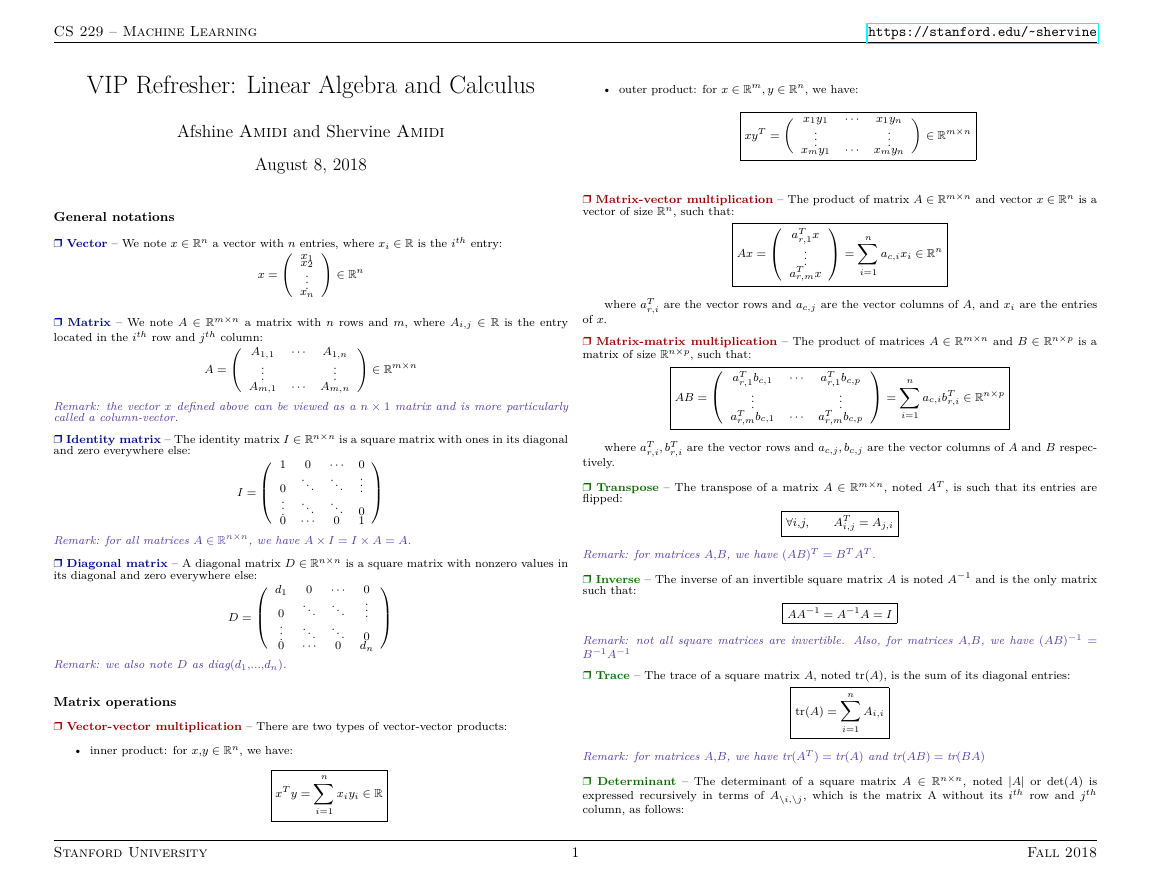

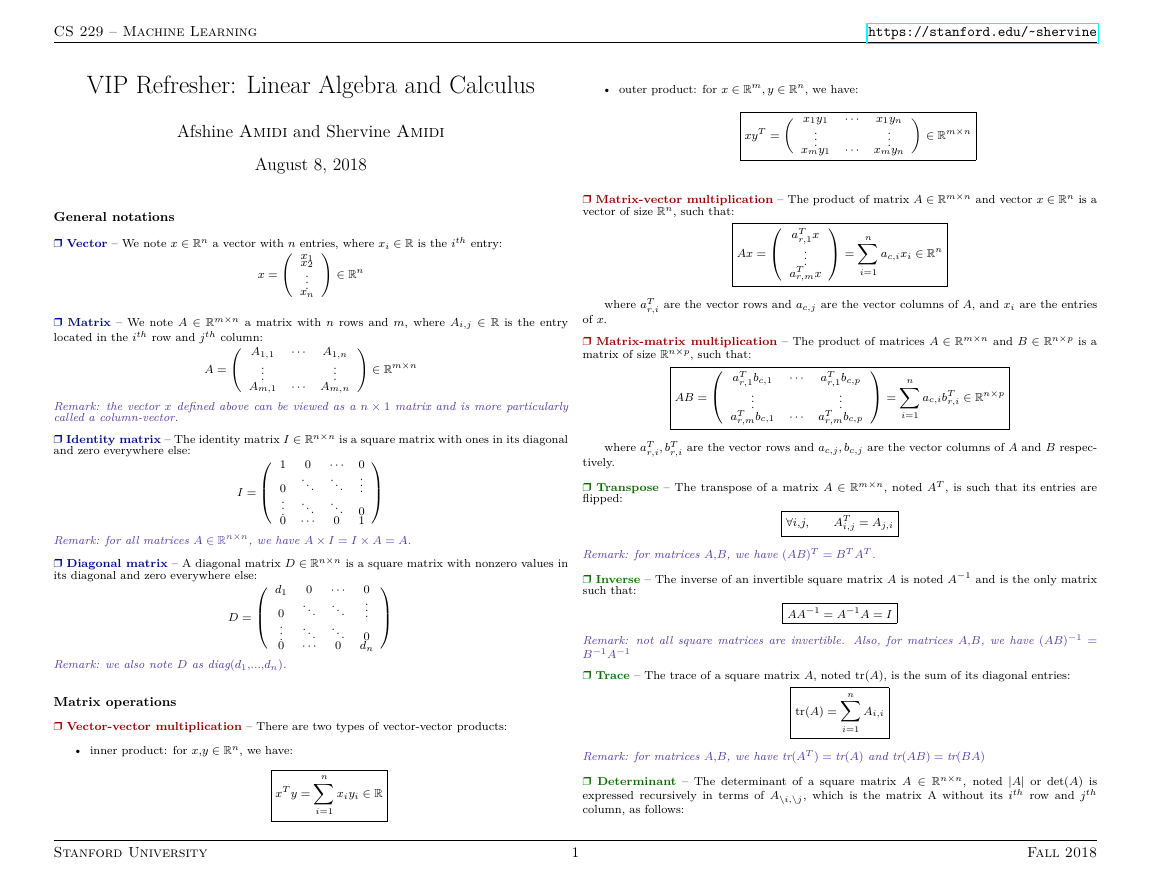

• outer product: for x ∈ Rm, y ∈ Rn, we have:

· · ·

x1y1

...

xmy1

∈ Rm×n

x1yn

...

xmyn

· · ·

xyT =

General notations

Ì Vector – We note x ∈ Rn a vector with n entries, where xi ∈ R is the ith entry:

!

x1x2...

xn

x =

∈ Rn

A1,1

Ì Matrix – We note A ∈ Rm×n a matrix with n rows and m, where Ai,j ∈ R is the entry

located in the ith row and jth column:

...

∈ Rm×n

!

A =

· · · A1,n

...

· · · Am,n

Am,1

Remark: the vector x defined above can be viewed as a n × 1 matrix and is more particularly

called a column-vector.

Ì Identity matrix – The identity matrix I ∈ Rn×n is a square matrix with ones in its diagonal

and zero everywhere else:

Remark: for all matrices A ∈ Rn×n, we have A × I = I × A = A.

Ì Diagonal matrix – A diagonal matrix D ∈ Rn×n is a square matrix with nonzero values in

its diagonal and zero everywhere else:

I =

0

...

0

1

d1

0

...

0

1

0

...

0

dn

0

...

...

· · ·

0

...

...

· · ·

· · ·

...

...

0

· · ·

...

...

0

0

...

0

Remark: we also note D as diag(d1,...,dn).

D =

Matrix operations

Ì Vector-vector multiplication – There are two types of vector-vector products:

• inner product: for x,y ∈ Rn, we have:

xT y =

nX

i=1

xiyi ∈ R

Ì Matrix-vector multiplication – The product of matrix A ∈ Rm×n and vector x ∈ Rn is a

vector of size Rn, such that:

aT

r,1x

...

aT

r,mx

=

nX

i=1

Ax =

ac,ixi ∈ Rn

r,i

where aT

are the vector rows and ac,j are the vector columns of A, and xi are the entries

of x.

Ì Matrix-matrix multiplication – The product of matrices A ∈ Rm×n and B ∈ Rn×p is a

matrix of size Rn×p, such that:

aT

r,1bc,1

...

aT

r,mbc,1

AB =

=

nX

i=1

· · ·

· · ·

aT

r,1bc,p

...

aT

r,mbc,p

ac,ibT

r,i ∈ Rn×p

r,i, bT

r,i

where aT

are the vector rows and ac,j , bc,j are the vector columns of A and B respec-

tively.

Ì Transpose – The transpose of a matrix A ∈ Rm×n, noted AT , is such that its entries are

flipped:

∀i,j,

AT

i,j

= Aj,i

Remark: for matrices A,B, we have (AB)T = BT AT .

Ì Inverse – The inverse of an invertible square matrix A is noted A−1 and is the only matrix

such that:

AA−1 = A−1A = I

Remark: not all square matrices are invertible. Also, for matrices A,B, we have (AB)−1 =

B−1A−1

Ì Trace – The trace of a square matrix A, noted tr(A), is the sum of its diagonal entries:

nX

tr(A) =

Ai,i

i=1

Remark: for matrices A,B, we have tr(AT ) = tr(A) and tr(AB) = tr(BA)

Ì Determinant – The determinant of a square matrix A ∈ Rn×n, noted |A| or det(A) is

expressed recursively in terms of A\i,\j, which is the matrix A without its ith row and jth

column, as follows:

Stanford University

1

Fall 2018

�

CS 229 – Machine Learning

https://stanford.edu/~shervine

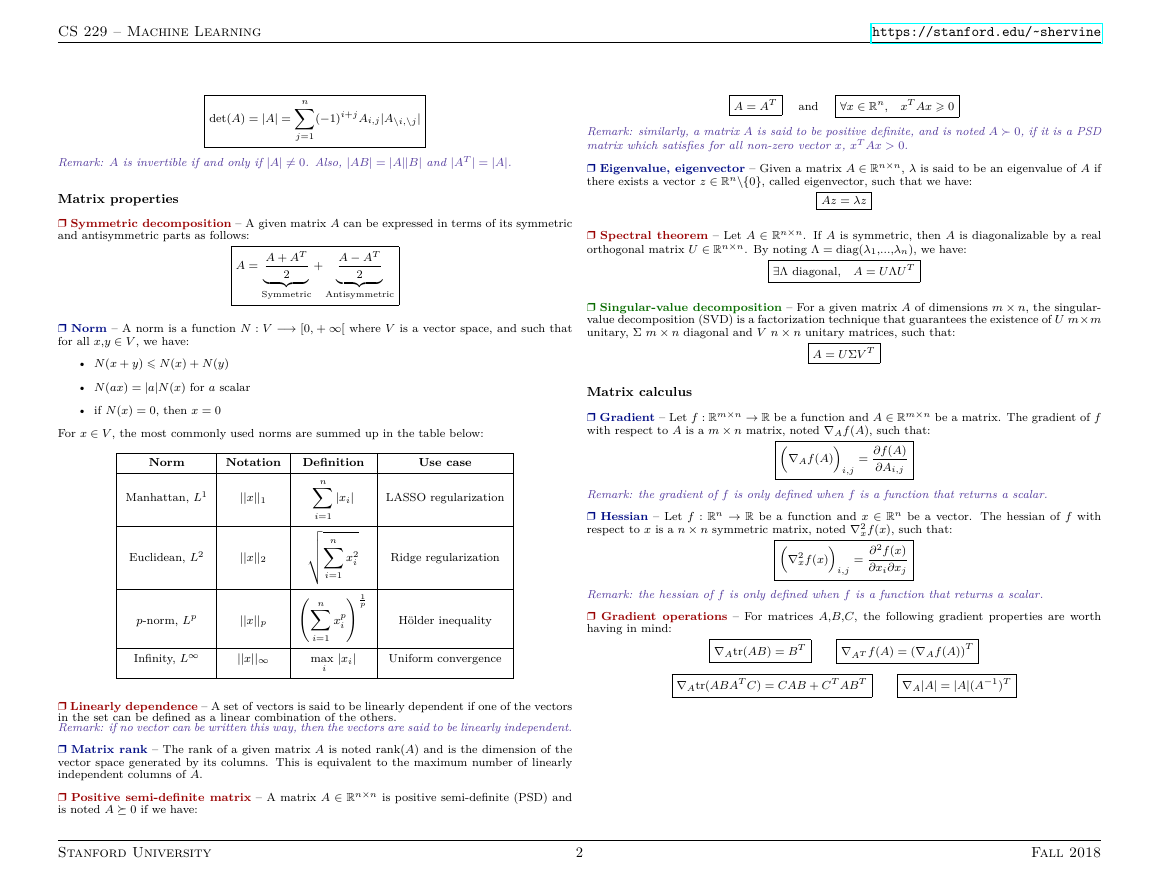

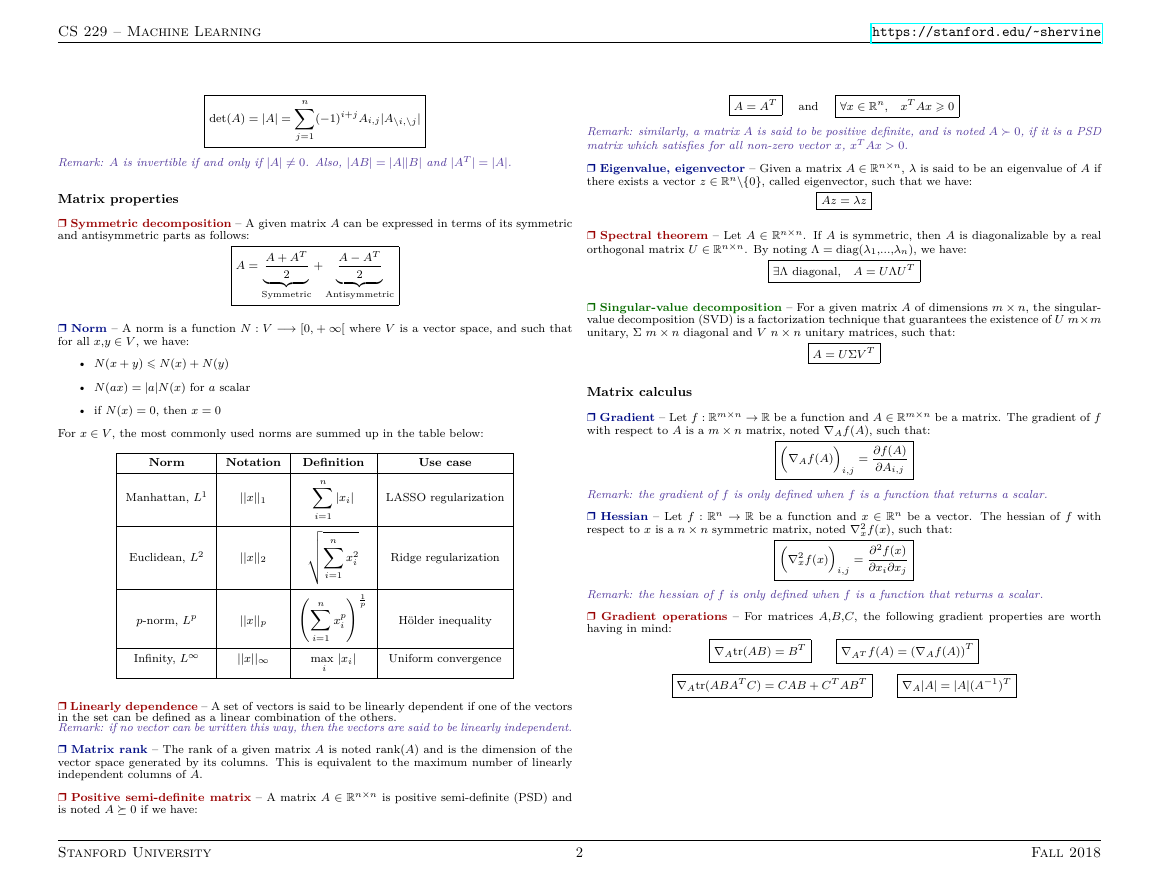

det(A) = |A| =

nX

(−1)i+j Ai,j|A\i,\j|

j=1

Remark: A is invertible if and only if |A| 6= 0. Also, |AB| = |A||B| and |AT | = |A|.

Matrix properties

Ì Symmetric decomposition – A given matrix A can be expressed in terms of its symmetric

and antisymmetric parts as follows:

A = A + AT

| {z }

2

Symmetric

+ A − AT

| {z }

2

Antisymmetric

Ì Norm – A norm is a function N : V −→ [0, + ∞[ where V is a vector space, and such that

for all x,y ∈ V , we have:

• N(x + y) N(x) + N(y)

• N(ax) = |a|N(x) for a scalar

• if N(x) = 0, then x = 0

For x ∈ V , the most commonly used norms are summed up in the table below:

Norm

Notation Definition

Manhattan, L1

||x||1

Euclidean, L2

||x||2

p-norm, Lp

Infinity, L∞

||x||p

||x||∞

|xi|

i=1

nX

vuut nX

nX

i=1

xp

i

x2

i

! 1

p

i=1

max

i

|xi|

Use case

LASSO regularization

Ridge regularization

Hölder inequality

Uniform convergence

A = AT

and

∀x ∈ Rn, xT Ax 0

Remark: similarly, a matrix A is said to be positive definite, and is noted A 0, if it is a PSD

matrix which satisfies for all non-zero vector x, xT Ax > 0.

Ì Eigenvalue, eigenvector – Given a matrix A ∈ Rn×n, λ is said to be an eigenvalue of A if

there exists a vector z ∈ Rn\{0}, called eigenvector, such that we have:

Az = λz

Ì Spectral theorem – Let A ∈ Rn×n. If A is symmetric, then A is diagonalizable by a real

orthogonal matrix U ∈ Rn×n. By noting Λ = diag(λ1,...,λn), we have:

∃Λ diagonal, A = UΛU T

Ì Singular-value decomposition – For a given matrix A of dimensions m × n, the singular-

value decomposition (SVD) is a factorization technique that guarantees the existence of U m×m

unitary, Σ m × n diagonal and V n × n unitary matrices, such that:

A = UΣV T

Matrix calculus

Ì Gradient – Let f : Rm×n → R be a function and A ∈ Rm×n be a matrix. The gradient of f

with respect to A is a m × n matrix, noted ∇Af(A), such that:

∇Af(A)

= ∂f(A)

∂Ai,j

i,j

Remark: the gradient of f is only defined when f is a function that returns a scalar.

Ì Hessian – Let f : Rn → R be a function and x ∈ Rn be a vector. The hessian of f with

respect to x is a n × n symmetric matrix, noted ∇2

∇2

xf(x)

xf(x), such that:

= ∂2f(x)

∂xi∂xj

i,j

Remark: the hessian of f is only defined when f is a function that returns a scalar.

Ì Gradient operations – For matrices A,B,C, the following gradient properties are worth

having in mind:

∇Atr(AB) = BT

∇AT f(A) = (∇Af(A))T

∇Atr(ABAT C) = CAB + CT ABT

∇A|A| = |A|(A−1)T

Ì Linearly dependence – A set of vectors is said to be linearly dependent if one of the vectors

in the set can be defined as a linear combination of the others.

Remark: if no vector can be written this way, then the vectors are said to be linearly independent.

Ì Matrix rank – The rank of a given matrix A is noted rank(A) and is the dimension of the

vector space generated by its columns. This is equivalent to the maximum number of linearly

independent columns of A.

Ì Positive semi-definite matrix – A matrix A ∈ Rn×n is positive semi-definite (PSD) and

is noted A 0 if we have:

Stanford University

2

Fall 2018

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc