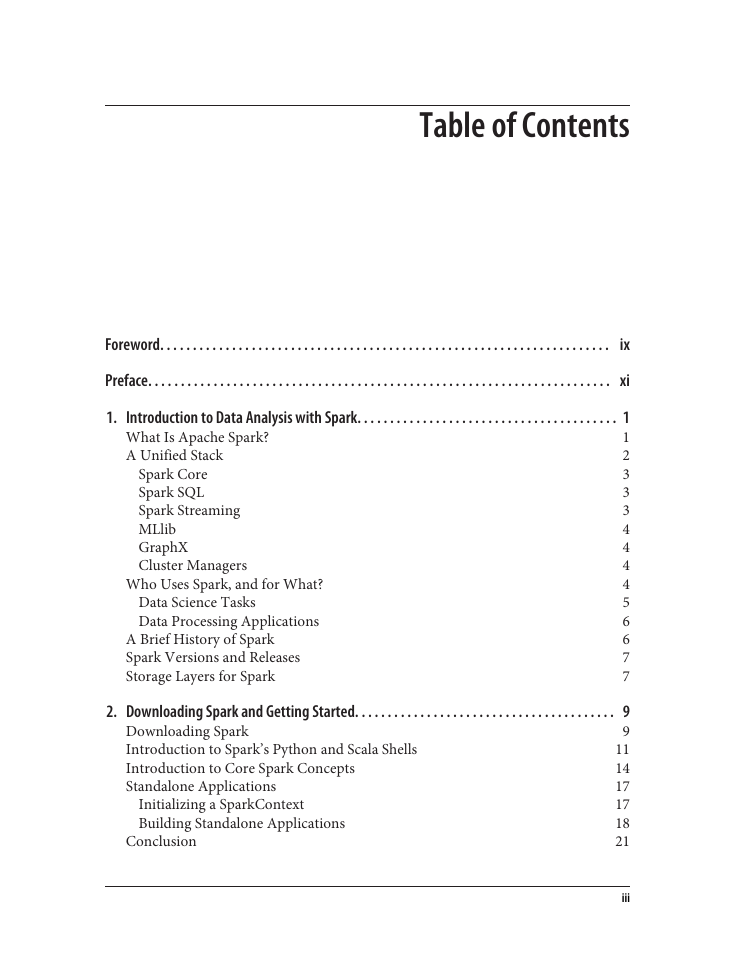

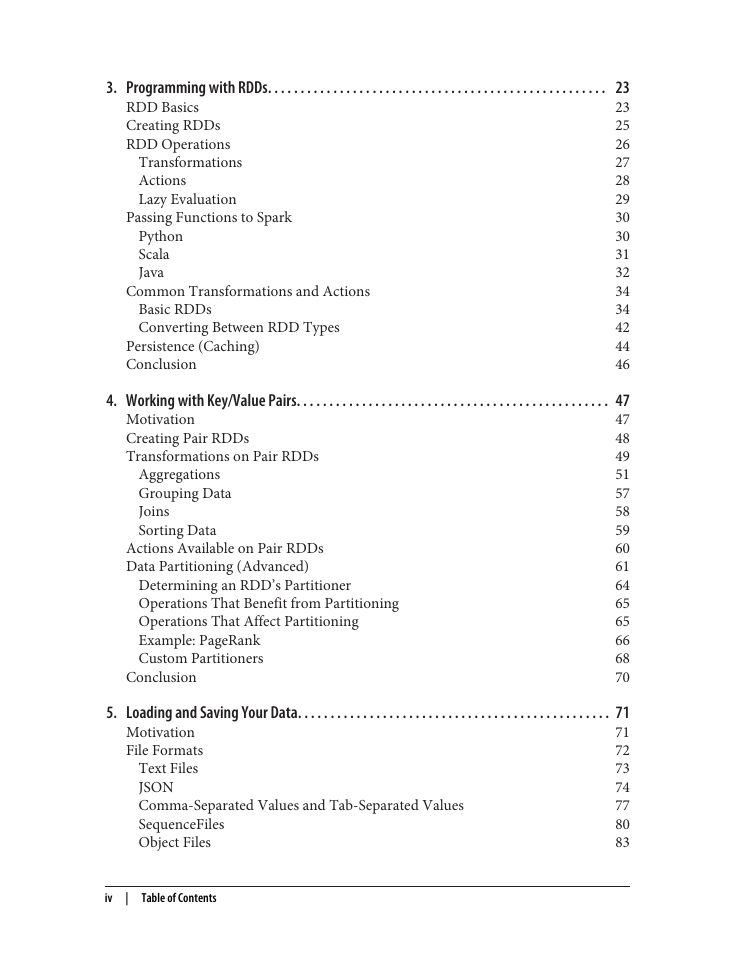

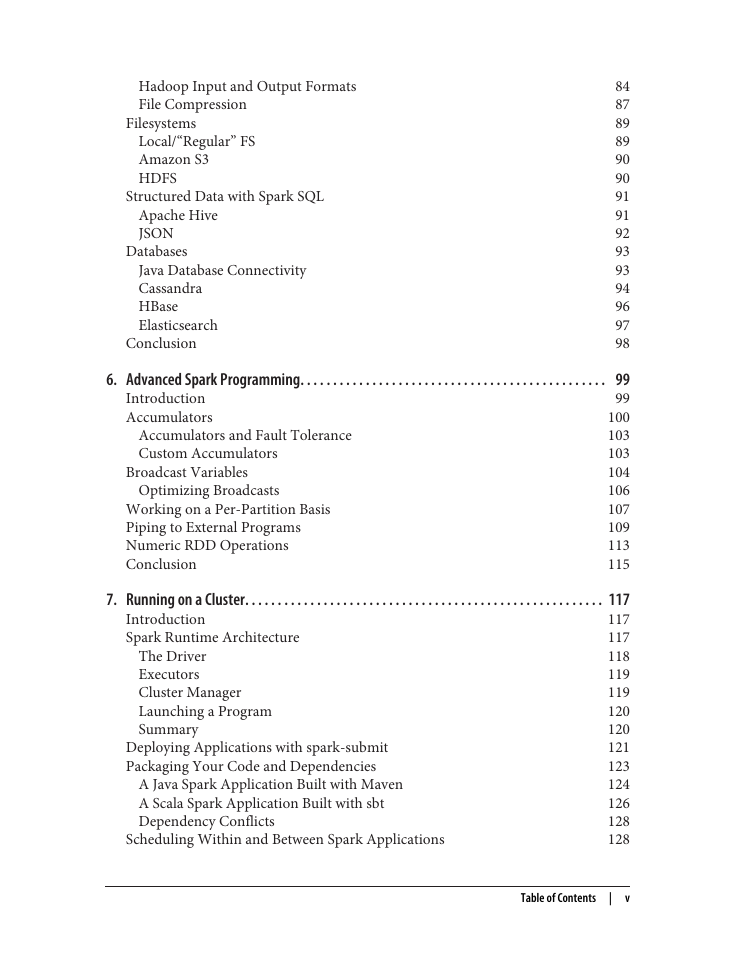

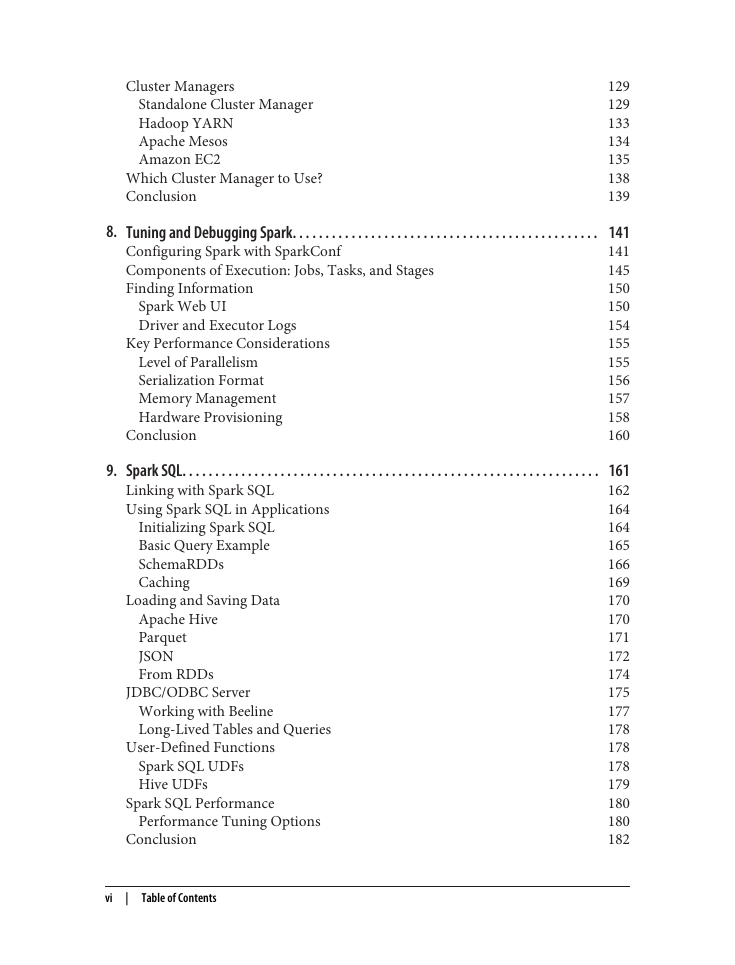

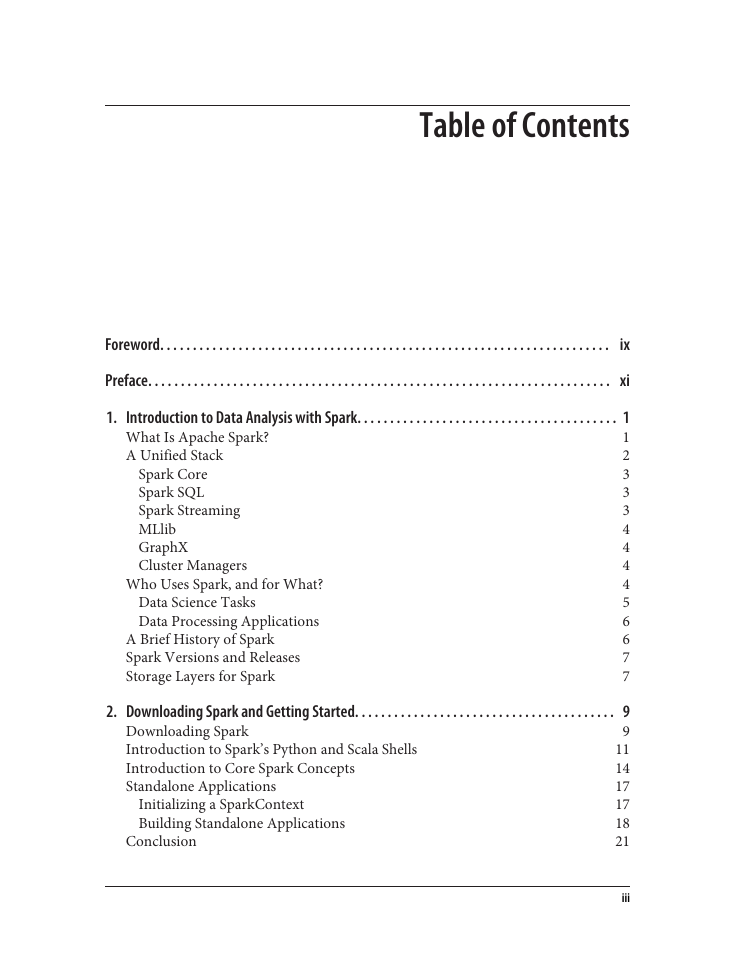

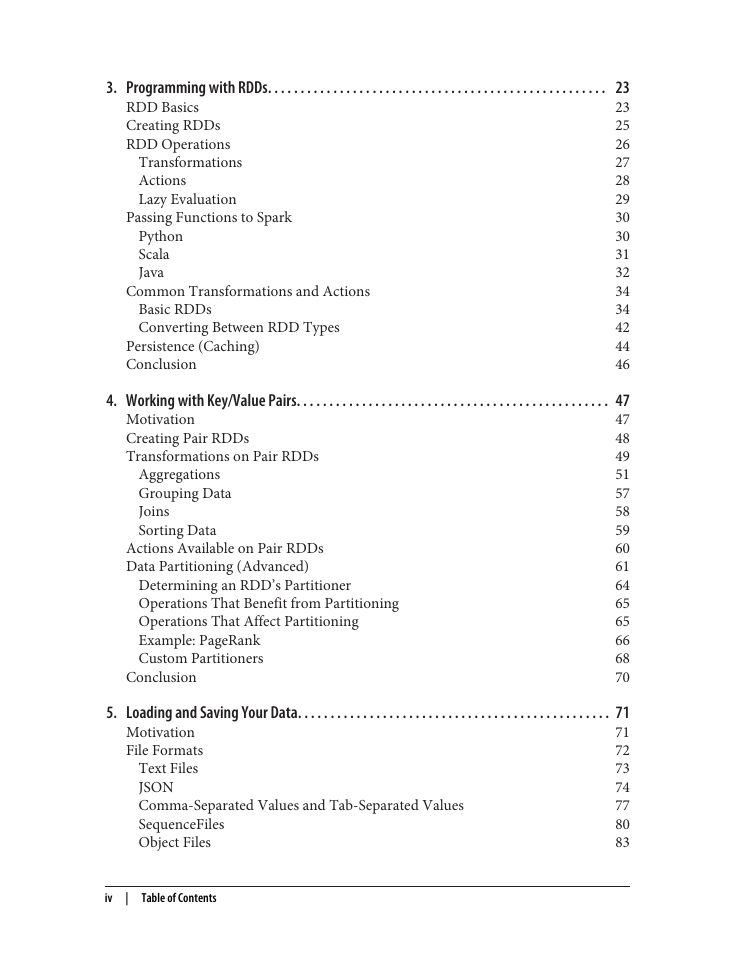

Table of Contents

Foreword

Preface

Audience

How This Book Is Organized

Supporting Books

Conventions Used in This Book

Code Examples

Safari® Books Online

How to Contact Us

Acknowledgments

Chapter 1. Introduction to Data Analysis with Spark

What Is Apache Spark?

A Unified Stack

Spark Core

Spark SQL

Spark Streaming

MLlib

GraphX

Cluster Managers

Who Uses Spark, and for What?

Data Science Tasks

Data Processing Applications

A Brief History of Spark

Spark Versions and Releases

Storage Layers for Spark

Chapter 2. Downloading Spark and Getting Started

Downloading Spark

Introduction to Spark’s Python and Scala Shells

Introduction to Core Spark Concepts

Standalone Applications

Initializing a SparkContext

Building Standalone Applications

Conclusion

Chapter 3. Programming with RDDs

RDD Basics

Creating RDDs

RDD Operations

Transformations

Actions

Lazy Evaluation

Passing Functions to Spark

Python

Scala

Java

Common Transformations and Actions

Basic RDDs

Converting Between RDD Types

Persistence (Caching)

Conclusion

Chapter 4. Working with Key/Value Pairs

Motivation

Creating Pair RDDs

Transformations on Pair RDDs

Aggregations

Grouping Data

Joins

Sorting Data

Actions Available on Pair RDDs

Data Partitioning (Advanced)

Determining an RDD’s Partitioner

Operations That Benefit from Partitioning

Operations That Affect Partitioning

Example: PageRank

Custom Partitioners

Conclusion

Chapter 5. Loading and Saving Your Data

Motivation

File Formats

Text Files

JSON

Comma-Separated Values and Tab-Separated Values

SequenceFiles

Object Files

Hadoop Input and Output Formats

File Compression

Filesystems

Local/“Regular” FS

Amazon S3

HDFS

Structured Data with Spark SQL

Apache Hive

JSON

Databases

Java Database Connectivity

Cassandra

HBase

Elasticsearch

Conclusion

Chapter 6. Advanced Spark Programming

Introduction

Accumulators

Accumulators and Fault Tolerance

Custom Accumulators

Broadcast Variables

Optimizing Broadcasts

Working on a Per-Partition Basis

Piping to External Programs

Numeric RDD Operations

Conclusion

Chapter 7. Running on a Cluster

Introduction

Spark Runtime Architecture

The Driver

Executors

Cluster Manager

Launching a Program

Summary

Deploying Applications with spark-submit

Packaging Your Code and Dependencies

A Java Spark Application Built with Maven

A Scala Spark Application Built with sbt

Dependency Conflicts

Scheduling Within and Between Spark Applications

Cluster Managers

Standalone Cluster Manager

Hadoop YARN

Apache Mesos

Amazon EC2

Which Cluster Manager to Use?

Conclusion

Chapter 8. Tuning and Debugging Spark

Configuring Spark with SparkConf

Components of Execution: Jobs, Tasks, and Stages

Finding Information

Spark Web UI

Driver and Executor Logs

Key Performance Considerations

Level of Parallelism

Serialization Format

Memory Management

Hardware Provisioning

Conclusion

Chapter 9. Spark SQL

Linking with Spark SQL

Using Spark SQL in Applications

Initializing Spark SQL

Basic Query Example

SchemaRDDs

Caching

Loading and Saving Data

Apache Hive

Parquet

JSON

From RDDs

JDBC/ODBC Server

Working with Beeline

Long-Lived Tables and Queries

User-Defined Functions

Spark SQL UDFs

Hive UDFs

Spark SQL Performance

Performance Tuning Options

Conclusion

Chapter 10. Spark Streaming

A Simple Example

Architecture and Abstraction

Transformations

Stateless Transformations

Stateful Transformations

Output Operations

Input Sources

Core Sources

Additional Sources

Multiple Sources and Cluster Sizing

24/7 Operation

Checkpointing

Driver Fault Tolerance

Worker Fault Tolerance

Receiver Fault Tolerance

Processing Guarantees

Streaming UI

Performance Considerations

Batch and Window Sizes

Level of Parallelism

Garbage Collection and Memory Usage

Conclusion

Chapter 11. Machine Learning with MLlib

Overview

System Requirements

Machine Learning Basics

Example: Spam Classification

Data Types

Working with Vectors

Algorithms

Feature Extraction

Statistics

Classification and Regression

Clustering

Collaborative Filtering and Recommendation

Dimensionality Reduction

Model Evaluation

Tips and Performance Considerations

Preparing Features

Configuring Algorithms

Caching RDDs to Reuse

Recognizing Sparsity

Level of Parallelism

Pipeline API

Conclusion

Index

About the Authors
