IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 22, NO. 10, OCTOBER 2010


A Survey on Transfer Learning

Sinno Jialin Pan and Qiang Yang, Fellow, IEEE

Abstract—A major assumption in many machine learning and data mining algorithms is that the training and future data must be in the

same feature space and have the same distribution. However, in many real-world applications, this assumption may not hold. For

example, we sometimes have a classification task in one domain of interest, but we only have sufficient training data in another domain

of interest, where the latter data may be in a different feature space or follow a different data distribution. In such cases, knowledge

transfer, if done successfully, would greatly improve the performance of learning by avoiding much expensive data-labeling efforts. In

recent years, transfer learning has emerged as a new learning framework to address this problem. This survey focuses on categorizing

and reviewing the current progress on transfer learning for classification, regression, and clustering problems. In this survey, we

discuss the relationship between transfer learning and other related machine learning techniques such as domain adaptation, multitask

learning and sample selection bias, as well as covariate shift. We also explore some potential future issues in transfer learning

research.

Index Terms—Transfer learning, survey, machine learning, data mining.


1 INTRODUCTION

DATA mining and machine learning technologies have

already achieved significant success in many knowl-

edge engineering areas including classification, regression,

and clustering (e.g., [1], [2]). However, many machine

learning methods work well only under a common assump-

tion: the training and test data are drawn from the same

feature space and the same distribution. When the distribu-

tion changes, most statistical models need to be rebuilt from

scratch using newly collected training data. In many real-

world applications, it is expensive or impossible to recollect

the needed training data and rebuild the models. It would be

nice to reduce the need and effort to recollect the training

data. In such cases, knowledge transfer or transfer learning

between task domains would be desirable.

Many examples in knowledge engineering can be found

where transfer learning can truly be beneficial. One

example is Web-document classification [3], [4], [5], where

our goal is to classify a given Web document into several

predefined categories. As an example, in the area of Web-

document classification (see, e.g., [6]), the labeled examples

may be the university webpages that are associated with

category information obtained through previous manual-

labeling efforts. For a classification task on a newly created

website where the data features or data distributions may

be different, there may be a lack of labeled training data. As

a result, we may not be able to directly apply the webpage

classifiers learned on the university website to the new

website. In such cases, it would be helpful if we could

transfer the classification knowledge into the new domain.

The authors are with the Department of Computer Science and

Engineering, Hong Kong University of Science and Technology,

Clearwater Bay, Kowloon, Hong Kong.

E-mail: {sinnopan, qyang}@cse.ust.hk.

Manuscript received 13 Nov. 2008; revised 29 May 2009; accepted 13 July

2009; published online 12 Oct. 2009.

Recommended for acceptance by C. Clifton.

For information on obtaining reprints of this article, please send e-mail to:

tkde@computer.org, and reference IEEECS Log Number TKDE-2008-11-0600.

Digital Object Identifier no. 10.1109/TKDE.2009.191.

The need for transfer learning may arise when the data
can be easily outdated. In this case, the labeled data
obtained in one time period may not follow the same
distribution in a later time period. For example, in indoor
WiFi localization problems, which aim to detect a user’s

current location based on previously collected WiFi data, it

is very expensive to calibrate WiFi data for building

localization models in a large-scale environment, because

a user needs to label a large collection of WiFi signal data at

each location. However, the WiFi signal-strength values

may be a function of time, device, or other dynamic factors.

A model trained in one time period or on one device may
therefore estimate locations less accurately in another
time period or on another device. To reduce the recalibration
effort, we might wish to adapt the localization model trained
in one time period (the source domain) for a new time period
(the target domain), or to adapt the localization model trained
on a mobile device (the source domain) for a new mobile device
(the target domain), as done in [7].

As a third example, consider the problem of sentiment

classification, where our task is to automatically classify the

reviews on a product, such as a brand of camera, into

positive and negative views. For this classification task, we

need to first collect many reviews of the product and

annotate them. We would then train a classifier on the

reviews with their corresponding labels. Since the distribu-

tion of review data among different types of products can be

very different, to maintain good classification performance,

we need to collect a large amount of labeled data in order to

train the review-classification models for each product.

However, this data-labeling process can be very expensive to

do. To reduce the effort for annotating reviews for various

products, we may want to adapt a classification model that is

trained on some products to help learn classification models

for some other products. In such cases, transfer learning can

save a significant amount of labeling effort [8].

1041-4347/10/$26.00 © 2010 IEEE
Published by the IEEE Computer Society

In this survey paper, we give a comprehensive overview
of transfer learning for classification, regression, and clustering
developed in machine learning and data mining areas.

There has been a large amount of work on transfer learning

for reinforcement learning in the machine learning literature

(e.g., [9], [10]). However, in this paper, we only focus on

transfer learning for classification, regression, and clustering

problems that are related more closely to data mining tasks.

By doing the survey, we hope to provide a useful resource for

the data mining and machine learning community.

The rest of the survey is organized as follows: In the next

four sections, we first give a general overview and define

some notations we will use later. We, then, briefly survey the

history of transfer learning, give a unified definition of

transfer learning and categorize transfer learning into three

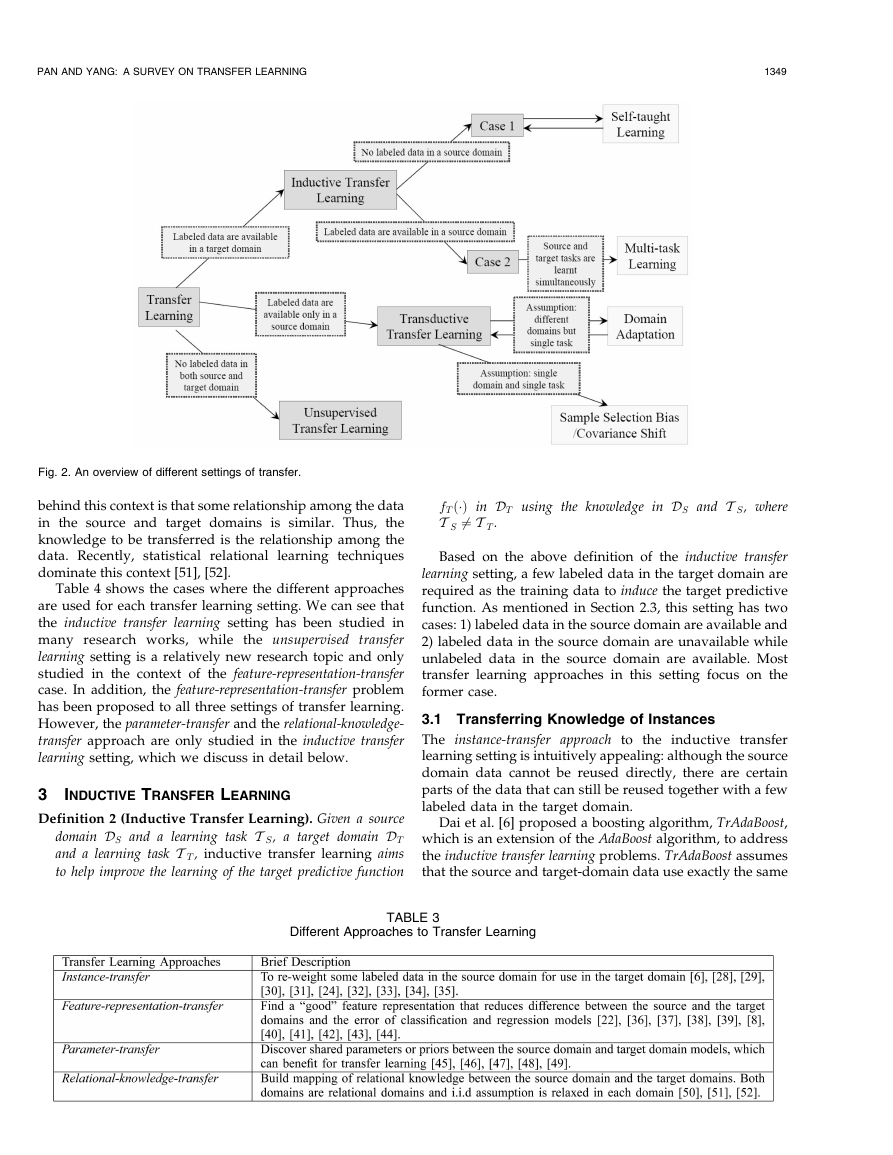

different settings (given in Table 2 and Fig. 2). For each

setting, we review different approaches, given in Table 3 in

detail. After that, in Section 6, we review some current

research on the topic of “negative transfer,” which happens

when knowledge transfer has a negative impact on target

learning. In Section 7, we introduce some successful

applications of transfer learning and list some published

data sets and software toolkits for transfer learning research.

Finally, we conclude the paper with a discussion of future

works in Section 8.

2 OVERVIEW

2.1 A Brief History of Transfer Learning

Traditional data mining and machine learning algorithms

make predictions on the future data using statistical models

that are trained on previously collected labeled or unlabeled

training data [11], [12], [13]. Semisupervised classification

[14], [15], [16], [17] addresses the problem that the labeled

data may be too few to build a good classifier, by making use

of a large amount of unlabeled data and a small amount of

labeled data. Variations of supervised and semisupervised

learning for imperfect data sets have been studied; for

example, Zhu and Wu [18] have studied how to deal with the

noisy class-label problems. Yang et al. considered cost-

sensitive learning [19] when additional tests can be made to

future samples. Nevertheless, most of them assume that the

distributions of the labeled and unlabeled data are the same.

Transfer learning, in contrast, allows the domains, tasks, and

distributions used in training and testing to be different. In

the real world, we observe many examples of transfer

learning. For example, we may find that learning to

recognize apples might help to recognize pears. Similarly,

learning to play the electronic organ may help facilitate

learning the piano. The study of transfer learning is motivated
by the fact that people can intelligently apply knowledge
learned previously to solve new problems faster or with
better solutions. The fundamental motivation for transfer
learning in the field of machine learning was discussed in a
NIPS-95 workshop on “Learning to Learn,”¹ which focused

on the need for lifelong machine learning methods that retain

and reuse previously learned knowledge.

Research on transfer learning has attracted more and
more attention since 1995 under different names: learning to
learn, life-long learning, knowledge transfer, inductive

1. http://socrates.acadiau.ca/courses/comp/dsilver/NIPS95_LTL/

transfer.workshop.1995.html.

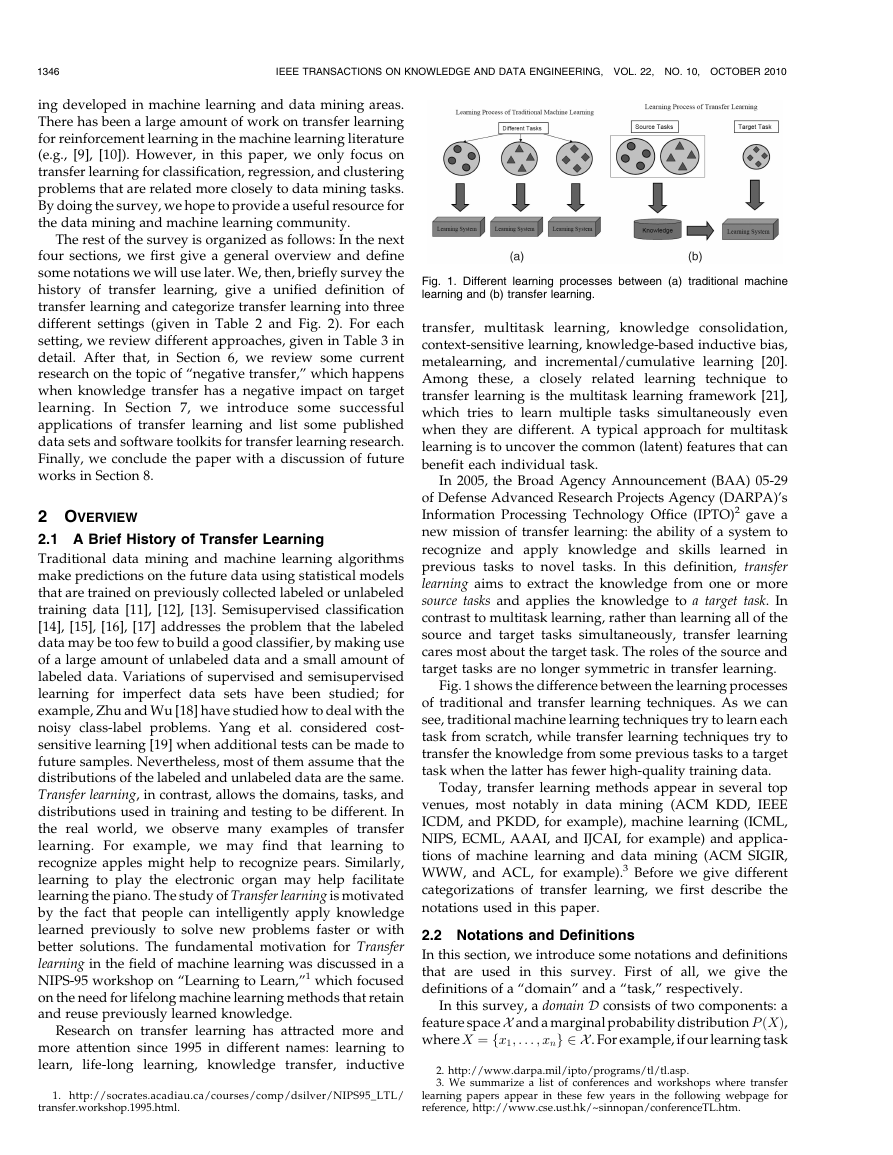

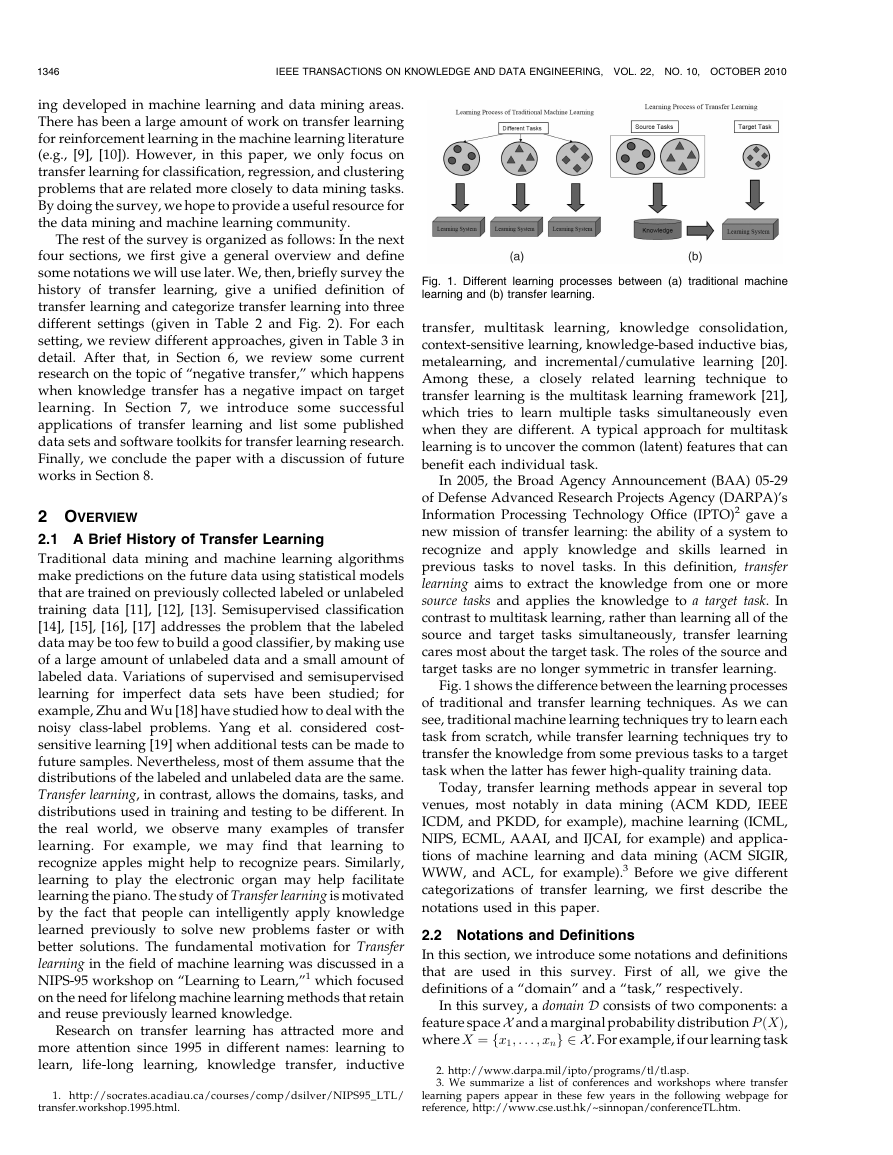

Fig. 1. Different learning processes between (a) traditional machine

learning and (b) transfer learning.

transfer, multitask learning, knowledge consolidation,

context-sensitive learning, knowledge-based inductive bias,

metalearning, and incremental/cumulative learning [20].

Among these, a closely related learning technique to

transfer learning is the multitask learning framework [21],

which tries to learn multiple tasks simultaneously even

when they are different. A typical approach for multitask

learning is to uncover the common (latent) features that can

benefit each individual task.

In 2005, the Broad Agency Announcement (BAA) 05-29
of the Defense Advanced Research Projects Agency (DARPA)’s
Information Processing Technology Office (IPTO)² gave a
new mission of transfer learning: the ability of a system to
recognize and apply knowledge and skills learned in
previous tasks to novel tasks. In this definition, transfer
learning aims to extract the knowledge from one or more
source tasks and apply the knowledge to a target task. In

contrast to multitask learning, rather than learning all of the

source and target tasks simultaneously, transfer learning

cares most about the target task. The roles of the source and

target tasks are no longer symmetric in transfer learning.

Fig. 1 shows the difference between the learning processes

of traditional and transfer learning techniques. As we can

see, traditional machine learning techniques try to learn each

task from scratch, while transfer learning techniques try to

transfer the knowledge from some previous tasks to a target

task when the latter has fewer high-quality training data.

Today, transfer learning methods appear in several top

venues, most notably in data mining (ACM KDD, IEEE

ICDM, and PKDD, for example), machine learning (ICML,

NIPS, ECML, AAAI, and IJCAI, for example) and applica-

tions of machine learning and data mining (ACM SIGIR,

WWW, and ACL, for example).³ Before we give different

categorizations of transfer learning, we first describe the

notations used in this paper.

2.2 Notations and Definitions

In this section, we introduce some notations and definitions

that are used in this survey. First of all, we give the

definitions of a “domain” and a “task,” respectively.

In this survey, a domain $\mathcal{D}$ consists of two components: a
feature space $\mathcal{X}$ and a marginal probability distribution $P(X)$,
where $X = \{x_1, \ldots, x_n\} \in \mathcal{X}$. For example, if our learning task

2. http://www.darpa.mil/ipto/programs/tl/tl.asp.

3. We summarize a list of conferences and workshops where transfer

learning papers appear in these few years in the following webpage for

reference, http://www.cse.ust.hk/~sinnopan/conferenceTL.htm.


PAN AND YANG: A SURVEY ON TRANSFER LEARNING


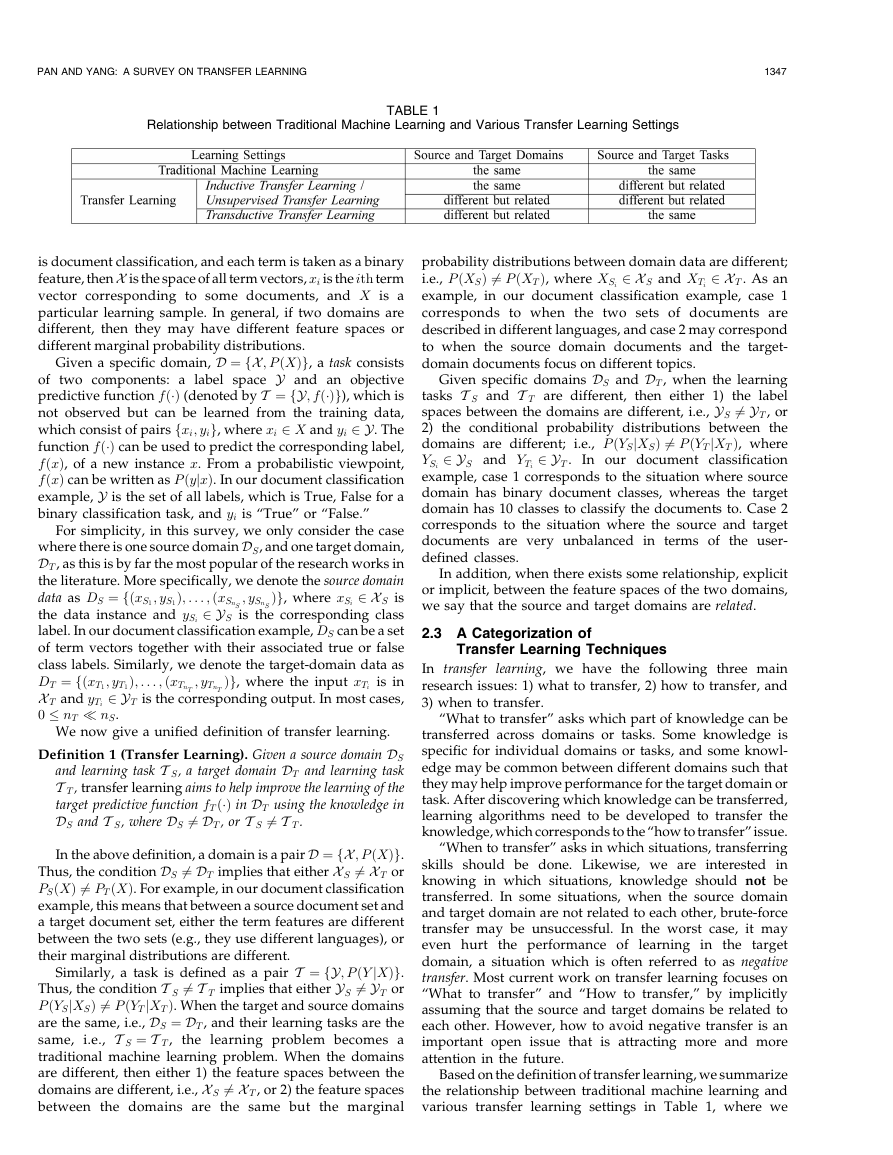

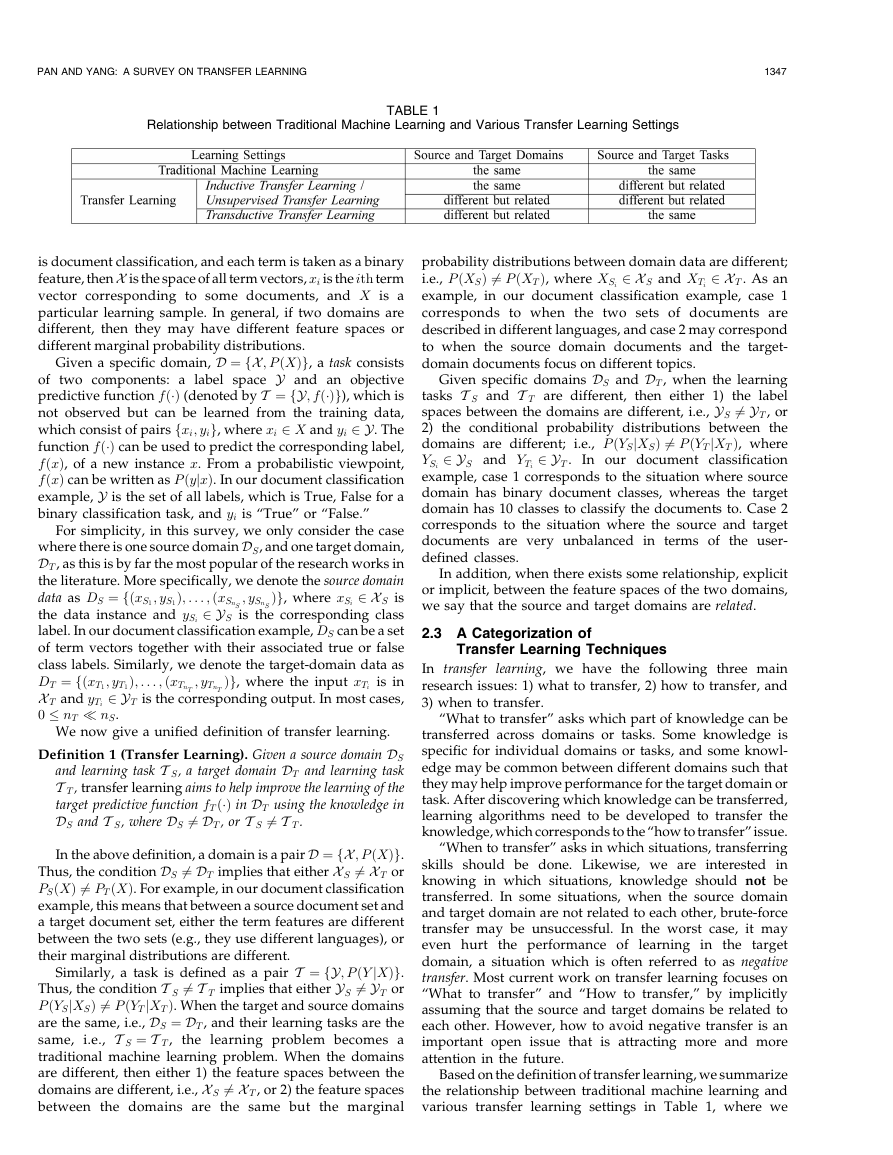

TABLE 1

Relationship between Traditional Machine Learning and Various Transfer Learning Settings

is document classification, and each term is taken as a binary
feature, then $\mathcal{X}$ is the space of all term vectors, $x_i$ is the $i$th term
vector corresponding to some documents, and $X$ is a

particular learning sample. In general, if two domains are

different, then they may have different feature spaces or

different marginal probability distributions.

Given a specific domain, $\mathcal{D} = \{\mathcal{X}, P(X)\}$, a task consists
of two components: a label space $\mathcal{Y}$ and an objective
predictive function $f(\cdot)$ (denoted by $\mathcal{T} = \{\mathcal{Y}, f(\cdot)\}$), which is
not observed but can be learned from the training data,
which consist of pairs $\{x_i, y_i\}$, where $x_i \in X$ and $y_i \in \mathcal{Y}$. The
function $f(\cdot)$ can be used to predict the corresponding label,
$f(x)$, of a new instance $x$. From a probabilistic viewpoint,
$f(x)$ can be written as $P(y|x)$. In our document classification
example, $\mathcal{Y}$ is the set of all labels, which is $\{\text{True}, \text{False}\}$ for a
binary classification task, and $y_i$ is “True” or “False.”

For simplicity, in this survey, we only consider the case
where there is one source domain $\mathcal{D}_S$ and one target domain
$\mathcal{D}_T$, as this is by far the most popular setting in
the literature. More specifically, we denote the source domain
data as $D_S = \{(x_{S_1}, y_{S_1}), \ldots, (x_{S_{n_S}}, y_{S_{n_S}})\}$, where $x_{S_i} \in \mathcal{X}_S$ is
the data instance and $y_{S_i} \in \mathcal{Y}_S$ is the corresponding class
label. In our document classification example, $D_S$ can be a set
of term vectors together with their associated true or false
class labels. Similarly, we denote the target-domain data as
$D_T = \{(x_{T_1}, y_{T_1}), \ldots, (x_{T_{n_T}}, y_{T_{n_T}})\}$, where the input $x_{T_i}$ is in
$\mathcal{X}_T$ and $y_{T_i} \in \mathcal{Y}_T$ is the corresponding output. In most cases,
$0 \le n_T \ll n_S$.

We now give a unified definition of transfer learning.

Definition 1 (Transfer Learning). Given a source domain $\mathcal{D}_S$
and learning task $\mathcal{T}_S$, a target domain $\mathcal{D}_T$ and learning task
$\mathcal{T}_T$, transfer learning aims to help improve the learning of the
target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in
$\mathcal{D}_S$ and $\mathcal{T}_S$, where $\mathcal{D}_S \neq \mathcal{D}_T$, or $\mathcal{T}_S \neq \mathcal{T}_T$.

In the above definition, a domain is a pair $\mathcal{D} = \{\mathcal{X}, P(X)\}$.
Thus, the condition $\mathcal{D}_S \neq \mathcal{D}_T$ implies that either $\mathcal{X}_S \neq \mathcal{X}_T$ or
$P_S(X) \neq P_T(X)$. For example, in our document classification

example, this means that between a source document set and

a target document set, either the term features are different

between the two sets (e.g., they use different languages), or

their marginal distributions are different.

Similarly, a task is defined as a pair $\mathcal{T} = \{\mathcal{Y}, P(Y|X)\}$.
Thus, the condition $\mathcal{T}_S \neq \mathcal{T}_T$ implies that either $\mathcal{Y}_S \neq \mathcal{Y}_T$ or
$P(Y_S|X_S) \neq P(Y_T|X_T)$. When the target and source domains
are the same, i.e., $\mathcal{D}_S = \mathcal{D}_T$, and their learning tasks are the
same, i.e., $\mathcal{T}_S = \mathcal{T}_T$, the learning problem becomes a
traditional machine learning problem. When the domains
are different, then either 1) the feature spaces between the
domains are different, i.e., $\mathcal{X}_S \neq \mathcal{X}_T$, or 2) the feature spaces
between the domains are the same but the marginal
probability distributions between domain data are different;
i.e., $P(X_S) \neq P(X_T)$, where $X_{S_i} \in \mathcal{X}_S$ and $X_{T_i} \in \mathcal{X}_T$. As an
example, in our document classification example, case 1

corresponds to when the two sets of documents are

described in different languages, and case 2 may correspond

to when the source domain documents and the target-

domain documents focus on different topics.

Given specific domains $\mathcal{D}_S$ and $\mathcal{D}_T$, when the learning
tasks $\mathcal{T}_S$ and $\mathcal{T}_T$ are different, then either 1) the label
spaces between the domains are different, i.e., $\mathcal{Y}_S \neq \mathcal{Y}_T$, or
2) the conditional probability distributions between the
domains are different; i.e., $P(Y_S|X_S) \neq P(Y_T|X_T)$, where
$Y_{S_i} \in \mathcal{Y}_S$ and $Y_{T_i} \in \mathcal{Y}_T$. In our document classification

example, case 1 corresponds to the situation where source

domain has binary document classes, whereas the target

domain has 10 classes to classify the documents to. Case 2

corresponds to the situation where the source and target

documents are very unbalanced in terms of the user-

defined classes.

In addition, when there exists some relationship, explicit

or implicit, between the feature spaces of the two domains,

we say that the source and target domains are related.

2.3 A Categorization of Transfer Learning Techniques

In transfer learning, we have the following three main

research issues: 1) what to transfer, 2) how to transfer, and

3) when to transfer.

“What to transfer” asks which part of knowledge can be

transferred across domains or tasks. Some knowledge is

specific for individual domains or tasks, and some knowl-

edge may be common between different domains such that

they may help improve performance for the target domain or

task. After discovering which knowledge can be transferred,

learning algorithms need to be developed to transfer the

knowledge, which corresponds to the “how to transfer” issue.

“When to transfer” asks in which situations transferring
skills should be done. Likewise, we are interested in
knowing in which situations knowledge should not be
transferred. In some situations, when the source domain
and target domain are not related to each other, brute-force
transfer may be unsuccessful. In the worst case, it may
even hurt the performance of learning in the target
domain, a situation which is often referred to as negative
transfer. Most current work on transfer learning focuses on
“What to transfer” and “How to transfer,” by implicitly
assuming that the source and target domains are related to
each other. However, how to avoid negative transfer is an
important open issue that is attracting more and more
attention.

Based on the definition of transfer learning, we summarize

the relationship between traditional machine learning and

various transfer learning settings in Table 1, where we


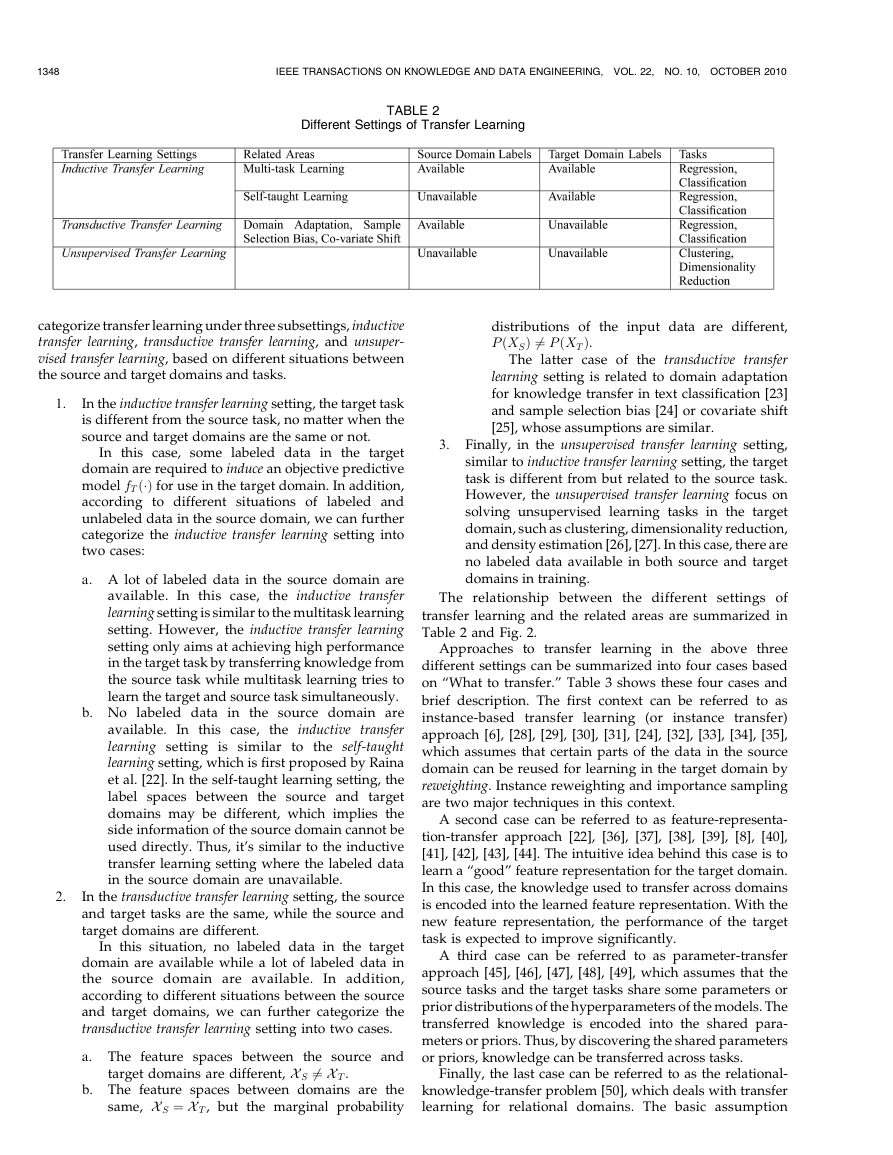

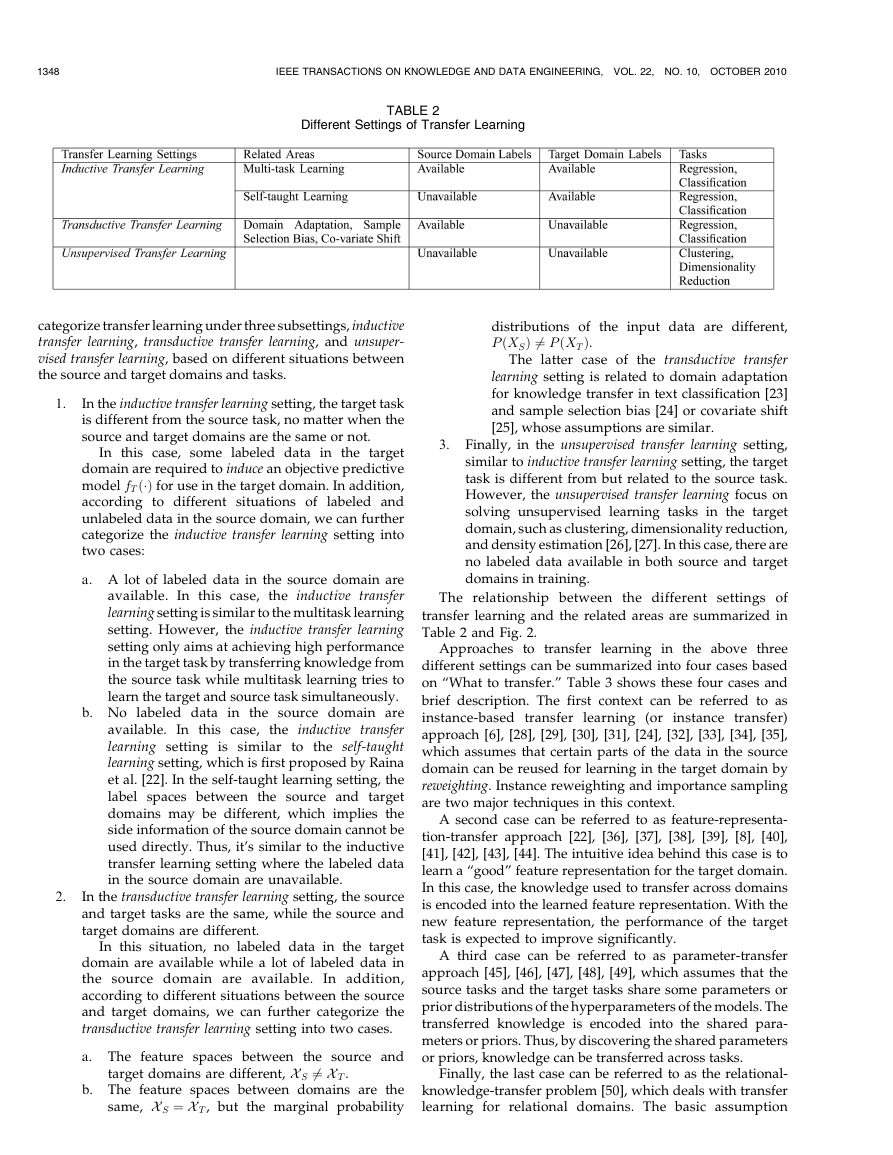

TABLE 2

Different Settings of Transfer Learning

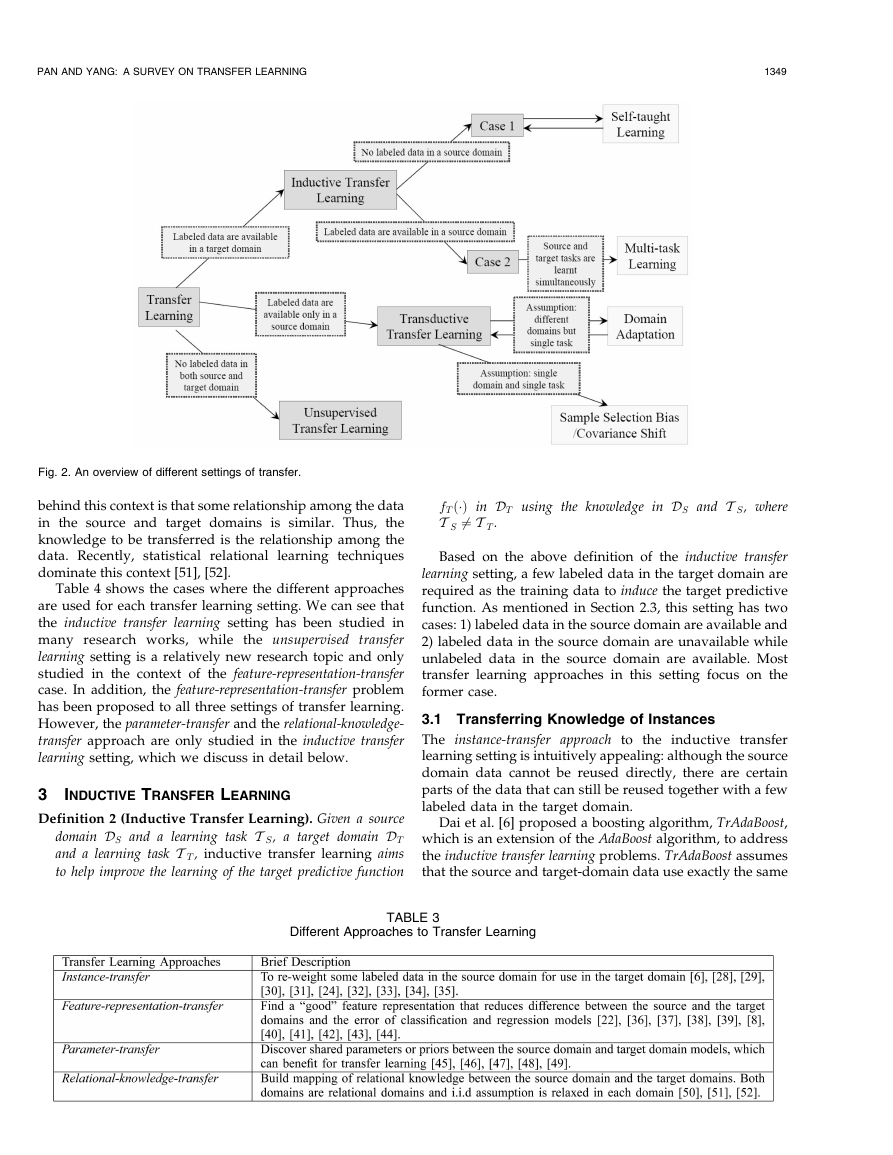

categorize transfer learning under three subsettings, inductive

transfer learning, transductive transfer learning, and unsuper-

vised transfer learning, based on different situations between

the source and target domains and tasks.

1. In the inductive transfer learning setting, the target task
   is different from the source task, no matter whether the
   source and target domains are the same or not.
   In this case, some labeled data in the target
   domain are required to induce an objective predictive
   model $f_T(\cdot)$ for use in the target domain. In addition,
   according to different situations of labeled and
   unlabeled data in the source domain, we can further
   categorize the inductive transfer learning setting into
   two cases:
   a. A lot of labeled data in the source domain are
      available. In this case, the inductive transfer
      learning setting is similar to the multitask learning
      setting. However, the inductive transfer learning
      setting only aims at achieving high performance
      in the target task by transferring knowledge from
      the source task while multitask learning tries to
      learn the target and source task simultaneously.
   b. No labeled data in the source domain are
      available. In this case, the inductive transfer
      learning setting is similar to the self-taught
      learning setting, which was first proposed by Raina
      et al. [22]. In the self-taught learning setting, the
      label spaces between the source and target
      domains may be different, which implies the
      side information of the source domain cannot be
      used directly. Thus, it is similar to the inductive
      transfer learning setting where the labeled data
      in the source domain are unavailable.
2. In the transductive transfer learning setting, the source
   and target tasks are the same, while the source and
   target domains are different.
   In this situation, no labeled data in the target
   domain are available while a lot of labeled data in
   the source domain are available. In addition,
   according to different situations between the source
   and target domains, we can further categorize the
   transductive transfer learning setting into two cases.
   a. The feature spaces between the source and
      target domains are different, $\mathcal{X}_S \neq \mathcal{X}_T$.
   b. The feature spaces between domains are the
      same, $\mathcal{X}_S = \mathcal{X}_T$, but the marginal probability
      distributions of the input data are different,
      $P(X_S) \neq P(X_T)$.
   The latter case of the transductive transfer
   learning setting is related to domain adaptation
   for knowledge transfer in text classification [23]
   and sample selection bias [24] or covariate shift
   [25], whose assumptions are similar.
3. Finally, in the unsupervised transfer learning setting,
   similar to the inductive transfer learning setting, the target
   task is different from but related to the source task.
   However, unsupervised transfer learning focuses on
   solving unsupervised learning tasks in the target
   domain, such as clustering, dimensionality reduction,
   and density estimation [26], [27]. In this case, there are
   no labeled data available in either the source or the target
   domain in training.

The relationship between the different settings of
transfer learning and the related areas is summarized in
Table 2 and Fig. 2.
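The decision rules behind the three settings can be sketched as a small lookup. This is only a minimal illustration: the function name `categorize_setting` and its boolean arguments are hypothetical and do not come from the survey, and the final fallback branch is an assumption for combinations the text does not discuss.

```python
def categorize_setting(same_domain, same_task, labeled_source, labeled_target):
    """Map a source/target situation to one of the settings described above.

    All argument names are illustrative assumptions, not notation from the survey.
    """
    if same_domain and same_task:
        # D_S = D_T and T_S = T_T: no transfer is involved.
        return "traditional machine learning"
    if same_task:
        # Same task, different domains. The text assumes labeled source data
        # and no labeled target data here; this sketch does not enforce that.
        return "transductive transfer learning"
    # From here on the tasks differ.
    if labeled_target:
        # Some labeled target data are needed to induce f_T; the source may
        # be labeled (multitask-like) or unlabeled (self-taught-like).
        return "inductive transfer learning"
    if not labeled_source:
        # No labels in either domain: clustering, dimensionality reduction, ...
        return "unsupervised transfer learning"
    # Assumption: combinations the survey does not discuss fall through here.
    return "outside the three settings"


print(categorize_setting(False, True, True, False))
print(categorize_setting(True, False, False, True))
```

The order of the checks mirrors the text: the task comparison decides between the transductive setting and the other two, and label availability then separates the inductive from the unsupervised setting.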

Approaches to transfer learning in the above three

different settings can be summarized into four cases based

on “What to transfer.” Table 3 shows these four cases and
brief descriptions. The first case can be referred to as

instance-based transfer learning (or instance transfer)

approach [6], [28], [29], [30], [31], [24], [32], [33], [34], [35],

which assumes that certain parts of the data in the source

domain can be reused for learning in the target domain by

reweighting. Instance reweighting and importance sampling

are two major techniques in this context.

A second case can be referred to as feature-representa-

tion-transfer approach [22], [36], [37], [38], [39], [8], [40],

[41], [42], [43], [44]. The intuitive idea behind this case is to

learn a “good” feature representation for the target domain.

In this case, the knowledge used to transfer across domains

is encoded into the learned feature representation. With the

new feature representation, the performance of the target

task is expected to improve significantly.

A third case can be referred to as parameter-transfer

approach [45], [46], [47], [48], [49], which assumes that the

source tasks and the target tasks share some parameters or

prior distributions of the hyperparameters of the models. The

transferred knowledge is encoded into the shared para-

meters or priors. Thus, by discovering the shared parameters

or priors, knowledge can be transferred across tasks.

Finally, the last case can be referred to as the relational-

knowledge-transfer problem [50], which deals with transfer

learning for relational domains. The basic assumption


Fig. 2. An overview of different settings of transfer.

behind this context is that some relationship among the data

in the source and target domains is similar. Thus, the

knowledge to be transferred is the relationship among the

data. Recently, statistical relational learning techniques
dominate this context [51], [52].

Table 4 shows the cases where the different approaches

are used for each transfer learning setting. We can see that

the inductive transfer learning setting has been studied in

many research works, while the unsupervised transfer

learning setting is a relatively new research topic and only

studied in the context of the feature-representation-transfer

case. In addition, the feature-representation-transfer problem
has been proposed for all three settings of transfer learning.
However, the parameter-transfer and the relational-knowledge-transfer
approaches are only studied in the inductive transfer
learning setting, which we discuss in detail below.

3 INDUCTIVE TRANSFER LEARNING

Definition 2 (Inductive Transfer Learning). Given a source
domain $\mathcal{D}_S$ and a learning task $\mathcal{T}_S$, a target domain $\mathcal{D}_T$
and a learning task $\mathcal{T}_T$, inductive transfer learning aims
to help improve the learning of the target predictive function
$f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$, where
$\mathcal{T}_S \neq \mathcal{T}_T$.

Based on the above definition of the inductive transfer

learning setting, a few labeled data in the target domain are

required as the training data to induce the target predictive

function. As mentioned in Section 2.3, this setting has two

cases: 1) labeled data in the source domain are available and

2) labeled data in the source domain are unavailable while

unlabeled data in the source domain are available. Most

transfer learning approaches in this setting focus on the

former case.

3.1 Transferring Knowledge of Instances

The instance-transfer approach to the inductive transfer

learning setting is intuitively appealing: although the source

domain data cannot be reused directly, there are certain

parts of the data that can still be reused together with a few

labeled data in the target domain.

TABLE 3
Different Approaches to Transfer Learning

TABLE 4
Different Approaches Used in Different Settings

Dai et al. [6] proposed a boosting algorithm, TrAdaBoost,
which is an extension of the AdaBoost algorithm, to address
the inductive transfer learning problems. TrAdaBoost assumes
that the source and target-domain data use exactly the same
set of features and labels, but the distributions of the data in
the two domains are different. In addition, TrAdaBoost
assumes that, due to the difference in distributions between
the source and the target domains, some of the source
domain data may be useful in learning for the target
domain but some of them may not and could even be
harmful. It attempts to iteratively reweight the source
domain data to reduce the effect of the “bad” source data
while encouraging the “good” source data to contribute more
for the target domain. For each round of iteration,
TrAdaBoost trains the base classifier on the weighted source
and target data. The error is only calculated on the target
data. Furthermore, TrAdaBoost uses the same strategy as
AdaBoost to update the incorrectly classified examples in the
target domain while using a different strategy from
AdaBoost to update the incorrectly classified source examples
in the source domain. Theoretical analysis of TrAdaBoost
is also given in [6].
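A single reweighting round of the kind described above might look like the following sketch. The survey text does not spell out the multipliers, so the specific forms used here (the fixed source factor `beta_src` and the AdaBoost-style target factor `beta_t`) are assumptions based on the commonly cited formulation of TrAdaBoost; the function name, the argument names, and the clipping of the error are illustrative only.

```python
import numpy as np


def tradaboost_reweight(w_src, w_tgt, miss_src, miss_tgt, n_rounds):
    """One reweighting round; miss_* are 0/1 arrays (1 = misclassified)."""
    w_src = np.asarray(w_src, dtype=float)
    w_tgt = np.asarray(w_tgt, dtype=float)
    miss_src = np.asarray(miss_src, dtype=float)
    miss_tgt = np.asarray(miss_tgt, dtype=float)

    # The weighted error is measured on the target data only.
    eps = np.sum(w_tgt * miss_tgt) / np.sum(w_tgt)
    # Assumption: clip the error so that beta_t stays strictly inside (0, 1).
    eps = float(np.clip(eps, 1e-10, 0.499))

    # Target examples: AdaBoost-style update, misclassified weights grow.
    beta_t = eps / (1.0 - eps)
    # Source examples: fixed factor below 1, misclassified weights shrink.
    beta_src = 1.0 / (1.0 + np.sqrt(2.0 * np.log(len(w_src)) / n_rounds))

    new_src = w_src * beta_src ** miss_src
    new_tgt = w_tgt * beta_t ** (-miss_tgt)
    return new_src, new_tgt, eps


src, tgt, err = tradaboost_reweight(np.ones(4), np.ones(4),
                                    [1, 0, 0, 0], [1, 0, 0, 0], n_rounds=10)
print(src, tgt, err)
```

In the usage line, the misclassified source example loses weight while the misclassified target example gains weight, which is the asymmetry the paragraph describes. A full implementation would also normalize the weights, train a base classifier each round, and combine the classifiers from the later rounds.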

Jiang and Zhai [30] proposed a heuristic method to
remove “misleading” training examples from the source
domain based on the difference between conditional
probabilities $P(y_T|x_T)$ and $P(y_S|x_S)$. Liao et al. [31]
proposed a new active learning method to select the
unlabeled data in a target domain to be labeled with the
help of the source domain data. Wu and Dietterich [53]
integrated the source domain (auxiliary) data into a Support
Vector Machine (SVM) framework for improving the
classification performance.

3.2 Transferring Knowledge of Feature

Representations

The feature-representation-transfer approach to the induc-

tive transfer learning problem aims at finding “good” feature

representations to minimize domain divergence and classi-

fication or regression model error. Strategies to find “good”

feature representations are different for different types of

the source domain data. If a lot of labeled data in the source

domain are available, supervised learning methods can be

used to construct a feature representation. This is similar to

common feature learning in the field of multitask learning

[40]. If no labeled data in the source domain are available,

unsupervised learning methods are proposed to construct

the feature representation.

3.2.1 Supervised Feature Construction

Supervised feature construction methods for the inductive

transfer learning setting are similar to those used in multitask

learning. The basic idea is to learn a low-dimensional
representation that is shared across related tasks. In
addition, the learned new representation can reduce the
classification or regression model error of each task as well.
Argyriou et al. [40] proposed a sparse feature learning
method for multitask learning. In the inductive transfer
learning setting, the common features can be learned by
solving an optimization problem, given as follows:

$$\arg\min_{A,U} \sum_{t \in \{T,S\}} \sum_{i=1}^{n_t} L\left(y_{t_i}, \langle a_t, U^T x_{t_i} \rangle\right) + \gamma \|A\|_{2,1}^2 \quad \text{s.t.} \quad U \in \mathbf{O}^d. \tag{1}$$

In this equation, $S$ and $T$ denote the tasks in the source
domain and target domain, respectively. $A = [a_S, a_T] \in \mathbb{R}^{d \times 2}$
is a matrix of parameters. $U$ is a $d \times d$ orthogonal matrix
(mapping function) for mapping the original high-dimensional
data to low-dimensional representations. The $(r, p)$-norm
of $A$ is defined as $\|A\|_{r,p} := \left(\sum_{i=1}^{d} \|a^i\|_r^p\right)^{1/p}$. The
optimization problem (1) estimates the low-dimensional
representations $U^T X_T$, $U^T X_S$ and the parameters, $A$, of the
model at the same time. The optimization problem (1) can
be further transformed into an equivalent convex optimization
formulation and be solved efficiently. In a follow-up
work, Argyriou et al. [41] proposed a spectral regularization
framework on matrices for multitask structure learning.
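The (r, p)-norm used as the regularizer in (1) can be computed directly from its definition. The sketch below assumes that the superscripted vector a^i denotes the i-th row of A (one row per feature, one column per task), which is consistent with the sum running up to d; the function name `rp_norm` is hypothetical.

```python
import numpy as np


def rp_norm(A, r=2, p=1):
    """(r, p)-norm of A: the p-norm of the vector of row-wise r-norms."""
    A = np.asarray(A, dtype=float)
    # ||a^i||_r for each row i (assumption: a^i is the i-th row of A).
    row_norms = np.linalg.norm(A, ord=r, axis=1)
    return float(np.sum(row_norms ** p) ** (1.0 / p))


# d = 3 features, 2 tasks (source and target columns).
A = np.array([[3.0, 4.0],
              [0.0, 0.0],
              [1.0, 0.0]])
# Rows have 2-norms 5, 0, and 1, so the (2, 1)-norm is 6.
print(rp_norm(A, r=2, p=1))
```

With r = 2 and p = 1, a row contributes nothing only when all of its entries are zero, so penalizing this norm tends to switch whole features off for both tasks at once. That is one way to read the "common features" the method looks for.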

Lee et al. [42] proposed a convex optimization algorithm

for simultaneously learning metapriors and feature weights

from an ensemble of related prediction tasks. The meta-

priors can be transferred among different tasks. Jebara [43]

proposed to select features for multitask learning with

SVMs. Rückert and Kramer [54] designed a kernel-based

approach to inductive transfer, which aims at finding a

suitable kernel for the target data.

3.2.2 Unsupervised Feature Construction

In [22], Raina et al. proposed to apply sparse coding [55],

which is an unsupervised feature construction method, for

learning higher level features for transfer learning. The basic

idea of this approach consists of two steps. In the first step,

higher level basis vectors $b = \{b_1, b_2, \ldots, b_s\}$ are learned on
the source domain data by solving the optimization
problem (2) as follows:

$$\min_{a,b} \sum_i \left\| x_{S_i} - \sum_j a_{S_i}^j b_j \right\|_2^2 + \beta \left\| a_{S_i} \right\|_1 \quad \text{s.t.} \quad \|b_j\|_2 \le 1, \ \forall j \in 1, \ldots, s. \tag{2}$$

In this equation, $a_{S_i}^j$ is a new representation of basis $b_j$ for
input $x_{S_i}$ and $\beta$ is a coefficient to balance the feature
construction term and the regularization term. After learning
the basis vectors $b$, in the second step, an optimization
algorithm (3) is applied on the target-domain data to learn
higher level features based on the basis vectors $b$.

$$a_{T_i}^* = \arg\min_{a_{T_i}} \left\| x_{T_i} - \sum_j a_{T_i}^j b_j \right\|_2^2 + \beta \left\| a_{T_i} \right\|_1. \tag{3}$$

Finally, discriminative algorithms can be applied to $\{a_{T_i}^*\}$'s
with corresponding labels to train classification or regression
models for use in the target domain. One drawback of

this method is that the so-called higher level basis vectors

learned on the source domain in the optimization problem

(2) may not be suitable for use in the target domain.
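The second step, problem (3), is an L1-regularized least-squares fit of one target input to basis vectors that are already fixed. The sketch below solves it with iterative soft-thresholding (ISTA), a generic solver chosen here for brevity and not the algorithm used in [22]. The names `encode_target` and `soft_threshold`, the iteration count, and the convention that the basis vectors are the columns of `B` are all assumptions.

```python
import numpy as np


def soft_threshold(v, tau):
    """Shrink each entry of v toward zero by tau."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def encode_target(x, B, beta, n_iter=200):
    """Approximately minimize ||x - B a||_2^2 + beta * ||a||_1 over a."""
    x = np.asarray(x, dtype=float)
    B = np.asarray(B, dtype=float)  # columns are the fixed basis vectors b_j
    # Lipschitz constant of the gradient of the squared-error term
    # (assumes B is not the zero matrix).
    lipschitz = 2.0 * np.linalg.norm(B, 2) ** 2
    a = np.zeros(B.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * B.T @ (B @ a - x)  # gradient of ||x - B a||_2^2
        a = soft_threshold(a - grad / lipschitz, beta / lipschitz)
    return a


# With an identity basis the minimizer is a soft-thresholding of x by beta / 2.
codes = encode_target([3.0, -0.2, 1.0], np.eye(3), beta=1.0)
print(codes)
```

The resulting activations would then be used as features for a standard classifier or regressor trained on the labeled target data. Small inputs are driven exactly to zero, which is the sparsity the L1 term is meant to produce.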

Recently, manifold learning methods have been

adapted for transfer learning. In [44], Wang and Mahade-

van proposed a Procrustes analysis-based approach to

manifold alignment without correspondences, which can

be used to transfer the knowledge across domains via the

aligned manifolds.

3.3 Transferring Knowledge of Parameters

Most parameter-transfer approaches to the inductive transfer

learning setting assume that individual models for related

tasks should share some parameters or prior distributions

of hyperparameters. Most approaches described in this

section,

including a regularization framework and a

hierarchical Bayesian framework, are designed to work

under multitask learning. However, they can be easily

modified for transfer learning. As mentioned above, multi-

task learning tries to learn both the source and target tasks

simultaneously and perfectly, while transfer learning only

aims at boosting the performance of the target domain by

utilizing the source domain data. Thus,

in multitask

learning, weights of the loss functions for the source and

target data are the same. In contrast, in transfer learning,

weights in the loss functions for different domains can be

different. Intuitively, we may assign a larger weight to the

loss function of the target domain to make sure that we can

achieve better performance in the target domain.

Lawrence and Platt [45] proposed an efficient algorithm

known as MT-IVM, which is based on Gaussian Processes

(GP), to handle the multitask learning case. MT-IVM tries to

learn parameters of a Gaussian Process over multiple tasks

by sharing the same GP prior. Bonilla et al. [46] also

investigated multitask learning in the context of GP. The

authors proposed to use a free-form covariance matrix over

tasks to model intertask dependencies, where a GP prior is

used to induce correlations between tasks. Schwaighofer

et al. [47] proposed to use a hierarchical Bayesian frame-

work (HB) together with GP for multitask learning.

Besides transferring the priors of the GP models, some

researchers also proposed to transfer parameters of SVMs

under a regularization framework. Evgeniou and Pontil [48]

borrowed the idea of HB to SVMs for multitask learning.

The proposed method assumed that the parameter, w, in

SVMs for each task can be separated into two terms. One is

a common term over tasks and the other is a task-specific

term. In inductive transfer learning,

$$w_S = w_0 + v_S \quad \text{and} \quad w_T = w_0 + v_T,$$

where $w_S$ and $w_T$ are parameters of the SVMs for the source task and the target learning task, respectively. $w_0$ is a common parameter, while $v_S$ and $v_T$ are specific parameters for the source task and the target task, respectively. By assuming $f_t = w_t \cdot x$ to be a hyperplane for task $t$, an extension of SVMs to the multitask learning case can be written as follows:

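$$\min_{w_0, v_t, \xi_{t_i}} J(w_0, v_t, \xi_{t_i}) = \sum_{t \in \{S,T\}} \sum_{i=1}^{n_t} \xi_{t_i} + \frac{\lambda_1}{2} \sum_{t \in \{S,T\}} \|v_t\|^2 + \lambda_2 \|w_0\|^2 \tag{4}$$

$$\text{s.t.} \quad y_{t_i} (w_0 + v_t) \cdot x_{t_i} \ge 1 - \xi_{t_i}, \quad \xi_{t_i} \ge 0, \quad i \in \{1, 2, \ldots, n_t\} \text{ and } t \in \{S, T\}.$$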

By solving the optimization problem above, we can learn

the parameters $w_0$, $v_S$, and $v_T$ simultaneously.

Several researchers have pursued the parameter-transfer

approach further. Gao et al.

[49] proposed a locally

weighted ensemble learning framework to combine multi-

ple models for transfer learning, where the weights are

dynamically assigned according to a model’s predictive

power on each test example in the target domain.

3.4 Transferring Relational Knowledge

Unlike the other three contexts, the relational-knowledge-transfer approach deals with transfer learning problems in relational domains, where the data are non-i.i.d. and

can be represented by multiple relations, such as networked

data and social network data. This approach does not assume

that the data drawn from each domain be independent and

identically distributed (i.i.d.) as traditionally assumed. It

tries to transfer the relationship among data from a source

domain to a target domain. In this context, statistical relational

learning techniques are proposed to solve these problems.

Mihalkova et al. [50] proposed an algorithm, TAMAR, that transfers relational knowledge with Markov Logic Networks (MLNs) across relational domains. The MLN [56] is a powerful formalism for statistical relational learning that combines the compact expressiveness of first-order logic with the flexibility of probability. In MLNs, entities in a

relational domain are represented by predicates and their

relationships are represented in first-order logic. TAMAR is

motivated by the fact that if two domains are related to each

other, there may exist mappings to connect entities and

their relationships from a source domain to a target domain.

For example, a professor can be considered as playing a

similar role in an academic domain as a manager in an

industrial management domain. In addition, the relation-

ship between a professor and his or her students is similar

to the relationship between a manager and his or her

workers. Thus, there may exist a mapping from professor to

manager and a mapping from the professor-student

relationship to the manager-worker relationship. In this

vein, TAMAR tries to use an MLN learned for a source

domain to aid in the learning of an MLN for a target

domain. TAMAR is a two-stage algorithm. In the first stage, a mapping is constructed from a source MLN to the target domain based on the weighted pseudo-log-likelihood (WPLL) measure. In the second stage, a revision is done for

the mapped structure in the target domain through the

FORTE algorithm [57], which is an inductive logic

programming (ILP) algorithm for revising first-order

theories. The revised MLN can be used as a relational

model for inference or reasoning in the target domain.
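The idea of mapping predicates between domains can be illustrated with a small sketch. It only applies a given predicate mapping to one source clause; it does not search over mappings, score them with WPLL, or revise the result, all of which TAMAR does. The clause representation and the predicate names (Advises, Supervises, and so on) are hypothetical and chosen to mirror the professor and manager example above.

```python
def map_clause(clause, predicate_map):
    """Rename the predicates of a clause using predicate_map.

    A clause is a list of (predicate, arguments) literals.
    Returns None if some predicate has no counterpart in the mapping.
    """
    mapped = []
    for predicate, args in clause:
        if predicate not in predicate_map:
            return None
        mapped.append((predicate_map[predicate], args))
    return mapped


# Source clause from an academic domain: a professor x advises a student y.
source_clause = [
    ("Professor", ("x",)),
    ("Advises", ("x", "y")),
    ("Student", ("y",)),
]

# Hypothetical mapping from academic predicates to industrial ones.
predicate_map = {
    "Professor": "Manager",
    "Advises": "Supervises",
    "Student": "Worker",
}

target_clause = map_clause(source_clause, predicate_map)
print(target_clause)
```

In this sketch the variable bindings are carried over unchanged, so the professor-student relationship becomes a manager-worker relationship with the same structure.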

In the AAAI-2008 workshop on transfer learning for

complex tasks,4 Mihalkova and Mooney [51] extended

4. http://www.cs.utexas.edu/~mtaylor/AAAI08TL/.

TAMAR to the single-entity-centered setting of transfer

learning, where only one entity in a target domain is

available. Davis and Domingos [52] proposed an approach

to transferring relational knowledge based on a form of

second-order Markov logic. The basic idea of the algorithm is to discover structural regularities in the source domain in the form of Markov logic formulas with predicate variables, and then to instantiate these formulas with predicates from the target domain.

4 TRANSDUCTIVE TRANSFER LEARNING

The term transductive transfer learning was first proposed by

Arnold et al. [58], where they required that the source and

target tasks be the same, although the domains may be

different. On top of these conditions, they further required

that all unlabeled data in the target domain are available at

training time, but we believe that this condition can be

relaxed; instead, in our definition of the transductive transfer

learning setting, we only require that part of the unlabeled

target data be seen at training time in order to obtain the

marginal probability for the target data.

Note that the word “transductive” is used with several

meanings.

In the traditional machine learning setting,

transductive learning [59] refers to the situation where all

test data are required to be seen at training time, and that

the learned model cannot be reused for future data. Thus,

when some new test data arrive, they must be classified

together with all existing data. In our categorization of

transfer learning, in contrast, we use the term transductive to

emphasize the concept that in this type of transfer learning,

the tasks must be the same and there must be some

unlabeled data available in the target domain.

Definition 3 (Transductive Transfer Learning). Given a source domain $\mathcal{D}_S$ and a corresponding learning task $\mathcal{T}_S$, a target domain $\mathcal{D}_T$ and a corresponding learning task $\mathcal{T}_T$, transductive transfer learning aims to improve the learning of the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$, where $\mathcal{D}_S \neq \mathcal{D}_T$ and $\mathcal{T}_S = \mathcal{T}_T$. In addition, some unlabeled target-domain data must be available at training time.

This definition covers the work of Arnold et al. [58], since

the latter considered domain adaptation, where the difference

lies between the marginal probability distributions of

source and target data; i.e., the tasks are the same but the

domains are different.

Similar to the traditional transductive learning setting,

which aims to make the best use of the unlabeled test data

for learning, in our classification scheme under transductive

transfer learning, we also assume that some target-domain

unlabeled data be given.

In the above definition of

transductive transfer learning, the source and target tasks

are the same, which implies that one can adapt

the

predictive function learned in the source domain for use

in the target domain through some unlabeled target-domain

data. As mentioned in Section 2.3, this setting can be split into two cases: 1) the feature spaces of the source and target domains are different, $\mathcal{X}_S \neq \mathcal{X}_T$, and 2) the feature spaces of the domains are the same, $\mathcal{X}_S = \mathcal{X}_T$, but the marginal probability distributions of the input data are different, $P(X_S) \neq P(X_T)$. This is similar to the requirements in domain adaptation and sample selection bias.

Most approaches described in the following sections are

related to case 2 above.

4.1 Transferring the Knowledge of Instances

Most

instance-transfer approaches to the transductive

transfer learning setting are motivated by importance

sampling. To see how importance-sampling-based methods

may help in this setting, we first review the problem of

empirical risk minimization (ERM) [60]. In general, we might want to learn the optimal parameters $\theta^*$ of the model by minimizing the expected risk,

$$\theta^* = \arg\min_{\theta \in \Theta} \mathbb{E}_{(x,y) \in P}[l(x, y, \theta)],$$

where $l(x, y, \theta)$ is a loss function that depends on the parameter $\theta$. However, since it is hard to estimate the probability distribution $P$, we choose to minimize the empirical risk instead,

$$\theta^* = \arg\min_{\theta \in \Theta} \frac{1}{n} \sum_{i=1}^{n} [l(x_i, y_i, \theta)],$$

where $n$ is the size of the training data.
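As a minimal sketch of empirical risk minimization, the following code averages a loss over a training sample and picks the parameter with the smallest average from a finite candidate set. The one-parameter linear model, the squared loss, and the grid search are illustrative assumptions standing in for whatever model, loss, and optimizer are actually used.

```python
def squared_loss(x, y, theta):
    """Loss l(x, y, theta) of the one-parameter model y ~ theta * x."""
    return (y - theta * x) ** 2


def empirical_risk(data, theta, loss=squared_loss):
    """Average loss over the n training pairs (x_i, y_i)."""
    return sum(loss(x, y, theta) for x, y in data) / len(data)


def minimize_over_grid(data, candidates, loss=squared_loss):
    """Return the candidate theta with the smallest empirical risk."""
    return min(candidates, key=lambda theta: empirical_risk(data, theta, loss))


# Toy training data generated by y = 2 * x.
train = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
candidates = [i * 0.5 for i in range(7)]  # 0.0, 0.5, ..., 3.0
theta_star = minimize_over_grid(train, candidates)
print(theta_star, empirical_risk(train, theta_star))
```

The empirical risk is a good proxy for the expected risk only when the training sample is drawn from the distribution on which the model will be used, which is exactly the assumption that fails in the transfer setting discussed next.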

In the transductive transfer learning setting, we want to

learn an optimal model for the target domain by minimiz-

ing the expected risk,

$$\theta^* = \arg\min_{\theta \in \Theta} \sum_{(x,y) \in D_T} P(D_T)\, l(x, y, \theta).$$

However, since no labeled data in the target domain are

observed in training data, we have to learn a model from

the source domain data instead. If $P(D_S) = P(D_T)$, then we may simply learn the model by solving the following optimization problem for use in the target domain,

$$\theta^* = \arg\min_{\theta \in \Theta} \sum_{(x,y) \in D_S} P(D_S)\, l(x, y, \theta).$$

Otherwise, when $P(D_S) \neq P(D_T)$, we need to modify the above optimization problem to learn a model with high generalization ability for the target domain, as follows:

$$\theta^* = \arg\min_{\theta \in \Theta} \sum_{(x,y) \in D_S} \frac{P(D_T)}{P(D_S)} P(D_S)\, l(x, y, \theta) \approx \arg\min_{\theta \in \Theta} \sum_{i=1}^{n_S} \frac{P_T(x_{T_i}, y_{T_i})}{P_S(x_{S_i}, y_{S_i})}\, l(x_{S_i}, y_{S_i}, \theta). \tag{5}$$

Therefore, by adding different penalty values to each instance $(x_{S_i}, y_{S_i})$ with the corresponding weight $\frac{P_T(x_{T_i}, y_{T_i})}{P_S(x_{S_i}, y_{S_i})}$, we can learn a precise model for the target domain. Furthermore, since $P(Y_T \mid X_T) = P(Y_S \mid X_S)$, the difference between $P(D_S)$ and $P(D_T)$ is caused by $P(X_S)$ and $P(X_T)$, and

$$\frac{P_T(x_{T_i}, y_{T_i})}{P_S(x_{S_i}, y_{S_i})} = \frac{P(x_{T_i})}{P(x_{S_i})}.$$

If we can estimate $\frac{P(x_{T_i})}{P(x_{S_i})}$ for each instance, we can solve the transductive transfer learning problems.

There exist various ways to estimate $\frac{P(x_{T_i})}{P(x_{S_i})}$. Zadrozny [24] proposed to estimate the terms $P(x_{S_i})$ and $P(x_{T_i})$ independently by constructing simple classification problems.
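The effect of the instance weights in (5) can be seen in a small sketch. It assumes that the source and target marginals over a discrete input are known exactly, which is not the case in practice, where the ratio has to be estimated by one of the methods discussed here. The constant predictor, the squared loss, the toy distributions, and all function names are illustrative assumptions.

```python
def importance_weights(xs, p_source, p_target):
    """Weight of each source input: target marginal over source marginal."""
    return [p_target[x] / p_source[x] for x in xs]


def weighted_risk(data, weights, theta):
    """Weighted average squared loss of a constant predictor theta."""
    total = sum(w * (y - theta) ** 2 for (_, y), w in zip(data, weights))
    return total / len(data)


def weighted_minimizer(data, weights):
    """Minimizer of weighted_risk over theta: the weighted mean of the labels."""
    return sum(w * y for (_, y), w in zip(data, weights)) / sum(weights)


# Assumed marginals: input "a" is common in the source, "b" in the target.
p_source = {"a": 0.8, "b": 0.2}
p_target = {"a": 0.2, "b": 0.8}

# Labeled source sample; P(y | x) is the same in both domains (a -> 0, b -> 1).
source_data = [("a", 0.0)] * 8 + [("b", 1.0)] * 2

weights = importance_weights([x for x, _ in source_data], p_source, p_target)
uniform = [1.0] * len(source_data)

theta_unweighted = weighted_minimizer(source_data, uniform)
theta_weighted = weighted_minimizer(source_data, weights)
print(theta_unweighted, theta_weighted)
```

Without weights the fitted value is the source label mean, about 0.2. With the weights it moves to about 0.8, which is the expected label under the target marginal, even though only source labels were used.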

