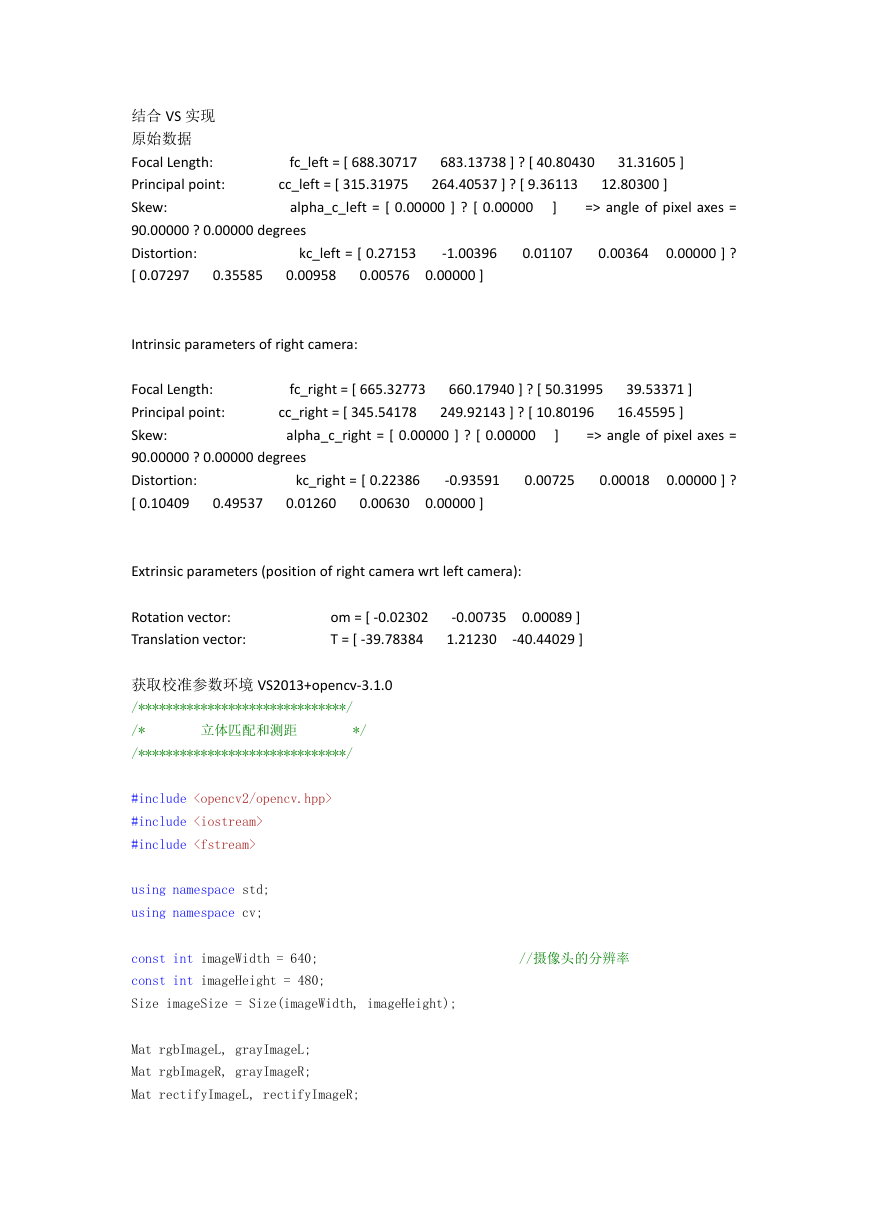

结合 VS 实现

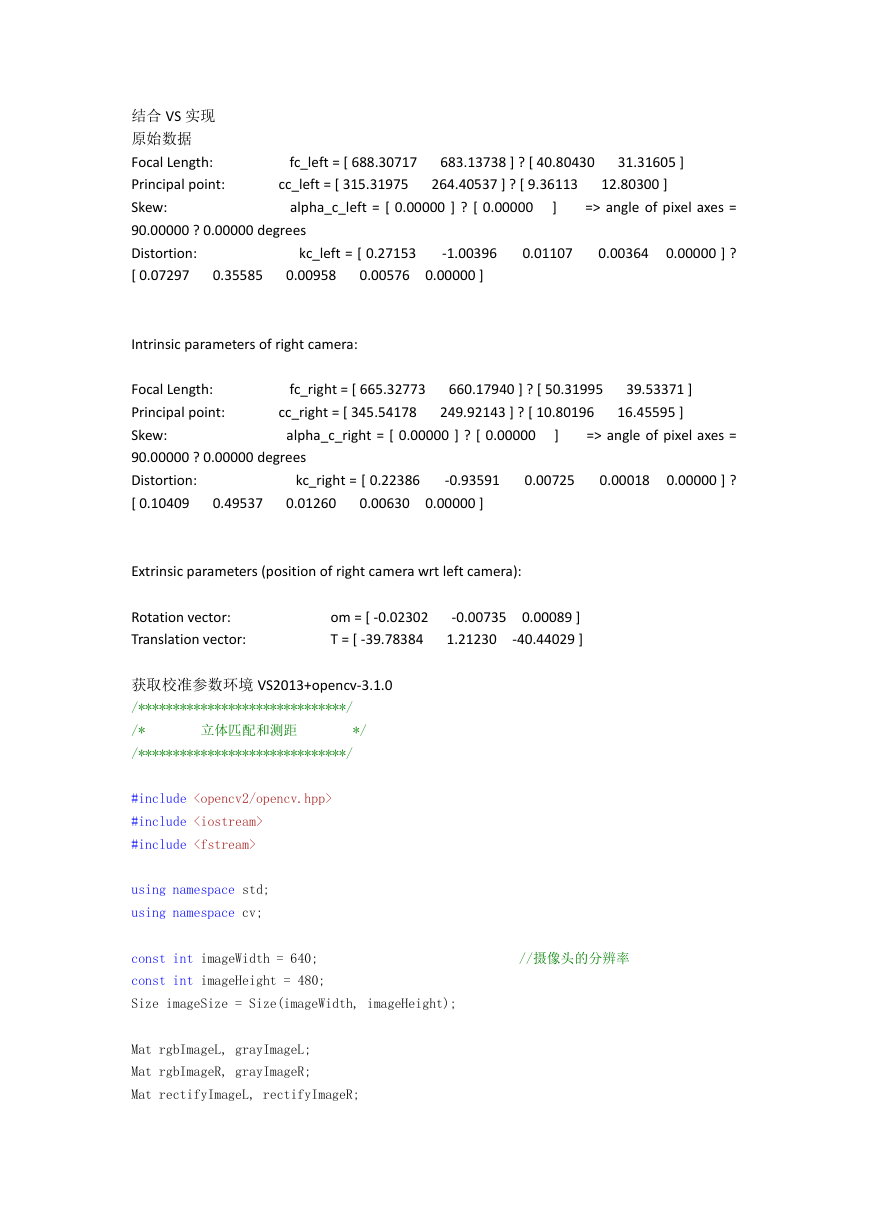

原始数据

Focal Length:

Principal point:

Skew:

90.00000 ? 0.00000 degrees

Distortion:

[ 0.07297

0.35585

fc_left = [ 688.30717

cc_left = [ 315.31975

683.13738 ] ? [ 40.80430

264.40537 ] ? [ 9.36113

31.31605 ]

12.80300 ]

alpha_c_left = [ 0.00000 ] ? [ 0.00000

]

=> angle of pixel axes =

kc_left = [ 0.27153

-1.00396

0.01107

0.00364

0.00000 ] ?

0.00958

0.00576 0.00000 ]

Intrinsic parameters of right camera:

Focal Length:

Principal point:

Skew:

90.00000 ? 0.00000 degrees

Distortion:

[ 0.10409

0.49537

fc_right = [ 665.32773

cc_right = [ 345.54178

alpha_c_right = [ 0.00000 ] ? [ 0.00000

660.17940 ] ? [ 50.31995

249.92143 ] ? [ 10.80196

39.53371 ]

16.45595 ]

]

=> angle of pixel axes =

kc_right = [ 0.22386

0.01260

0.00630 0.00000 ]

-0.93591

0.00725

0.00018

0.00000 ] ?

Extrinsic parameters (position of right camera wrt left camera):

Rotation vector:

Translation vector:

om = [ -0.02302

T = [ -39.78384

-0.00735 0.00089 ]

-40.44029 ]

1.21230

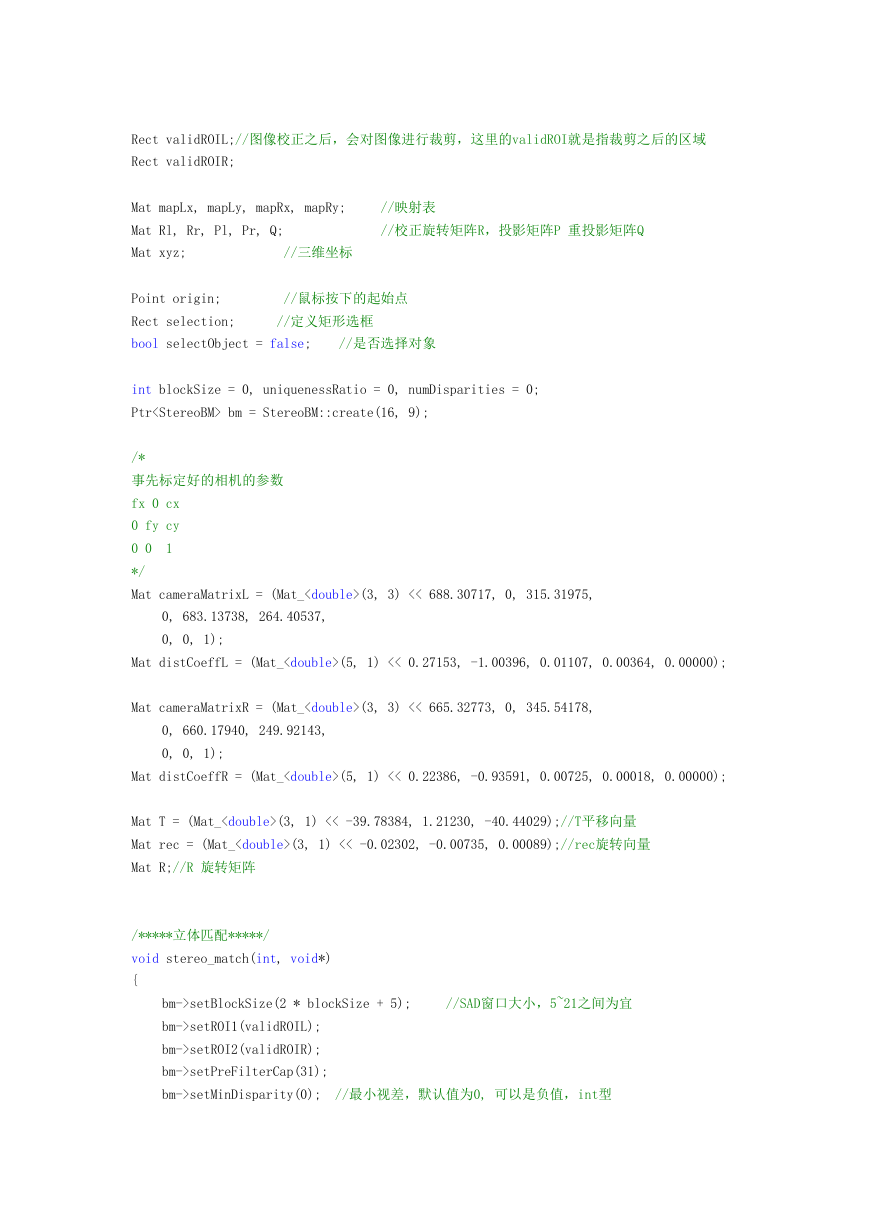

获取校准参数环境 VS2013+opencv-3.1.0

/******************************/

/*

立体匹配和测距

*/

/******************************/

#include

#include

#include

using namespace std;

using namespace cv;

const int imageWidth = 640;

const int imageHeight = 480;

Size imageSize = Size(imageWidth, imageHeight);

//摄像头的分辨率

Mat rgbImageL, grayImageL;

Mat rgbImageR, grayImageR;

Mat rectifyImageL, rectifyImageR;

�

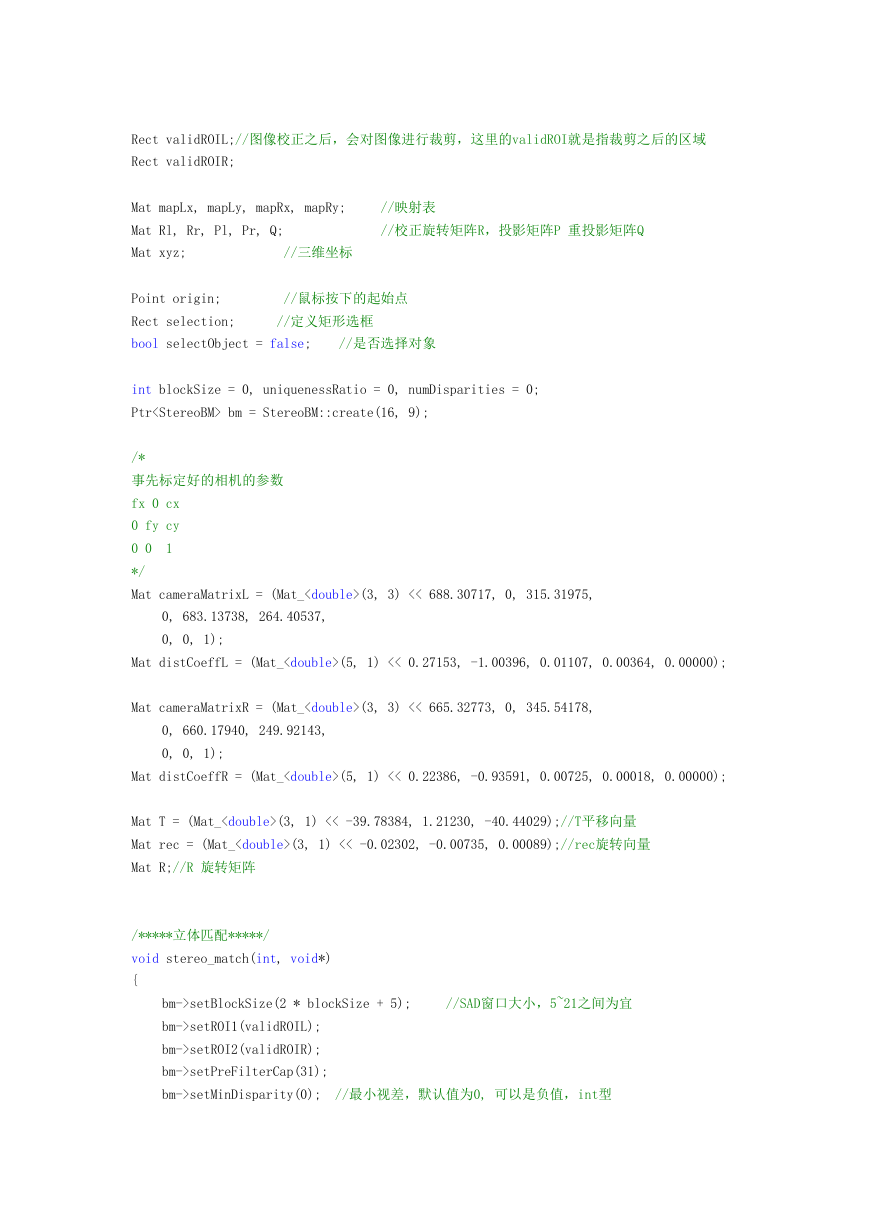

Rect validROIL;//图像校正之后,会对图像进行裁剪,这里的validROI就是指裁剪之后的区域

Rect validROIR;

Mat mapLx, mapLy, mapRx, mapRy;

//映射表

Mat Rl, Rr, Pl, Pr, Q;

//校正旋转矩阵R,投影矩阵P 重投影矩阵Q

Mat xyz;

//三维坐标

Point origin;

//鼠标按下的起始点

Rect selection;

//定义矩形选框

bool selectObject = false;

//是否选择对象

int blockSize = 0, uniquenessRatio = 0, numDisparities = 0;

Ptr bm = StereoBM::create(16, 9);

/*

事先标定好的相机的参数

fx 0 cx

0 fy cy

0 0

1

*/

Mat cameraMatrixL = (Mat_(3, 3) << 688.30717, 0, 315.31975,

0, 683.13738, 264.40537,

0, 0, 1);

Mat distCoeffL = (Mat_(5, 1) << 0.27153, -1.00396, 0.01107, 0.00364, 0.00000);

Mat cameraMatrixR = (Mat_(3, 3) << 665.32773, 0, 345.54178,

0, 660.17940, 249.92143,

0, 0, 1);

Mat distCoeffR = (Mat_(5, 1) << 0.22386, -0.93591, 0.00725, 0.00018, 0.00000);

Mat T = (Mat_(3, 1) << -39.78384, 1.21230, -40.44029);//T平移向量

Mat rec = (Mat_(3, 1) << -0.02302, -0.00735, 0.00089);//rec旋转向量

Mat R;//R 旋转矩阵

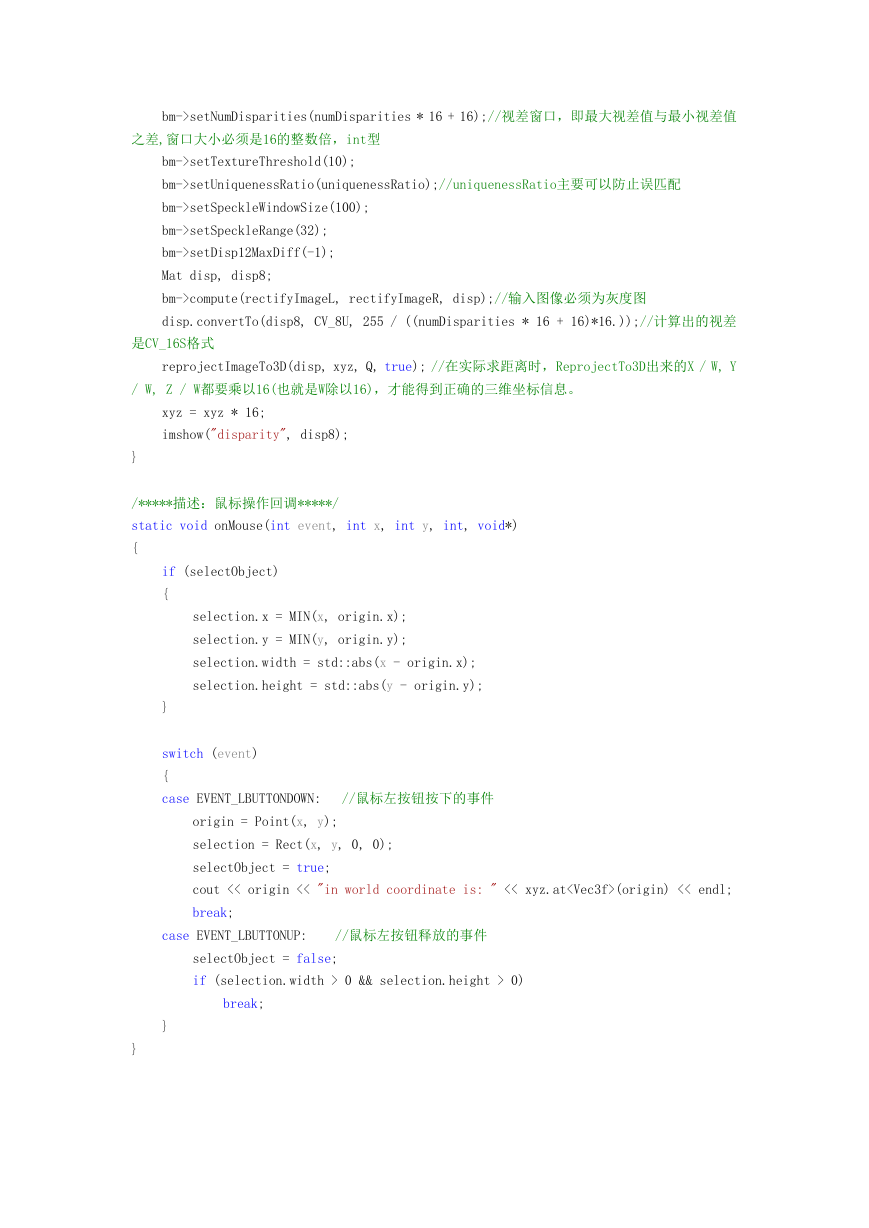

/*****立体匹配*****/

void stereo_match(int, void*)

{

bm->setBlockSize(2 * blockSize + 5);

//SAD窗口大小,5~21之间为宜

bm->setROI1(validROIL);

bm->setROI2(validROIR);

bm->setPreFilterCap(31);

bm->setMinDisparity(0);

//最小视差,默认值为0, 可以是负值,int型

�

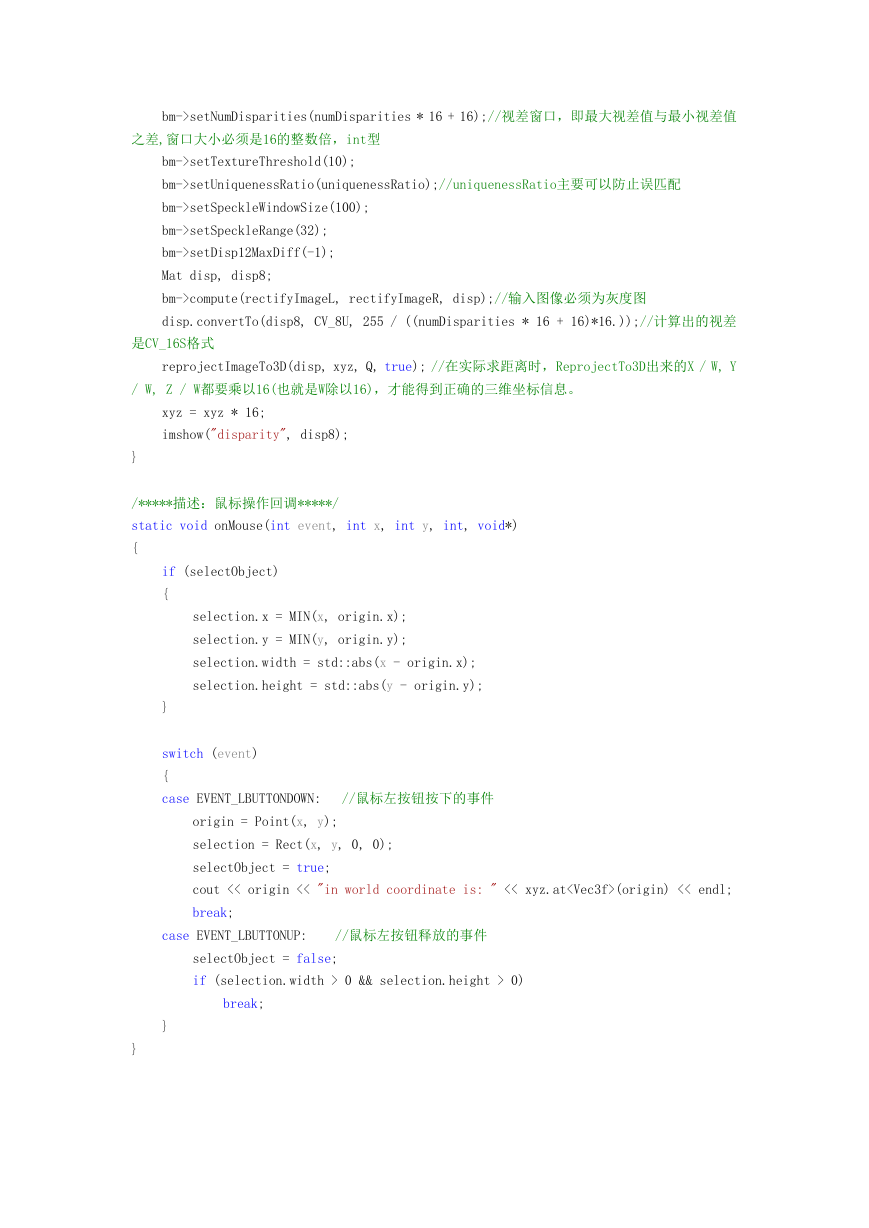

bm->setNumDisparities(numDisparities * 16 + 16);//视差窗口,即最大视差值与最小视差值

之差,窗口大小必须是16的整数倍,int型

bm->setTextureThreshold(10);

bm->setUniquenessRatio(uniquenessRatio);//uniquenessRatio主要可以防止误匹配

bm->setSpeckleWindowSize(100);

bm->setSpeckleRange(32);

bm->setDisp12MaxDiff(-1);

Mat disp, disp8;

bm->compute(rectifyImageL, rectifyImageR, disp);//输入图像必须为灰度图

disp.convertTo(disp8, CV_8U, 255 / ((numDisparities * 16 + 16)*16.));//计算出的视差

是CV_16S格式

reprojectImageTo3D(disp, xyz, Q, true); //在实际求距离时,ReprojectTo3D出来的X / W, Y

/ W, Z / W都要乘以16(也就是W除以16),才能得到正确的三维坐标信息。

xyz = xyz * 16;

imshow("disparity", disp8);

}

/*****描述:鼠标操作回调*****/

static void onMouse(int event, int x, int y, int, void*)

{

if (selectObject)

{

}

selection.x = MIN(x, origin.x);

selection.y = MIN(y, origin.y);

selection.width = std::abs(x - origin.x);

selection.height = std::abs(y - origin.y);

switch (event)

{

case EVENT_LBUTTONDOWN:

//鼠标左按钮按下的事件

origin = Point(x, y);

selection = Rect(x, y, 0, 0);

selectObject = true;

cout << origin << "in world coordinate is: " << xyz.at(origin) << endl;

break;

case EVENT_LBUTTONUP:

//鼠标左按钮释放的事件

selectObject = false;

if (selection.width > 0 && selection.height > 0)

break;

}

}

�

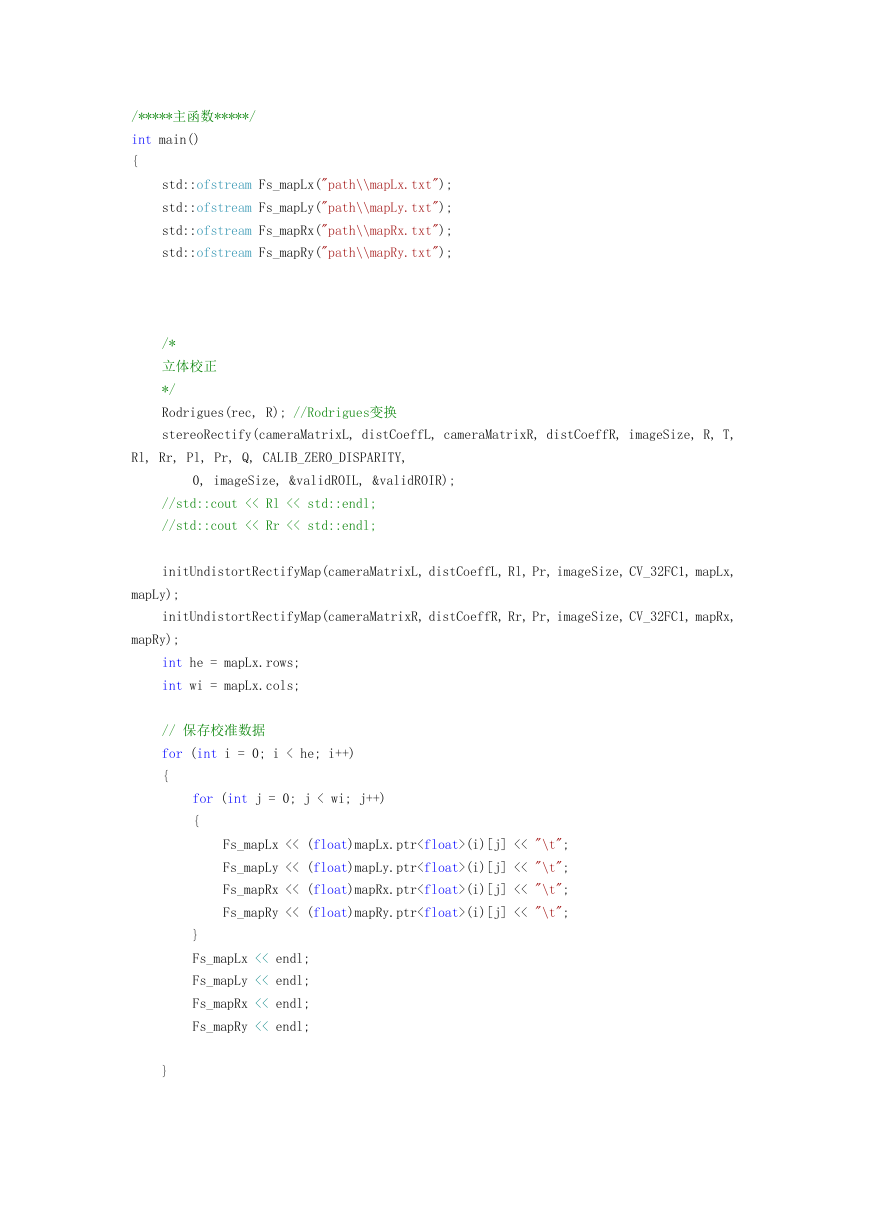

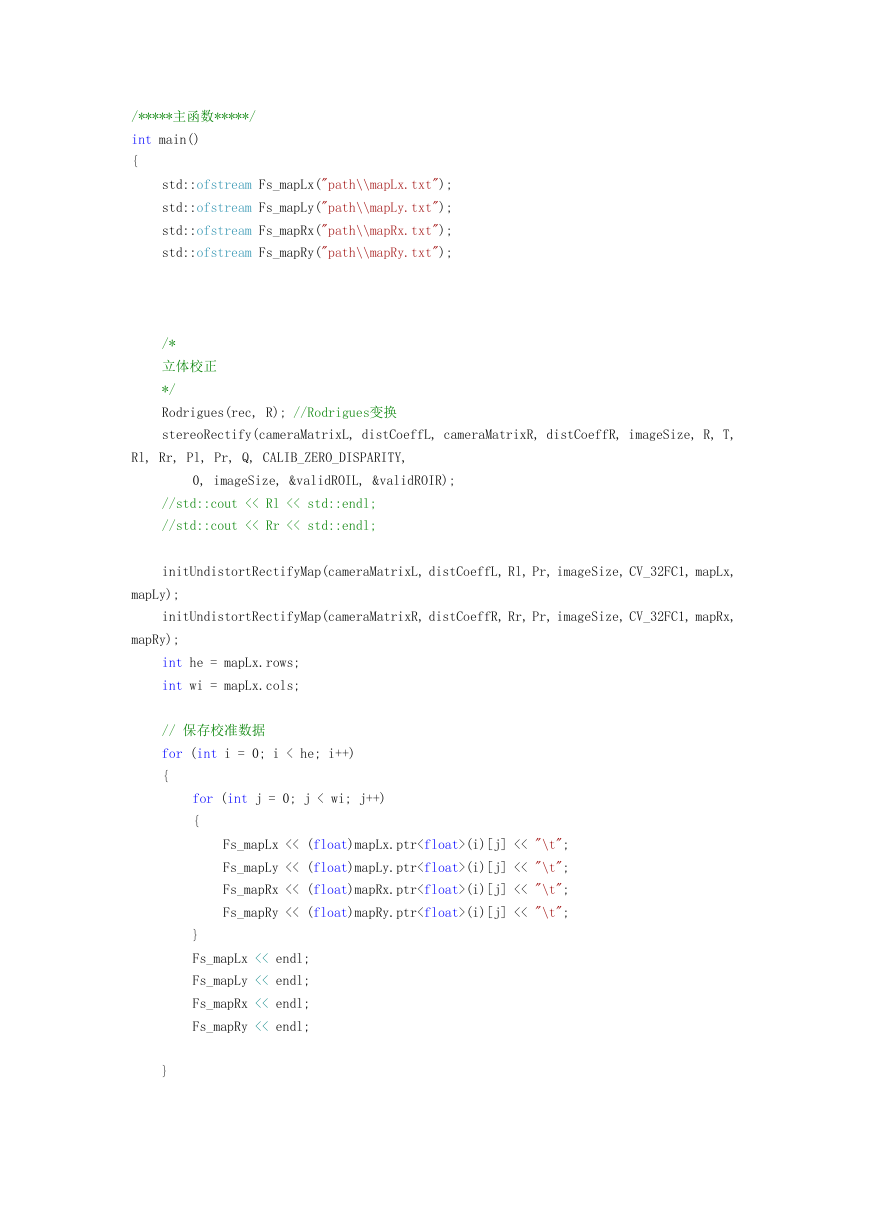

/*****主函数*****/

int main()

{

std::ofstream Fs_mapLx("path\\mapLx.txt");

std::ofstream Fs_mapLy("path\\mapLy.txt");

std::ofstream Fs_mapRx("path\\mapRx.txt");

std::ofstream Fs_mapRy("path\\mapRy.txt");

/*

立体校正

*/

Rodrigues(rec, R); //Rodrigues变换

stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T,

Rl, Rr, Pl, Pr, Q, CALIB_ZERO_DISPARITY,

0, imageSize, &validROIL, &validROIR);

//std::cout << Rl << std::endl;

//std::cout << Rr << std::endl;

initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pr, imageSize, CV_32FC1, mapLx,

mapLy);

initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx,

mapRy);

int he = mapLx.rows;

int wi = mapLx.cols;

// 保存校准数据

for (int i = 0; i < he; i++)

for (int j = 0; j < wi; j++)

{

}

Fs_mapLx << (float)mapLx.ptr(i)[j] << "\t";

Fs_mapLy << (float)mapLy.ptr(i)[j] << "\t";

Fs_mapRx << (float)mapRx.ptr(i)[j] << "\t";

Fs_mapRy << (float)mapRy.ptr(i)[j] << "\t";

Fs_mapLx << endl;

Fs_mapLy << endl;

Fs_mapRx << endl;

Fs_mapRy << endl;

{

}

�

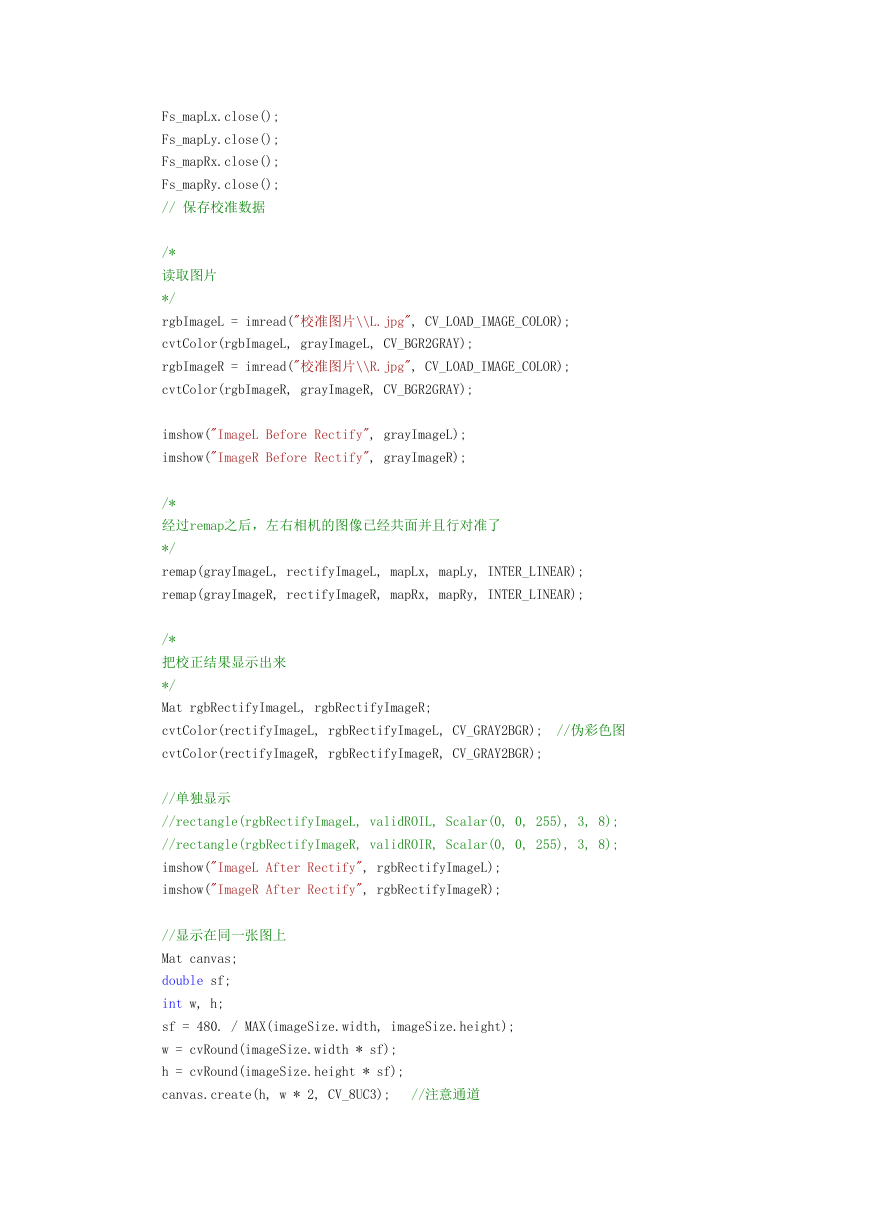

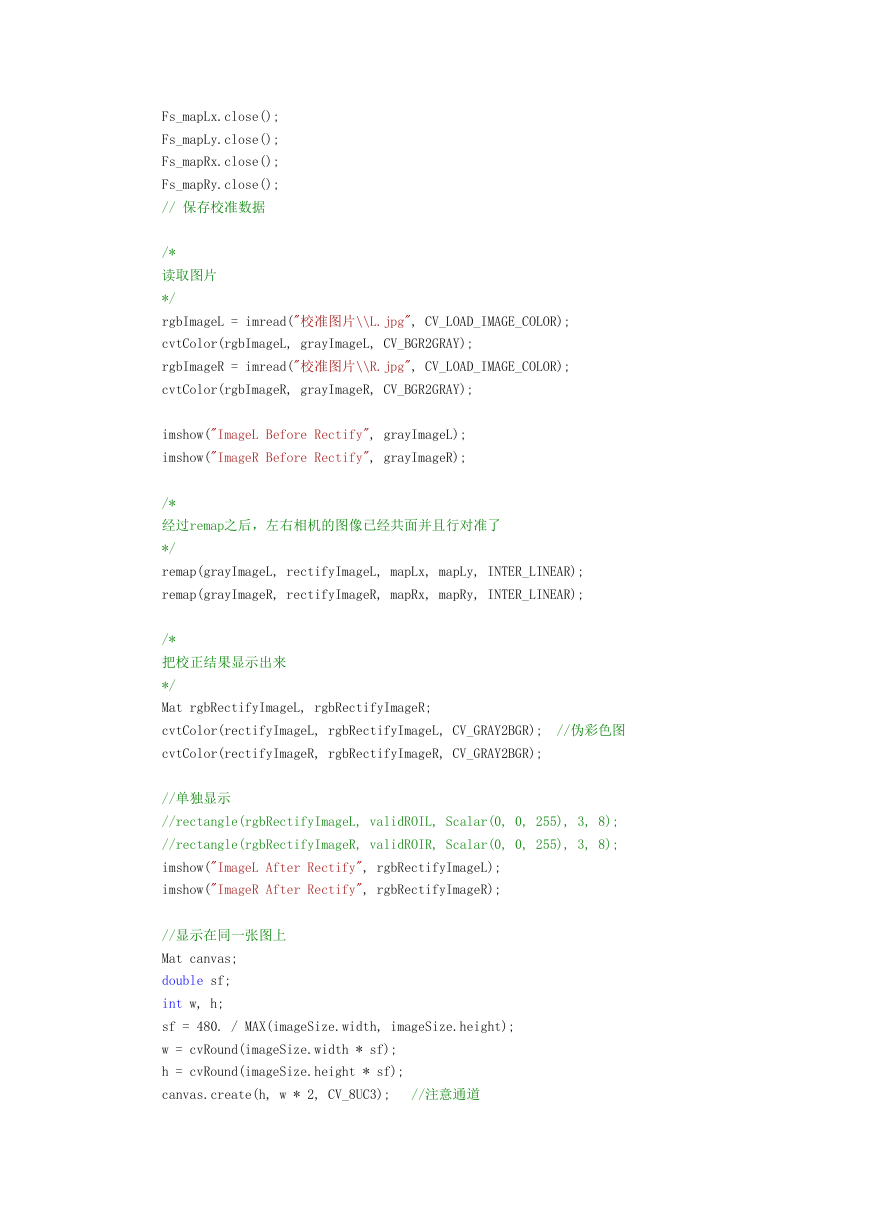

Fs_mapLx.close();

Fs_mapLy.close();

Fs_mapRx.close();

Fs_mapRy.close();

// 保存校准数据

/*

读取图片

*/

rgbImageL = imread("校准图片\\L.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageL, grayImageL, CV_BGR2GRAY);

rgbImageR = imread("校准图片\\R.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageR, grayImageR, CV_BGR2GRAY);

imshow("ImageL Before Rectify", grayImageL);

imshow("ImageR Before Rectify", grayImageR);

/*

经过remap之后,左右相机的图像已经共面并且行对准了

*/

remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);

remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

/*

把校正结果显示出来

*/

Mat rgbRectifyImageL, rgbRectifyImageR;

cvtColor(rectifyImageL, rgbRectifyImageL, CV_GRAY2BGR);

//伪彩色图

cvtColor(rectifyImageR, rgbRectifyImageR, CV_GRAY2BGR);

//单独显示

//rectangle(rgbRectifyImageL, validROIL, Scalar(0, 0, 255), 3, 8);

//rectangle(rgbRectifyImageR, validROIR, Scalar(0, 0, 255), 3, 8);

imshow("ImageL After Rectify", rgbRectifyImageL);

imshow("ImageR After Rectify", rgbRectifyImageR);

//显示在同一张图上

Mat canvas;

double sf;

int w, h;

sf = 480. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width * sf);

h = cvRound(imageSize.height * sf);

canvas.create(h, w * 2, CV_8UC3);

//注意通道

�

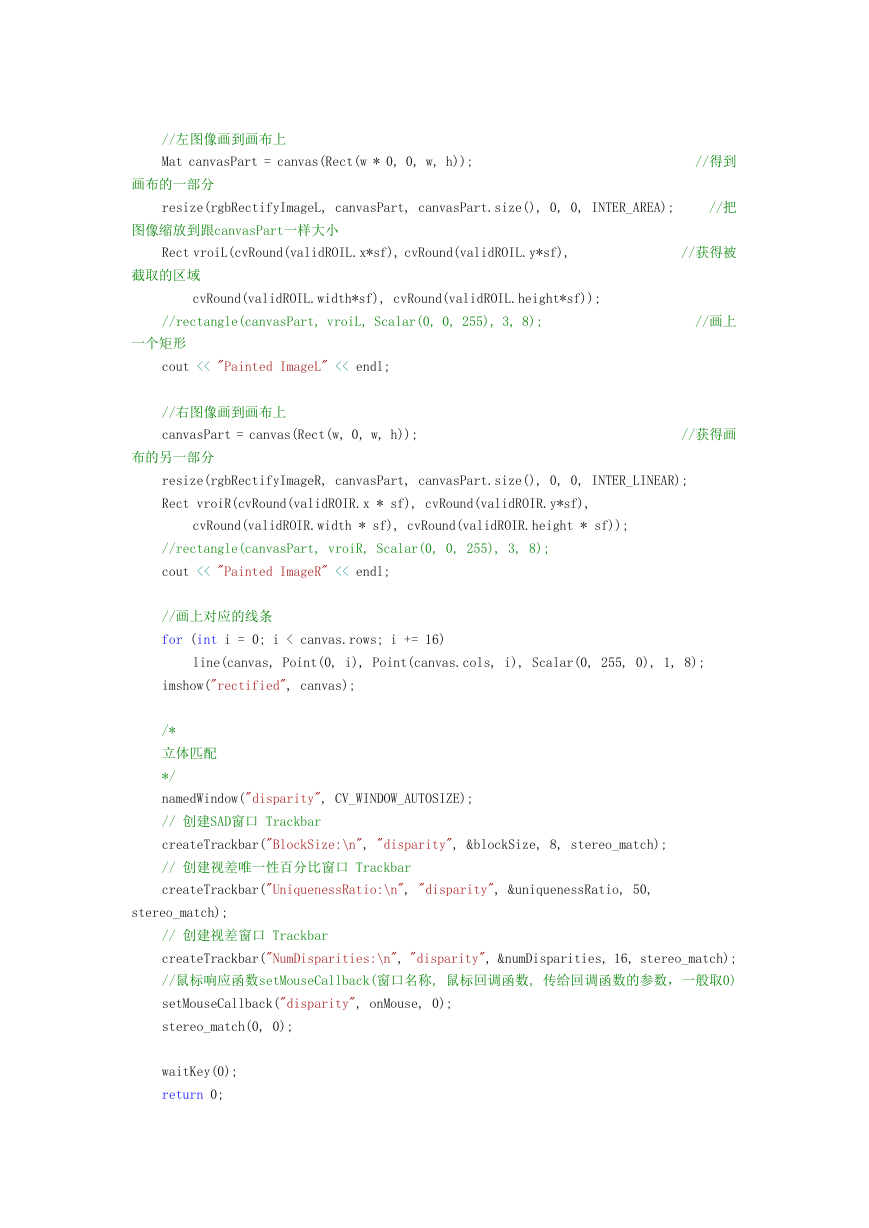

//左图像画到画布上

Mat canvasPart = canvas(Rect(w * 0, 0, w, h));

//得到

画布的一部分

resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA);

//把

图像缩放到跟canvasPart一样大小

Rect vroiL(cvRound(validROIL.x*sf), cvRound(validROIL.y*sf),

//获得被

截取的区域

cvRound(validROIL.width*sf), cvRound(validROIL.height*sf));

//rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8);

//画上

一个矩形

cout << "Painted ImageL" << endl;

//右图像画到画布上

canvasPart = canvas(Rect(w, 0, w, h));

//获得画

布的另一部分

resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);

Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y*sf),

cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));

//rectangle(canvasPart, vroiR, Scalar(0, 0, 255), 3, 8);

cout << "Painted ImageR" << endl;

//画上对应的线条

for (int i = 0; i < canvas.rows; i += 16)

line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

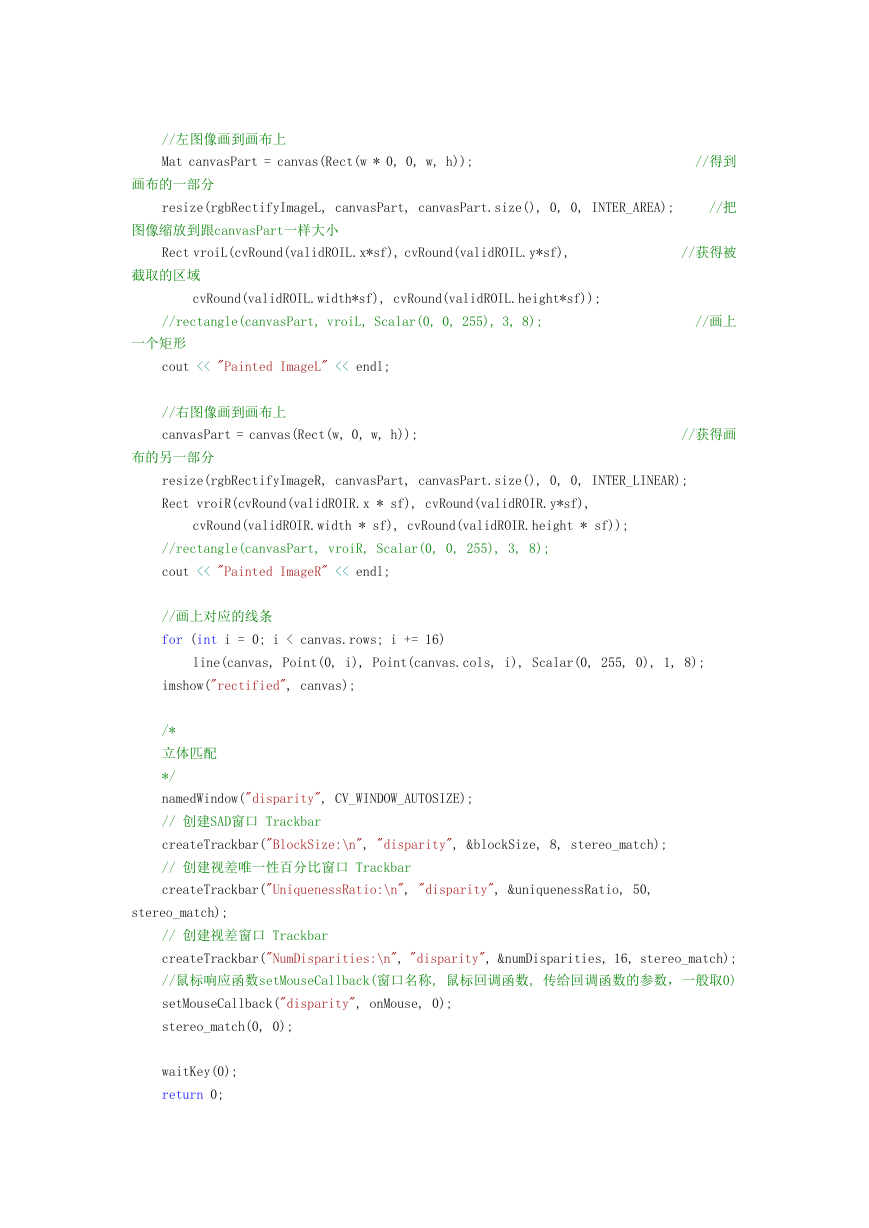

/*

立体匹配

*/

namedWindow("disparity", CV_WINDOW_AUTOSIZE);

// 创建SAD窗口 Trackbar

createTrackbar("BlockSize:\n", "disparity", &blockSize, 8, stereo_match);

// 创建视差唯一性百分比窗口 Trackbar

createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50,

stereo_match);

// 创建视差窗口 Trackbar

createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);

//鼠标响应函数setMouseCallback(窗口名称, 鼠标回调函数, 传给回调函数的参数,一般取0)

setMouseCallback("disparity", onMouse, 0);

stereo_match(0, 0);

waitKey(0);

return 0;

�

}

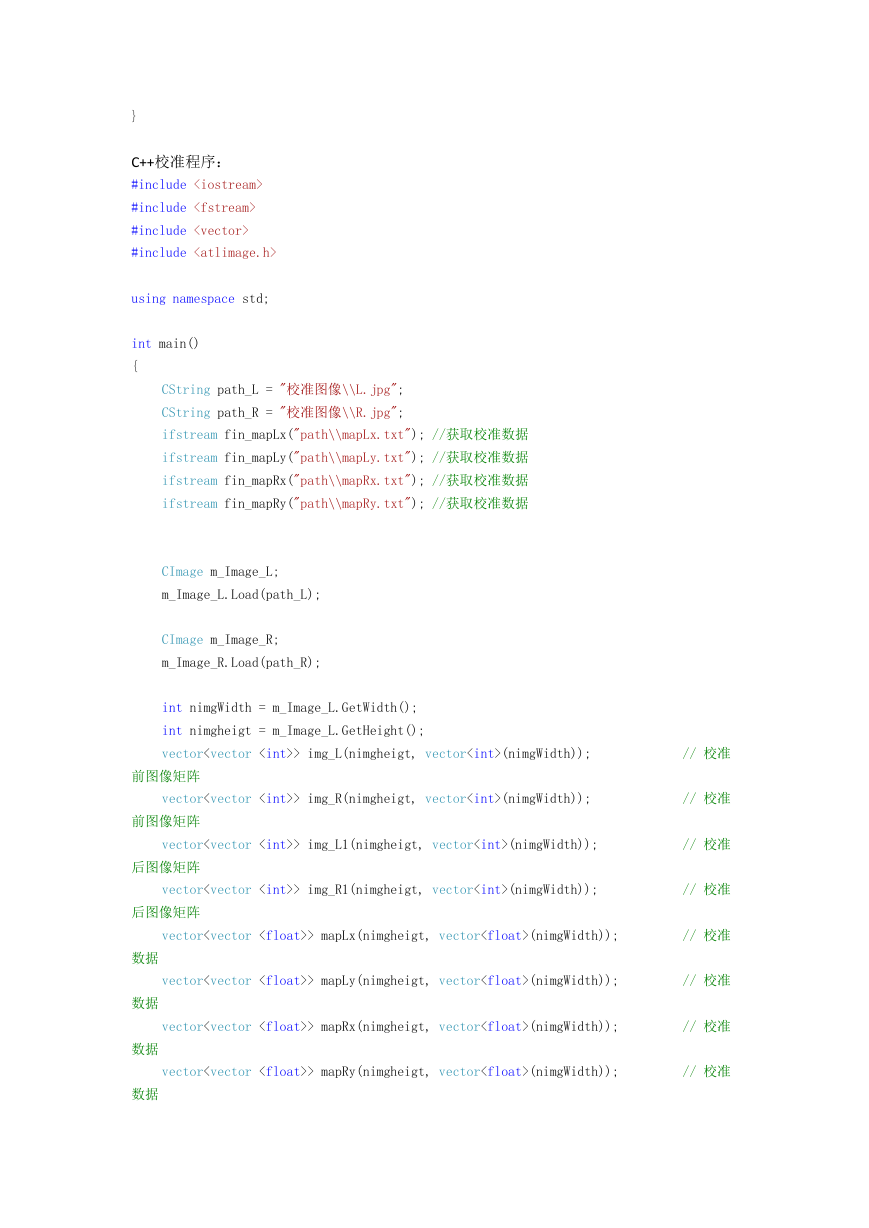

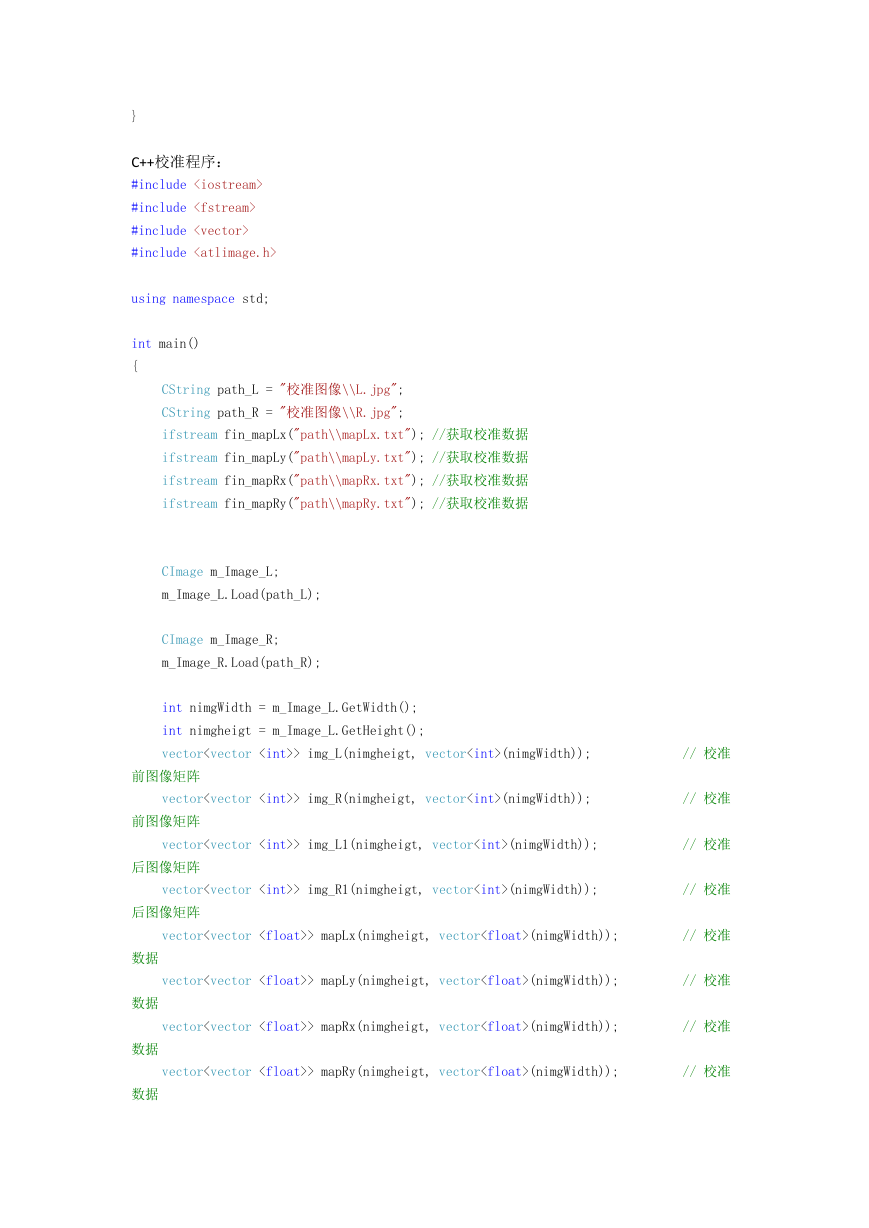

C++校准程序:

#include

#include

#include

#include

using namespace std;

int main()

{

CString path_L = "校准图像\\L.jpg";

CString path_R = "校准图像\\R.jpg";

ifstream fin_mapLx("path\\mapLx.txt"); //获取校准数据

ifstream fin_mapLy("path\\mapLy.txt"); //获取校准数据

ifstream fin_mapRx("path\\mapRx.txt"); //获取校准数据

ifstream fin_mapRy("path\\mapRy.txt"); //获取校准数据

CImage m_Image_L;

m_Image_L.Load(path_L);

CImage m_Image_R;

m_Image_R.Load(path_R);

int nimgWidth = m_Image_L.GetWidth();

int nimgheigt = m_Image_L.GetHeight();

vector> img_L(nimgheigt, vector(nimgWidth));

// 校准

前图像矩阵

vector> img_R(nimgheigt, vector(nimgWidth));

// 校准

前图像矩阵

vector> img_L1(nimgheigt, vector(nimgWidth));

// 校准

后图像矩阵

vector> img_R1(nimgheigt, vector(nimgWidth));

// 校准

后图像矩阵

vector> mapLx(nimgheigt, vector(nimgWidth));

// 校准

数据

数据

数据

数据

vector> mapLy(nimgheigt, vector(nimgWidth));

// 校准

vector> mapRx(nimgheigt, vector(nimgWidth));

// 校准

vector> mapRy(nimgheigt, vector(nimgWidth));

// 校准

�byte* pRealData_L = (byte*)m_Image_L.GetBits();

int pit_L = m_Image_L.GetPitch();

int bitCount_L = m_Image_L.GetBPP() / 8;

byte* pRealData_R = (byte*)m_Image_R.GetBits();

int pit_R = m_Image_R.GetPitch();

int bitCount_R = m_Image_R.GetBPP() / 8;

for (int y = 0; y> 16;

// 将RGB转灰度

img_R[y][x] = (int)(((int)(int)(*(pRealData_R + pit_R*y + x*bitCount_R)) *

19595 + (int)(int)(*(pRealData_R + pit_R*y + x*bitCount_R + 1)) * 38469 +

(int)(int)(*(pRealData_R + pit_R*y + x*bitCount_R + 2)) * 7472)) >> 16;

// 将RGB转灰度

fin_mapLx >> mapLx[y][x];

fin_mapLy >> mapLy[y][x];

fin_mapRx >> mapRx[y][x];

fin_mapRy >> mapRy[y][x];

img_L1[y][x] = 0.0;

img_R1[y][x] = 0.0;

}

}

fin_mapLx.clear();

fin_mapLy.clear();

fin_mapRx.clear();

fin_mapRy.clear();

// 将校准后的数据保存,在MATLAB中进行显示

ofstream Fs_img_L("path\\img_L.txt");

ofstream Fs_img_R("path\\img_R.txt");

for (int i = 0; i < nimgheigt; i++)

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc