arXiv:1708.02709v8 [cs.CL] 25 Nov 2018

Recent Trends in Deep Learning Based Natural Language Processing

Tom Young†≡, Devamanyu Hazarika‡≡, Soujanya Poria⊕≡, Erik Cambria∗


† School of Information and Electronics, Beijing Institute of Technology, China

‡ School of Computing, National University of Singapore, Singapore

⊕ Temasek Laboratories, Nanyang Technological University, Singapore

School of Computer Science and Engineering, Nanyang Technological University, Singapore

Abstract

Deep learning methods employ multiple processing layers to learn hierarchical representations of data, and have produced state-of-the-art results in many domains. Recently, a variety of model designs and methods have blossomed in the context of natural language processing (NLP). In this paper, we review significant deep learning related models and methods that have been employed for numerous NLP tasks and provide a walk-through of their evolution. We also summarize, compare and contrast the various models and put forward a detailed understanding of the past, present and future of deep learning in NLP.

Index Terms: Natural Language Processing, Deep Learning, Word2Vec, Attention, Recurrent Neural Networks, Convolutional Neural Networks, LSTM, Sentiment Analysis, Question Answering, Dialogue Systems, Parsing, Named-Entity Recognition, POS Tagging, Semantic Role Labeling

I. INTRODUCTION

Natural language processing (NLP) is a theory-motivated range of computational techniques for the automatic analysis and representation of human language. NLP research has evolved from the era of punch cards and batch processing, in which the analysis of a sentence could take up to 7 minutes, to the era of Google and the like, in which millions of webpages can be processed in less than a second [1]. NLP enables computers to perform a wide range of natural language related tasks at all levels, ranging from parsing and part-of-speech (POS) tagging to machine translation and dialogue systems.

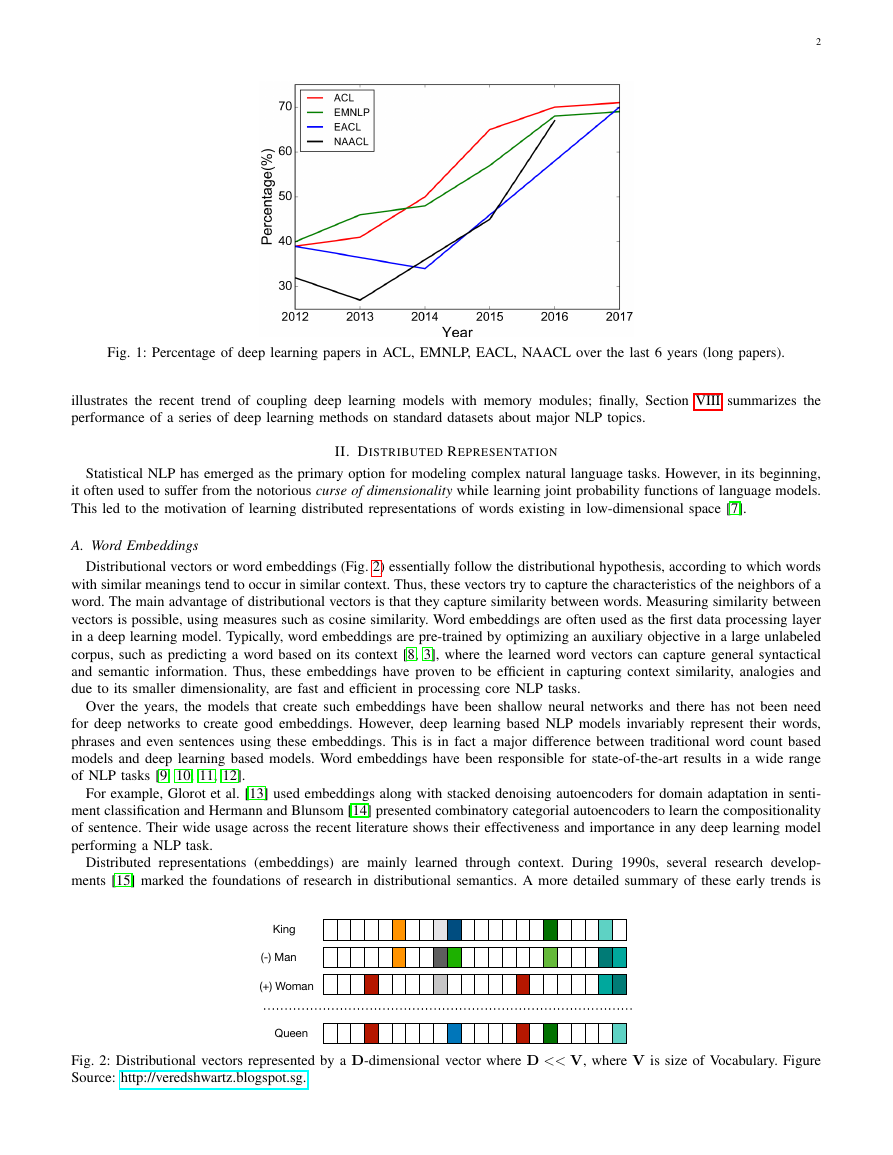

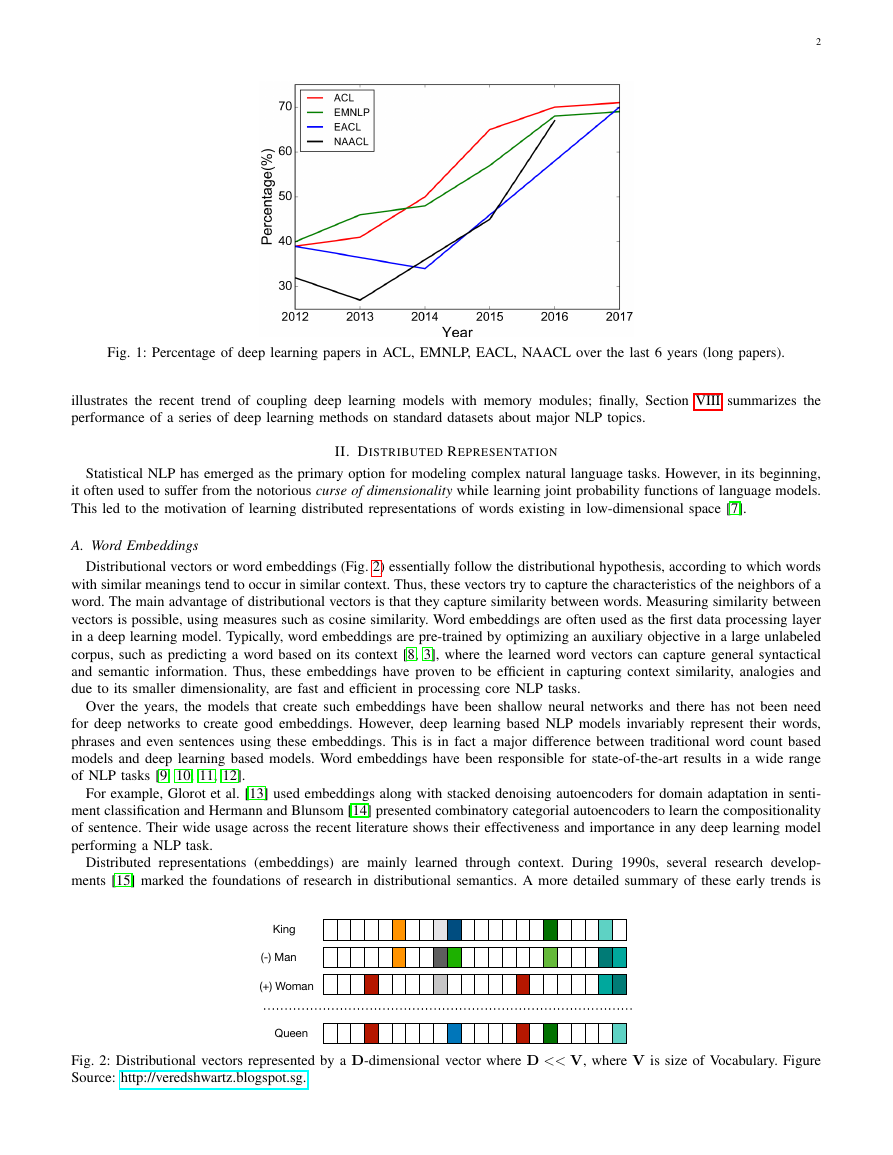

Deep learning architectures and algorithms have already made impressive advances in fields such as computer vision and pattern recognition. Following this trend, recent NLP research is increasingly focusing on the use of new deep learning methods (see Figure 1). For decades, machine learning approaches targeting NLP problems have been based on shallow models (e.g., SVM and logistic regression) trained on very high-dimensional and sparse features. In the last few years, neural networks based on dense vector representations have been producing superior results on various NLP tasks. This trend was sparked by the success of word embeddings [2, 3] and deep learning methods [4]. Deep learning enables multi-level automatic feature representation learning. In contrast, traditional machine learning based NLP systems rely heavily on hand-crafted features, which are time-consuming to design and often incomplete.

Collobert et al. [5] demonstrated that a simple deep learning framework outperforms most state-of-the-art approaches in several NLP tasks such as named-entity recognition (NER), semantic role labeling (SRL), and POS tagging. Since then, numerous complex deep learning based algorithms have been proposed to solve difficult NLP tasks. We review major deep learning models and methods applied to natural language tasks, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and recursive neural networks. We also discuss memory-augmenting strategies, attention mechanisms, and how unsupervised models, reinforcement learning methods and, recently, deep generative models have been employed for language-related tasks.

To the best of our knowledge, this work is the first of its kind to comprehensively cover the most popular deep learning methods in NLP research today.¹ The work by Goldberg [6] only presented the basic principles for applying neural networks to NLP in a tutorial manner. We believe this paper will give readers a more comprehensive idea of current practices in this domain.

The structure of the paper is as follows: Section II introduces the concept of distributed representation, the basis of sophisticated deep learning models; next, Sections III, IV, and V discuss popular models such as convolutional, recurrent, and recursive neural networks, as well as their use in various NLP tasks; then, Section VI lists recent applications of reinforcement learning in NLP and new developments in unsupervised sentence representation learning; later, Section VII illustrates the recent trend of coupling deep learning models with memory modules; finally, Section VIII summarizes the performance of a series of deep learning methods on standard datasets about major NLP topics.

≡ means authors contributed equally

∗ Corresponding author (e-mail: cambria@ntu.edu.sg)

¹ We intend to update this article with time as and when significant advances are proposed and used by the community.

Fig. 1: Percentage of deep learning papers in ACL, EMNLP, EACL, NAACL over the last 6 years (long papers).

II. DISTRIBUTED REPRESENTATION

Statistical NLP has emerged as the primary option for modeling complex natural language tasks. However, in its early days, it often suffered from the notorious curse of dimensionality while learning joint probability functions of language models. This motivated learning distributed representations of words in a low-dimensional space [7].

A. Word Embeddings

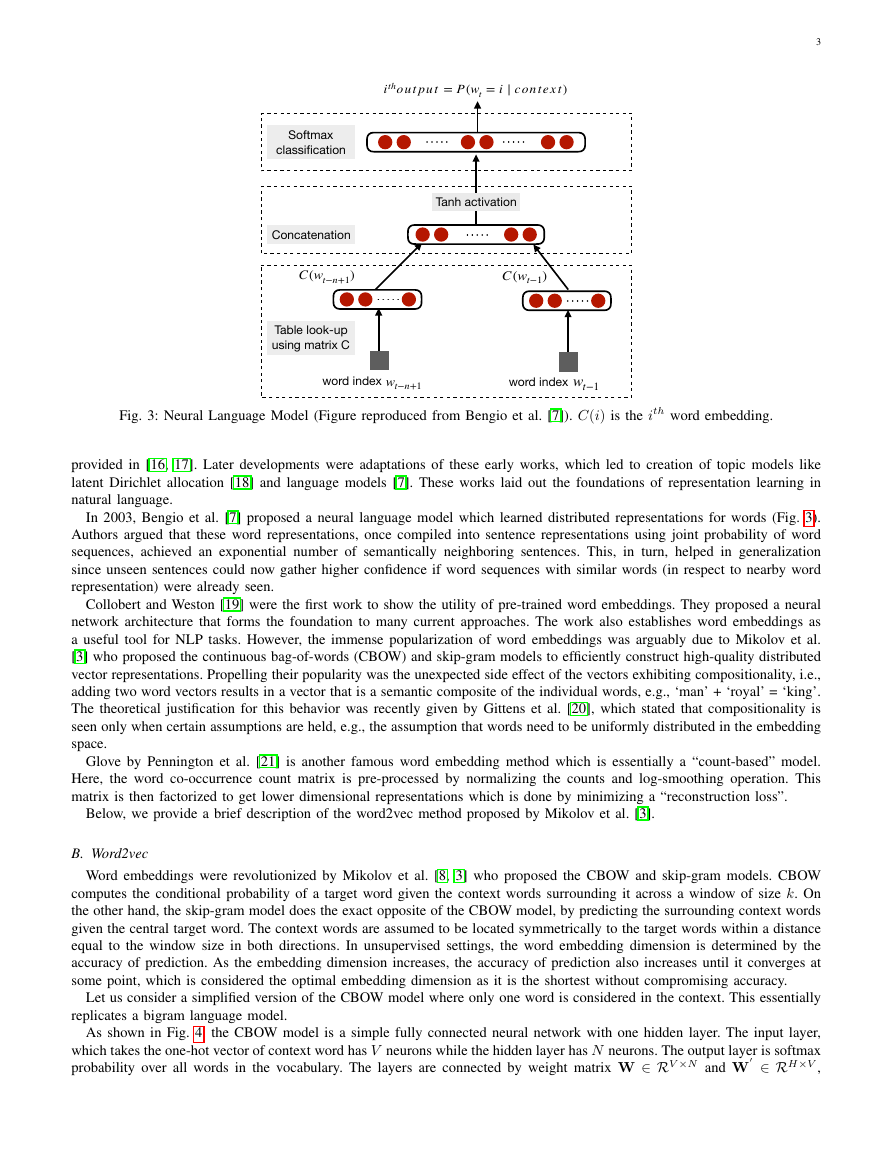

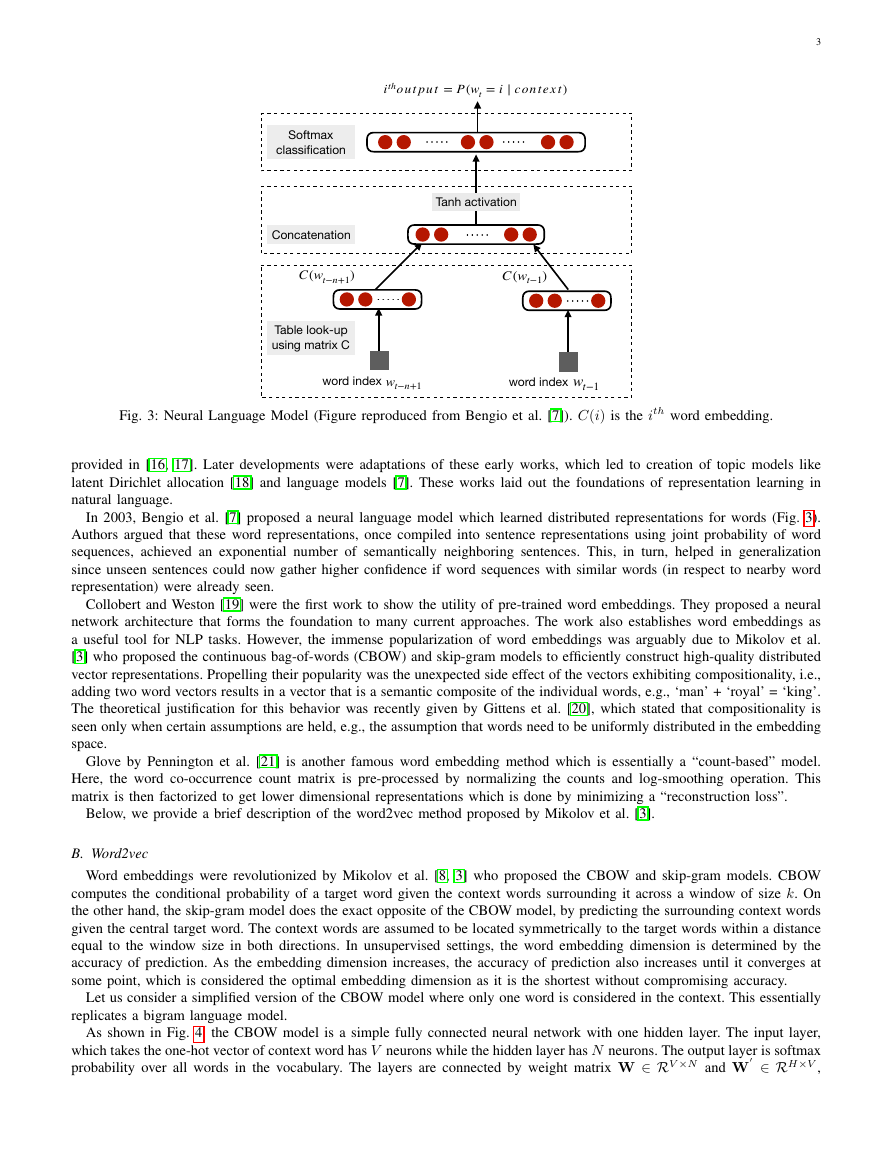

Distributional vectors or word embeddings (Fig. 2) essentially follow the distributional hypothesis, according to which words with similar meanings tend to occur in similar contexts. Thus, these vectors try to capture the characteristics of the neighbors of a word. The main advantage of distributional vectors is that they capture similarity between words, which can be quantified using vector measures such as cosine similarity. Word embeddings are often used as the first data processing layer in a deep learning model. Typically, word embeddings are pre-trained by optimizing an auxiliary objective on a large unlabeled corpus, such as predicting a word based on its context [8, 3], where the learned word vectors can capture general syntactic and semantic information. Thus, these embeddings have proven to be efficient in capturing context similarity and analogies, and, due to their small dimensionality, are fast and efficient in processing core NLP tasks.
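As a minimal illustration of the cosine measure mentioned above, the Python sketch below (using NumPy) computes $\cos(u, v) = \frac{u \cdot v}{\lVert u \rVert \lVert v \rVert}$ for a few vectors. The three-dimensional vectors are hand-picked toy values, not embeddings from any trained model; real embeddings typically have hundreds of dimensions, but the computation is the same.

```python
import numpy as np

def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between u and v: (u . v) / (||u|| * ||v||)."""
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return float(np.dot(u, v) / denom)

# Hypothetical toy embeddings, hand-picked for illustration (not trained).
cat = np.array([0.9, 0.1, 0.3])
dog = np.array([0.8, 0.2, 0.35])
car = np.array([0.1, 0.9, 0.0])

print(cosine_similarity(cat, dog))  # close to 1: similar directions
print(cosine_similarity(cat, car))  # much smaller: dissimilar directions
```

Cosine similarity ignores vector length, so two words are compared only by the direction of their embeddings.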

Over the years, the models that create such embeddings have been shallow neural networks, and there has been no need for deep networks to create good embeddings. However, deep learning based NLP models invariably represent their words, phrases, and even sentences using these embeddings. This is in fact a major difference between traditional word-count-based models and deep learning based models. Word embeddings have been responsible for state-of-the-art results in a wide range of NLP tasks [9, 10, 11, 12].

For example, Glorot et al. [13] used embeddings along with stacked denoising autoencoders for domain adaptation in sentiment classification, and Hermann and Blunsom [14] presented combinatory categorial autoencoders to learn the compositionality of sentences. The wide usage of embeddings across the recent literature shows their effectiveness and importance in deep learning models performing NLP tasks.

Distributed representations (embeddings) are mainly learned through context. During 1990s, several research develop-

ments [15] marked the foundations of research in distributional semantics. A more detailed summary of these early trends is

Fig. 2: Distributional vectors represented by a D-dimensional vector where D << V, where V is size of Vocabulary. Figure

Source: http://veredshwartz.blogspot.sg.

King(-) Man(+) WomanQueen�

3

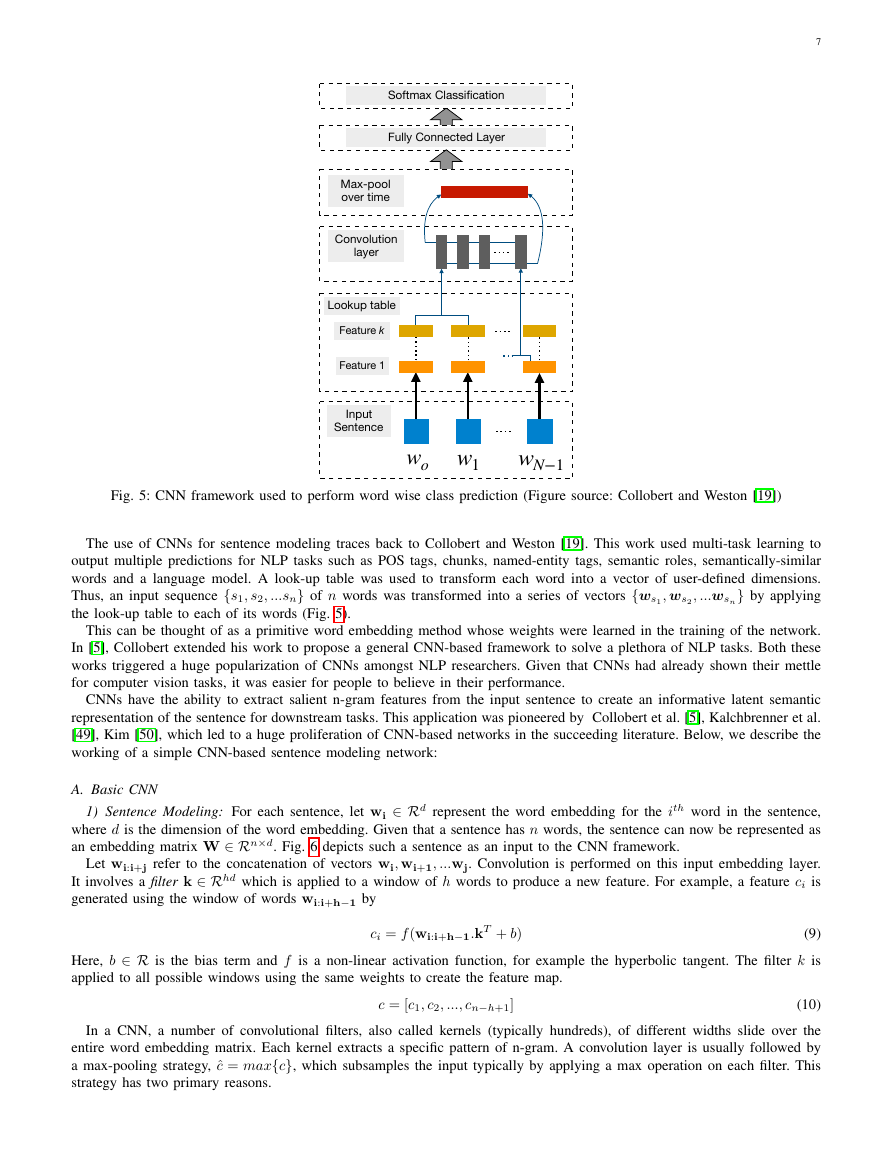

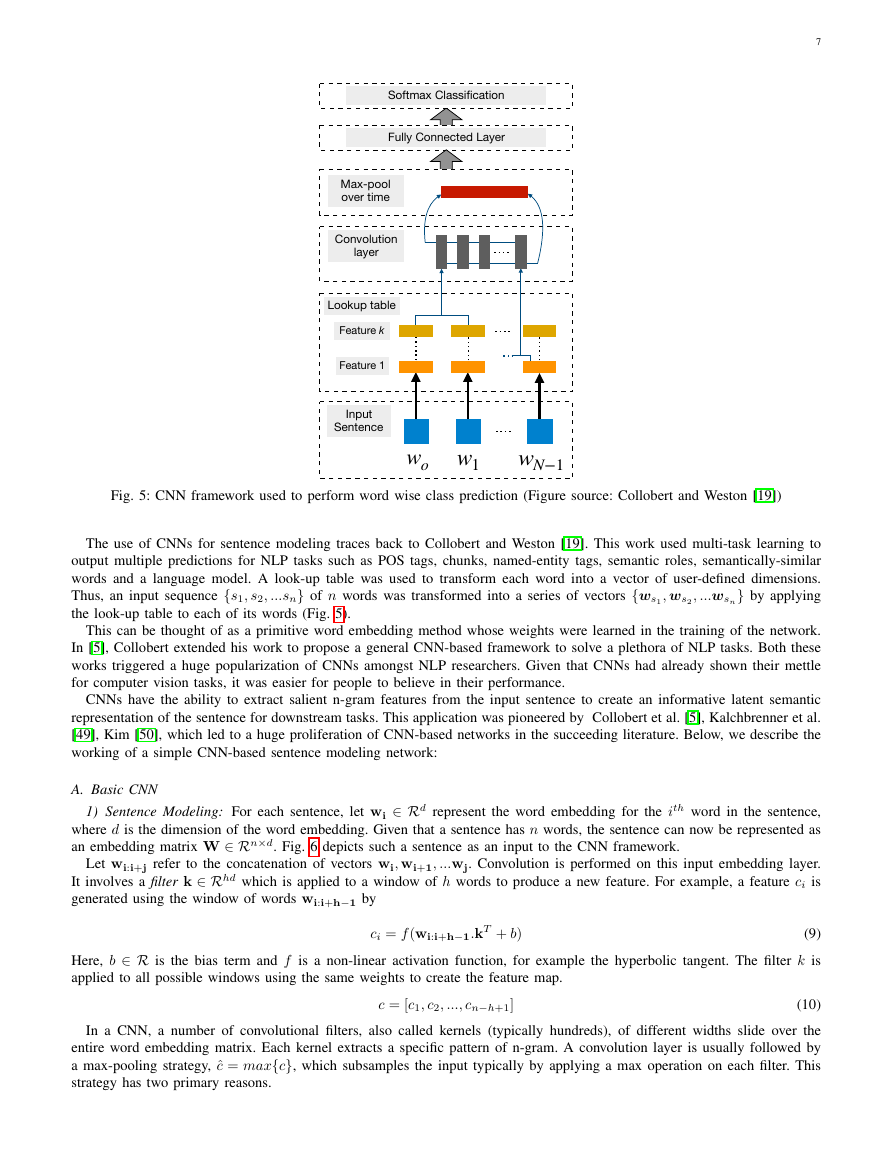

Fig. 3: Neural Language Model (Figure reproduced from Bengio et al. [7]). C(i) is the ith word embedding.

provided in [16, 17]. Later developments were adaptations of these early works, which led to creation of topic models like

latent Dirichlet allocation [18] and language models [7]. These works laid out the foundations of representation learning in

natural language.

In 2003, Bengio et al. [7] proposed a neural language model that learned distributed representations for words (Fig. 3). The authors argued that these word representations, once compiled into sentence representations using the joint probability of word sequences, could represent an exponential number of semantically neighboring sentences. This, in turn, helped generalization, since unseen sentences could now receive higher confidence if word sequences with similar words (with respect to nearby word representations) had already been seen.
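The architecture in Fig. 3 can be sketched as a single forward pass: look up the embeddings $C(w_{t-n+1}), \dots, C(w_{t-1})$ of the previous $n-1$ words, concatenate them, apply a tanh hidden layer, and produce a softmax distribution $P(w_t = i \mid \text{context})$ over the vocabulary. This is a minimal NumPy sketch with small random weights; the sizes and the parameter names `C`, `H`, `d`, `U`, and `b` are assumptions for illustration, and the optional direct input-to-output connections of Bengio et al. are omitted.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # shift by the max for numerical stability
    return e / e.sum()

def nplm_forward(context_ids, C, H, d, U, b):
    """Return P(w_t = i | context) for every word i in the vocabulary."""
    x = np.concatenate([C[i] for i in context_ids])  # table look-up + concatenation
    hidden = np.tanh(d + H @ x)                       # tanh activation
    return softmax(b + U @ hidden)                    # softmax classification

rng = np.random.default_rng(0)
V, m, n_minus_1, h = 10, 4, 3, 8   # vocab size, embedding dim, context length, hidden units
C = rng.normal(scale=0.1, size=(V, m))              # embedding table, one row per word
H = rng.normal(scale=0.1, size=(h, n_minus_1 * m))  # concatenated input -> hidden
d = np.zeros(h)
U = rng.normal(scale=0.1, size=(V, h))              # hidden -> output scores
b = np.zeros(V)

probs = nplm_forward([2, 5, 7], C, H, d, U, b)      # hypothetical context word indices
print(probs.shape, probs.sum())
```

Because the table `C` is shared across all context positions, words seen in similar contexts receive similar rows, which is the source of the generalization argued for above.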

Collobert and Weston [19] were the first to show the utility of pre-trained word embeddings. They proposed a neural network architecture that forms the foundation of many current approaches. The work also established word embeddings as a useful tool for NLP tasks. However, the immense popularization of word embeddings was arguably due to Mikolov et al. [3], who proposed the continuous bag-of-words (CBOW) and skip-gram models to efficiently construct high-quality distributed vector representations. Propelling their popularity was the unexpected side effect of the vectors exhibiting compositionality, i.e., adding two word vectors results in a vector that is a semantic composite of the individual words, e.g., ‘man’ + ‘royal’ = ‘king’. A theoretical justification for this behavior was recently given by Gittens et al. [20], who showed that compositionality holds only when certain assumptions are met, e.g., that words are uniformly distributed in the embedding space.
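Compositionality of this kind is usually probed by forming a query vector such as $v_{man} + v_{royal}$ and returning the vocabulary word whose vector is closest to it by cosine similarity, excluding the query words themselves. The sketch below uses hand-constructed toy vectors whose three dimensions are loosely read as (male, female, royal); they are assumptions chosen to make the mechanics visible, and with real embeddings the answer depends entirely on the trained model.

```python
import numpy as np

# Hypothetical toy vectors; dimensions loosely read as (male, female, royal).
vocab = {
    "man":   np.array([1.0, 0.0, 0.1]),
    "woman": np.array([0.0, 1.0, 0.1]),
    "royal": np.array([0.0, 0.0, 1.0]),
    "king":  np.array([1.0, 0.0, 1.0]),
    "queen": np.array([0.0, 1.0, 1.0]),
    "apple": np.array([0.2, 0.2, 0.0]),
}

def nearest(query: np.ndarray, exclude: set) -> str:
    """Return the vocabulary word with the highest cosine similarity to query."""
    best_word, best_score = None, -np.inf
    for word, vec in vocab.items():
        if word in exclude:
            continue  # skip the words used to build the query
        score = np.dot(query, vec) / (np.linalg.norm(query) * np.linalg.norm(vec))
        if score > best_score:
            best_word, best_score = word, score
    return best_word

print(nearest(vocab["man"] + vocab["royal"], {"man", "royal"}))                      # king
print(nearest(vocab["king"] - vocab["man"] + vocab["woman"], {"king", "man", "woman"}))  # queen
```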

GloVe by Pennington et al. [21] is another famous word embedding method, which is essentially a “count-based” model. Here, the word co-occurrence count matrix is pre-processed by normalizing the counts and applying log smoothing. This matrix is then factorized into lower-dimensional representations by minimizing a “reconstruction loss”.
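To make the “reconstruction loss” concrete, the sketch below evaluates the weighted least-squares objective of GloVe, $J = \sum_{i,j} f(X_{ij})\,(w_i^\top \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij})^2$, where $X$ is the co-occurrence count matrix, $w$ and $\tilde{w}$ are word and context vectors with biases $b$ and $\tilde{b}$, and only non-zero counts contribute. The weighting $f(x) = (x/x_{\max})^{\alpha}$ for $x < x_{\max}$ (and 1 otherwise), with $x_{\max} = 100$ and $\alpha = 3/4$, follows the values reported by Pennington et al.; the tiny count matrix and random parameters are made up, and the training loop that would minimize $J$ is omitted.

```python
import numpy as np

def glove_weight(x: np.ndarray, x_max: float = 100.0, alpha: float = 0.75) -> np.ndarray:
    """f(x) = (x / x_max)^alpha below x_max, 1 otherwise (caps very frequent pairs)."""
    return np.where(x < x_max, (x / x_max) ** alpha, 1.0)

def glove_loss(X, W, W_ctx, b, b_ctx):
    """Weighted least-squares GloVe objective over the non-zero co-occurrence counts."""
    i, j = np.nonzero(X)                       # only observed pairs contribute
    counts = X[i, j]
    pred = np.sum(W[i] * W_ctx[j], axis=1) + b[i] + b_ctx[j]
    return float(np.sum(glove_weight(counts) * (pred - np.log(counts)) ** 2))

X = np.array([[0.0, 4.0, 1.0],
              [4.0, 0.0, 2.0],
              [1.0, 2.0, 0.0]])               # hypothetical symmetric co-occurrence counts
rng = np.random.default_rng(0)
W, W_ctx = rng.normal(size=(3, 2)), rng.normal(size=(3, 2))
b, b_ctx = np.zeros(3), np.zeros(3)
print(glove_loss(X, W, W_ctx, b, b_ctx))
```

The loss is zero exactly when every dot product plus biases reproduces the log count, which is why the trained vectors can be read as a low-rank factorization of the log co-occurrence matrix.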

Below, we provide a brief description of the word2vec method proposed by Mikolov et al. [3].

B. Word2vec

Word embeddings were revolutionized by Mikolov et al. [8, 3], who proposed the CBOW and skip-gram models. CBOW computes the conditional probability of a target word given the context words surrounding it across a window of size k. The skip-gram model does the exact opposite, predicting the surrounding context words given the central target word. The context words are assumed to be located symmetrically around the target word, within a distance equal to the window size in both directions. In unsupervised settings, the word embedding dimension is determined by the accuracy of prediction: as the embedding dimension increases, the accuracy of prediction also increases until it converges at some point, which is considered the optimal embedding dimension, as it is the smallest one that does not compromise accuracy.

Let us consider a simplified version of the CBOW model where only one word is considered in the context. This essentially replicates a bigram language model.

As shown in Fig. 4, the CBOW model is a simple fully connected neural network with one hidden layer. The input layer, which takes the one-hot vector of the context word, has V neurons, while the hidden layer has N neurons. The output layer is a softmax probability over all words in the vocabulary. The input and hidden layers are connected by the weight matrix $W \in \mathbb{R}^{V \times N}$, and the hidden and output layers by $W' \in \mathbb{R}^{N \times V}$. Each word from the vocabulary is finally represented as two learned vectors, $v_c$ and $v_w$, corresponding to its context and target word representations, respectively. Thus, the $k$-th word in the vocabulary will have

$$v_c = W_{(k,\cdot)} \quad \text{and} \quad v_w = W'_{(\cdot,k)} \qquad (1)$$

Fig. 4: Model for CBOW (Figure source: Rong [22])

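Overall, for any word $w_i$ with given context word $c$ as input,

$$P(w_i \mid c) = y_i = \frac{e^{u_i}}{\sum_{j=1}^{V} e^{u_j}} \qquad (2)$$

where

$$u_i = v_{w_i}^{\top} v_c \qquad (3)$$

The parameters $\theta = \{v_w, v_c\}_{w, c \in \text{Vocab}}$ are learned by defining the objective function as the log-likelihood and finding its gradient:

$$l(\theta) = \sum_{(w, c)} \log P(w \mid c) \qquad (4)$$

where the sum runs over the observed (target, context) pairs in the training corpus. The gradient of each term with respect to its target vector $v_w$ is

$$\frac{\partial \log P(w \mid c)}{\partial v_w} = v_c \bigl(1 - P(w \mid c)\bigr) \qquad (5)$$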
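Equations (2) to (5) can be checked numerically. The NumPy sketch below assumes small random matrices `W` (V × N, whose rows are the context vectors $v_c$) and `W_out` (N × V, whose columns are the target vectors $v_w$), computes $P(w \mid c)$ for a one-word context, and compares the analytic gradient $v_c(1 - P(w \mid c))$ of Eq. (5) with a central finite-difference estimate. The sizes and word indices are arbitrary choices for illustration.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def log_prob(W, W_out, context: int, target: int) -> float:
    """log P(target | context) for the one-word-context CBOW model."""
    v_c = W[context]          # hidden layer h = W^T x = row of W for a one-hot x
    u = v_c @ W_out           # u_i = v_{w_i}^T v_c for every word i, Eq. (3)
    return float(np.log(softmax(u)[target]))

rng = np.random.default_rng(1)
V, N = 6, 3
W = rng.normal(size=(V, N))
W_out = rng.normal(size=(N, V))
context, target = 2, 4

# Analytic gradient of log P(w|c) with respect to v_w, Eq. (5).
v_c = W[context]
p = softmax(v_c @ W_out)[target]
analytic = v_c * (1.0 - p)

# Central finite differences on the target column of W_out.
eps = 1e-6
numeric = np.zeros(N)
for k in range(N):
    plus, minus = W_out.copy(), W_out.copy()
    plus[k, target] += eps
    minus[k, target] -= eps
    numeric[k] = (log_prob(W, plus, context, target)
                  - log_prob(W, minus, context, target)) / (2 * eps)

print(np.allclose(analytic, numeric, atol=1e-6))
```

The check works because $\log P(w \mid c) = u_w - \log \sum_j e^{u_j}$, whose derivative with respect to $v_w$ is $v_c - P(w \mid c)\,v_c$.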

In the general CBOW model, the one-hot vectors of all $C$ context words are taken as input simultaneously and averaged in the hidden layer [22], i.e.,

$$h = \frac{1}{C} W^{\top}(x_1 + x_2 + \dots + x_C)$$
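Because each $x_i$ is one-hot, $W^\top x_i$ simply selects one row of $W$, so the multi-word hidden layer is the average of the context words' input vectors (dropping the factor $1/C$ gives their sum instead). The sketch below checks this equivalence on random toy weights; the context indices are hypothetical, and practical implementations index rows directly rather than materializing one-hot vectors.

```python
import numpy as np

rng = np.random.default_rng(2)
V, N = 8, 4
W = rng.normal(size=(V, N))          # input-to-hidden weights, one row per word
context_ids = [1, 3, 6]              # hypothetical context word indices
C = len(context_ids)

# One-hot formulation: h = (1/C) * W^T (x_1 + ... + x_C).
x_sum = np.zeros(V)
for i in context_ids:
    x_sum[i] += 1.0
h_onehot = W.T @ x_sum / C

# Equivalent row look-up: average the rows of W for the context words.
h_lookup = W[context_ids].mean(axis=0)

print(np.allclose(h_onehot, h_lookup))
```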

One limitation of individual word embeddings is their inability to represent phrases [3], where the combination of two or more words (e.g., idioms like “hot potato” or named entities such as “Boston Globe”) does not represent the combination of the meanings of the individual words. One solution to this problem, as explored by Mikolov et al. [3], is to identify such phrases based on word co-occurrence and train embeddings for them separately. Later methods have explored directly learning n-gram embeddings from unlabeled data [23].
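One co-occurrence criterion used in the phrase-learning procedure of Mikolov et al. scores each bigram as $\text{score}(w_i, w_j) = \frac{\text{count}(w_i w_j) - \delta}{\text{count}(w_i) \times \text{count}(w_j)}$, where the discount $\delta$ prevents phrases built from very infrequent words; bigrams scoring above a threshold are merged into single tokens. The sketch below applies that formula to a tiny made-up corpus; the values of `delta` and `threshold` are arbitrary choices for illustration.

```python
from collections import Counter

def phrase_scores(tokens, delta=1.0):
    """score(a, b) = (count(a b) - delta) / (count(a) * count(b)) for each adjacent pair."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return {
        (a, b): (n_ab - delta) / (unigrams[a] * unigrams[b])
        for (a, b), n_ab in bigrams.items()
    }

corpus = "boston globe reported the news the boston globe is read in boston the news is new"
tokens = corpus.split()
scores = phrase_scores(tokens, delta=1.0)
threshold = 0.1  # arbitrary cut-off for this toy corpus
phrases = sorted(pair for pair, s in scores.items() if s > threshold)
print(phrases)
```

On a corpus this small, pairs such as ('the', 'news') also pass the threshold; the criterion is meant for large corpora, where frequent function-word pairs are penalized by their large unigram counts.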

Another limitation comes from learning embeddings based only on a small window of surrounding words: words such as good and bad sometimes share almost the same embedding [24], which is problematic in tasks such as sentiment analysis [25]. At times, these embeddings cluster semantically similar words that have opposing sentiment polarities. As a result, the downstream sentiment analysis model cannot distinguish these contrasting polarities, which leads to poor performance. Tang et al. [26] addressed this problem by proposing sentiment-specific word embeddings (SSWE). The authors incorporated the supervised sentiment polarity of text in their loss functions while learning the embeddings.

A general caveat for word embeddings is that they are highly dependent on the applications in which they are used. Labutov and Lipson [27] proposed task-specific embeddings, which retrain the word embeddings to align them with the current task space. This is very important, as training embeddings from scratch requires a large amount of time and resources. Mikolov et al. [8] tried to address this issue by proposing negative sampling, which performs frequency-based sampling of negative terms while training the word2vec model.
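In the negative-sampling formulation of Mikolov et al., negative words are drawn from the unigram distribution raised to the 3/4 power, $P_n(w) \propto U(w)^{3/4}$, which flattens the distribution so that rare words are sampled somewhat more often than their raw frequency suggests. The sketch below builds that noise distribution from made-up counts and evaluates the skip-gram negative-sampling loss $-\log\sigma(v'^{\top}_{w_O} v_{w_I}) - \sum_{i=1}^{k} \log\sigma(-v'^{\top}_{w_i} v_{w_I})$ for one (input, output) pair. The word counts, random vectors, and number of negatives `k` are assumptions for illustration only.

```python
import numpy as np

def noise_distribution(counts: np.ndarray, power: float = 0.75) -> np.ndarray:
    """Unigram counts raised to `power`, renormalized to sum to 1."""
    weights = counts.astype(float) ** power
    return weights / weights.sum()

def log_sigmoid(x):
    # log(sigmoid(x)) computed stably as -log(1 + exp(-x))
    return -np.logaddexp(0.0, -x)

def neg_sampling_loss(v_in, v_out_pos, v_out_negs):
    """-log s(v'_O . v_I) - sum_i log s(-v'_i . v_I) for one training pair."""
    pos = log_sigmoid(v_out_pos @ v_in)
    neg = log_sigmoid(-(v_out_negs @ v_in)).sum()
    return float(-(pos + neg))

counts = np.array([1000, 200, 50, 5])   # hypothetical word frequencies
p_n = noise_distribution(counts)
print(np.round(p_n, 3))

rng = np.random.default_rng(3)
dim, k = 5, 3
V_in = rng.normal(scale=0.1, size=(len(counts), dim))    # input (center-word) vectors
V_out = rng.normal(scale=0.1, size=(len(counts), dim))   # output (context-word) vectors
input_word, output_word = 0, 1
negatives = rng.choice(len(counts), size=k, p=p_n)       # frequency-based negative draws
loss = neg_sampling_loss(V_in[input_word], V_out[output_word], V_out[negatives])
print(loss)
```

Only $k + 1$ output vectors take part in each update instead of all $V$ of them, which is where the savings over a full softmax come from.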

Traditional word embedding algorithms assign a distinct vector to each word, which makes them unable to account for polysemy. In a recent work, Upadhyay et al. [28] provided an innovative way to address this deficit. The authors leveraged multilingual parallel data to learn multi-sense word embeddings. For example, the English word bank, when translated to French, yields two different words: banque and banc, representing the financial and geographical meanings, respectively. Such multilingual distributional information helped them account for polysemy.

Table I provides a directory of existing frameworks that are frequently used for creating embeddings, which are then incorporated into deep learning models.

TABLE I: Frameworks providing word embedding tools and methods.

| Framework | Language | URL |
|---|---|---|
| S-Space | Java | https://github.com/fozziethebeat/S-Space |
| Semanticvectors | Java | https://github.com/semanticvectors/ |
| Gensim | Python | https://radimrehurek.com/gensim/ |
| Pydsm | Python | https://github.com/jimmycallin/pydsm |
| Dissect | Python | http://clic.cimec.unitn.it/composes/toolkit/ |
| FastText | Python | https://fasttext.cc/ |
| Elmo | Python | https://tfhub.dev/google/elmo/2 |

C. Character Embeddings

Word embeddings are able to capture syntactic and semantic information, yet for tasks such as POS tagging and NER, intra-word morphological and shape information can also be very useful. Generally speaking, building natural language understanding systems at the character level has attracted some research attention [29, 30, 31, 32], and better results on morphologically rich languages have been reported in certain NLP tasks. Santos and Guimaraes [31] applied character-level representations, along with word embeddings, for NER, achieving state-of-the-art results on Portuguese and Spanish corpora. Kim et al. [29] showed positive results on building a neural language model using only character embeddings. Ma et al. [33] exploited several embeddings, including character trigrams, to incorporate prototypical and hierarchical information for learning pre-trained label embeddings in the context of NER.

A common phenomenon for languages with large vocabularies is the unknown word issue, also known as out-of-vocabulary (OOV) words. Character embeddings naturally deal with it, since each word is considered as no more than a composition of individual letters. In languages where text is not composed of separated words but of individual characters, and where the meaning of words maps to their constituent characters (such as Chinese), building systems at the character level is a natural choice to avoid word segmentation [34]. Thus, works employing deep learning on such languages tend to prefer character embeddings over word vectors [35]. For example, Peng et al. [36] showed that radical-level processing could greatly improve sentiment classification performance. In particular, the authors proposed two types of Chinese radical-based hierarchical embeddings, which incorporate not only semantics at the radical and character level, but also sentiment information. Bojanowski et al. [37] also tried to improve the representation of words by using character-level information in morphologically rich languages. They extended the skip-gram method by representing words as bags of character n-grams. Their work thus retained the effectiveness of the skip-gram model while addressing some persistent issues of word embeddings. The method was also fast, which allowed training models on large corpora quickly. Popularly known as FastText, this method stands out over previous methods in terms of speed, scalability, and effectiveness.
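In the FastText formulation of Bojanowski et al., each word is wrapped in the boundary symbols `<` and `>` and represented by its character n-grams (all n from 3 to 6) plus the whole bracketed word; the word vector is the sum of its n-gram vectors, which is what lets the model compose vectors for OOV words. The sketch below extracts those n-grams and composes a vector from a toy n-gram table filled with random values for a three-word vocabulary; the table is a stand-in for trained vectors, and the hashing of n-grams into a fixed number of buckets used by the real library is replaced by a plain dictionary.

```python
import numpy as np

def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> list:
    """Character n-grams of '<word>' plus the bracketed word itself."""
    padded = f"<{word}>"
    grams = [padded[i:i + n]
             for n in range(n_min, n_max + 1)
             for i in range(len(padded) - n + 1)]
    if padded not in grams:
        grams.append(padded)   # the whole word is kept as its own unit
    return grams

print(char_ngrams("where", 3, 3))   # ['<wh', 'whe', 'her', 'ere', 're>', '<where>']

rng = np.random.default_rng(4)
dim = 8
# Hypothetical n-gram table standing in for trained vectors: every n-gram of a
# small toy vocabulary gets a random vector.
ngram_table = {}
for w in ["where", "here", "there"]:
    for g in char_ngrams(w):
        ngram_table.setdefault(g, rng.normal(scale=0.1, size=dim))

def word_vector(word: str) -> np.ndarray:
    """Sum the vectors of the word's known n-grams; unknown n-grams are skipped."""
    known = [ngram_table[g] for g in char_ngrams(word) if g in ngram_table]
    return np.sum(known, axis=0) if known else np.zeros(dim)

oov = word_vector("wherever")   # unseen word that shares n-grams with "where"
print(np.linalg.norm(oov) > 0)
```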

Apart from character embeddings, different approaches have been proposed for OOV handling. Herbelot and Baroni [38] provided on-the-fly OOV handling by initializing the unknown words as the sum of the context words and refining these words with a high learning rate. However, their approach is yet to be tested on typical NLP tasks. Pinter et al. [39] provided an interesting approach of training a character-based model to recreate pre-trained embeddings. This allowed them to learn a compositional mapping from characters to word embeddings, thus tackling the OOV problem.

Despite the ever-growing popularity of distributional vectors, recent discussions on their relevance in the long run have cropped up. For example, Lucy and Gauthier [40] recently evaluated how well word vectors capture the necessary facets of conceptual meaning. The authors discovered severe limitations in the perceptual understanding of the concepts behind the words, which cannot be inferred from distributional semantics alone. A possible direction for mitigating these deficiencies is grounded learning, which has been gaining popularity in this research domain.

D. Contextualized Word Embeddings

The quality of word representations is generally gauged by their ability to encode syntactic information and handle polysemous behavior (or word senses). These properties result in improved semantic word representations. Recent approaches in this area encode such information into their embeddings by leveraging the context. These methods provide deeper networks that calculate word representations as a function of their context.

Traditional word embedding methods such as Word2Vec and GloVe consider all the sentences where a word is present in order to create a global vector representation of that word. However, a word can have completely different senses or meanings in different contexts. For example, consider these two sentences: 1) “The bank will not be accepting cash on Saturdays” 2) “The river overflowed the bank.” The word senses of bank are different in these two sentences, depending on the context. Reasonably, one might want two different vector representations of the word bank based on its two different word senses. The new class of models adopts this reasoning by diverging from the concept of global word representations and proposing contextual word embeddings instead.

Embeddings from Language Models (ELMo) [41] is one such method that provides deep contextual embeddings. ELMo produces word embeddings for each context where the word is used, thus allowing different representations for varying senses of the same word. Specifically, for $N$ different sentences where a word $w$ is present, ELMo generates $N$ different representations of $w$, i.e., $w_1, w_2, \dots, w_N$.

The mechanism of ELMo is based on the representation obtained from a bidirectional language model. A bidirectional language model (biLM) consists of two language models (LMs): 1) a forward LM and 2) a backward LM. A forward LM takes the input representation $x_k^{LM}$ for each $k$-th token and passes it through $L$ layers of forward LSTM to get representations $\overrightarrow{h}_{k,j}^{LM}$, where $j = 1, \dots, L$. Each of these representations, being a hidden representation of a recurrent neural network, is context dependent. A forward LM can be seen as a method to model the joint probability of a sequence of tokens:

$$p(t_1, t_2, \dots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_1, t_2, \dots, t_{k-1})$$

At timestep $k-1$, the forward LM predicts the next token $t_k$ given the previously observed tokens $t_1, t_2, \dots, t_{k-1}$. This is typically achieved by placing a softmax layer on top of the final LSTM in a forward LM. On the other hand, a backward LM models the same joint probability of the sequence by predicting the previous token given the future tokens:

$$p(t_1, t_2, \dots, t_N) = \prod_{k=1}^{N} p(t_k \mid t_{k+1}, t_{k+2}, \dots, t_N)$$

In other words, a backward LM is similar to a forward LM, but processes the sequence in reverse order. Training the biLM involves jointly maximizing the log-likelihood in both directions. Finally, hidden representations from both LMs are concatenated to compose the final token vectors [42].

For each token, ELMo extracts the intermediate layer representations from the biLM and performs a linear combination based on the given downstream task. An $L$-layer biLM contains $2L + 1$ sets of representations, as shown below:

$$R_k = \{x_k^{LM}, \overrightarrow{h}_{k,j}^{LM}, \overleftarrow{h}_{k,j}^{LM} \mid j = 1, \dots, L\} = \{h_{k,j}^{LM} \mid j = 0, \dots, L\} \qquad (6)$$

Here, $h_{k,0}^{LM}$ is the token representation at the lowest level. One can use either character or word embeddings to initialize $h_{k,0}^{LM}$. For other values of $j$,

$$h_{k,j}^{LM} = [\overrightarrow{h}_{k,j}^{LM}; \overleftarrow{h}_{k,j}^{LM}] \quad \forall j = 1, \dots, L \qquad (7)$$

ELMo collapses all layers in $R_k$ into a single vector such that

$$\text{ELMo}_k^{task} = E(R_k; \Theta^{task}) = \gamma^{task} \sum_{j=0}^{L} s_j^{task} h_{k,j}^{LM} \qquad (8)$$

In Eq. 8, $s^{task}$ is the softmax-normalized weight vector used to combine the representations of different layers, and $\gamma^{task}$ is a scalar parameter that aids optimization and allows task-specific scaling of the ELMo representation. ELMo produces varied word representations for the same word in different sentences. According to Peters et al. [41], it is beneficial to combine ELMo word representations with standard global word representations like GloVe and Word2Vec.
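Equation (8) amounts to a softmax-weighted sum of the $L + 1$ layer representations of a token followed by a scalar scale. The NumPy sketch below assumes the layer outputs for one token are already stacked into an array of shape (L + 1, D), with row $j$ holding $h_{k,j}^{LM}$; the function name `scalar_mix` is hypothetical, and the raw layer weights and $\gamma$, which ELMo learns together with the downstream task, are fixed toy values here.

```python
import numpy as np

def scalar_mix(layers: np.ndarray, raw_weights: np.ndarray, gamma: float) -> np.ndarray:
    """gamma * sum_j softmax(raw_weights)_j * layers[j]  (Eq. 8 for one token)."""
    s = np.exp(raw_weights - raw_weights.max())
    s /= s.sum()                                 # s^task: softmax-normalized layer weights
    return gamma * np.tensordot(s, layers, axes=1)

L, D = 2, 4                                      # 2 biLM layers -> L + 1 = 3 representations
rng = np.random.default_rng(5)
layers = rng.normal(size=(L + 1, D))             # rows: h_{k,0}, h_{k,1}, h_{k,2}
elmo_k = scalar_mix(layers, raw_weights=np.zeros(L + 1), gamma=1.0)
print(np.allclose(elmo_k, layers.mean(axis=0)))  # equal raw weights -> plain average
```

Putting a large raw weight on one layer makes the mix select essentially that layer alone, so the task model can learn anything between a single-layer read-out and an average over all layers.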

Of late, there has been a surge of interest in pre-trained language models for a myriad of natural language tasks [43]. Language modeling is chosen as the pre-training objective, as it is widely considered to incorporate multiple traits of natural language understanding and generation. A good language model requires learning complex characteristics of language, involving syntactic properties and semantic coherence. Thus, it is believed that unsupervised training on such objectives infuses better linguistic knowledge into the networks than random initialization. The generative pre-training and discriminative fine-tuning procedure is also desirable, as the pre-training is unsupervised and does not require any manual labeling.

Radford et al. [44] proposed a similar pre-trained model, the OpenAI-GPT, by adapting the Transformer (see Section IV-E). Recently, Devlin et al. [45] proposed BERT, which utilizes a Transformer network to pre-train a language model for extracting contextual word embeddings. Unlike ELMo and OpenAI-GPT, BERT uses different pre-training tasks for language modeling. In one of the tasks, BERT randomly masks a percentage of words in the sentences and only predicts those masked words. In the other task, given a pair of sentences, BERT predicts whether the second sentence actually follows the first. This task in particular tries to model the relationship between two sentences, which is supposedly not captured by traditional bidirectional language models. Consequently, this particular pre-training scheme helps BERT to outperform state-of-the-art techniques by a large margin on key NLP tasks such as QA and natural language inference (NLI), where understanding the relation between two sentences is very important. We discuss the impact of these proposed models and the performance achieved by them in Section VIII-I.
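The masked-word objective can be sketched as a corruption step applied to a token sequence before the model sees it. The sketch below follows the recipe reported by Devlin et al.: select about 15% of the positions; replace 80% of the selected tokens with a `[MASK]` symbol, 10% with a random token, and leave 10% unchanged; compute the loss only at the selected positions. The function name, the toy vocabulary, whitespace tokenization, and the use of `None` to mark positions without a prediction target are assumptions for illustration (BERT itself operates on WordPiece sub-word units).

```python
import random

def mask_tokens(tokens, vocab, mask_rate=0.15, rng=None):
    """Return (corrupted_tokens, targets); targets[i] is None where no prediction is made."""
    rng = rng or random.Random()
    corrupted, targets = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_rate:
            continue                          # position not selected: no loss here
        targets[i] = tok                      # the model must recover the original token
        r = rng.random()
        if r < 0.8:
            corrupted[i] = "[MASK]"           # 80%: mask symbol
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: random replacement
        # remaining 10%: keep the original token unchanged
    return corrupted, targets

sentence = "the river overflowed the bank after the storm".split()
vocab = sorted(set(sentence))
corrupted, targets = mask_tokens(sentence, vocab, mask_rate=0.3, rng=random.Random(0))
print(corrupted)
print(targets)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot rely on seeing `[MASK]` to know where predictions are required, which matters because the symbol never appears during fine-tuning.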

The described approaches for contextual word embeddings promise better-quality representations for words. The pre-trained deep language models also provide a head start for downstream tasks in the form of transfer learning. This approach has been extremely popular in computer vision tasks. Whether similar trends will emerge in the NLP community, with researchers and practitioners preferring such models over traditional variants, remains to be seen.

III. CONVOLUTIONAL NEURAL NETWORKS

Following the popularization of word embeddings and their ability to represent words in a distributed space, the need arose for an effective feature function that extracts higher-level features from constituent words or n-grams. These abstract features would then be used for numerous NLP tasks such as sentiment analysis, summarization, machine translation, and question answering (QA). CNNs turned out to be the natural choice, given their effectiveness in computer vision tasks [46, 47, 48].


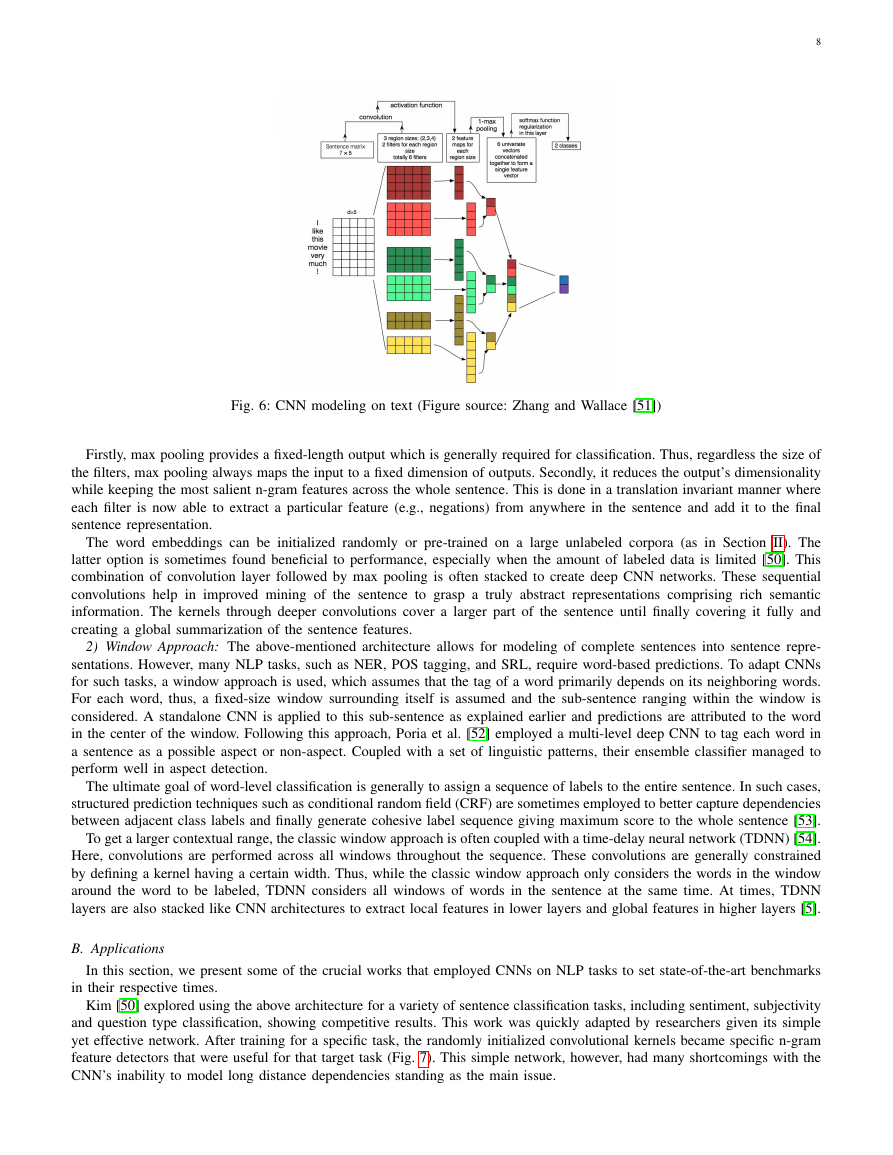

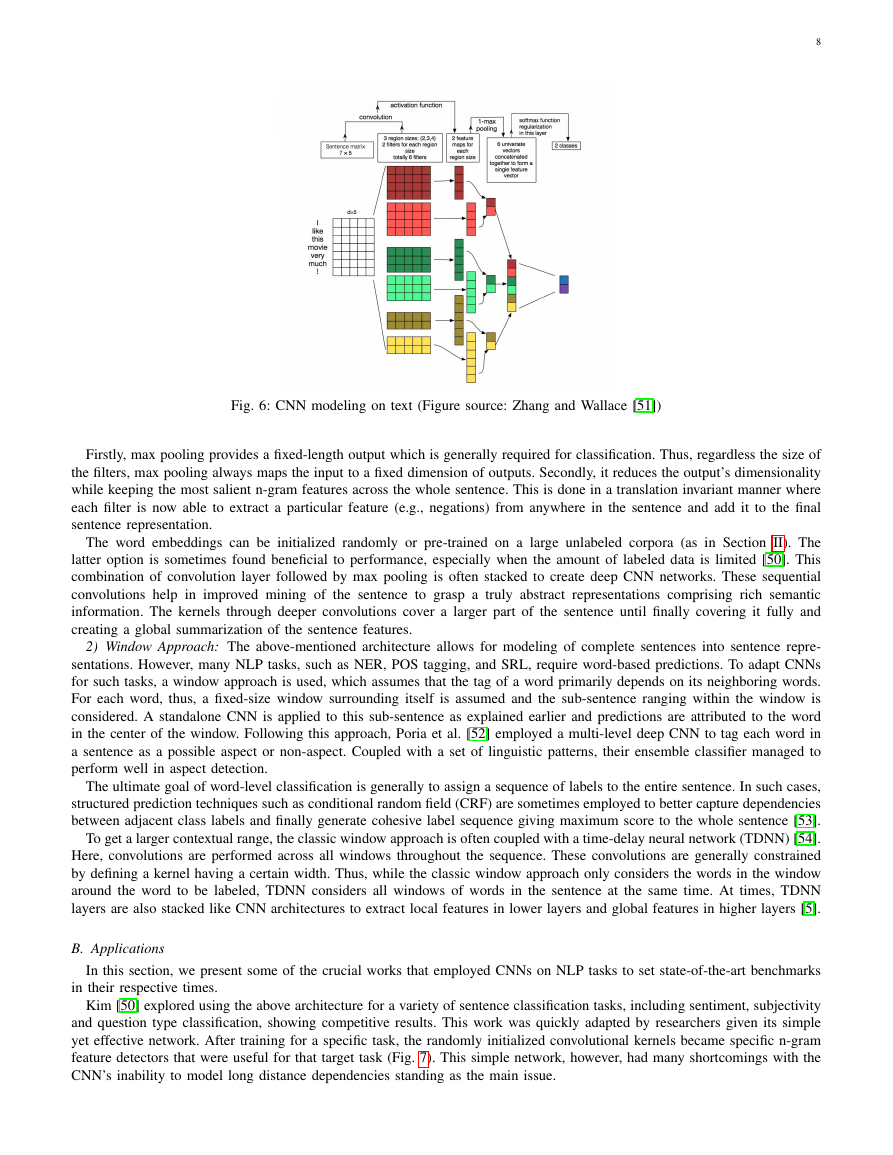

Fig. 5: CNN framework used to perform word wise class prediction (Figure source: Collobert and Weston [19])

The use of CNNs for sentence modeling traces back to Collobert and Weston [19]. This work used multi-task learning to output multiple predictions for NLP tasks such as POS tags, chunks, named-entity tags, semantic roles, semantically similar words, and a language model. A look-up table was used to transform each word into a vector of user-defined dimensions. Thus, an input sequence $\{s_1, s_2, \dots, s_n\}$ of $n$ words was transformed into a series of vectors $\{w_{s_1}, w_{s_2}, \dots, w_{s_n}\}$ by applying the look-up table to each of its words (Fig. 5).

This can be thought of as a primitive word embedding method whose weights were learned in the training of the network. In [5], Collobert et al. extended this work to propose a general CNN-based framework to solve a plethora of NLP tasks. Both these works triggered a huge popularization of CNNs amongst NLP researchers. Given that CNNs had already shown their mettle in computer vision tasks, researchers were readily convinced of their potential.

CNNs have the ability to extract salient n-gram features from the input sentence to create an informative latent semantic representation of the sentence for downstream tasks. This application was pioneered by Collobert et al. [5], Kalchbrenner et al. [49], and Kim [50], which led to a huge proliferation of CNN-based networks in the succeeding literature. Below, we describe the working of a simple CNN-based sentence modeling network:

A. Basic CNN

1) Sentence Modeling: For each sentence, let $w_i \in \mathbb{R}^d$ represent the word embedding for the $i$-th word in the sentence, where $d$ is the dimension of the word embedding. Given that a sentence has $n$ words, the sentence can now be represented as an embedding matrix $W \in \mathbb{R}^{n \times d}$. Fig. 6 depicts such a sentence as an input to the CNN framework.

Let $w_{i:i+j}$ refer to the concatenation of vectors $w_i, w_{i+1}, \dots, w_{i+j}$. Convolution is performed on this input embedding layer. It involves a filter $k \in \mathbb{R}^{hd}$, which is applied to a window of $h$ words to produce a new feature. For example, a feature $c_i$ is generated using the window of words $w_{i:i+h-1}$ by

$$c_i = f(w_{i:i+h-1} \cdot k^{\top} + b) \qquad (9)$$

Here, $b \in \mathbb{R}$ is the bias term and $f$ is a non-linear activation function, for example the hyperbolic tangent. The filter $k$ is applied to all possible windows using the same weights to create the feature map

$$c = [c_1, c_2, \dots, c_{n-h+1}] \qquad (10)$$

In a CNN, a number of convolutional filters, also called kernels (typically hundreds), of different widths slide over the entire word embedding matrix. Each kernel extracts a specific n-gram pattern. A convolution layer is usually followed by a max-pooling strategy, $\hat{c} = \max\{c\}$, which subsamples the input, typically by applying a max operation on each filter. There are two primary reasons for this strategy.


Fig. 6: CNN modeling on text (Figure source: Zhang and Wallace [51])

Firstly, max pooling provides a fixed-length output, which is generally required for classification. Thus, regardless of the sentence length and the size of the filters, max pooling always maps the input to a fixed dimension of outputs. Secondly, it reduces the output’s dimensionality while keeping the most salient n-gram features across the whole sentence. This is done in a translation-invariant manner, where each filter is now able to extract a particular feature (e.g., negations) from anywhere in the sentence and add it to the final sentence representation.
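Equations (9) and (10), followed by max-over-time pooling, fit in a few lines. The NumPy sketch below assumes a sentence matrix of shape (n, d), a bank of filters of a single width h stored as an array of shape (num_filters, h·d), and tanh as the activation $f$; the sizes and random values are placeholders. Filters of several widths would be applied separately and their pooled outputs concatenated.

```python
import numpy as np

def conv_max_pool(sent: np.ndarray, filters: np.ndarray, bias: np.ndarray, h: int) -> np.ndarray:
    """Eq. (9)-(10) for every filter, followed by max-over-time pooling.

    sent:    (n, d) word embeddings of one sentence, with n >= h
    filters: (num_filters, h * d) flattened filters k
    bias:    (num_filters,) bias terms b
    returns: (num_filters,) pooled features c_hat
    """
    n, d = sent.shape
    # w_{i:i+h-1}: concatenate h consecutive word vectors for each of the n - h + 1 windows.
    windows = np.stack([sent[i:i + h].reshape(-1) for i in range(n - h + 1)])  # (n-h+1, h*d)
    feature_maps = np.tanh(windows @ filters.T + bias)   # c_i for every window and filter
    return feature_maps.max(axis=0)                       # c_hat = max{c} per filter

rng = np.random.default_rng(6)
n, d, h, num_filters = 7, 5, 3, 4
sentence = rng.normal(size=(n, d))
filters = rng.normal(scale=0.1, size=(num_filters, h * d))
bias = np.zeros(num_filters)

pooled = conv_max_pool(sentence, filters, bias, h)
print(pooled.shape)   # (4,): one value per filter, independent of sentence length n
```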

The word embeddings can be initialized randomly or pre-trained on a large unlabeled corpus (as in Section II). The latter option is sometimes found beneficial to performance, especially when the amount of labeled data is limited [50]. This combination of a convolution layer followed by max pooling is often stacked to create deep CNN networks. These sequential convolutions help in improved mining of the sentence to grasp truly abstract representations comprising rich semantic information. The kernels in deeper convolutions cover a larger part of the sentence until finally covering it fully and creating a global summarization of the sentence features.

2) Window Approach: The above-mentioned architecture allows for modeling complete sentences into sentence representations. However, many NLP tasks, such as NER, POS tagging, and SRL, require word-based predictions. To adapt CNNs for such tasks, a window approach is used, which assumes that the tag of a word primarily depends on its neighboring words. For each word, thus, a fixed-size window surrounding it is assumed, and the sub-sentence within the window is considered. A standalone CNN is applied to this sub-sentence as explained earlier, and predictions are attributed to the word in the center of the window. Following this approach, Poria et al. [52] employed a multi-level deep CNN to tag each word in a sentence as a possible aspect or non-aspect. Coupled with a set of linguistic patterns, their ensemble classifier managed to perform well in aspect detection.
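The window approach can be sketched as a function that, for every word, extracts the fixed-size window centered on it, padding the sentence edges so that the first and last words also receive full windows. The padding symbol `<PAD>`, the function name, and the half-width parameter are assumptions for illustration; each returned window would then be fed to a CNN, and the prediction assigned to the center word.

```python
def word_windows(tokens, half_width=2, pad="<PAD>"):
    """Return one window of size 2 * half_width + 1 per token, centered on that token."""
    padded = [pad] * half_width + list(tokens) + [pad] * half_width
    size = 2 * half_width + 1
    # Window i starts at padded index i, so its center (index i + half_width) is tokens[i].
    return [padded[i:i + size] for i in range(len(tokens))]

for window in word_windows("the camera has great battery life".split(), half_width=1):
    print(window)
```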

The ultimate goal of word-level classification is generally to assign a sequence of labels to the entire sentence. In such cases, structured prediction techniques such as conditional random fields (CRF) are sometimes employed to better capture dependencies between adjacent class labels and finally generate a cohesive label sequence giving the maximum score to the whole sentence [53].

To get a larger contextual range, the classic window approach is often coupled with a time-delay neural network (TDNN) [54]. Here, convolutions are performed across all windows throughout the sequence. These convolutions are generally constrained by defining a kernel having a certain width. Thus, while the classic window approach only considers the words in the window around the word to be labeled, TDNN considers all windows of words in the sentence at the same time. At times, TDNN layers are also stacked like CNN architectures to extract local features in lower layers and global features in higher layers [5].

B. Applications

In this section, we present some of the crucial works that employed CNNs on NLP tasks to set state-of-the-art benchmarks in their respective times.

Kim [50] explored using the above architecture for a variety of sentence classification tasks, including sentiment, subjectivity, and question type classification, showing competitive results. This work was quickly adopted by researchers given its simple yet effective network. After training for a specific task, the randomly initialized convolutional kernels became specific n-gram feature detectors that were useful for that target task (Fig. 7). This simple network, however, had many shortcomings, the main one being the CNN’s inability to model long-distance dependencies.
