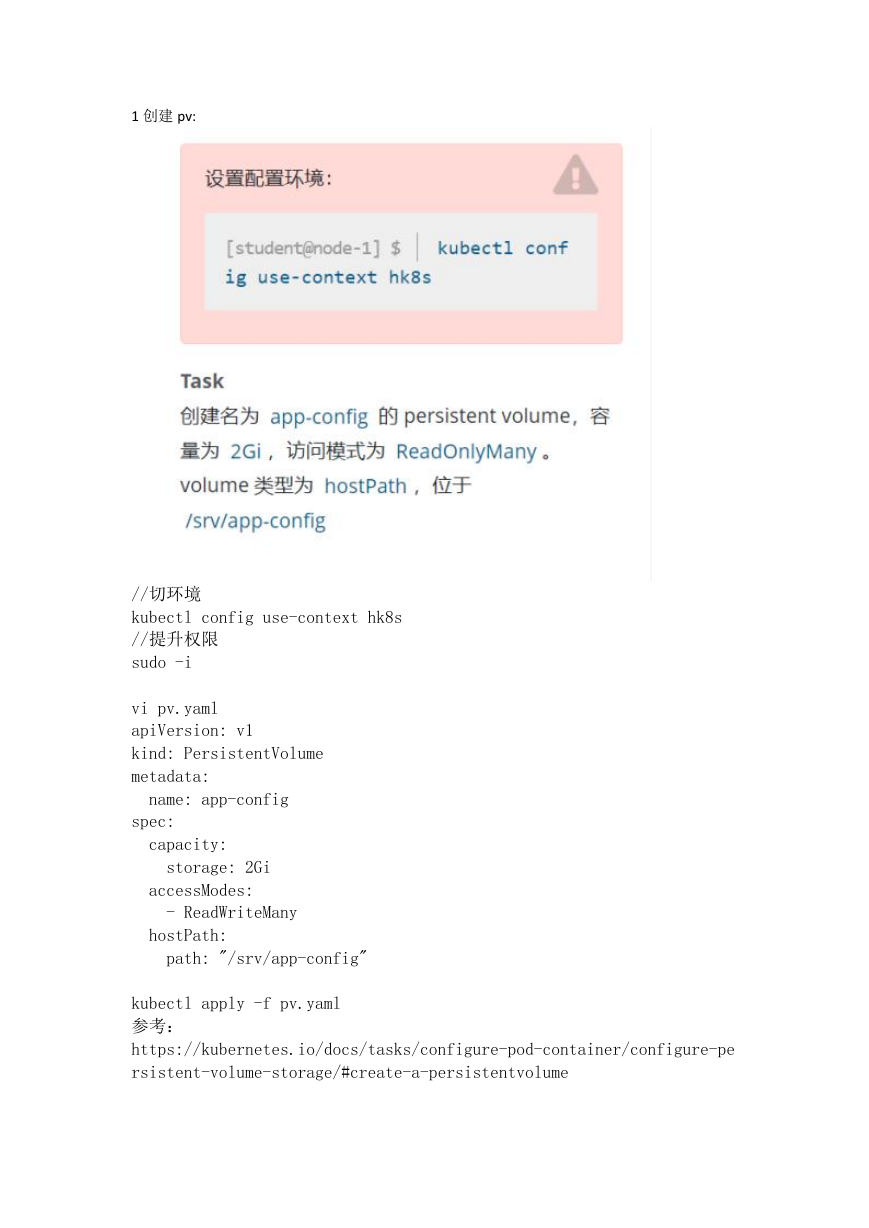

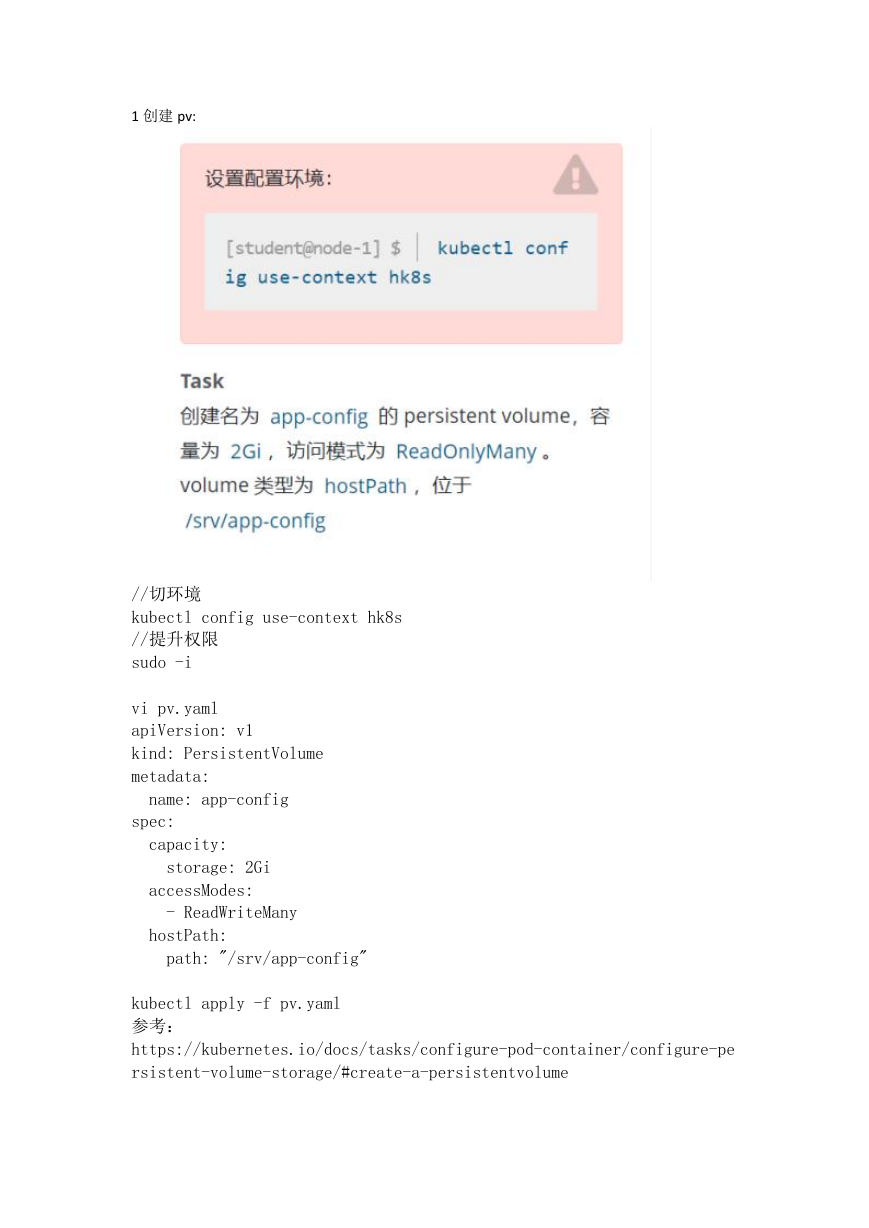

1. Create a PV:

// Switch context

kubectl config use-context hk8s

// Elevate privileges

sudo -i

vi pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-config
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: "/srv/app-config"
```

kubectl apply -f pv.yaml

Reference:

https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/#create-a-persistentvolume
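Because indentation carries the structure of a manifest, it can help to see the same PersistentVolume as nested data. The sketch below uses a hypothetical helper, pv_manifest (not part of any Kubernetes tooling), that builds the manifest above as a Python dict and prints it as JSON; kubectl apply -f accepts JSON manifests as well as YAML.

```python
import json


def pv_manifest(name: str, size: str, host_path: str) -> dict:
    """Build a hostPath PersistentVolume manifest (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            # capacity, accessModes and hostPath are siblings under spec
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteMany"],
            "hostPath": {"path": host_path},
        },
    }


if __name__ == "__main__":
    manifest = pv_manifest("app-config", "2Gi", "/srv/app-config")
    # Save the output as pv.json and apply it with: kubectl apply -f pv.json
    print(json.dumps(manifest, indent=2))
```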


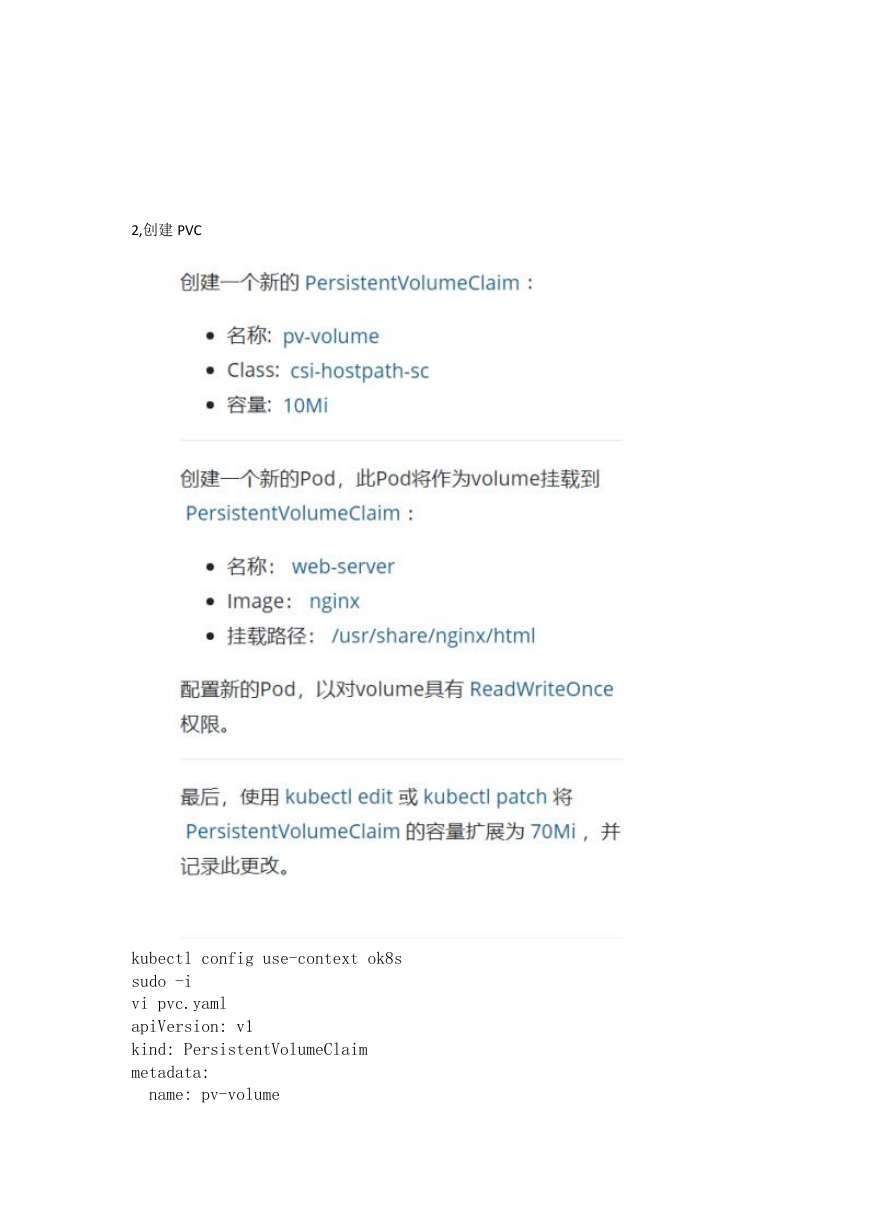

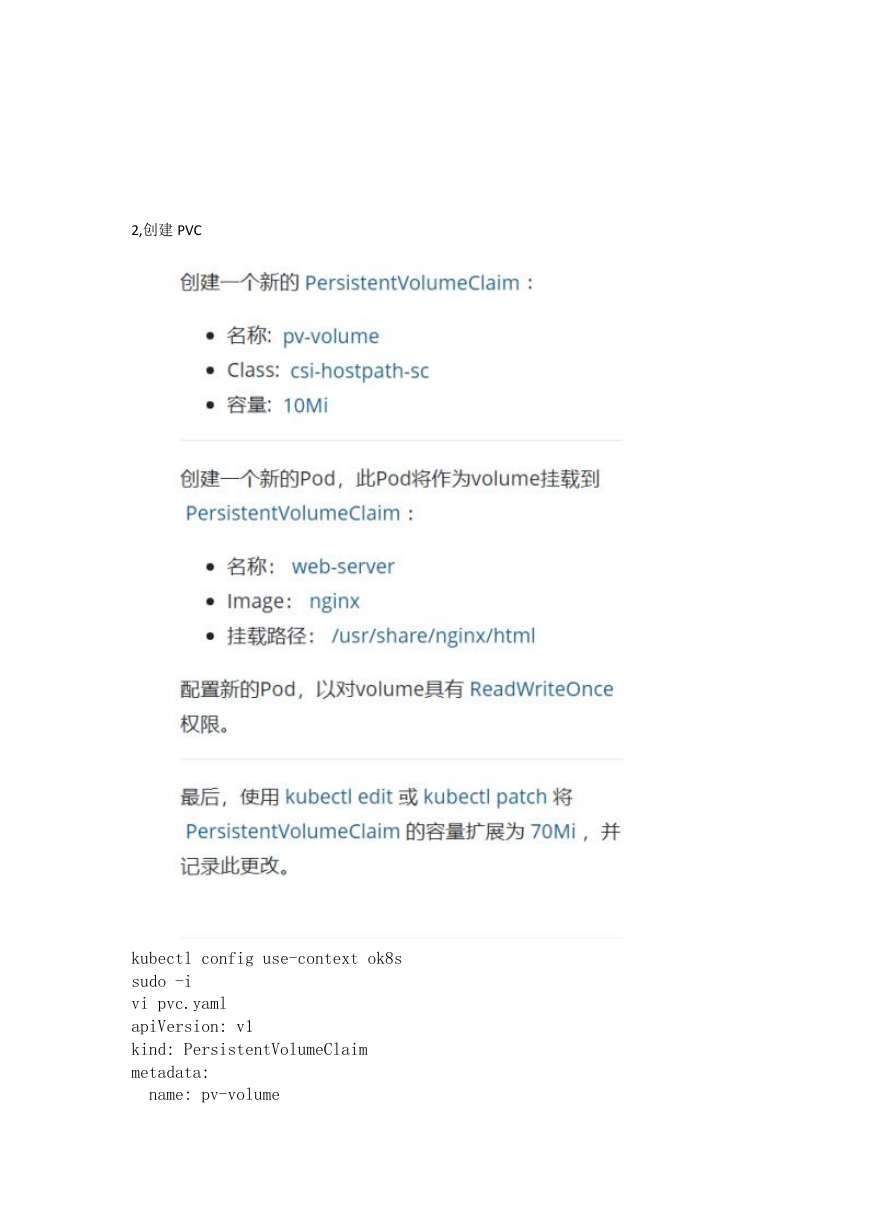

2. Create a PVC

kubectl config use-context ok8s

sudo -i

vi pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pv-volume
spec:
  storageClassName: csi-hostpath-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi
```

kubectl apply -f pvc.yaml

https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/#create-a-persistentvolumeclaim

vi pod-pvc.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-server
spec:
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: pv-volume
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage
```

kubectl apply -f pod-pvc.yaml

https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/#create-a-pod
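In the Pod spec, each volumeMounts[].name must match a volumes[].name declared in the same Pod (here task-pv-storage), otherwise the API server rejects the Pod. A minimal sketch of that cross-reference check, assuming the manifest has already been loaded into a dict; undeclared_mounts is a hypothetical helper, not a kubectl feature.

```python
def undeclared_mounts(pod: dict) -> list:
    """Return volumeMount names that do not match any declared Pod volume."""
    spec = pod.get("spec", {})
    declared = {v["name"] for v in spec.get("volumes", [])}
    missing = []
    for container in spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append(mount["name"])
    return missing


# The web-server Pod from pod-pvc.yaml, expressed as a dict.
web_server = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-server"},
    "spec": {
        "volumes": [
            {"name": "task-pv-storage",
             "persistentVolumeClaim": {"claimName": "pv-volume"}},
        ],
        "containers": [
            {
                "name": "nginx",
                "image": "nginx",
                "ports": [{"containerPort": 80, "name": "http-server"}],
                "volumeMounts": [
                    {"mountPath": "/usr/share/nginx/html",
                     "name": "task-pv-storage"},
                ],
            }
        ],
    },
}

print(undeclared_mounts(web_server))  # → []
```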

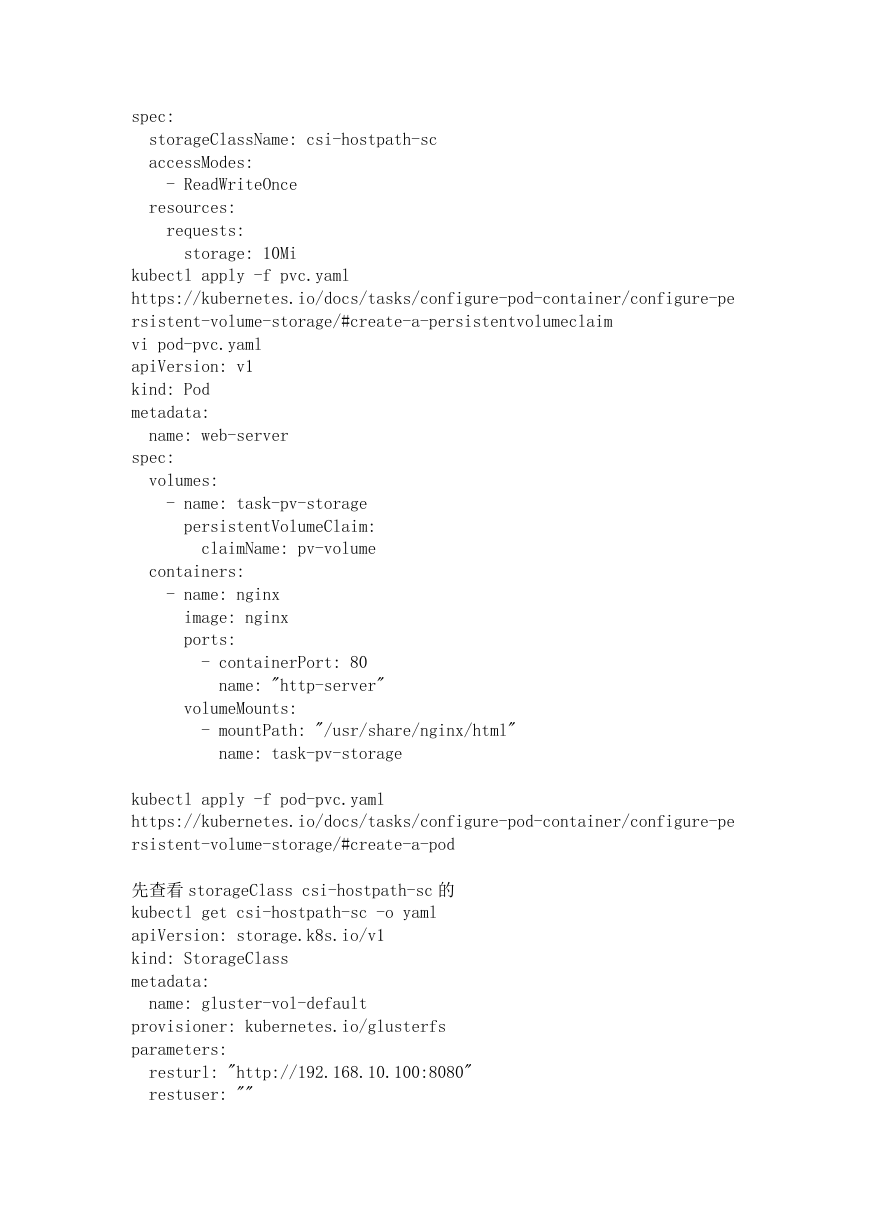

First check whether the StorageClass csi-hostpath-sc allows volume expansion:

kubectl get storageclass csi-hostpath-sc -o yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: gluster-vol-default

provisioner: kubernetes.io/glusterfs

parameters:

resturl: "http://192.168.10.100:8080"

restuser: ""

�

secretNamespace: ""

secretName: ""

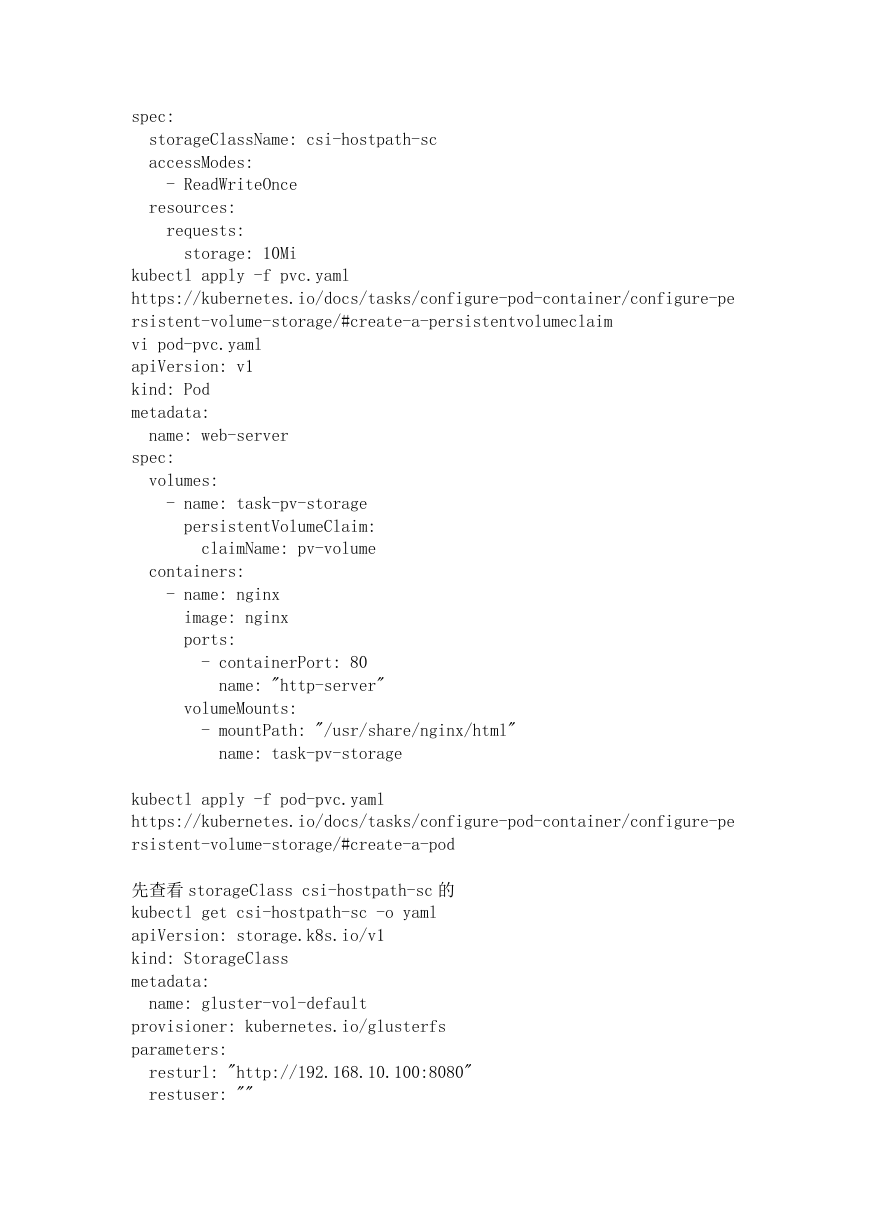

allowVolumeExpansion: true

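If the StorageClass already has allowVolumeExpansion: true, the PVC can be resized directly. If the field is missing, add it by editing the StorageClass:

kubectl edit storageclass csi-hostpath-sc

Then expand the PVC to 70Mi and record the change: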

kubectl patch pvc pv-volume -p '{"spec":{"resources":{"requests":{"storage":"70Mi"}}}}' --record
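PVC resizing only works in one direction: the new request must be larger than the current one, and the StorageClass must have allowVolumeExpansion: true. The sketch below parses storage quantities (only plain integers with the binary Ki/Mi/Gi/Ti and decimal k/M/G/T suffixes, a simplification of the full Kubernetes quantity format) and builds the same patch body used with kubectl patch. parse_quantity and resize_patch are hypothetical helper names.

```python
import json

# Multipliers for the suffixes handled here (a subset of Kubernetes quantities).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '70Mi' or '2Gi' to bytes."""
    # Try two-letter suffixes first so 'Mi' is not mistaken for 'M'.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return int(q)


def resize_patch(storage_class: dict, current: str, requested: str) -> str:
    """Return a PVC resize patch body, refusing unsupported changes."""
    if storage_class.get("allowVolumeExpansion") is not True:
        raise ValueError("StorageClass does not allow volume expansion")
    if parse_quantity(requested) <= parse_quantity(current):
        raise ValueError("PVCs can only be expanded, not shrunk")
    return json.dumps({"spec": {"resources": {"requests": {"storage": requested}}}})


sc = {"kind": "StorageClass", "allowVolumeExpansion": True}
print(resize_patch(sc, "10Mi", "70Mi"))
# → {"spec": {"resources": {"requests": {"storage": "70Mi"}}}}
```

The printed string is the value passed to -p in the kubectl patch pvc pv-volume command.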

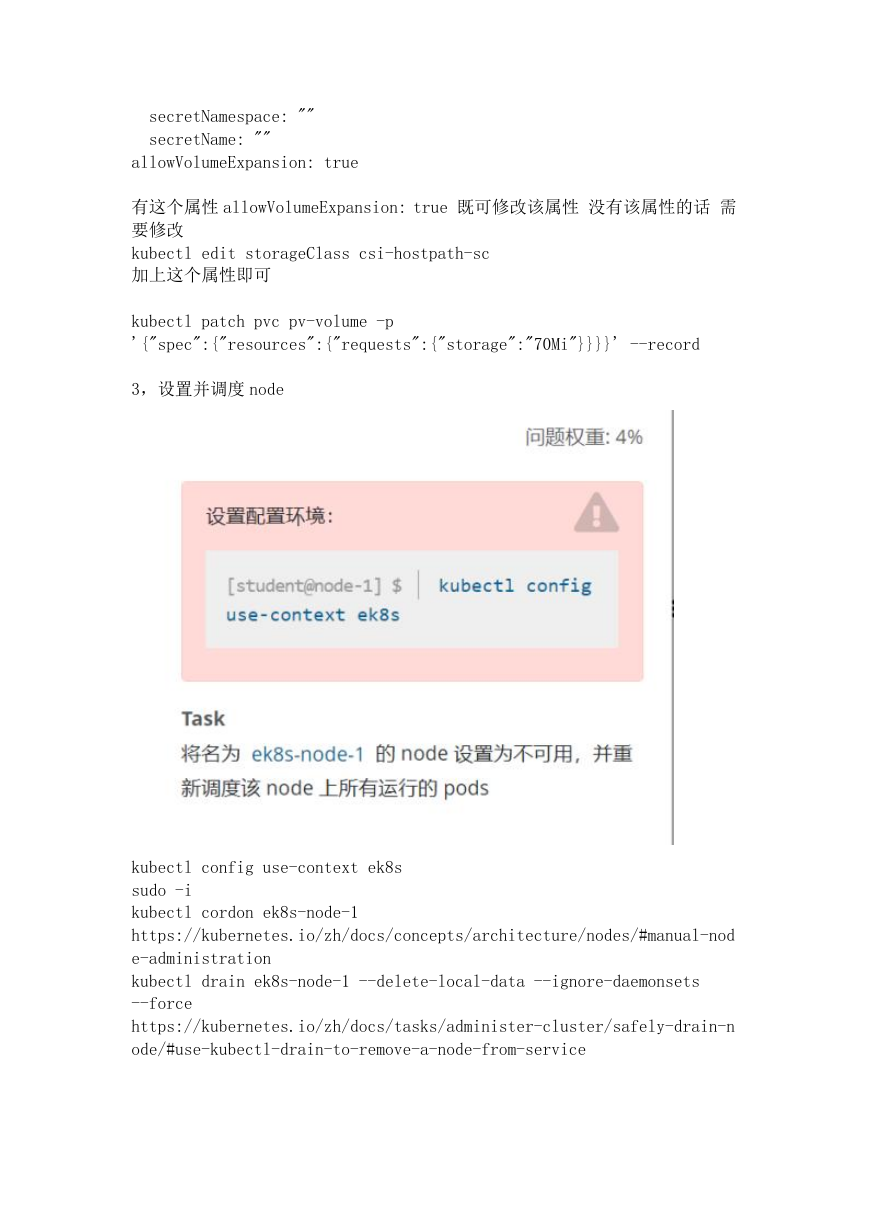

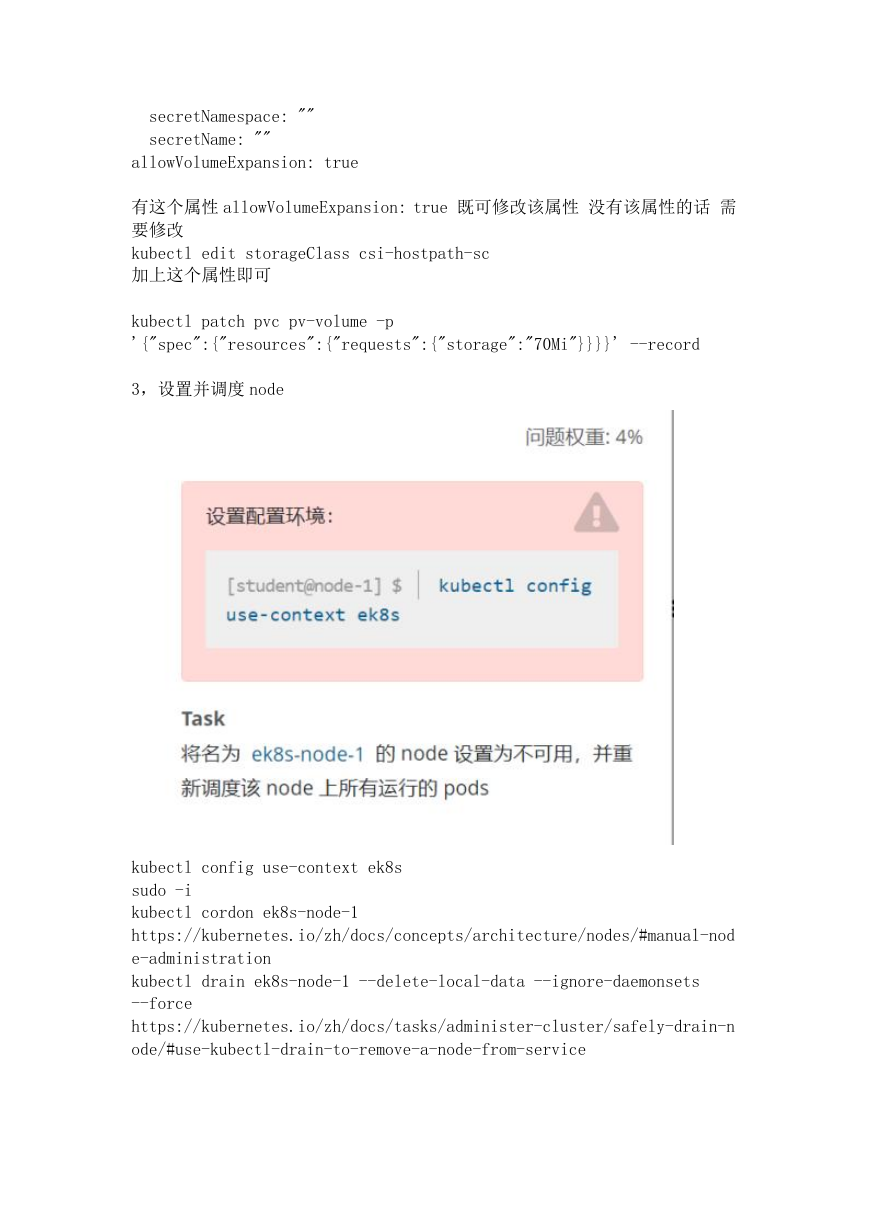

3. Mark a node unschedulable and drain it

kubectl config use-context ek8s

sudo -i

kubectl cordon ek8s-node-1

https://kubernetes.io/zh/docs/concepts/architecture/nodes/#manual-node-administration

kubectl drain ek8s-node-1 --delete-local-data --ignore-daemonsets --force

(Newer kubectl releases rename --delete-local-data to --delete-emptydir-data.)

https://kubernetes.io/zh/docs/tasks/administer-cluster/safely-drain-node/#use-kubectl-drain-to-remove-a-node-from-service
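The drain flags matter because kubectl drain refuses to proceed when it finds certain kinds of Pods. The following is a simplified model of that check, not kubectl's actual code: DaemonSet-managed Pods need --ignore-daemonsets (they are then left in place rather than evicted), Pods using emptyDir local storage need --delete-local-data, and Pods with no managing controller need --force. The Pod dataclass and drain_blockers function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    daemonset_managed: bool = False
    uses_emptydir: bool = False
    has_controller: bool = True


def drain_blockers(pods, ignore_daemonsets=False,
                   delete_local_data=False, force=False):
    """Return (pod name, reason) pairs that would stop a drain (simplified)."""
    blockers = []
    for pod in pods:
        if pod.daemonset_managed:
            # With --ignore-daemonsets these Pods are skipped, not evicted.
            if not ignore_daemonsets:
                blockers.append((pod.name, "DaemonSet-managed"))
            continue
        if pod.uses_emptydir and not delete_local_data:
            blockers.append((pod.name, "uses emptyDir local storage"))
        if not pod.has_controller and not force:
            blockers.append((pod.name, "not managed by a controller"))
    return blockers


pods = [
    Pod("kube-proxy-abc", daemonset_managed=True),
    Pod("cache-0", uses_emptydir=True),
    Pod("debug", has_controller=False),
]
print(drain_blockers(pods))
print(drain_blockers(pods, ignore_daemonsets=True,
                     delete_local_data=True, force=True))  # → []
```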


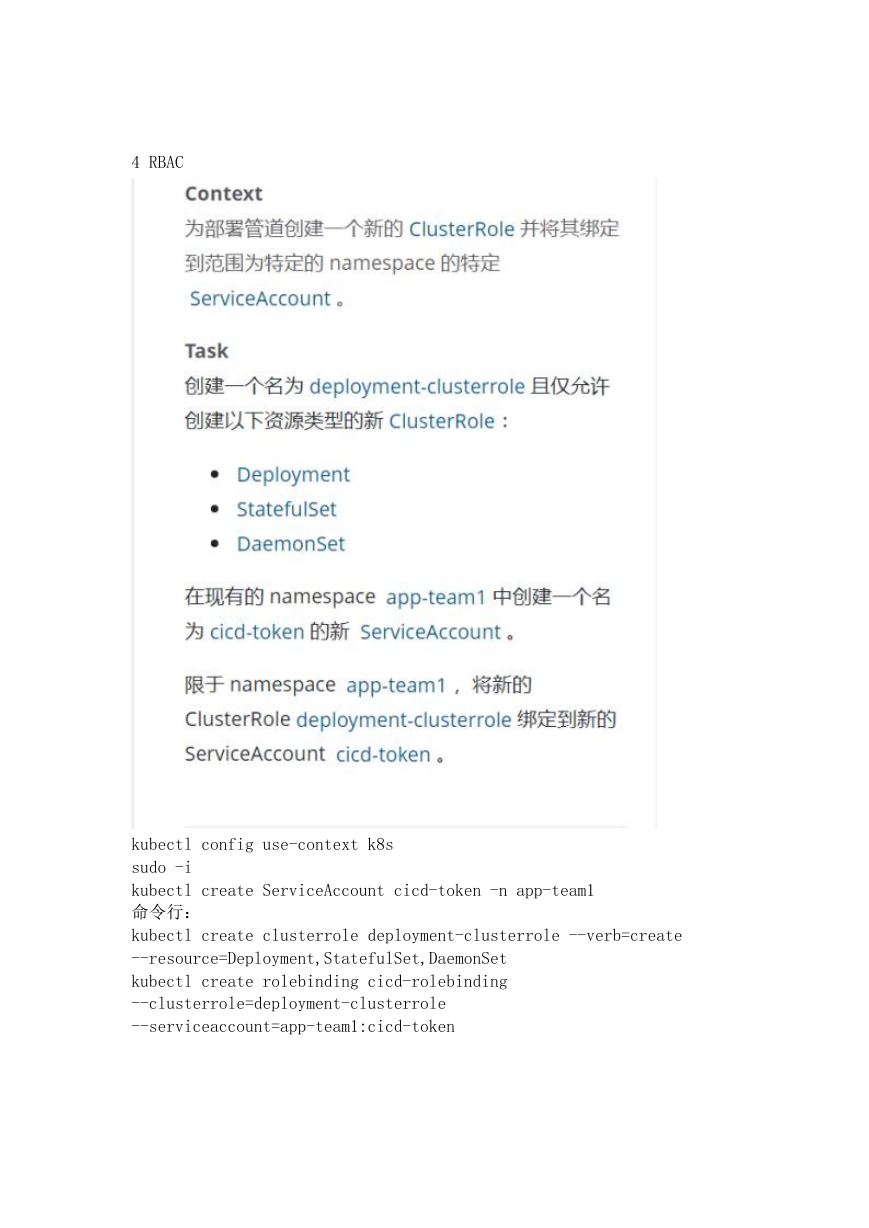

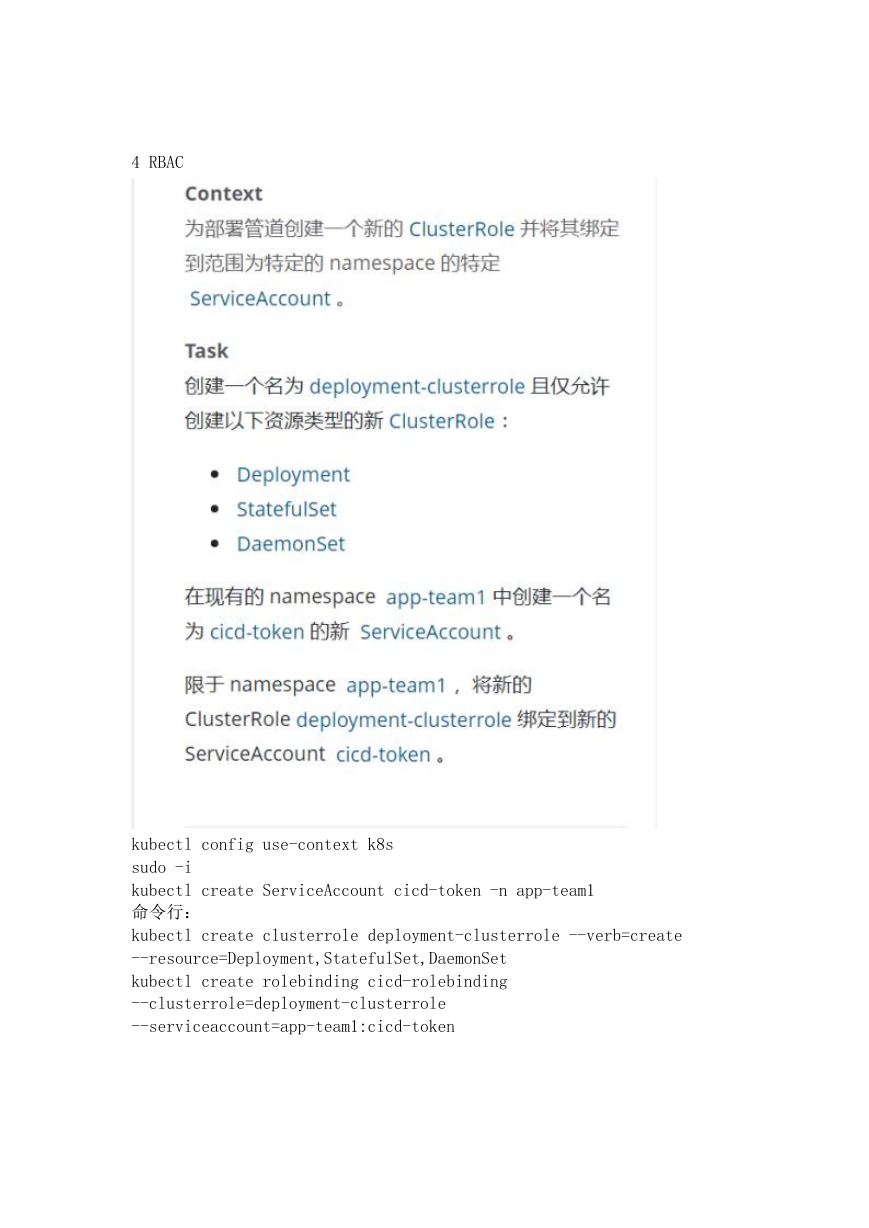

4. RBAC

kubectl config use-context k8s

sudo -i

kubectl create serviceaccount cicd-token -n app-team1

Using the command line:

kubectl create clusterrole deployment-clusterrole --verb=create --resource=Deployment,StatefulSet,DaemonSet

kubectl create rolebinding cicd-rolebinding --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token -n app-team1
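A ClusterRole referenced from a RoleBinding grants its rules only inside the RoleBinding's namespace, and a ServiceAccount is authenticated as the user system:serviceaccount:<namespace>:<name>. The sketch below models that authorization decision for this task; it is a simplification (no API groups, wildcards or aggregation), and RoleBinding, sa_user and can_i are hypothetical names. On a real cluster the equivalent check is kubectl auth can-i create deployments --as=system:serviceaccount:app-team1:cicd-token -n app-team1.

```python
from dataclasses import dataclass, field


def sa_user(namespace: str, name: str) -> str:
    """Username the API server uses for a ServiceAccount."""
    return f"system:serviceaccount:{namespace}:{name}"


@dataclass
class RoleBinding:
    namespace: str
    subjects: set
    # verb -> resources, copied from the referenced ClusterRole's rules
    rules: dict = field(default_factory=dict)


def can_i(binding: RoleBinding, user: str, verb: str,
          resource: str, namespace: str) -> bool:
    """Simplified RBAC check against a single RoleBinding."""
    return (
        namespace == binding.namespace  # a RoleBinding is namespace-scoped
        and user in binding.subjects
        and resource in binding.rules.get(verb, set())
    )


cicd = RoleBinding(
    namespace="app-team1",
    subjects={sa_user("app-team1", "cicd-token")},
    rules={"create": {"deployments", "statefulsets", "daemonsets"}},
)
user = sa_user("app-team1", "cicd-token")
print(can_i(cicd, user, "create", "deployments", "app-team1"))  # → True
print(can_i(cicd, user, "create", "deployments", "default"))    # → False
print(can_i(cicd, user, "delete", "deployments", "app-team1"))  # → False
```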


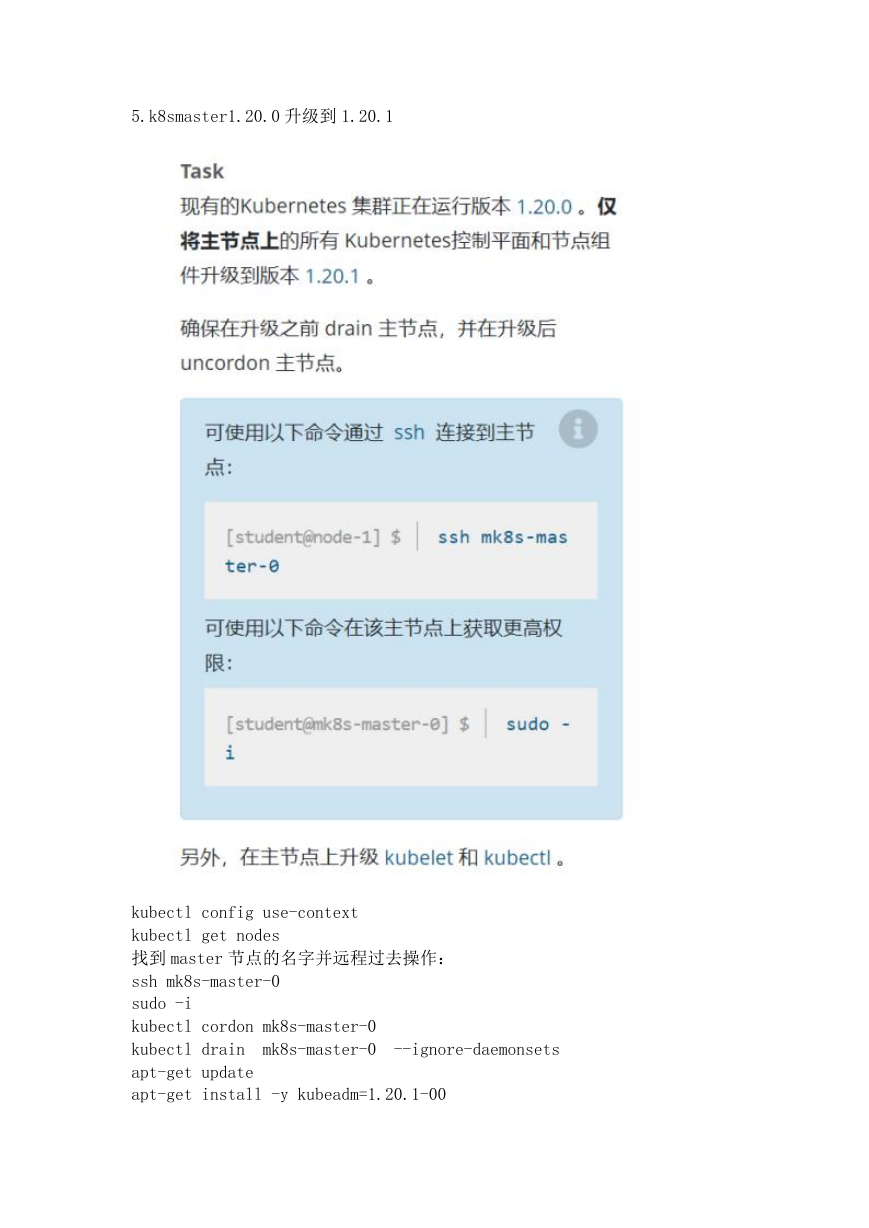

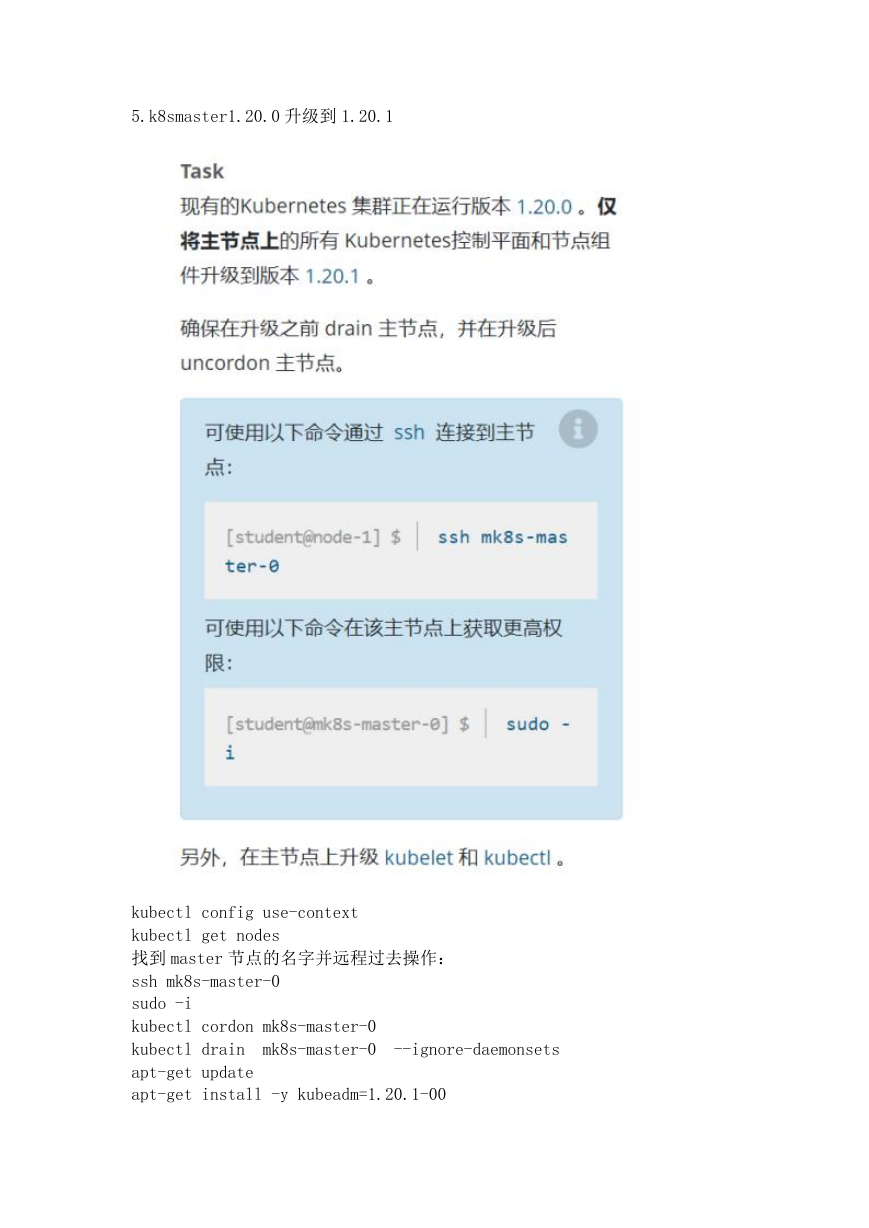

5. Upgrade the Kubernetes master from 1.20.0 to 1.20.1

kubectl config use-context

kubectl get nodes

Find the name of the master node and SSH into it:

ssh mk8s-master-0

sudo -i

kubectl cordon mk8s-master-0

kubectl drain mk8s-master-0 --ignore-daemonsets

apt-get update

apt-get install -y kubeadm=1.20.1-00


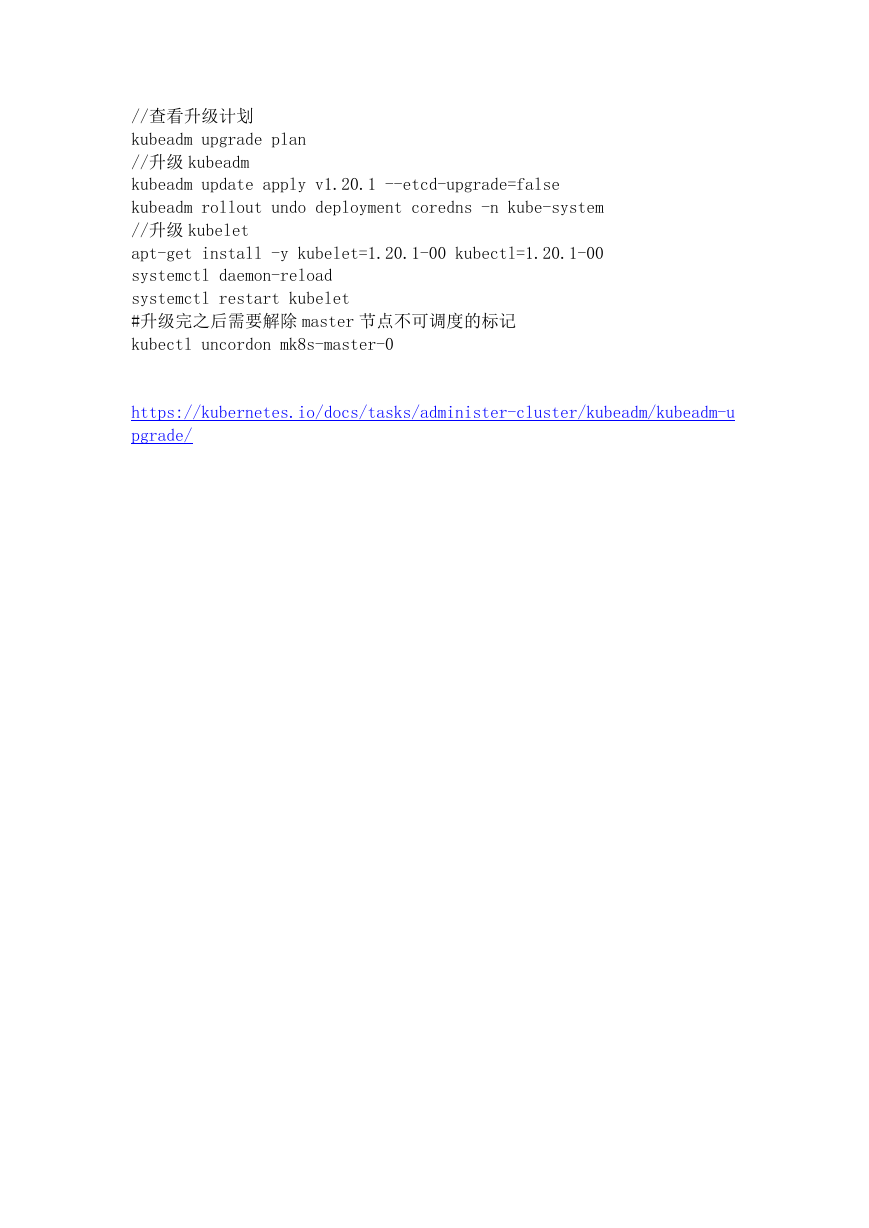

// Check the upgrade plan

kubeadm upgrade plan

// Apply the upgrade with kubeadm

kubeadm upgrade apply v1.20.1 --etcd-upgrade=false

// Roll the CoreDNS Deployment back to its previous revision

kubectl rollout undo deployment coredns -n kube-system

// Upgrade kubelet and kubectl

apt-get install -y kubelet=1.20.1-00 kubectl=1.20.1-00

systemctl daemon-reload

systemctl restart kubelet

# After the upgrade, mark the master node schedulable again

kubectl uncordon mk8s-master-0

https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/
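kubeadm does not support skipping minor versions during an upgrade; a patch upgrade within one minor release, such as 1.20.0 to 1.20.1, is fine. The notes also pin packages with the -00 revision suffix (other package repositories may use a different suffix). A minimal sketch of both rules; parse_version, is_supported_upgrade and apt_pin are hypothetical helpers.

```python
def parse_version(v: str) -> tuple:
    """Turn '1.20.1' or 'v1.20.1' into (1, 20, 1)."""
    major, minor, patch = v.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def is_supported_upgrade(current: str, target: str) -> bool:
    """True if target is newer and at most one minor version ahead."""
    cur, tgt = parse_version(current), parse_version(target)
    return (
        tgt > cur
        and tgt[0] == cur[0]
        and tgt[1] - cur[1] <= 1
    )


def apt_pin(version: str, revision: str = "00") -> str:
    """Package version string such as '1.20.1-00' (revision is an assumption)."""
    return f"{version.lstrip('v')}-{revision}"


print(is_supported_upgrade("1.20.0", "1.20.1"))  # → True
print(is_supported_upgrade("1.20.0", "1.22.0"))  # → False
print("kubeadm=" + apt_pin("v1.20.1"))           # → kubeadm=1.20.1-00
```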


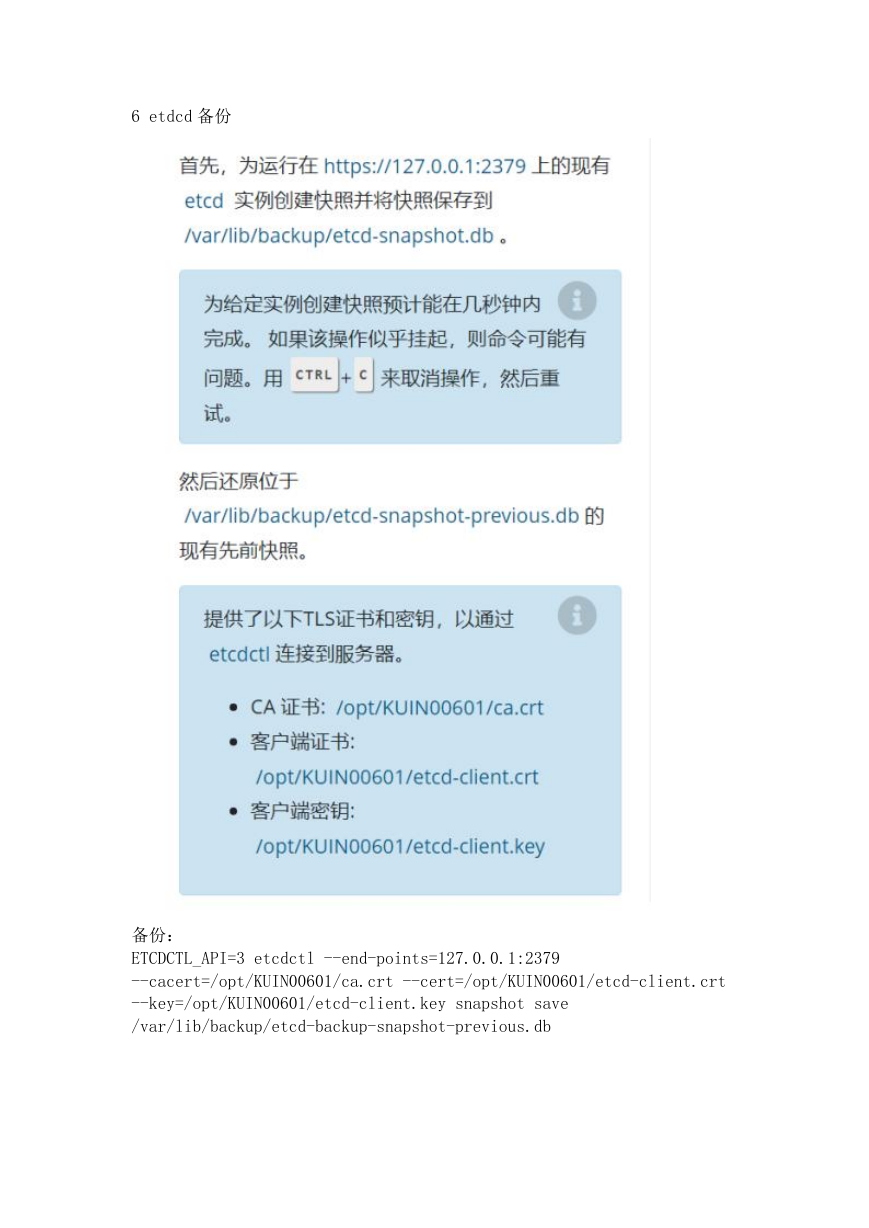

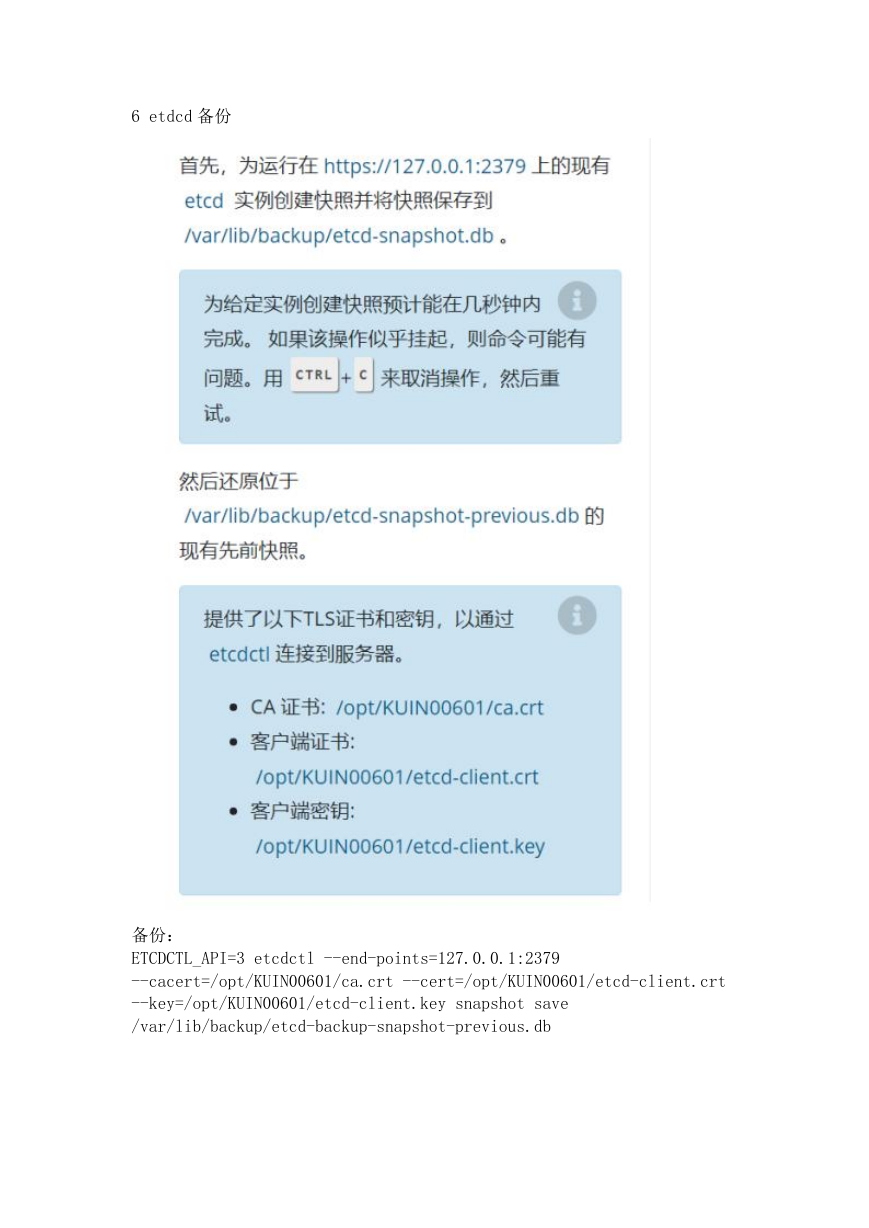

6. etcd backup

Backup:

ETCDCTL_API=3 etcdctl --endpoints=127.0.0.1:2379 --cacert=/opt/KUIN00601/ca.crt --cert=/opt/KUIN00601/etcd-client.crt --key=/opt/KUIN00601/etcd-client.key snapshot save /var/lib/backup/etcd-backup-snapshot-previous.db
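The etcdctl flag is --endpoints (plural), and ETCDCTL_API=3 must be set in the environment of the etcdctl process. A sketch that assembles the backup command as an argument list so the flags can be checked before running it; snapshot_save_cmd is a hypothetical helper, and the certificate and snapshot paths are the ones from the notes.

```python
import os
import shlex


def snapshot_save_cmd(endpoint, cacert, cert, key, dest):
    """Build (env, argv) for an etcd v3 snapshot backup."""
    env = {**os.environ, "ETCDCTL_API": "3"}
    argv = [
        "etcdctl",
        f"--endpoints={endpoint}",
        f"--cacert={cacert}",
        f"--cert={cert}",
        f"--key={key}",
        "snapshot", "save", dest,
    ]
    return env, argv


env, argv = snapshot_save_cmd(
    "127.0.0.1:2379",
    "/opt/KUIN00601/ca.crt",
    "/opt/KUIN00601/etcd-client.crt",
    "/opt/KUIN00601/etcd-client.key",
    "/var/lib/backup/etcd-backup-snapshot-previous.db",
)
# Print the equivalent shell command for review.
print("ETCDCTL_API=3 " + shlex.join(argv))

# On the exam host it could then be run with the standard library:
# subprocess.run(argv, env=env, check=True)
```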

