Cover

Title Page

Copyright and Credits

Contributors

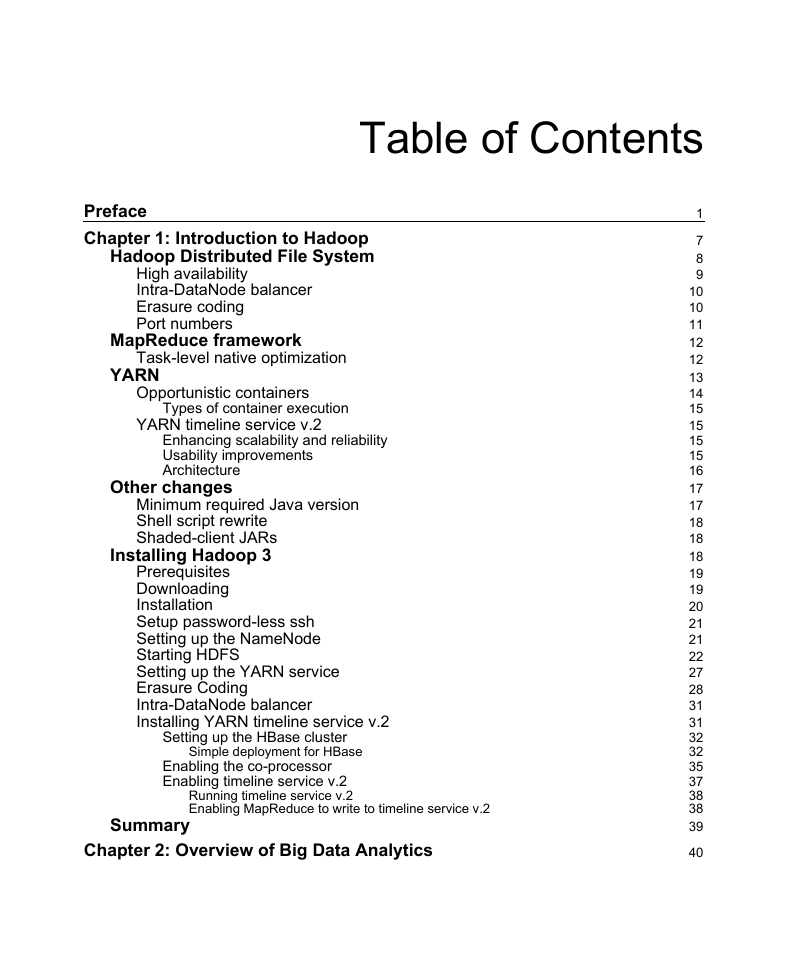

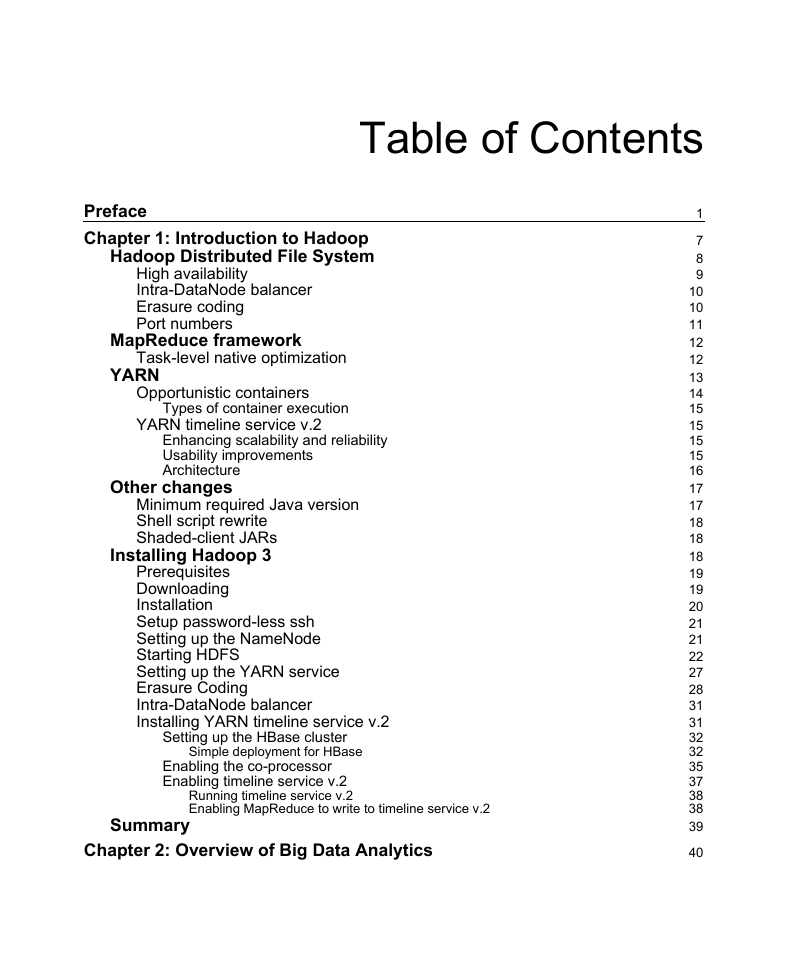

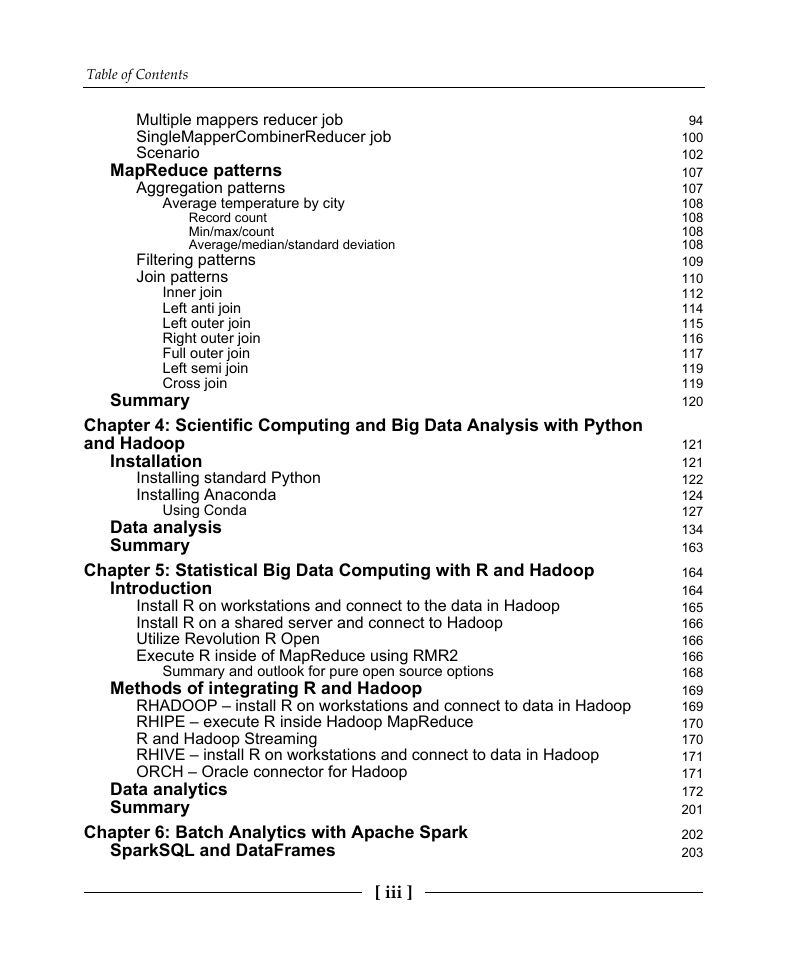

Table of Contents

Preface

Chapter 1: Introduction to Hadoop

Hadoop Distributed File System

High availability

Intra-DataNode balancer

Erasure coding

Port numbers

MapReduce framework

Task-level native optimization

YARN

Opportunistic containers

Types of container execution

YARN timeline service v.2

Enhancing scalability and reliability

Usability improvements

Architecture

Other changes

Minimum required Java version

Shell script rewrite

Shaded-client JARs

Installing Hadoop 3

Prerequisites

Downloading

Installation

Setting up password-less SSH

Setting up the NameNode

Starting HDFS

Setting up the YARN service

Erasure coding

Intra-DataNode balancer

Installing YARN timeline service v.2

Setting up the HBase cluster

Simple deployment for HBase

Enabling the co-processor

Enabling timeline service v.2

Running timeline service v.2

Enabling MapReduce to write to timeline service v.2

Summary

Chapter 2: Overview of Big Data Analytics

Introduction to data analytics

Inside the data analytics process

Introduction to big data

Variety of data

Velocity of data

Volume of data

Veracity of data

Variability of data

Visualization

Value

Distributed computing using Apache Hadoop

The MapReduce framework

Hive

Downloading and extracting the Hive binaries

Installing Derby

Using Hive

Creating a database

Creating a table

SELECT statement syntax

WHERE clauses

INSERT statement syntax

Primitive types

Complex types

Built-in operators and functions

Built-in operators

Built-in functions

Language capabilities

A cheat sheet on retrieving information

Apache Spark

Visualization using Tableau

Summary

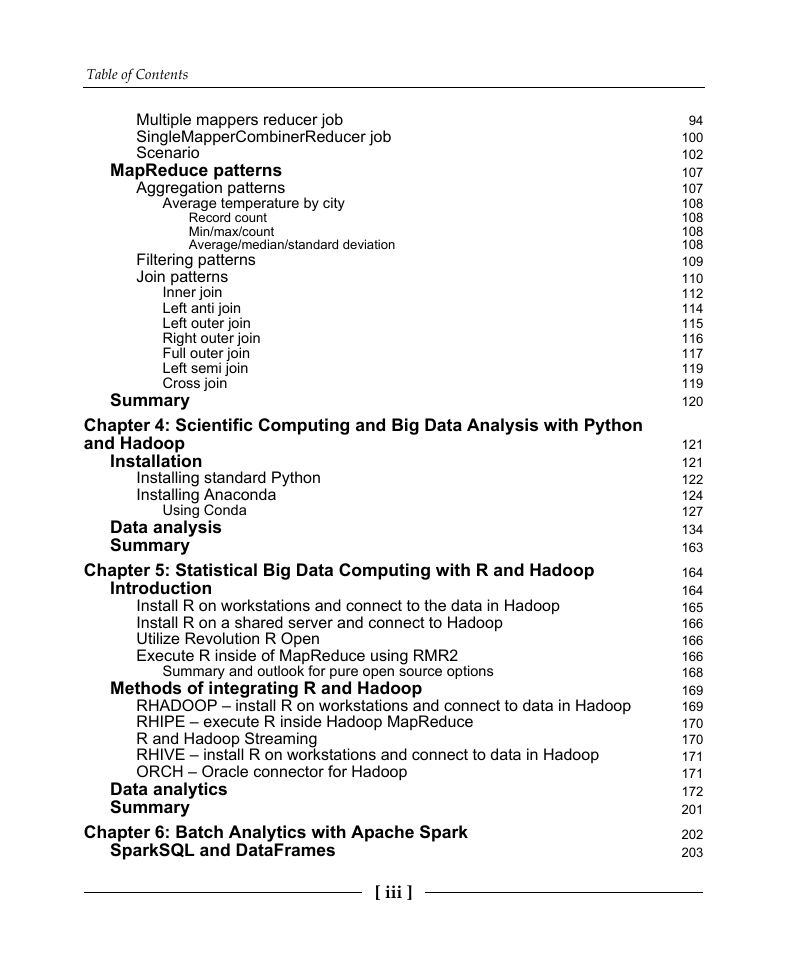

Chapter 3: Big Data Processing with MapReduce

The MapReduce framework

Dataset

Record reader

Map

Combiner

Partitioner

Shuffle and sort

Reduce

Output format

MapReduce job types

Single mapper job

Single mapper reducer job

Multiple mappers reducer job

SingleMapperCombinerReducer job

Scenario

MapReduce patterns

Aggregation patterns

Average temperature by city

Record count

Min/max/count

Average/median/standard deviation

Filtering patterns

Join patterns

Inner join

Left anti join

Left outer join

Right outer join

Full outer join

Left semi join

Cross join

Summary

Chapter 4: Scientific Computing and Big Data Analysis with Python and Hadoop

Installation

Installing standard Python

Installing Anaconda

Using Conda

Data analysis

Summary

Chapter 5: Statistical Big Data Computing with R and Hadoop

Introduction

Install R on workstations and connect to the data in Hadoop

Install R on a shared server and connect to Hadoop

Utilize Revolution R Open

Execute R inside of MapReduce using RMR2

Summary and outlook for pure open source options

Methods of integrating R and Hadoop

RHADOOP – install R on workstations and connect to data in Hadoop

RHIPE – execute R inside Hadoop MapReduce

R and Hadoop Streaming

RHIVE – install R on workstations and connect to data in Hadoop

ORCH – Oracle connector for Hadoop

Data analytics

Summary

Chapter 6: Batch Analytics with Apache Spark

SparkSQL and DataFrames

DataFrame APIs and the SQL API

Pivots

Filters

User-defined functions

Schema – structure of data

Implicit schema

Explicit schema

Encoders

Loading datasets

Saving datasets

Aggregations

Aggregate functions

count

first

last

approx_count_distinct

min

max

avg

sum

kurtosis

skewness

Variance

Standard deviation

Covariance

groupBy

Rollup

Cube

Window functions

ntiles

Joins

Inner workings of join

Shuffle join

Broadcast join

Join types

Inner join

Left outer join

Right outer join

Outer join

Left anti join

Left semi join

Cross join

Performance implications of join

Summary

Chapter 7: Real-Time Analytics with Apache Spark

Streaming

At-least-once processing

At-most-once processing

Exactly-once processing

Spark Streaming

StreamingContext

Creating StreamingContext

Starting StreamingContext

Stopping StreamingContext

Input streams

receiverStream

socketTextStream

rawSocketStream

fileStream

textFileStream

binaryRecordsStream

queueStream

textFileStream example

twitterStream example

Discretized Streams

Transformations

Window operations

Stateful/stateless transformations

Stateless transformations

Stateful transformations

Checkpointing

Metadata checkpointing

Data checkpointing

Driver failure recovery

Interoperability with streaming platforms (Apache Kafka)

Receiver-based

Direct Stream

Structured Streaming

Getting deeper into Structured Streaming

Handling event time and late data

Fault-tolerance semantics

Summary

Chapter 8: Batch Analytics with Apache Flink

Introduction to Apache Flink

Continuous processing for unbounded datasets

Flink, the streaming model, and bounded datasets

Installing Flink

Downloading Flink

Installing Flink

Starting a local Flink cluster

Using the Flink cluster UI

Batch analytics

Reading file

File-based

Collection-based

Generic

Transformations

GroupBy

Aggregation

Joins

Inner join

Left outer join

Right outer join

Full outer join

Writing to a file

Summary

Chapter 9: Stream Processing with Apache Flink

Introduction to streaming execution model

Data processing using the DataStream API

Execution environment

Data sources

Socket-based

File-based

Transformations

map

flatMap

filter

keyBy

reduce

fold

Aggregations

window

Global windows

Tumbling windows

Sliding windows

Session windows

windowAll

union

Window join

split

Select

Project

Physical partitioning

Custom partitioning

Random partitioning

Rebalancing partitioning

Rescaling

Broadcasting

Event time and watermarks

Connectors

Kafka connector

Twitter connector

RabbitMQ connector

Elasticsearch connector

Cassandra connector

Summary

Chapter 10: Visualizing Big Data

Introduction

Tableau

Chart types

Line charts

Pie chart

Bar chart

Heat map

Using Python to visualize data

Using R to visualize data

Big data visualization tools

Summary

Chapter 11: Introduction to Cloud Computing

Concepts and terminology

Cloud

IT resource

On-premise

Cloud consumers and Cloud providers

Scaling

Types of scaling

Horizontal scaling

Vertical scaling

Cloud service

Cloud service consumer

Goals and benefits

Increased scalability

Increased availability and reliability

Risks and challenges

Increased security vulnerabilities

Reduced operational governance control

Limited portability between Cloud providers

Roles and boundaries

Cloud provider

Cloud consumer

Cloud service owner

Cloud resource administrator

Additional roles

Organizational boundary

Trust boundary

Cloud characteristics

On-demand usage

Ubiquitous access

Multi-tenancy (and resource pooling)

Elasticity

Measured usage

Resiliency

Cloud delivery models

Infrastructure as a Service

Platform as a Service

Software as a Service

Combining Cloud delivery models

IaaS + PaaS

IaaS + PaaS + SaaS

Cloud deployment models

Public Clouds

Community Clouds

Private Clouds

Hybrid Clouds

Summary

Chapter 12: Using Amazon Web Services

Amazon Elastic Compute Cloud

Elastic web-scale computing

Complete control of operations

Flexible Cloud hosting services

Integration

High reliability

Security

Inexpensive

Easy to start

Instances and Amazon Machine Images

Launching multiple instances of an AMI

Instances

AMIs

Regions and availability zones

Region and availability zone concepts

Regions

Availability zones

Available regions

Regions and endpoints

Instance types

Tag basics

Amazon EC2 key pairs

Amazon EC2 security groups for Linux instances

Elastic IP addresses

Amazon EC2 and Amazon Virtual Private Cloud

Amazon Elastic Block Store

Amazon EC2 instance store

What is AWS Lambda?

When should I use AWS Lambda?

Introduction to Amazon S3

Getting started with Amazon S3

Comprehensive security and compliance capabilities

Query in place

Flexible management

Most supported platform with the largest ecosystem

Easy and flexible data transfer

Backup and recovery

Data archiving

Data lakes and big data analytics

Hybrid Cloud storage

Cloud-native application data

Disaster recovery

Amazon DynamoDB

Amazon Kinesis Data Streams

What can I do with Kinesis Data Streams?

Accelerated log and data feed intake and processing

Real-time metrics and reporting

Real-time data analytics

Complex stream processing

Benefits of using Kinesis Data Streams

AWS Glue

When should I use AWS Glue?

Amazon EMR

Practical AWS EMR cluster

Summary

Index
