Cover

Copyright

Packt Upsell

Contributors

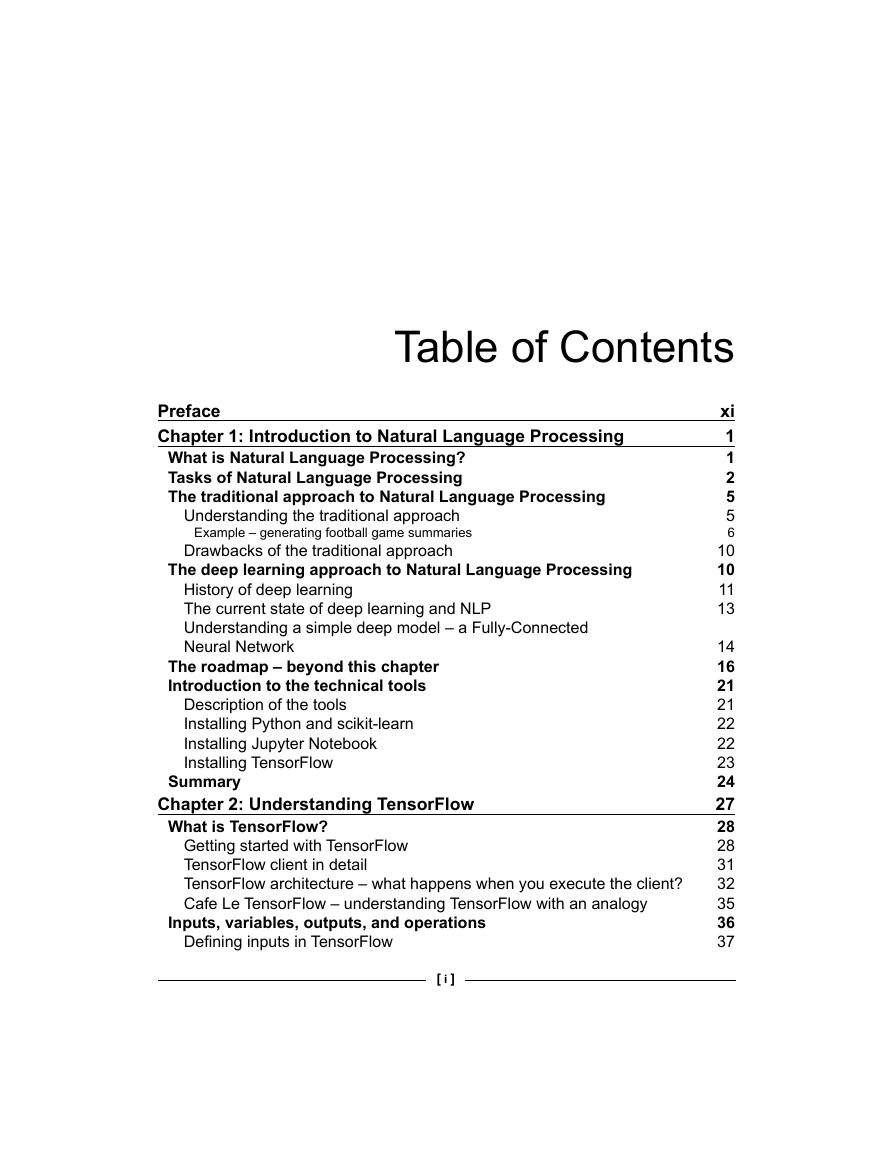

Table of Contents

Preface

Chapter 1: Introduction to Natural Language Processing

What is Natural Language Processing?

Tasks of Natural Language Processing

The traditional approach to Natural Language Processing

Understanding the traditional approach

Example – generating football game summaries

Drawbacks of the traditional approach

The deep learning approach to Natural Language Processing

History of deep learning

The current state of deep learning and NLP

Understanding a simple deep model – a Fully Connected Neural Network

The roadmap – beyond this chapter

Introduction to the technical tools

Description of the tools

Installing Python and scikit-learn

Installing Jupyter Notebook

Installing TensorFlow

Summary

Chapter 2: Understanding TensorFlow

What is TensorFlow?

Getting started with TensorFlow

TensorFlow client in detail

TensorFlow architecture – what happens when you execute the client?

Cafe Le TensorFlow – understanding TensorFlow with an analogy

Inputs, variables, outputs, and operations

Defining inputs in TensorFlow

Feeding data with Python code

Preloading and storing data as tensors

Building an input pipeline

Defining variables in TensorFlow

Defining TensorFlow outputs

Defining TensorFlow operations

Comparison operations

Mathematical operations

Scatter and gather operations

Neural network-related operations

Reusing variables with scoping

Implementing our first neural network

Preparing the data

Defining the TensorFlow graph

Running the neural network

Summary

Chapter 3: Word2vec – Learning Word Embeddings

What is a word representation or meaning?

Classical approaches to learning word representation

WordNet – using an external lexical knowledge base for learning word representations

Tour of WordNet

Problems with WordNet

One-hot encoded representation

The TF-IDF method

Co-occurrence matrix

Word2vec – a neural network-based approach to learning word representation

Exercise: is queen = king – he + she?

Designing a loss function for learning word embeddings

The skip-gram algorithm

From raw text to structured data

Learning the word embeddings with a neural network

Formulating a practical loss function

Efficiently approximating the loss function

Implementing skip-gram with TensorFlow

The Continuous Bag-of-Words algorithm

Implementing CBOW in TensorFlow

Summary

Chapter 4: Advanced Word2vec

The original skip-gram algorithm

Implementing the original skip-gram algorithm

Comparing the original skip-gram with the improved skip-gram

Comparing skip-gram with CBOW

Performance comparison

Which is the winner, skip-gram or CBOW?

Extensions to the word embeddings algorithms

Using the unigram distribution for negative sampling

Implementing unigram-based negative sampling

Subsampling – probabilistically ignoring the common words

Implementing subsampling

Comparing the CBOW and its extensions

More recent algorithms extending skip-gram and CBOW

A limitation of the skip-gram algorithm

The structured skip-gram algorithm

The loss function

The continuous window model

GloVe – Global Vectors representation

Understanding GloVe

Implementing GloVe

Document classification with Word2vec

Dataset

Classifying documents with word embeddings

Implementation – learning word embeddings

Implementation – word embeddings to document embeddings

Document clustering and t-SNE visualization of embedded documents

Inspecting several outliers

Implementation – clustering/classification of documents with K-means

Summary

Chapter 5: Sentence Classification with Convolutional Neural Networks

Introducing Convolutional Neural Networks

CNN fundamentals

The power of Convolutional Neural Networks

Understanding Convolutional Neural Networks

Convolution operation

Standard convolution operation

Convolving with stride

Convolving with padding

Transposed convolution

Pooling operation

Max pooling

Max pooling with stride

Average pooling

Fully connected layers

Putting everything together

Exercise – image classification on MNIST with CNN

About the data

Implementing the CNN

Analyzing the predictions produced with a CNN

Using CNNs for sentence classification

CNN structure

Data transformation

The convolution operation

Pooling over time

Implementation – sentence classification with CNNs

Summary

Chapter 6: Recurrent Neural Networks

Understanding Recurrent Neural Networks

The problem with feed-forward neural networks

Modeling with Recurrent Neural Networks

Technical description of a Recurrent Neural Network

Backpropagation Through Time

How backpropagation works

Why we cannot use BP directly for RNNs

Backpropagation Through Time – training RNNs

Truncated BPTT – training RNNs efficiently

Limitations of BPTT – vanishing and exploding gradients

Applications of RNNs

One-to-one RNNs

One-to-many RNNs

Many-to-one RNNs

Many-to-many RNNs

Generating text with RNNs

Defining hyperparameters

Unrolling the inputs over time for Truncated BPTT

Defining the validation dataset

Defining weights and biases

Defining state persisting variables

Calculating the hidden states and outputs with unrolled inputs

Calculating the loss

Resetting state at the beginning of a new segment of text

Calculating validation output

Calculating gradients and optimizing

Outputting a freshly generated chunk of text

Evaluating text results output from the RNN

Perplexity – measuring the quality of the text result

Recurrent Neural Networks with Context Features – RNNs with longer memory

Technical description of the RNN-CF

Implementing the RNN-CF

Defining the RNN-CF hyperparameters

Defining input and output placeholders

Defining weights of the RNN-CF

Variables and operations for maintaining hidden and context states

Calculating output

Calculating the loss

Calculating validation output

Computing test output

Computing the gradients and optimizing

Text generated with the RNN-CF

Summary

Chapter 7: Long Short-Term Memory Networks

Understanding Long Short-Term Memory Networks

What is an LSTM?

LSTMs in more detail

How LSTMs differ from standard RNNs

How LSTMs solve the vanishing gradient problem

Improving LSTMs

Greedy sampling

Beam search

Using word vectors

Bidirectional LSTMs (BiLSTM)

Other variants of LSTMs

Peephole connections

Gated Recurrent Units

Summary

Chapter 8: Applications of LSTM – Generating Text

Our data

About the dataset

Preprocessing data

Implementing an LSTM

Defining hyperparameters

Defining parameters

Defining an LSTM cell and its operations

Defining inputs and labels

Defining sequential calculations required to process sequential data

Defining the optimizer

Decaying learning rate over time

Making predictions

Calculating perplexity (loss)

Resetting states

Greedy sampling to break unimodality

Generating new text

Example generated text

Comparing LSTMs to LSTMs with peephole connections and GRUs

Standard LSTM

Review

Example generated text

Gated Recurrent Units (GRUs)

Review

The code

Example generated text

LSTMs with peepholes

Review

The code

Example generated text

Training and validation perplexities over time

Improving LSTMs – beam search

Implementing beam search

Examples generated with beam search

Improving LSTMs – generating text with words instead of n-grams

The curse of dimensionality

Word2vec to the rescue

Generating text with Word2vec

Examples generated with LSTM-Word2vec and beam search

Perplexity over time

Using the TensorFlow RNN API

Summary

Chapter 9: Applications of LSTM – Image Caption Generation

Getting to know the data

ILSVRC ImageNet dataset

The MS-COCO dataset

The machine learning pipeline for image caption generation

Extracting image features with CNNs

Implementation – loading weights and inferencing with VGG-16

Building and updating variables

Preprocessing inputs

Inferring VGG-16

Extracting vectorized representations of images

Predicting class probabilities with VGG-16

Learning word embeddings

Preparing captions for feeding into LSTMs

Generating data for LSTMs

Defining the LSTM

Evaluating the results quantitatively

BLEU

ROUGE

METEOR

CIDEr

BLEU-4 over time for our model

Captions generated for test images

Using TensorFlow RNN API with pretrained GloVe word vectors

Loading GloVe word vectors

Cleaning data

Using pretrained embeddings with TensorFlow RNN API

Defining the pretrained embedding layer and the adaptation layer

Defining the LSTM cell and softmax layer

Defining inputs and outputs

Processing images and text differently

Defining the LSTM output calculation

Defining the logits and predictions

Defining the sequence loss

Defining the optimizer

Summary

Chapter 10: Sequence-to-Sequence Learning – Neural Machine Translation

Machine translation

A brief historical tour of machine translation

Rule-based translation

Statistical Machine Translation (SMT)

Neural Machine Translation (NMT)

Understanding Neural Machine Translation

Intuition behind NMT

NMT architecture

The embedding layer

The encoder

The context vector

The decoder

Preparing data for the NMT system

At training time

Reversing the source sentence

At testing time

Training the NMT

Inference with NMT

The BLEU score – evaluating the machine translation systems

Modified precision

Brevity penalty

The final BLEU score

Implementing an NMT from scratch – a German to English translator

Introduction to data

Preprocessing data

Learning word embeddings

Defining the encoder and the decoder

Defining the end-to-end output calculation

Some translation results

Training an NMT jointly with word embeddings

Maximizing matchings between the dataset vocabulary and the pretrained embeddings

Defining the embeddings layer as a TensorFlow variable

Improving NMTs

Teacher forcing

Deep LSTMs

Attention

Breaking the context vector bottleneck

The attention mechanism in detail

Implementing the attention mechanism

Defining weights

Computing attention

Some translation results – NMT with attention

Visualizing attention for source and target sentences

Other applications of Seq2Seq models – chatbots

Training a chatbot

Evaluating chatbots – Turing test

Summary

Chapter 11: Current Trends and the Future of Natural Language Processing

Current trends in NLP

Word embeddings

Region embedding

Probabilistic word embedding

Ensemble embedding

Topic embedding

Neural Machine Translation (NMT)

Improving the attention mechanism

Hybrid MT models

Penetration into other research fields

Combining NLP with computer vision

Visual Question Answering (VQA)

Caption generation for images with attention

Reinforcement learning

Teaching agents to communicate using their own language

Dialogue agents with reinforcement learning

Generative Adversarial Networks for NLP

Towards Artificial General Intelligence

One Model to Learn Them All

A joint many-task model – growing a neural network for multiple NLP tasks

First level – word-based tasks

Second level – syntactic tasks

Third level – semantic-level tasks

NLP for social media

Detecting rumors in social media

Detecting emotions in social media

Analyzing political framing in tweets

New tasks emerging

Detecting sarcasm

Language grounding

Skimming text with LSTMs

Newer machine learning models

Phased LSTM

Dilated Recurrent Neural Networks (DRNNs)

Summary

References

Appendix: Mathematical Foundations and Advanced TensorFlow

Basic data structures

Scalar

Vectors

Matrices

Indexing of a matrix

Special types of matrices

Identity matrix

Diagonal matrix

Tensors

Tensor/matrix operations

Transpose

Multiplication

Element-wise multiplication

Inverse

Finding the matrix inverse – Singular Value Decomposition (SVD)

Norms

Determinant

Probability

Random variables

Discrete random variables

Continuous random variables

The probability mass/density function

Conditional probability

Joint probability

Marginal probability

Bayes' rule

Introduction to Keras

Introduction to the TensorFlow seq2seq library

Defining embeddings for the encoder and decoder

Defining the encoder

Defining the decoder

Visualizing word embeddings with TensorBoard

Starting TensorBoard

Saving word embeddings and visualizing via TensorBoard

Summary

Other Books You May Enjoy

Index
