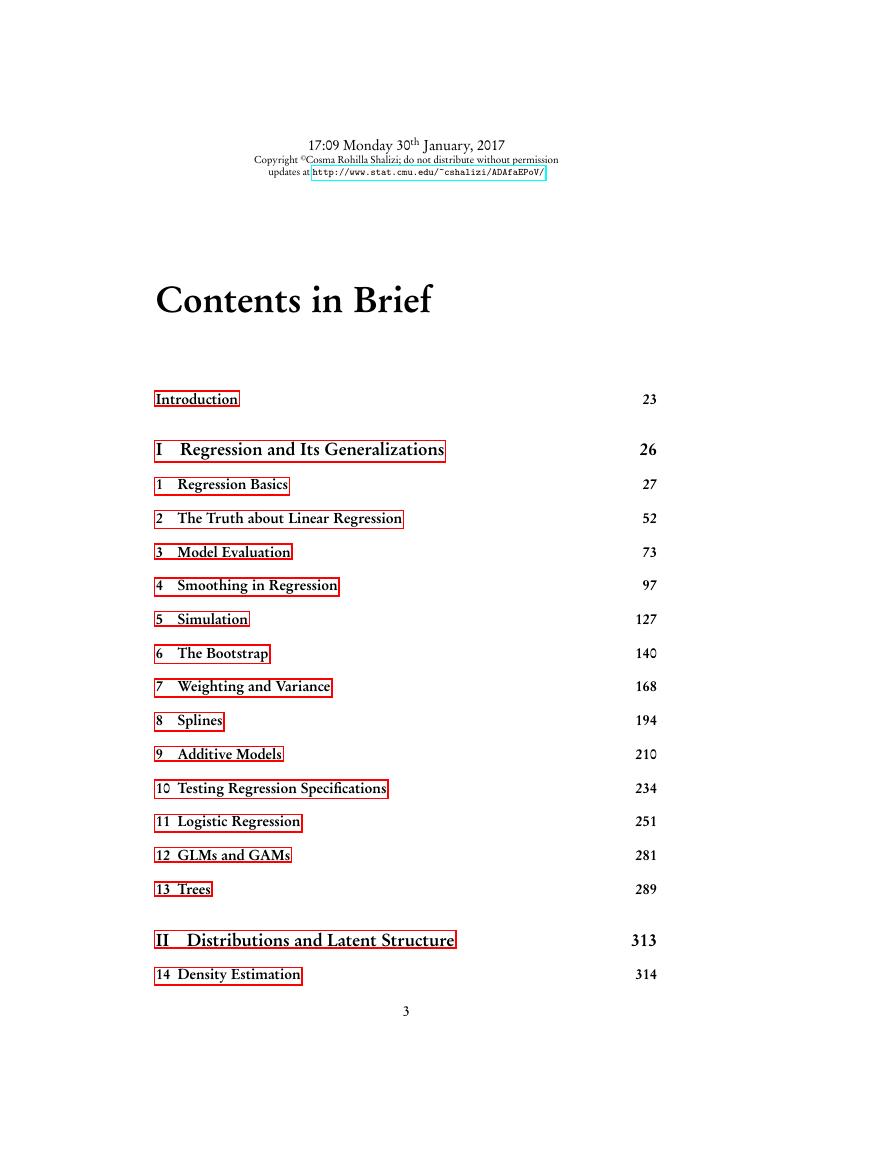

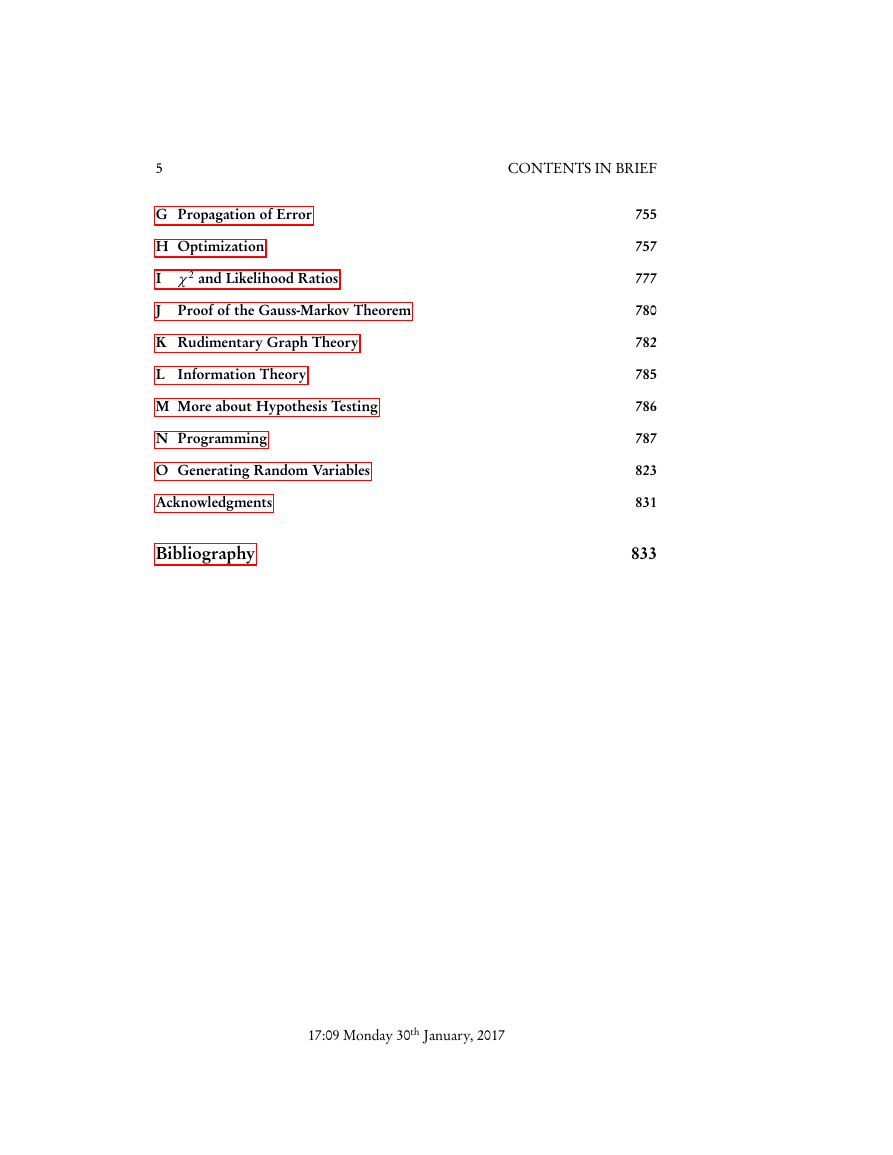

Contents in Brief

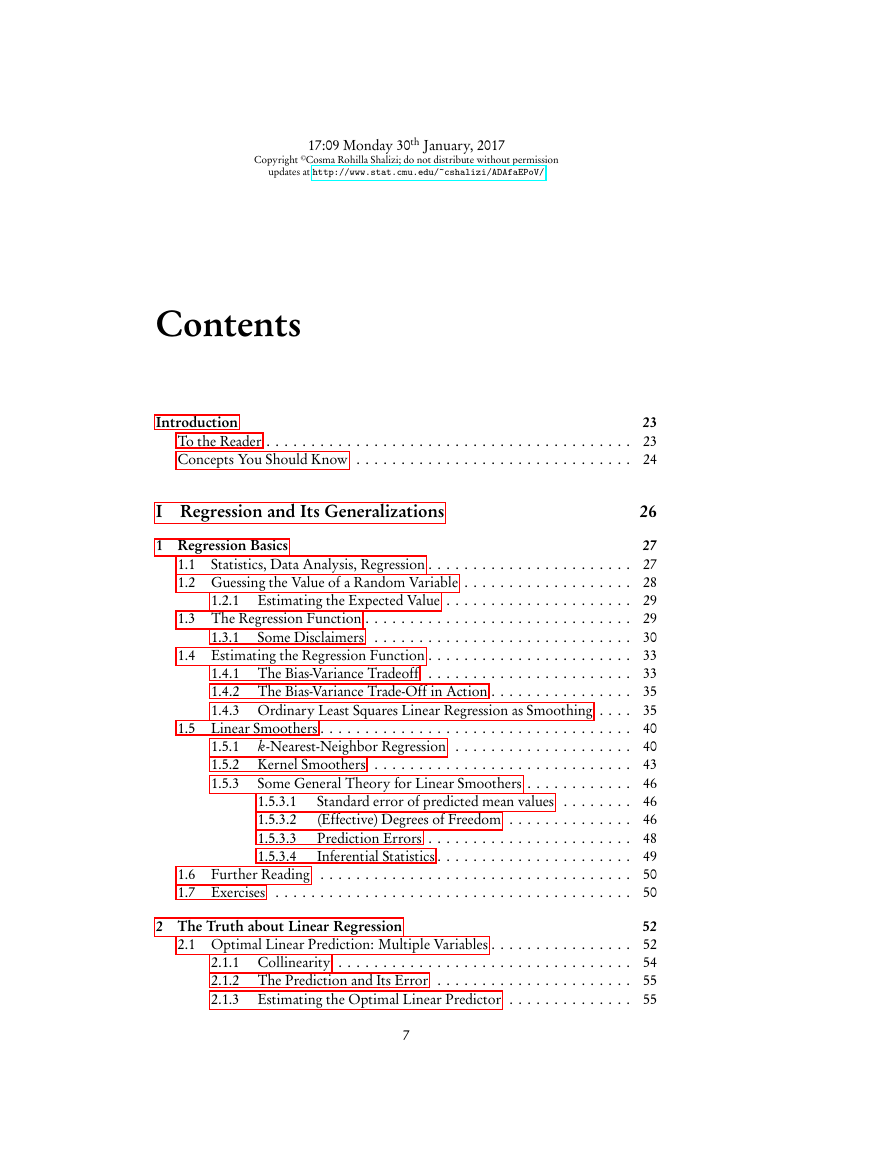

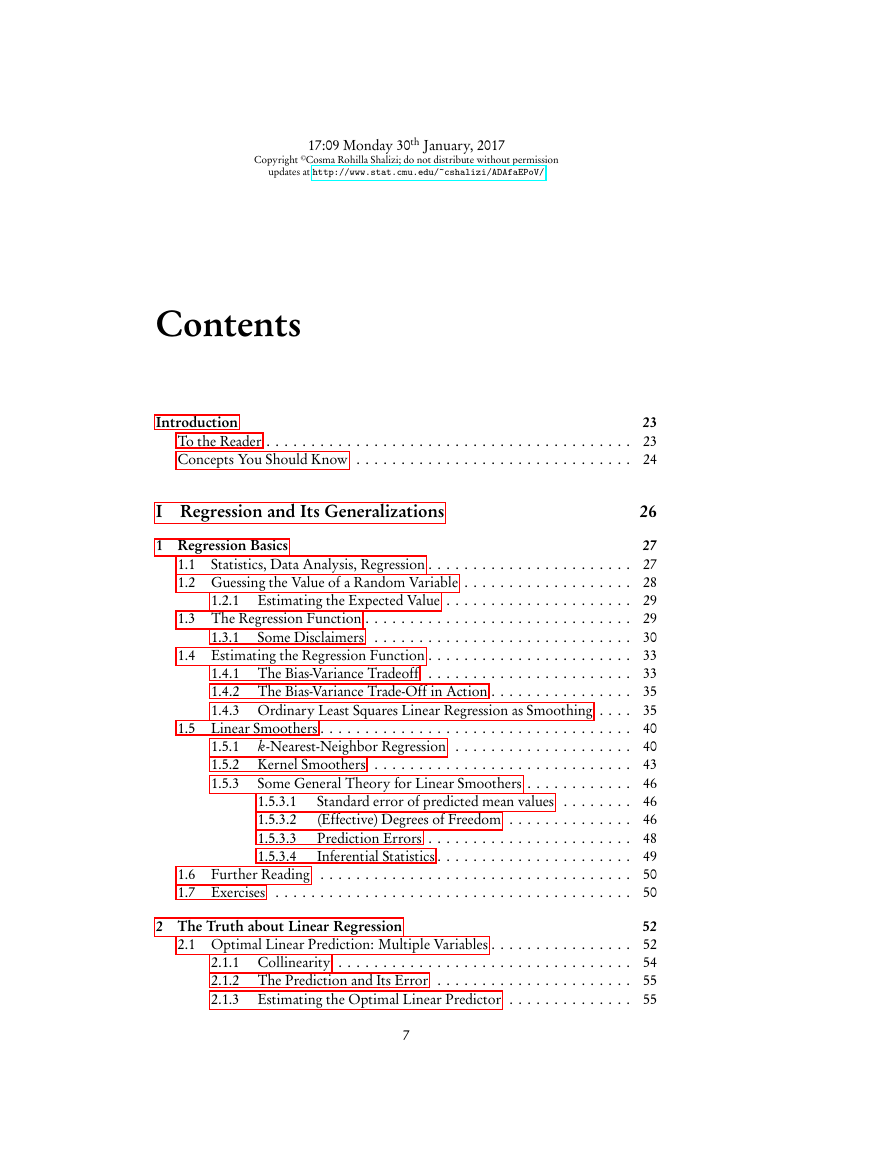

Contents

Introduction

To the Reader

Concepts You Should Know

I Regression and Its Generalizations

Regression Basics

Statistics, Data Analysis, Regression

Guessing the Value of a Random Variable

Estimating the Expected Value

The Regression Function

Some Disclaimers

Estimating the Regression Function

The Bias-Variance Tradeoff

The Bias-Variance Trade-Off in Action

Ordinary Least Squares Linear Regression as Smoothing

Linear Smoothers

k-Nearest-Neighbor Regression

Kernel Smoothers

Some General Theory for Linear Smoothers

Standard error of predicted mean values

(Effective) Degrees of Freedom

Prediction Errors

Inferential Statistics

Further Reading

Exercises

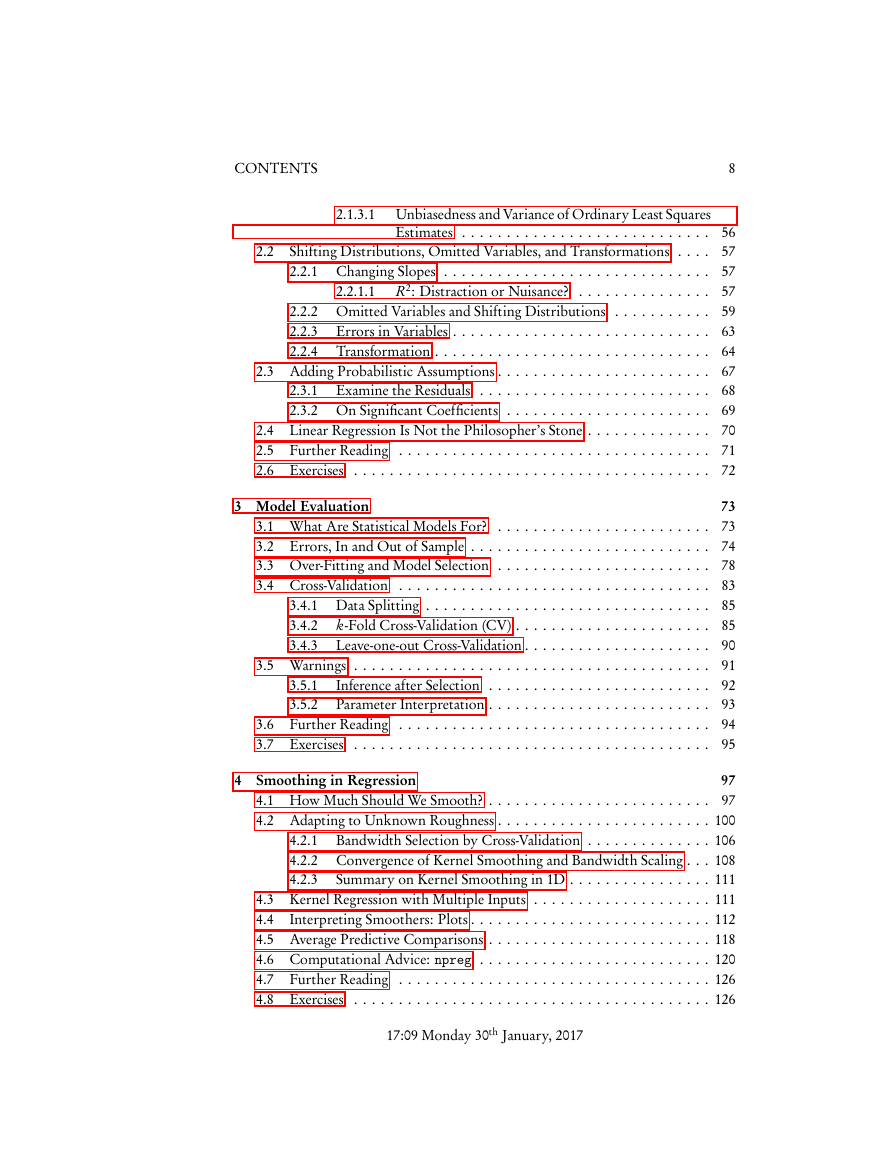

The Truth about Linear Regression

Optimal Linear Prediction: Multiple Variables

Collinearity

The Prediction and Its Error

Estimating the Optimal Linear Predictor

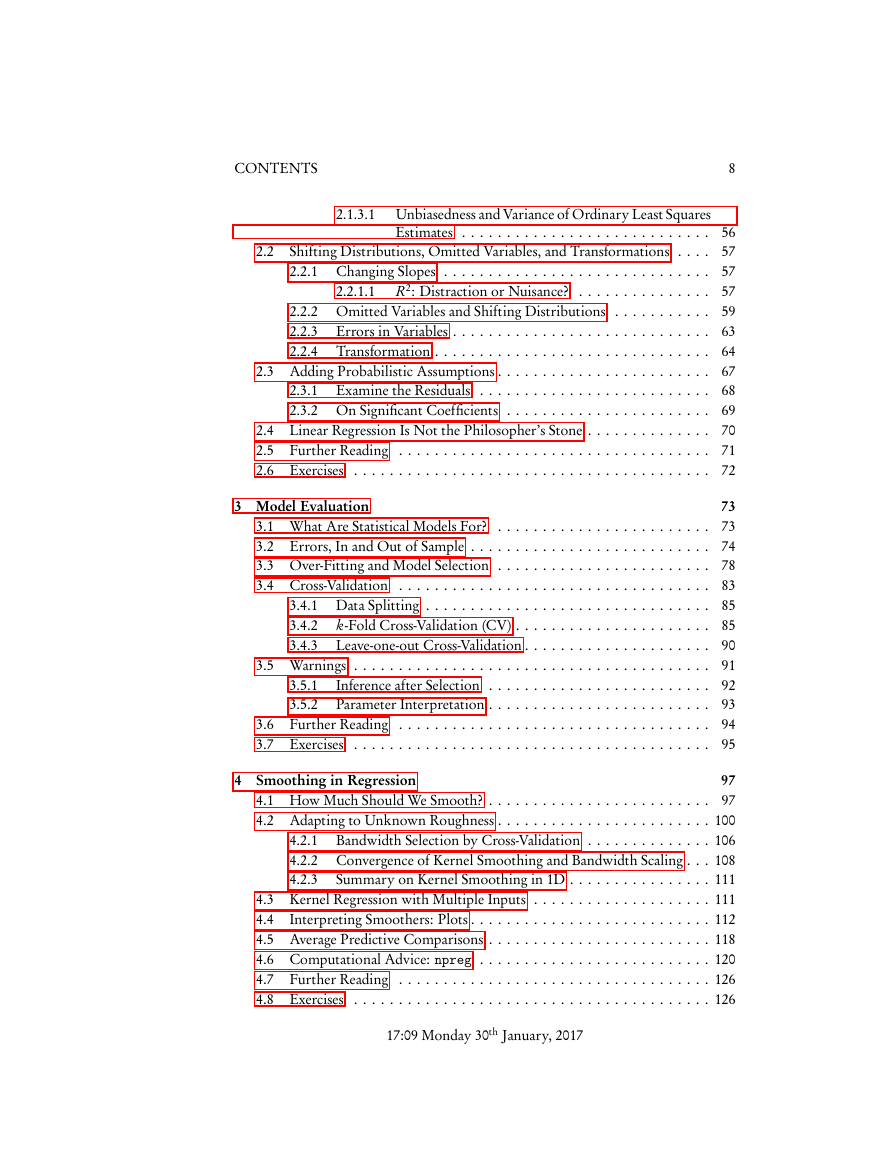

Unbiasedness and Variance of Ordinary Least Squares Estimates

Shifting Distributions, Omitted Variables, and Transformations

Changing Slopes

R²: Distraction or Nuisance?

Omitted Variables and Shifting Distributions

Errors in Variables

Transformation

Adding Probabilistic Assumptions

Examine the Residuals

On Significant Coefficients

Linear Regression Is Not the Philosopher's Stone

Further Reading

Exercises

Model Evaluation

What Are Statistical Models For?

Errors, In and Out of Sample

Over-Fitting and Model Selection

Cross-Validation

Data Splitting

k-Fold Cross-Validation (CV)

Leave-one-out Cross-Validation

Warnings

Inference after Selection

Parameter Interpretation

Further Reading

Exercises

Smoothing in Regression

How Much Should We Smooth?

Adapting to Unknown Roughness

Bandwidth Selection by Cross-Validation

Convergence of Kernel Smoothing and Bandwidth Scaling

Summary on Kernel Smoothing in 1D

Kernel Regression with Multiple Inputs

Interpreting Smoothers: Plots

Average Predictive Comparisons

Computational Advice: npreg

Further Reading

Exercises

Simulation

What Is a Simulation?

How Do We Simulate Stochastic Models?

Chaining Together Random Variables

Random Variable Generation

Built-in Random Number Generators

Transformations

Quantile Method

Sampling

Sampling Rows from Data Frames

Multinomials and Multinoullis

Probabilities of Observation

Repeating Simulations

Why Simulate?

Understanding the Model; Monte Carlo

Checking the Model

"Exploratory" Analysis of Simulations

Sensitivity Analysis

Further Reading

Exercises

The Bootstrap

Stochastic Models, Uncertainty, Sampling Distributions

The Bootstrap Principle

Variances and Standard Errors

Bias Correction

Confidence Intervals

Other Bootstrap Confidence Intervals

Hypothesis Testing

Double bootstrap hypothesis testing

Model-Based Bootstrapping Example: Pareto's Law of Wealth Inequality

Resampling

Model-Based vs. Resampling Bootstraps

Bootstrapping Regression Models

Re-sampling Points: Parametric Model Example

Re-sampling Points: Non-parametric Model Example

Re-sampling Residuals: Example

Bootstrap with Dependent Data

Things Bootstrapping Does Poorly

Which Bootstrap When?

Further Reading

Exercises

Weighting and Variance

Weighted Least Squares

Heteroskedasticity

Weighted Least Squares as a Solution to Heteroskedasticity

Some Explanations for Weighted Least Squares

Finding the Variance and Weights

Conditional Variance Function Estimation

Iterative Refinement of Mean and Variance: An Example

Real Data Example: Old Heteroskedastic

Re-sampling Residuals with Heteroskedasticity

Local Linear Regression

For and Against Locally Linear Regression

Lowess

Further Reading

Exercises

Splines

Smoothing by Penalizing Curve Flexibility

The Meaning of the Splines

Computational Example: Splines for Stock Returns

Confidence Bands for Splines

Basis Functions and Degrees of Freedom

Basis Functions

Splines in Multiple Dimensions

Smoothing Splines versus Kernel Regression

Some of the Math Behind Splines

Further Reading

Exercises

Additive Models

Additive Models

Partial Residuals and Back-fitting

Back-fitting for Linear Models

Backfitting Additive Models

The Curse of Dimensionality

Example: California House Prices Revisited

Interaction Terms and Expansions

Closing Modeling Advice

Further Reading

Exercises

Testing Regression Specifications

Testing Functional Forms

Examples of Testing a Parametric Model

Remarks

Why Use Parametric Models At All?

Why We Sometimes Want Mis-Specified Parametric Models

Further Reading

Exercises

Logistic Regression

Modeling Conditional Probabilities

Logistic Regression

Likelihood Function for Logistic Regression

Numerical Optimization of the Likelihood

Iteratively Re-Weighted Least Squares

Generalized Linear and Additive Models

Generalized Additive Models

Model Checking

Residuals

Non-parametric Alternatives

Calibration

A Toy Example

Weather Forecasting in Snoqualmie Falls

Logistic Regression with More Than Two Classes

Exercises

GLMs and GAMs

Generalized Linear Models and Iterative Least Squares

GLMs in General

Examples of GLMs

Vanilla Linear Models

Binomial Regression

Poisson Regression

Uncertainty

Modeling Dispersion

Likelihood and Deviance

Maximum Likelihood and the Choice of Link Function

R: glm

Generalized Additive Models

Further Reading

Exercises

Trees

Prediction Trees

Regression Trees

Example: California Real Estate Again

Regression Tree Fitting

Cross-Validation and Pruning in R

Uncertainty in Regression Trees

Classification Trees

Measuring Information

Making Predictions

Measuring Error

Misclassification Rate

Average Loss

Likelihood and Cross-Entropy

Neyman-Pearson Approach

Further Reading

Exercises

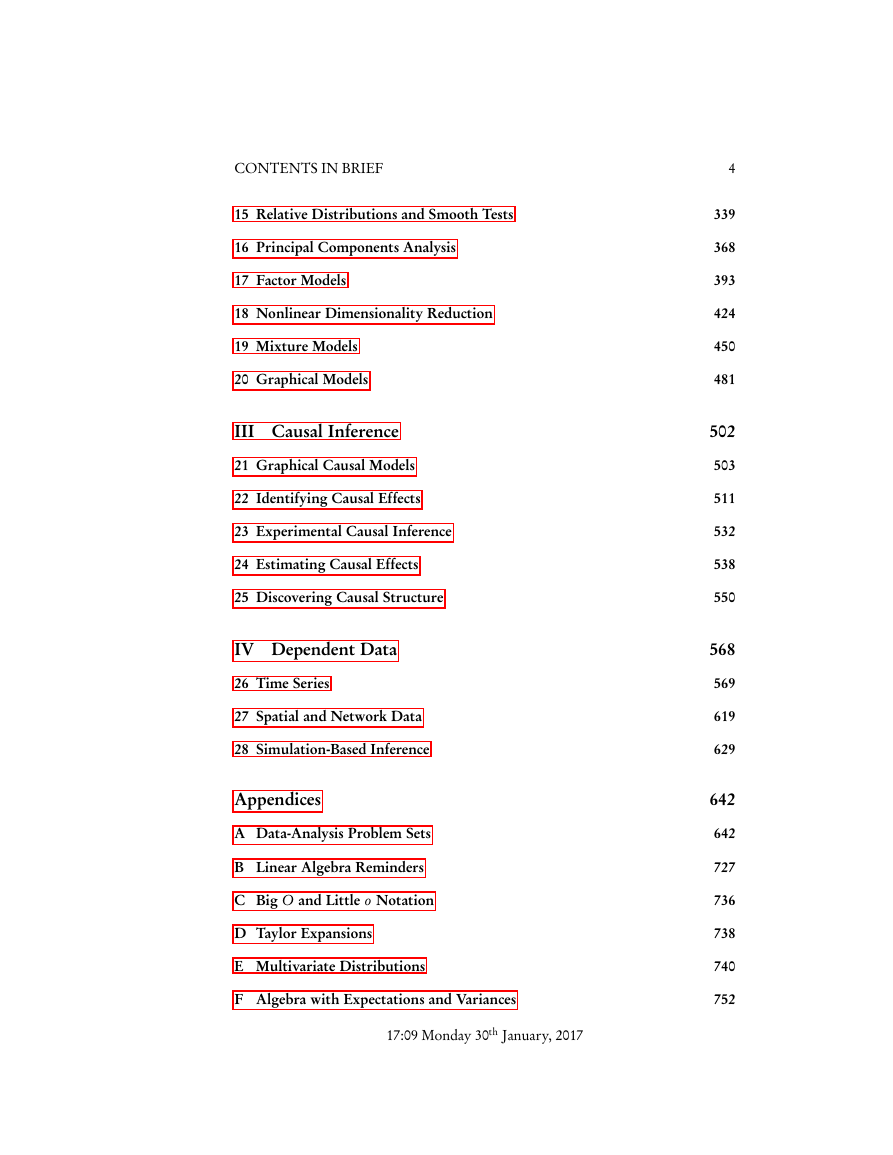

II Distributions and Latent Structure

Density Estimation

Histograms Revisited

"The Fundamental Theorem of Statistics"

Error for Density Estimates

Error Analysis for Histogram Density Estimates

Kernel Density Estimates

Analysis of Kernel Density Estimates

Joint Density Estimates

Categorical and Ordered Variables

Practicalities

Kernel Density Estimation in R: An Economic Example

Conditional Density Estimation

Practicalities and a Second Example

More on the Expected Log-Likelihood Ratio

Simulating from Density Estimates

Simulating from Kernel Density Estimates

Sampling from a Joint Density

Sampling from a Conditional Density

Drawing from Histogram Estimates

Examples of Simulating from Kernel Density Estimates

Exercises

Relative Distributions and Smooth Tests

Smooth Tests of Goodness of Fit

From Continuous CDFs to Uniform Distributions

Testing Uniformity

Neyman's Smooth Test

Choice of Function Basis

Choice of Number of Basis Functions

Application: Combining p-Values

Density Estimation by Series Expansion

Smooth Tests of Non-Uniform Parametric Families

Estimated Parameters

Implementation in R

Some Examples

Conditional Distributions and Calibration

Relative Distributions

Estimating the Relative Distribution

R Implementation and Examples

Example: Conservative versus Liberal Brains

Example: Economic Growth Rates

Adjusting for Covariates

Example: Adjusting Growth Rates

Further Reading

Exercises

Principal Components Analysis

Mathematics of Principal Components

Minimizing Projection Residuals

Maximizing Variance

More Geometry; Back to the Residuals

Scree Plots

Statistical Inference, or Not

Example 1: Cars

Example 2: The United States circa 1977

Latent Semantic Analysis

Principal Components of the New York Times

PCA for Visualization

PCA Cautions

Further Reading

Exercises

Factor Models

From PCA to Factor Analysis

Preserving correlations

The Graphical Model

Observables Are Correlated Through the Factors

Geometry: Approximation by Linear Subspaces

Roots of Factor Analysis in Causal Discovery

Estimation

Degrees of Freedom

A Clue from Spearman's One-Factor Model

Estimating Factor Loadings and Specific Variances

Maximum Likelihood Estimation

Alternative Approaches

Estimating Factor Scores

The Rotation Problem

Factor Analysis as a Predictive Model

How Many Factors?

R² and Goodness of Fit

Factor Models versus PCA Once More

Examples in R

Example 1: Back to the US circa 1977

Example 2: Stocks

Reification, and Alternatives to Factor Models

The Rotation Problem Again

Factors or Mixtures?

The Thomson Sampling Model

Further Reading

Nonlinear Dimensionality Reduction

Why We Need Nonlinear Dimensionality Reduction

Local Linearity and Manifolds

Locally Linear Embedding (LLE)

Finding Neighborhoods

Finding Weights

k > p

Finding Coordinates

More Fun with Eigenvalues and Eigenvectors

Finding the Weights

k > p

Finding the Coordinates

Calculation

Finding the Nearest Neighbors

Calculating the Weights

Calculating the Coordinates

Example

Further Reading

Exercises

Mixture Models

Two Routes to Mixture Models

From Factor Analysis to Mixture Models

From Kernel Density Estimates to Mixture Models

Mixture Models

Geometry

Identifiability

Probabilistic Clustering

Simulation

Estimating Parametric Mixture Models

More about the EM Algorithm

Topic Models and Probabilistic LSA

Non-parametric Mixture Modeling

Worked Computational Example

Mixture Models in R

Fitting a Mixture of Gaussians to Real Data

Calibration-checking for the Mixture

Selecting the Number of Clusters by Cross-Validation

Interpreting the Clusters in the Mixture, or Not

Hypothesis Testing for Mixture-Model Selection

Further Reading

Exercises

Graphical Models

Conditional Independence and Factor Models

Directed Acyclic Graph (DAG) Models

Conditional Independence and the Markov Property

Conditional Independence and d-Separation

D-Separation Illustrated

Linear Graphical Models and Path Coefficients

Positive and Negative Associations

Independence and Information

Examples of DAG Models and Their Uses

Missing Variables

Non-DAG Graphical Models

Undirected Graphs

Directed but Cyclic Graphs

Further Reading

Exercises

III Causal Inference

Graphical Causal Models

Causation and Counterfactuals

Causal Graphical Models

Calculating the "effects of causes"

Back to Teeth

Conditional Independence and d-Separation Revisited

Further Reading

Exercises

Identifying Causal Effects

Causal Effects, Interventions and Experiments

The Special Role of Experiment

Identification and Confounding

Identification Strategies

The Back-Door Criterion: Identification by Conditioning

The Entner Rules

The Front-Door Criterion: Identification by Mechanisms

The Front-Door Criterion and Mechanistic Explanation

Instrumental Variables

Some Invalid Instruments

Critique of Instrumental Variables

Failures of Identification

Summary

Further Reading

Exercises

Experimental Causal Inference

Why Experiment?

Jargon

Basic Ideas Guiding Experimental Design

Randomization

Causal Identification

Randomization and Linear Models

Randomization and Non-Linear Models

Modes of Randomization

IID Assignment

Planned Assignment

Perspectives: Units vs. Treatments

Choice of Levels

Parameter Estimation or Prediction

Maximizing Yield

Model Discrimination

Multiple Goals

Multiple Manipulated Variables

Factorial Designs

Blocking

Within-Subject Designs

Summary on the elements of an experimental design

"What the experiment died of"

Further Reading

Exercises

Estimating Causal Effects

Estimators in the Back- and Front- Door Criteria

Estimating Average Causal Effects

Avoiding Estimating Marginal Distributions

Matching

Propensity Scores

Propensity Score Matching

Instrumental-Variables Estimates

Uncertainty and Inference

Recommendations

Further Reading

Exercises

Discovering Causal Structure

Testing DAGs

Testing Conditional Independence

Faithfulness and Equivalence

Partial Identification of Effects

Causal Discovery with Known Variables

The PC Algorithm

Causal Discovery with Hidden Variables

On Conditional Independence Tests

Software and Examples

Limitations on Consistency of Causal Discovery

Further Reading

Exercises

Pseudo-code for the SGS and PC Algorithms

The SGS Algorithm

The PC Algorithm

IV Dependent Data

Time Series

What Time Series Are

Stationarity

Autocorrelation

The Ergodic Theorem

The World's Simplest Ergodic Theorem

Rate of Convergence

Why Ergodicity Matters

Markov Models

Meaning of the Markov Property

Estimating Markov Models

Autoregressive Models

Autoregressions with Covariates

Additive Autoregressions

Linear Autoregression

"Unit Roots" and Stationary Solutions

Conditional Variance

Regression with Correlated Noise; Generalized Least Squares

Bootstrapping Time Series

Parametric or Model-Based Bootstrap

Block Bootstraps

Sieve Bootstrap

Cross-Validation

Testing Stationarity by Cross-Prediction

Trends and De-Trending

Forecasting Trends

Seasonal Components

Detrending by Differencing

Cautions with Detrending

Bootstrapping with Trends

Breaks in Time Series

Long Memory Series

Change Points and Structural Breaks

Change Points and Long Memory

Change Point Detection

Time Series with Latent Variables

Examples

General Gaussian-Linear State Space Model

Autoregressive-Moving Average (ARMA) Models

Regime Switching

Noisily-Observed Dynamical Systems

State Estimation

Particle Filtering

Parameter Estimation

Prediction

Moving Averages and Cycles

Yule-Slutsky

Longitudinal Data

Multivariate Time Series

Further Reading

Exercises

Spatial and Network Data

Least-Squares Optimal Linear Prediction

Extension to Vectors

Estimation

Stronger Probabilistic Assumptions

Nonlinearity?

Application to Spatial and Spatio-Temporal Data

Special Case: One Scalar Field

Role of Symmetry Assumptions

A Worked Example

Spatial Smoothing with Splines

Data on Networks

Adapting Spatial Techniques

Laplacian Smoothing

Further Reading

Simulation-Based Inference

The Method of Simulated Moments

The Method of Moments

Adding in the Simulation

An Example: Moving Average Models and the Stock Market

Indirect Inference

Further Reading

Exercises

Some Design Notes on the Method of Moments Code

Appendices

Data-Analysis Problem Sets

Your Daddy's Rich and Your Momma's Good Looking

…But We Make It Up in Volume

Past Performance, Future Results

Free Soil

There Were Giants in the Earth in Those Days

The Sound of Gunfire, Off in the Distance

The Bullet or the Ballot?

A Diversified Portfolio

The Monkey's Paw

Specific Problems

Formatting Instructions and Rubric

What's That Got to Do with the Price of Condos in California?

The Advantages of Backwardness

It's Not the Heat that Gets You

Nice Demo City, but Will It Scale?

Version 1

Background

Tasks and Questions

Version 2

Problems

Fair's Affairs

How the North American Paleofauna Got a Crook in Its Regression Line

How the Hyracotherium Got Its Mass

How the Recent Mammals Got Their Size Distribution

Red Brain, Blue Brain

Brought to You by the Letters D, A and G

Teacher, Leave Those Kids Alone! (They're the Control Group)

Estimating with DAGs

Use and Abuse of Conditioning

What Makes the Union Strong?

An Insufficiently Random Walk Down Wall Street

Predicting Nine of the Last Five Recessions

Debt Needs Time for What It Kills to Grow In

How Tetracycline Came to Peoria

Formatting Instructions and Rubric

Linear Algebra Reminders

Vector Space, Linear Subspace, Linear Independence

Inner Product, Orthogonal Vectors

Orthonormal Bases

Rank

Eigenvalues and Eigenvectors of Matrices

Special Kinds of Matrix

Matrix Decompositions

Singular Value Decomposition

Eigen-Decomposition or Spectral Decomposition of Matrix

Square Root of a Matrix

Orthogonal Projections, Idempotent Matrices

R Commands for Linear Algebra

Vector Calculus

Function Spaces

Bases

Eigenvalues and Eigenfunctions of Operators

Further Reading

Exercises

Big O and Little o Notation

Taylor Expansions

Multivariate Distributions

Review of Definitions

Multivariate Gaussians

Linear Algebra and the Covariance Matrix

Conditional Distributions and Least Squares

Projections of Multivariate Gaussians

Computing with Multivariate Gaussians

Inference with Multivariate Distributions

Model Comparison

Goodness-of-Fit

Uncorrelated ≠ Independent

Exercises

Algebra with Expectations and Variances

Variance-Covariance Matrices of Vectors

Quadratic Forms

Propagation of Error

Optimization

Basic Concepts of Optimization

Newton's Method

Optimization in R

Small-Noise Asymptotics for Optimization

Application to Maximum Likelihood

The Akaike Information Criterion

Constrained and Penalized Optimization

Constrained Optimization

Lagrange Multipliers

Penalized Optimization

Constrained Linear Regression

Statistical Remark: "Ridge Regression" and "The Lasso"

Further Reading

χ² and Likelihood Ratios

Proof of the Gauss-Markov Theorem

Rudimentary Graph Theory

Information Theory

More about Hypothesis Testing

Programming

Functions

First Example: Pareto Quantiles

Functions Which Call Functions

Sanity-Checking Arguments

Layering Functions and Debugging

More on Debugging

Automating Repetition and Passing Arguments

Avoiding Iteration: Manipulating Objects

ifelse and which

apply and Its Variants

More Complicated Return Values

Re-Writing Your Code: An Extended Example

General Advice on Programming

Comment your code

Use meaningful names

Check whether your program works

Avoid writing the same thing twice

Start from the beginning and break it down

Break your code into many short, meaningful functions

Further Reading

Generating Random Variables

Rejection Method

The Metropolis Algorithm and Markov Chain Monte Carlo

Generating Uniform Random Numbers

Further Reading

Exercises

Acknowledgments

Bibliography
