Information Theory

A Tutorial Introduction

James V Stone


Reviews of Information Theory

“Information lies at the heart of biology, societies depend on it, and our

ability to process information ever more efficiently is transforming our lives.

By introducing the theory that enabled our information revolution, this

book describes what information is, how it can be communicated efficiently,

and why it underpins our understanding of biology, brains, and physical

reality. Its tutorial approach develops a deep intuitive understanding using

the minimum number of elementary equations. Thus, this superb introduction

not only enables scientists of all persuasions to appreciate the relevance of

information theory, it also equips them to start using it. The same goes for

students. I have used a handout to teach elementary information theory to

biologists and neuroscientists for many years. I will throw away my handout

and use this book.”

Simon Laughlin, Professor of Neurobiology, Fellow of the Royal Society,

Department of Zoology, University of Cambridge, England.

“This is a really great book; it describes a simple and beautiful idea in a

way that is accessible for novices and experts alike. This “simple idea” is

that information is a formal quantity that underlies nearly everything we

do. In this book, Stone leads us through Shannon’s fundamental insights;

starting with the basics of probability and ending with a range of applications

including thermodynamics, telecommunications, computational neuroscience

and evolution. There are some lovely anecdotes: I particularly liked the

account of how Samuel Morse (inventor of the Morse code) pre-empted

modern notions of efficient coding by counting how many copies of each letter

were held in stock in a printer’s workshop. The treatment of natural selection

as “a means by which information about the environment is incorporated into

DNA” is both compelling and entertaining. The substance of this book is a

clear exposition of information theory, written in an intuitive fashion (true

to Stone’s observation that “rigour follows insight”). Indeed, I wish that this

text had been available when I was learning about information theory. Stone

has managed to distil all of the key ideas in information theory into a coherent

story. Every idea and equation that underpins recent advances in technology

and the life sciences can be found in this informative little book.”

Professor Karl Friston, Fellow of the Royal Society. Scientific Director of the

Wellcome Trust Centre for Neuroimaging,

Institute of Neurology, University College London.


Reviews of Bayes’ Rule: A Tutorial Introduction

“An excellent book ... highly recommended.”

CHOICE: Academic Reviews Online, February 2014.

“Short, interesting, and very easy to read, Bayes’ Rule serves as an excellent

primer for students and professionals ...”

Top Ten Math Books On Bayesian Analysis, July 2014.

“An excellent first step for readers with little background in the topic.”

Computing Reviews, June 2014.

“The author deserves a praise for bringing out some of the main principles

of Bayesian inference using just visuals and plain English. Certainly a nice

intro book that can be read by any newbie to Bayes.”

https://rkbookreviews.wordpress.com/, May 2015.

From the Back Cover

“Bayes’ Rule explains in a very easy to follow manner the basics of Bayesian

analysis.”

Dr Inigo Arregui, Ramon y Cajal Researcher, Institute of Astrophysics,

Spain.

“A crackingly clear tutorial for beginners. Exactly the sort of book required

for those taking their first steps in Bayesian analysis.”

Dr Paul A. Warren, School of Psychological Sciences, University of

Manchester.

“This book is short and eminently readable. It introduces the Bayesian

approach to addressing statistical issues without using any advanced

mathematics, which should make it accessible to students from a wide range

of backgrounds, including biological and social sciences.”

Dr Devinder Sivia, Lecturer in Mathematics, St John’s College, Oxford

University, and author of Data Analysis: A Bayesian Tutorial.

“For those with a limited mathematical background, Stone’s book provides an

ideal introduction to the main concepts of Bayesian analysis.”

Dr Peter M Lee, Department of Mathematics, University of York. Author of

Bayesian Statistics: An Introduction.

“Bayesian analysis involves concepts which can be hard for the uninitiated to

grasp. Stone’s patient pedagogy and gentle examples convey these concepts

with uncommon lucidity.”

Dr Charles Fox, Department of Computer Science, University of Sheffield.




Title: Information Theory: A Tutorial Introduction

Author: James V Stone

©2015 Sebtel Press

First Edition, 2015.

Typeset in LaTeX2e.

Cover design: Stefan Brazzo.

Second printing.

ISBN 978-0-9563728-5-7

The front cover depicts Claude Shannon (1916-2001).


For Nikki


Suppose that we were asked to arrange the following in two

categories - distance, mass, electric force, entropy, beauty,

melody. I think there are the strongest grounds for placing

entropy alongside beauty and melody ...

Eddington A, The Nature of the Physical World, 1928.


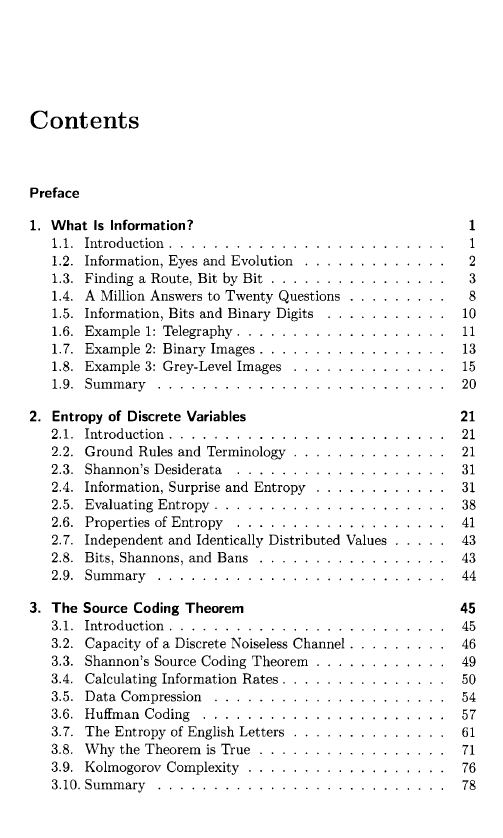

Contents

Preface

1. What Is Information?

1.1. Introduction

1.2. Information, Eyes and Evolution

1.3. Finding a Route, Bit by Bit

1.4. A Million Answers to Twenty Questions

1.5. Information, Bits and Binary Digits

1.6. Example 1: Telegraphy

1.7. Example 2: Binary Images

1.8. Example 3: Grey-Level Images

1.9. Summary

2. Entropy of Discrete Variables

2.1. Introduction

2.2. Ground Rules and Terminology

2.3. Shannon’s Desiderata

2.4. Information, Surprise and Entropy

2.5. Evaluating Entropy

2.6. Properties of Entropy

2.7. Independent and Identically Distributed Values

2.8. Bits, Shannons, and Bans

2.9. Summary

3. The Source Coding Theorem

3.1. Introduction

3.2. Capacity of a Discrete Noiseless Channel

3.3. Shannon’s Source Coding Theorem

3.4. Calculating Information Rates

3.5. Data Compression

3.6. Huffman Coding

3.7. The Entropy of English Letters

3.8. Why the Theorem is True

3.9. Kolmogorov Complexity

3.10. Summary
