Title Pages

Preface

List of Editors

List of Authors

Contents

List of Abbreviations

1 Introduction to Speech Processing

1.1 A Brief History of Speech Processing

1.2 Applications of Speech Processing

1.3 Organization of the Handbook

References

A Production, Perception, and Modeling of Speech

2 Physiological Processes of Speech Production

2.1 Overview of Speech Apparatus

2.2 Voice Production Mechanisms

2.2.1 Regulation of Respiration

2.2.2 Structure of the Larynx

2.2.3 Vocal Fold and its Oscillation

2.2.4 Regulation of Fundamental Frequency (F0)

2.2.5 Methods for Measuring Voice Production

2.3 Articulatory Mechanisms

2.3.1 Articulatory Organs

2.3.2 Vocal Tract and Nasal Cavity

2.3.3 Aspects of Articulation in Relation to Voicing

2.3.4 Articulators' Mobility and Coarticulation

2.3.5 Instruments for Observing Articulatory Dynamics

2.4 Summary

References

3 Nonlinear Cochlear Signal Processing and Masking in Speech Perception

3.1 Basics

3.1.1 Function of the Inner Ear

3.1.2 History of Cochlear Modeling

3.2 The Nonlinear Cochlea

3.2.1 Cochlear Modeling

3.2.2 Outer-Hair-Cell Transduction

3.2.3 Micromechanics

3.3 Neural Masking

3.3.1 Basic Definitions

3.3.2 Empirical Models

3.3.3 Models of the JND

3.3.4 A Direct Estimate of the Loudness JND

3.3.5 Determination of the Loudness SNR

3.3.6 Weber-Fraction Formula

3.4 Discussion and Summary

3.4.1 Model Validation

3.4.2 The Noise Model

References

4 Perception of Speech and Sound

4.1 Basic Psychoacoustic Quantities

4.1.1 Mapping of Intensity into Loudness

4.1.2 Pitch

4.1.3 Temporal Analysis and Modulation Perception

4.1.4 Binaural Hearing

4.1.5 Binaural Noise Suppression

4.2 Acoustical Information Required for Speech Perception

4.2.1 Speech Intelligibility and Speech Reception Threshold (SRT)

4.2.2 Measurement Methods

4.2.3 Factors Influencing Speech Intelligibility

4.2.4 Prediction Methods

4.3 Speech Feature Perception

4.3.1 Formant Features

4.3.2 Phonetic and Distinctive Feature Sets

4.3.3 Internal Representation Approach and Higher-Order Temporal-Spectral Features

4.3.4 Man-Machine Comparison

References

5 Speech Quality Assessment

5.1 Degradation Factors Affecting Speech Quality

5.2 Subjective Tests

5.2.1 Single Metric (Integral Speech Quality)

5.2.2 Multidimensional Metric (Diagnostic Speech-Quality)

5.2.3 Assessment of Specific Quality Dimensions

5.2.4 Test Implementation

5.2.5 Discussion of Subjective Tests

5.3 Objective Measures

5.3.1 Intrusive Listening Quality Measures

5.3.2 Non-Intrusive Listening Quality Measures

5.3.3 Objective Measures for Assessment of Conversational Quality

5.3.4 Discussion of Objective Measures

5.4 Conclusions

References

B Signal Processing for Speech

6 Wiener and Adaptive Filters

6.1 Overview

6.2 Signal Models

6.2.1 SISO Model

6.2.2 SIMO Model

6.2.3 MISO Model

6.2.4 MIMO Model

6.3 Derivation of the Wiener Filter

6.4 Impulse Response Tail Effect

6.5 Condition Number

6.5.1 Decomposition of the Correlation Matrix

6.5.2 Condition Number with the Frobenius Norm

6.5.3 Fast Computation of the Condition Number

6.6 Adaptive Algorithms

6.6.1 Deterministic Algorithm

6.6.2 Stochastic Algorithm

6.6.3 Variable-Step-Size NLMS Algorithm

6.6.4 Proportionate NLMS Algorithms

6.6.5 Sign Algorithms

6.7 MIMO Wiener Filter

6.7.1 Conditioning of the Covariance Matrix

6.8 Conclusions

References

7 Linear Prediction

7.1 Fundamentals

7.2 Forward Linear Prediction

7.3 Backward Linear Prediction

7.4 Levinson-Durbin Algorithm

7.5 Lattice Predictor

7.6 Spectral Representation

7.7 Linear Interpolation

7.8 Line Spectrum Pair Representation

7.9 Multichannel Linear Prediction

7.10 Conclusions

References

8 The Kalman Filter

8.1 Derivation of the Kalman Filter

8.1.1 The Minimum Mean Square Linear Optimal Estimator

8.1.2 The Estimation Error: Necessary and Sufficient Conditions for Optimality

8.1.3 The Kalman Filter

8.2 Examples: Estimation of Parametric Stochastic Process from Noisy Observations

8.2.1 Autoregressive (AR) Process

8.2.2 Moving-Average (MA) Process

8.2.3 Autoregressive Moving-Average (ARMA) Process

8.2.4 The Case of Temporally Correlated Noise

8.3 Extensions of the Kalman Filter

8.3.1 The Kalman Predictor

8.3.2 The Kalman Smoother

8.3.3 The Extended Kalman Filter

8.4 The Application of the Kalman Filter to Speech Processing

8.4.1 Literature Survey

8.4.2 Speech Enhancement

8.4.3 Speaker Tracking

8.5 Summary

References

9 Homomorphic Systems and Cepstrum Analysis of Speech

9.1 Definitions

9.1.1 Definition of the Cepstrum

9.1.2 Homomorphic Systems

9.1.3 Numerical Computation of Cepstra

9.2 Z-Transform Analysis

9.3 Discrete-Time Model for Speech Production

9.4 The Cepstrum of Speech

9.4.1 Short-Time Cepstrum of Speech

9.4.2 Homomorphic Filtering of Speech

9.5 Relation to LPC

9.5.1 LPC Versus Cepstrum Smoothing

9.5.2 Cepstrum from LPC Model

9.5.3 Minimum Phase and Recursive Computation

9.6 Application to Pitch Detection

9.7 Applications to Analysis/Synthesis Coding

9.7.1 Homomorphic Vocoder

9.7.2 Homomorphic Formant Vocoder

9.7.3 Analysis-by-Synthesis Vocoder

9.8 Applications to Speech Pattern Recognition

9.8.1 Compensation for Linear Filtering

9.8.2 Weighted Distance Measures

9.8.3 Group Delay Spectrum

9.8.4 Mel-Frequency Cepstrum Coefficients (MFCC)

9.9 Summary

References

10 Pitch and Voicing Determination of Speech with an Extension Toward Music Signals

10.1 Pitch in Time-Variant Quasiperiodic Acoustic Signals

10.1.1 Basic Definitions

10.1.2 Why is the Problem Difficult?

10.1.3 Categorizing the Methods

10.2 Short-Term Analysis PDAs

10.2.1 Correlation and Distance Function

10.2.2 Cepstrum and Other Double-Transform Methods

10.2.3 Frequency-Domain Methods: Harmonic Analysis

10.2.4 Active Modeling

10.2.5 Least Squares and Other Statistical Methods

10.2.6 Concluding Remarks

10.3 Selected Time-Domain Methods

10.3.1 Temporal Structure Investigation

10.3.2 Fundamental Harmonic Processing

10.3.3 Temporal Structure Simplification

10.3.4 Cascaded Solutions

10.4 A Short Look into Voicing Determination

10.4.1 Simultaneous Pitch and Voicing Determination

10.4.2 Pattern-Recognition VDAs

10.5 Evaluation and Postprocessing

10.5.1 Developing Reference PDAs with Instrumental Help

10.5.2 Error Analysis

10.5.3 Evaluation of PDAs and VDAs - Some Results

10.5.4 Postprocessing and Pitch Tracking

10.6 Applications in Speech and Music

10.7 Some New Challenges and Developments

10.7.1 Detecting the Instant of Glottal Closure

10.7.2 Multiple Pitch Determination

10.7.3 Instantaneousness Versus Reliability

10.8 Concluding Remarks

References

11 Formant Estimation and Tracking

11.1 Historical

11.2 Vocal Tract Resonances

11.3 Speech Production

11.4 Acoustics of the Vocal Tract

11.4.1 Two-Tube Models for Vowels

11.4.2 Three-Tube Models for Nasals and Fricatives

11.4.3 Obstruents

11.4.4 Coarticulation

11.5 Short-Time Speech Analysis

11.5.1 Vowels

11.5.2 Nasals

11.5.3 Fricatives and Stops

11.6 Formant Estimation

11.6.1 Continuity Constraints

11.6.2 Use of Phase Shift

11.6.3 Smoothing

11.7 Summary

References

12 The STFT, Sinusoidal Models, and Speech Modification

12.1 The Short-Time Fourier Transform

12.1.1 The STFT as a Sliding-Window Transform

12.1.2 The STFT as a Modulated Filter Bank

12.1.3 Original Formulation of the STFT

12.1.4 The Time Reference of the STFT

12.1.5 The STFT as a Heterodyne Filter Bank

12.1.6 Reconstruction Methods and Signal Models

12.1.7 Examples

12.1.8 Limitations of the STFT

12.2 Sinusoidal Models

12.2.1 Parametric Extension of the STFT

12.2.2 The Sinusoidal Signal Model

12.2.3 Sinusoidal Analysis and Synthesis

12.2.4 Signal Modeling by Matching Pursuit

12.2.5 Sinusoidal Matching Pursuits

12.2.6 Sinusoidal Analysis

12.2.7 Sinusoidal Synthesis

12.3 Speech Modification

12.3.1 Comparing the STFT and Sinusoidal Models

12.3.2 Linear Filtering

12.3.3 Enhancement

12.3.4 Time-Scale Modification

12.3.5 Pitch Modification

12.3.6 Cross-Synthesis and Other Modifications

12.3.7 Audio Coding with Decode-Side Modification

References

13 Adaptive Blind Multichannel Identification

13.1 Overview

13.2 Signal Model and Problem Formulation

13.3 Identifiability and Principle

13.4 Constrained Time-Domain Multichannel LMS and Newton Algorithms

13.4.1 Unit-Norm Constrained Multichannel LMS Algorithm

13.4.2 Unit-Norm Constrained Multichannel Newton Algorithm

13.5 Unconstrained Multichannel LMS Algorithm with Optimal Step-Size Control

13.6 Frequency-Domain Blind Multichannel Identification Algorithms

13.6.1 Frequency-Domain Multichannel LMS Algorithm

13.6.2 Frequency-Domain Normalized Multichannel LMS Algorithm

13.7 Adaptive Multichannel Exponentiated Gradient Algorithm

13.8 Summary

References

C Speech Coding

14 Principles of Speech Coding

14.1 The Objective of Speech Coding

14.2 Speech Coder Attributes

14.2.1 Rate

14.2.2 Quality

14.2.3 Robustness to Channel Imperfections

14.2.4 Delay

14.2.5 Computational and Memory Requirements

14.3 A Universal Coder for Speech

14.3.1 Speech Segment as Random Vector

14.3.2 Encoding Random Speech Vectors

14.3.3 A Model of Quantization

14.3.4 Coding Speech with a Model Family

14.4 Coding with Autoregressive Models

14.4.1 Spectral-Domain Index of Resolvability

14.4.2 A Criterion for Model Selection

14.4.3 Bit Allocation for the Model

14.4.4 Remarks on Practical Coding

14.5 Distortion Measures and Coding Architecture

14.5.1 Squared Error

14.5.2 Masking Models and Squared Error

14.5.3 Auditory Models and Squared Error

14.5.4 Distortion Measure and Coding Architecture

14.6 Summary

References

15 Voice over IP: Speech Transmission over Packet Networks

15.1 Voice Communication

15.1.1 Limitations of PSTN

15.1.2 The Promise of VoIP

15.2 Properties of the Network

15.2.1 Network Protocols

15.2.2 Network Characteristics

15.2.3 Typical Network Characteristics

15.2.4 Quality-of-Service Techniques

15.3 Outline of a VoIP System

15.3.1 Echo Cancelation

15.3.2 Speech Codec

15.3.3 Jitter Buffer

15.3.4 Packet Loss Recovery

15.3.5 Joint Design of Jitter Buffer and Packet Loss Concealment

15.3.6 Auxiliary Speech Processing Components

15.3.7 Measuring the Quality of a VoIP System

15.4 Robust Encoding

15.4.1 Forward Error Correction

15.4.2 Multiple Description Coding

15.5 Packet Loss Concealment

15.5.1 Nonparametric Concealment

15.5.2 Parametric Concealment

15.6 Conclusion

References

16 Low-Bit-Rate Speech Coding

16.1 Speech Coding

16.2 Fundamentals: Parametric Modeling of Speech Signals

16.2.1 Speech Production

16.2.2 Human Speech Perception

16.2.3 Vocoders

16.3 Flexible Parametric Models

16.3.1 Mixed Excitation Linear Prediction (MELP)

16.3.2 Sinusoidal Coding

16.3.3 Waveform Interpolation

16.3.4 Comparison and Contrast of Modeling Approaches

16.4 Efficient Quantization of Model Parameters

16.4.1 Vector Quantization

16.4.2 Exploiting Temporal Properties

16.4.3 LPC Filter Quantization

16.5 Low-Rate Speech Coding Standards

16.5.1 MIL-STD 3005

16.5.2 The NATO STANAG 4591

16.5.3 Satellite Communications

16.5.4 ITU 4 kb/s Standardization

16.6 Summary

References

17 Analysis-by-Synthesis Speech Coding

17.1 Overview

17.2 Basic Concepts of Analysis-by-Synthesis Coding

17.2.1 Definition of Analysis-by-Synthesis

17.2.2 From Conventional Predictive Waveform Coding to a Speech Synthesis Model

17.2.3 Basic Principle of Analysis by Synthesis

17.2.4 Generic Analysis-by-Synthesis Encoder Structure

17.2.5 Reasons for the Coding Efficiency of Analysis by Synthesis

17.3 Overview of Prominent Analysis-by-Synthesis Speech Coders

17.4 Multipulse Linear Predictive Coding (MPLPC)

17.5 Regular-Pulse Excitation with Long-Term Prediction (RPE-LTP)

17.6 The Original Code Excited Linear Prediction (CELP) Coder

17.7 US Federal Standard FS1016 CELP

17.8 Vector Sum Excited Linear Prediction (VSELP)

17.9 Low-Delay CELP (LD-CELP)

17.10 Pitch Synchronous Innovation CELP (PSI-CELP)

17.11 Algebraic CELP (ACELP)

17.11.1 ACELP Background

17.11.2 ACELP Efficient Search Methods

17.11.3 ACELP in Standards

17.12 Conjugate Structure CELP (CS-CELP) and CS-ACELP

17.13 Relaxed CELP (RCELP) - Generalized Analysis by Synthesis

17.13.1 Generalized Analysis by Synthesis Applied to the Pitch Parameters

17.13.2 RCELP in Standards

17.14 eX-CELP

17.14.1 eX-CELP in Standards

17.15 iLBC

17.16 TSNFC

17.16.1 Excitation VQ in TSNFC

17.16.2 TSNFC in Standards

17.17 Embedded CELP

17.18 Summary of Analysis-by-Synthesis Speech Coders

17.19 Conclusion

References

18 Perceptual Audio Coding of Speech Signals

18.1 History of Audio Coding

18.2 Fundamentals of Perceptual Audio Coding

18.2.1 General Background

18.2.2 Coder Structure

18.2.3 Perceptual Audio Coding Versus Speech Coding

18.3 Some Successful Standardized Audio Coders

18.3.1 MPEG-1

18.3.2 MPEG-2

18.3.3 MPEG-2 Advanced Audio Coding

18.3.4 MPEG-4 Advanced Audio Coding

18.3.5 Progress in Coding Performance

18.4 Perceptual Audio Coding for Real-Time Communication

18.4.1 Delay Sources in Perceptual Audio Coding

18.4.2 MPEG-4 Low-Delay AAC

18.4.3 ITU-T G.722.1-C

18.4.4 Ultra-Low-Delay Perceptual Audio Coding

18.5 Hybrid/Crossover Coders

18.5.1 MPEG-4 Scalable Speech/Audio Coding

18.5.2 ITU-T G.729.1

18.5.3 AMR-WB+

18.5.4 ARDOR

18.6 Summary

References

D Text-to-Speech Synthesis

19 Basic Principles of Speech Synthesis

19.1 The Basic Components of a TTS System

19.1.1 TTS Frontend

19.1.2 TTS Backend

19.2 Speech Representations and Signal Processing for Concatenative Synthesis

19.2.1 Time-Domain Pitch Synchronous Overlap Add (TD-PSOLA)

19.2.2 LPC-Based Synthesis

19.2.3 Sinusoidal Synthesis

19.3 Speech Signal Transformation Principles

19.3.1 Prosody Transformation Principles

19.3.2 Principal Methods for Changing Speaker Characteristics and Speaking Style

19.4 Speech Synthesis Evaluation

19.5 Conclusions

References

20 Rule-Based Speech Synthesis

20.1 Background

20.2 Terminal Analog

20.2.1 Formant Synthesizers

20.2.2 Higher-Level Parameters

20.2.3 Voice Source Models

20.3 Controlling the Synthesizer

20.3.1 Rule Compilers for Speech Synthesis

20.3.2 Data-Driven Parametric Synthesis

20.4 Special Applications of Rule-Based Parametric Synthesis

20.5 Concluding Remarks

References

21 Corpus-Based Speech Synthesis

21.1 Basics

21.2 Concatenative Synthesis with a Fixed Inventory

21.2.1 Diphone-Based Synthesis

21.2.2 Modifying Prosody

21.2.3 Smoothing Joints

21.2.4 Up from Diphones

21.3 Unit-Selection-Based Synthesis

21.3.1 Selecting Units

21.3.2 Target Cost

21.3.3 Concatenation Cost

21.3.4 Speech Corpus

21.3.5 Computational Cost

21.4 Statistical Parametric Synthesis

21.4.1 HMM-Based Synthesis Framework

21.4.2 The State of the Art and Perspectives

21.5 Conclusion

References

22 Linguistic Processing for Speech Synthesis

22.1 Why Linguistic Processing is Hard

22.2 Fundamentals: Writing Systems and the Graphical Representation of Language

22.3 Problems to be Solved and Methods to Solve Them

22.3.1 Text Preprocessing

22.3.2 Morphological Analysis and Word Pronunciation

22.3.3 Syntactic Analysis, Accenting, and Phrasing

22.3.4 Sense Disambiguation: Dealing with Ambiguity in Written Language

22.4 Architectures for Multilingual Linguistic Processing

22.5 Document-Level Processing

22.6 Future Prospects

References

23 Prosodic Processing

23.1 Overview

23.1.1 What Is Prosody?

23.1.2 Prosody in Human-Human Communication

23.2 Historical Overview

23.2.1 Rule-Based Approaches in Formant Synthesis

23.2.2 Statistical Approaches in Diphone Synthesis

23.2.3 Using as-is Prosody in Unit Selection Synthesis

23.3 Fundamental Challenges

23.3.1 Challenge to Unit Selection: Combinatorics of Language

23.3.2 Challenge to Target Prosody-Based Approaches: Multitude of Interrelated Acoustic Prosodic Features

23.4 A Survey of Current Approaches

23.4.1 Timing

23.4.2 Intonation

23.5 Future Approaches

23.5.1 Hybrid Approaches

23.6 Conclusions

References

24 Voice Transformation

24.1 Background

24.2 Source-Filter Theory and Harmonic Models

24.2.1 Harmonic Model

24.2.2 Analysis Based on the Harmonic Model

24.2.3 Synthesis Based on the Harmonic Model

24.3 Definitions

24.3.1 Source Modifications

24.3.2 Filter Modifications

24.3.3 Combining Source and Filter Modifications

24.4 Source Modifications

24.4.1 Time-Scale Modification

24.4.2 Pitch Modification

24.4.3 Joint Pitch and Time-Scale Modification

24.4.4 Energy Modification

24.4.5 Generating the Source Modified Speech Signal

24.5 Filter Modifications

24.5.1 The Gaussian Mixture Model

24.6 Conversion Functions

24.7 Voice Conversion

24.8 Quality Issues in Voice Transformations

24.9 Summary

References

25 Expressive/Affective Speech Synthesis

25.1 Overview

25.2 Characteristics of Affective Speech

25.2.1 Intentions and Emotions

25.2.2 Message and Filters

25.2.3 Coding and Expression

25.3 The Communicative Functionality of Speech

25.3.1 Multiple Layers of Prosodic Information

25.3.2 Text Data versus Speech Synthesis

25.4 Approaches to Synthesizing Expressive Speech

25.4.1 Emotion in Expressive Speech Synthesis

25.5 Modeling Human Speech

25.5.1 Discourse-Act Labeling

25.5.2 Expressive Speech and Emotion

25.5.3 Concatenative Synthesis Using Expressive Speech Samples

25.6 Conclusion

References

E Speech Recognition

26 Historical Perspective of the Field of ASR/NLU

26.1 ASR Methodologies

26.1.1 Issues in Speech Recognition

26.2 Important Milestones in Speech Recognition History

26.3 Generation 1 - The Early History of Speech Recognition

26.4 Generation 2 - The First Working Systems for Speech Recognition

26.5 Generation 3 - The Pattern Recognition Approach to Speech Recognition

26.5.1 The ARPA SUR Project

26.5.2 Research Outside of the ARPA Community

26.6 Generation 4 - The Era of the Statistical Model

26.6.1 DARPA Programs in Generation 4

26.7 Generation 5 - The Future

26.8 Summary

References

27 HMMs and Related Speech Recognition Technologies

27.1 Basic Framework

27.2 Architecture of an HMM-Based Recognizer

27.2.1 Feature Extraction

27.2.2 HMM Acoustic Models

27.2.3 N-Gram Language Models

27.2.4 Decoding and Lattice Generation

27.3 HMM-Based Acoustic Modeling

27.3.1 Discriminative Training

27.3.2 Covariance Modeling

27.4 Normalization

27.4.1 Mean and Variance Normalization

27.4.2 Gaussianization

27.4.3 Vocal-Tract-Length Normalization

27.5 Adaptation

27.5.1 Maximum A Posteriori (MAP) Adaptation

27.5.2 ML-Based Linear Transforms

27.5.3 Adaptive Training

27.6 Multipass Recognition Architectures

27.7 Conclusions

References

28 Speech Recognition with Weighted Finite-State Transducers

28.1 Definitions

28.2 Overview

28.2.1 Weighted Acceptors

28.2.2 Weighted Transducers

28.2.3 Composition

28.2.4 Determinization

28.2.5 Minimization

28.2.6 Speech Recognition Transducers

28.3 Algorithms

28.3.1 Preliminaries

28.3.2 Composition

28.3.3 Determinization

28.3.4 Weight Pushing

28.3.5 Minimization

28.4 Applications to Speech Recognition

28.4.1 Speech Recognition Transducers

28.4.2 Transducer Standardization

28.5 Conclusion

References

29 A Machine Learning Framework for Spoken-Dialog Classification

29.1 Motivation

29.2 Introduction to Kernel Methods

29.3 Rational Kernels

29.4 Algorithms

29.5 Experiments

29.6 Theoretical Results for Rational Kernels

29.7 Conclusion

References

30 Towards Superhuman Speech Recognition

30.1 Current Status

30.2 A Multidomain Conversational Test Set

30.3 Listening Experiments

30.3.1 Baseline Listening Tests

30.3.2 Listening Tests to Determine Knowledge Source Contributions

30.4 Recognition Experiments

30.4.1 Preliminary Recognition Results

30.4.2 Results on the Multidomain Test Set

30.4.3 System Redesign

30.4.4 Coda

30.5 Speculation

30.5.1 Proposed Human Listening Experiments

30.5.2 Promising Incremental Approaches

30.5.3 Promising Disruptive Approaches

References

31 Natural Language Understanding

31.1 Overview of NLU Applications

31.1.1 Context Dependence

31.1.2 Semantic Representation

31.2 Natural Language Parsing

31.2.1 Decision Tree Parsers

31.3 Practical Implementation

31.3.1 Classing

31.3.2 Labeling

31.4 Speech Mining

31.4.1 Word Tagging

31.5 Conclusion

References

32 Transcription and Distillation of Spontaneous Speech

32.1 Background

32.2 Overview of Research Activities on Spontaneous Speech

32.2.1 Classification of Spontaneous Speech

32.2.2 Major Projects and Corpora of Spontaneous Speech

32.2.3 Issues in Design of Spontaneous Speech Corpora

32.2.4 Corpus of Spontaneous Japanese (CSJ)

32.3 Analysis for Spontaneous Speech Recognition

32.3.1 Observation in Spectral Analysis

32.3.2 Analysis of Speaking Rate

32.3.3 Analysis of Factors Affecting ASR Accuracy

32.4 Approaches to Spontaneous Speech Recognition

32.4.1 Effect of Corpus Size

32.4.2 Acoustic Modeling

32.4.3 Models Considering Speaking Rate

32.4.4 Pronunciation Variation Modeling

32.4.5 Language Model

32.4.6 Adaptation of Acoustic Model

32.4.7 Adaptation of Language Model

32.5 Metadata and Structure Extraction of Spontaneous Speech

32.5.1 Sentence Boundary Detection

32.5.2 Disfluency Detection

32.5.3 Detection of Topic and Discourse Boundaries

32.6 Speech Summarization

32.6.1 Categories of Speech Summarization

32.6.2 Key Sentence Extraction

32.6.3 Summary Generation

32.7 Conclusions

References

33 Environmental Robustness

33.1 Noise Robust Speech Recognition

33.1.1 Standard Noise-Robust ASR Tasks

33.1.2 The Acoustic Mismatch Problem

33.1.3 Reducing Acoustic Mismatch

33.2 Model Retraining and Adaptation

33.2.1 Retraining on Corrupted Speech

33.2.2 Single-Utterance Retraining

33.2.3 Model Adaptation

33.3 Feature Transformation and Normalization

33.3.1 Feature Moment Normalization

33.3.2 Voice Activity Detection

33.3.3 Cepstral Time Smoothing

33.3.4 SPLICE - Normalization Learned from Stereo Data

33.4 A Model of the Environment

33.5 Structured Model Adaptation

33.5.1 Analysis of Noisy Speech Features

33.5.2 Log-Normal Parallel Model Combination

33.5.3 Vector Taylor-Series Model Adaptation

33.5.4 Comparison of VTS and Log-Normal PMC

33.5.5 Strategies for Highly Nonstationary Noises

33.6 Structured Feature Enhancement

33.6.1 Spectral Subtraction

33.6.2 Vector Taylor-Series Speech Enhancement

33.7 Unifying Model and Feature Techniques

33.7.1 Noise Adaptive Training

33.7.2 Uncertainty Decoding and Missing Feature Techniques

33.8 Conclusion

References

34 The Business of Speech Technologies

34.1 Introduction

34.1.1 Economic Value of Network-Based Speech Services

34.1.2 Economic Value of Device-Based Speech Applications

34.1.3 Technology Overview

34.2 Network-Based Speech Services

34.2.1 The Industry

34.2.2 The Service Paradigm and Historical View of Service Deployments

34.2.3 Paradigm Shift from Directed-Dialog- to Open-Dialog-Based Services

34.2.4 Technical Challenges that Lay Ahead for Network-Based Services

34.3 Device-Based Speech Applications

34.3.1 The Industry

34.3.2 The Device-Based Speech Application Marketplace

34.3.3 Technical Challenges that Enabled Mass Deployment

34.3.4 History of Device-Based ASR

34.3.5 Modern Use of ASR

34.3.6 Government Applications of Speech Recognition

34.4 Vision/Predictions of Future Services - Fueling the Trends

34.4.1 Multimodal-Based Speech Services

34.4.2 Increased Automation of Service Development Process

34.4.3 Complex Problem Solving

34.4.4 Speech Mining

34.4.5 Mobile Devices

34.5 Conclusion

References

35 Spoken Dialogue Systems

35.1 Technology Components and System Development

35.1.1 System Architecture

35.1.2 Spoken Input Processing

35.1.3 Spoken Output Processing

35.1.4 Dialogue Management

35.2 Development Issues

35.2.1 Data Collection

35.2.2 Evaluation

35.3 Historical Perspectives

35.3.1 Large-Scale Government Programs

35.3.2 Some Example Systems

35.4 New Directions

35.4.1 User Simulation

35.4.2 Machine Learning and Dialogue Management

35.4.3 Portability

35.4.4 Multimodal, Multidomain, and Multilingual Application Development

35.5 Concluding Remarks

References

F Speaker Recognition

36 Overview of Speaker Recognition

36.1 Speaker Recognition

36.1.1 Personal Identity Characteristics

36.1.2 Speaker Recognition Definitions

36.1.3 Bases for Speaker Recognition

36.1.4 Extracting Speaker Characteristics from the Speech Signal

36.1.5 Applications

36.2 Measuring Speaker Features

36.2.1 Acoustic Measurements

36.2.2 Linguistic Measurements

36.3 Constructing Speaker Models

36.3.1 Nonparametric Approaches

36.3.2 Parametric Approaches

36.4 Adaptation

36.5 Decision and Performance

36.5.1 Decision Rules

36.5.2 Threshold Setting and Score Normalization

36.5.3 Errors and DET Curves

36.6 Selected Applications for Automatic Speaker Recognition

36.6.1 Indexing Multispeaker Data

36.6.2 Forensics

36.6.3 Customization: SCANmail

36.7 Summary

References

37 Text-Dependent Speaker Recognition

37.1 Brief Overview

37.1.1 Features

37.1.2 Acoustic Modeling

37.1.3 Likelihood Ratio Score

37.1.4 Speaker Model Training

37.1.5 Score Normalization and Fusion

37.1.6 Speaker Model Adaptation

37.2 Text-Dependent Challenges

37.2.1 Technological Challenges

37.2.2 Commercial Deployment Challenges

37.3 Selected Results

37.3.1 Feature Extraction

37.3.2 Accuracy Dependence on Lexicon

37.3.3 Background Model Design

37.3.4 T-Norm in the Context of Text-Dependent Speaker Recognition

37.3.5 Adaptation of Speaker Models

37.3.6 Protection Against Recordings

37.3.7 Automatic Impostor Trials Generation

37.4 Concluding Remarks

References

38 Text-Independent Speaker Recognition

38.1 Introduction

38.2 Likelihood Ratio Detector

38.3 Features

38.3.1 Spectral Features

38.3.2 High-Level Features

38.4 Classifiers

38.4.1 Adapted Gaussian Mixture Models

38.4.2 Support Vector Machines

38.4.3 High-Level Feature Classifiers

38.4.4 System Fusion

38.5 Performance Assessment

38.5.1 Task and Corpus

38.5.2 Systems

38.5.3 Results

38.5.4 Computational Considerations

38.6 Summary

References

G Language Recognition

39 Principles of Spoken Language Recognition

39.1 Spoken Language

39.2 Language Recognition Principles

39.3 Phone Recognition Followed by Language Modeling (PRLM)

39.4 Vector-Space Characterization (VSC)

39.5 Spoken Language Verification

39.6 Discriminative Classifier Design

39.7 Summary

References

40 Spoken Language Characterization

40.1 Language versus Dialect

40.2 Spoken Language Collections

40.3 Spoken Language Characteristics

40.4 Human Language Identification

40.5 Text as a Source of Information on Spoken Languages

40.6 Summary

References

41 Automatic Language Recognition Via Spectral and Token Based Approaches

41.1 Automatic Language Recognition

41.2 Spectral-Based Methods

41.2.1 Shifted Delta Cepstral Features

41.2.2 Classifiers

41.3 Token-Based Methods

41.3.1 Tokens

41.3.2 Classifiers

41.4 System Fusion

41.4.1 Methods

41.4.2 Output Scores

41.5 Performance Assessment

41.5.1 Task and Corpus

41.5.2 Systems

41.5.3 Results

41.5.4 Computational Considerations

41.6 Summary

References

42 Vector-Based Spoken Language Classification

42.1 Vector Space Characterization

42.2 Unit Selection and Modeling

42.2.1 Augmented Phoneme Inventory (API)

42.2.2 Acoustic Segment Model (ASM)

42.2.3 Comparison of Unit Selection

42.3 Front-End: Voice Tokenization and Spoken Document Vectorization

42.4 Back-End: Vector-Based Classifier Design

42.4.1 Ensemble Classifier Design

42.4.2 Ensemble Decision Strategy

42.4.3 Generalized VSC-Based Classification

42.5 Language Classification Experiments and Discussion

42.5.1 Experimental Setup

42.5.2 Language Identification

42.5.3 Language Verification

42.5.4 Overall Performance Comparison

42.6 Summary

References

H Speech Enhancement

43 Fundamentals of Noise Reduction

43.1 Noise

43.2 Signal Model and Problem Formulation

43.3 Evaluation of Noise Reduction

43.3.1 Signal-to-Noise Ratio

43.3.2 Noise-Reduction Factor and Gain Function

43.3.3 Speech-Distortion Index and Attenuation Frequency Distortion

43.4 Noise Reduction via Filtering Techniques

43.4.1 Time-Domain Wiener Filter

43.4.2 A Suboptimal Filter

43.4.3 Subspace Method

43.4.4 Frequency-Domain Wiener Filter

43.4.5 Short-Time Parametric Wiener Filter

43.5 Noise Reduction via Spectral Restoration

43.5.1 MMSE Spectral Estimator

43.5.2 MMSE Spectral Amplitude and Phase Estimators

43.5.3 Maximum A Posteriori (MAP) Spectral Estimator

43.5.4 Maximum-Likelihood Spectral Amplitude Estimator

43.5.5 Maximum-Likelihood Spectral Power Estimator

43.5.6 MAP Spectral Amplitude Estimator

43.6 Speech-Model-Based Noise Reduction

43.6.1 Harmonic-Model-Based Noise Reduction

43.6.2 Linear-Prediction-Based Noise Reduction

43.6.3 Hidden-Markov-Model-Based Noise Reduction

43.7 Summary

References

44 Spectral Enhancement Methods

44.1 Spectral Enhancement

44.2 Problem Formulation

44.3 Statistical Models

44.4 Signal Estimation

44.4.1 MMSE Spectral Estimation

44.4.2 MMSE Log-Spectral Amplitude Estimation

44.5 Signal Presence Probability Estimation

44.6 A Priori SNR Estimation

44.6.1 Decision-Directed Estimation

44.6.2 Causal Recursive Estimation

44.6.3 Relation Between Causal Recursive Estimation and Decision-Directed Estimation

44.6.4 Noncausal Recursive Estimation

44.7 Noise Spectrum Estimation

44.7.1 Time-Varying Recursive Averaging

44.7.2 Minima-Controlled Estimation

44.8 Summary of a Spectral Enhancement Algorithm

44.9 Selection of Spectral Enhancement Algorithms

44.9.1 Choice of a Statistical Model and Fidelity Criterion

44.9.2 Choice of an A Priori SNR Estimator

44.9.3 Choice of a Noise Estimator

44.10 Conclusions

References

45 Adaptive Echo Cancelation for Voice Signals

45.1 Network Echoes

45.1.1 Network Echo Canceler

45.1.2 Adaptation Algorithms

45.2 Single-Channel Acoustic Echo Cancelation

45.2.1 The Subband Canceler

45.2.2 RLS for Subband Echo Cancelers

45.2.3 The Delayless Subband Structure

45.2.4 Frequency-Domain Adaptation

45.2.5 The Two-Echo-Path Model

45.2.6 Variable-Step Algorithm for Acoustic Echo Cancelers

45.2.7 Cancelers for Nonlinear Echo Paths

45.3 Multichannel Acoustic Echo Cancelation

45.3.1 Nonuniqueness of the Misalignment Vector

45.3.2 Solutions for the Nonuniqueness Problem

45.4 Summary

References

46 Dereverberation

46.1 Background and Overview

46.1.1 Why Speech Dereverberation?

46.1.2 Room Acoustics and Reverberation Evaluation

46.1.3 Classification of Speech Dereverberation Methods

46.2 Signal Model and Problem Formulation

46.3 Source Model-Based Speech Dereverberation

46.3.1 Speech Models

46.3.2 LP Residual Enhancement Methods

46.3.3 Harmonic Filtering

46.3.4 Speech Dereverberation Using Probabilistic Models

46.4 Separation of Speech and Reverberation via Homomorphic Transformation

46.4.1 Cepstral Liftering

46.4.2 Cepstral Mean Subtraction and High-Pass Filtering of Cepstral Frame Coefficients

46.5 Channel Inversion and Equalization

46.5.1 Single-Channel Systems

46.5.2 Multichannel Systems

46.6 Summary

References

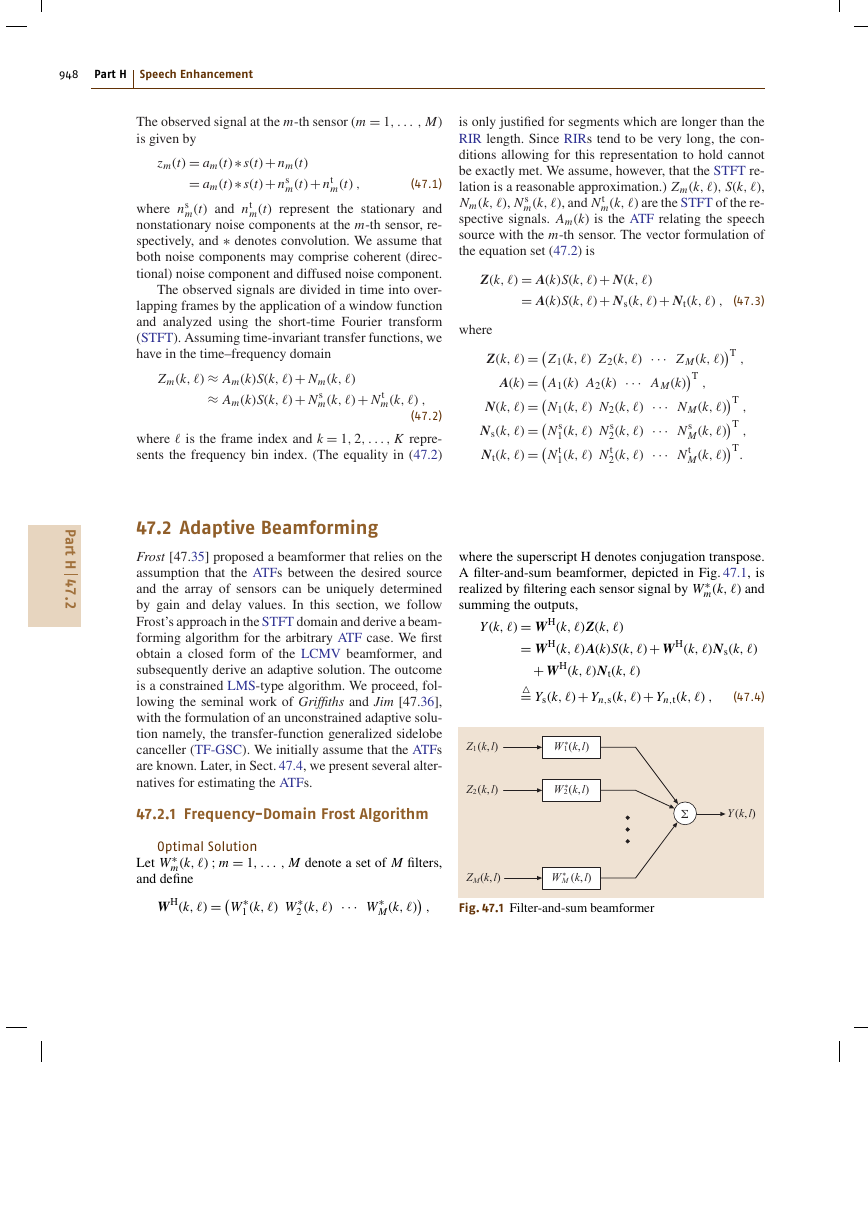

47 Adaptive Beamforming and Postfiltering

47.1 Problem Formulation

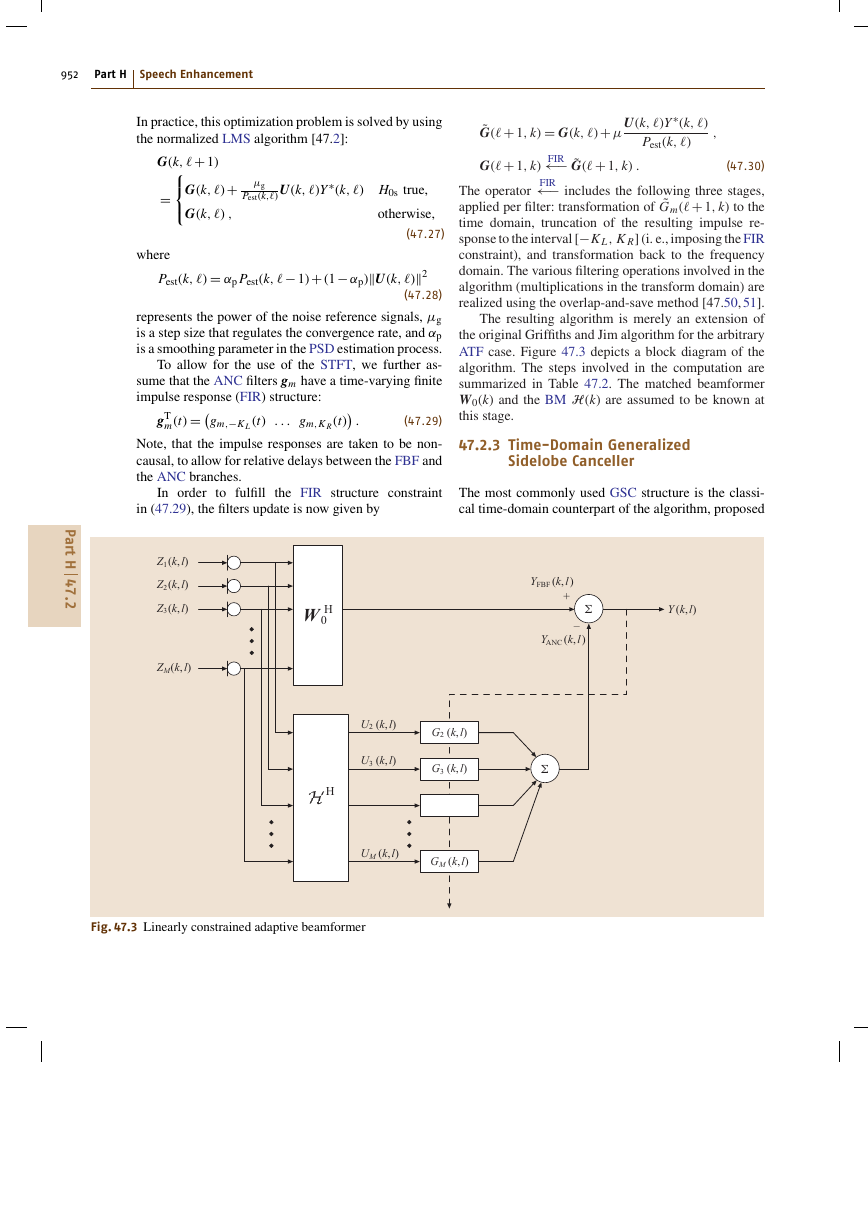

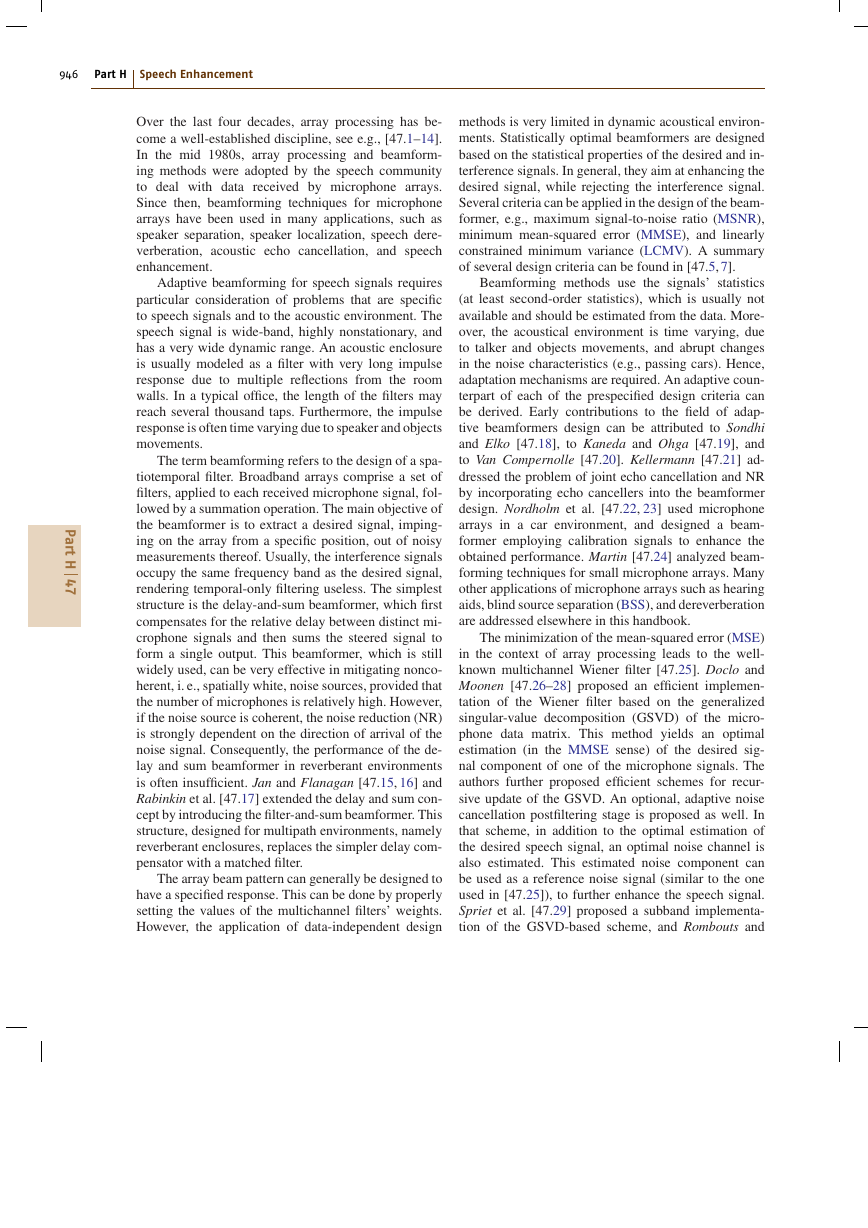

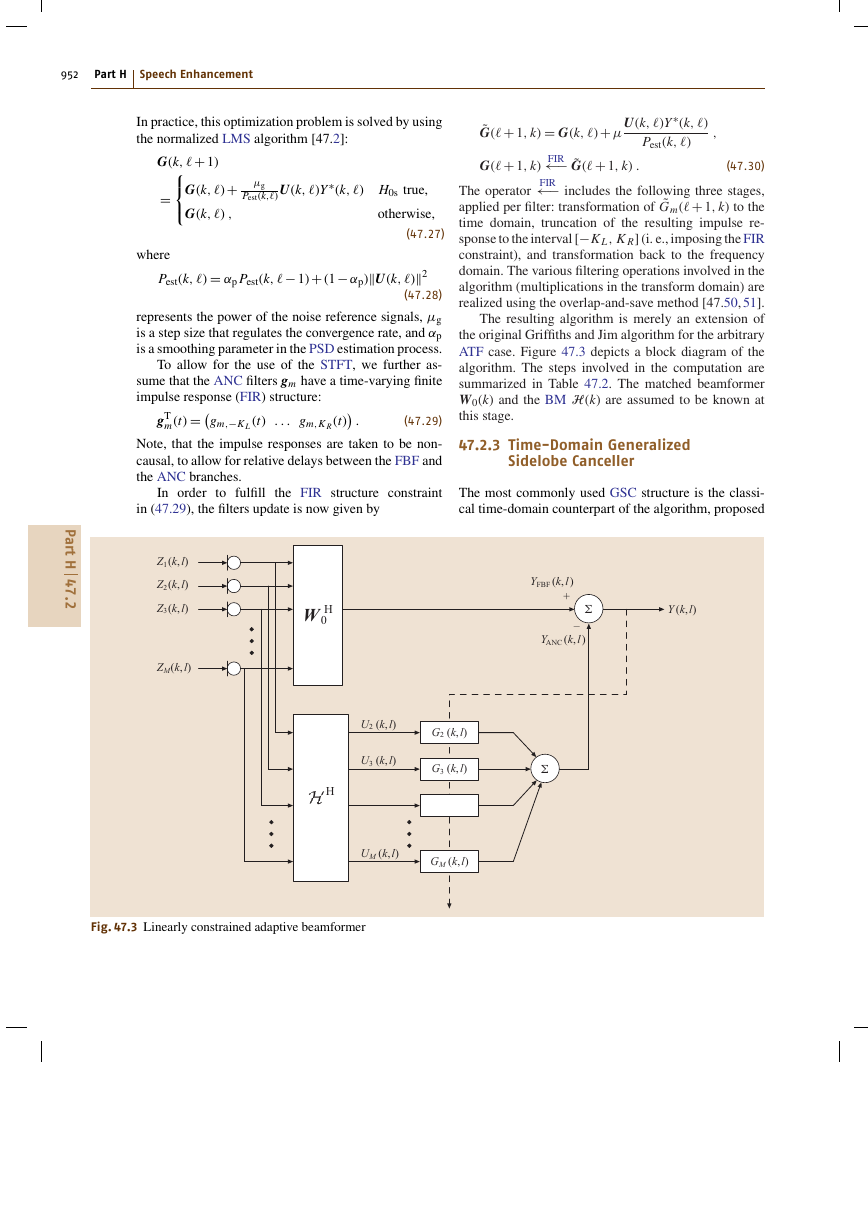

47.2 Adaptive Beamforming

47.2.1 Frequency-Domain Frost Algorithm

47.2.2 Frequency-Domain Generalized Sidelobe Canceller

47.2.3 Time-Domain Generalized Sidelobe Canceller

47.3 Fixed Beamformer and Blocking Matrix

47.3.1 Using Acoustical Transfer Functions

47.3.2 Using Delay-Only Filters

47.3.3 Using Relative Transfer Functions

47.4 Identification of the Acoustical Transfer Function

47.4.1 Signal Subspace Method

47.4.2 Time Difference of Arrival

47.4.3 Relative Transfer Function Estimation

47.5 Robustness and Distortion Weighting

47.6 Multichannel Postfiltering

47.6.1 MMSE Postfiltering

47.6.2 Log-Spectral Amplitude Postfiltering

47.7 Performance Analysis

47.7.1 The Power Spectral Density of the Beamformer Output

47.7.2 Signal Distortion

47.7.3 Stationary Noise Reduction

47.8 Experimental Results

47.9 Summary

47.A Appendix: Derivation of the Expected Noise Reduction for a Coherent Noise Field

47.B Appendix: Equivalence Between Maximum SNR and LCMV Beamformers

References

48 Feedback Control in Hearing Aids

48.1 Problem Statement

48.1.1 Acoustic Feedback in Hearing Aids

48.1.2 Feedforward Suppression Versus Feedback Cancellation

48.1.3 Performance of a Feedback Canceller

48.2 Standard Adaptive Feedback Canceller

48.2.1 Adaptation of the CAF

48.2.2 Bias of the CAF

48.2.3 Reducing the Bias of the CAF

48.3 Feedback Cancellation Based on Prior Knowledge of the Acoustic Feedback Path

48.3.1 Constrained Adaptation (C-CAF)

48.3.2 Bandlimited Adaptation (BL-CAF)

48.4 Feedback Cancellation Based on Closed-Loop System Identification

48.4.1 Closed-Loop System Setup

48.4.2 Direct Method

48.4.3 Desired Signal Model

48.4.4 Indirect and Joint Input-Output Method

48.5 Comparison

48.5.1 Steady-State Performance

48.5.2 Tracking Performance

48.5.3 Measurement of the Actual Maximum Stable Gain

48.6 Conclusions

References

49 Active Noise Control

49.1 Broadband Feedforward Active Noise Control

49.1.1 Filtered-X LMS Algorithm

49.1.2 Analysis of the FXLMS Algorithm

49.1.3 Leaky FXLMS Algorithm

49.1.4 Feedback Effects and Solutions

49.2 Narrowband Feedforward Active Noise Control

49.2.1 Introduction

49.2.2 Waveform Synthesis Method

49.2.3 Adaptive Notch Filters

49.2.4 Multiple-Frequency ANC

49.2.5 Active Noise Equalization

49.3 Feedback Active Noise Control

49.4 Multichannel ANC

49.4.1 Principles

49.4.2 Multichannel FXLMS Algorithms

49.4.3 Frequency-Domain Convergence Analysis

49.4.4 Multichannel IIR Algorithm

49.4.5 Multichannel Adaptive Feedback ANC Systems

49.5 Summary

References

I Multichannel Speech Processing

50 Microphone Arrays

50.1 Microphone Array Beamforming

50.1.1 Delay-and-Sum Beamforming

50.1.2 Filter-and-Sum Beamforming

50.1.3 Arrays with Directional Elements

50.2 Constant-Beamwidth Microphone Array System

50.3 Constrained Optimization of the Directional Gain

50.4 Differential Microphone Arrays

50.5 Eigenbeamforming Arrays

50.5.1 Spherical Array

50.5.2 Eigenbeamformer

50.5.3 Modal Beamformer

50.6 Adaptive Array Systems

50.6.1 Constrained Broadband Arrays

50.7 Conclusions

References

51 Time Delay Estimation and Source Localization

51.1 Technology Taxonomy

51.2 Time Delay Estimation

51.2.1 Problem Formulation and Signal Models

51.2.2 The Family of the Generalized Cross-Correlation Methods

51.2.3 Adaptive Eigenvalue Decomposition Algorithm

51.2.4 Adaptive Blind Multichannel Identification Based Methods

51.2.5 Multichannel Spatial Prediction and Interpolation Methods

51.2.6 Multichannel Cross-Correlation Coefficient Algorithm

51.2.7 Minimum-Entropy Method

51.3 Source Localization

51.3.1 Problem Formulation

51.3.2 Measurement Model and Cramér-Rao Lower Bound

51.3.3 Maximum-Likelihood Estimator

51.3.4 Least-Squares Estimators

51.3.5 Least-Squares Error Criteria

51.3.6 Spherical Intersection (SX) Estimator

51.3.7 Spherical Interpolation (SI) Estimator

51.3.8 Linear-Correction Least-Squares Estimator

51.4 Summary

References

52 Convolutive Blind Source Separation Methods

52.1 The Mixing Model

52.1.1 Special Cases

52.1.2 Convolutive Model in the Frequency Domain

52.1.3 Block-Based Model

52.2 The Separation Model

52.2.1 Feedforward Structure

52.2.2 Relation Between Source and Separated Signals

52.2.3 Feedback Structure

52.2.4 Example: The TITO System

52.3 Identification

52.4 Separation Principle

52.4.1 Higher-Order Statistics

52.4.2 Second-Order Statistics

52.4.3 Sparseness in the Time/Frequency Domain

52.4.4 Priors from Auditory Scene Analysis and Psychoacoustics

52.5 Time Versus Frequency Domain

52.5.1 Frequency Permutations

52.5.2 Time-Frequency Algorithms

52.5.3 Circularity Problem

52.5.4 Subband Filtering

52.6 The Permutation Ambiguity

52.6.1 Consistency of the Filter Coefficients

52.6.2 Consistency of the Spectrum of the Recovered Signals

52.6.3 Global Permutations

52.7 Results

52.8 Conclusion

References

53 Sound Field Reproduction

53.1 Sound Field Synthesis

53.2 Mathematical Representation of Sound Fields

53.2.1 Coordinate Systems

53.2.2 Wave Equation

53.2.3 Plane Waves

53.2.4 General Wave Fields in Two Dimensions

53.2.5 Spherical Waves

53.2.6 The Kirchhoff-Helmholtz Integral

53.3 Stereophony

53.3.1 Sine Law

53.3.2 Tangent Law

53.3.3 Application of Amplitude Panning

53.3.4 The Sweet Spot

53.4 Vector-Based Amplitude Panning

53.4.1 Two-Dimensional Vector-Based Amplitude Panning

53.4.2 Three-Dimensional Vector-Based Amplitude Panning

53.4.3 Perception of Vector-Based Amplitude Panning

53.5 Ambisonics

53.5.1 Two-Dimensional Ambisonics

53.5.2 Three-Dimensional Ambisonics

53.5.3 Extensions of Ambisonics

53.6 Wave Field Synthesis

53.6.1 Description of Acoustical Scenes by the Kirchhoff-Helmholtz Integral

53.6.2 Monopole and Dipole Sources

53.6.3 Reduction to Two Spatial Dimensions

53.6.4 Spatial Sampling

53.6.5 Determination of the Loudspeaker Driving Signals

References

Acknowledgements

About the Authors

Detailed Contents

Subject Index
