Machine Learning 3: 261-283, 1989

© 1989 Kluwer Academic Publishers - Manufactured in The Netherlands

The CN2 Induction Algorithm

PETER CLARK

TIM NIBLETT

The Turing Institute, 36 North Hanover Street, Glasgow, G1 2AD, U.K.

(PETE@TURING.AC.UK)

(TIM@TURING.AC.UK)

(Received: June 25, 1987)

(Revised: October 25, 1988)

Keywords: Concept learning, rule induction, noise, comprehensibility

Abstract. Systems for inducing concept descriptions from examples are valuable

tools for assisting in the task of knowledge acquisition for expert systems. This

paper presents a description and empirical evaluation of a new induction system,

CN2, designed for the efficient induction of simple, comprehensible production rules

in domains where problems of poor description language and/or noise may be present.

Implementations of the CN2, ID3, and AQ algorithms are compared on three medical

classification tasks.

1. Introduction

In the task of constructing expert systems, methods for inducing concept de-

scriptions from examples have proved useful in easing the bottleneck of knowl-

edge acquisition (Mowforth, 1986). Two families of systems, based on the ID3

(Quinlan, 1983) and AQ (Michalski, 1969) algorithms, have been especially

successful. These basic algorithms assume no noise in the domain, searching

for a concept description that classifies training data perfectly. However, ap-

plication to real-world domains requires methods for handling noisy data. In

particular, one needs mechanisms that do not overfit the induced concept de-

scription to the data, and this requires relaxing the constraint that the induced

description must classify the training data perfectly.

Fortunately, the ID3 algorithm lends itself to such modification by the na-

ture of its general-to-specific search. Tree-pruning techniques (e.g., Quinlan,

1987a; Niblett, 1987), used for example in C4 (Quinlan, Compton, Horn, &

Lazarus, 1987) and ASSISTANT (Kononenko, Bratko, & Roskar, 1984), have

proved effective against overfitting. However, the AQ algorithm's dependence

on specific training examples during search makes it less easy to modify. Ex-

isting implementations, such as AQll (Michalski & Larson, 1983) and AQ15

(Michalski, Mozetic, Hong, & Lavrac, 1986), leave the basic AQ algorithm

intact and handle noise with pre-processing and post-processing techniques.

Our objective in designing CN2 was to modify the AQ algorithm itself in

ways that removed this dependence on specific examples and increased the

�

262

P. CLARK AND T. NIBLETT

space of rules searched. This lets one apply statistical techniques, analogous

to those used for tree pruning, in the generation of if-then rules, leading to a

simpler induction algorithm.

One can identify several requirements that learning systems should meet if

they are to prove useful in a variety of real-world situations:

• Accurate classification. The induced rules should be able to classify new

examples accurately, even in the presence of noise.

• Simple rules. For the sake of comprehensibility, the induced rules should

be as short as possible. However, when noise is present, overfitting can

lead to long rules. Thus, to induce short rules, one must usually relax

the requirement that the induced rules be consistent with all the training

data. The choice of how much to relax this requirement involves a trade-off

between accuracy and simplicity (Iba, Wogulis, & Langley, 1988).

• Efficient

rule generation. If one expects to use large example sets, it is

important that the algorithm scales up to complex situations. In practice,

the time taken for rule generation should be linear in the size of the

example set.

With these requirements in mind, this paper presents a description and empir-

ical evaluation of CN2, a new induction algorithm. This system combines the

efficiency and ability to cope with noisy data of ID3 with the if-then rule form

and flexible search strategy of the AQ family. The representation for rules

output by CN2 is an ordered set of if-then rules, also known as a decision

list (Rivest, 1987). CN2 uses a heuristic function to terminate search during

rule construction, based on an estimate of the noise present in the data. This

results in rules that may not classify all the training examples correctly, but

that perform well on new data.

In the following section we describe CN2 and three other systems used for

our comparative study. In Section 3 we consider the time complexity of the

various algorithms and in Section 4 we compare their performance on three

medical diagnosis tasks. We also compare the performance of ASSISTANT and

CN2 on two synthetic tasks. In Section 5 we discuss the significance of our

results, and we follow this with some suggestions for future work in Section 6.

2. CN2 and related algorithms

CN2 incorporates ideas from both Michalski's (1969) AQ and Quinlan's

(1983) ID3 algorithms. Thus we begin by describing Kononenko et al.'s (1984)

ASSISTANT, a variant of ID3, and AQR, the authors' reconstruction of Michal-

ski's method. After this, we present CN2 and discuss its relationship to these

systems. We also describe a simple Bayesian classifier, which provides a refer-

ence for the performance of the other algorithms. We characterize each system

along three dimensions:

• the representation language for the induced knowledge;

• the performance engine for executing the rules; and

• the learning algorithm and its associated search heuristics.

�

THE CN2 ALGORITHM

263

In all of our experiments, the example description language consisted of at-

tributes, attribute values, and user-specified classes. This language was the

same for each algorithm.

2.1 ASSISTANT

The ASSISTANT algorithm (Kononenko et al., 1984) is a descendant of Quin-

lan's ID3 (1983), and incorporates a tree-pruning mechanism for handling

noisy data.

2.1.1 Concept description and interpretation in ASSISTANT

ASSISTANT represents acquired knowledge in the form of decision trees. An

internal node of a tree specifies a test of an attribute, with each outgoing

branch corresponding to a possible result of this test. Leaf nodes represent the

classification to be assigned to an example.

To classify a new example, a path from the root of the decision tree to a

leaf node is traced. At each internal node reached, one follows the branch

corresponding to the value of the attribute tested at that node. The class at

the leaf node represents the class prediction for that example.

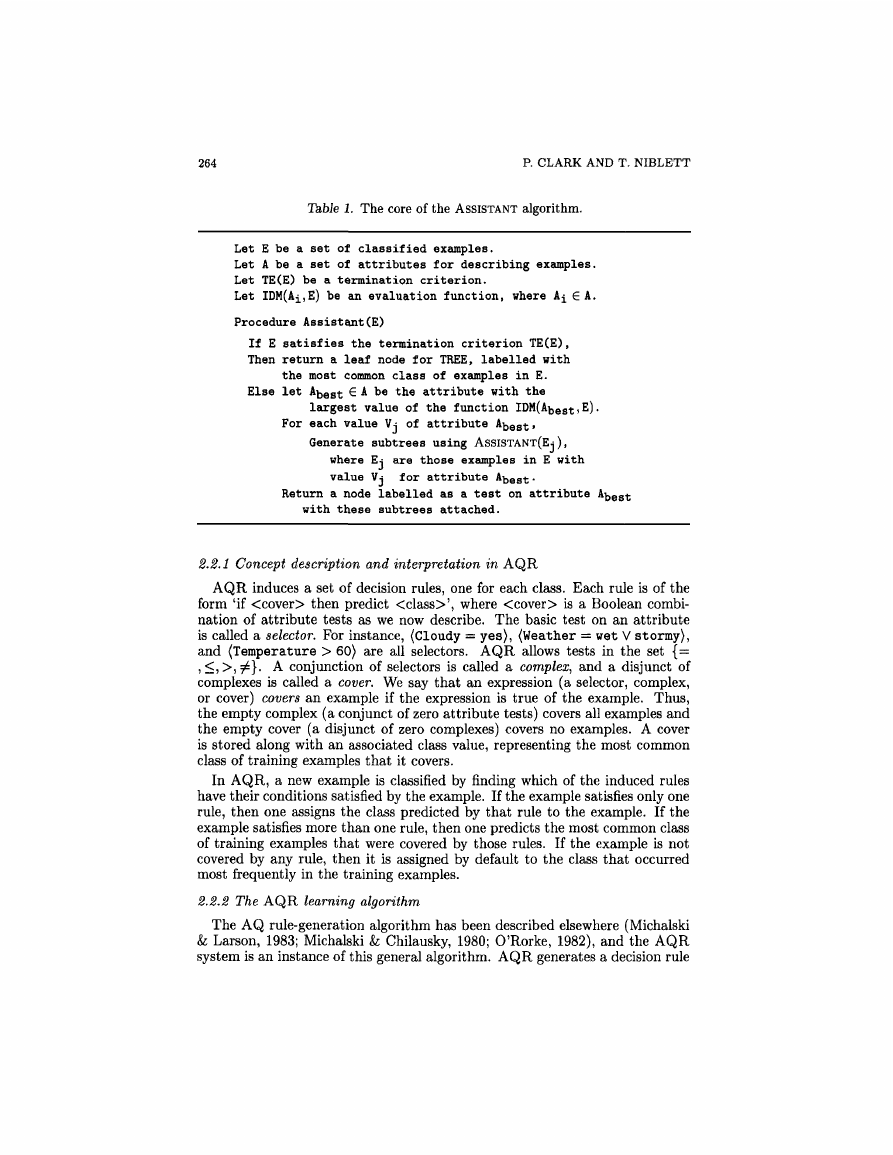

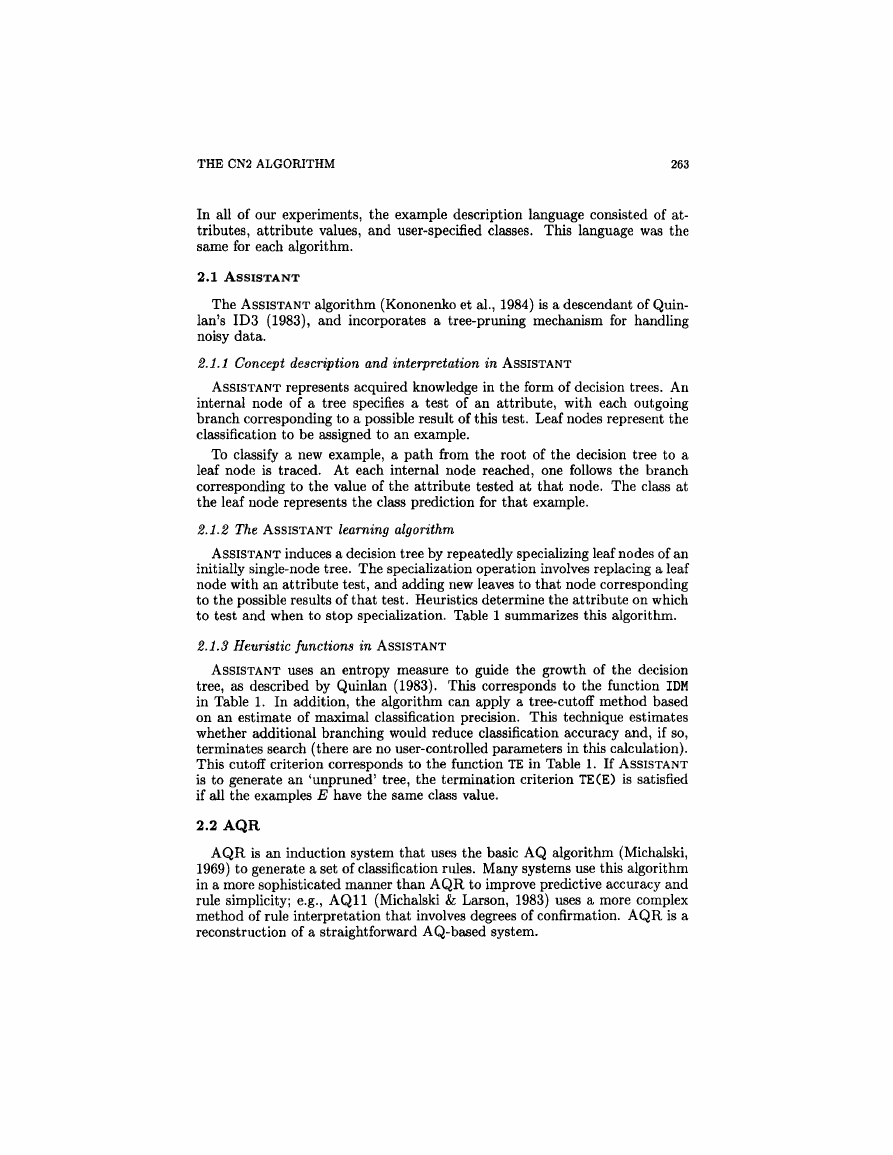

2.1.2 The ASSISTANT learning algorithm

ASSISTANT induces a decision tree by repeatedly specializing leaf nodes of an

initially single-node tree. The specialization operation involves replacing a leaf

node with an attribute test, and adding new leaves to that node corresponding

to the possible results of that test. Heuristics determine the attribute on which

to test and when to stop specialization. Table 1 summarizes this algorithm.

2.1.3 Heuristic functions in ASSISTANT

ASSISTANT uses an entropy measure to guide the growth of the decision

tree, as described by Quinlan (1983). This corresponds to the function IDM

in Table 1. In addition, the algorithm can apply a tree-cutoff method based

on an estimate of maximal classification precision. This technique estimates

whether additional branching would reduce classification accuracy and, if so,

terminates search (there are no user-controlled parameters in this calculation).

This cutoff criterion corresponds to the function TE in Table 1. If ASSISTANT

is to generate an 'unpruned' tree, the termination criterion TE(E) is satisfied

if all the examples E have the same class value,

2.2 AQR

AQR is an induction system that uses the basic AQ algorithm (Michalski,

1969) to generate a set of classification rules. Many systems use this algorithm

in a more sophisticated manner than AQR to improve predictive accuracy and

rule simplicity; e.g., AQ11 (Michalski & Larson, 1983) uses a more complex

method of rule interpretation that involves degrees of confirmation. AQR is a

reconstruction of a straightforward AQ-based system.

�

264

P. CLARK AND T. NIBLETT

Table 1. The core of the ASSISTANT algorithm.

Let E be a set of classified examples.

Let A be a set of attributes for describing examples.

Let TE(E) be a termination criterion.

Let IDM(Ai,E) be an evaluation function, where Ai 6 A.

Procedure Assistant (E)

If E satisfies the termination criterion TE(E) ,

Then return a leaf node for TREE, labelled with

the most common class of examples in E.

Else let Abest € A be the attribute with the

largest value of the function IDM(Abest,E).

For each value Vj of attribute Abest,

Generate subtrees using ASSISTANT(Ej),

where Ej are those examples in E with

value Vj for attribute Abest.

Return a node labelled as a test on attribute Abest

with these subtrees attached.

2.2.1 Concept description and interpretation in AQR

AQR induces a set of decision rules, one for each class. Each rule is of the

form 'if then predict ', where is a Boolean combi-

nation of attribute tests as we now describe. The basic test on an attribute

is called a selector. For instance, (Cloudy = yes), (Weather = wet V stormy),

and (Temperature > 60} are all selectors. AQR allows tests in the set {=

,<,>,T^}. A conjunction of selectors is called a complex, and a disjunct of

complexes is called a cover. We say that an expression (a selector, complex,

or cover) covers an example if the expression is true of the example. Thus,

the empty complex (a conjunct of zero attribute tests) covers all examples and

the empty cover (a disjunct of zero complexes) covers no examples. A cover

is stored along with an associated class value, representing the most common

class of training examples that it covers.

In AQR, a new example is classified by finding which of the induced rules

have their conditions satisfied by the example. If the example satisfies only one

rule, then one assigns the class predicted by that rule to the example. If the

example satisfies more than one rule, then one predicts the most common class

of training examples that were covered by those rules. If the example is not

covered by any rule, then it is assigned by default to the class that occurred

most frequently in the training examples.

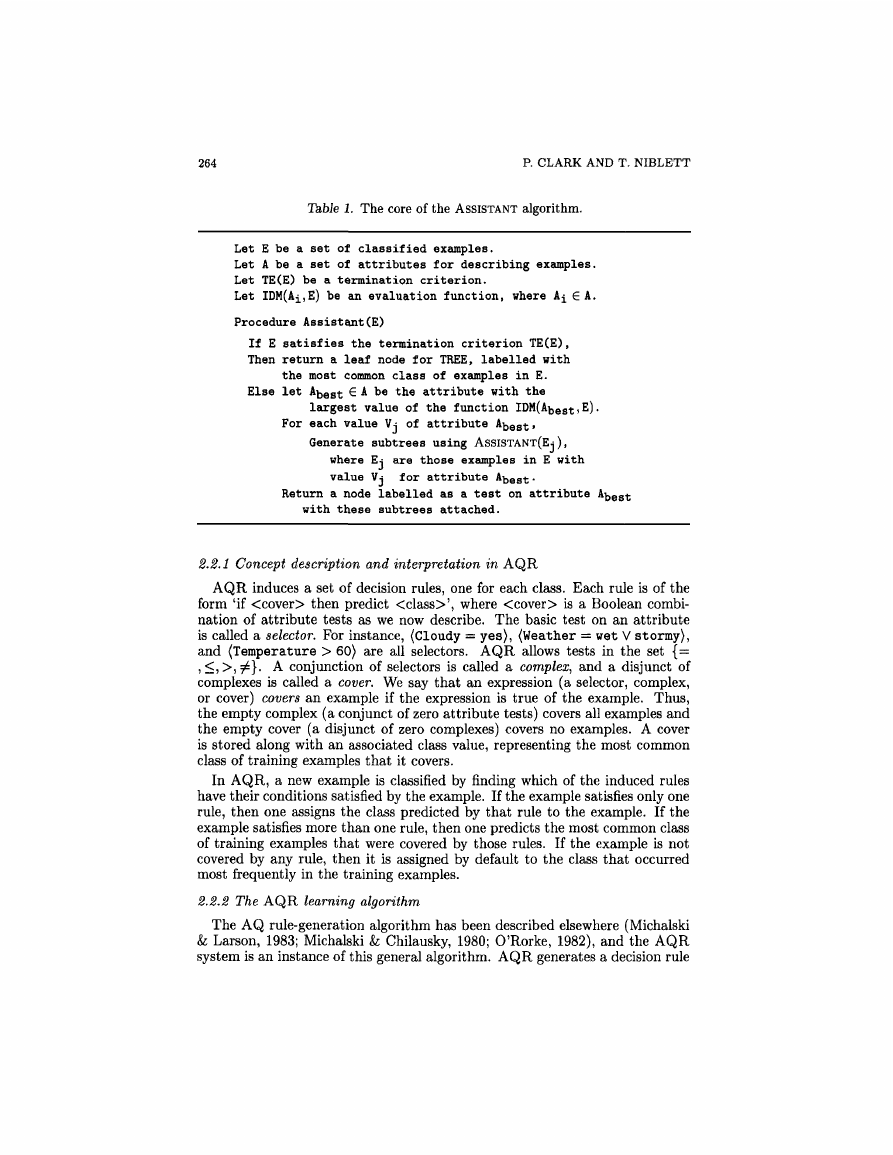

2.2.2 The AQR learning algorithm

The AQ rule-generation algorithm has been described elsewhere (Michalski

& Larson, 1983; Michalski & Chilausky, 1980; O'Rorke, 1982), and the AQR

system is an instance of this general algorithm. AQR generates a decision rule

�

THE CN2 ALGORITHM

265

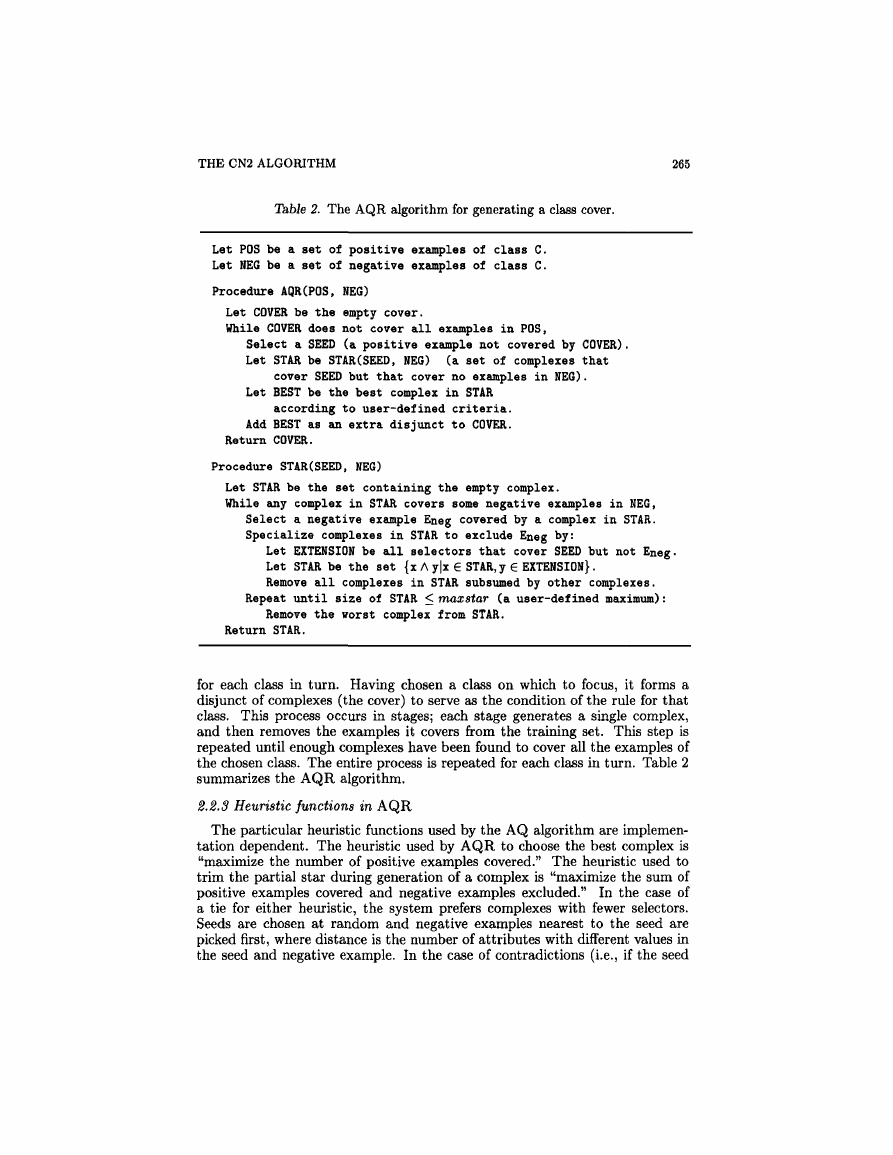

Table 2. The AQR algorithm for generating a class cover.

Let POS be a set of positive examples of class C.

Let NEG be a set of negative examples of class C.

Procedure AQR(POS, NEG)

Let COVER be the empty cover.

While COVER does not cover all examples in POS,

Select a SEED (a positive example not covered by COVER) .

Let STAR be STAR (SEED, NEG) (a set of complexes that

cover SEED but that cover no examples in NEG).

Let BEST be the best complex in STAR

according to user-defined criteria.

Add BEST as an extra disjunct to COVER.

Return COVER.

Procedure STAR(SEED, NEG)

Let STAR be the set containing the empty complex.

While any complex in STAR covers some negative examples in NEG,

Select a negative example Eneg covered by a complex in STAR.

Specialize complexes in STAR to exclude Eneg by:

Let EXTENSION be all selectors that cover SEED but not Eneg.

Let STAR be the set {x Ay|x € STAR,y 6 EXTENSION}.

Remove all complexes in STAR subsumed by other complexes.

Repeat until size of STAR < max star (a user-defined maximum):

Remove the Worst complex from STAR.

Return STAR.

for each class in turn. Having chosen a class on which to focus, it forms a

disjunct of complexes (the cover) to serve as the condition of the rule for that

class. This process occurs in stages; each stage generates a single complex,

and then removes the examples it covers from the training set. This step is

repeated until enough complexes have been found to cover all the examples of

the chosen class. The entire process is repeated for each class in turn. Table 2

summarizes the AQR algorithm.

2.2.3 Heuristic functions in AQR

The particular heuristic functions used by the AQ algorithm are implemen-

tation dependent. The heuristic used by AQR to choose the best complex is

"maximize the number of positive examples covered." The heuristic used to

trim the partial star during generation of a complex is "maximize the sum of

positive examples covered and negative examples excluded." In the case of

a tie for either heuristic, the system prefers complexes with fewer selectors.

Seeds are chosen at random and negative examples nearest to the seed are

picked first, where distance is the number of attributes with different values in

the seed and negative example. In the case of contradictions (i.e., if the seed

�

266

P. CLARK AND T. NIBLETT

and negative example have identical attribute values) the negative example is

ignored and a different one is chosen, since the complex cannot be specialized

to exclude it but still include the seed.

2.3 The CN2 algorithm

Now that we have reviewed ASSISTANT and AQR, we can turn to CN2, a

new algorithm that combines aspects of both methods. We begin by describing

how the general approach arises naturally from consideration of the decision-

tree and AQ algorithms and then consider its details.

2.3.1. Relation to ID3 and AQ

ID3 can be easily adapted to handle noisy data by virtue of its top-down

approach to tree generation. During induction, all possible attribute tests are

considered when 'growing' a leaf node in the tree, and entropy is used to select

the best one to place at that node. Overfitting of decision trees can thus be

avoided by halting tree growth when no more significant information can be

gained. We wish to apply a similar method to the induction of if-then rules.

The AQ algorithm, when generating a complex, also performs a general-

to-specific search for the best complex. However, the method only considers

specializations that exclude some particular covered negative example from

the complex while ensuring some particular 'seed' positive example remains

covered, iterating until all negative examples are excluded. As a result, AQ

searches only the space of complexes that are completely consistent with the

training data. The basic algorithm employs a beam search, which can be

viewed as several hill-climbing searches in parallel.

For the CN2 algorithm, we have retained the beam search method of the

AQ algorithm but removed its dependence on specific examples during search

and extended its search space to include rules that do not perform perfectly

on the training data. This is achieved by broadening the specialization process

to examine all specializations of a complex, in much the same way that ID3

considers all attribute tests when growing a node in the tree. Indeed, with a

beam width of one the CN2 algorithm behaves equivalently to ID3 growing a

single tree branch. This top-down search for complexes lets one apply a cutoff

method similar to decision-tree pruning to halt specialization when no further

specializations are statistically significant.

Finally, we note that CN2 produces an ordered list of if-then rules, rather

than an unordered set like that generated by AQ-based systems. Both repre-

sentations have their respective advantages and disadvantages for comprehen-

sibility. Order-independent rules require some additional mechanism to resolve

any rule conflicts that may occur, thus detracting from a strict logical inter-

pretation of the rules. Ordered rules also sacrifice in comprehensibility, in that

the interpretation of a single rule is dependent on the other rules that precede

it in the list.1

10ne can make CN2 produce unordered if-then rules by appropriately changing the eval-

uation function; e.g., one can use the same evaluation function as AQR, then generate a rule

set for each class in turn.

�

THE CN2 ALGORITHM

267

2.3.2 Concept description and interpretation in CN2

Rules induced by CN2 each have the form 'if then predict

', where has the same definition as for AQR, namely a

conjunction of attribute tests. This ordered rule representation is a version of

what Rivest (1987) has termed decision lists. The last rule in CN2's list is a

'default rule', which simply predicts the most commonly occurring class in the

training data for all new examples.

To use the induced rules to classify new examples, CN2 tries each rule in

order until one is found whose conditions are satisfied by the example being

classified. The class prediction of this rule is then assigned as the class of the

example. Thus, the ordering of the rules is important. If no induced rules

are satisfied, the final default rule assigns the most common class to the new

example.

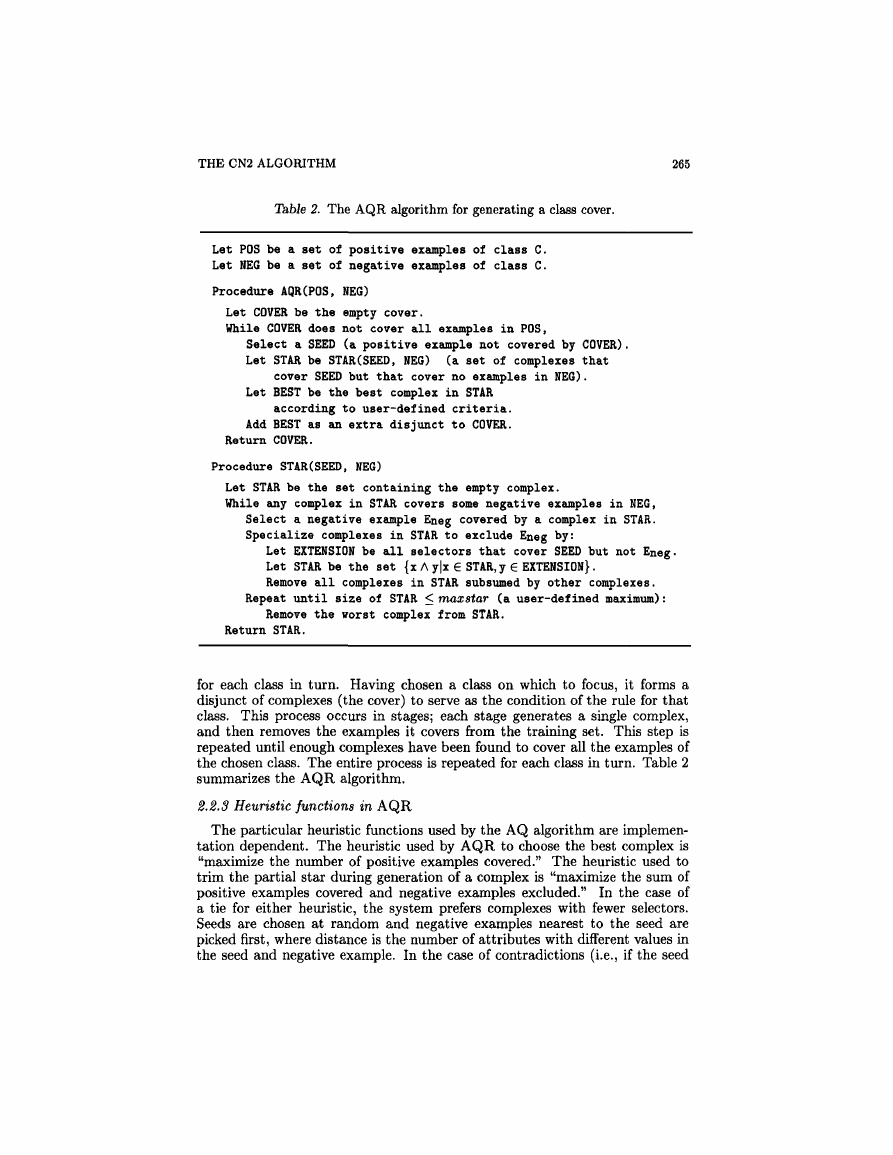

2.3.3 The CN2 learning algorithm

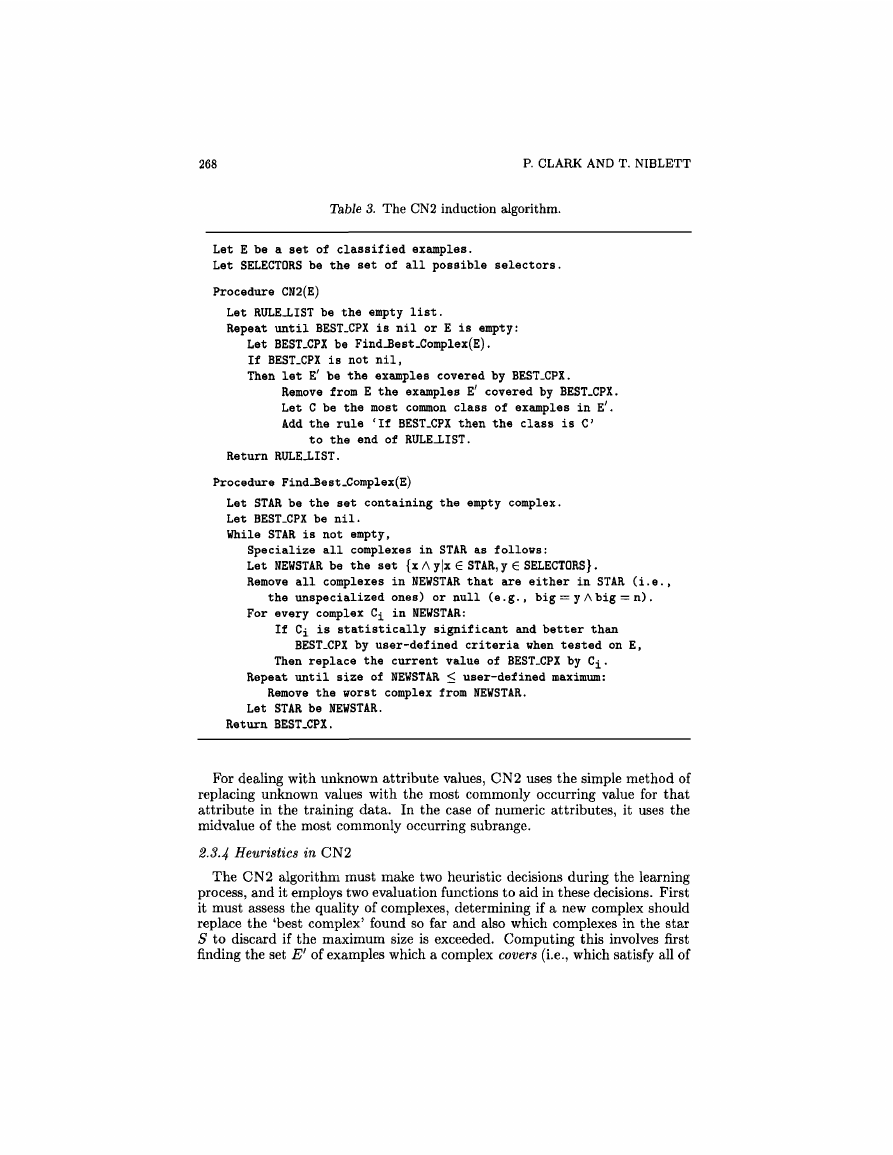

Table 3 presents a summary of the CN2 algorithm. This works in an iterative

fashion, each iteration searching for a complex that covers a large number of

examples of a single class C and few of other classes. The complex must be

both predictive and reliable, as determined by CN2's evaluation functions.

Having found a good complex, the algorithm removes those examples it covers

from the training set and adds the rule 'if then predict C' to the

end of the rule list. This process iterates until no more satisfactory complexes

can be found.

The system searches for complexes by carrying out a pruned general-to-

specific search. At each stage in the search, CN2 retains a size-limited set or

star S of 'best complexes found so far'. The system examines only special-

izations of this set, carrying out a beam search of the space of complexes. A

complex is specialized by either adding a new conjunctive term or removing a

disjunctive element in one of its selectors. Each complex can be specialized in

several ways, and CN2 generates and evaluates all such specializations. The

star is trimmed after completion of this step by removing its lowest ranking

elements as measured by an evaluation function that we will describe shortly.

Our implementation of the specialization step is to repeatedly intersect2

the set of all possible selectors with the current star, eliminating all the null

and unchanged elements in the resulting set of complexes. (A null complex

is one that contains a pair of incompatible selectors, e.g., big = y A big = n.)

CN2 deals with continuous attributes in a manner similar to ASSISTANT - by

dividing the range of values of each attribute into discrete subranges. Tests on

such attributes examine whether a value is greater or less (or equal) than the

values at subrange boundaries. The complete range of values and size of each

subrange is provided by the user.

2The intersection of set A with set B is the set {x A y|x e A, y 6 B}. For example, {a A

b, aAc, bf\d] intersected with {a,b, c, d} is { a f e , aAfcAc, aA&Ad, aAc, aAcAd, bAd, &AcAd}. If

we now remove unchanged elements in this set, we obtain {aAiAc, aAbAd, aAcAd, 6AcAd}.

�

268

P. CLARK AND T. NIBLETT

Table 3. The CN2 induction algorithm.

Let E be a set of classified examples.

Let SELECTORS be the set of all possible selectors.

Procedure CN2(E)

Let RULE-LIST be the empty list.

Repeat until BEST.CPX is nil or E is empty:

Let BEST.CPX be Find-Best.Complex(E).

If BEST.CPX is not nil,

Then let E' be the examples covered by BEST.CPX.

Remove from E the examples E' covered by BEST.CPX.

Let C be the most common class of examples in E'.

Add the rule 'If BEST.CPX then the class is C'

to the end of RULE-LIST.

Return RULE-LIST.

Procedure Find-Best.Complex(E)

Let STAR be the set containing the empty complex.

Let BEST.CPX be nil.

While STAR is not empty,

Specialize all complexes in STAR as follows:

Let NEWSTAR be the set {x A y|x 6 STAR, y € SELECTORS}.

Remove all complexes in NEWSTAR that are either in STAR (i.e.,

the unspecialized ones) or null (e.g., big = y A big = n).

For every complex Ci in NEWSTAR:

If Ci is statistically significant and better than

BEST.CPX by user-defined criteria when tested on E,

Then replace the current value of BEST.CPX by Ci.

Repeat until size of NEWSTAR < user-defined maximum:

Remove the worst complex from NEWSTAR.

Let STAR be NEWSTAR.

Return BEST.CPX.

For dealing with unknown attribute values, CN2 uses the simple method of

replacing unknown values with the most commonly occurring value for that

attribute in the training data. In the case of numeric attributes, it uses the

midvalue of the most commonly occurring subrange.

2.3.4 Heuristics in CN2

The CN2 algorithm must make two heuristic decisions during the learning

process, and it employs two evaluation functions to aid in these decisions. First

it must assess the quality of complexes, determining if a new complex should

replace the 'best complex' found so far and also which complexes in the star

5 to discard if the maximum size is exceeded. Computing this involves first

finding the set E' of examples which a complex covers (i.e., which satisfy all of

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc