arXiv:1803.04311v1 [cs.NI] 12 Mar 2018

IEEECOMMUNICATIONSSURVEYS&TUTORIALS1DeepLearninginMobileandWirelessNetworking:ASurveyChaoyunZhang,PaulPatras,andHamedHaddadiAbstract—Therapiduptakeofmobiledevicesandtherisingpopularityofmobileapplicationsandservicesposeunprece-denteddemandsonmobileandwirelessnetworkinginfrastruc-ture.Upcoming5Gsystemsareevolvingtosupportexplodingmobiletrafficvolumes,agilemanagementofnetworkresourcetomaximizeuserexperience,andextractionoffine-grainedreal-timeanalytics.Fulfillingthesetasksischallenging,asmobileenvironmentsareincreasinglycomplex,heterogeneous,andevolv-ing.Onepotentialsolutionistoresorttoadvancedmachinelearningtechniquestohelpmanagingtheriseindatavolumesandalgorithm-drivenapplications.Therecentsuccessofdeeplearningunderpinsnewandpowerfultoolsthattackleproblemsinthisspace.Inthispaperwebridgethegapbetweendeeplearningandmobileandwirelessnetworkingresearch,bypresentingacomprehensivesurveyofthecrossoversbetweenthetwoareas.Wefirstbrieflyintroduceessentialbackgroundandstate-of-the-artindeeplearningtechniqueswithpotentialapplicationstonetworking.Wethendiscussseveraltechniquesandplatformsthatfacilitatetheefficientdeploymentofdeeplearningontomobilesystems.Subsequently,weprovideanencyclopedicreviewofmobileandwirelessnetworkingresearchbasedondeeplearning,whichwecategorizebydifferentdomains.Drawingfromourexperience,wediscusshowtotailordeeplearningtomobileenvironments.Wecompletethissurveybypinpointingcurrentchallengesandopenfuturedirectionsforresearch.IndexTerms—DeepLearning,MachineLearning,MobileNet-working,WirelessNetworking,MobileBigData,5GSystems,NetworkManagement.I.INTRODUCTIONINTERNETconnectedmobiledevicesarepenetratingeveryaspectofindividuals’life,work,andentertainment.Theincreasingnumberofsmartphonesandtheemergenceofever-morediverseapplicationstriggerasurgeinmobiledatatraffic.Indeed,thelatestindustryforecastsindicatethattheannualworldwideIPtrafficconsumptionwillreach3.3zettabytes(1015MB)by2021,withsmartphonetrafficexceedingPCtrafficbythesameyear[1].Giventheshiftinuserpreferen
cetowardswirelessconnectivity,currentmobileinfrastructurefacesgreatcapacitydemands.Inresponsetothisincreasingde-mand,earlyeffortsproposetoagilelyprovisionresources[2]andtacklemobilitymanagementdistributively[3].Inthelongrun,however,InternetServiceProviders(ISPs)mustde-velopintelligentheterogeneousarchitecturesandtoolsthatcanspawnthe5thgenerationofmobilesystems(5G)andgraduallymeetmorestringentend-userapplicationrequirements[4],[5].C.ZhangandP.PatrasarewiththeInstituteforComputingSystemsArchi-tecture(ICSA),SchoolofInformatics,UniversityofEdinburgh,Edinburgh,UK.Emails:{chaoyun.zhang,paul.patras}@ed.ac.uk.H.HaddadiiswiththeDysonSchoolofDesignEngineeringatImperialCollegeLondon.Email:h.haddadi@imperial.ac.uk.Thegrowingdiversityandcomplexityofmobilenetworkarchitectureshasmademonitoringandmanagingthemulti-tudeofnetworkselementsintractable.Therefore,embeddingversatilemachineintelligenceintofuturemobilenetworksisdrawingunparalleledresearchinterest[6],[7].Thistrendisreflectedinmachinelearning(ML)basedsolutionstoprob-lemsrangingfromradioaccesstechnology(RAT)selection[8]tomalwaredetection[9],aswellasthedevelopmentofnetworkedsystemsthatsupportmachineleaningpractices(e.g.[10],[11]).MLenablessystematicminingofvaluableinformationfromtrafficdataandautomaticallyuncovercorre-lationsthatwouldotherwisehavebeentoocomplextoextractbyhumanexperts[12].Astheflagshipofmachinelearning,deeplearninghasachievedremarkableperformanceinareassuchascomputervision[13]andnaturallanguageprocessing(NLP)[14].Networkingresearchersarealsobeginningtorecognizethepowerandimportanceofdeeplearning,andareexploringitspotentialtosolveproblemsspecifictothemobilenetworkingdomain[15],[16].Embeddingdeeplearningintothe5Gmobileandwirelessnetworksiswelljustified.Inparticular,datageneratedbymobileenvironmentsareheterogeneousastheseareusuallycollectedfromvarioussources,havedifferentformats,andexhibitcomplexcorrelations[17].TraditionalMLtoolsrequireexpensivehand-craftedfeatureengineeringtomakeaccurateinferencesanddecisionsbased
onsuchdata.Deeplearningeliminatesdomainexpertiseasitemployshierarchicalfeatureextraction,bywhichitefficientlydistillsinformationandobtainsincreasinglyabstractcorrelationsfromthedata,whileminimizingthedatapre-processingeffort.GraphicsProcessingUnit(GPU)-basedparallelcomputingfurtherenablesdeeplearningtomakeinferenceswithinmilliseconds.Thisfacil-itatesnetworkanalysisandmanagementwithhighaccuracyandinatimelymanner,overcomingtheruntimelimitationsoftraditionalmathematicaltechniques(e.g.convexoptimization,gametheory,metaheuristics).Despitegrowinginterestindeeplearninginthemobilenetworkingdomain,existingcontributionsarescatteredacrossdifferentresearchareasandacomprehensiveyetconcisesurveyislacking.Thisarticlefillsthisgapbetweendeeplearningandmobileandwirelessnetworking,bypresentinganup-to-datesurveyofresearchthatliesattheintersectionbetweenthesetwofields.Beyondreviewingthemostrele-vantliterature,wediscussthekeyprosandconsofvariousdeeplearningarchitectures,andoutlinedeeplearningmodelselectionstrategiesinviewofsolvingmobilenetworkingproblems.Wefurtherinvestigatemethodsthattailordeeplearningtoindividualmobilenetworkingtasks,toachievebest�

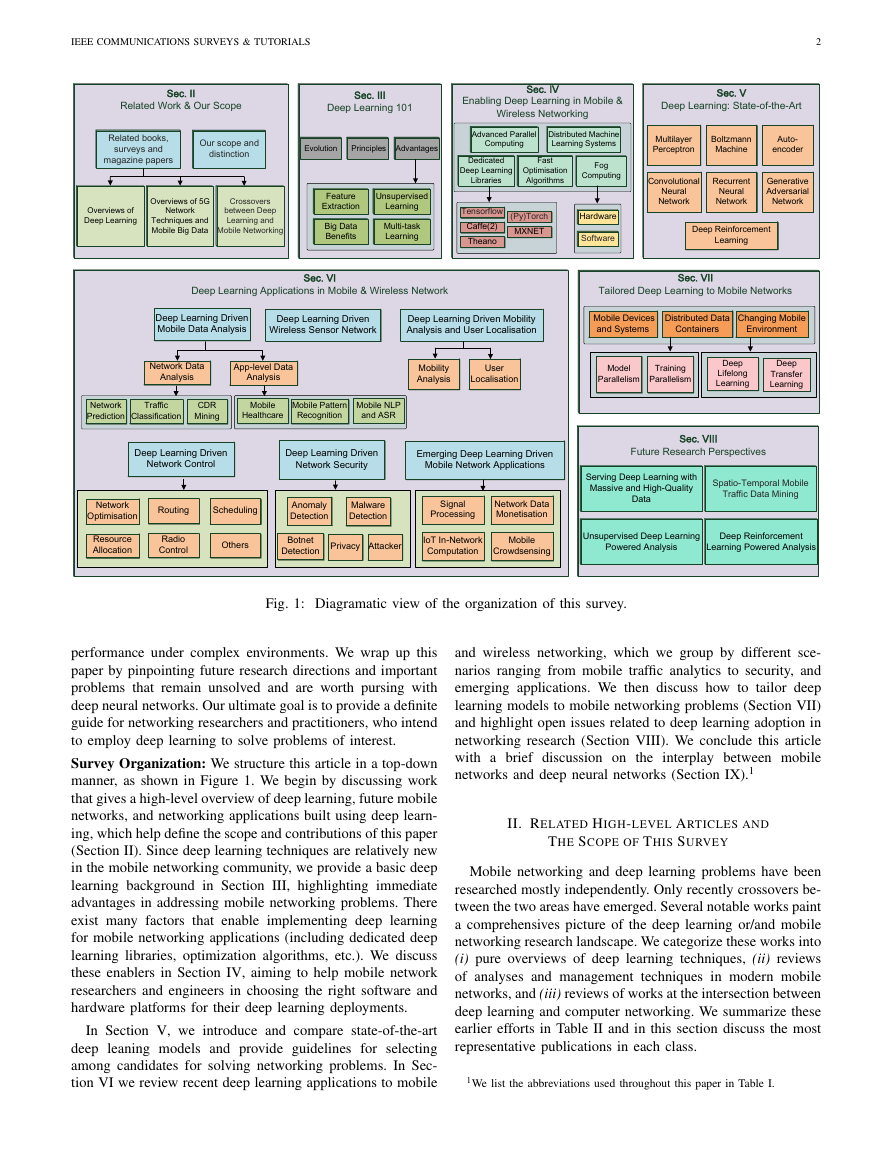

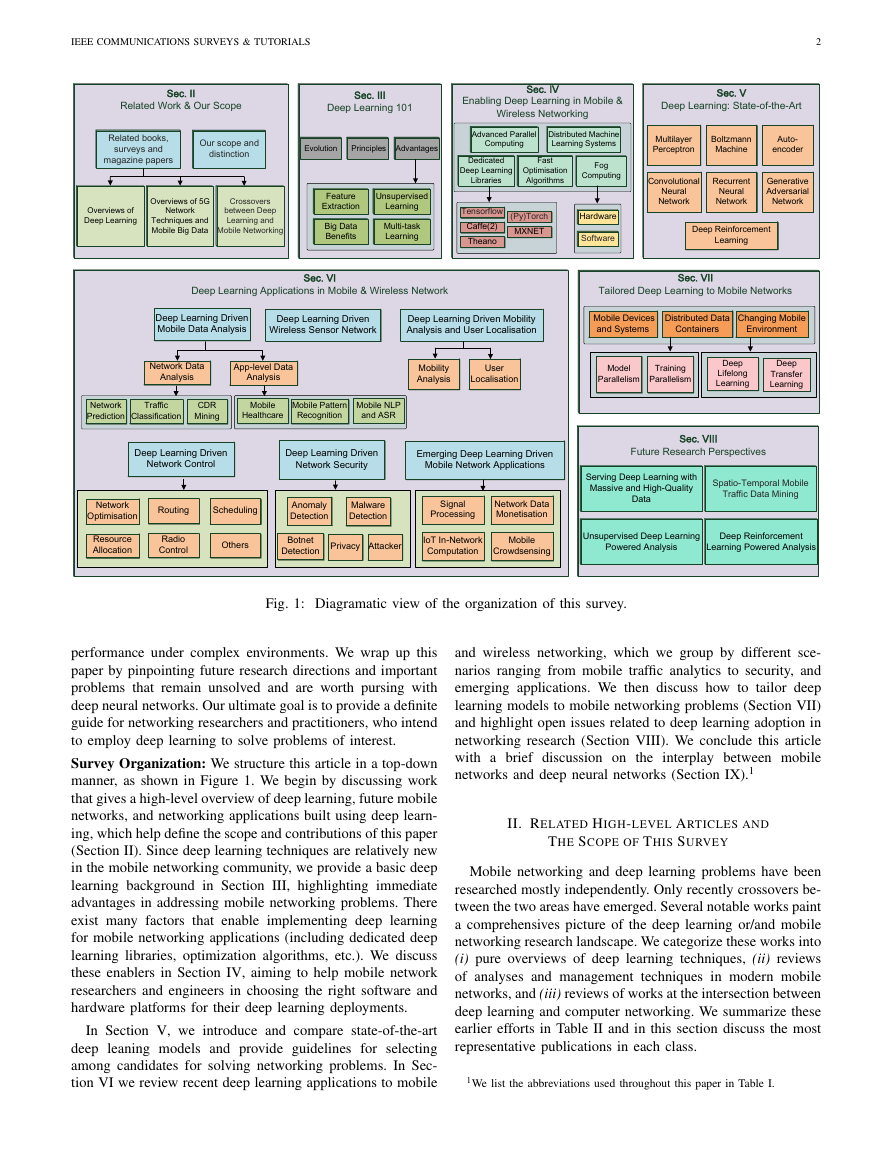

IEEECOMMUNICATIONSSURVEYS&TUTORIALS2Related books, surveys and magazine papersOur scope and distinction Overviews of Deep LearningOverviews of 5G Network Techniques and Mobile Big Data Crossovers between Deep Learning and Mobile NetworkingEvolution PrinciplesAdvantagesMultilayer PerceptronBoltzmann MachineAuto-encoderConvolutional Neural NetworkRecurrent Neural NetworkGenerative Adversarial NetworkDeep Reinforcement LearningModel ParallelismTraining ParallelismServing Deep Learning with Massive and High-Quality DataUnsupervised Deep Learning Powered AnalysisSpatio-Temporal Mobile Traffic Data MiningDeep Reinforcement Learning Powered AnalysisAdvanced Parallel ComputingDedicated Deep Learning LibrariesFog ComputingFast Optimisation AlgorithmsDistributed Machine Learning Systems TensorflowTheano(Py)TorchCaffe(2)MXNETSoftwareHardware Mobile Devices and SystemsDistributed Data ContainersChanging Mobile EnvironmentFeature ExtractionBig Data BenefitsMulti-task LearningUnsupervised LearningDeep Lifelong LearningDeep Transfer LearningDeep Learning Driven Mobile Data AnalysisDeep Learning Driven Wireless Sensor NetworkDeep Learning Driven Network ControlDeep Learning Driven Network SecurityEmerging Deep Learning Driven Mobile Network ApplicationsDeep Learning Driven Mobility Analysis and User LocalisationApp-level Data AnalysisNetwork Data AnalysisNetwork PredictionTraffic ClassificationCDR MiningMobile HealthcareMobile Pattern RecognitionMobile NLP and ASRAnomaly DetectionMalware DetectionBotnet DetectionPrivacyNetwork OptimisationRoutingSchedulingResource AllocationRadio ControlOthersNetwork Data MonetisationIoT In-Network ComputationMobile CrowdsensingMobility AnalysisUser LocalisationSignal ProcessingAttackerSec. VI Deep Learning Applications in Mobile & Wireless NetworkSec. VIITailored Deep Learning to Mobile NetworksSec. VIIIFuture Research PerspectivesSec. IIRelated Work & Our ScopeSec. IIIDeep Learning 101Sec. 
IVEnabling Deep Learning in Mobile & Wireless Networking Sec. VDeep Learning: State-of-the-ArtFig.1:Diagramaticviewoftheorganizationofthissurvey.performanceundercomplexenvironments.Wewrapupthispaperbypinpointingfutureresearchdirectionsandimportantproblemsthatremainunsolvedandareworthpursingwithdeepneuralnetworks.Ourultimategoalistoprovideadefiniteguidefornetworkingresearchersandpractitioners,whointendtoemploydeeplearningtosolveproblemsofinterest.SurveyOrganization:Westructurethisarticleinatop-downmanner,asshowninFigure1.Webeginbydiscussingworkthatgivesahigh-leveloverviewofdeeplearning,futuremobilenetworks,andnetworkingapplicationsbuiltusingdeeplearn-ing,whichhelpdefinethescopeandcontributionsofthispaper(SectionII).Sincedeeplearningtechniquesarerelativelynewinthemobilenetworkingcommunity,weprovideabasicdeeplearningbackgroundinSectionIII,highlightingimmediateadvantagesinaddressingmobilenetworkingproblems.Thereexistmanyfactorsthatenableimplementingdeeplearningformobilenetworkingapplications(includingdedicateddeeplearninglibraries,optimizationalgorithms,etc.).WediscusstheseenablersinSectionIV,aimingtohelpmobilenetworkresearchersandengineersinchoosingtherightsoftwareandhardwareplatformsfortheirdeeplearningdeployments.InSectionV,weintroduceandcomparestate-of-the-artdeepleaningmodelsandprovideguidelinesforselectingamongcandidatesforsolvingnetworkingproblems.InSec-tionVIwereviewrecentdeeplearningapplicationstomobileandwirelessnetworking,whichwegroupbydifferentsce-nariosrangingfrommobiletrafficanalyticstosecurity,andemergingapplications.Wethendiscusshowtotailordeeplearningmodelstomobilenetworkingproblems(SectionVII)andhighlightopenissuesrelatedtodeeplearningadoptioninnetworkingresearch(SectionVIII).Weconcludethisarticlewithabriefdiscussionontheinterplaybetweenmobilenetworksanddeepneuralnetworks(SectionIX).1II.RELATEDHIGH-LEVELARTICLESANDTHESCOPEOFTHISSURVEYMobilenetworkinganddeeplearningproblemshavebeenresearchedmostlyindependently.Onlyrecentlycrossoversbe-tweenthetwoareasha
veemerged.Severalnotableworkspaintacomprehensivespictureofthedeeplearningor/andmobilenetworkingresearchlandscape.Wecategorizetheseworksinto(i)pureoverviewsofdeeplearningtechniques,(ii)reviewsofanalysesandmanagementtechniquesinmodernmobilenetworks,and(iii)reviewsofworksattheintersectionbetweendeeplearningandcomputernetworking.WesummarizetheseearliereffortsinTableIIandinthissectiondiscussthemostrepresentativepublicationsineachclass.1WelisttheabbreviationsusedthroughoutthispaperinTableI.�

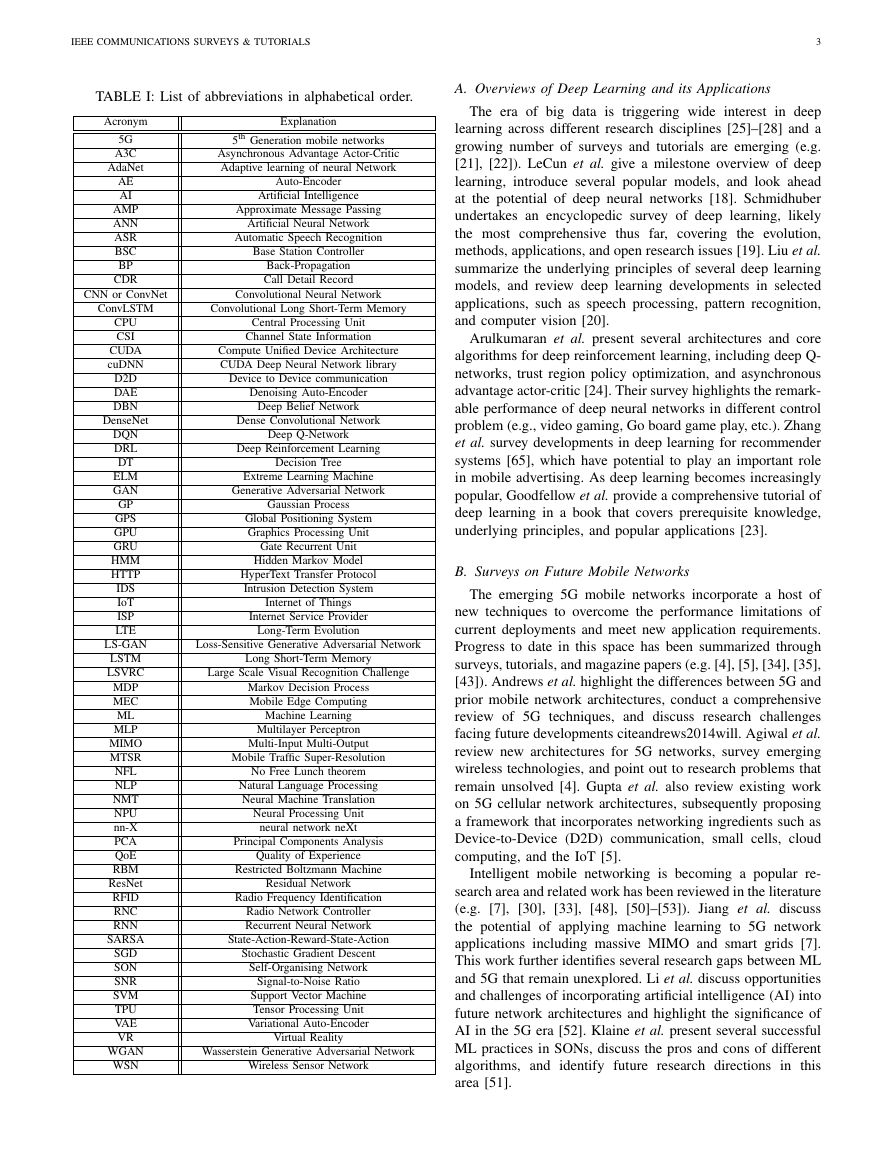

TABLE I: List of abbreviations in alphabetical order.

| Acronym | Explanation |
|---|---|
| 5G | 5th Generation mobile networks |
| A3C | Asynchronous Advantage Actor-Critic |
| AdaNet | Adaptive learning of neural Network |
| AE | Auto-Encoder |
| AI | Artificial Intelligence |
| AMP | Approximate Message Passing |
| ANN | Artificial Neural Network |
| ASR | Automatic Speech Recognition |
| BSC | Base Station Controller |
| BP | Back-Propagation |
| CDR | Call Detail Record |
| CNN or ConvNet | Convolutional Neural Network |
| ConvLSTM | Convolutional Long Short-Term Memory |
| CPU | Central Processing Unit |
| CSI | Channel State Information |
| CUDA | Compute Unified Device Architecture |
| cuDNN | CUDA Deep Neural Network library |
| D2D | Device to Device communication |
| DAE | Denoising Auto-Encoder |
| DBN | Deep Belief Network |
| DenseNet | Dense Convolutional Network |
| DQN | Deep Q-Network |
| DRL | Deep Reinforcement Learning |
| DT | Decision Tree |
| ELM | Extreme Learning Machine |
| GAN | Generative Adversarial Network |
| GP | Gaussian Process |
| GPS | Global Positioning System |
| GPU | Graphics Processing Unit |
| GRU | Gated Recurrent Unit |
| HMM | Hidden Markov Model |
| HTTP | HyperText Transfer Protocol |
| IDS | Intrusion Detection System |
| IoT | Internet of Things |
| ISP | Internet Service Provider |
| LTE | Long-Term Evolution |
| LS-GAN | Loss-Sensitive Generative Adversarial Network |
| LSTM | Long Short-Term Memory |
| LSVRC | Large Scale Visual Recognition Challenge |
| MDP | Markov Decision Process |
| MEC | Mobile Edge Computing |
| ML | Machine Learning |
| MLP | Multilayer Perceptron |
| MIMO | Multi-Input Multi-Output |
| MTSR | Mobile Traffic Super-Resolution |
| NFL | No Free Lunch theorem |
| NLP | Natural Language Processing |
| NMT | Neural Machine Translation |
| NPU | Neural Processing Unit |
| nn-X | neural network neXt |
| PCA | Principal Components Analysis |
| QoE | Quality of Experience |
| RBM | Restricted Boltzmann Machine |
| ResNet | Residual Network |
| RFID | Radio Frequency Identification |
| RNC | Radio Network Controller |
| RNN | Recurrent Neural Network |
| SARSA | State-Action-Reward-State-Action |
| SGD | Stochastic Gradient Descent |
| SON | Self-Organising Network |
| SNR | Signal-to-Noise Ratio |
| SVM | Support Vector Machine |
| TPU | Tensor Processing Unit |
| VAE | Variational Auto-Encoder |
| VR | Virtual Reality |
| WGAN | Wasserstein Generative Adversarial Network |
| WSN | Wireless Sensor Network |

A. Overviews of Deep Learning and its Applications

The era of big data is triggering wide interest in deep learning across different research disciplines [25]–[28], and a growing number of surveys and tutorials are emerging (e.g. [21], [22]). LeCun et al. give a milestone overview of deep learning, introduce several popular models, and look ahead at the potential of deep neural networks [18]. Schmidhuber undertakes an encyclopedic survey of deep learning, likely the most comprehensive thus far, covering the evolution, methods, applications, and open research issues [19]. Liu et al. summarize the underlying principles of several deep learning models, and review deep learning developments in selected applications, such as speech processing, pattern recognition, and computer vision [20].

Arulkumaran et al. present several architectures and core algorithms for deep reinforcement learning, including deep Q-networks, trust region policy optimization, and asynchronous advantage actor-critic [24]. Their survey highlights the remarkable performance of deep neural networks in different control problems (e.g., video gaming, Go board game play, etc.). Zhang et al. survey developments in deep learning for recommender systems [65], which have the potential to play an important role in mobile advertising. As deep learning becomes increasingly popular, Goodfellow et al. provide a comprehensive tutorial of deep learning in a book that covers prerequisite knowledge, underlying principles, and popular applications [23].

B. Surveys on Future Mobile Networks

The emerging 5G mobile networks incorporate a host of new techniques to overcome the performance limitations of current deployments and meet new application requirements. Progress to date in this space has been summarized through surveys, tutorials, and magazine papers (e.g. [4], [5], [34], [35], [43]). Andrews et al. highlight the differences between 5G and prior mobile network architectures, conduct a comprehensive review of 5G techniques, and discuss research challenges facing future developments [34]. Agiwal et al. review new architectures for 5G networks, survey emerging wireless technologies, and point out research problems that remain unsolved [4]. Gupta et al. also review existing work on 5G cellular network architectures, subsequently proposing a framework that incorporates networking ingredients such as Device-to-Device (D2D) communication, small cells, cloud computing, and the IoT [5].
Intelligent mobile networking is becoming a popular research area and related work has been reviewed in the literature (e.g. [7], [30], [33], [48], [50]–[53]). Jiang et al. discuss the potential of applying machine learning to 5G network applications including massive MIMO and smart grids [7]. This work further identifies several research gaps between ML and 5G that remain unexplored. Li et al. discuss opportunities and challenges of incorporating artificial intelligence (AI) into future network architectures and highlight the significance of AI in the 5G era [52]. Klaine et al. present several successful ML practices in SONs, discuss the pros and cons of different algorithms, and identify future research directions in this area [51].

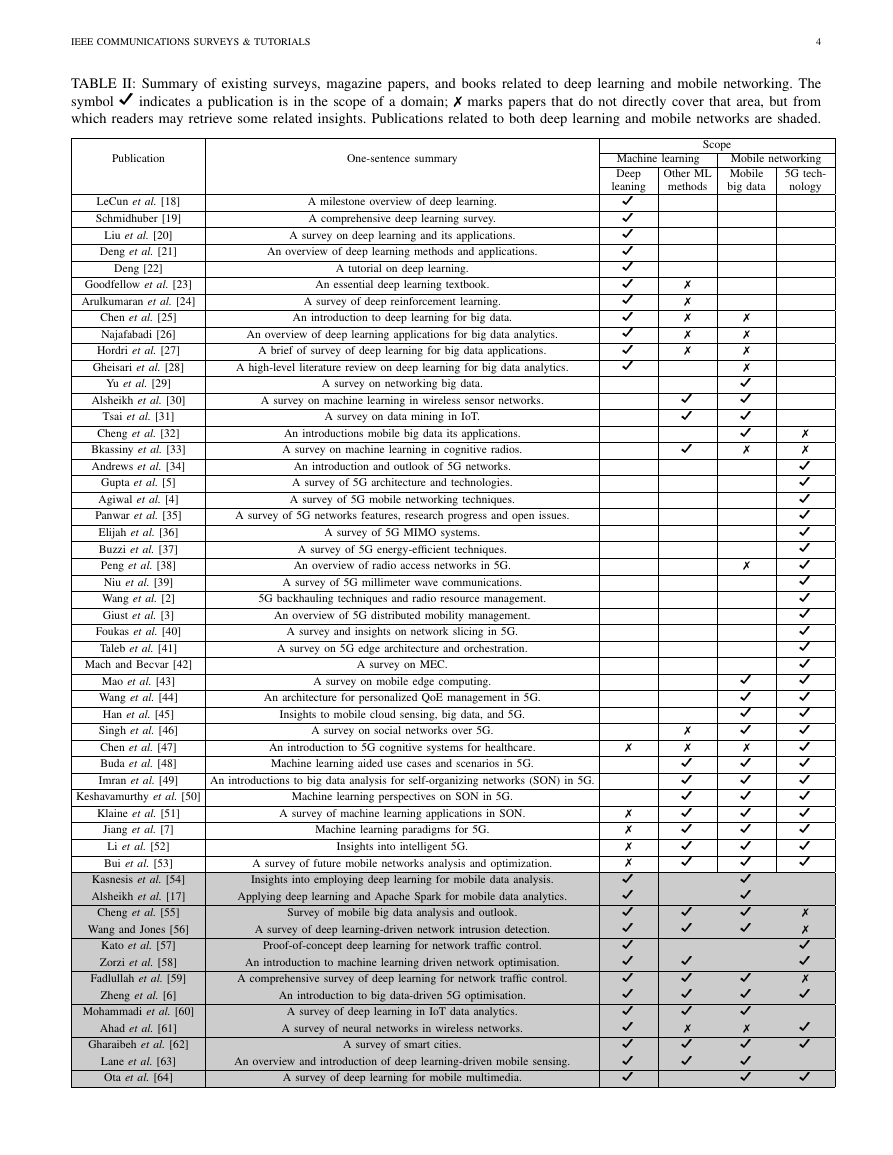

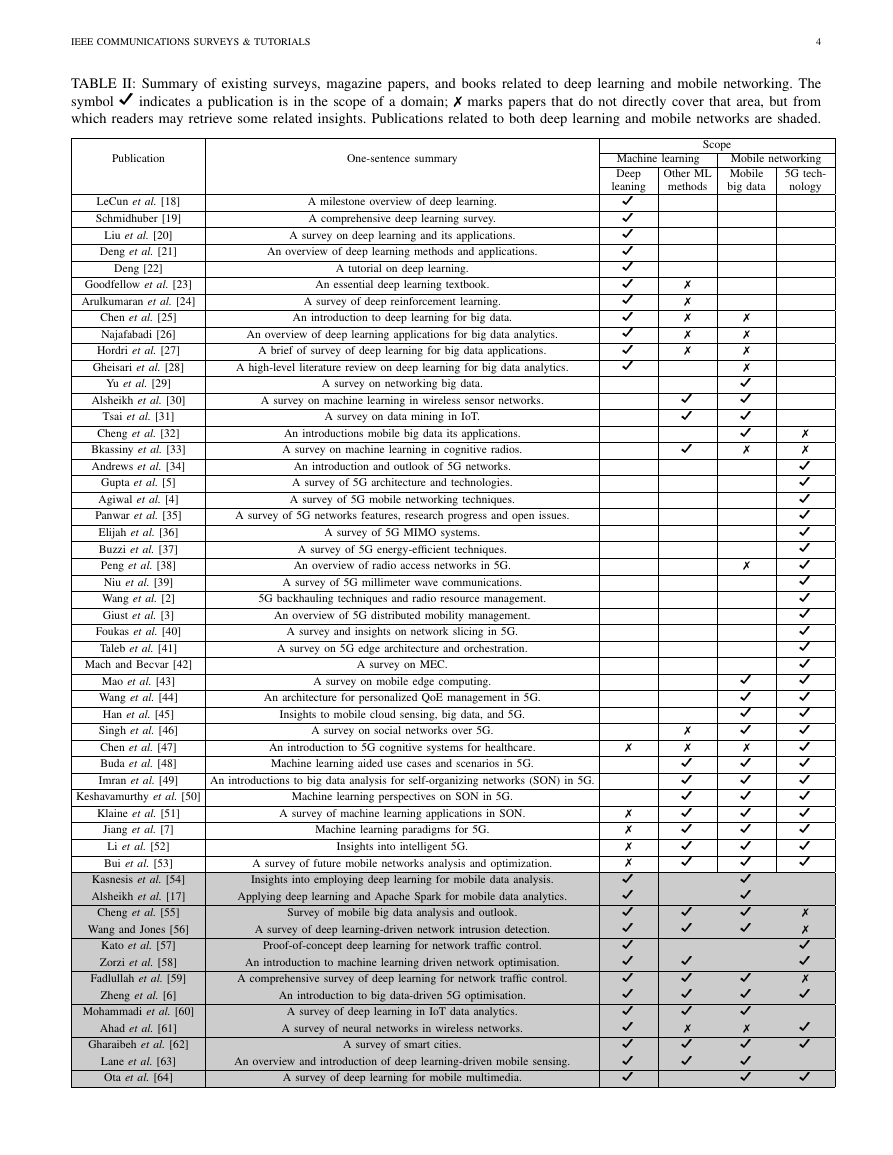

TABLE II: Summary of existing surveys, magazine papers, and books related to deep learning and mobile networking. The symbol ✓ indicates a publication is in the scope of a domain; ○ marks papers that do not directly cover that area, but from which readers may retrieve some related insights. Publications related to both deep learning and mobile networks are shaded.

| Publication | One-sentence summary | Scope marks (Machine learning: deep learning, other ML methods; Mobile networking: mobile big data, 5G technology) |
|---|---|---|
| LeCun et al. [18] | A milestone overview of deep learning. | ✓ |
| Schmidhuber [19] | A comprehensive deep learning survey. | ✓ |
| Liu et al. [20] | A survey on deep learning and its applications. | ✓ |
| Deng et al. [21] | An overview of deep learning methods and applications. | ✓ |
| Deng [22] | A tutorial on deep learning. | ✓ |
| Goodfellow et al. [23] | An essential deep learning textbook. | ✓ ○ |
| Arulkumaran et al. [24] | A survey of deep reinforcement learning. | ✓ ○ |
| Chen et al. [25] | An introduction to deep learning for big data. | ✓ ○ ○ |
| Najafabadi [26] | An overview of deep learning applications for big data analytics. | ✓ ○ ○ |
| Hordri et al. [27] | A brief survey of deep learning for big data applications. | ✓ ○ ○ |
| Gheisari et al. [28] | A high-level literature review on deep learning for big data analytics. | ✓ ○ |
| Yu et al. [29] | A survey on networking big data. | ✓ |
| Alsheikh et al. [30] | A survey on machine learning in wireless sensor networks. | ✓ ✓ |
| Tsai et al. [31] | A survey on data mining in IoT. | ✓ ✓ |
| Cheng et al. [32] | An introduction to mobile big data and its applications. | ✓ ○ |
| Bkassiny et al. [33] | A survey on machine learning in cognitive radios. | ✓ ○ ○ |
| Andrews et al. [34] | An introduction and outlook of 5G networks. | ✓ |
| Gupta et al. [5] | A survey of 5G architecture and technologies. | ✓ |
| Agiwal et al. [4] | A survey of 5G mobile networking techniques. | ✓ |
| Panwar et al. [35] | A survey of 5G networks features, research progress and open issues. | ✓ |
| Elijah et al. [36] | A survey of 5G MIMO systems. | ✓ |
| Buzzi et al. [37] | A survey of 5G energy-efficient techniques. | ✓ |
| Peng et al. [38] | An overview of radio access networks in 5G. | ○ ✓ |
| Niu et al. [39] | A survey of 5G millimeter wave communications. | ✓ |
| Wang et al. [2] | 5G backhauling techniques and radio resource management. | ✓ |
| Giust et al. [3] | An overview of 5G distributed mobility management. | ✓ |
| Foukas et al. [40] | A survey and insights on network slicing in 5G. | ✓ |
| Taleb et al. [41] | A survey on 5G edge architecture and orchestration. | ✓ |
| Mach and Becvar [42] | A survey on MEC. | ✓ |
| Mao et al. [43] | A survey on mobile edge computing. | ✓ ✓ |
| Wang et al. [44] | An architecture for personalized QoE management in 5G. | ✓ ✓ |
| Han et al. [45] | Insights into mobile cloud sensing, big data, and 5G. | ✓ ✓ |
| Singh et al. [46] | A survey on social networks over 5G. | ○ ✓ ✓ |
| Chen et al. [47] | An introduction to 5G cognitive systems for healthcare. | ○ ○ ○ ✓ |
| Buda et al. [48] | Machine learning aided use cases and scenarios in 5G. | ✓ ✓ ✓ |
| Imran et al. [49] | An introduction to big data analysis for self-organizing networks (SON) in 5G. | ✓ ✓ ✓ |
| Keshavamurthy et al. [50] | Machine learning perspectives on SON in 5G. | ✓ ✓ ✓ |
| Klaine et al. [51] | A survey of machine learning applications in SON. | ○ ✓ ✓ ✓ |
| Jiang et al. [7] | Machine learning paradigms for 5G. | ○ ✓ ✓ ✓ |
| Li et al. [52] | Insights into intelligent 5G. | ○ ✓ ✓ ✓ |
| Bui et al. [53] | A survey of future mobile networks analysis and optimization. | ○ ✓ ✓ ✓ |
| Kasnesis et al. [54] | Insights into employing deep learning for mobile data analysis. | ✓ ✓ |
| Alsheikh et al. [17] | Applying deep learning and Apache Spark for mobile data analytics. | ✓ ✓ |
| Cheng et al. [55] | Survey of mobile big data analysis and outlook. | ✓ ✓ ✓ ○ |
| Wang and Jones [56] | A survey of deep learning-driven network intrusion detection. | ✓ ✓ ✓ ○ |
| Kato et al. [57] | Proof-of-concept deep learning for network traffic control. | ✓ ✓ |
| Zorzi et al. [58] | An introduction to machine learning driven network optimisation. | ✓ ✓ ✓ |
| Fadlullah et al. [59] | A comprehensive survey of deep learning for network traffic control. | ✓ ✓ ✓ ○ |
| Zheng et al. [6] | An introduction to big data-driven 5G optimisation. | ✓ ✓ ✓ ✓ |
| Mohammadi et al. [60] | A survey of deep learning in IoT data analytics. | ✓ ✓ ✓ |
| Ahad et al. [61] | A survey of neural networks in wireless networks. | ✓ ○ ○ ✓ |
| Gharaibeh et al. [62] | A survey of smart cities. | ✓ ✓ ✓ ✓ |
| Lane et al. [63] | An overview and introduction of deep learning-driven mobile sensing. | ✓ ✓ ✓ |
| Ota et al. [64] | A survey of deep learning for mobile multimedia. | ✓ ✓ ✓ ○ |

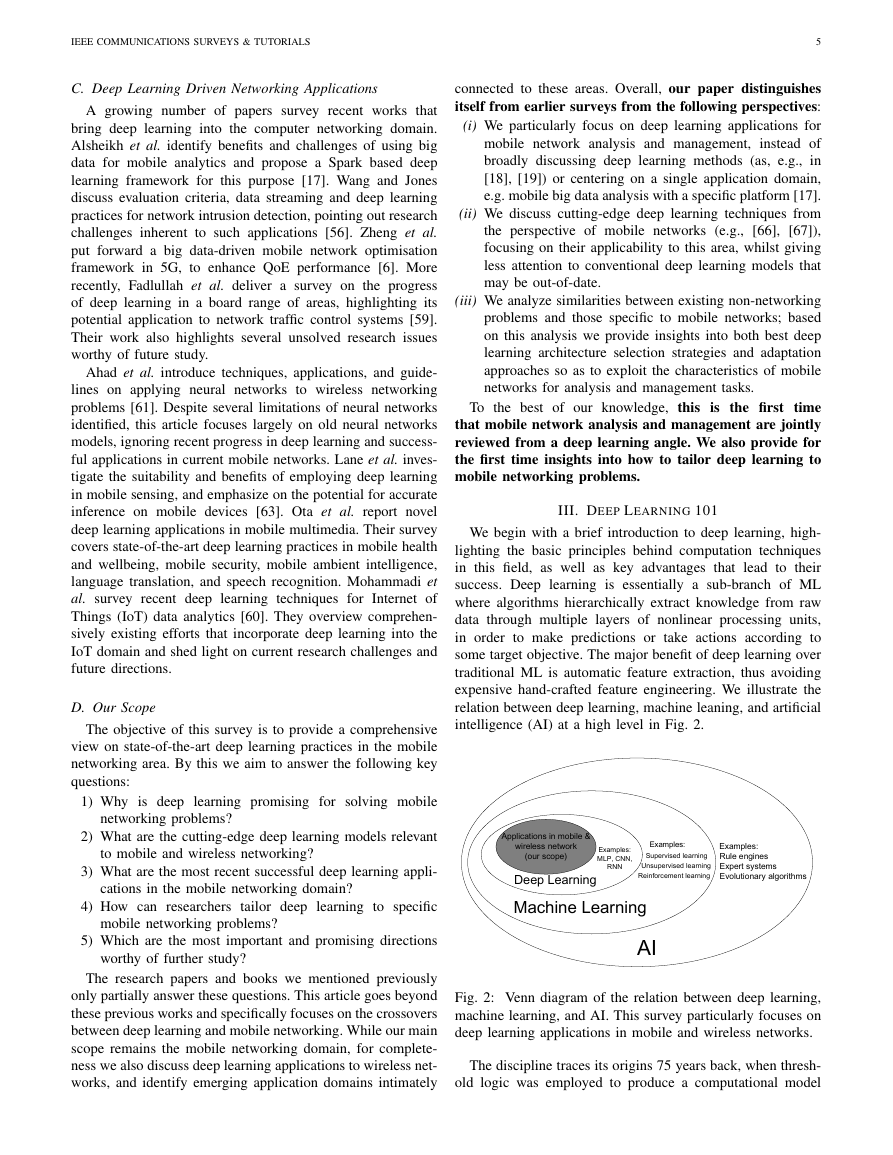

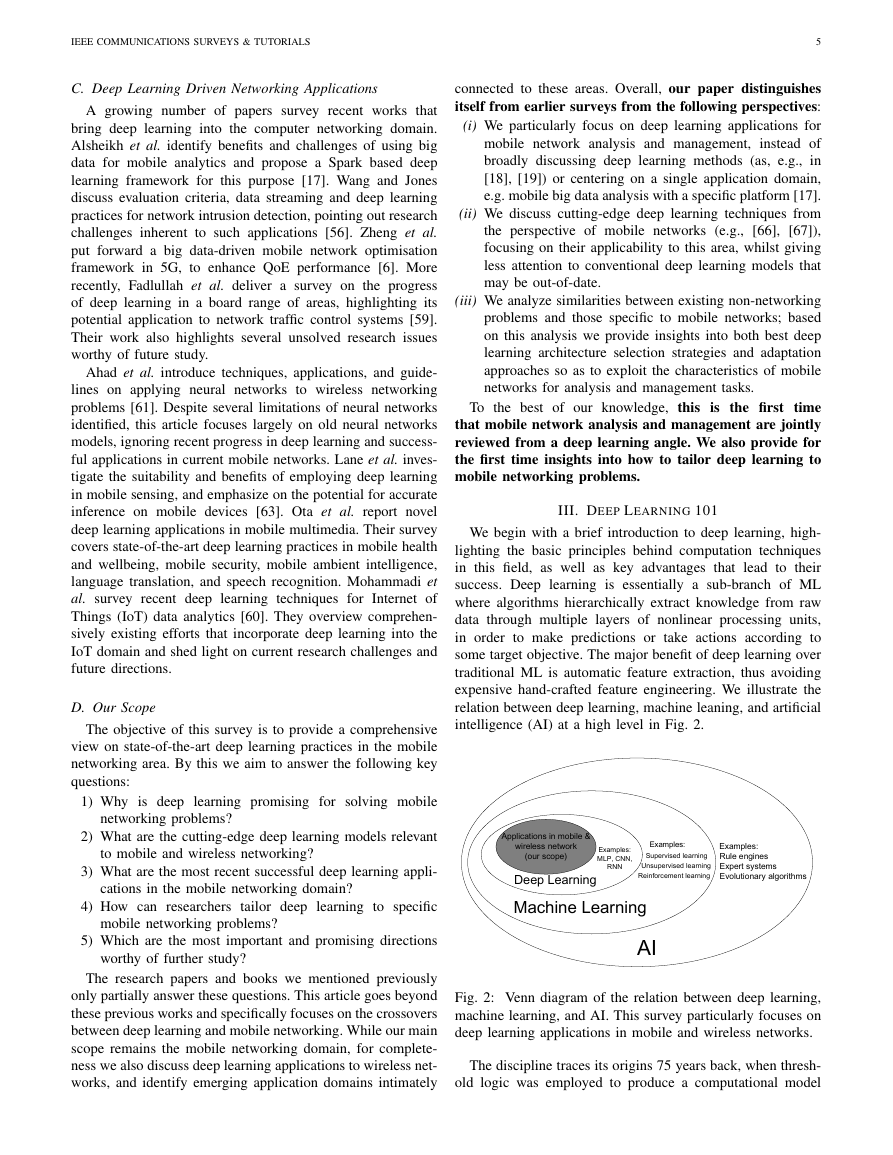

IEEECOMMUNICATIONSSURVEYS&TUTORIALS5C.DeepLearningDrivenNetworkingApplicationsAgrowingnumberofpaperssurveyrecentworksthatbringdeeplearningintothecomputernetworkingdomain.Alsheikhetal.identifybenefitsandchallengesofusingbigdataformobileanalyticsandproposeaSparkbaseddeeplearningframeworkforthispurpose[17].WangandJonesdiscussevaluationcriteria,datastreaminganddeeplearningpracticesfornetworkintrusiondetection,pointingoutresearchchallengesinherenttosuchapplications[56].Zhengetal.putforwardabigdata-drivenmobilenetworkoptimisationframeworkin5G,toenhanceQoEperformance[6].Morerecently,Fadlullahetal.deliverasurveyontheprogressofdeeplearninginaboardrangeofareas,highlightingitspotentialapplicationtonetworktrafficcontrolsystems[59].Theirworkalsohighlightsseveralunsolvedresearchissuesworthyoffuturestudy.Ahadetal.introducetechniques,applications,andguide-linesonapplyingneuralnetworkstowirelessnetworkingproblems[61].Despiteseverallimitationsofneuralnetworksidentified,thisarticlefocuseslargelyonoldneuralnetworksmodels,ignoringrecentprogressindeeplearningandsuccess-fulapplicationsincurrentmobilenetworks.Laneetal.inves-tigatethesuitabilityandbenefitsofemployingdeeplearninginmobilesensing,andemphasizeonthepotentialforaccurateinferenceonmobiledevices[63].Otaetal.reportnoveldeeplearningapplicationsinmobilemultimedia.Theirsurveycoversstate-of-the-artdeeplearningpracticesinmobilehealthandwellbeing,mobilesecurity,mobileambientintelligence,languagetranslation,andspeechrecognition.Mohammadietal.surveyrecentdeeplearningtechniquesforInternetofThings(IoT)dataanalytics[60].Theyoverviewcomprehen-sivelyexistingeffortsthatincorporatedeeplearningintotheIoTdomainandshedlightoncurrentresearchchallengesandfuturedirections.D.OurScopeTheobjectiveofthissurveyistoprovideacomprehensiveviewonstate-of-the-artdeeplearningpracticesinthemobilenetworkingarea.Bythisweaimtoanswerthefollowingkeyquestions:1)Whyisdeeplearningpromisingforsolvingmobilenetworkingproblems?2)Whatarethecutting-edgedeeplearningmodelsrelevantt
omobileandwirelessnetworking?3)Whatarethemostrecentsuccessfuldeeplearningappli-cationsinthemobilenetworkingdomain?4)Howcanresearcherstailordeeplearningtospecificmobilenetworkingproblems?5)Whicharethemostimportantandpromisingdirectionsworthyoffurtherstudy?Theresearchpapersandbookswementionedpreviouslyonlypartiallyanswerthesequestions.Thisarticlegoesbeyondthesepreviousworksandspecificallyfocusesonthecrossoversbetweendeeplearningandmobilenetworking.Whileourmainscoperemainsthemobilenetworkingdomain,forcomplete-nesswealsodiscussdeeplearningapplicationstowirelessnet-works,andidentifyemergingapplicationdomainsintimatelyconnectedtotheseareas.Overall,ourpaperdistinguishesitselffromearliersurveysfromthefollowingperspectives:(i)Weparticularlyfocusondeeplearningapplicationsformobilenetworkanalysisandmanagement,insteadofbroadlydiscussingdeeplearningmethods(as,e.g.,in[18],[19])orcenteringonasingleapplicationdomain,e.g.mobilebigdataanalysiswithaspecificplatform[17].(ii)Wediscusscutting-edgedeeplearningtechniquesfromtheperspectiveofmobilenetworks(e.g.,[66],[67]),focusingontheirapplicabilitytothisarea,whilstgivinglessattentiontoconventionaldeeplearningmodelsthatmaybeout-of-date.(iii)Weanalyzesimilaritiesbetweenexistingnon-networkingproblemsandthosespecifictomobilenetworks;basedonthisanalysisweprovideinsightsintobothbestdeeplearningarchitectureselectionstrategiesandadaptationapproachessoastoexploitthecharacteristicsofmobilenetworksforanalysisandmanagementtasks.Tothebestofourknowledge,thisisthefirsttimethatmobilenetworkanalysisandmanagementarejointlyreviewedfromadeeplearningangle.Wealsoprovideforthefirsttimeinsightsintohowtotailordeeplearningtomobilenetworkingproblems.III.DEEPLEARNING101Webeginwithabriefintroductiontodeeplearning,high-lightingthebasicprinciplesbehindcomputationtechniquesinthisfield,aswellaskeyadvantagesthatleadtotheirsuccess.Deeplearningisessentiallyasub-branchofMLwherealgorithmshierarchicallyextractknowledgefromrawdatathroughmultiplelayersofnonlinearprocessingunits,i
nordertomakepredictionsortakeactionsaccordingtosometargetobjective.ThemajorbenefitofdeeplearningovertraditionalMLisautomaticfeatureextraction,thusavoidingexpensivehand-craftedfeatureengineering.Weillustratetherelationbetweendeeplearning,machineleaning,andartificialintelligence(AI)atahighlevelinFig.2.AIMachine LearningSupervised learningUnsupervised learningReinforcement learningDeep LearningExamples:MLP, CNN, RNNApplications in mobile & wireless network(our scope)Examples:Examples:Rule enginesExpert systemsEvolutionary algorithmsFig.2:Venndiagramoftherelationbetweendeeplearning,machinelearning,andAI.Thissurveyparticularlyfocusesondeeplearningapplicationsinmobileandwirelessnetworks.Thedisciplinetracesitsorigins75yearsback,whenthresh-oldlogicwasemployedtoproduceacomputationalmodel�

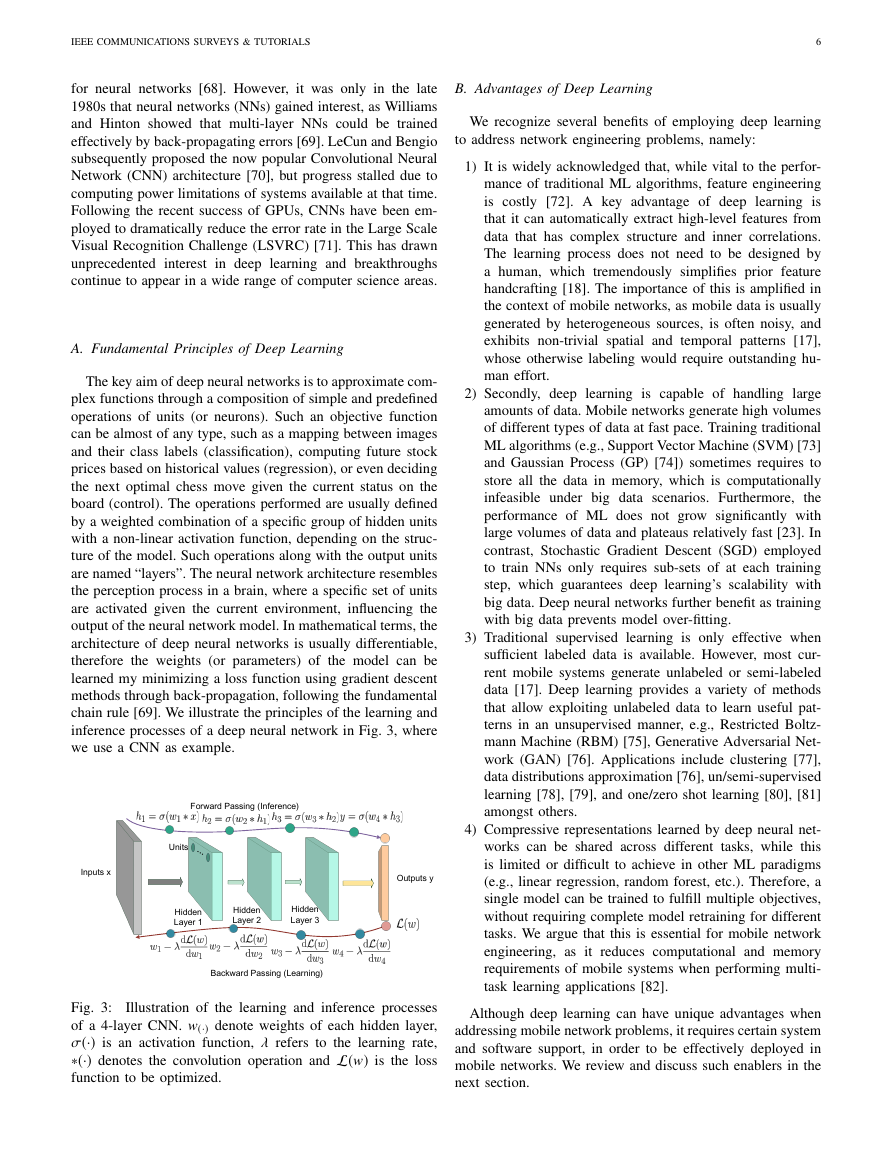

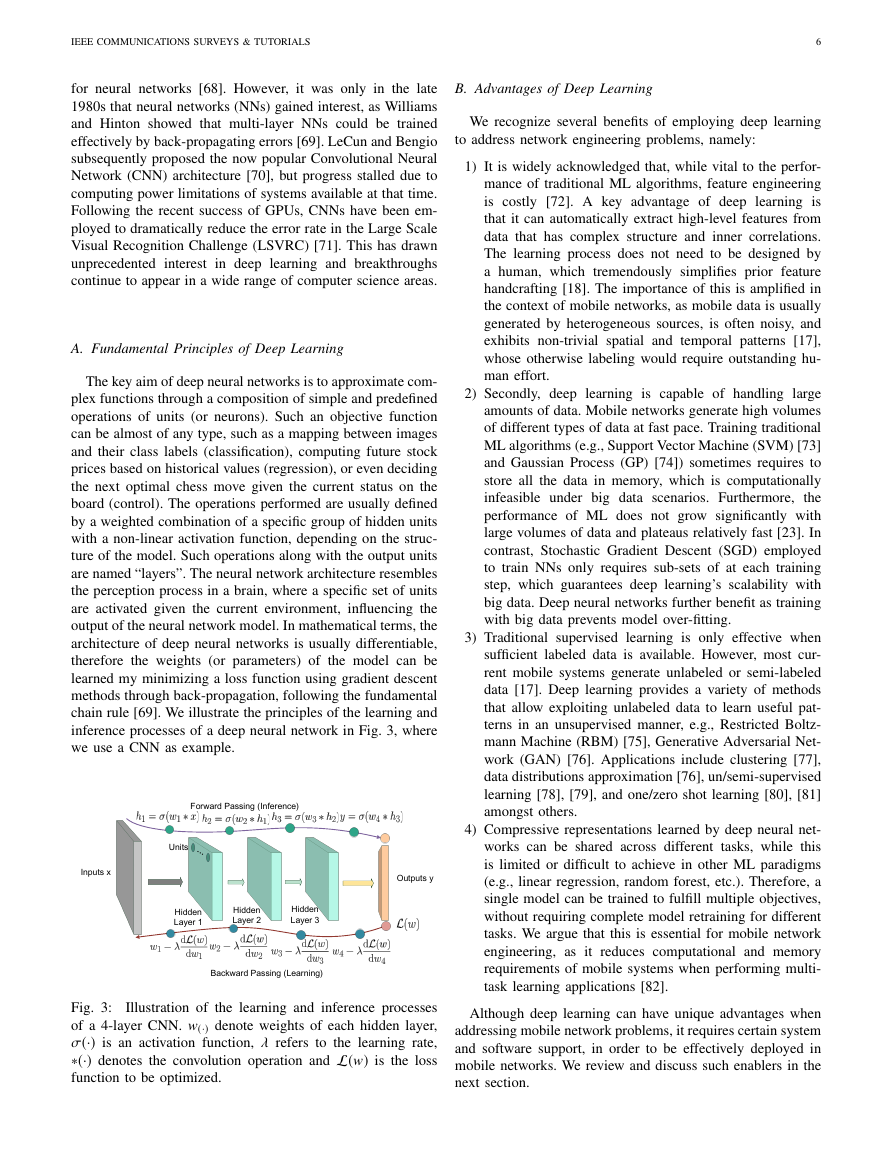

IEEECOMMUNICATIONSSURVEYS&TUTORIALS6forneuralnetworks[68].However,itwasonlyinthelate1980sthatneuralnetworks(NNs)gainedinterest,asWilliamsandHintonshowedthatmulti-layerNNscouldbetrainedeffectivelybyback-propagatingerrors[69].LeCunandBengiosubsequentlyproposedthenowpopularConvolutionalNeuralNetwork(CNN)architecture[70],butprogressstalledduetocomputingpowerlimitationsofsystemsavailableatthattime.FollowingtherecentsuccessofGPUs,CNNshavebeenem-ployedtodramaticallyreducetheerrorrateintheLargeScaleVisualRecognitionChallenge(LSVRC)[71].Thishasdrawnunprecedentedinterestindeeplearningandbreakthroughscontinuetoappearinawiderangeofcomputerscienceareas.A.FundamentalPrinciplesofDeepLearningThekeyaimofdeepneuralnetworksistoapproximatecom-plexfunctionsthroughacompositionofsimpleandpredefinedoperationsofunits(orneurons).Suchanobjectivefunctioncanbealmostofanytype,suchasamappingbetweenimagesandtheirclasslabels(classification),computingfuturestockpricesbasedonhistoricalvalues(regression),orevendecidingthenextoptimalchessmovegiventhecurrentstatusontheboard(control).Theoperationsperformedareusuallydefinedbyaweightedcombinationofaspecificgroupofhiddenunitswithanon-linearactivationfunction,dependingonthestruc-tureofthemodel.Suchoperationsalongwiththeoutputunitsarenamed“layers”.Theneuralnetworkarchitectureresemblestheperceptionprocessinabrain,whereaspecificsetofunitsareactivatedgiventhecurrentenvironment,influencingtheoutputoftheneuralnetworkmodel.Inmathematicalterms,thearchitectureofdeepneuralnetworksisusuallydifferentiable,thereforetheweights(orparameters)ofthemodelcanbelearnedmyminimizingalossfunctionusinggradientdescentmethodsthroughback-propagation,followingthefundamentalchainrule[69].WeillustratetheprinciplesofthelearningandinferenceprocessesofadeepneuralnetworkinFig.3,whereweuseaCNNasexample.Inputs xOutputs yHidden Layer 1Hidden Layer 2Hidden Layer 3Forward Passing (Inference)Backward Passing 
(Learning)UnitsFig.3:Illustrationofthelearningandinferenceprocessesofa4-layerCNN.w(·)denoteweightsofeachhiddenlayer,σ(·)isanactivationfunction,λreferstothelearningrate,∗(·)denotestheconvolutionoperationandL(w)isthelossfunctiontobeoptimized.B.AdvantagesofDeepLearningWerecognizeseveralbenefitsofemployingdeeplearningtoaddressnetworkengineeringproblems,namely:1)Itiswidelyacknowledgedthat,whilevitaltotheperfor-manceoftraditionalMLalgorithms,featureengineeringiscostly[72].Akeyadvantageofdeeplearningisthatitcanautomaticallyextracthigh-levelfeaturesfromdatathathascomplexstructureandinnercorrelations.Thelearningprocessdoesnotneedtobedesignedbyahuman,whichtremendouslysimplifiespriorfeaturehandcrafting[18].Theimportanceofthisisamplifiedinthecontextofmobilenetworks,asmobiledataisusuallygeneratedbyheterogeneoussources,isoftennoisy,andexhibitsnon-trivialspatialandtemporalpatterns[17],whoseotherwiselabelingwouldrequireoutstandinghu-maneffort.2)Secondly,deeplearningiscapableofhandlinglargeamountsofdata.Mobilenetworksgeneratehighvolumesofdifferenttypesofdataatfastpace.TrainingtraditionalMLalgorithms(e.g.,SupportVectorMachine(SVM)[73]andGaussianProcess(GP)[74])sometimesrequirestostoreallthedatainmemory,whichiscomputationallyinfeasibleunderbigdatascenarios.Furthermore,theperformanceofMLdoesnotgrowsignificantlywithlargevolumesofdataandplateausrelativelyfast[23].Incontrast,StochasticGradientDescent(SGD)employedtotrainNNsonlyrequiressub-setsofateachtrainingstep,whichguaranteesdeeplearning’sscalabilitywithbigdata.Deepneuralnetworksfurtherbenefitastrainingwithbigdatapreventsmodelover-fitting.3)Traditionalsupervisedlearningisonlyeffectivewhensufficientlabeleddataisavailable.However,mostcur-rentmobilesystemsgenerateunlabeledorsemi-labeleddata[17].Deeplearningprovidesavarietyofmethodsthatallowexploitingunlabeleddatatolearnusefulpat-ternsinanunsupervisedmanner,e.g.,RestrictedBoltz-mannMachine(RBM)[75],GenerativeAdversarialNet-work(GAN)[76].Applicationsincludeclustering[77],datadistributionsapp
roximation[76],un/semi-supervisedlearning[78],[79],andone/zeroshotlearning[80],[81]amongstothers.4)Compressiverepresentationslearnedbydeepneuralnet-workscanbesharedacrossdifferenttasks,whilethisislimitedordifficulttoachieveinotherMLparadigms(e.g.,linearregression,randomforest,etc.).Therefore,asinglemodelcanbetrainedtofulfillmultipleobjectives,withoutrequiringcompletemodelretrainingfordifferenttasks.Wearguethatthisisessentialformobilenetworkengineering,asitreducescomputationalandmemoryrequirementsofmobilesystemswhenperformingmulti-tasklearningapplications[82].Althoughdeeplearningcanhaveuniqueadvantageswhenaddressingmobilenetworkproblems,itrequirescertainsystemandsoftwaresupport,inordertobeeffectivelydeployedinmobilenetworks.Wereviewanddiscusssuchenablersinthenextsection.�
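The learning procedure of Section III-A can be illustrated with a small example. So can the mini-batch property of SGD from item 2) of Section III-B. The following is a minimal pure-Python sketch, not the CNN setup of Fig. 3. It assumes a hypothetical toy regression task (fitting y = x² on [-1, 1]), a single hidden layer of tanh units instead of convolutional layers, and a mean-squared-error loss L(w). All function names are illustrative. Back-propagation applies the chain rule layer by layer to obtain the gradient of L(w). Each SGD step then updates w ← w - λ·∂L/∂w using only one mini-batch, where λ is the learning rate (`lr` in the code).

```python
import math
import random


def init_params(n_hidden, seed=0):
    """Small random weights for a 1-input -> n_hidden tanh units -> 1-output net."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],  # input -> hidden
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],  # hidden -> output
        "b2": 0.0,
    }


def forward(p, x):
    """Inference (forward pass): h = tanh(w1*x + b1), y_hat = w2 . h + b2."""
    h = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    y_hat = sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"]
    return h, y_hat


def batch_loss(p, batch):
    """Mean squared error L(w) over a list of (x, y) pairs."""
    return sum((forward(p, x)[1] - y) ** 2 for x, y in batch) / len(batch)


def backprop(p, batch):
    """Gradient of batch_loss w.r.t. every parameter, obtained with the chain rule."""
    n = len(p["w1"])
    g = {"w1": [0.0] * n, "b1": [0.0] * n, "w2": [0.0] * n, "b2": 0.0}
    for x, y in batch:
        h, y_hat = forward(p, x)
        d_out = 2.0 * (y_hat - y) / len(batch)  # dL/dy_hat
        g["b2"] += d_out
        for j in range(n):
            g["w2"][j] += d_out * h[j]
            # back through tanh: d tanh(z)/dz = 1 - tanh(z)^2
            d_pre = d_out * p["w2"][j] * (1.0 - h[j] ** 2)
            g["w1"][j] += d_pre * x
            g["b1"][j] += d_pre
    return g


def sgd_train(p, data, lr=0.05, batch_size=8, epochs=300, seed=1):
    """Mini-batch SGD: every update looks at only batch_size samples."""
    rng = random.Random(seed)
    data = list(data)  # shuffle a copy, not the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            g = backprop(p, data[i:i + batch_size])
            p["b2"] -= lr * g["b2"]  # w <- w - lr * dL/dw
            for k in ("w1", "b1", "w2"):
                p[k] = [w - lr * gw for w, gw in zip(p[k], g[k])]
    return p


# Hypothetical toy data: 65 evenly spaced points of y = x^2 on [-1, 1].
data = [(i / 32, (i / 32) ** 2) for i in range(-32, 33)]
params = init_params(n_hidden=8)
before = batch_loss(params, data)
sgd_train(params, data)
after = batch_loss(params, data)
print(f"MSE before training: {before:.4f}, after: {after:.4f}")
```

No single update ever needs the whole dataset in memory, only one mini-batch. This is the property item 2) relies on when data volumes grow. A finite-difference check of `backprop` against `batch_loss` is a cheap way to confirm that the chain-rule gradients are correct.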
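Item 4) of Section III-B argues that shared representations cut memory requirements in multi-task settings. Counting parameters makes this concrete. The sketch below is only an illustration, with hypothetical layer sizes and helper names. It compares training one independent single-hidden-layer model per task against hard parameter sharing, in which one encoder's representation is reused by small task-specific output heads. It counts parameters only and says nothing about accuracy.

```python
def dense_param_count(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def separate_models(n_in, n_hidden, head_sizes):
    """One independent encoder and output head per task."""
    return sum(
        dense_param_count(n_in, n_hidden) + dense_param_count(n_hidden, k)
        for k in head_sizes
    )


def shared_encoder(n_in, n_hidden, head_sizes):
    """Hard parameter sharing: a single encoder reused by all task heads."""
    return dense_param_count(n_in, n_hidden) + sum(
        dense_param_count(n_hidden, k) for k in head_sizes
    )


# Hypothetical sizes: 100 input features, 64 hidden units,
# task A = 10-way classification, task B = scalar regression.
n_in, n_hidden, heads = 100, 64, [10, 1]
print(separate_models(n_in, n_hidden, heads))  # 13643
print(shared_encoder(n_in, n_hidden, heads))   # 7179
```

Here the encoder accounts for most of the parameters, so storing it once roughly halves the model size for two tasks. The saving grows with every additional task. At inference time, the shared features are also computed only once and then fed to every head.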

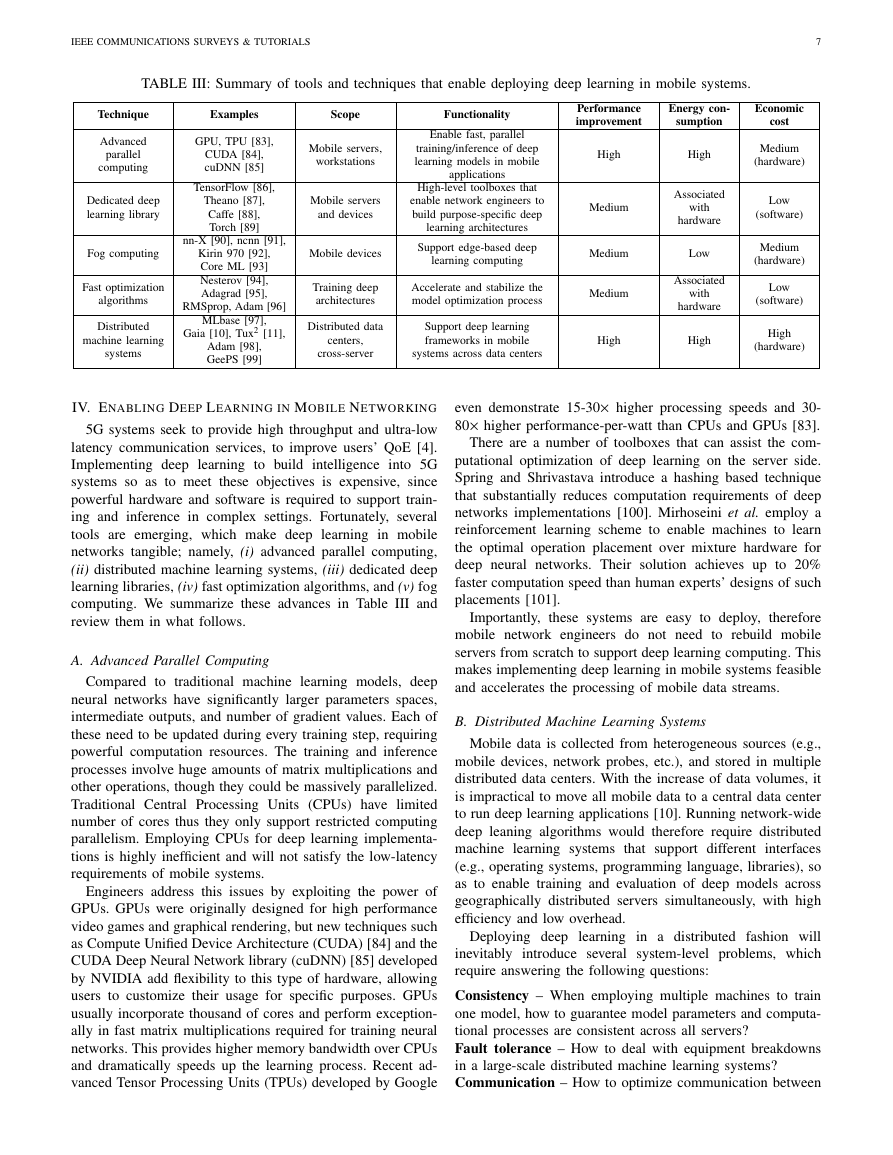

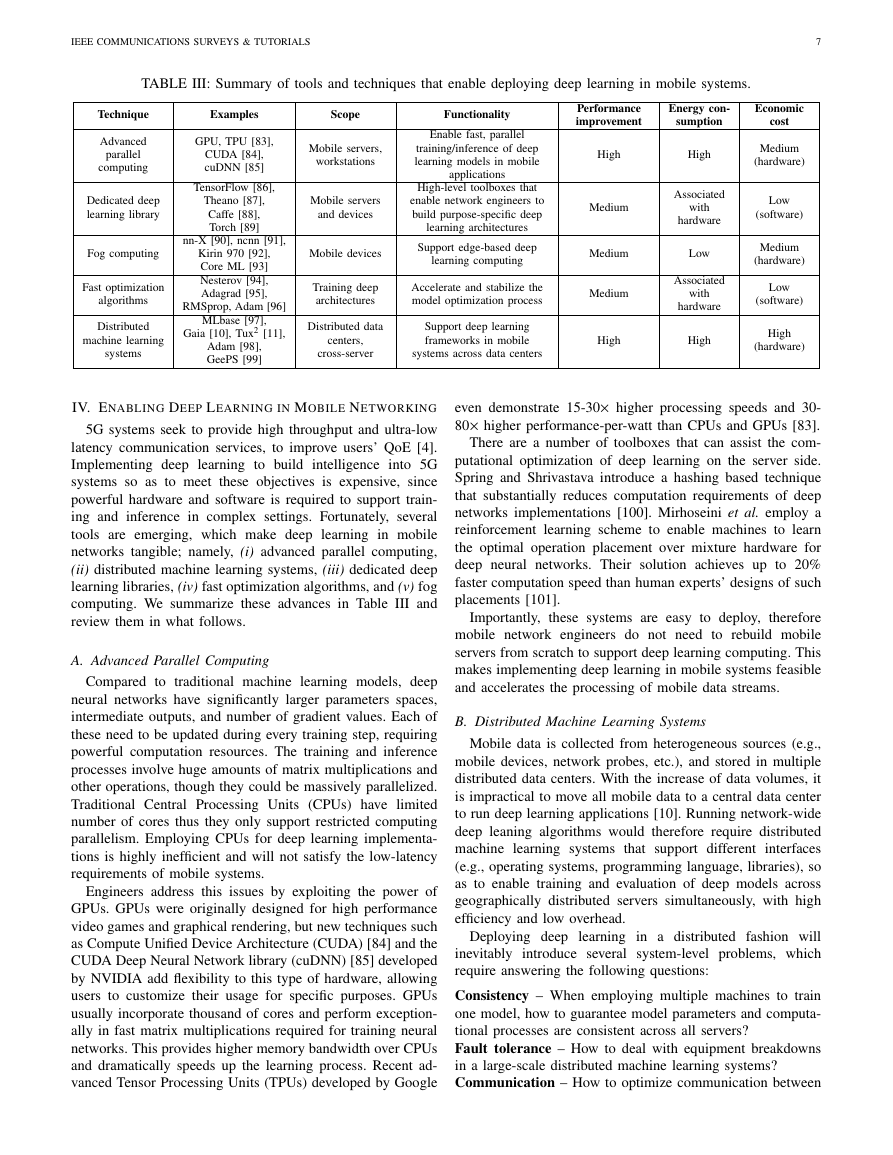

TABLE III: Summary of tools and techniques that enable deploying deep learning in mobile systems.

| Technique | Examples | Scope | Functionality | Performance improvement | Energy consumption | Economic cost |
|---|---|---|---|---|---|---|
| Advanced parallel computing | GPU, TPU [83], CUDA [84], cuDNN [85] | Mobile servers, workstations | Enable fast, parallel training/inference of deep learning models in mobile applications | High | High | Medium (hardware) |
| Dedicated deep learning library | TensorFlow [86], Theano [87], Caffe [88], Torch [89] | Mobile servers and devices | High-level toolboxes that enable network engineers to build purpose-specific deep learning architectures | Medium | Associated with hardware | Low (software) |
| Fog computing | nn-X [90], ncnn [91], Kirin 970 [92], Core ML [93] | Mobile devices | Support edge-based deep learning computing | Medium | Low | Medium (hardware) |
| Fast optimization algorithms | Nesterov [94], Adagrad [95], RMSprop, Adam [96] | Training deep architectures | Accelerate and stabilize the model optimization process | Medium | Associated with hardware | Low (software) |
| Distributed machine learning systems | MLbase [97], Gaia [10], Tux2 [11], Adam [98], GeePS [99] | Distributed data centers, cross-server | Support deep learning frameworks in mobile systems across data centers | High | High | High (hardware) |

IV. ENABLING DEEP LEARNING IN MOBILE NETWORKING

5G systems seek to provide high throughput and ultra-low latency communication services, to improve users' QoE [4]. Implementing deep learning to build intelligence into 5G systems so as to meet these objectives is expensive, since powerful hardware and software are required to support training and inference in complex settings. Fortunately, several emerging tools make deep learning in mobile networks tangible, namely (i) advanced parallel computing, (ii) distributed machine learning systems, (iii) dedicated deep learning libraries, (iv) fast optimization algorithms, and (v) fog computing. We summarize these advances in Table III and review them in what follows.

A. Advanced Parallel Computing

Compared to traditional machine learning models, deep neural networks have significantly larger parameter spaces, more intermediate outputs, and more gradient values. All of these must be computed or updated during every training step, which requires powerful computation resources. The training and inference processes involve huge amounts of matrix multiplications and other operations, which can be massively parallelized. Traditional Central Processing Units (CPUs) have a limited number of cores, thus they only support restricted computing parallelism. Employing CPUs for deep learning implementations is highly inefficient and will not satisfy the low-latency requirements of mobile systems.

Engineers address these issues by exploiting the power of GPUs. GPUs were originally designed for high-performance video games and graphical rendering. However, new techniques developed by NVIDIA add flexibility to this type of hardware, allowing users to customize its usage for specific purposes. These include the Compute Unified Device Architecture (CUDA) [84] and the CUDA Deep Neural Network library (cuDNN) [85]. GPUs usually incorporate thousands of cores and perform exceptionally well in the fast matrix multiplications required for training neural networks. They also offer higher memory bandwidth than CPUs, which, together with this parallelism, dramatically speeds up the learning process. Recent advanced Tensor Processing Units (TPUs) developed by Google even demonstrate 15-30× higher processing speeds and 30-80× higher performance-per-watt than CPUs and GPUs [83].

There are a number of toolboxes that can assist the computational optimization of deep learning on the server side. Spring and Shrivastava introduce a hashing-based technique that substantially reduces the computation requirements of deep network implementations [100]. Mirhoseini et al. employ a reinforcement learning scheme that lets machines learn the optimal placement of operations over heterogeneous hardware for deep neural networks. Their solution achieves up to 20% faster computation than placements designed by human experts [101]. Importantly, these systems are easy to deploy, therefore mobile network engineers do not need to rebuild mobile servers from scratch to support deep learning computing. This makes implementing deep learning in mobile systems feasible and accelerates the processing of mobile data streams.

B. Distributed Machine Learning Systems

Mobile data is collected from heterogeneous sources (e.g., mobile devices, network probes, etc.), and stored in multiple distributed data centers. With the increase of data volumes, it is impractical to move all mobile data to a central data center to run deep learning applications [10]. Running network-wide deep learning algorithms would therefore require distributed machine learning systems that support different interfaces (e.g., operating systems, programming languages, libraries). Such systems enable the training and evaluation of deep models across geographically distributed servers simultaneously, with high efficiency and low overhead.

Deploying deep learning in a distributed fashion will inevitably introduce several system-level problems, which require answering the following questions:

Consistency – When employing multiple machines to train one model, how to guarantee that model parameters and computational processes are consistent across all servers?

Fault tolerance – How to deal with equipment breakdowns in large-scale distributed machine learning systems?

Communication – How to optimize communication between nodes in a cluster and how to avoid congestion?

Storage – How to design efficient storage mechanisms tailored to different environments (e.g., distributed clusters, single machines, GPUs), given I/O and data processing diversity?

Resource management – How to assign workloads to nodes in a cluster, while making sure they remain well coordinated?

Programming model – How to design programming interfaces so that users can deploy machine learning, and how to support multiple programming languages?

There exist several distributed machine learning systems that facilitate deep learning in mobile networking applications. Kraska et al. introduce a distributed system named MLbase, which enables users to intelligently specify, select, optimize, and parallelize ML algorithms [97]. Their system helps non-experts deploy a wide range of ML methods, and lets them optimize and run ML applications across different servers. Hsieh et al. develop a geo-distributed ML system called Gaia, which breaks the throughput bottleneck by employing an advanced communication mechanism over Wide Area Networks (WANs), while preserving the accuracy of ML algorithms [10]. Their proposal supports versatile ML interfaces (e.g. TensorFlow, Caffe), without requiring significant changes to the ML algorithm itself. This system enables deployments of complex deep learning applications over large-scale mobile networks.

Xing et al. develop a large-scale machine learning platform to support big data applications [102]. Their architecture achieves efficient model and data parallelization, enabling parameter state synchronization with low communication cost. Xiao et al. propose a distributed graph engine for ML named TUX2, to support data layout optimization across machines and reduce cross-machine communication [11]. They demonstrate remarkable performance in terms of runtime and convergence on a large dataset with up to 64 billion edges. Chilimbi et al. build a distributed, efficient, and scalable system named "Adam"² tailored to the training of deep models [98]. Their architecture demonstrates impressive performance in terms of throughput, delay, and fault tolerance. Another dedicated distributed deep learning system, called GeePS, is developed by Cui et al. [99]. Their framework allows
data parallelization on distributed GPUs, and demonstrates higher training throughput and faster convergence.

C. Dedicated Deep Learning Libraries

Building a deep learning model from scratch can prove complicated for engineers, as this requires defining the forward behavior and gradient propagation operations at each layer, in addition to CUDA coding for GPU parallelization. With the growing popularity of deep learning, several dedicated libraries simplify this process. Most of these toolboxes work with multiple programming languages, and are built with GPU acceleration and automatic differentiation support. This eliminates the need for hand-crafted definitions of gradient propagation. We summarize these libraries below.

² Note that this is distinct from the Adam optimizer discussed in Sec. IV-D.

TensorFlow³ is a machine learning library developed by Google [86]. It enables deploying computation graphs on CPUs, GPUs, and even mobile devices [103], allowing ML implementation on both single and distributed architectures. Although originally designed for ML and deep neural network applications, TensorFlow is also suitable for other data-driven research purposes. Detailed documentation and tutorials exist for Python, while other programming languages such as C, Java, and Go are also supported. Building upon TensorFlow, several dedicated deep learning toolboxes were released to provide higher-level programming interfaces, including Keras⁴ and TensorLayer [104].

Theano is a Python library that allows users to efficiently define, optimize, and evaluate numerical computations involving multi-dimensional data [87]. It provides both GPU and CPU modes, which enables users to tailor their programs to individual machines. Theano has a large user group and support community, and was one of the most popular deep learning tools, though its popularity is decreasing as its core ideas and attributes are absorbed by TensorFlow.

Caffe(2) is a dedicated deep learning framework developed by Berkeley AI Research [88], and the latest version, Caffe2,⁵ was recently released by Facebook. It allows users to train neural networks on multiple GPUs within distributed systems, and supports deep learning implementations on mobile operating systems, such as iOS and Android. Therefore, it has the potential to play an important role in
the future of mobile edge computing.

(Py)Torch is a scientific computing framework with wide support for machine learning models and algorithms [89]. It was originally developed in the Lua language, but developers later released an improved Python version [105]. It is a lightweight toolbox that can run on embedded systems such as smartphones, but lacks comprehensive documentation. PyTorch is now officially supported and maintained by Facebook and mainly employed for research purposes.

MXNET is a flexible deep learning library that provides interfaces for multiple languages (e.g., C++, Python, Matlab, R, etc.) [106]. It supports different levels of machine learning models, from logistic regression to GANs. MXNET provides fast numerical computation for both single-machine and distributed ecosystems. It wraps workflows commonly used in deep learning into high-level functions, such that standard neural networks can be easily constructed without substantial coding effort. MXNET is the official deep learning framework in Amazon.

Although less popular, there are other excellent deep learning libraries, such as CNTK,⁶ Deeplearning4j,⁷ and Lasagne,⁸

³ TensorFlow, https://www.tensorflow.org/
⁴ Keras deep learning library, https://github.com/fchollet/keras
⁵ Caffe2, https://caffe2.ai/
⁶ MS Cognitive Toolkit, https://www.microsoft.com/en-us/cognitive-toolkit/
⁷ Deeplearning4j, http://deeplearning4j.org
⁸ Lasagne, https://github.com/Lasagne
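The hashing-based technique of Spring and Shrivastava [100], mentioned earlier in this section, reduces computation by evaluating only a subset of neurons. The sketch below illustrates the general idea under stated assumptions: it uses random-hyperplane (SimHash) locality-sensitive hashing to retrieve neurons whose weight vectors point in a direction similar to the input, and computes ReLU activations only for those. It is a simplified illustration in the spirit of that work, not the authors' algorithm; the names `SimHashIndex` and `sparse_layer` are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


class SimHashIndex:
    """Hypothetical index: buckets neurons by the signs of random projections of their weights."""

    def __init__(self, dim: int, num_bits: int, seed: int = 0):
        rng = random.Random(seed)
        # One random Gaussian hyperplane per signature bit.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]
        self.buckets: Dict[Tuple[bool, ...], List[int]] = {}

    def signature(self, v: Vector) -> Tuple[bool, ...]:
        # Vectors separated by a small angle tend to fall on the same side of each plane.
        return tuple(dot(p, v) >= 0.0 for p in self.planes)

    def add(self, neuron_id: int, weights: Vector) -> None:
        self.buckets.setdefault(self.signature(weights), []).append(neuron_id)

    def candidates(self, x: Vector) -> List[int]:
        # Neurons sharing the input's signature are likely to have a large inner product with it.
        return self.buckets.get(self.signature(x), [])


def sparse_layer(x: Vector, weights: List[Vector], index: SimHashIndex) -> Dict[int, float]:
    """Compute ReLU activations only for the neurons retrieved from the hash index."""
    return {i: max(0.0, dot(weights[i], x)) for i in index.candidates(x)}
```

Only the neurons in the matching bucket are evaluated, so the per-input cost scales with the bucket size rather than the layer width. Practical LSH schemes typically query several independent hash tables to reduce the chance of missing a strongly activated neuron.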
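The consistency question and the data parallelization discussed for distributed systems such as GeePS [99] and the platform of Xing et al. [102] can be made concrete with a minimal sketch of synchronous data-parallel training. It assumes a toy linear-regression model, workers simulated within a single process, and a plain gradient-averaging step standing in for an all-reduce or parameter-server exchange. It does not reproduce the mechanism of any system surveyed here; `worker_gradient` and `train_data_parallel` are hypothetical names.

```python
import random
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def worker_gradient(w: float, b: float, shard: List[Sample]) -> Tuple[float, float]:
    """Mean-squared-error gradient of y ~ w*x + b on one worker's data shard."""
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2.0 * err * x
        gb += 2.0 * err
    n = len(shard)
    return gw / n, gb / n


def train_data_parallel(data: List[Sample], num_workers: int = 4,
                        lr: float = 0.05, steps: int = 500) -> Tuple[float, float]:
    # Partition the dataset into disjoint shards, one per simulated worker.
    shards = [data[i::num_workers] for i in range(num_workers)]
    # A single parameter copy stands in for the identical replicas held by all workers.
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Each worker computes a gradient on its own shard using the current parameters.
        grads = [worker_gradient(w, b, s) for s in shards]
        # Synchronous aggregation: average the worker gradients so that every replica
        # applies exactly the same update and the replicas stay consistent.
        gw = sum(g[0] for g in grads) / num_workers
        gb = sum(g[1] for g in grads) / num_workers
        w -= lr * gw
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    random.seed(0)
    xs = [random.uniform(-1, 1) for _ in range(200)]
    data = [(x, 3.0 * x + 0.5) for x in xs]  # noiseless toy target: y = 3x + 0.5
    print(train_data_parallel(data))
```

When the shards have equal size, the averaged gradient equals the full-batch gradient, so the synchronous scheme follows the same trajectory as single-machine training. Asynchronous or stale-synchronous variants trade some of this consistency for lower waiting and communication costs.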
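The automatic differentiation support that these libraries provide, which removes the need for hand-crafted gradient propagation, can be illustrated with a minimal sketch of scalar reverse-mode differentiation. It assumes scalar values and only three operations (addition, multiplication, tanh); the libraries above operate on tensors and optimized computation graphs, so this is not how any of them is implemented. The class name `Value` is hypothetical.

```python
import math
from typing import List, Tuple


class Value:
    """A scalar node in a computation graph that records how it was produced."""

    def __init__(self, data: float, parents: Tuple["Value", ...] = (),
                 local_grads: Tuple[float, ...] = ()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs this node was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other: "Value") -> "Value":
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other: "Value") -> "Value":
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def tanh(self) -> "Value":
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self) -> None:
        # Order the graph topologically so each node is processed after all its consumers.
        order: List["Value"] = []
        seen = set()

        def visit(node: "Value") -> None:
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Chain rule from the output back to the inputs; gradients accumulate when a
        # node is used more than once.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


if __name__ == "__main__":
    # A single neuron y = tanh(w*x + b); no gradient formula is written by hand.
    x, w, b = Value(0.5), Value(-1.2), Value(0.3)
    y = (w * x + b).tanh()
    y.backward()
    # Compare with the hand-derived dy/dw = (1 - y^2) * x.
    print(w.grad, (1 - y.data ** 2) * x.data)
```

The user only writes the forward computation; each operation records its local derivative, and `backward` composes them via the chain rule, which is the role automatic differentiation plays in the libraries summarized above.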
