Web Semantics: Science, Services and Agents on the World Wide Web 3 (2005) 158–182

LUBM: A benchmark for OWL knowledge base systems

Yuanbo Guo∗, Zhengxiang Pan, Jeff Heflin

Computer Science & Engineering Department, Lehigh University, 19 Memorial Drive West, Bethlehem, PA 18015, USA

Received 1 June 2005; accepted 2 June 2005

Abstract

We describe our method for benchmarking Semantic Web knowledge base systems with respect to use in large OWL applications. We present the Lehigh University Benchmark (LUBM) as an example of how to design such benchmarks. The LUBM features an ontology for the university domain, synthetic OWL data scalable to an arbitrary size, 14 extensional queries representing a variety of properties, and several performance metrics. The LUBM can be used to evaluate systems with different reasoning capabilities and storage mechanisms. We demonstrate this with an evaluation of two memory-based systems and two systems with persistent storage.

© 2005 Elsevier B.V. All rights reserved.

Keywords: Semantic Web; Knowledge base system; Lehigh University Benchmark; Evaluation

1. Introduction

Various knowledge base systems (KBS) have been developed for storing, reasoning over, and querying Semantic Web information. They differ in a number of important ways. For instance, many KBSs are main memory-based while others use secondary storage to provide persistence. Another key difference is the degree of reasoning provided by the KBS. Some KBSs only support RDF/RDFS [44] inferencing while others aim at providing extra OWL [10] reasoning.

In this paper, we consider the issue of how to choose an appropriate KBS for a large OWL application. Here, we consider a large application to be one that requires the processing of megabytes of data. Generally, there are two basic requirements for such systems. First, the enormous amount of data means that scalability and efficiency become crucial issues. Secondly, the system must provide sufficient reasoning capabilities to support the semantic requirements of the application.

∗ Corresponding author. Tel.: +1 610 758 4719; fax: +1 610 758 4096.
E-mail addresses: yug2@cse.lehigh.edu (Y. Guo), zhp2@cse.lehigh.edu (Z. Pan), heflin@cse.lehigh.edu (J. Heflin).

It is difficult to evaluate KBSs with respect to these requirements. In order to evaluate the systems in terms of scalability, we need Semantic Web data that come in a range of large sizes and commit to semantically rich ontologies. However, there are few such datasets available on the current Semantic Web. As for the reasoning requirement, the ideal KBS would be sound and complete for OWL Full. Unfortunately, increased reasoning capability usually means an increase in data processing time and/or query response time as well. Given this, one might think that the next best thing is to choose a KBS that is just powerful enough to meet the reasoning needs of the application. However, this is easier said than done. Many systems are only sound and complete for unnamed subsets of OWL. For example, most description logic (DL) reasoners are complete for a language more expressive than OWL Lite (if we ignore datatypes) but, ironically, less expressive than OWL DL. As such, although some systems are incomplete with respect to OWL, they may still be useful because they scale better or respond to queries more quickly. Therefore, to evaluate existing KBSs for use in such OWL applications, it is important to be able to measure the tradeoffs between scalability and reasoning capability.

1570-8268/$ – see front matter © 2005 Elsevier B.V. All rights reserved.
doi:10.1016/j.websem.2005.06.005

In light of the above, there is a pressing need for benchmarks that facilitate the evaluation of Semantic Web KBSs in a standard and systematic way. Ideally, we should have a suite of such benchmarks, representing different workloads. These benchmarks should be based on well-established practices for benchmarking databases [3,4,8,33], but must be extended to support the unique properties of the Semantic Web. As a first step, we have designed a benchmark that fills a void we consider particularly important: extensional queries over a large dataset that commits to a single ontology of moderate complexity and size. Named the Lehigh University Benchmark (LUBM), this benchmark is based on an ontology for the university domain. Its test data are synthetically generated instance data over that ontology; they are random and repeatable and can be scaled to an arbitrary size. It offers 14 test queries over the data. It also provides a set of performance metrics used to evaluate systems with respect to the above-mentioned requirements.

A key benefit of our benchmarking approach is that it allows us to empirically compare very different knowledge base systems. To demonstrate this, we have conducted an experiment using three different classes of systems: systems supporting RDFS reasoning, systems providing partial OWL Lite reasoning, and systems that are complete or almost complete for OWL Lite. The representatives of these classes that we chose are Sesame, DLDB-OWL, and OWLJessKB, respectively. In addition to having different reasoning capabilities, these systems differ in their storage mechanisms and query evaluation. We have evaluated two memory-based systems (memory-based Sesame and OWLJessKB) and two systems with persistent storage (database-based Sesame and DLDB-OWL). We describe how we settled on these systems and set up the experiment in the benchmark framework. We show the experimental results and discuss the performance of the systems from several different aspects. We also discuss some interesting observations. Based on these, we highlight some issues with respect to the development and improvement of such systems, and suggest some potential ways of using and developing them.

This work makes two major contributions. First, we introduce our methodology for benchmarking Semantic Web knowledge base systems and demonstrate it using the LUBM as a concrete instance. We believe our work could benefit the Semantic Web community by inspiring and guiding the development of other benchmarks. Secondly, the experiment we have done could help developers identify some important directions in advancing the state of the art of Semantic Web KBSs.

The outline of the paper is as follows: Section 2 elaborates on the LUBM. Section 3 describes the aforementioned experiment. Section 4 discusses related work. Section 5 considers issues in applying the benchmark. We conclude in Section 6.

2. Lehigh University Benchmark for OWL

As mentioned earlier, this paper presents a benchmark that is intended to fill a void that we considered particularly important. The benchmark was designed with the following goals in mind:

(1) Support extensional queries: Extensional queries are queries about the instance data over ontologies. There already exist DL benchmarks that focus on intensional queries (i.e., queries about classes and properties). However, our conjecture is that the majority of Semantic Web applications will want to use data to answer questions, and that reasoning about subsumption will typically be a means to an end, not an end in itself. Therefore, it is important to have benchmarks that focus on this kind of query.

(2) Arbitrary scaling of data: We also predict that data will by far outnumber ontologies on the Semantic Web of the future. Here, we use the word “data” to refer to assertions about instances, in other words, what is referred to as an ABox in DL terminology. In order to evaluate the ability of systems to handle large ABoxes, we need to be able to vary the size of data and see how the system scales.

(3) Ontology of moderate size and complexity: Existing DL benchmarks have looked at reasoning with large and complex ontologies, while various RDF systems have been evaluated with regard to various RDF Schemas (which could be considered simple ontologies). We felt that it was important to have a benchmark that fell between these two extremes. Furthermore, since our focus is on data, we felt that the ontology should not be too large.

It should be noted that these goals choose only one point in the space of possible benchmarks. We recognize that some researchers may not agree with the importance we placed on these goals, and would suggest alternative benchmarks. We encourage these researchers to consider the lessons learned in this work and develop their own benchmarks.

Given the goals above, we designed the Lehigh University Benchmark. The first version [14] of this benchmark was used for the evaluation of DAML+OIL [9] repositories, but has since been extended to use an OWL ontology and dataset. We introduce the key components of the benchmark suite below.

2.1. Benchmark ontology

The ontology used in the benchmark is called Univ-Bench. Univ-Bench describes universities and departments and the activities that occur at them. Its predecessor is the Univ1.0 ontology,¹ which has been used to describe data about actual universities and departments. We chose this ontology expecting that its domain would be familiar to most of the benchmark users.

We have created an OWL version of the Univ-Bench

ontology.2 The ontology is expressed in OWL Lite, the

simplest sublanguage of OWL. We chose to restrict the

ontology (and also the test data) to OWL Lite since

1 http://www.cs.umd.edu/projects/plus/DAML/onts/univ1.0.daml.

2 http://www.lehigh.edu/∼zhp2/2004/0401/univ-bench.owl.

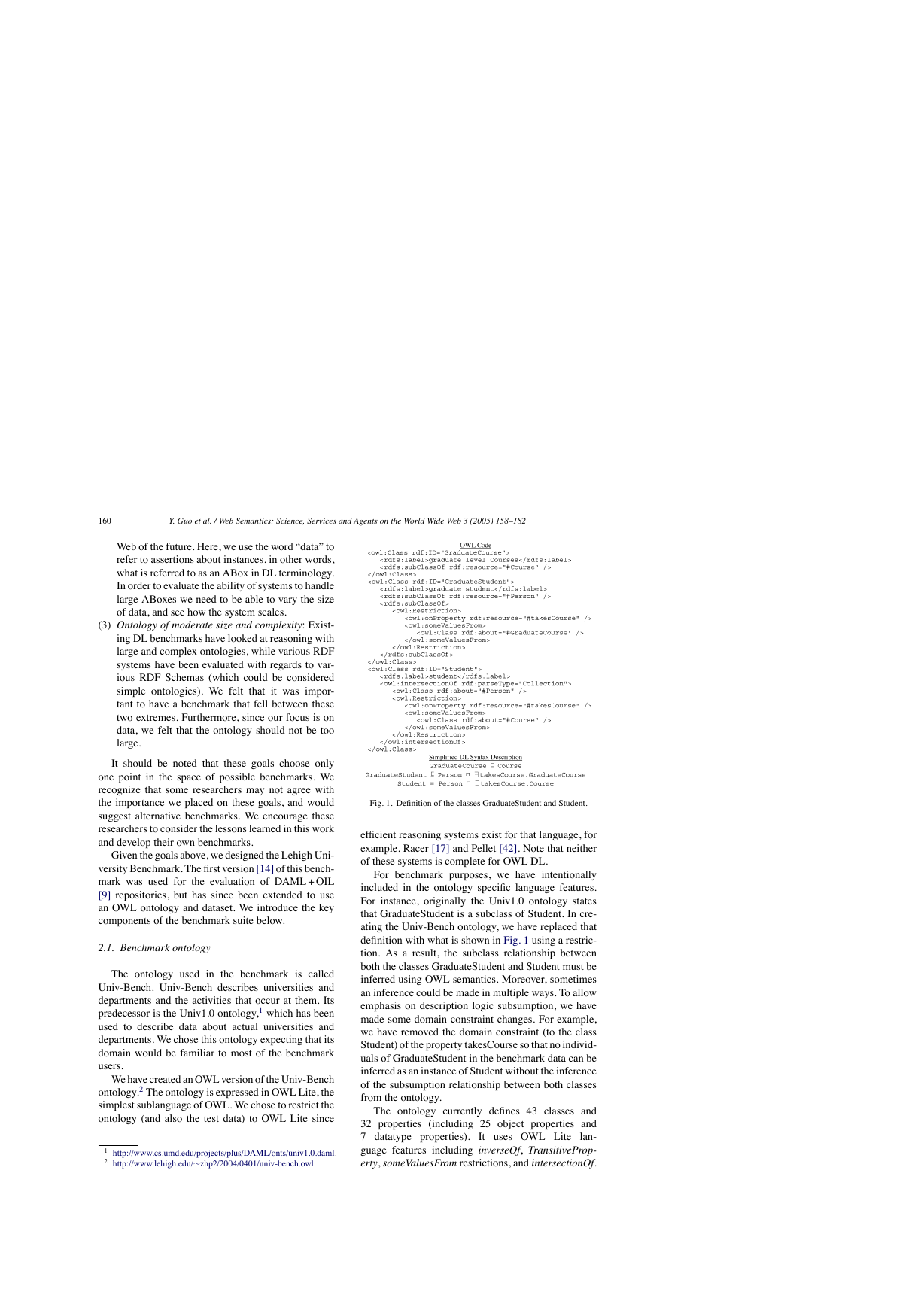

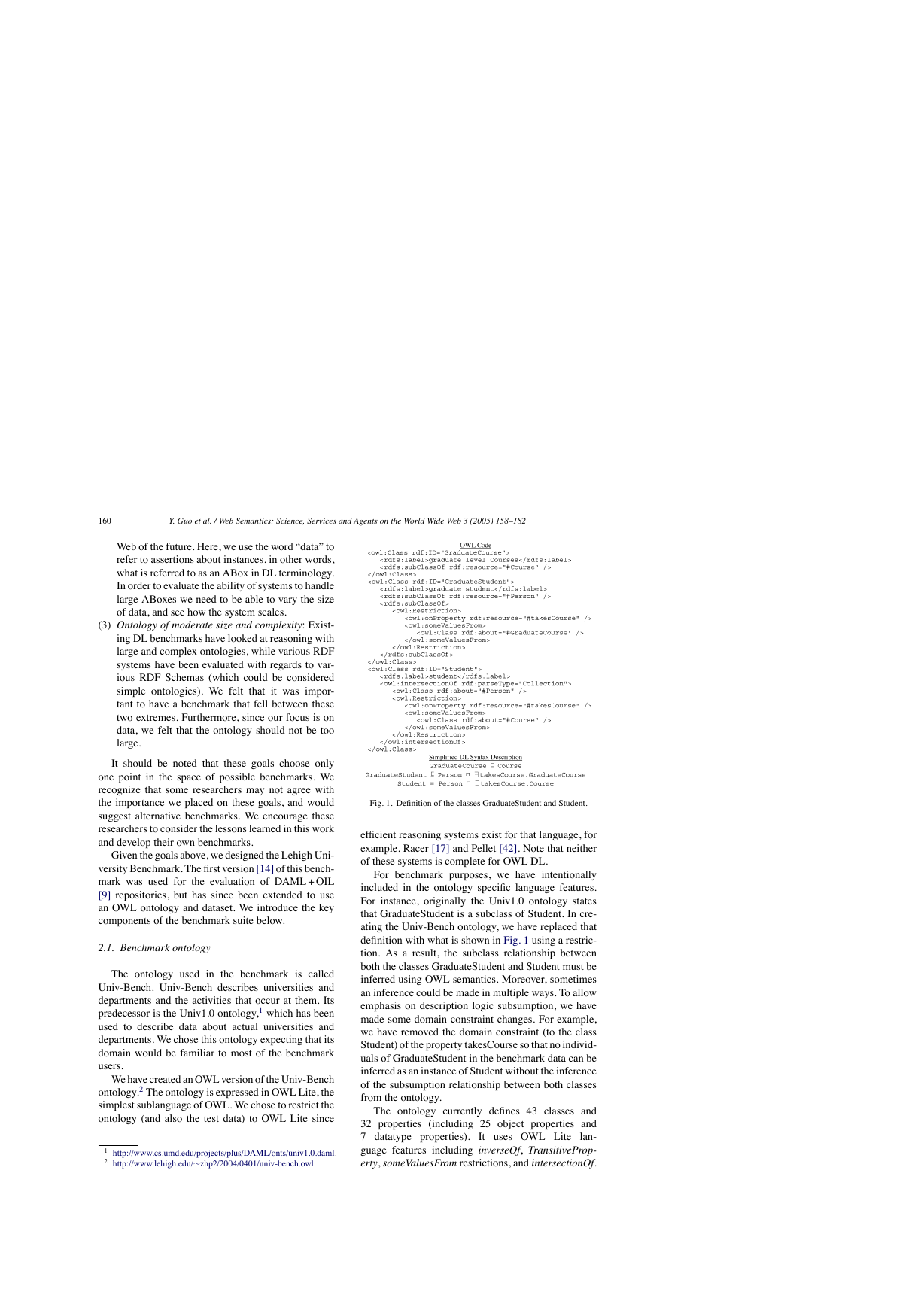

Fig. 1. Definition of the classes GraduateStudent and Student.

efficient reasoning systems exist for that language, for

example, Racer [17] and Pellet [42]. Note that neither

of these systems is complete for OWL DL.

For benchmark purposes, we have intentionally included specific language features in the ontology. For instance, the Univ1.0 ontology originally states that GraduateStudent is a subclass of Student. In creating the Univ-Bench ontology, we have replaced that definition with the restriction-based definition shown in Fig. 1. As a result, the subclass relationship between the classes GraduateStudent and Student must be inferred using OWL semantics. Moreover, an inference can sometimes be made in multiple ways. To keep the emphasis on description logic subsumption, we have made some changes to domain constraints. For example, we have removed the domain constraint (to the class Student) of the property takesCourse, so that no individual of GraduateStudent in the benchmark data can be inferred to be an instance of Student without first inferring, from the ontology, the subsumption relationship between the two classes.
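To make the inference that Fig. 1 forces more concrete, the Python sketch below performs a toy structural subsumption check over conjunctions of named classes and someValuesFrom restrictions. Because the axioms of Fig. 1 are not reproduced in this text, we assume that Student is defined as equivalent to Person ⊓ ∃takesCourse.Course, that GraduateStudent is a subclass of Person ⊓ ∃takesCourse.GraduateCourse, and that GraduateCourse is a subclass of Course. The class `Concept` and the helpers `named_ancestors` and `subsumed_by` are hypothetical names for illustration; this is not a general OWL reasoner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """A conjunction of named classes and someValuesFrom restrictions (property, filler)."""
    names: frozenset = frozenset()
    exists: tuple = ()


# Asserted named-class hierarchy (child -> parents); GraduateCourse below Course is assumed.
TOLD_PARENTS = {"GraduateCourse": {"Course"}}


def named_ancestors(name: str) -> set:
    """The class itself plus every named superclass reachable through TOLD_PARENTS."""
    seen, stack = {name}, [name]
    while stack:
        for parent in TOLD_PARENTS.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def subsumed_by(c: Concept, d: Concept) -> bool:
    """Structural test of c ⊑ d: every conjunct of d must follow from some conjunct of c."""
    c_names = set().union(*(named_ancestors(n) for n in c.names))
    if not d.names <= c_names:
        return False
    return all(
        any(p == prop and subsumed_by(c_filler, d_filler) for p, c_filler in c.exists)
        for prop, d_filler in d.exists
    )


course = Concept(frozenset({"Course"}))
grad_course = Concept(frozenset({"GraduateCourse"}))
# Assumed reading of Fig. 1: Student ≡ Person ⊓ ∃takesCourse.Course
student = Concept(frozenset({"Person"}), (("takesCourse", course),))
# Assumed reading of Fig. 1: GraduateStudent ⊑ Person ⊓ ∃takesCourse.GraduateCourse
graduate_student = Concept(frozenset({"Person"}), (("takesCourse", grad_course),))

print(subsumed_by(graduate_student, student))  # True
print(subsumed_by(student, graduate_student))  # False
```

Since GraduateStudent is only a subclass of its restriction while Student is equivalent to its own, showing that the first expression is subsumed by the second is enough to conclude GraduateStudent ⊑ Student, which is exactly the step a system must perform before it can classify graduate students as students.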

The ontology currently defines 43 classes and 32 properties (including 25 object properties and 7 datatype properties). It uses OWL Lite language features including inverseOf, TransitiveProperty, someValuesFrom restrictions, and intersectionOf. According to a study by Tempich and Volz [34], this ontology can be categorized as a “description logic-style” ontology, which has a moderate number of classes but several restrictions and properties per class.

2.2. Data generation and OWL datasets

Test data of the LUBM are extensional data created over the Univ-Bench ontology. For the LUBM, we have adopted a method of synthetic data generation. This serves multiple purposes. As with the Wisconsin benchmark [3,4], a standard and widely used database benchmark, this allows us to control the selectivity and output size of each test query. However, there are some other specific considerations:

(1) We would like the benchmark data to span a range of sizes, including considerably large ones. It is hard to find real data sources of such sizes that are all based on the same ontology.

(2) We may need the presence of certain kinds of instances in the benchmark data. This allows us to design repeatable tests for as many representative query types as possible. These tests evaluate not only the storage mechanisms for Semantic Web data but also the techniques that exploit formal semantics. We may rely on instances of certain classes and/or properties to test those techniques.

Data generation is carried out by the Univ-Bench Artificial data generator (UBA), a tool we have developed for the benchmark. We have implemented support for OWL datasets in the tool. The generator features random and repeatable data generation. A university is the minimum unit of data generation, and for each university, a set of OWL files describing its departments is generated. Instances of both classes and properties are randomly decided. To make the data as realistic as possible, some restrictions are applied based on common sense and domain investigation. Examples are “a minimum of 15 and a maximum of 25 departments in each university”, “an undergraduate student/faculty ratio between 8 and 14 inclusive”, “each graduate student takes at least one but at most three courses”, and so forth. A detailed profile of the data generated by the tool can be found on the benchmark’s webpage.
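The kind of constrained random generation described above can be sketched as follows. This is not UBA's code: the function `generate_university`, the dictionary layout, and the fixed `faculty` and `grad_students` sizes are hypothetical; only the three ranges (15 to 25 departments, an undergraduate/faculty ratio of 8 to 14, and 1 to 3 courses per graduate student) come from the text.

```python
import random


def generate_university(rng: random.Random, faculty: int = 10, grad_students: int = 20) -> list:
    """Return one dict per department; faculty and grad_students are hypothetical fixed sizes."""
    departments = []
    for d in range(rng.randint(15, 25)):       # 15..25 departments, both ends inclusive
        ratio = rng.randint(8, 14)             # undergraduate/faculty ratio in [8, 14]
        departments.append({
            "name": f"Department{d}",
            "faculty": faculty,
            "undergraduates": faculty * ratio,
            # each graduate student takes at least one and at most three courses
            "courses_per_grad": [rng.randint(1, 3) for _ in range(grad_students)],
        })
    return departments


depts = generate_university(random.Random(0))
print(len(depts), depts[0]["undergraduates"] // depts[0]["faculty"])
```

Passing an explicit `random.Random` instance rather than using the module-level functions keeps every draw tied to one seeded stream, which is what makes the output repeatable.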

The generator identifies universities by assigning them zero-based indexes, i.e., the first university is named University0 and so on. Data generated by the tool are exactly repeatable with respect to universities. This is possible because the tool allows the user to enter an initial seed for the random number generator that is used in the data generation process. Through the tool, we may specify how many and which universities to generate.
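One way to make data repeatable per university, independently of which other universities are generated, is to derive a separate random stream from the user's seed and the university index. The derivation `seed * 1_000_003 + index` and the helpers `university_rng` and `generate` are our own assumptions, not UBA's documented behavior; the simple arithmetic can collide for some (seed, index) pairs, so a real tool might hash the pair instead.

```python
import random


def university_rng(seed: int, index: int) -> random.Random:
    """Hypothetical: a per-university RNG so University<index> is reproducible on its own."""
    return random.Random(seed * 1_000_003 + index)


def generate(seed: int, indexes: list) -> dict:
    """Toy generator: draw a department count (15..25) for each requested university."""
    return {f"University{i}": university_rng(seed, i).randint(15, 25) for i in indexes}


all_five = generate(seed=0, indexes=list(range(5)))
only_third = generate(seed=0, indexes=[3])
print(only_third["University3"] == all_five["University3"])  # True
```

Generating University3 alone yields the same data as generating it alongside University0 to University4, which is the "repeatable with respect to universities" property the text describes.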

Finally, as with the Univ-Bench ontology, the OWL data created by the generator are also in the OWL Lite sublanguage. As a consequence, we have had to give every individual ID appearing in the data a type and include in every document an ontology tag (the owl:Ontology element).³

³ In OWL, the notion of the term ontology differs from that in the traditional sense by also including instance data [32].

2.3. Test queries

The LUBM currently offers 14 test queries, one more than when it was originally developed. Readers are referred to Appendix A for a list of these queries. They are written in SPARQL [28], the query language that is poised to become the standard for RDF. In choosing the queries, we wanted first of all for them to be realistic. Beyond that, we mainly took into account the following factors:

(1) Input size: This is measured as the proportion of the class instances involved in the query to the total class instances in the benchmark data. Here, we count not just class instances explicitly expressed but also those that are entailed by the knowledge base. We define the input size as large if the proportion is greater than 5%, and small otherwise.

(2) Selectivity: This is measured as the estimated proportion of the class instances involved in the query that satisfy the query criteria. We regard the selectivity as high if the proportion is lower than 10%, and low otherwise. Whether the selectivity is high or low for a query may depend on the dataset used. For instance, the selectivity of Queries 8, 11, and 12 is low if the dataset contains only University0 but high if the dataset contains more than 10 universities.

(3) Complexity: We use the number of classes and properties that are involved in the query as an indication of complexity. Since we do not assume any specific implementation of the repository, the real degree of complexity may vary by system and schema. For example, in a relational database, depending on the schema design, the number of classes and properties may or may not directly indicate the number of table joins, which are significant operations.

(4) Assumed hierarchy information: This considers whether information from the class hierarchy or property hierarchy is required to achieve the complete answer. (We define completeness in the next subsection.)

(5) Assumed logical inference: This considers whether logical inference is required to achieve the completeness of the answer. Features used in the test queries include subsumption, i.e., inference of implicit subclass relationships, owl:TransitiveProperty, owl:inverseOf, and realization, i.e., inference of the most specific concepts that an individual is an instance of.

One thing to note is that we are not benchmarking complex description logic reasoning. We are concerned with extensional queries. Some queries use simple description logic reasoning mainly to verify that this capability is present.

We have chosen test queries that cover a range of properties in terms of the above criteria. At the same time, for the purpose of performance evaluation, we have emphasized queries with large input and high selectivity. Unless otherwise noted, all the test queries are of this type. Some subtler factors have also been considered in designing the queries, such as the depth and width of class hierarchies,⁴ and the way the classes and properties chain together in the query.
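The input-size and selectivity thresholds defined in the list of factors can be written as a small classifier. The function `classify_query` and the example counts are hypothetical; only the 5% and 10% cut-offs come from the text, and the counts are meant to include entailed class instances, not only explicit ones.

```python
def classify_query(involved: int, total: int, satisfying: int) -> tuple:
    """Return (input size, selectivity) labels using the LUBM thresholds.

    involved:   class instances (explicit or entailed) touched by the query
    total:      all class instances in the benchmark data
    satisfying: involved instances that meet the query criteria (an estimate)
    """
    if total <= 0 or involved <= 0:
        raise ValueError("instance counts must be positive")
    input_size = "large" if involved / total > 0.05 else "small"
    selectivity = "high" if satisfying / involved < 0.10 else "low"
    return input_size, selectivity


# Hypothetical counts: a query touching 20% of the data but matching only 1% of what it touches.
print(classify_query(involved=20_000, total=100_000, satisfying=200))  # ('large', 'high')
```

The same query can change label as the dataset grows: if its answers stay confined to one university while the instances it touches grow with every added university, the satisfying fraction shrinks and selectivity moves from low to high, as the text notes for Queries 8, 11, and 12.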

2.4. Performance metrics

The LUBM consists of a set of performance metrics including load time, repository size, query response time, query completeness and soundness, and a combined metric for query performance. Among these metrics, the first three are standard database benchmark metrics: query response time was introduced in the Wisconsin benchmark, and load time and repository size have been commonly used in other database benchmarks, e.g., the OO1 benchmark [8]. Query completeness and soundness, and the combined metric, are new metrics we developed for the benchmark. We address these metrics in turn below.

⁴ We define a class hierarchy as deep if its depth is greater than 3, and as wide if its average branching factor is greater than 3.

2.4.1. Load time

In a LUBM dataset, every university contains 15–25 departments, each described by a separate OWL file. These files are loaded into the target system in an incremental fashion. We measure the load time as the stand-alone elapsed time for storing the specified dataset in the system. This also counts the time spent in any processing of the ontology and source files, such as parsing and reasoning.
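A minimal sketch of this measurement follows, assuming a hypothetical `load_file` callback supplied by the system under test (it stands in for the benchmark interface and is not a real API). The department files are loaded one after another, and a single wall-clock interval covers the whole dataset, including any parsing and reasoning the callback performs. The file names are hypothetical.

```python
import time
from typing import Callable, Iterable


def measure_load_time(load_file: Callable[[str], None], files: Iterable[str]) -> float:
    """Load the files one after another and return the total elapsed wall-clock seconds."""
    start = time.perf_counter()
    for path in files:
        load_file(path)  # parsing and any load-time reasoning happen inside the callback
    return time.perf_counter() - start


# Stand-in loader that only records the (hypothetical) file names it receives.
loaded = []
elapsed = measure_load_time(loaded.append, [f"University0_{d}.owl" for d in range(15)])
print(len(loaded), elapsed >= 0.0)  # 15 True
```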

2.4.2. Repository size

Repository size is the resulting size of the repository after loading the specified benchmark data into the system. Size is only measured for systems with persistent storage and is calculated as the total size of all files that constitute the repository. We do not measure the occupied memory sizes for the main memory-based systems because it is difficult to accurately calculate them. However, since we evaluate all systems on a platform with a fixed memory size, the largest dataset that can be handled by a system provides an indication of its memory efficiency.
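For a repository whose files live under one directory, the size computation reduces to summing file sizes recursively, as sketched below. The function name and the throwaway directory layout are hypothetical, and we assume symbolic links should not be counted. For a real system one still has to decide which files constitute the repository; a DBMS such as MySQL, for instance, keeps its data in its own data directory.

```python
import os
import tempfile


def repository_size(root: str) -> int:
    """Total size in bytes of all regular files under root (symlinks are not followed)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                total += os.path.getsize(path)
    return total


# Usage on a throwaway directory standing in for a persistent repository.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "data"))
    with open(os.path.join(tmp, "data", "triples.db"), "wb") as fh:
        fh.write(b"\0" * 1024)
    with open(os.path.join(tmp, "index.idx"), "wb") as fh:
        fh.write(b"\0" * 512)
    measured = repository_size(tmp)
print(measured)  # 1536
```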

2.4.3. Query response time

Query response time is measured based on the process used in database benchmarks. To account for caching, each query is executed 10 times consecutively and the average time is computed. Specifically, the benchmark measures the query response time as follows:

    For each test query
        Open the repository
        Execute the query on the repository 10 times consecutively and compute the average response time. Each time:
            Issue the query, obtain the result set, traverse that set sequentially, and collect the elapsed time
        Close the repository
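The procedure above maps onto a small timing harness. The `Repository` protocol (open, query, close) is hypothetical and only mirrors the operations that the benchmark interface lets the test module request; the toy `ListRepository` exists solely to make the sketch runnable. Each timed run covers issuing the query and fully traversing the result set, as the procedure specifies.

```python
import time
from typing import Iterable, Protocol


class Repository(Protocol):
    """Hypothetical stand-in for the benchmark's per-system interface."""
    def open(self) -> None: ...
    def query(self, text: str) -> Iterable: ...
    def close(self) -> None: ...


def average_response_time(repo: Repository, query: str, runs: int = 10) -> float:
    """Open the repository, run the query `runs` times back to back, close it, return mean seconds."""
    repo.open()
    try:
        timings = []
        for _ in range(runs):
            start = time.perf_counter()
            for _answer in repo.query(query):  # traverse the whole result set sequentially
                pass
            timings.append(time.perf_counter() - start)
        return sum(timings) / runs
    finally:
        repo.close()


class ListRepository:
    """Toy in-memory repository used only to exercise the harness."""
    def __init__(self, rows):
        self.rows, self.is_open = list(rows), False

    def open(self) -> None:
        self.is_open = True

    def query(self, text: str):
        if not self.is_open:
            raise RuntimeError("query issued on a closed repository")
        return iter(self.rows)

    def close(self) -> None:
        self.is_open = False


repo = ListRepository([("GraduateStudent1",), ("GraduateStudent2",)])
mean_seconds = average_response_time(repo, "Query1")
print(mean_seconds >= 0.0, repo.is_open)  # True False
```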

2.4.4. Query completeness and soundness

We also examine the query completeness and soundness of each system. In logic, an inference procedure is complete if it can find a proof for any sentence that is entailed by the knowledge base. With respect to queries, we say a system is complete if it generates all answers that are entailed by the knowledge base, where each answer is a binding of the query variables that results in an entailed sentence. However, on the Semantic Web, partial answers will often be acceptable, so it is important not to measure completeness with such a coarse distinction. Instead, we measure the degree of completeness of each query answer as the percentage of the entailed answers that are returned by the system. Note that we require the result set to contain unique answers.

In addition, as we will show in the next section, we have realized that query soundness is also worthy of examination. By an argument similar to the above, we measure the degree of soundness of each query answer as the percentage of the answers returned by the system that are actually entailed.
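Treating answers as sets of variable bindings, both degrees follow from one set intersection, as sketched below. The function name is hypothetical, and so are the conventions for empty sets (1.0 when there is nothing to miss or nothing was returned). Note that a query a system fails to answer at all is handled separately in the combined metric.

```python
def completeness_and_soundness(returned, entailed):
    """Return (C_q, S_q): the share of entailed answers returned, and of returned answers entailed.

    Answers are variable bindings (tuples); duplicates collapse because answers must be unique.
    The empty-set conventions (1.0) are assumptions, not part of the benchmark definition.
    """
    returned, entailed = set(returned), set(entailed)
    correct = returned & entailed
    completeness = len(correct) / len(entailed) if entailed else 1.0
    soundness = len(correct) / len(returned) if returned else 1.0
    return completeness, soundness


entailed = {("Student1",), ("Student2",), ("Student3",), ("Student4",)}
returned = [("Student1",), ("Student2",), ("Student2",), ("Course9",)]  # one duplicate, one wrong
c_q, s_q = completeness_and_soundness(returned, entailed)
print(c_q, s_q)  # 0.5 0.6666666666666666
```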

2.4.5. Combined metric (CM)

The benchmark also provides a metric for measuring query response time and answer completeness and soundness in combination, to help users better appreciate the potential tradeoff between query response time and inference capability, as well as the overall performance of the system. Such a metric can be used to provide an absolute ranking of systems. However, since such a metric necessarily abstracts away details, it should be used carefully. In order to allow the user to place relative emphasis on different aspects, we have parameterized the metric. Changing these parameters can result in a reordering of the systems considered. Thus, this metric should never be used without careful consideration of the parameters used in its calculation. We will come back to this in Section 5.

First, we use an F-measure [30,24] like metric

to compute the tradeoff between query complete-

ness and soundness, since essentially they are analo-

gous to recall and precision in Information Retrieval,

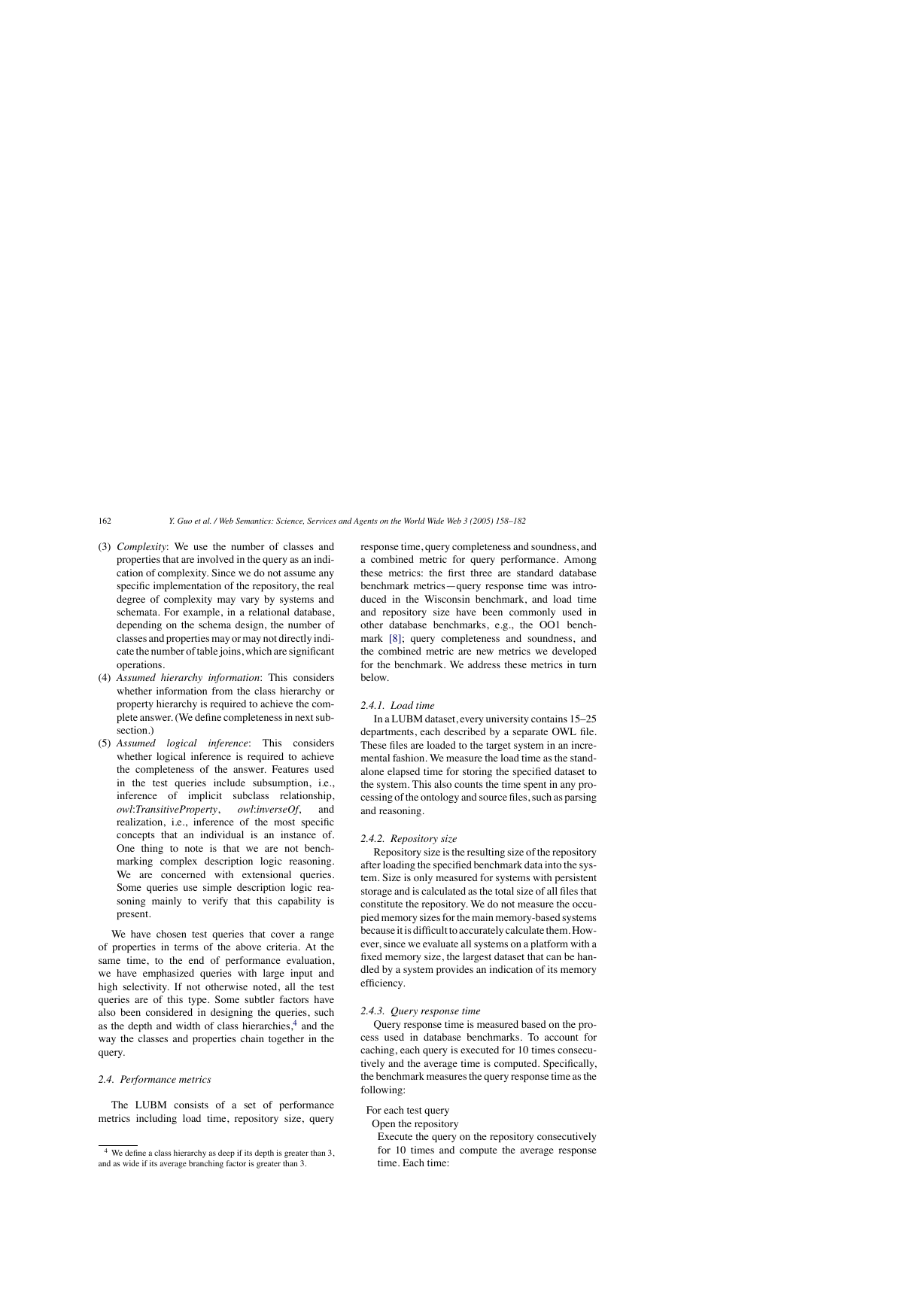

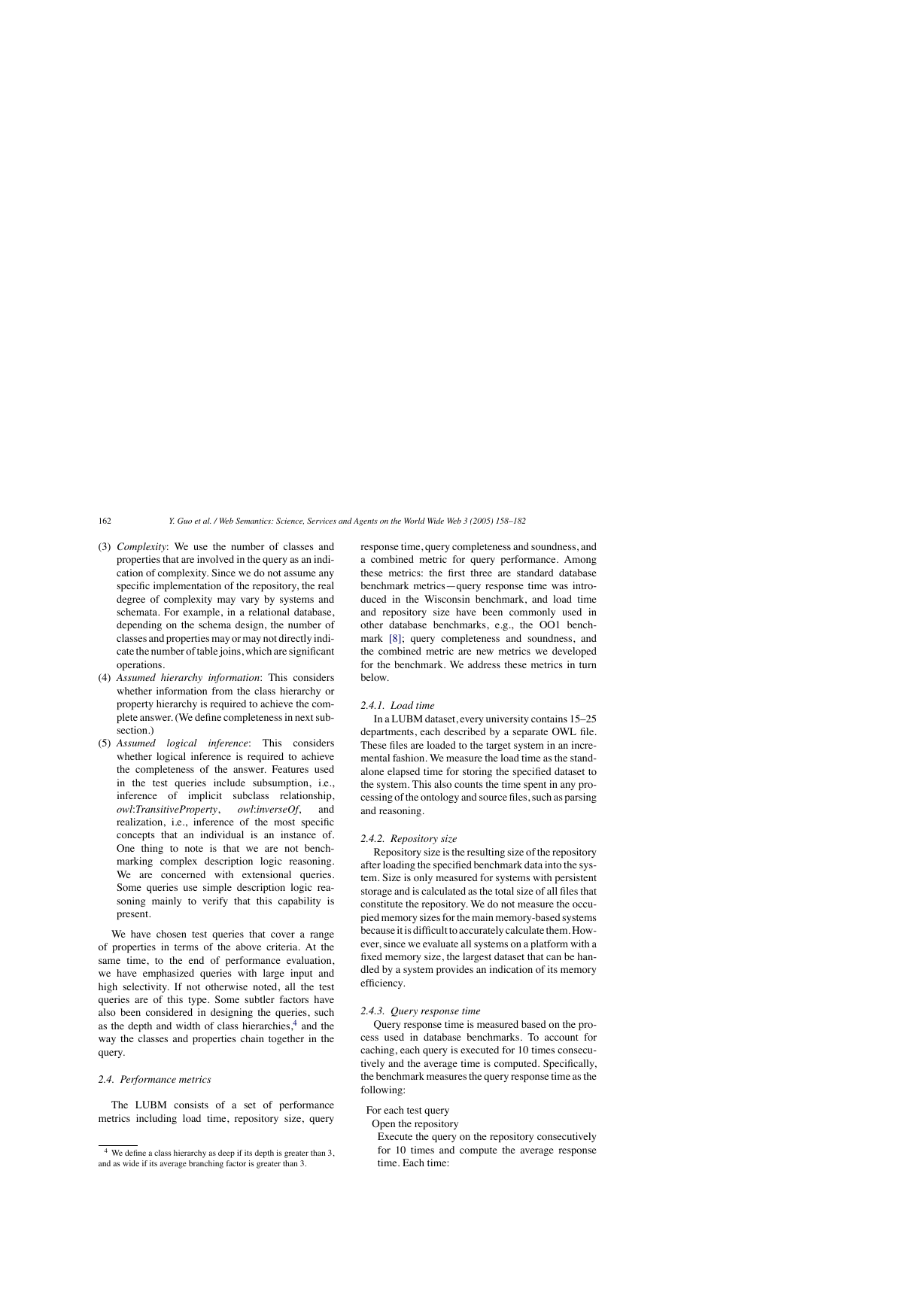

Fig. 2. Pq function (a = 500, b = 5, and N = 1,000,000).

respectively. In the formula below, Cq and Sq (∈[0,

1]) are the answer completeness and soundness for

query q. β determines the relative weighting between

Sq and Cq.

Fq = (β2 + 1)CqSq

β2Cq + Sq

Next, we define a query performance metric for query q:⁵

$$P_q = \frac{1}{1 + e^{a T_q / N - b}}$$

This is an adaptation of the well-known sigmoid function. In the formula, Tq is the response time (ms) for query q and N is the total number of triples in the dataset concerned. To allow comparison of the metric values across datasets of different sizes, we use the response time per triple (i.e., Tq/N) in the calculation. The parameter a controls the slope of the curve, and b shifts the entire graph. In this evaluation, we have chosen a = 500 and b = 5 so that (1) Pq(0) is close enough to one (above 0.99) and (2) a response time of 1 s for 100,000 triples (roughly the size of the smallest dataset used in our test) receives a Pq value of 0.5. These values appear to be reasonable choices for contemporary systems. However, as the state of the art progresses and machines become substantially more powerful, different parameter values may be needed to better distinguish between the qualities of different systems. Fig. 2 illustrates the function for a dataset of 1 million triples.
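The per-query formulas Fq and Pq, together with the composite metric CM that this subsection builds from them, can be computed as below. The defaults a = 500 and b = 5 are the values chosen in the text. Defaulting β and α to 1 and treating a zero denominator as a zero term (consistent with using zero for Fq and Pq when a query fails) are our assumptions. The function names are hypothetical.

```python
import math


def f_measure(c: float, s: float, beta: float = 1.0) -> float:
    """F_q = (beta^2 + 1) * C_q * S_q / (beta^2 * C_q + S_q), as written in the text.

    Returns 0.0 when the denominator is 0 (e.g. a failed query with C_q = S_q = 0).
    """
    denom = beta ** 2 * c + s
    return 0.0 if denom == 0 else (beta ** 2 + 1) * c * s / denom


def performance(t_ms: float, n_triples: int, a: float = 500.0, b: float = 5.0) -> float:
    """P_q = 1 / (1 + exp(a * T_q / N - b)); T_q in milliseconds, N in triples."""
    x = a * t_ms / n_triples - b
    if x > 700:  # math.exp would overflow; P_q is numerically 0 here
        return 0.0
    return 1.0 / (1.0 + math.exp(x))


def combined_metric(p, f, weights, alpha: float = 1.0) -> float:
    """CM = sum over q of w_q * (alpha^2 + 1) * P_q * F_q / (alpha^2 * P_q + F_q)."""
    if not (len(p) == len(f) == len(weights)):
        raise ValueError("need one P_q, F_q and w_q per query")
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("query weights must sum to 1")
    total = 0.0
    for pq, fq, wq in zip(p, f, weights):
        denom = alpha ** 2 * pq + fq
        if denom > 0:  # a failed query (P_q = F_q = 0) contributes nothing
            total += wq * (alpha ** 2 + 1) * pq * fq / denom
    return total


# The calibration points quoted in the text: P_q(0) > 0.99, and 1 s for 100,000 triples gives 0.5.
print(round(performance(0, 100_000), 4), performance(1_000, 100_000))  # 0.9933 0.5
```

The second calibration point is exact because a·Tq/N − b = 500 · 1000 / 100,000 − 5 = 0, and 1/(1 + e⁰) = 0.5.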

⁵ We introduce a different function from that of our original work [16], since we find that the current one maps the query time (possibly from zero to infinity) to the continuous range [0, 1] in a more natural way, and gives a more reasonable range and distribution of query performance values for the systems.

�

164

Y. Guo et al. / Web Semantics: Science, Services and Agents on the World Wide Web 3 (2005) 158–182

The benchmark suite is accessible at http://www.

swat.cse.lehigh.edu/projects/lubm/index.htm.

3. An evaluation using the LUBM

In order to demonstrate how the LUBM can be

used to evaluate very different knowledge base sys-

tems, we describe an experiment. We discuss how we

selected the systems, describe the experiment condi-

tions, present the results, and discuss them.

3.1. Selecting target systems

In this experiment, we wanted to evaluate the scal-

ability and support for OWL Lite in various systems.

In choosing the systems, first we decided to consider

only non-commercial systems. Moreover, we did not

mean to carry out a comprehensive evaluation of the

existing Semantic Web KBSs. Instead, we wanted to

evaluate systems with a variety of characteristics. Since

a key point of this work is how to evaluate systems

with different capabilities, we wanted to evaluate rep-

resentative systems at distinct points in terms of OWL

reasoning capability. Specifically, we considered three

degrees of supported reasoning in the systems under

test, i.e., RDFS reasoning, partial OWL Lite reason-

ing, and complete or almost complete OWL Lite rea-

soning. Finally, we believe a practical KBS must be

able to read OWL files, support incremental data load-

ing, and provide programming APIs for loading data

and issuing queries. As a result, we have settled on

four different knowledge base systems, including two

implementations of Sesame, DLDB-OWL, and OWL-

JessKB. Below, we briefly describe the systems we

have considered along with the reason for choosing

or not choosing them.

3.1.1. RDFS reasoning

For this category of systems, we have considered

RDF repositories including Jena, Sesame, ICS-FORTH

RDFSuite [1], Kowari [40], and so on. Since Jena and

Sesame are currently the most widely used, we focused

on these two systems.

Jena [37] is a Java framework for building Seman-

tic Web applications. Jena currently supports both

RDF/RDFS and OWL. We have done some preliminary

tests on Jena (v2.1) (both memory-based and database-

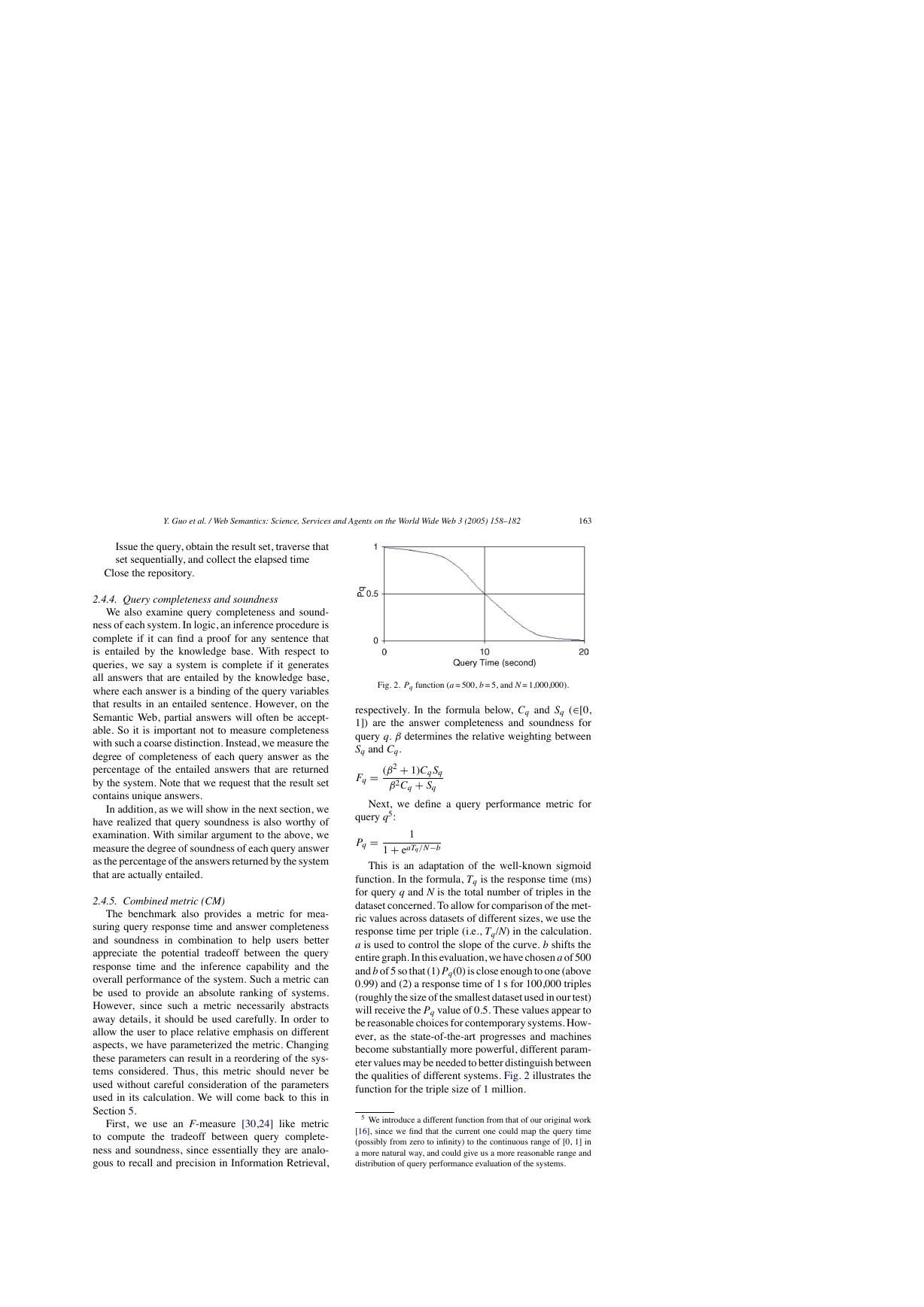

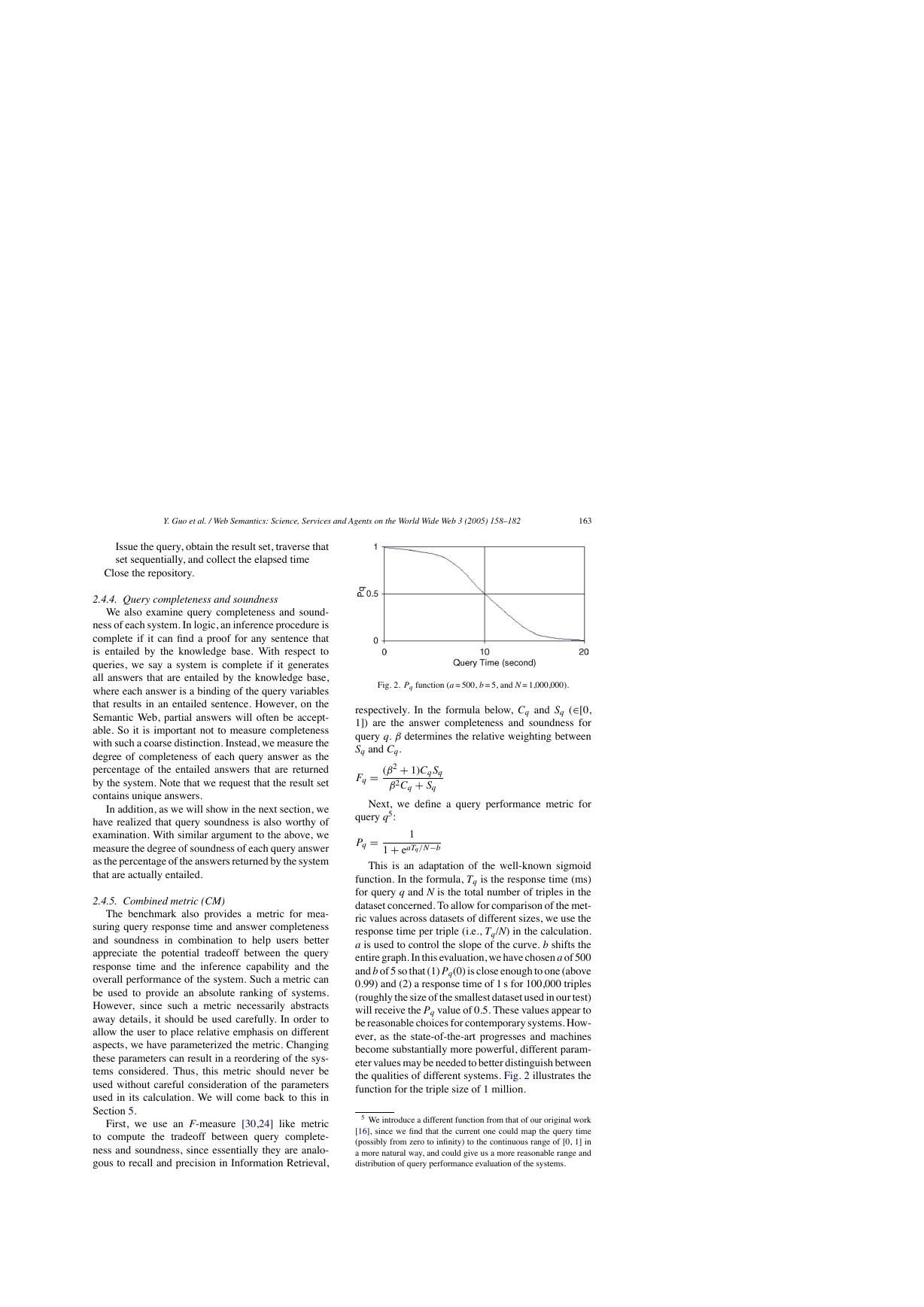

Fig. 3. Architecture of the benchmark.

As will be shown in the next section, some system

might fail to answer a query. In that case, we will use

zero for both Fq and Pq in the calculation.

Lastly, we define a composite metric, CM, of

query response time and answer completeness and

soundness as the following, which is also inspired by

F-measure:

CM = M

q=1

(α2 + 1)PqFq

α2Pq + Fq

wq

M

In the above, M is the total number of test queries.

wq = 1) is the weight given to query q. α

q=1

wq(

determines relative weighting between Fq and Pq. Gen-

erally speaking, the metric will reward those systems

that can answer queries faster, more completely and

more soundly.

2.5. Benchmark architecture

Fig. 3 depicts the benchmark architecture. We pre-

scribe an interface to be instantiated by each target

system. Through the interface, the benchmark test mod-

ule requests operations on the repository (e.g., open and

close), launches the loading process, issues queries, and

obtains the results. Users inform the test module of the

target systems and test queries by defining them in the

KBS specification file and query definition file, respec-

tively. It needs to be noted that queries are translated

into the query language supported by the system prior to

being issued to the system. In this way, we want to elim-

inate the effect of query translation on query response

time. The translated queries are fed to the tester through

the query definition file. The tester reads the lines of

each query from the definition file and passes them to

the system.

�

Y. Guo et al. / Web Semantics: Science, Services and Agents on the World Wide Web 3 (2005) 158–182

165

based) with our smallest dataset (cf. Appendix C).

Compared to Sesame, Jena with RDFS reasoning was

much slower in answering nearly all the queries. Some

of the queries did not terminate even after being allowed

to run for several hours. The situation was similar when

Jena’s OWL reasoning was turned on.

Due to Jena’s limitations with respect to scalability, we decided to evaluate Sesame instead. Sesame [5] is a repository and querying facility based on RDF and RDF Schema. It features a generic architecture that separates the actual storage of RDF data, functional modules offering operations on those data, and communication with these functional modules from outside the system. Sesame supports RDF/RDF Schema inference, but is an incomplete reasoner for OWL Lite. Nevertheless, it has been used in a wide range of Semantic Web projects. Sesame can evaluate queries in RQL [22], SeRQL [45], and RDQL [31]. We evaluate two implementations of Sesame, main memory-based and database-based.

3.1.2. Partial OWL Lite reasoning

KAON [39] is an ontology management infrastructure. It provides an API for manipulating RDF models. The suite also contains a library, the KAON Datalog Engine, which could be used to reason with the DLP fragment of OWL. However, that functionality is not directly supported by the core of KAON, i.e., its APIs.

Instead, we have selected DLDB-OWL [27], a repository for processing, storing, and querying OWL data. The major feature of DLDB-OWL is the extension of a relational database system with description logic inference capabilities. Specifically, DLDB-OWL uses Microsoft Access® as the DBMS and FaCT [19] as the OWL reasoner. It uses the reasoner to precompute subsumption and employs relational views to answer extensional queries based on the implicit hierarchy that is inferred. DLDB-OWL uses a language in which a query is written as a conjunction of atoms. The language syntactically resembles KIF [13] but has less expressivity. Since DLDB uses FaCT to do TBox reasoning only, we consider it a system that provides partial OWL Lite reasoning support. Note that FaCT and FaCT++ [35] do not directly support ABox reasoning (although it is possible to simulate it), so using them as stand-alone systems is inappropriate for the extensional queries used by our benchmark.
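The idea of precomputing the class hierarchy with a reasoner and answering extensional queries through relational views can be sketched with Python's built-in sqlite3 module. This is an illustrative approximation, not DLDB-OWL's actual schema (which runs on Microsoft Access with FaCT): we assume one table per class holding only its explicitly asserted instances, and we hard-code the hierarchy that a reasoner would have returned, including the inferred placement of GraduateStudent below Student. The table and view naming scheme and the individuals are hypothetical.

```python
import sqlite3

# Hierarchy as a DL reasoner might return it: class -> direct subclasses (assumed, not computed here).
SUBCLASSES = {"Student": ["UndergraduateStudent", "GraduateStudent"],
              "UndergraduateStudent": [], "GraduateStudent": []}


def descendants(cls: str) -> list:
    """The class itself plus all of its (transitive) subclasses."""
    result = [cls]
    for sub in SUBCLASSES.get(cls, []):
        result.extend(d for d in descendants(sub) if d not in result)
    return result


con = sqlite3.connect(":memory:")
for cls in SUBCLASSES:  # class names are trusted constants, so string formatting is safe here
    con.execute(f"CREATE TABLE {cls}_table (id TEXT PRIMARY KEY)")  # explicit instances only
con.execute("INSERT INTO GraduateStudent_table VALUES ('GraduateStudent1')")
con.execute("INSERT INTO UndergraduateStudent_table VALUES ('UndergraduateStudent7')")

# One view per class: the union of its own table and the tables of all its descendants.
for cls in SUBCLASSES:
    union = " UNION ".join(f"SELECT id FROM {d}_table" for d in descendants(cls))
    con.execute(f"CREATE VIEW {cls} AS {union}")

print(sorted(row[0] for row in con.execute("SELECT id FROM Student")))
# ['GraduateStudent1', 'UndergraduateStudent7']
```

Querying the Student view returns graduate students even though none was asserted as a Student, so the reasoning cost is paid once when the views are built rather than at query time.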

3.1.3. Complete or almost complete OWL Lite reasoning

OWL reasoning systems, such as Racer, Pellet, and OWLJessKB, fall into this category. The first two systems, like FaCT, are based on the tableaux algorithms developed for description logic inferencing, and additionally support ABox reasoning. Since DLDB-OWL uses FaCT as its reasoner, we have decided to choose a system with a different reasoning style. Thus, we have settled on OWLJessKB.

OWLJessKB [41], whose predecessor is DAMLJessKB [23], is a memory-based reasoning tool for description logic languages, particularly OWL. It uses the Java Expert System Shell (Jess) [38], a production system, as its underlying reasoner. The current functionality of OWLJessKB is close to OWL Lite plus some additional features. Thus, we have chosen OWLJessKB, and we evaluate it as a system that supports most OWL entailments.
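Because OWLJessKB relies on a production system, its reasoning proceeds by rules that fire until no new facts can be derived. The naive forward-chaining loop below imitates that style for three entailments the test queries rely on: propagation of rdf:type up the class hierarchy, owl:inverseOf, and owl:TransitiveProperty. It is our own simplification, not OWLJessKB's rule base. The property and class names are assumed to follow Univ-Bench (subOrganizationOf as transitive, member as the inverse of memberOf), and the individuals are hypothetical.

```python
# Hypothetical rule inputs, assumed to mirror Univ-Bench axioms.
SUBCLASS_OF = {("GraduateStudent", "Student")}   # e.g. as inferred from Fig. 1
INVERSE_OF = {("memberOf", "member")}            # memberOf owl:inverseOf member
TRANSITIVE = {"subOrganizationOf"}               # owl:TransitiveProperty


def saturate(facts):
    """Naive forward chaining: fire every rule on all facts until nothing new is derived."""
    facts = set(facts)
    while True:
        derived = set()
        for s, p, o in facts:
            if p == "type":  # rdf:type propagates up the class hierarchy
                derived |= {(s, "type", sup) for sub, sup in SUBCLASS_OF if sub == o}
            for prop, inverse in INVERSE_OF:  # (s prop o) entails (o inverse s), and vice versa
                if p == prop:
                    derived.add((o, inverse, s))
                elif p == inverse:
                    derived.add((o, prop, s))
            if p in TRANSITIVE:  # (s p o) and (o p z) entail (s p z)
                derived |= {(s, p, z) for (x, q, z) in facts if q == p and x == o}
        if derived <= facts:  # fixpoint reached
            return facts
        facts |= derived


closure = saturate({
    ("ResearchGroup3", "subOrganizationOf", "Department0"),
    ("Department0", "subOrganizationOf", "University0"),
    ("GraduateStudent1", "memberOf", "Department0"),
    ("GraduateStudent1", "type", "GraduateStudent"),
})
print(("ResearchGroup3", "subOrganizationOf", "University0") in closure)  # True
```

Materializing every entailment up front in this way is what lets such a system answer the inference-dependent queries completely, at the cost of memory and load time that grow with the dataset.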

Although we have decided not to evaluate Racer here, we want to mention that Haarslev et al. [18] have conducted their own evaluation using the LUBM. They developed a new query language called nRQL, which made it possible to answer all of the queries in our benchmark. The results showed that Racer could offer complete answers for all the queries if required (they tested Racer on Queries 1–13). However, since it has to perform ABox consistency checking before query answering, Racer was unable to load a whole university dataset. As a result, they loaded only up to five departments using Racer (v1.8) on a P4 2.8 GHz machine with 1 GB of RAM running Linux.

3.2. Experiment setup

3.2.1. System setup

The systems we test are DLDB-OWL (04-03-29 release), Sesame v1.0, and OWLJessKB (04-02-23 release). As noted, we test both the main memory-based and database-based implementations of Sesame. For brevity, we hereafter refer to them as Sesame-Memory and Sesame-DB, respectively. For both of them, we use the implementation with RDFS inference capabilities. For the latter, we use MySQL (v4.0.16) as the underlying DBMS since, according to a test by Broekstra and Kampman [5], Sesame performs significantly better with MySQL than with the other DBMS, PostgreSQL [43]. The DBMS used in DLDB-OWL is MS Access® 2002. We
