Cluster-GCN: An Efficient Algorithm for

Training Deep and Large Graph Convolutional Networks

Wei-Lin Chiang (National Taiwan University);Xuanqing Liu (University of California, Los

Angeles);Si Si (Google);Yang Li (Google);Samy Bengio (Google);Cho-Jui Hsieh (University of

California, Los Angeles)

KDD 19

�

Content

⚫ 1. Background

⚫ 2. Contributions

⚫ 3. Methodology

⚫ 4. Experiments

⚫ 5. Conclusions

�

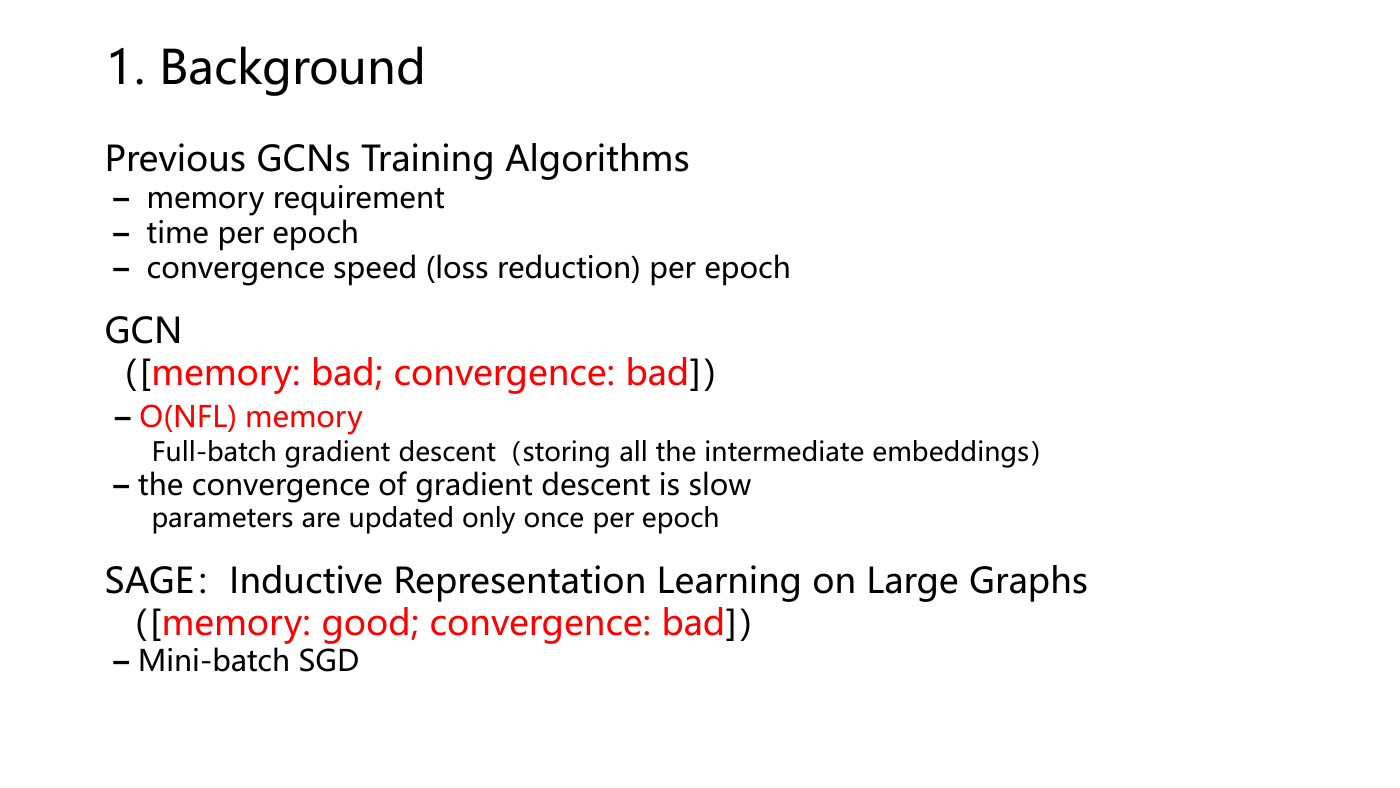

1. Background

Previous GCNs Training Algorithms

– memory requirement

– time per epoch

– convergence speed (loss reduction) per epoch

GCN

([memory: bad; convergence: bad])

– O(NFL) memory

Full-batch gradient descent(storing all the intermediate embeddings)

– the convergence of gradient descent is slow

parameters are updated only once per epoch

SAGE:Inductive Representation Learning on Large Graphs

([memory: good; convergence: bad])

– Mini-batch SGD

�

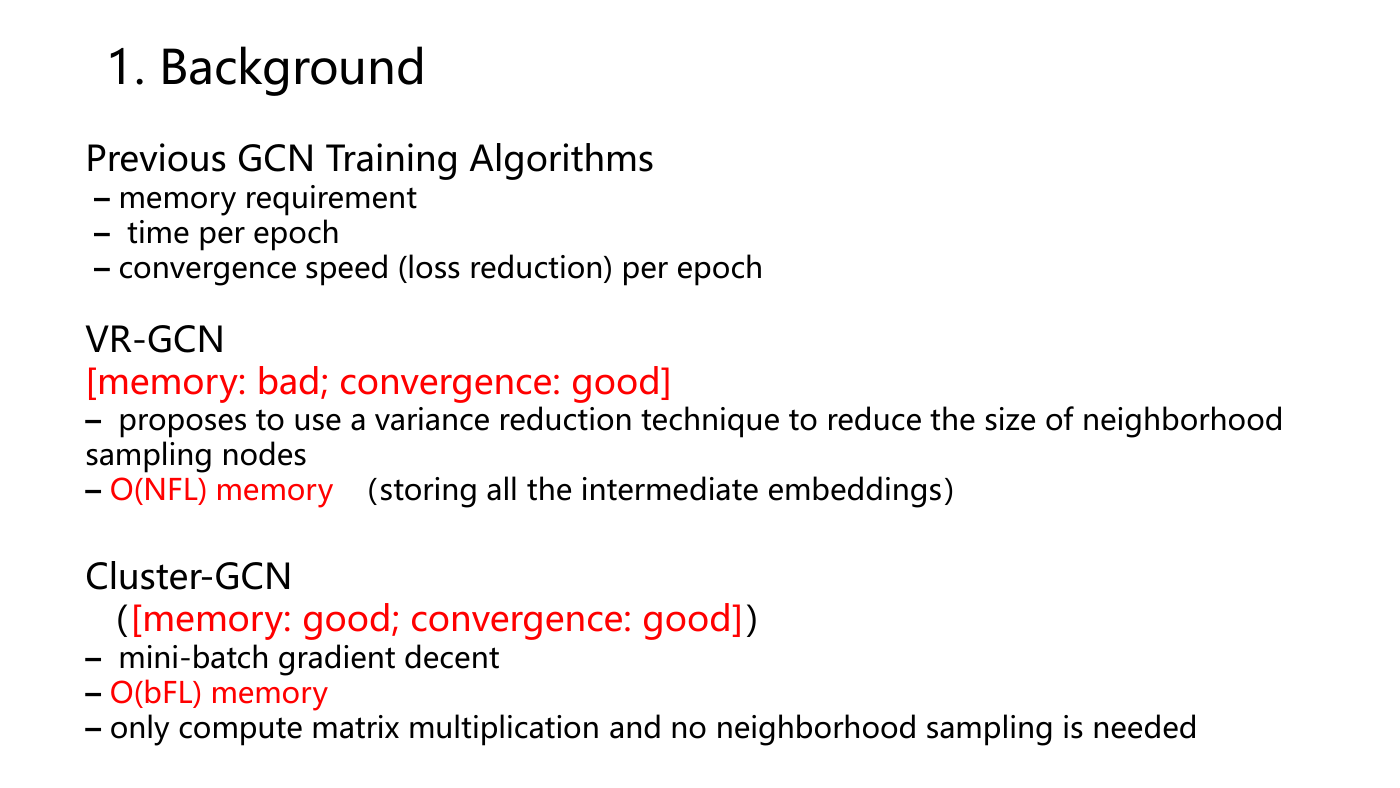

1. Background

Previous GCN Training Algorithms

– memory requirement

– time per epoch

– convergence speed (loss reduction) per epoch

VR-GCN

[memory: bad; convergence: good]

– proposes to use a variance reduction technique to reduce the size of neighborhood

sampling nodes

– O(NFL) memory (storing all the intermediate embeddings)

Cluster-GCN

([memory: good; convergence: good])

– mini-batch gradient decent

– O(bFL) memory

– only compute matrix multiplication and no neighborhood sampling is needed

�

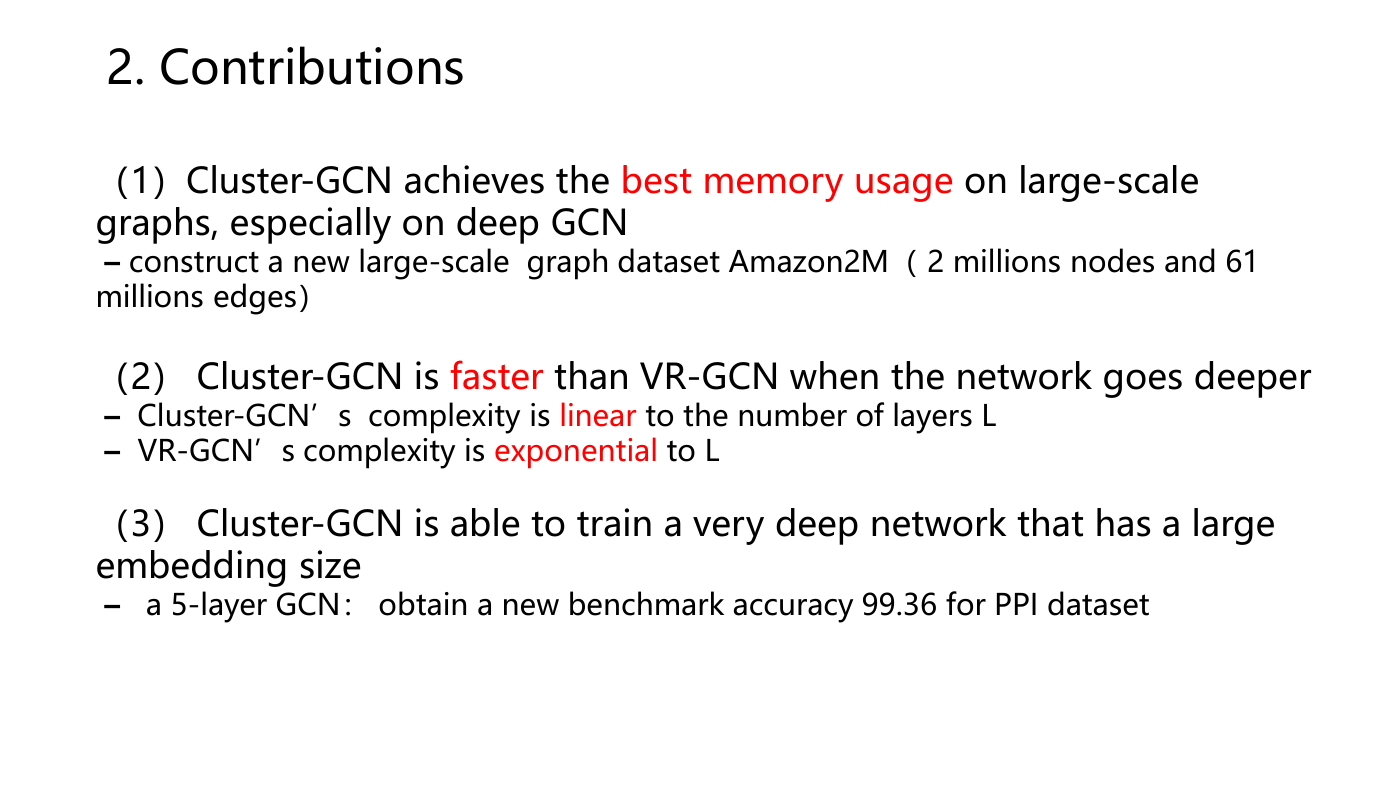

2. Contributions

(1)Cluster-GCN achieves the best memory usage on large-scale

graphs, especially on deep GCN

– construct a new large-scale graph dataset Amazon2M( 2 millions nodes and 61

millions edges)

(2) Cluster-GCN is faster than VR-GCN when the network goes deeper

– Cluster-GCN’s complexity is linear to the number of layers L

– VR-GCN’s complexity is exponential to L

(3) Cluster-GCN is able to train a very deep network that has a large

embedding size

– a 5-layer GCN: obtain a new benchmark accuracy 99.36 for PPI dataset

�

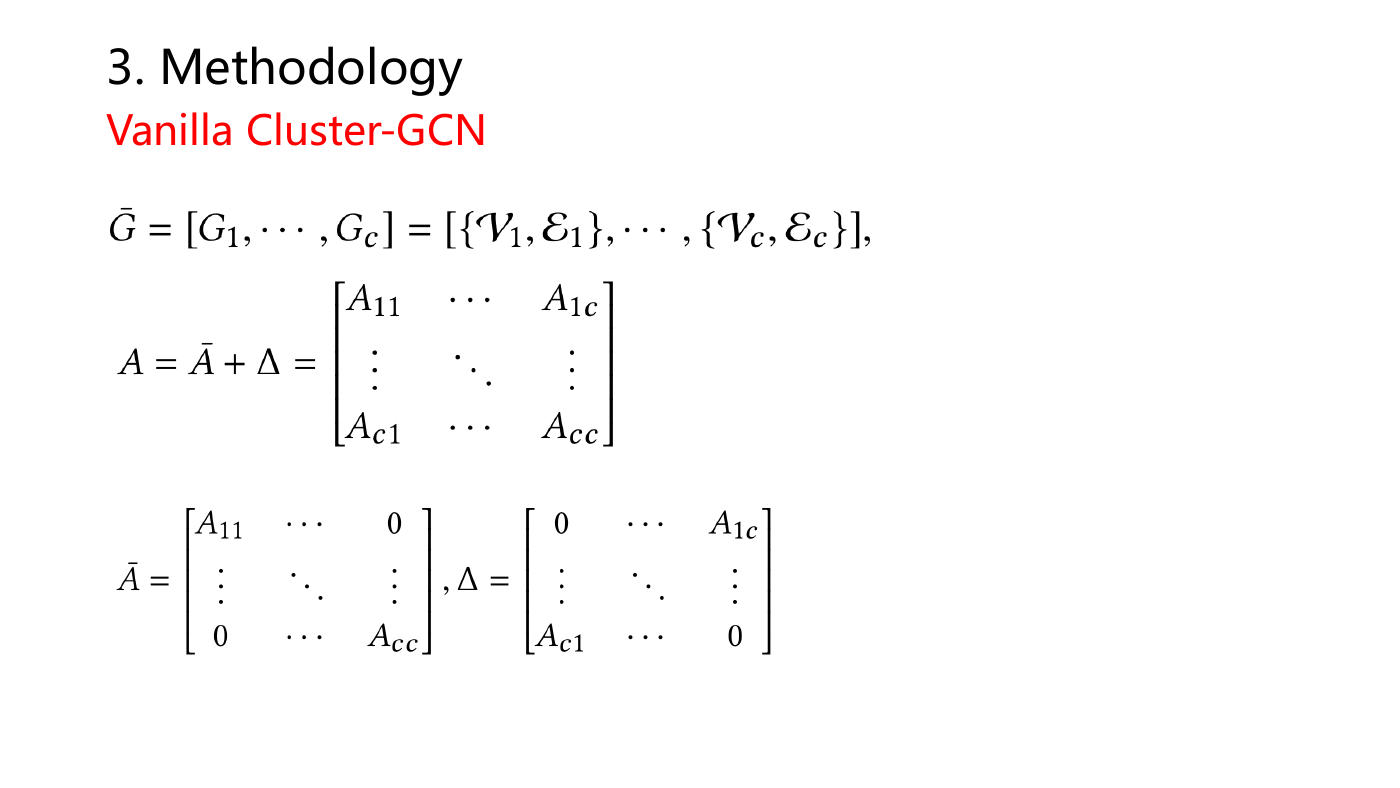

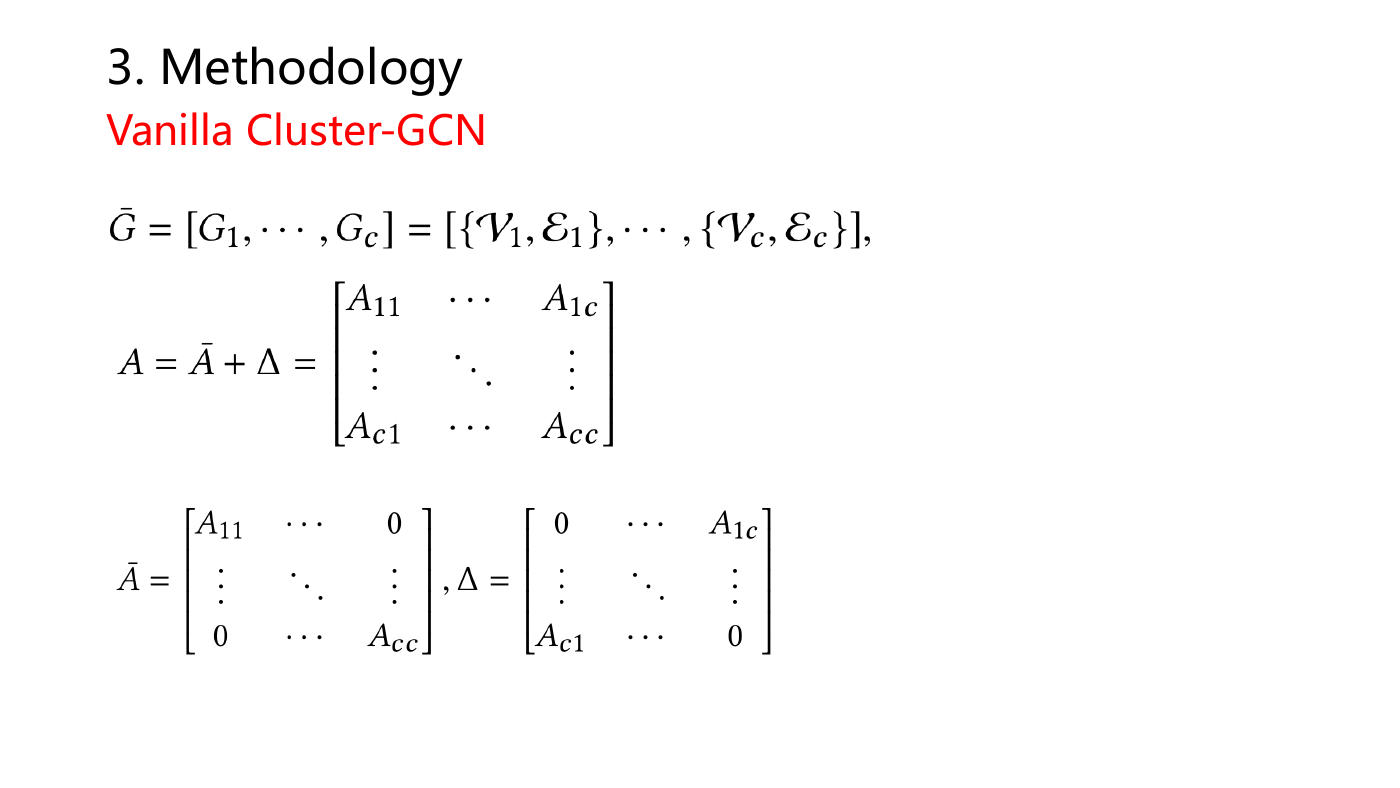

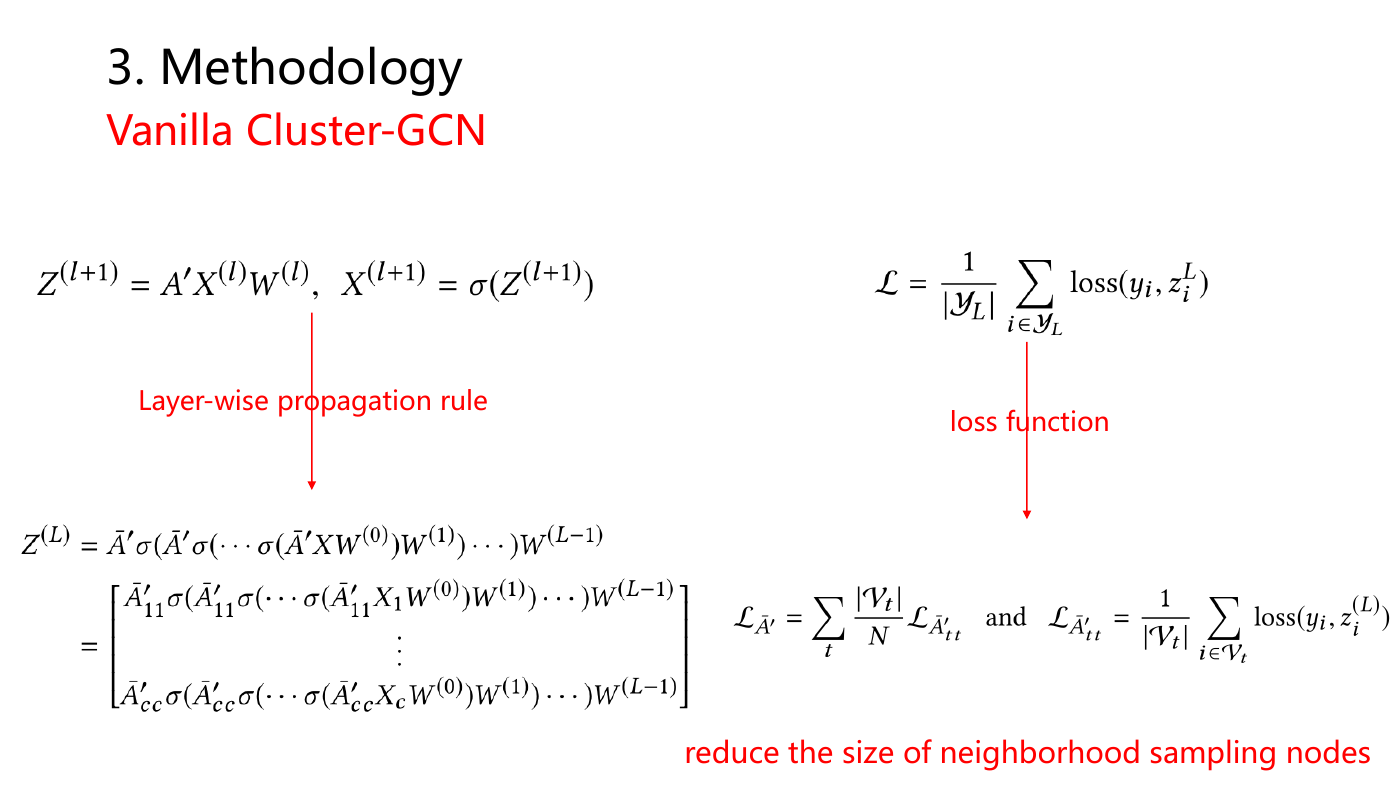

3. Methodology

Vanilla Cluster-GCN

�

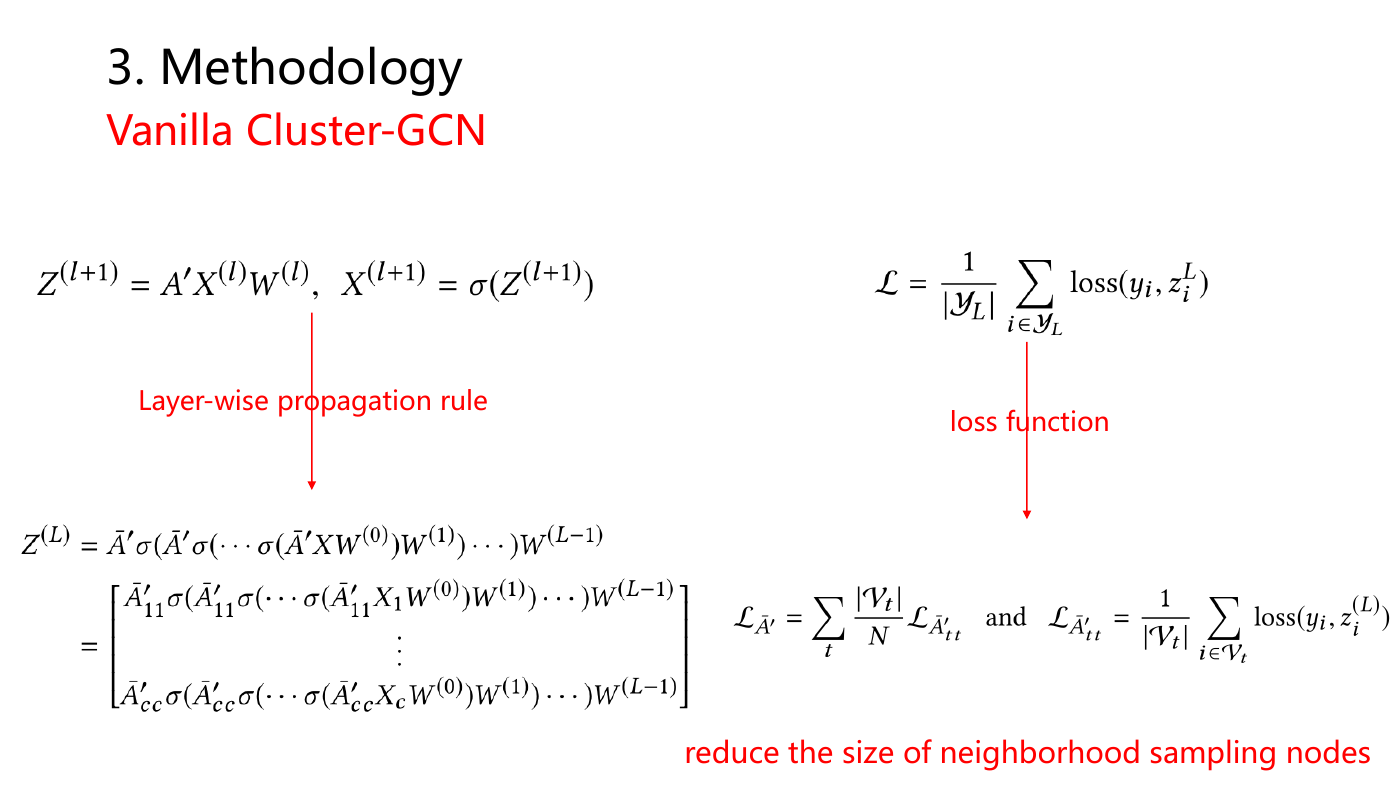

3. Methodology

Vanilla Cluster-GCN

Layer-wise propagation rule

loss function

reduce the size of neighborhood sampling nodes

�

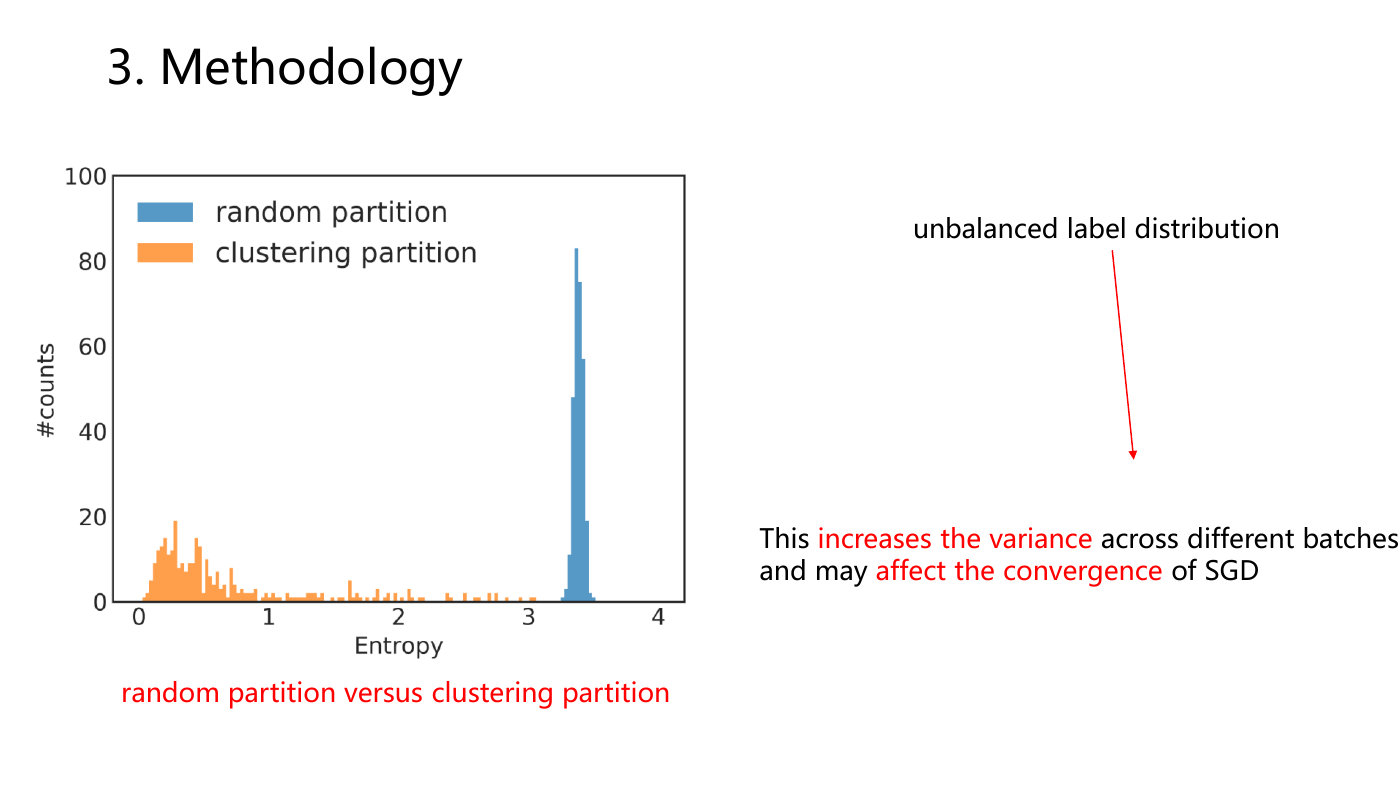

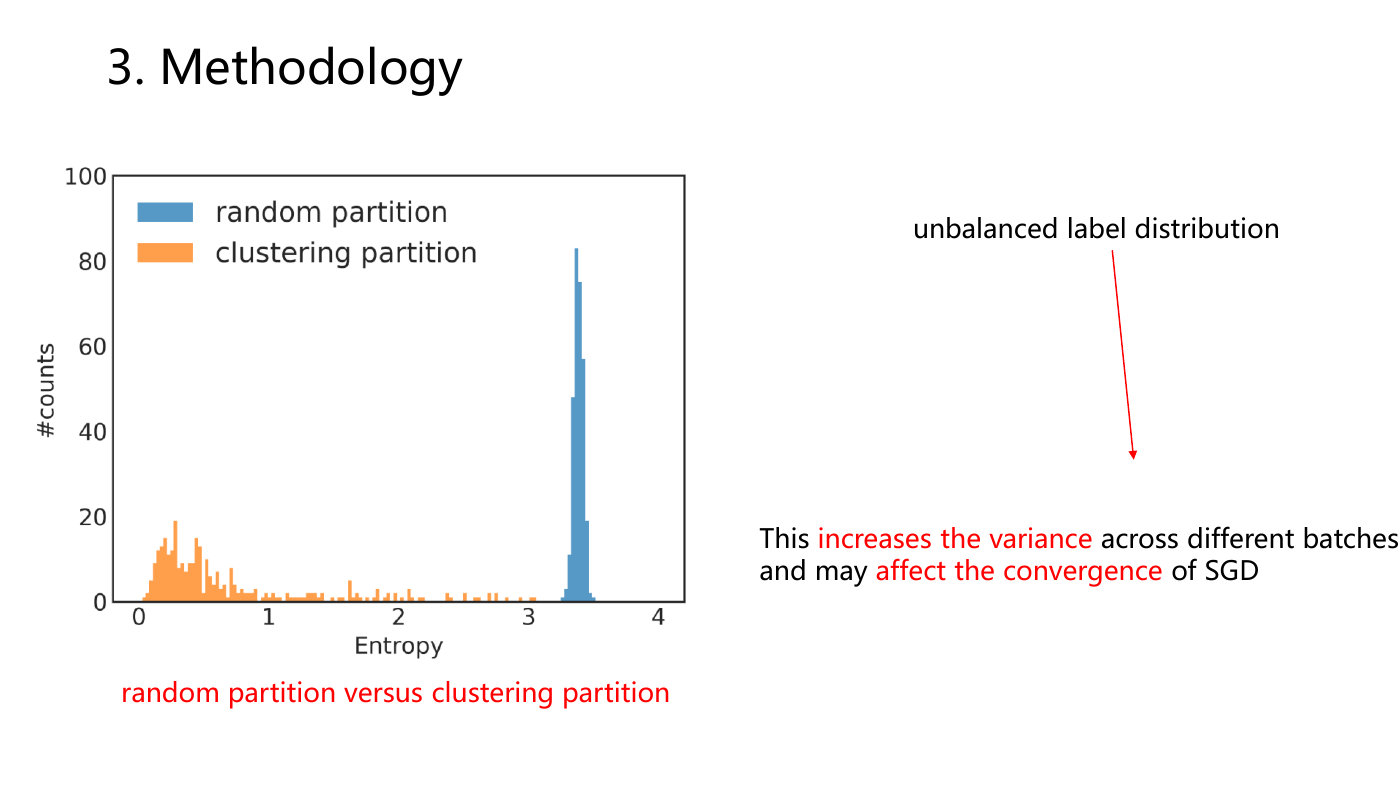

3. Methodology

unbalanced label distribution

This increases the variance across different batches

and may affect the convergence of SGD

random partition versus clustering partition

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc