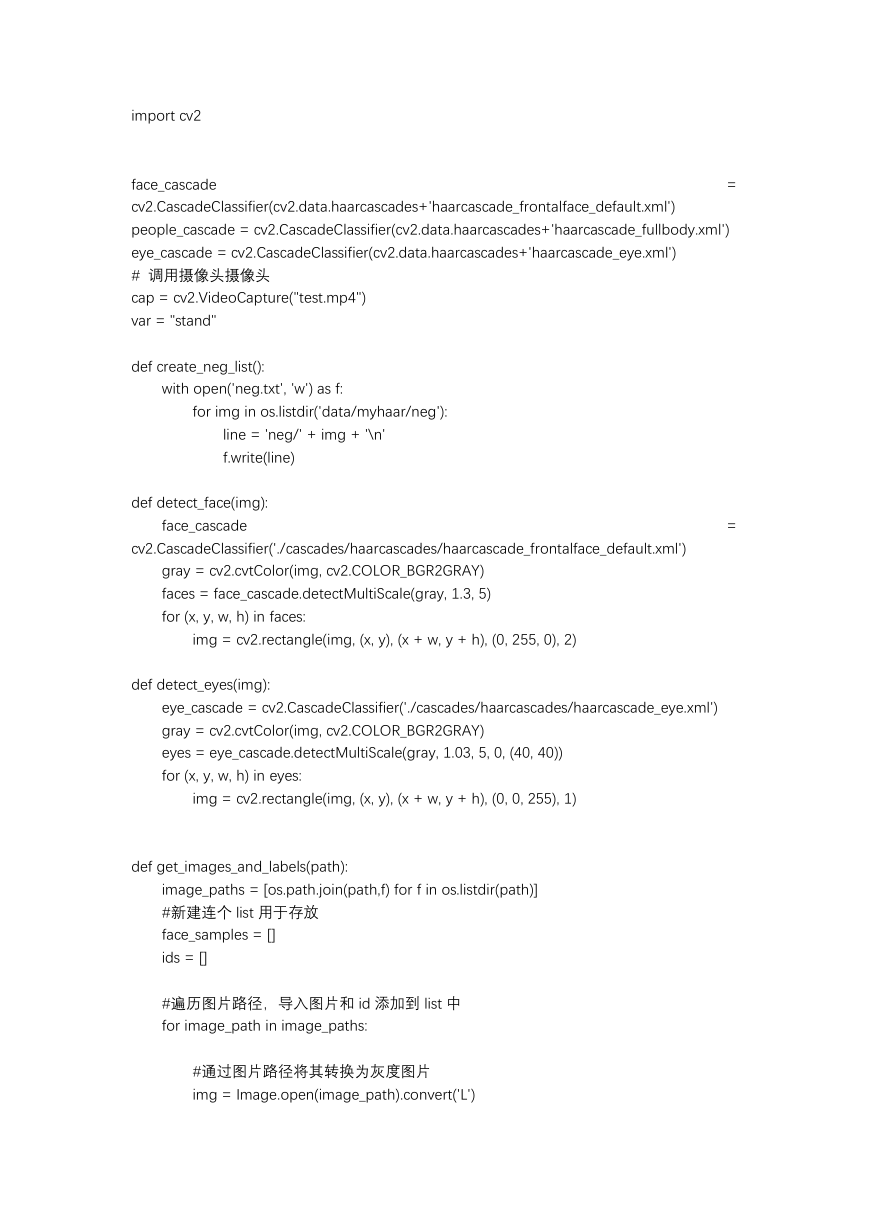

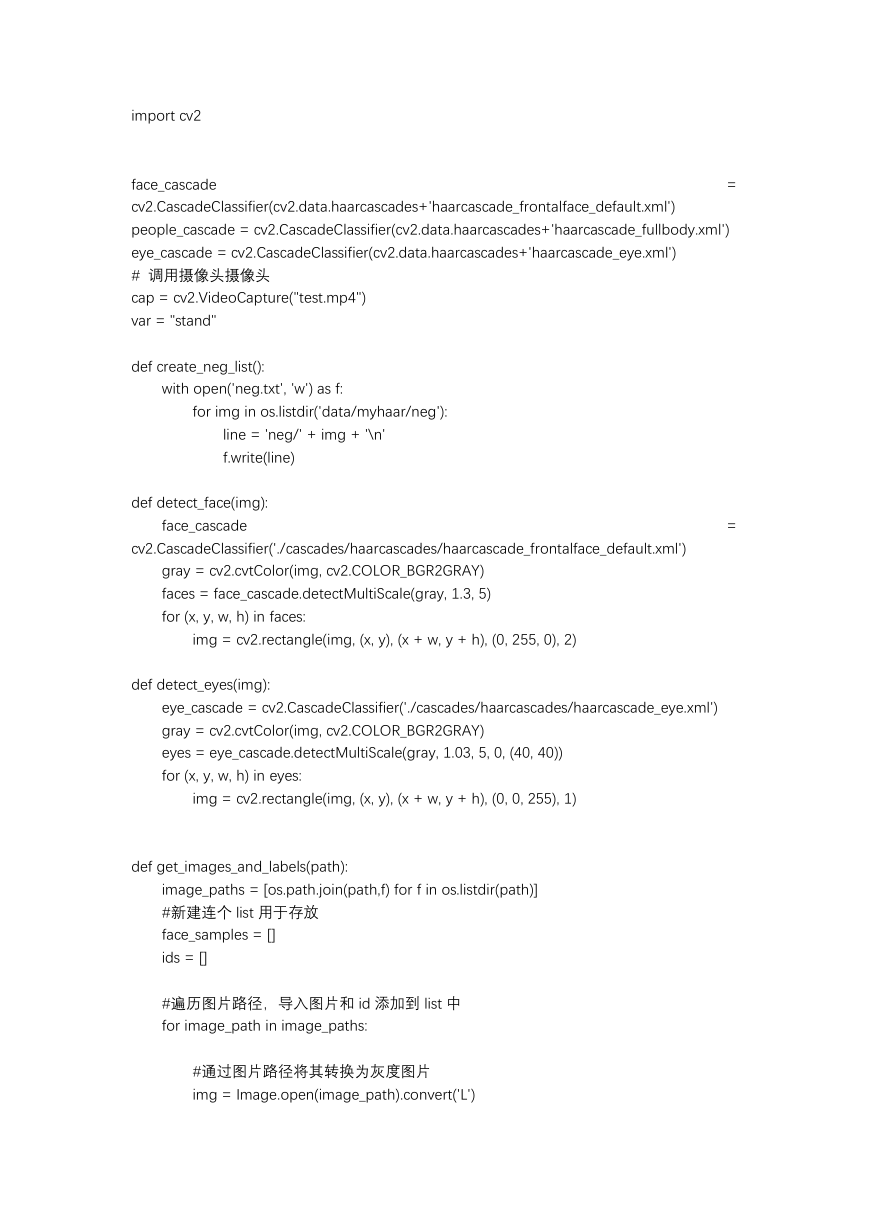

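import os

import cv2
import numpy as np
from PIL import Image

# Haar cascades bundled with the opencv-python package
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
people_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_fullbody.xml')
eye_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_eye.xml')

# Open the video source (the file "test.mp4" here; pass 0 to use the default camera instead)
cap = cv2.VideoCapture("test.mp4")

# Current posture label drawn on each frame
var = "stand"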

def create_neg_list():
    # Write one 'neg/<file name>' line per file in data/myhaar/neg to neg.txt
    with open('neg.txt', 'w') as f:
        for img in os.listdir('data/myhaar/neg'):
            line = 'neg/' + img + '\n'
            f.write(line)
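`create_neg_list` writes the background (negative) description file used when training a custom Haar cascade: one image path per line. The sketch below is a hypothetical, parameterized variant; the name `write_neg_list`, its arguments and the set of accepted extensions are assumptions. It skips non-image files and sorts the names, since `os.listdir` returns entries in arbitrary order.

```python
from pathlib import Path

# Assumed set of image extensions; the original lists every file in the folder.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def write_neg_list(neg_dir, out_file, prefix="neg/"):
    """Hypothetical helper: write '<prefix><file name>' per image in neg_dir.

    Returns the written lines (without newlines) so callers can inspect them.
    """
    names = sorted(
        p.name for p in Path(neg_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTS
    )
    lines = [prefix + name for name in names]
    Path(out_file).write_text("".join(line + "\n" for line in lines))
    return lines
```

Called as `write_neg_list('data/myhaar/neg', 'neg.txt')`, it produces the same format as `create_neg_list` for the image files in that folder.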

def detect_face(img):
    face_cascade = cv2.CascadeClassifier('./cascades/haarcascades/haarcascade_frontalface_default.xml')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # scaleFactor=1.3, minNeighbors=5
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)
    for (x, y, w, h) in faces:
        # Draw each face box in green, line width 2
        img = cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)
    return img

def detect_eyes(img):
    eye_cascade = cv2.CascadeClassifier('./cascades/haarcascades/haarcascade_eye.xml')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # scaleFactor=1.03, minNeighbors=5, flags=0, minSize=(40, 40)
    eyes = eye_cascade.detectMultiScale(gray, 1.03, 5, 0, (40, 40))
    for (x, y, w, h) in eyes:
        # Draw each eye box in red, line width 1
        img = cv2.rectangle(img, (x, y), (x + w, y + h), (0, 0, 255), 1)
    return img
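`detectMultiScale` slides the cascade's fixed training window over the image at a series of growing sizes, enlarging it by `scaleFactor` at each step; `minNeighbors` then keeps only candidates supported by several overlapping hits. That is why `detect_eyes` (1.03) does far more work than `detect_face` (1.3). The sketch below approximates the list of window sizes visited. It is a simplified model, not OpenCV's exact loop, and the 24x24 window and 640x480 frame are assumptions for illustration.

```python
def scale_steps(image_size, window_size, scale_factor, min_size=None):
    """Approximate the detection-window sizes a cascade scan visits.

    Simplified model (an assumption, not OpenCV's exact code): start at the
    cascade's training window, multiply it by scale_factor each step, skip
    sizes below min_size, and stop once the window no longer fits the image.
    """
    img_w, img_h = image_size
    win_w, win_h = window_size
    sizes = []
    factor = 1.0
    while True:
        w, h = round(win_w * factor), round(win_h * factor)
        if w > img_w or h > img_h:
            break
        if min_size is None or (w >= min_size[0] and h >= min_size[1]):
            sizes.append((w, h))
        factor *= scale_factor
    return sizes


# Compare the two settings used above on an assumed 640x480 frame
coarse = scale_steps((640, 480), (24, 24), 1.3)
fine = scale_steps((640, 480), (24, 24), 1.03, min_size=(40, 40))
print(len(coarse), len(fine))
```

Each visited size means another full pass of the cascade over the image, so a smaller `scaleFactor` trades speed for finer size coverage.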

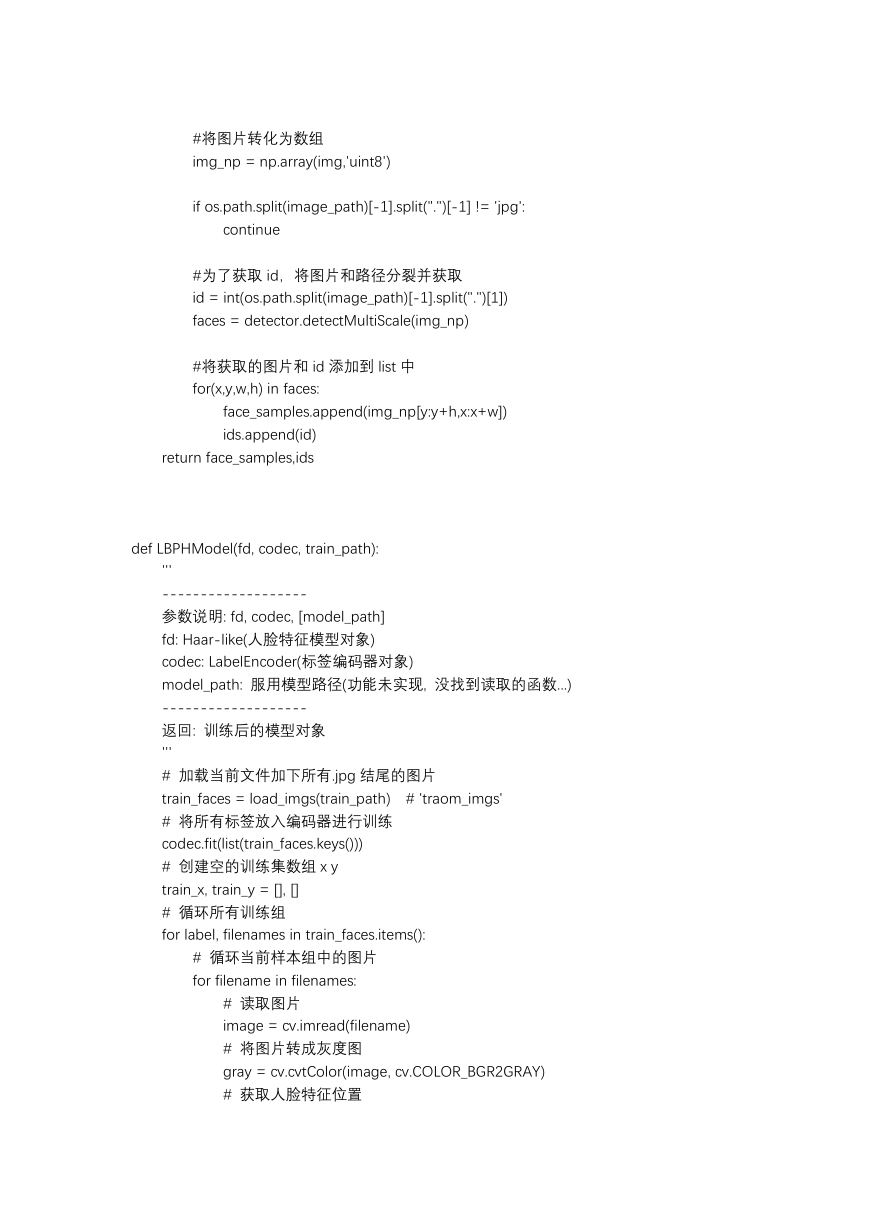

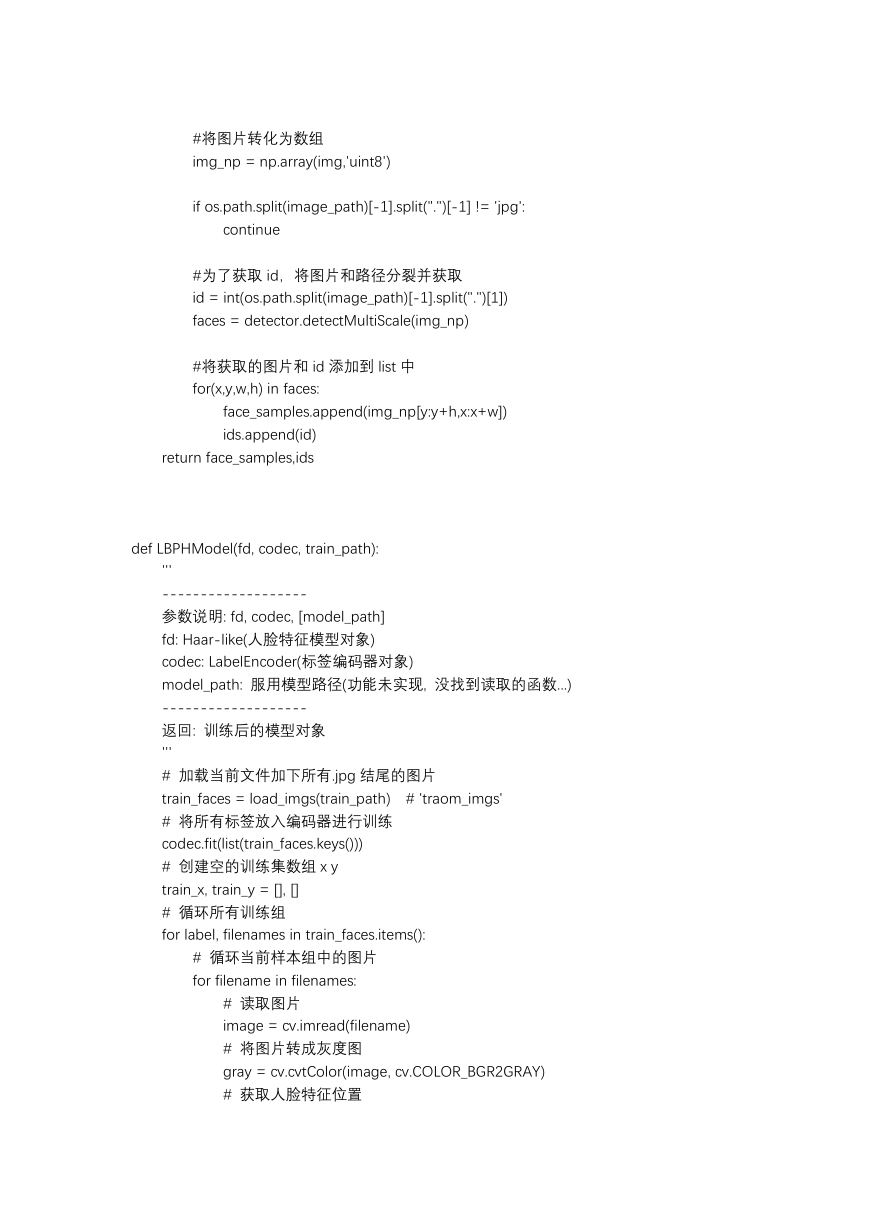

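def get_images_and_labels(path):
    # `detector` must be a cv2.CascadeClassifier defined at module level
    # (for example detector = face_cascade); its definition is not shown here.
    image_paths = [os.path.join(path, f) for f in os.listdir(path)]
    # Create two lists to hold the face samples and their ids
    face_samples = []
    ids = []
    # Walk the image paths; load each image and add it and its id to the lists
    for image_path in image_paths:
        # Skip anything that is not a .jpg file before trying to open it
        if os.path.split(image_path)[-1].split(".")[-1] != 'jpg':
            continue
        # Open the image from its path and convert it to grayscale
        img = Image.open(image_path).convert('L')
        # Convert the image to a uint8 NumPy array
        img_np = np.array(img, 'uint8')
        # The id is the second dot-separated field of the file name
        face_id = int(os.path.split(image_path)[-1].split(".")[1])
        faces = detector.detectMultiScale(img_np)
        # Add each detected face crop and its id to the lists
        for (x, y, w, h) in faces:
            face_samples.append(img_np[y:y + h, x:x + w])
            ids.append(face_id)
    return face_samples, ids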
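`get_images_and_labels` reads the person id from the file name itself, so the naming scheme is part of the training-data format. Assuming names such as `user.7.3.jpg` (prefix, id, sample number; this scheme is inferred from the split, not stated in the source), the hypothetical helper below isolates that rule and returns `None` instead of raising on files that do not match.

```python
import os


def parse_face_id(image_path):
    """Hypothetical helper: return the id encoded in e.g. 'user.7.3.jpg'.

    Mirrors get_images_and_labels: only .jpg files count, and the id is the
    second dot-separated field of the base name. Returns None otherwise.
    """
    parts = os.path.basename(image_path).split(".")
    # Need at least prefix, id and extension, with a .jpg extension
    if len(parts) < 3 or parts[-1] != "jpg":
        return None
    try:
        return int(parts[1])
    except ValueError:
        return None
```

Validating names up front keeps one stray file (for example `face.jpg`) from aborting the whole training run with a `ValueError`.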

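def LBPHModel(fd, codec, train_path):
    '''
    -------------------
    Parameters: fd, codec, train_path, [model_path]
    fd: Haar-like face detector (a cv2.CascadeClassifier)
    codec: LabelEncoder (label encoder object)
    train_path: directory holding the training images
    model_path: path of a saved model to reuse (not implemented; no function for reading it back was found...)
    -------------------
    Returns: the trained model
    '''
    # Load all .jpg images under the training directory.
    # load_imgs is not defined in this listing; from its use below it must
    # return a dict mapping each label to a list of image file paths.
    train_faces = load_imgs(train_path)  # e.g. 'train_imgs'
    # Fit the label encoder on all labels
    codec.fit(list(train_faces.keys()))
    # Empty training arrays x and y
    train_x, train_y = [], []
    # Loop over every training group
    for label, filenames in train_faces.items():
        # Loop over the images in the current group
        for filename in filenames:
            # Read the image
            image = cv2.imread(filename)
            # Convert it to grayscale
            gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
            # Locate the faces
            faces = fd.detectMultiScale(gray, 1.1, 2, minSize=(100, 100))
            # Loop over the detected faces
            for l, t, w, h in faces:
                # Crop the face region out of the image
                train_x.append(gray[t:t + h, l:l + w])
                # Store the encoded label
                train_y.append(codec.transform([label])[0])
    train_y = np.array(train_y)
    # Create the LBPH face recognizer (cv2.face ships with opencv-contrib-python)
    model = cv2.face.LBPHFaceRecognizer_create()
    # Train it on the training set
    model.train(train_x, train_y)
    return model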
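`LBPHModel` hands the cropped faces to OpenCV's LBPH recognizer. As a rough illustration of what such a recognizer computes, the NumPy sketch below implements a simplified version: basic 3x3 local binary pattern codes, per-cell 256-bin histograms concatenated into one feature vector, and nearest-neighbour matching with a chi-square-style distance. It is not OpenCV's implementation (OpenCV's `LBPHFaceRecognizer` uses a circular operator with radius 1 and 8 neighbours and an 8x8 grid by default), and the helpers `lbp_image`, `lbph_features`, `chi_square` and `predict` are hypothetical.

```python
import numpy as np


def lbp_image(gray):
    """Basic 3x3 LBP code for every interior pixel of a 2-D grayscale array.

    Each of the 8 neighbours contributes one bit: 1 if it is >= the centre.
    Bits are taken clockwise from the top-left neighbour, most significant first.
    """
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # (row, col) offsets of the 8 neighbours, clockwise from top-left
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int32) << (7 - bit)
    return codes.astype(np.uint8)


def lbph_features(gray, grid=(2, 2)):
    """Concatenate normalised 256-bin LBP histograms over a grid of cells."""
    codes = lbp_image(gray)
    gy, gx = grid
    h, w = codes.shape
    feats = []
    for i in range(gy):
        for j in range(gx):
            cell = codes[i * h // gy:(i + 1) * h // gy, j * w // gx:(j + 1) * w // gx]
            hist = np.bincount(cell.ravel(), minlength=256).astype(np.float64)
            feats.append(hist / max(cell.size, 1))
    return np.concatenate(feats)


def chi_square(a, b, eps=1e-10):
    """Chi-square-style distance between two histograms (0 when identical)."""
    return float(np.sum((a - b) ** 2 / (a + b + eps)))


def predict(train_feats, train_labels, query_feat):
    """Return (label, distance) of the nearest training feature vector."""
    dists = [chi_square(f, query_feat) for f in train_feats]
    best = int(np.argmin(dists))
    return train_labels[best], dists[best]
```

Like OpenCV's `predict`, this returns a label together with a distance, where lower means a closer match; that distance is what a recognition threshold would be applied to.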

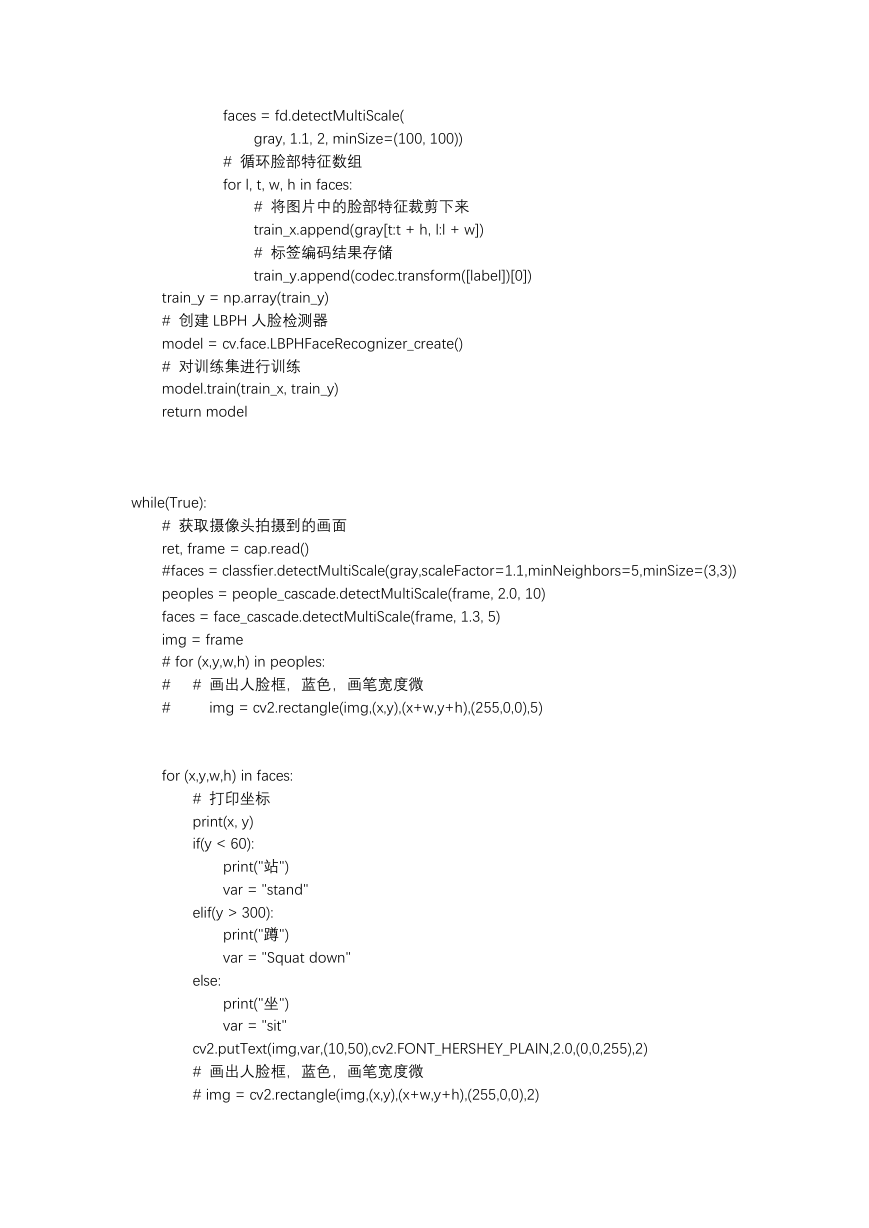

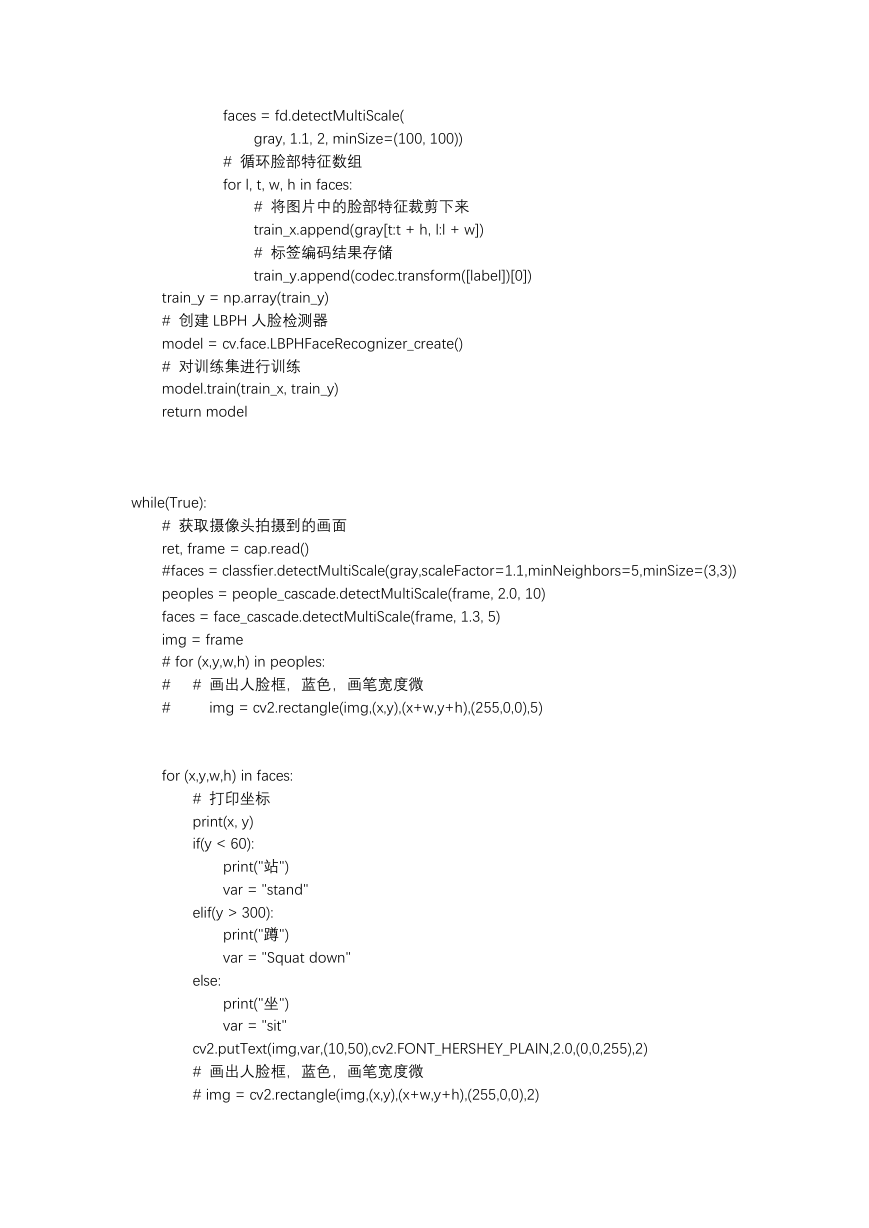
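The main loop that follows labels the posture purely from where the face box starts vertically. Image coordinates have their origin at the top-left corner with y growing downward, so a small y means the face is high in the frame (standing) and a large y means it is low (squatting). The pure-Python sketch below isolates that rule in a hypothetical `classify_posture` helper; the 60 and 300 pixel thresholds come from the loop and only suit one camera placement and resolution, so they are exposed as parameters here.

```python
def classify_posture(face_top_y, stand_max=60, squat_min=300):
    """Hypothetical helper: map the top edge (y, pixels) of a face box to a label.

    Same rule as the loop: y < 60 -> 'stand', y > 300 -> 'Squat down',
    anything in between -> 'sit'.
    """
    if face_top_y < stand_max:
        return "stand"
    if face_top_y > squat_min:
        return "Squat down"
    return "sit"
```

Keeping the rule in one function makes it easy to recalibrate the thresholds for a different camera height without touching the capture loop.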

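while True:
    # Grab the next frame from the video source
    ret, frame = cap.read()
    if not ret:
        # No frame returned: end of the video file or a camera read failure
        break

    # Run the cascades on a grayscale copy of the frame
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    #faces = classfier.detectMultiScale(gray,scaleFactor=1.1,minNeighbors=5,minSize=(3,3))
    peoples = people_cascade.detectMultiScale(gray, 2.0, 10)
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)

    img = frame

    # Optional: draw full-body boxes (blue, line width 5)
    # for (x, y, w, h) in peoples:
    #     img = cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 5)

    # For each face, classify the posture from the top edge (y) of its box
    for (x, y, w, h) in faces:
        # Print the coordinates
        print(x, y)

        if y < 60:
            print("stand")
            var = "stand"
        elif y > 300:
            print("squat down")
            var = "Squat down"
        else:
            print("sit")
            var = "sit"

        cv2.putText(img, var, (10, 50), cv2.FONT_HERSHEY_PLAIN, 2.0, (0, 0, 255), 2)

        # Draw the face box (blue, line width 2)
        # img = cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)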

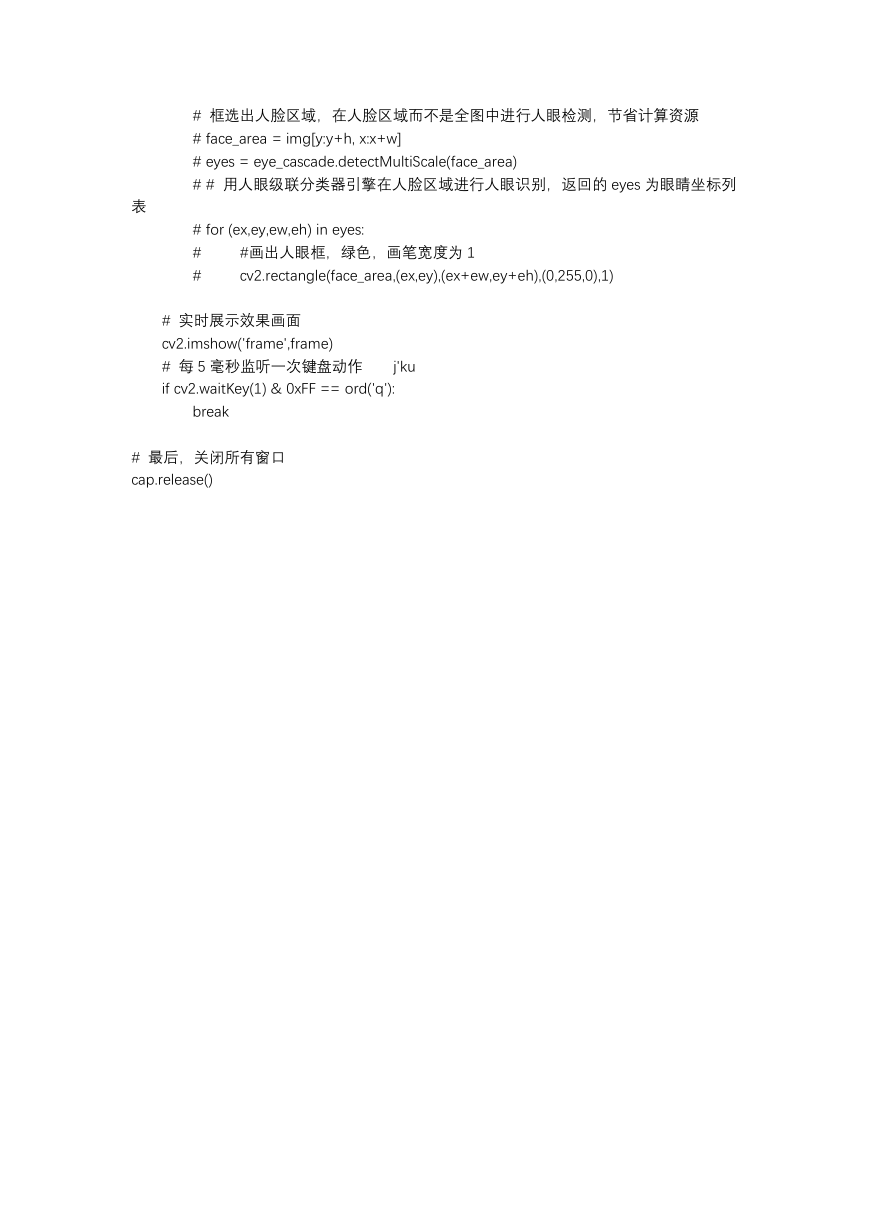

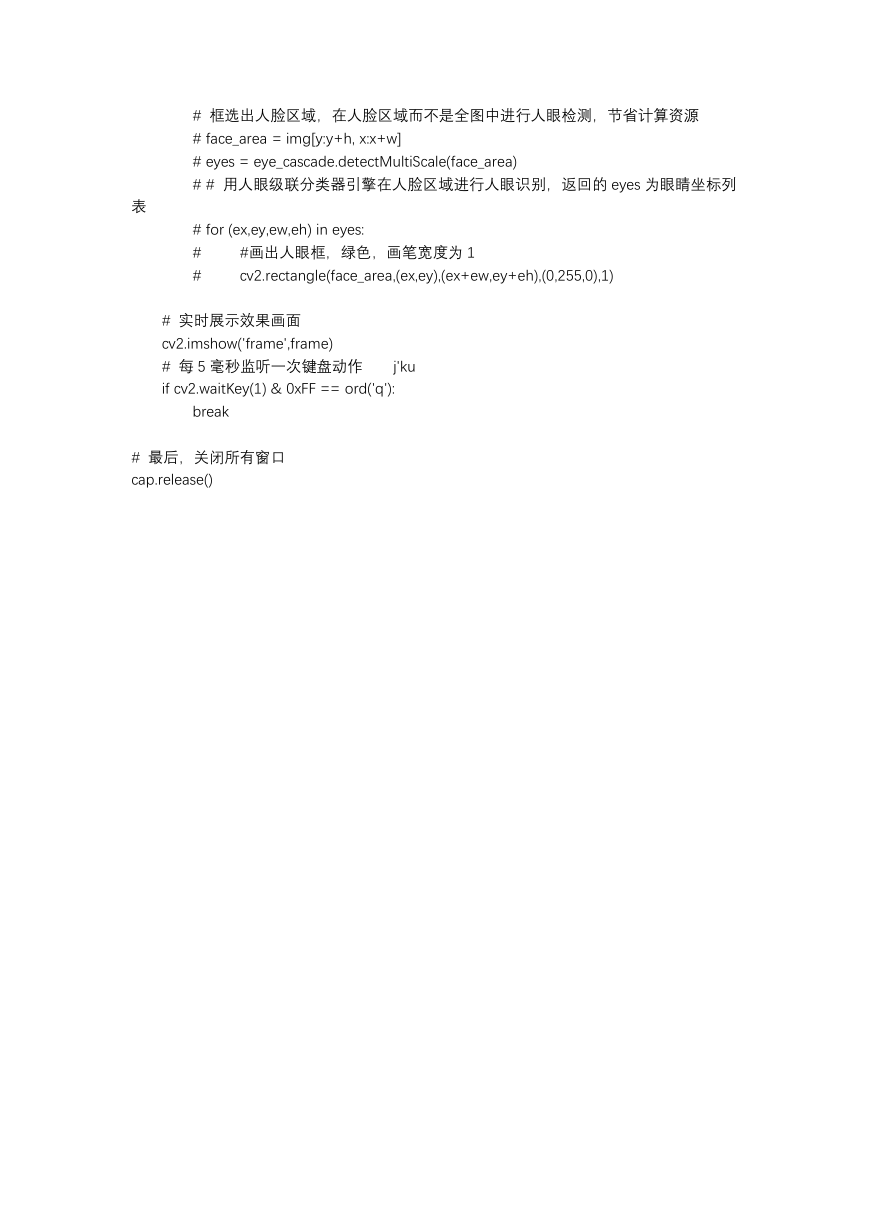

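        # Crop the face region and look for eyes only inside it, not in the whole frame, to save computation
        # face_area = img[y:y+h, x:x+w]
        # # Run the eye cascade on the face region; the returned eyes is a list of eye boxes
        # eyes = eye_cascade.detectMultiScale(face_area)
        # for (ex, ey, ew, eh) in eyes:
        #     # Draw the eye box in green, line width 1
        #     cv2.rectangle(face_area, (ex, ey), (ex + ew, ey + eh), (0, 255, 0), 1)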

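    # Show the annotated frame in real time
    cv2.imshow('frame', frame)

    # Poll the keyboard for 1 ms each frame; press 'q' to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Finally, release the video source and close all windows
cap.release()
cv2.destroyAllWindows()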
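The commented-out eye detection relies on two properties worth checking. Slicing a NumPy image with `img[y:y+h, x:x+w]` returns a view, so `cv2.rectangle` drawing on `face_area` also changes the frame passed to `cv2.imshow`; and the eye boxes returned for `face_area` are relative to the face region, so they must be offset by the face's `(x, y)` before being used on the full frame. The sketch below demonstrates both with NumPy only; the frame size, box values and the `roi_to_frame` helper are illustrative assumptions.

```python
import numpy as np


def roi_to_frame(box, roi_origin):
    """Hypothetical helper: shift an (x, y, w, h) box found in a ROI to frame coordinates."""
    x, y, w, h = box
    ox, oy = roi_origin
    return (x + ox, y + oy, w, h)


# Stand-in BGR frame (assumed 480x640) and an assumed face box (x, y, w, h)
frame = np.zeros((480, 640, 3), dtype=np.uint8)
fx, fy, fw, fh = 200, 100, 120, 120

# Basic slicing returns a view: writing into face_area writes into frame
face_area = frame[fy:fy + fh, fx:fx + fw]
face_area[10, 20] = (0, 255, 0)

# An eye box reported relative to face_area, mapped back onto the frame
eye_in_face = (20, 10, 30, 15)
ex, ey, ew, eh = roi_to_frame(eye_in_face, (fx, fy))
```

If a copy is needed instead (for example to run detection without later drawings leaking back), `face_area.copy()` breaks the link to the frame.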
