This is page iii

Printer: Opaque this

Jorge Nocedal

Stephen J. Wright

Numerical Optimization

Second Edition

�

This is pag

Printer: O

Stephen J. Wright

Computer Sciences Department

University of Wisconsin

1210 West Dayton Street

Madison, WI 53706–1613

USA

swright@cs.wisc.edu

Stephen M. Robinson

Department of Industrial and Systems

Engineering

University of Wisconsin

1513 University Avenue

Madison, WI 53706–1539

USA

smrobins@facstaff.wise.edu

Jorge Nocedal

EECS Department

Northwestern University

Evanston, IL 60208-3118

USA

nocedal@eecs.northwestern.edu

Series Editors:

Thomas V. Mikosch

University of Copenhagen

Laboratory of Actuarial Mathematics

DK-1017 Copenhagen

Denmark

mikosch@act.ku.dk

Sidney I. Resnick

Cornell University

School of Operations Research and

Industrial Engineering

Ithaca, NY 14853

USA

sirl@cornell.edu

Mathematics Subject Classification (2000): 90B30, 90C11, 90-01, 90-02

Library of Congress Control Number: 2006923897

ISBN-10: 0-387-30303-0

ISBN-13: 978-0387-30303-1

Printed on acid-free paper.

C 2006 Springer Science+Business Media, LLC.

All rights reserved. This work may not be translated or copied in whole or in part without the written permission

of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York, NY 10013, USA), except for

brief excerpts in connection with reviews or scholarly analysis. Use in connection with any form of information

storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now

known or hereafter developed is forbidden.

The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not

identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary

rights.

Printed in the United States of America.

(TB/HAM)

9 8 7 6 5 4 3 2 1

springer.com

�

This is page v

Printer: Opaque this

To Sue, Isabel and Martin

and

To Mum and Dad

�

Contents

Preface

Preface to the Second Edition

1

Introduction

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Mathematical Formulation .

.

.

Example: A Transportation Problem .

.

Continuous versus Discrete Optimization .

.

Constrained and Unconstrained Optimization .

.

Global and Local Optimization .

.

.

Stochastic and Deterministic Optimization .

.

.

Convexity

.

Optimization Algorithms

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Notes and References

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

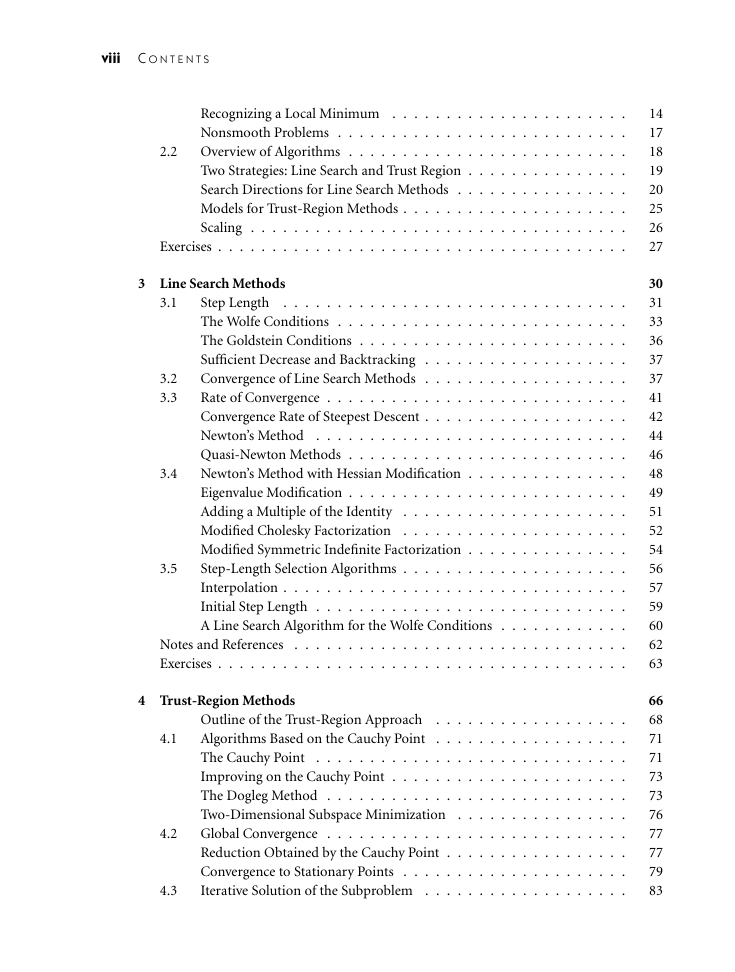

2 Fundamentals of Unconstrained Optimization

.

2.1 What Is a Solution?

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

This is page vii

Printer: Opaque this

xvii

xxi

1

2

4

5

6

6

7

7

8

9

10

12

�

viii

C O N T E N T S

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Recognizing a Local Minimum .

.

.

Nonsmooth Problems .

.

.

Overview of Algorithms .

.

Two Strategies: Line Search and Trust Region .

.

Search Directions for Line Search Methods .

.

.

Models for Trust-Region Methods .

Scaling .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2.2

Exercises .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3.1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3.2

3.3

3 Line Search Methods

.

.

.

.

Step Length .

.

.

.

.

The Wolfe Conditions .

.

.

.

The Goldstein Conditions .

.

.

.

Sufficient Decrease and Backtracking .

.

.

Convergence of Line Search Methods .

.

.

Rate of Convergence .

.

.

.

Convergence Rate of Steepest Descent .

.

.

.

Newton’s Method .

.

.

Quasi-Newton Methods .

.

.

.

Newton’s Method with Hessian Modification .

.

.

Eigenvalue Modification .

.

.

Adding a Multiple of the Identity .

.

.

Modified Cholesky Factorization .

.

.

Modified Symmetric Indefinite Factorization .

.

.

Step-Length Selection Algorithms .

.

.

Interpolation .

.

.

Initial Step Length .

.

.

.

A Line Search Algorithm for the Wolfe Conditions .

.

.

Notes and References

Exercises .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3.4

3.5

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4 Trust-Region Methods

.

.

.

.

.

.

.

.

.

.

.

.

.

Outline of the Trust-Region Approach .

.

Algorithms Based on the Cauchy Point

.

.

.

.

.

The Cauchy Point

.

.

.

.

.

Improving on the Cauchy Point .

The Dogleg Method .

.

.

.

.

.

Two-Dimensional Subspace Minimization .

.

Global Convergence .

.

.

Reduction Obtained by the Cauchy Point .

.

Convergence to Stationary Points .

.

.

.

.

Iterative Solution of the Subproblem .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4.1

4.2

4.3

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

14

17

18

19

20

25

26

27

30

31

33

36

37

37

41

42

44

46

48

49

51

52

54

56

57

59

60

62

63

66

68

71

71

73

73

76

77

77

79

83

�

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

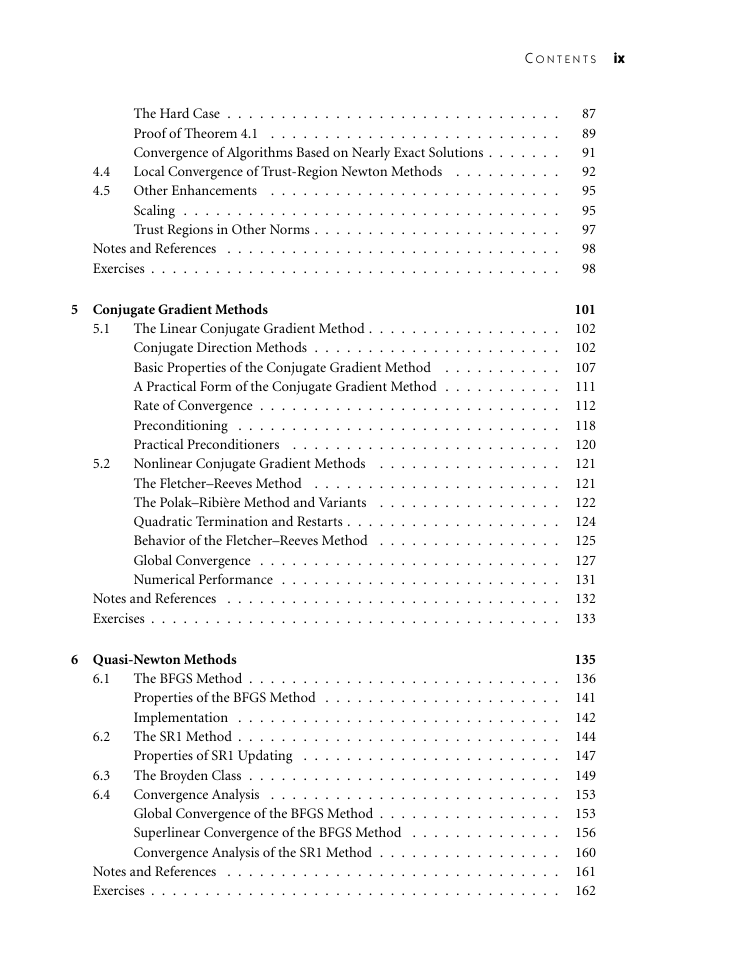

The Hard Case .

.

Proof of Theorem 4.1 .

.

Convergence of Algorithms Based on Nearly Exact Solutions .

.

Local Convergence of Trust-Region Newton Methods

.

.

.

Other Enhancements

.

.

Scaling .

.

.

Trust Regions in Other Norms .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Notes and References

Exercises .

.

.

.

.

5 Conjugate Gradient Methods

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

The BFGS Method .

.

.

.

Properties of the BFGS Method .

.

.

.

Implementation .

.

.

.

The SR1 Method .

.

.

.

.

.

Properties of SR1 Updating .

.

.

.

.

The Broyden Class .

.

Convergence Analysis

.

.

.

.

Global Convergence of the BFGS Method .

.

Superlinear Convergence of the BFGS Method .

.

Convergence Analysis of the SR1 Method .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Notes and References

.

Exercises .

.

.

.

.

C O N T E N T S

ix

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

87

89

91

92

95

95

97

98

98

101

102

102

107

111

112

118

120

121

121

122

124

125

127

131

132

133

135

136

141

142

144

147

149

153

153

156

160

161

162

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4.4

4.5

5.1

5.2

6.1

6.2

6.3

6.4

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

The Linear Conjugate Gradient Method .

Conjugate Direction Methods .

.

.

Basic Properties of the Conjugate Gradient Method .

A Practical Form of the Conjugate Gradient Method .

.

.

.

Rate of Convergence .

.

.

.

Preconditioning .

.

.

.

.

Practical Preconditioners

.

.

.

Nonlinear Conjugate Gradient Methods

.

.

The Fletcher–Reeves Method .

.

.

The Polak–Ribi`ere Method and Variants

.

.

Quadratic Termination and Restarts .

.

.

.

.

Behavior of the Fletcher–Reeves Method .

.

.

Global Convergence .

.

Numerical Performance .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Notes and References

Exercises .

.

.

.

.

.

6 Quasi-Newton Methods

�

x

C O N T E N T S

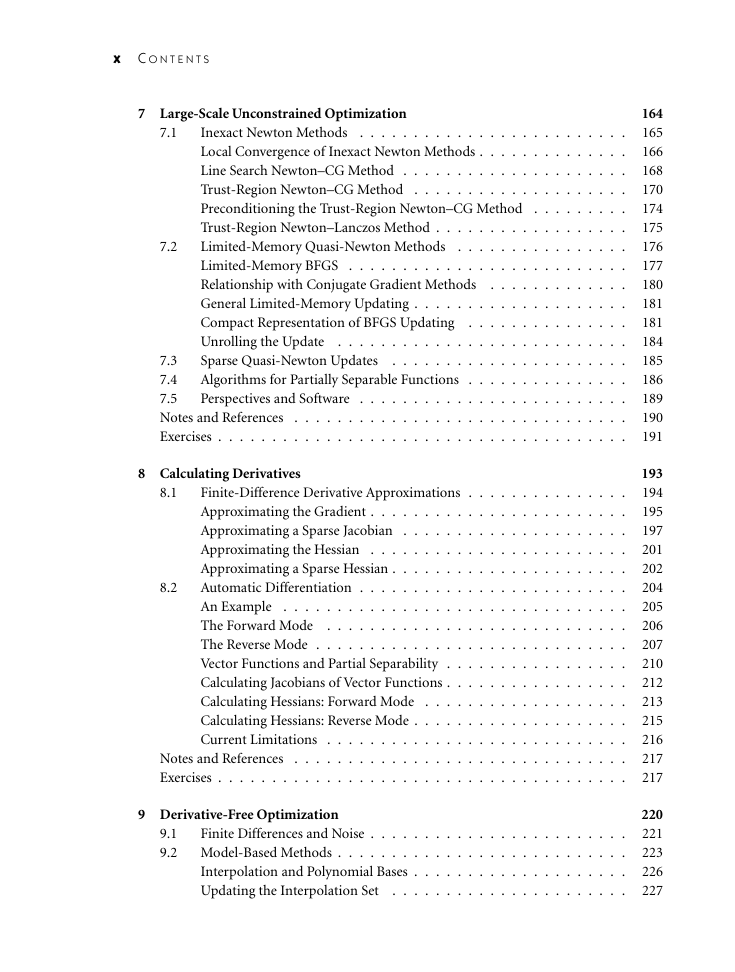

7 Large-Scale Unconstrained Optimization

.

7.1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Inexact Newton Methods

.

.

Local Convergence of Inexact Newton Methods .

.

.

Line Search Newton–CG Method .

.

Trust-Region Newton–CG Method .

.

.

Preconditioning the Trust-Region Newton–CG Method .

.

Trust-Region Newton–Lanczos Method .

.

.

.

Limited-Memory Quasi-Newton Methods

.

Limited-Memory BFGS .

.

.

.

Relationship with Conjugate Gradient Methods

General Limited-Memory Updating .

.

.

.

.

.

Compact Representation of BFGS Updating

.

.

.

Unrolling the Update

.

Sparse Quasi-Newton Updates

.

.

.

.

Algorithms for Partially Separable Functions .

.

.

Perspectives and Software .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

7.3

7.4

7.5

Notes and References

Exercises .

.

.

.

.

.

.

8 Calculating Derivatives

7.2

8.1

8.2

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Finite-Difference Derivative Approximations .

.

.

Approximating the Gradient .

.

.

.

Approximating a Sparse Jacobian .

.

.

.

Approximating the Hessian .

.

.

.

.

Approximating a Sparse Hessian .

.

Automatic Differentiation .

.

.

.

.

.

.

.

.

An Example .

.

.

.

.

.

.

The Forward Mode

.

The Reverse Mode .

.

.

.

.

Vector Functions and Partial Separability .

.

.

Calculating Jacobians of Vector Functions .

.

.

Calculating Hessians: Forward Mode .

.

.

.

Calculating Hessians: Reverse Mode .

Current Limitations .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Notes and References

Exercises .

.

.

.

.

9 Derivative-Free Optimization

9.1

9.2 Model-Based Methods .

Finite Differences and Noise .

.

.

.

Interpolation and Polynomial Bases .

.

Updating the Interpolation Set

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

164

165

166

168

170

174

175

176

177

180

181

181

184

185

186

189

190

191

193

194

195

197

201

202

204

205

206

207

210

212

213

215

216

217

217

220

221

223

226

227

�

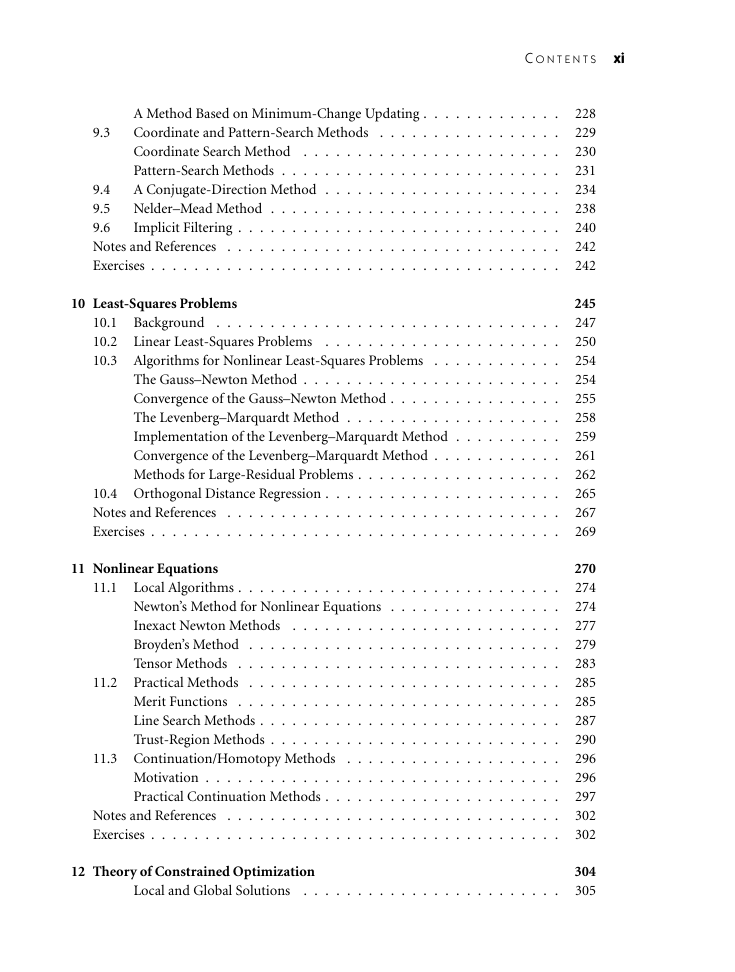

C O N T E N T S

xi

9.3

A Method Based on Minimum-Change Updating .

.

Coordinate and Pattern-Search Methods

.

Coordinate Search Method .

.

.

.

.

Pattern-Search Methods .

.

.

.

.

A Conjugate-Direction Method .

.

.

.

Nelder–Mead Method .

Implicit Filtering .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

9.4

9.5

9.6

Notes and References

Exercises .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

10 Least-Squares Problems

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Linear Least-Squares Problems

.

10.1 Background .

10.2

.

10.3 Algorithms for Nonlinear Least-Squares Problems

.

.

.

.

.

.

.

The Gauss–Newton Method .

.

.

Convergence of the Gauss–Newton Method .

The Levenberg–Marquardt Method .

.

.

Implementation of the Levenberg–Marquardt Method .

.

Convergence of the Levenberg–Marquardt Method .

.

.

Methods for Large-Residual Problems .

.

.

.

.

.

.

.

.

.

10.4 Orthogonal Distance Regression .

Notes and References

.

.

.

Exercises .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

11 Nonlinear Equations

.

.

.

.

.

.

.

.

.

.

.

.

.

11.1

11.2

.

Local Algorithms .

Newton’s Method for Nonlinear Equations .

.

Inexact Newton Methods

.

.

.

Broyden’s Method .

.

Tensor Methods

.

.

.

.

.

.

.

.

Practical Methods

.

.

Merit Functions

.

.

.

.

Line Search Methods .

.

Trust-Region Methods .

.

.

.

.

.

.

.

.

.

.

.

.

.

11.3 Continuation/Homotopy Methods

Motivation .

.

Practical Continuation Methods .

.

.

Notes and References

.

Exercises .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

12 Theory of Constrained Optimization

.

Local and Global Solutions

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

228

229

230

231

234

238

240

242

242

245

247

250

254

254

255

258

259

261

262

265

267

269

270

274

274

277

279

283

285

285

287

290

296

296

297

302

302

304

305

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc