Infrared and Visible Image Fusion:

A Region-Based Deep Learning Method

Chunyu Xie1 and Xinde Li1,2(B)

1 Key Laboratory of Measurement and Control of CSE, School of Automation,

Southeast University, Nanjing, China

{cyxie,xindeli}@seu.edu.cn

2 School of Cyber Science and Engineering, Southeast University, Nanjing, China

Abstract. Infrared and visible image fusion plays an important role
in robot perception. The key to fusion is extracting useful information
from the source images by appropriate methods. In this paper, we propose
a deep learning method for infrared and visible image fusion based on
region segmentation. First, the source infrared image is segmented into
a foreground part and a background part; we then build an infrared and
visible image fusion network on the basis of the neural style transfer
algorithm. We propose a foreground loss and a background loss to control
the fusion of the two parts respectively. Finally, the fused image is
reconstructed by combining the two parts. The experimental results show
that, compared with other state-of-the-art methods, our method retains
both the saliency information of the target and the detailed texture
information of the background.

Keywords: Infrared image · Visible image · Image fusion ·

Region segmentation · Deep learning

1 Introduction

The purpose of infrared and visible image fusion is to combine the images
obtained by infrared and visible sensors to generate robust and informative
images for further processing. Infrared images can distinguish targets from their
backgrounds based on the radiation difference, while visible images can provide
texture details with high spatial resolution and definition in a manner consistent
with the human visual system [1]. The goal of infrared and visible image fusion
is to combine the thermal radiation information in the infrared image with the
detailed texture information in the visible image. In recent years, research on
fusion algorithms has been developing rapidly. However, an appropriate image
information extraction method remains key to ensuring good fusion performance
of infrared and visible images.

The existing fusion algorithms are divided into seven categories including

multi-scale transform [2], sparse representation [3], neural network [4], subspace

[5], and saliency based [6] methods, hybrid models [7], and other methods [8].

© Springer Nature Switzerland AG 2019

H. Yu et al. (Eds.): ICIRA 2019, LNAI 11744, pp. 604–615, 2019.

https://doi.org/10.1007/978-3-030-27541-9_49


The main steps of these methods are to decompose the source images into several
levels, fuse the corresponding layers with particular rules, and reconstruct the
target images. Many fusion methods operate at the pixel level. These methods
cannot effectively extract the target area we are interested in, and they depend
heavily on predefined transforms and corresponding levels for decomposition
and reconstruction. However, in several practical applications, our attention is
focused on the objects of images at the region level [1]. Hence, region-level
information should be considered during image fusion [9]. Consequently, region-based
fusion rules have been widely used in infrared and visible image fusion [10]. Many
region-based fusion methods have been proposed for infrared and visible image
fusion, such as feature region extraction [11], regional uniformity [12], regional
energy [10], and multi-judgment fusion rule [13]. Some representative methods
are based on the salient region [13]. These methods aim to identify regions that
are more salient than other areas. This model has been used to extract visually
salient regions of images, which can be used to obtain saliency maps of
multi-scale sub-images [1]. Zhang et al. adopted a super-pixel-based saliency method
to extract salient target regions; the fused coefficients were then obtained from
the extracted target region using a morphological method [14]. These methods have
two disadvantages: (1) they adopt the same fusion rules for target and background
areas, and (2) they cannot extract the target region accurately. Better methods
are therefore needed to solve these problems.

In this paper, we propose a region-based fusion method to solve these problems.
The source infrared image is segmented into a foreground part and a background
part by semantic segmentation. We propose a deep learning fusion method with a
foreground loss and a background loss that control the fusion of the two regions
respectively. The fused image is reconstructed by combining the foreground part
and the background part. The rest of this paper is structured as follows. Sect. 2
introduces the background of this research. Sect. 3 describes the proposed method
in detail. Sect. 4 presents the performance of our method and the experimental
results on public data sets. Finally, Sect. 5 concludes the paper.

2 Background

Semantic segmentation, also called scene labeling, refers to the process of
assigning a semantic label to each pixel of an image [15]. With the development of
deep learning, semantic segmentation has improved significantly.
Semantic segmentation based on deep learning can accurately classify each pixel
and has already achieved good performance on very complex RGB image data
sets. Compared with RGB images, infrared images are usually gray-scale images in
which the difference between the target area and the background area is more
obvious. Therefore, we believe that semantic segmentation will also achieve good
results on infrared images. Gatys et al. [16] proposed a deep learning method for
creating artistic imagery by separating and recombining image content and style.


This process of using Convolutional Neural Networks (CNNs) to render a content
image in different styles is referred to as Neural Style Transfer (NST) [17].
Deep features are extracted from the images at different layers of a CNN, and a
content loss and a style loss are defined to control the fusion of content and texture.
Unlike traditional methods, NST uses deep features to reconstruct images.
Inspired by this work, we segment the source infrared image into sub-regions
and fuse them with the visible image separately. Building on the work of
Gatys et al. [16], we propose a foreground loss and a background loss to control
the fusion of the two regions. The details of our method are presented in the next
section.

3 Method

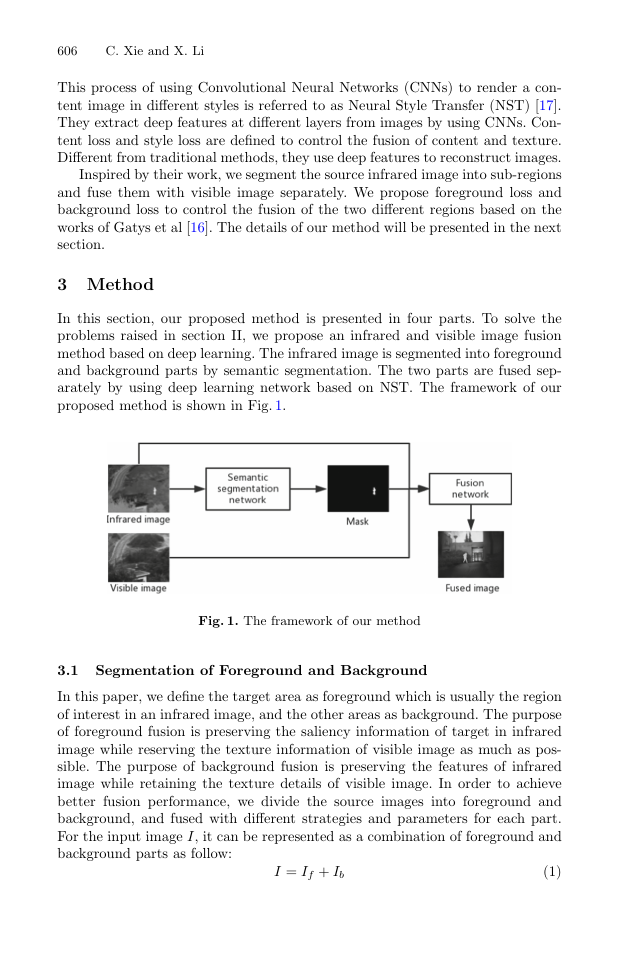

In this section, our proposed method is presented in four parts. To solve the
problems raised in Sect. 2, we propose an infrared and visible image fusion
method based on deep learning. The infrared image is segmented into foreground
and background parts by semantic segmentation. The two parts are fused
separately using a deep learning network based on NST. The framework of our
proposed method is shown in Fig. 1.

Fig. 1. The framework of our method

3.1 Segmentation of Foreground and Background

In this paper, we define the target area, which is usually the region of interest
in an infrared image, as the foreground, and the other areas as the background.
The purpose of foreground fusion is to preserve the saliency information of the
target in the infrared image while retaining the texture information of the visible
image as much as possible. The purpose of background fusion is to preserve the
features of the infrared image while retaining the texture details of the visible
image. To achieve better fusion performance, we divide the source images into
foreground and background and fuse each part with its own strategy and parameters.
The input image $I$ can be represented as a combination of foreground and
background parts as follows:

$$
I = I_f + I_b \tag{1}
$$


$I_f$ is the foreground part and $I_b$ is the background part. To divide the image,
we use a semantic segmentation network, trained on the TNO and INO datasets, to
segment an infrared image into a foreground part and a background part.
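The decomposition in Eq. (1) can be illustrated with a small NumPy sketch. It assumes the segmentation network outputs a binary mask (1 for target pixels, 0 elsewhere); the function name `split_by_mask` and the toy data are hypothetical and not part of the original method description.

```python
import numpy as np

def split_by_mask(image, mask):
    """Split an image into foreground and background parts using a binary mask."""
    mask = mask.astype(image.dtype)
    foreground = image * mask        # I_f: pixels inside the target region
    background = image * (1 - mask)  # I_b: all remaining pixels
    return foreground, background

# Toy 4x4 "infrared" image with a hypothetical 2x2 target region.
image = np.arange(16, dtype=np.float32).reshape(4, 4)
mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 1

i_f, i_b = split_by_mask(image, mask)
# The two parts sum back to the input, as in Eq. (1).
print(np.array_equal(i_f + i_b, image))
```

Because the two parts occupy disjoint pixel sets, adding them recovers the input exactly, which is what allows the fused foreground and background to be recombined later.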

3.2 Fusion of Foreground and Background

Fusion of Foreground. In an infrared image, the foreground is usually the salient
area. Hence, we take the foreground part of the source infrared image as the basis of
the fused image, so that the salient information of the target is preserved.
We extract texture and detail features from the foreground part of the source visible
image. To obtain good detail features, we use CNNs to extract the deep features
of the image. We define the foreground loss to control the fusion
of the foreground. In the work of Gatys et al. [16], the content loss of the l-th layer is defined as:

$$
L_c^l = \frac{1}{2 N_l D_l} \sum_{ij} \left( F_l[O] - F_l[I] \right)_{ij}^2 \tag{2}
$$

The style loss of the l-th layer is defined as:

$$
L_s^l = \frac{1}{2 N_l^2} \sum_{ij} \left( G_l[O] - G_l[S] \right)_{ij}^2 \tag{3}
$$

$I$ is the input image, $O$ is the output image and $S$ is the reference style image.
$N_l$ is the number of filters in the l-th layer. $D_l$ is the size of the vectorized
feature map of each filter in the l-th layer. $F_l[\cdot]$ is the feature matrix, with
$(i, j)$ indicating its index. $G_l[\cdot] = F_l[\cdot] F_l[\cdot]^T$ is the Gram matrix,
defined as the inner product between the vectorized feature maps. Inspired by the work
of Gatys et al., we use the content loss to constrain the basic content information,
and the style loss, which is called texture loss in this paper, to constrain the details
and texture information of the fused image. For the input infrared image $I$, the input
visible image $V$ and the output fused image $O$, the foreground loss function of
the fusion network is defined as:

$$
L_f = \sum_{l=1}^{L} \alpha_f^l L_{f,c}^l + \sum_{l=1}^{L} \beta_f^l L_{f,s}^l \tag{4}
$$

The network contains $L$ layers; $L_{f,c}^l$ and $L_{f,s}^l$ denote the content loss and
texture loss of the foreground fusion in the l-th layer. The content loss and texture loss
of foreground fusion are weighted by $\alpha_f^l$ and $\beta_f^l$, respectively. The content
loss and texture loss are defined as follows. The content loss of the l-th layer is:

$$
L_{f,c}^l = \frac{1}{2 N_l D_l} \sum_{ij} \left( F_{f,l}[O] - F_{f,l}[I] \right)_{ij}^2 \tag{5}
$$

and the texture loss of the l-th layer is:

$$
L_{f,s}^l = \frac{1}{2 N_l^2} \sum_{ij} \left( G_{f,l}[O] - G_{f,l}[V] \right)_{ij}^2 \tag{6}
$$


and:

$$
F_{f,l}[O] = F_l[O] M_{f,l}[I] \tag{7}
$$

$$
F_{f,l}[I] = F_l[I] M_{f,l}[I] \tag{8}
$$

$$
F_{f,l}[V] = F_l[V] M_{f,l}[I] \tag{9}
$$

$M_{f,l}[I]$ denotes the foreground segmentation mask of the infrared image,
down-sampled to match the feature maps of the l-th layer. In our test data, the infrared
and visible images have been strictly registered, so $M_{f,l}[I] = M_{f,l}[V]$. All
$M_{f,l}[V]$ terms have therefore been replaced by $M_{f,l}[I]$ in the formulas above.
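The masked losses of Eqs. (5)-(9) can be sketched for a single layer in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: features are stored as an $(N_l, D_l)$ matrix, the mask is a flattened vector of length $D_l$, and the product with the mask is read as an element-wise multiplication over spatial positions. All function names and the random toy features are hypothetical.

```python
import numpy as np

def masked(features, mask):
    """Eqs. (7)-(9): keep only the feature columns inside the region mask."""
    return features * mask[np.newaxis, :]

def gram(features):
    """Gram matrix G = F F^T of an (N_l, D_l) feature matrix."""
    return features @ features.T

def content_loss(f_out, f_ref):
    """Eq. (5): squared feature difference scaled by 1 / (2 N_l D_l)."""
    n_l, d_l = f_out.shape
    return float(np.sum((f_out - f_ref) ** 2) / (2.0 * n_l * d_l))

def texture_loss(f_out, f_ref):
    """Eq. (6): squared Gram-matrix difference scaled by 1 / (2 N_l^2)."""
    n_l = f_out.shape[0]
    return float(np.sum((gram(f_out) - gram(f_ref)) ** 2) / (2.0 * n_l ** 2))

# Toy features standing in for F_l[O], F_l[I], F_l[V] (N_l = 3 filters, D_l = 8 positions).
rng = np.random.default_rng(0)
n_l, d_l = 3, 8
f_o, f_i, f_v = (rng.standard_normal((n_l, d_l)) for _ in range(3))
m_f = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=float)  # hypothetical foreground mask

l_fc = content_loss(masked(f_o, m_f), masked(f_i, m_f))  # content from infrared
l_fs = texture_loss(masked(f_o, m_f), masked(f_v, m_f))  # texture from visible
print(l_fc, l_fs)
```

Since the mask zeroes every position outside the foreground, changes to the output features in the background leave both foreground losses unchanged, which is what lets the two regions be controlled independently.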

Fusion of Background. Different from the foreground, we pay more attention to
detail textures in the background. Hence, we take the background part of the source
visible image as the basis of the fused image, so that the detail information of
the visible image is preserved. We extract textures from the source infrared
image. For the background part, we define the background loss to control the fusion.
The loss function is defined as:

$$
L_b = \sum_{l=1}^{L} \alpha_b^l L_{b,c}^l + \sum_{l=1}^{L} \beta_b^l L_{b,s}^l \tag{10}
$$

$L_{b,c}^l$ and $L_{b,s}^l$ denote the content loss and texture loss of the background fusion
in the l-th layer. The weights of the content loss and texture loss of background
fusion are $\alpha_b^l$ and $\beta_b^l$, respectively. The content loss and texture loss
are defined as follows. The content loss of the l-th layer is:

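$$
L_{b,c}^l = \frac{1}{2 N_l D_l} \sum_{ij} \left( F_{b,l}[O] - F_{b,l}[V] \right)_{ij}^2 \tag{11}
$$

and the texture loss of the l-th layer is:

$$
L_{b,s}^l = \frac{1}{2 N_l^2} \sum_{ij} \left( G_{b,l}[O] - G_{b,l}[I] \right)_{ij}^2 \tag{12}
$$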

and:

$$
F_{b,l}[O] = F_l[O] M_{b,l}[I] \tag{13}
$$

$$
F_{b,l}[I] = F_l[I] M_{b,l}[I] \tag{14}
$$

$$
F_{b,l}[V] = F_l[V] M_{b,l}[I] \tag{15}
$$

Similar to the foreground fusion, $M_{b,l}[I] = M_{b,l}[V]$, and all the $M_{b,l}[V]$ terms
have been replaced by $M_{b,l}[I]$ in the formulas above.


Fig. 2. The procedure of fusion

3.3 Reconstruction

We reconstruct the fused image by combining the fused foreground part and
background part. The total loss function of the fusion network is formulated by
combining the foreground loss and the background loss. We add an $L_{tv}$
term to suppress the noise generated in the fusion process. The total loss of the
fusion network is:

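$$
L_{total} = L_f + L_b + L_{tv}
= \sum_{l=1}^{L} \left( \alpha_f^l L_{f,c}^l + \alpha_b^l L_{b,c}^l \right)
+ \sum_{l=1}^{L} \left( \beta_f^l L_{f,s}^l + \beta_b^l L_{b,s}^l \right) + L_{tv} \tag{16}
$$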
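The way the terms of Eq. (16) combine can be sketched as follows. The text does not give the exact form of $L_{tv}$, so this sketch assumes a common anisotropic total variation (the sum of absolute differences between neighbouring pixels); that choice, the function names, and the toy weights are all assumptions for illustration only.

```python
import numpy as np

def tv_loss(image):
    """Anisotropic total variation (assumed form of L_tv): sum of |neighbour differences|."""
    dh = np.abs(np.diff(image, axis=0)).sum()  # vertical neighbours
    dw = np.abs(np.diff(image, axis=1)).sum()  # horizontal neighbours
    return float(dh + dw)

def total_loss(content_terms, texture_terms, l_tv):
    """Eq. (16): weighted content terms + weighted texture terms + L_tv.

    Each list holds (weight, loss) pairs collected over layers and over
    both regions (foreground and background).
    """
    content = sum(w * l for w, l in content_terms)
    texture = sum(w * l for w, l in texture_terms)
    return content + texture + l_tv

flat = np.ones((4, 4))                    # constant image: no variation
checker = np.indices((4, 4)).sum(0) % 2   # checkerboard: maximal variation
print(tv_loss(flat), tv_loss(checker))

# Hypothetical per-layer (weight, loss) pairs.
print(total_loss([(1.0, 2.0), (0.5, 4.0)], [(2.0, 1.0)], 3.0))
```

A smooth image incurs no penalty while a noisy one is penalised heavily, which is why a term of this kind suppresses noise introduced during fusion.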

3.4 Implementation Details

In this part, the implementation details of our method are described. We
adopt a state-of-the-art semantic segmentation network, SegNet [18], to segment
the infrared images and generate masks for further processing. We use it to
segment the image into two categories, and the network is trained on 1000 images
from the TNO and INO data sets. To generate the mask, we segment only the
infrared image: since the infrared and visible image pairs have been registered,
the segmentation mask maps directly onto the visible image. In our
fusion network, as shown in Fig. 2, a pre-trained VGG-19 network is employed as
the feature extractor. For foreground fusion, we choose layer conv2_2 to extract
the content feature, and layers conv1_1 and conv2_1 to extract the texture feature.
For background fusion, we choose layer conv4_2 to extract the content feature,
and layers conv3_1, conv4_1 and conv5_1 to extract the texture feature. The mask is
down-sampled to match the feature maps of the different layers.
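The layer choices and the mask down-sampling can be sketched as follows. The layer names come from the text; everything else is an assumption for illustration: the sketch assumes the standard VGG-19 layout (size-preserving convolutions and a 2x2 pooling step with floor rounding after each of the first four blocks) and uses nearest-neighbour sampling, since the interpolation used for the mask is not stated. The names `LAYERS`, `feature_shape` and `downsample_mask` are hypothetical.

```python
import numpy as np

# Layer choices as stated in the text.
LAYERS = {
    "foreground": {"content": ["conv2_2"], "texture": ["conv1_1", "conv2_1"]},
    "background": {"content": ["conv4_2"], "texture": ["conv3_1", "conv4_1", "conv5_1"]},
}

def feature_shape(height, width, layer_name):
    """Spatial size of a VGG-19 feature map, assuming one 2x2 pooling per earlier block."""
    block = int(layer_name[len("conv")])  # "conv4_2" -> block 4
    for _ in range(block - 1):
        height, width = height // 2, width // 2
    return height, width

def downsample_mask(mask, layer_name):
    """Nearest-neighbour down-sampling of a binary mask to a layer's feature-map size."""
    h, w = mask.shape
    fh, fw = feature_shape(h, w, layer_name)
    rows = (np.arange(fh) * h) // fh
    cols = (np.arange(fw) * w) // fw
    return mask[np.ix_(rows, cols)]

# Hypothetical 360x480 mask with a rectangular target region.
mask = np.zeros((360, 480), dtype=np.uint8)
mask[100:200, 150:300] = 1

for region, groups in LAYERS.items():
    for name in groups["content"] + groups["texture"]:
        print(region, name, downsample_mask(mask, name).shape)
```

Nearest-neighbour sampling keeps the mask binary at every scale, so the down-sampled foreground and background masks remain complementary.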

4 Results and Comparison

In this section, the performance of our method will be evaluated by experiments

on common data sets and compared with other methods.


4.1 Results

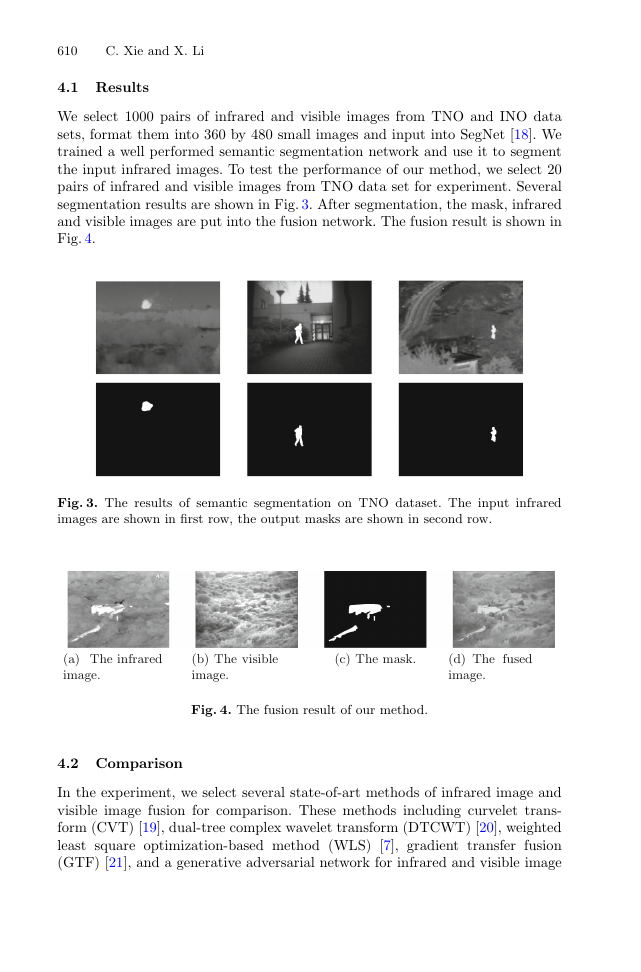

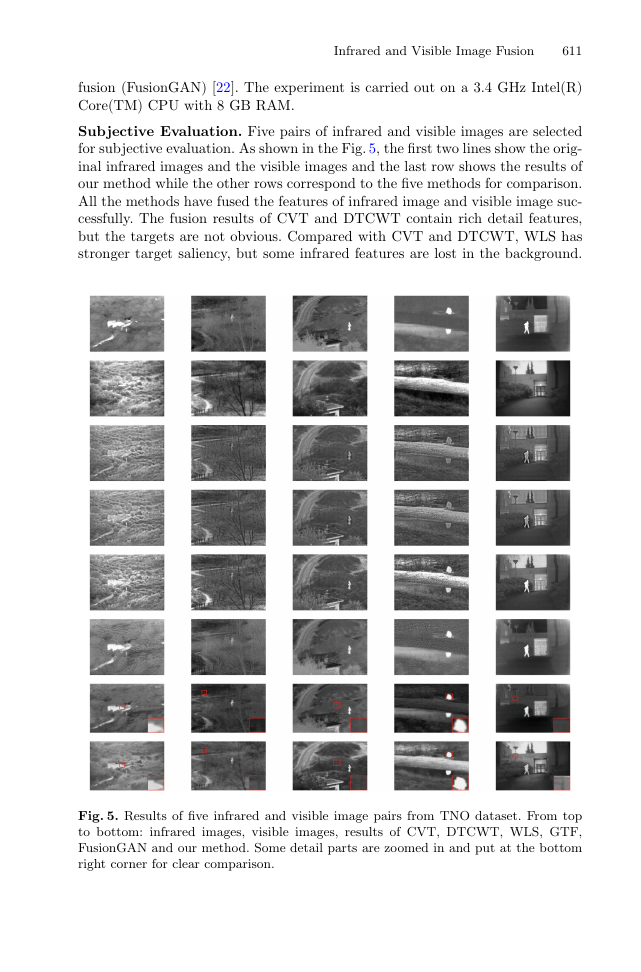

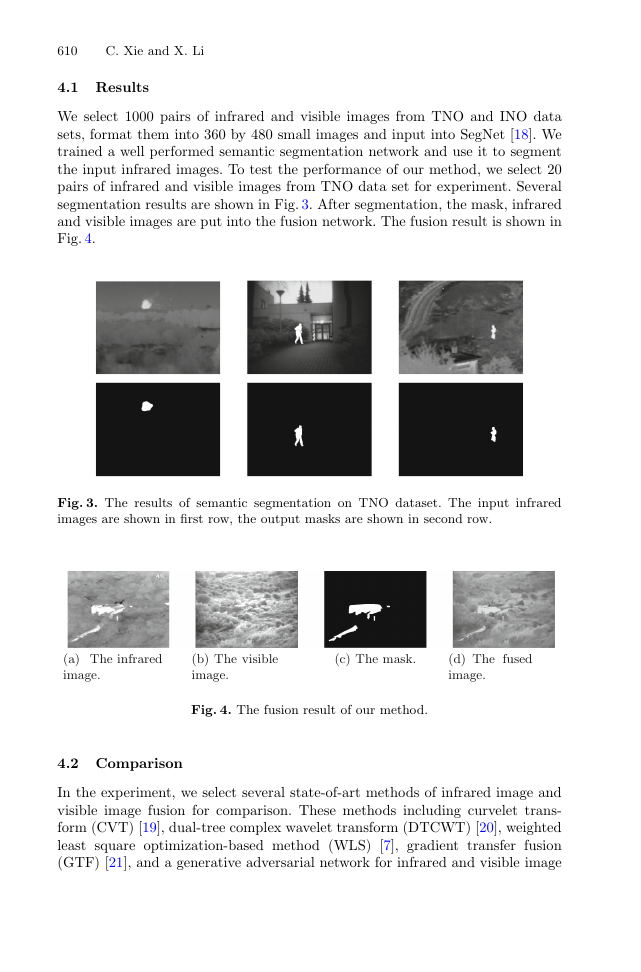

We select 1000 pairs of infrared and visible images from the TNO and INO data
sets, format them into 360 × 480 images, and feed them into SegNet [18]. The
trained semantic segmentation network performs well, and we use it to segment
the input infrared images. To test the performance of our method, we select 20
pairs of infrared and visible images from the TNO data set for the experiment. Several
segmentation results are shown in Fig. 3. After segmentation, the mask and the infrared
and visible images are fed into the fusion network. The fusion result is shown in
Fig. 4.

Fig. 3. The results of semantic segmentation on the TNO dataset. The input infrared
images are shown in the first row, and the output masks are shown in the second row.

(a) The infrared image. (b) The visible image. (c) The mask. (d) The fused image.

Fig. 4. The fusion result of our method.

4.2 Comparison

In the experiment, we select several state-of-the-art infrared and visible image
fusion methods for comparison. These methods include curvelet transform
(CVT) [19], dual-tree complex wavelet transform (DTCWT) [20], weighted
least square optimization-based method (WLS) [7], gradient transfer fusion
(GTF) [21], and a generative adversarial network for infrared and visible image
fusion (FusionGAN) [22]. The experiment is carried out on a 3.4 GHz Intel(R)
Core(TM) CPU with 8 GB RAM.

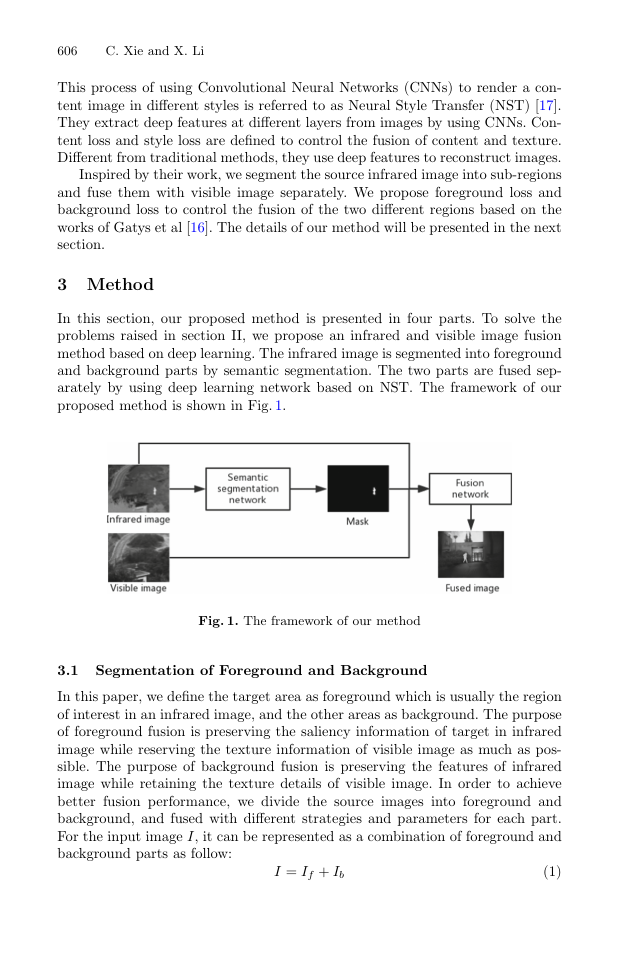

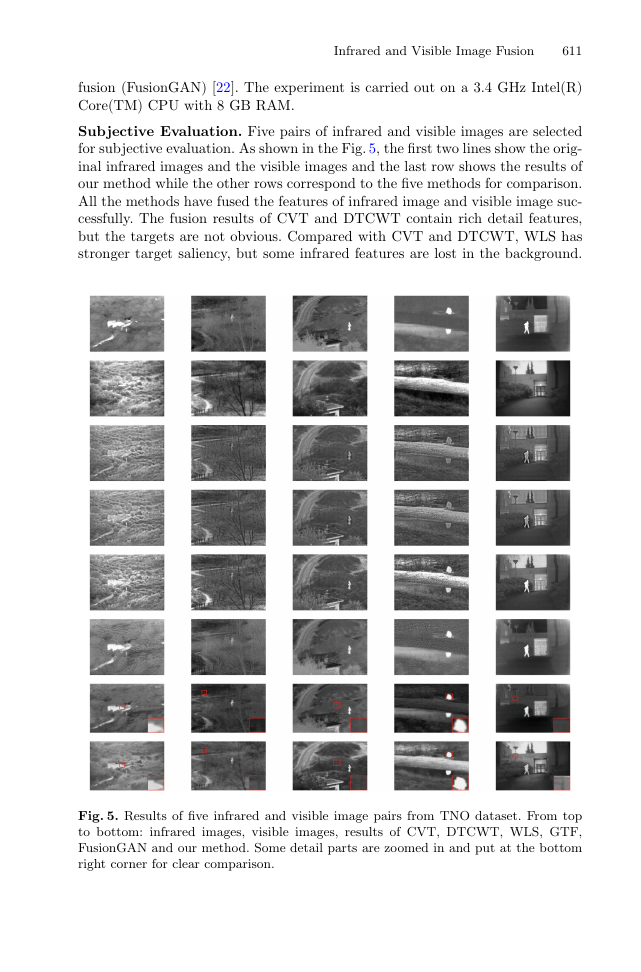

Subjective Evaluation. Five pairs of infrared and visible images are selected
for subjective evaluation. As shown in Fig. 5, the first two rows show the original
infrared and visible images, the last row shows the results of our method, and the
rows in between correspond to the five methods used for comparison.
All the methods fuse the features of the infrared and visible images successfully.
The fusion results of CVT and DTCWT contain rich detail features,
but the targets are not obvious. Compared with CVT and DTCWT, WLS has
stronger target saliency, but some infrared features are lost in the background.

Fig. 5. Results of five infrared and visible image pairs from TNO dataset. From top

to bottom: infrared images, visible images, results of CVT, DTCWT, WLS, GTF,

FusionGAN and our method. Some detail parts are zoomed in and put at the bottom

right corner for clear comparison.
