Alex Graves

Supervised Sequence Labelling with Recurrent Neural Networks

�

Studies in Computational Intelligence,Volume 385

Editor-in-Chief

Prof. Janusz Kacprzyk

Systems Research Institute

Polish Academy of Sciences

ul. Newelska 6

01-447 Warsaw

Poland

E-mail: kacprzyk@ibspan.waw.pl

Further volumes of this series can be found on our

homepage: springer.com

Vol. 362. Pascal Bouvry, Horacio González-Vélez, and

Joanna Kolodziej (Eds.)

Intelligent Decision Systems in Large-Scale Distributed

Environments, 2011

ISBN 978-3-642-21270-3

Vol. 363. Kishan G. Mehrotra, Chilukuri Mohan, Jae C. Oh,

Pramod K.Varshney, and Moonis Ali (Eds.)

Developing Concepts in Applied Intelligence, 2011

ISBN 978-3-642-21331-1

Vol. 364. Roger Lee (Ed.)

Computer and Information Science, 2011

ISBN 978-3-642-21377-9

Vol. 365. Roger Lee (Ed.)

Computers, Networks, Systems, and Industrial

Engineering 2011, 2011

ISBN 978-3-642-21374-8

Vol. 366. Mario Köppen, Gerald Schaefer, and

Ajith Abraham (Eds.)

Intelligent Computational Optimization in Engineering, 2011

ISBN 978-3-642-21704-3

Vol. 367. Gabriel Luque and Enrique Alba

Parallel Genetic Algorithms, 2011

ISBN 978-3-642-22083-8

Vol. 368. Roger Lee (Ed.)

Software Engineering, Artificial Intelligence, Networking and

Parallel/Distributed Computing 2011, 2011

ISBN 978-3-642-22287-0

Vol. 369. Dominik Ry_zko, Piotr Gawrysiak, Henryk Rybinski,

and Marzena Kryszkiewicz (Eds.)

Emerging Intelligent Technologies in Industry, 2011

ISBN 978-3-642-22731-8

Vol. 370. Alexander Mehler, Kai-Uwe Kühnberger,

Henning Lobin, Harald Lüngen, Angelika Storrer, and

Andreas Witt (Eds.)

Modeling, Learning, and Processing of Text Technological

Data Structures, 2011

ISBN 978-3-642-22612-0

Vol. 371. Leonid Perlovsky, Ross Deming, and Roman Ilin

(Eds.)

Emotional Cognitive Neural Algorithms with Engineering

Applications, 2011

ISBN 978-3-642-22829-2

Vol. 372. Ant´onio E. Ruano and

Annam´aria R. V´arkonyi-K´oczy (Eds.)

New Advances in Intelligent Signal Processing, 2011

ISBN 978-3-642-11738-1

Vol. 373. Oleg Okun, Giorgio Valentini, and Matteo Re (Eds.)

Ensembles in Machine Learning Applications, 2011

ISBN 978-3-642-22909-1

Vol. 374. Dimitri Plemenos and Georgios Miaoulis (Eds.)

Intelligent Computer Graphics 2011, 2011

ISBN 978-3-642-22906-0

Vol. 375. Marenglen Biba and Fatos Xhafa (Eds.)

Learning Structure and Schemas from Documents, 2011

ISBN 978-3-642-22912-1

Vol. 376. Toyohide Watanabe and Lakhmi C. Jain (Eds.)

Innovations in Intelligent Machines – 2, 2012

ISBN 978-3-642-23189-6

Vol. 377. Roger Lee (Ed.)

Software Engineering Research, Management

and Applications 2011, 2011

ISBN 978-3-642-23201-5

Vol. 378. János Fodor, Ryszard Klempous, and

Carmen Paz Suárez Araujo (Eds.)

Recent Advances in Intelligent Engineering Systems, 2011

ISBN 978-3-642-23228-2

Vol. 379. Ferrante Neri, Carlos Cotta, and

Pablo Moscato (Eds.)

Handbook of Memetic Algorithms, 2012

ISBN 978-3-642-23246-6

Vol. 380. Anthony Brabazon, Michael O’Neill, and

Dietmar Maringer (Eds.)

Natural Computing in Computational Finance, 2011

ISBN 978-3-642-23335-7

Vol. 381. Rados

Chao-Fu Hong, and Ngoc Thanh Nguyen (Eds.)

Semantic Methods for Knowledge Management and

Communication, 2011

ISBN 978-3-642-23417-0

Vol. 382. F.M.T. Brazier, Kees Nieuwenhuis, Gregor Pavlin,

Martijn Warnier, and Costin Badica (Eds.)

Intelligent Distributed Computing V, 2011

ISBN 978-3-642-24012-6

Vol. 383. Takayuki Ito, Minjie Zhang, Valentin Robu,

Shaheen Fatima, and Tokuro Matsuo(Eds.)

New Trends in Agent-based Complex Automated

Negotiations, 2011

ISBN 978-3-642-24695-1

Vol. 384. Daphna Weinshall, J¨orn Anem¨uller,

and Luc van Gool (Eds.)

Detection and Identification of Rare Audiovisual Cues, 2012

ISBN 978-3-642-24033-1

Vol. 385. Alex Graves

Supervised Sequence Labelling with Recurrent Neural

Networks, 2012

ISBN 978-3-642-24796-5

law Katarzyniak, Tzu-Fu Chiu,

�

Alex Graves

Supervised Sequence Labelling

with Recurrent Neural Networks

123

�

Author

Dr. Alex Graves

University of Toronto

Department of Computer Science

Toronto, Ontario

Canada

ISSN 1860-949X

ISBN 978-3-642-24796-5

DOI 10.1007/978-3-642-24797-2

Springer Heidelberg New York Dordrecht London

e-ISSN 1860-9503

e-ISBN 978-3-642-24797-2

Library of Congress Control Number: 2011940011

c Springer-Verlag Berlin Heidelberg 2012

This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part

of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations,

recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or

information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar

methodology now known or hereafter developed. Exempted from this legal reservation are brief ex-

cerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose

of being entered and executed on a computer system, for exclusive use by the purchaser of the work.

Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright

Law of the Publisher’s location, in its current version, and permission for use must always be obtained

from Springer. Permissions for use may be obtained through RightsLink at the Copyright Clearance

Center. Violations are liable to prosecution under the respective Copyright Law.

The use of general descriptive names, registered names, trademarks, service marks, etc. in this publi-

cation does not imply, even in the absence of a specific statement, that such names are exempt from the

relevant protective laws and regulations and therefore free for general use.

While the advice and information in this book are believed to be true and accurate at the date of

publication, neither the authors nor the editors nor the publisher can accept any legal responsibility for

any errors or omissions that may be made. The publisher makes no warranty, express or implied, with

respect to the material contained herein.

Printed on acid-free paper

Springer is part of Springer Science+Business Media (www.springer.com)

�

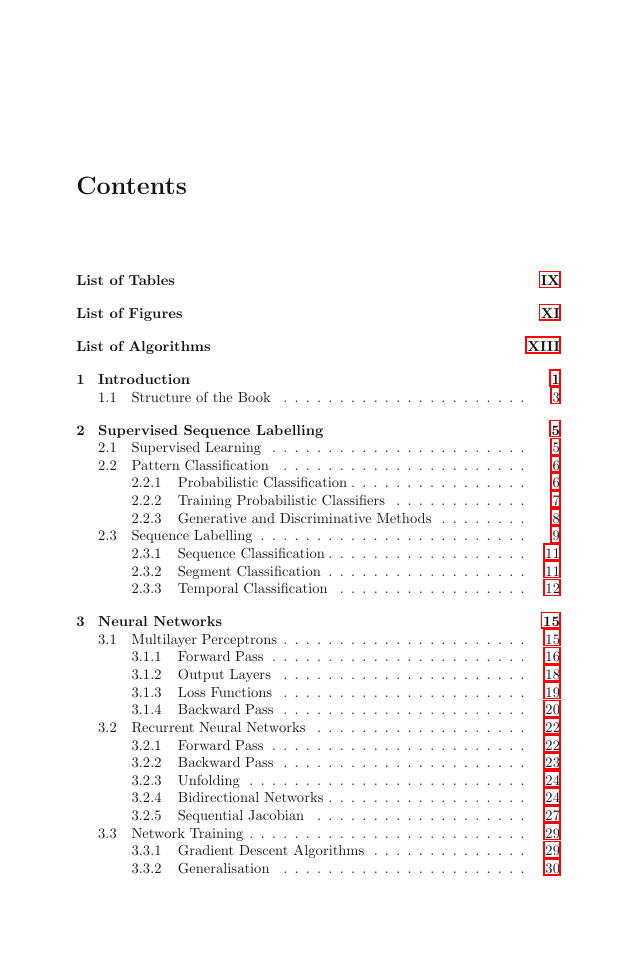

Contents

List of Tables

List of Figures

List of Algorithms

1 Introduction

1.1 Structure of the Book . . . . . . . . . . . . . . . . . . . . . .

2 Supervised Sequence Labelling

2.1 Supervised Learning . . . . . . . . . . . . . . . . . . . . . . .

2.2 Pattern Classification . . . . . . . . . . . . . . . . . . . . . .

2.2.1 Probabilistic Classification . . . . . . . . . . . . . . . .

2.2.2 Training Probabilistic Classifiers . . . . . . . . . . . .

2.2.3 Generative and Discriminative Methods . . . . . . . .

2.3 Sequence Labelling . . . . . . . . . . . . . . . . . . . . . . . .

Sequence Classification . . . . . . . . . . . . . . . . . .

2.3.1

2.3.2

Segment Classification . . . . . . . . . . . . . . . . . .

2.3.3 Temporal Classification . . . . . . . . . . . . . . . . .

3 Neural Networks

3.2 Recurrent Neural Networks

3.1 Multilayer Perceptrons . . . . . . . . . . . . . . . . . . . . . .

3.1.1 Forward Pass . . . . . . . . . . . . . . . . . . . . . . .

3.1.2 Output Layers

. . . . . . . . . . . . . . . . . . . . . .

3.1.3 Loss Functions . . . . . . . . . . . . . . . . . . . . . .

3.1.4 Backward Pass . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . .

3.2.1 Forward Pass . . . . . . . . . . . . . . . . . . . . . . .

3.2.2 Backward Pass . . . . . . . . . . . . . . . . . . . . . .

3.2.3 Unfolding . . . . . . . . . . . . . . . . . . . . . . . . .

3.2.4 Bidirectional Networks . . . . . . . . . . . . . . . . . .

Sequential Jacobian . . . . . . . . . . . . . . . . . . .

3.2.5

3.3 Network Training . . . . . . . . . . . . . . . . . . . . . . . . .

3.3.1 Gradient Descent Algorithms . . . . . . . . . . . . . .

3.3.2 Generalisation . . . . . . . . . . . . . . . . . . . . . .

IX

XI

XIII

1

3

5

5

6

6

7

8

9

11

11

12

15

15

16

18

19

20

22

22

23

24

24

27

29

29

30

�

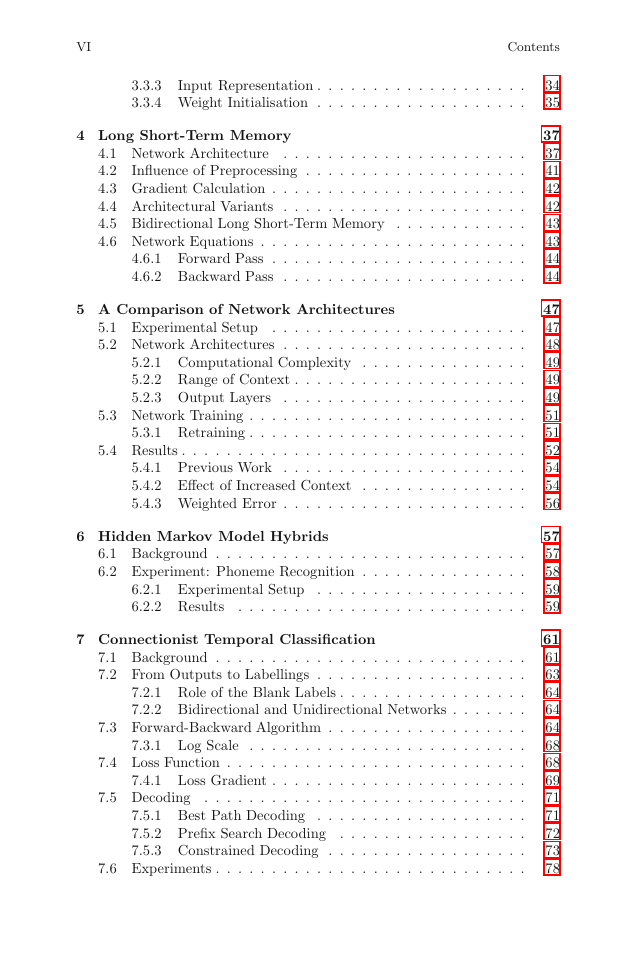

VI

Contents

3.3.3

Input Representation . . . . . . . . . . . . . . . . . . .

3.3.4 Weight Initialisation . . . . . . . . . . . . . . . . . . .

4 Long Short-Term Memory

. . . . . . . . . . . . . . . . . . . . . .

4.1 Network Architecture

4.2

Influence of Preprocessing . . . . . . . . . . . . . . . . . . . .

4.3 Gradient Calculation . . . . . . . . . . . . . . . . . . . . . . .

4.4 Architectural Variants . . . . . . . . . . . . . . . . . . . . . .

4.5 Bidirectional Long Short-Term Memory . . . . . . . . . . . .

4.6 Network Equations . . . . . . . . . . . . . . . . . . . . . . . .

4.6.1 Forward Pass . . . . . . . . . . . . . . . . . . . . . . .

4.6.2 Backward Pass . . . . . . . . . . . . . . . . . . . . . .

5 A Comparison of Network Architectures

5.1 Experimental Setup . . . . . . . . . . . . . . . . . . . . . . .

5.2 Network Architectures . . . . . . . . . . . . . . . . . . . . . .

5.2.1 Computational Complexity . . . . . . . . . . . . . . .

5.2.2 Range of Context . . . . . . . . . . . . . . . . . . . . .

5.2.3 Output Layers

. . . . . . . . . . . . . . . . . . . . . .

5.3 Network Training . . . . . . . . . . . . . . . . . . . . . . . . .

5.3.1 Retraining . . . . . . . . . . . . . . . . . . . . . . . . .

5.4 Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5.4.1 Previous Work . . . . . . . . . . . . . . . . . . . . . .

5.4.2 Effect of Increased Context . . . . . . . . . . . . . . .

5.4.3 Weighted Error . . . . . . . . . . . . . . . . . . . . . .

6 Hidden Markov Model Hybrids

6.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . .

6.2 Experiment: Phoneme Recognition . . . . . . . . . . . . . . .

6.2.1 Experimental Setup . . . . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . . . . . . . . .

6.2.2 Results

7 Connectionist Temporal Classification

7.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7.2 From Outputs to Labellings . . . . . . . . . . . . . . . . . . .

7.2.1 Role of the Blank Labels . . . . . . . . . . . . . . . . .

7.2.2 Bidirectional and Unidirectional Networks . . . . . . .

7.3 Forward-Backward Algorithm . . . . . . . . . . . . . . . . . .

7.3.1 Log Scale . . . . . . . . . . . . . . . . . . . . . . . . .

7.4 Loss Function . . . . . . . . . . . . . . . . . . . . . . . . . . .

7.4.1 Loss Gradient . . . . . . . . . . . . . . . . . . . . . . .

7.5 Decoding . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7.5.1 Best Path Decoding . . . . . . . . . . . . . . . . . . .

7.5.2 Prefix Search Decoding . . . . . . . . . . . . . . . . .

7.5.3 Constrained Decoding . . . . . . . . . . . . . . . . . .

7.6 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

35

37

37

41

42

42

43

43

44

44

47

47

48

49

49

49

51

51

52

54

54

56

57

57

58

59

59

61

61

63

64

64

64

68

68

69

71

71

72

73

78

�

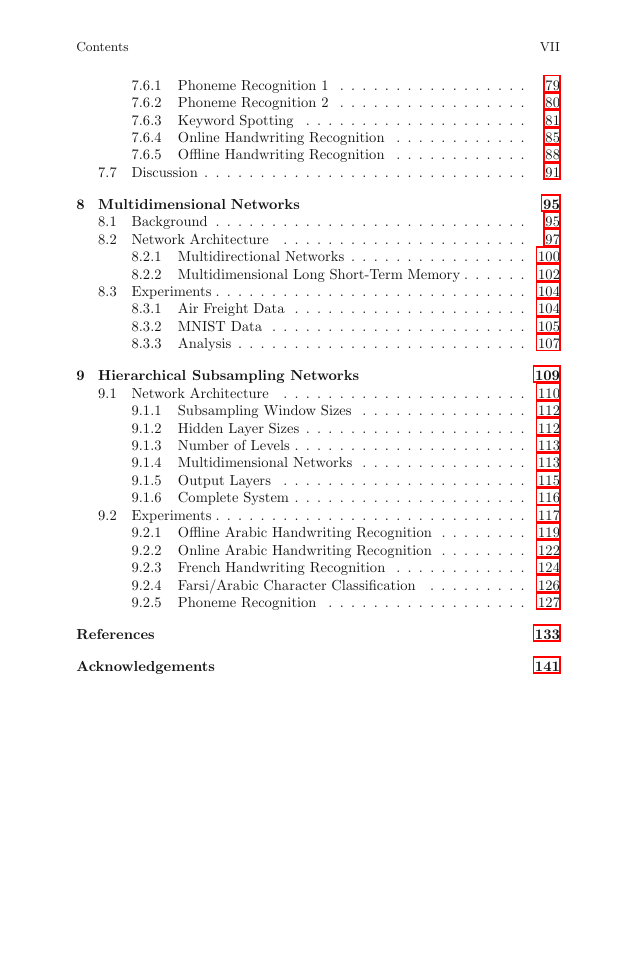

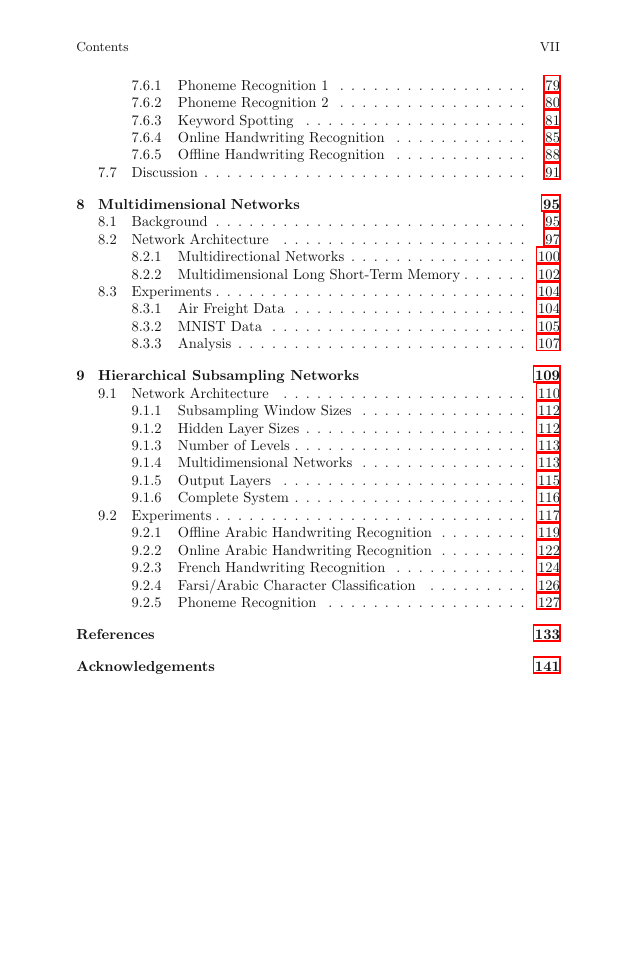

Contents

7.6.1 Phoneme Recognition 1 . . . . . . . . . . . . . . . . .

7.6.2 Phoneme Recognition 2 . . . . . . . . . . . . . . . . .

7.6.3 Keyword Spotting . . . . . . . . . . . . . . . . . . . .

7.6.4 Online Handwriting Recognition . . . . . . . . . . . .

7.6.5 Offline Handwriting Recognition . . . . . . . . . . . .

7.7 Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

VII

79

80

81

85

88

91

8 Multidimensional Networks

8.1 Background . . . . . . . . . . . . . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . . . . . . . .

8.2 Network Architecture

95

95

97

8.2.1 Multidirectional Networks . . . . . . . . . . . . . . . . 100

8.2.2 Multidimensional Long Short-Term Memory . . . . . . 102

8.3 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

8.3.1 Air Freight Data . . . . . . . . . . . . . . . . . . . . . 104

8.3.2 MNIST Data . . . . . . . . . . . . . . . . . . . . . . . 105

8.3.3 Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . 107

9 Hierarchical Subsampling Networks

9.1 Network Architecture

109

. . . . . . . . . . . . . . . . . . . . . . 110

9.1.1

Subsampling Window Sizes . . . . . . . . . . . . . . . 112

9.1.2 Hidden Layer Sizes . . . . . . . . . . . . . . . . . . . . 112

9.1.3 Number of Levels . . . . . . . . . . . . . . . . . . . . . 113

9.1.4 Multidimensional Networks . . . . . . . . . . . . . . . 113

9.1.5 Output Layers

. . . . . . . . . . . . . . . . . . . . . . 115

9.1.6 Complete System . . . . . . . . . . . . . . . . . . . . . 116

9.2 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

9.2.1 Offline Arabic Handwriting Recognition . . . . . . . . 119

9.2.2 Online Arabic Handwriting Recognition . . . . . . . . 122

9.2.3 French Handwriting Recognition . . . . . . . . . . . . 124

9.2.4 Farsi/Arabic Character Classification . . . . . . . . . 126

9.2.5 Phoneme Recognition . . . . . . . . . . . . . . . . . . 127

References

Acknowledgements

133

141

�

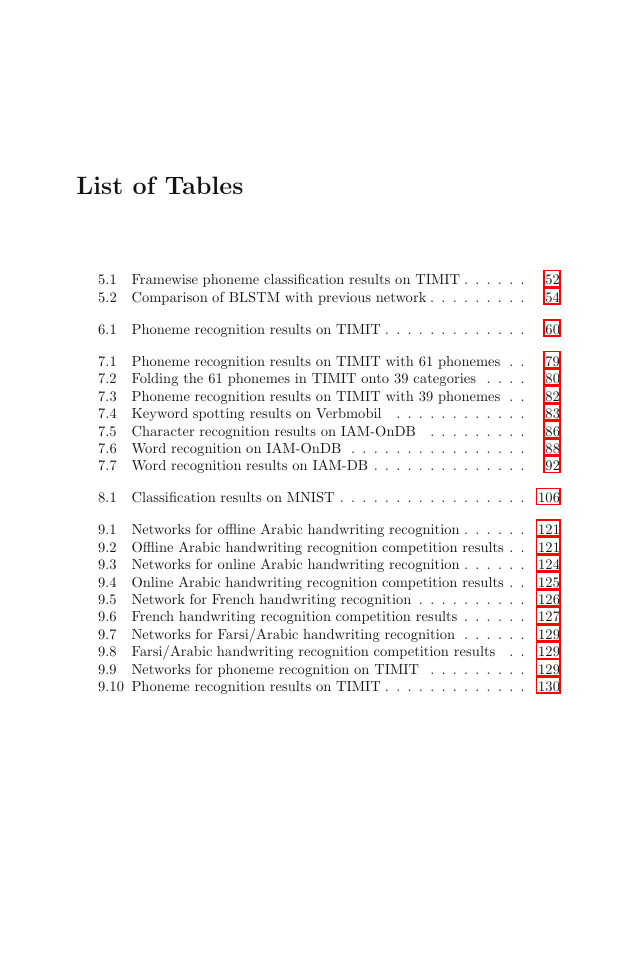

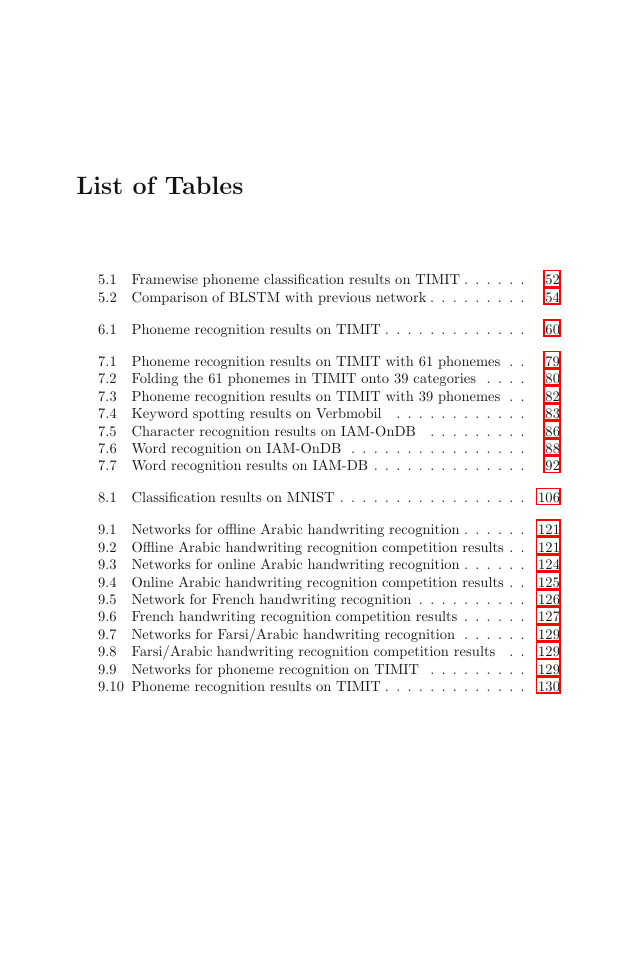

List of Tables

5.1 Framewise phoneme classification results on TIMIT . . . . . .

5.2 Comparison of BLSTM with previous network . . . . . . . . .

6.1 Phoneme recognition results on TIMIT . . . . . . . . . . . . .

7.1 Phoneme recognition results on TIMIT with 61 phonemes . .

7.2 Folding the 61 phonemes in TIMIT onto 39 categories . . . .

7.3 Phoneme recognition results on TIMIT with 39 phonemes . .

7.4 Keyword spotting results on Verbmobil

. . . . . . . . . . . .

7.5 Character recognition results on IAM-OnDB . . . . . . . . .

7.6 Word recognition on IAM-OnDB . . . . . . . . . . . . . . . .

7.7 Word recognition results on IAM-DB . . . . . . . . . . . . . .

52

54

60

79

80

82

83

86

88

92

8.1 Classification results on MNIST . . . . . . . . . . . . . . . . . 106

9.1 Networks for offline Arabic handwriting recognition . . . . . . 121

9.2 Offline Arabic handwriting recognition competition results . . 121

9.3 Networks for online Arabic handwriting recognition . . . . . . 124

9.4 Online Arabic handwriting recognition competition results . . 125

9.5 Network for French handwriting recognition . . . . . . . . . . 126

9.6 French handwriting recognition competition results . . . . . . 127

9.7 Networks for Farsi/Arabic handwriting recognition . . . . . . 129

9.8 Farsi/Arabic handwriting recognition competition results

. . 129

9.9 Networks for phoneme recognition on TIMIT . . . . . . . . . 129

9.10 Phoneme recognition results on TIMIT . . . . . . . . . . . . . 130

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc