LMS 算法源程序:

%channel system order

sysorder = 5 ;

% Number of system points

N=2000;

inp = randn(N,1);

n = randn(N,1);

[b,a] = butter(2,0.25);

Gz = tf(b,a,-1);

%This function is submitted to make inverse Z-transform (Matlab central file

exchange)

%The first sysorder weight value

%h=ldiv(b,a,sysorder)';

% if you use ldiv this will give h :filter weights to be

h= [0.0976; 0.2873; 0.3360; 0.2210; 0.0964;];

y = lsim(Gz,inp);

%add some noise

n = n * std(y)/(10*std(n));

d = y + n;

totallength=size(d,1);

%Take 60 points for training

N=60 ;

%begin of algorithm

w = zeros ( sysorder , 1 ) ;

for n = sysorder : N

u = inp(n:-1:n-sysorder+1) ;

y(n)= w' * u;

e(n) = d(n) - y(n) ;

% Start with big mu for speeding the convergence then slow down to reach the correct

weights

if n < 20

mu=0.32;

else

mu=0.15;

end

w = w + mu * u * e(n) ;

end

%check of results

for n = N+1 : totallength

u = inp(n:-1:n-sysorder+1) ;

y(n) = w' * u ;

e(n) = d(n) - y(n) ;

end

�

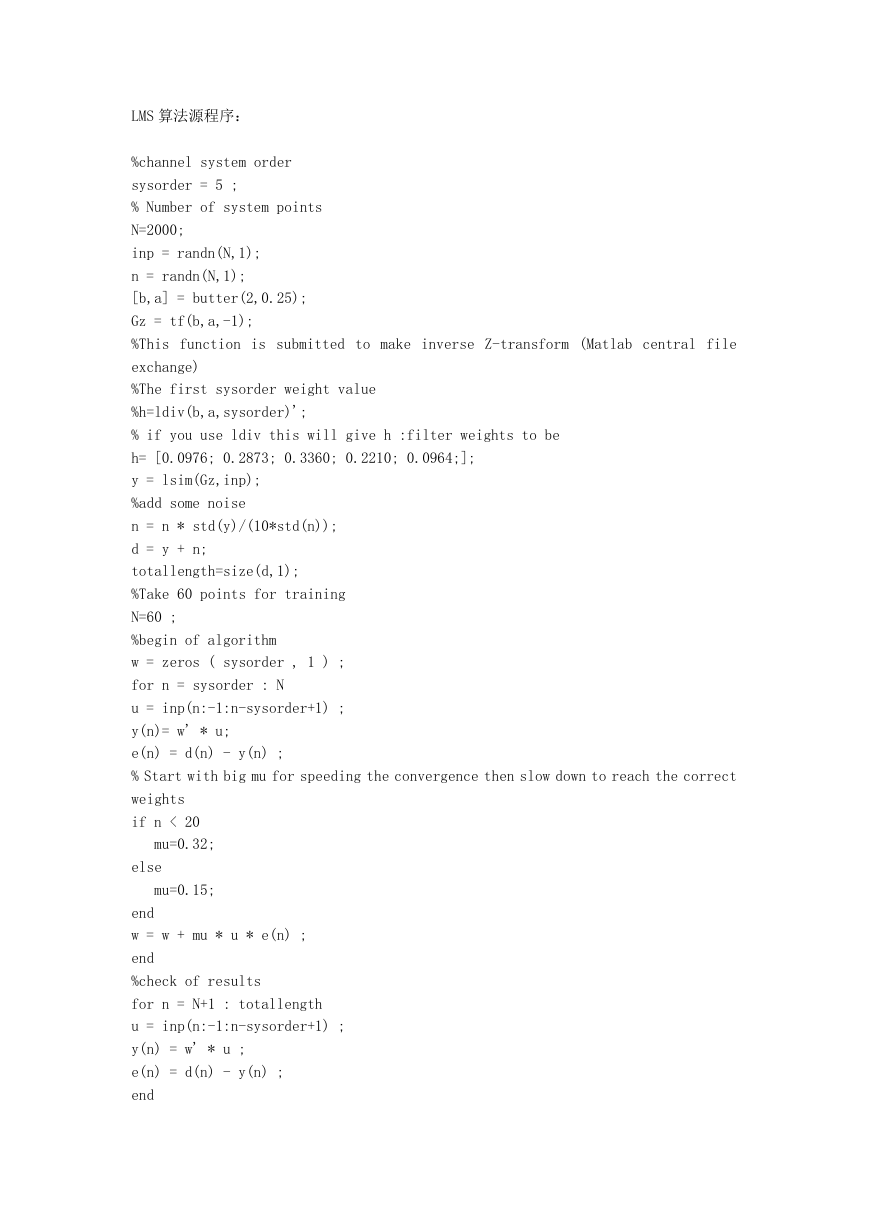

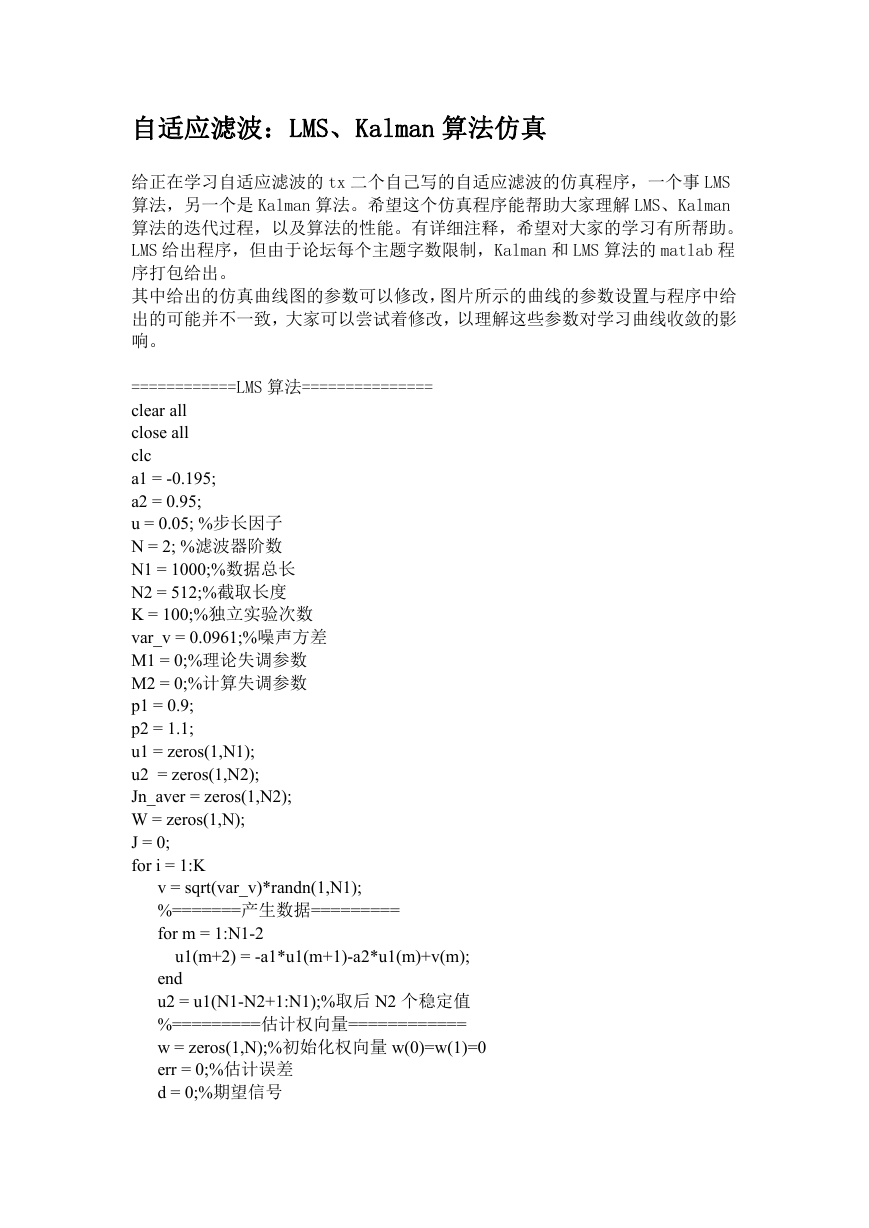

hold on

plot(d)

plot(y,'r');

title('System output') ;

xlabel('Samples')

ylabel('True and estimated output')

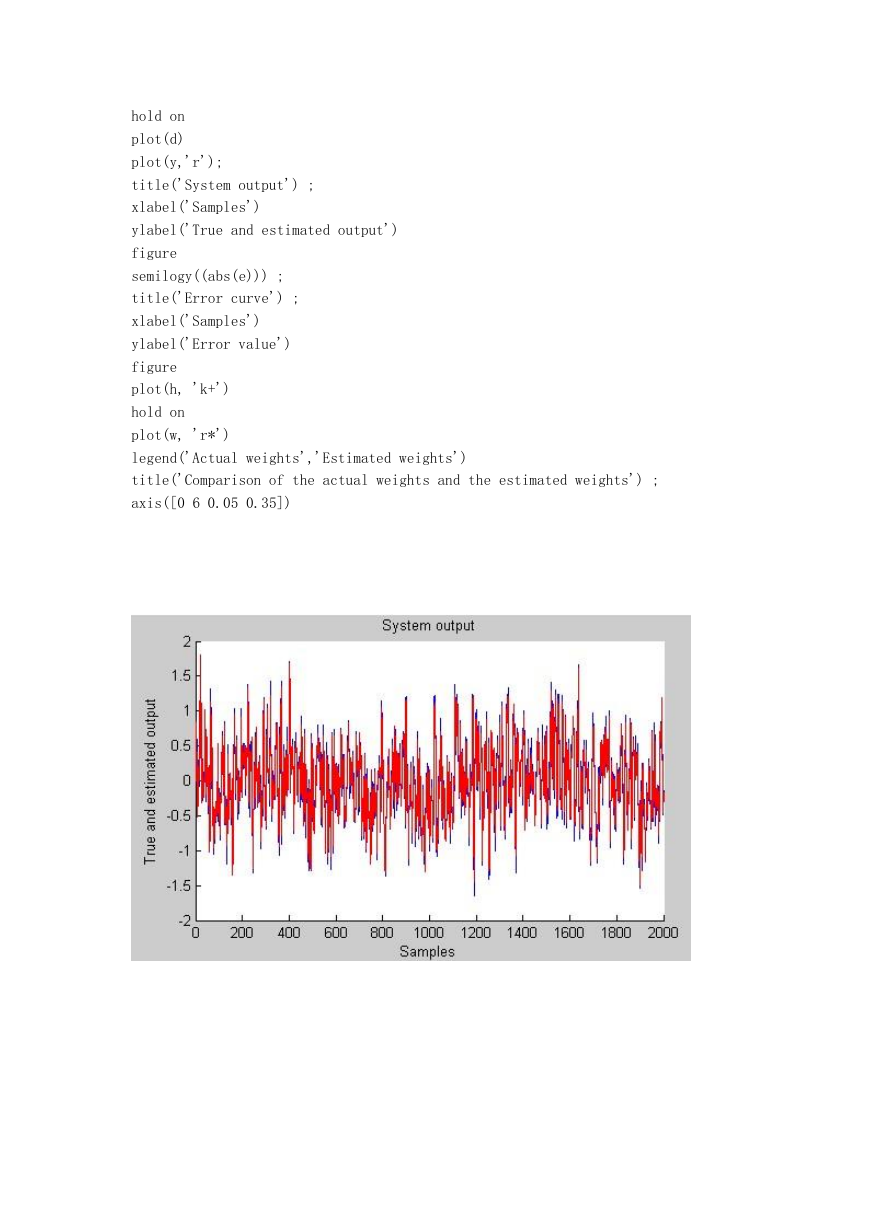

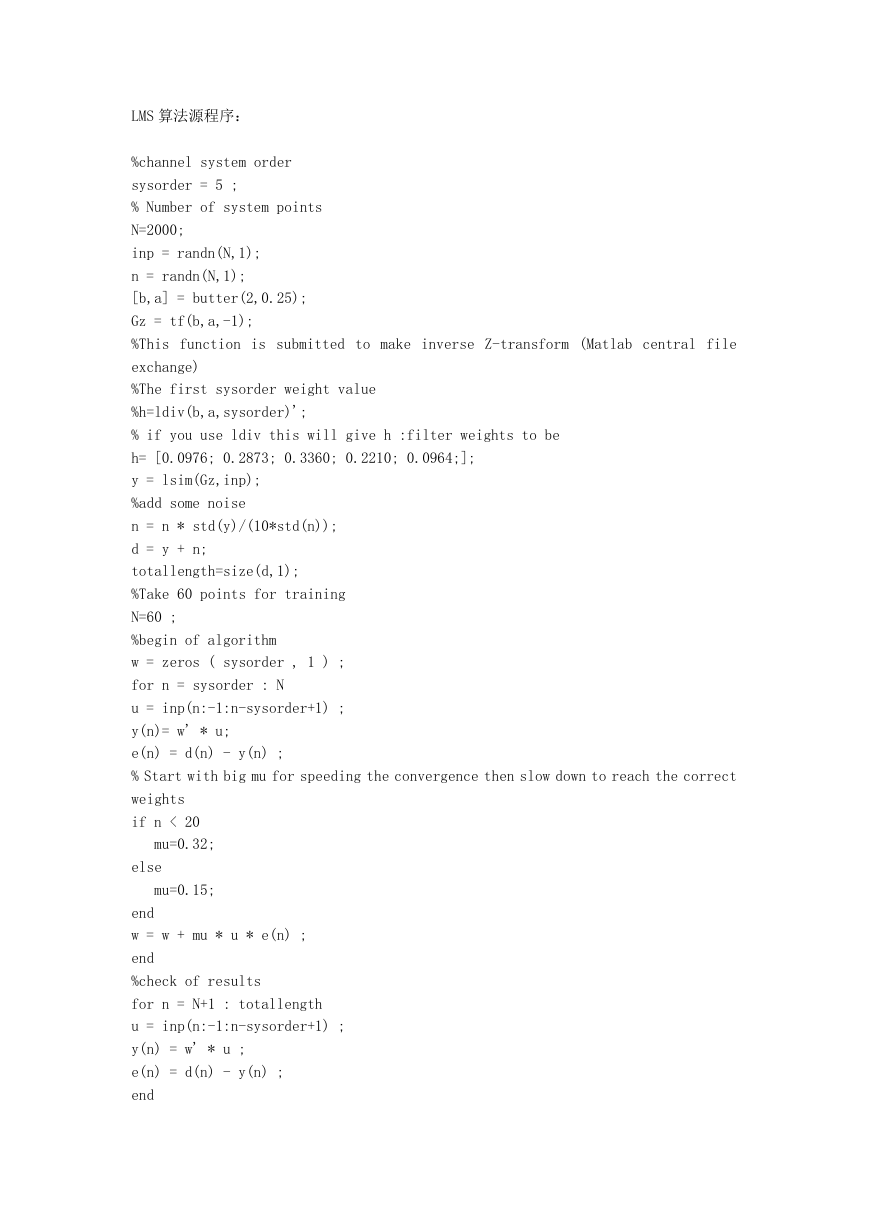

figure

semilogy((abs(e))) ;

title('Error curve') ;

xlabel('Samples')

ylabel('Error value')

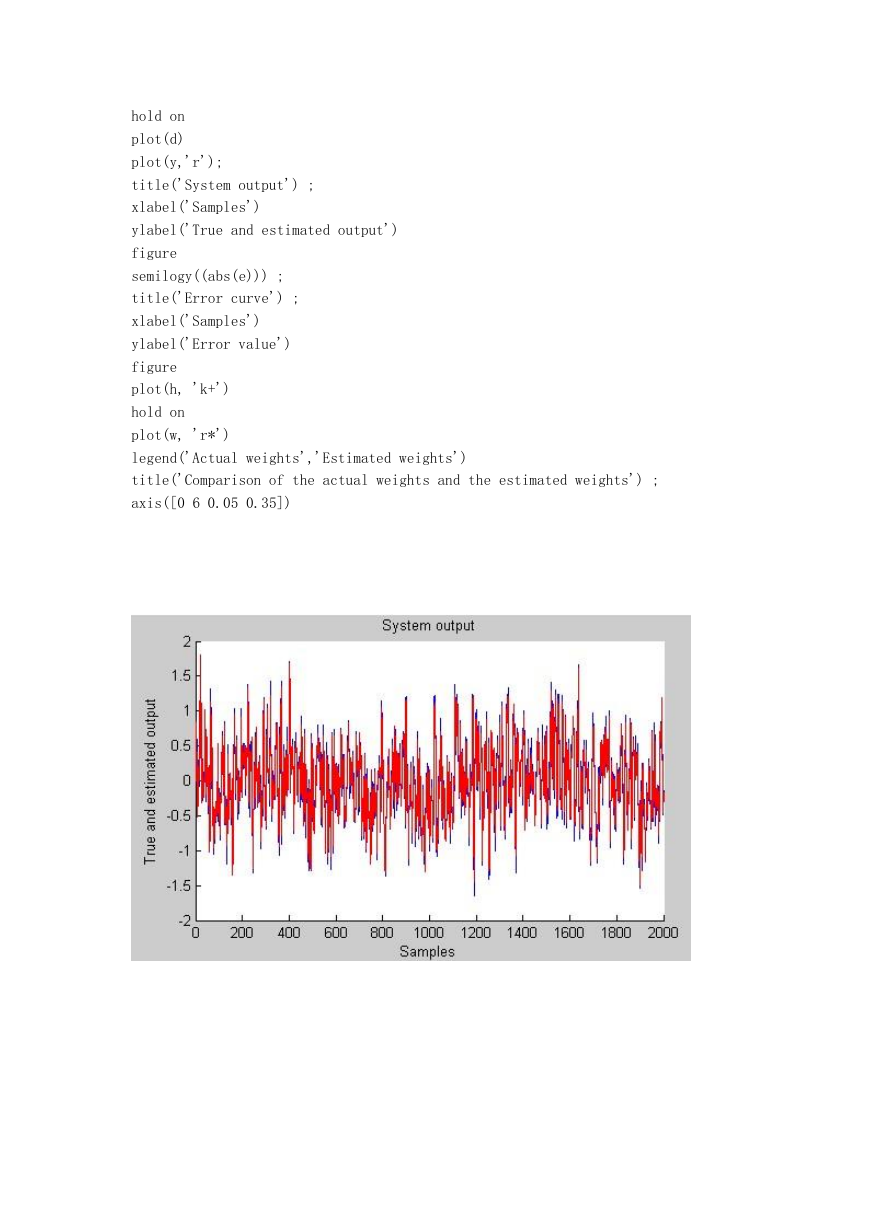

figure

plot(h, 'k+')

hold on

plot(w, 'r*')

legend('Actual weights','Estimated weights')

title('Comparison of the actual weights and the estimated weights') ;

axis([0 6 0.05 0.35])

�

�

RLS 算法源程序:

randn('seed', 0) ;

rand('seed', 0) ;

NoOfData = 8000 ; % Set no of data points used for training

Order = 32 ; % Set the adaptive filter order

Lambda = 0.98 ; % Set the forgetting factor

Delta = 0.001 ; % R initialized to Delta*I

x = randn(NoOfData, 1) ;% Input assumed to be white

h = rand(Order, 1) ; % System picked randomly

d = filter(h, 1, x) ; % Generate output (desired signal)

% Initialize RLS

P = Delta * eye ( Order, Order ) ;

w = zeros ( Order, 1 ) ;

% RLS Adaptation

for n = Order : NoOfData ;

u = x(n:-1:n-Order+1) ;

pi_ = u' * P ;

k = Lambda + pi_ * u ;

K = pi_'/k;

e(n) = d(n) - w' * u ;

w = w + K * e(n) ;

PPrime = K * pi_ ;

P = ( P - PPrime ) / Lambda ;

w_err(n) = norm(h - w) ;

end ;

% Plot results

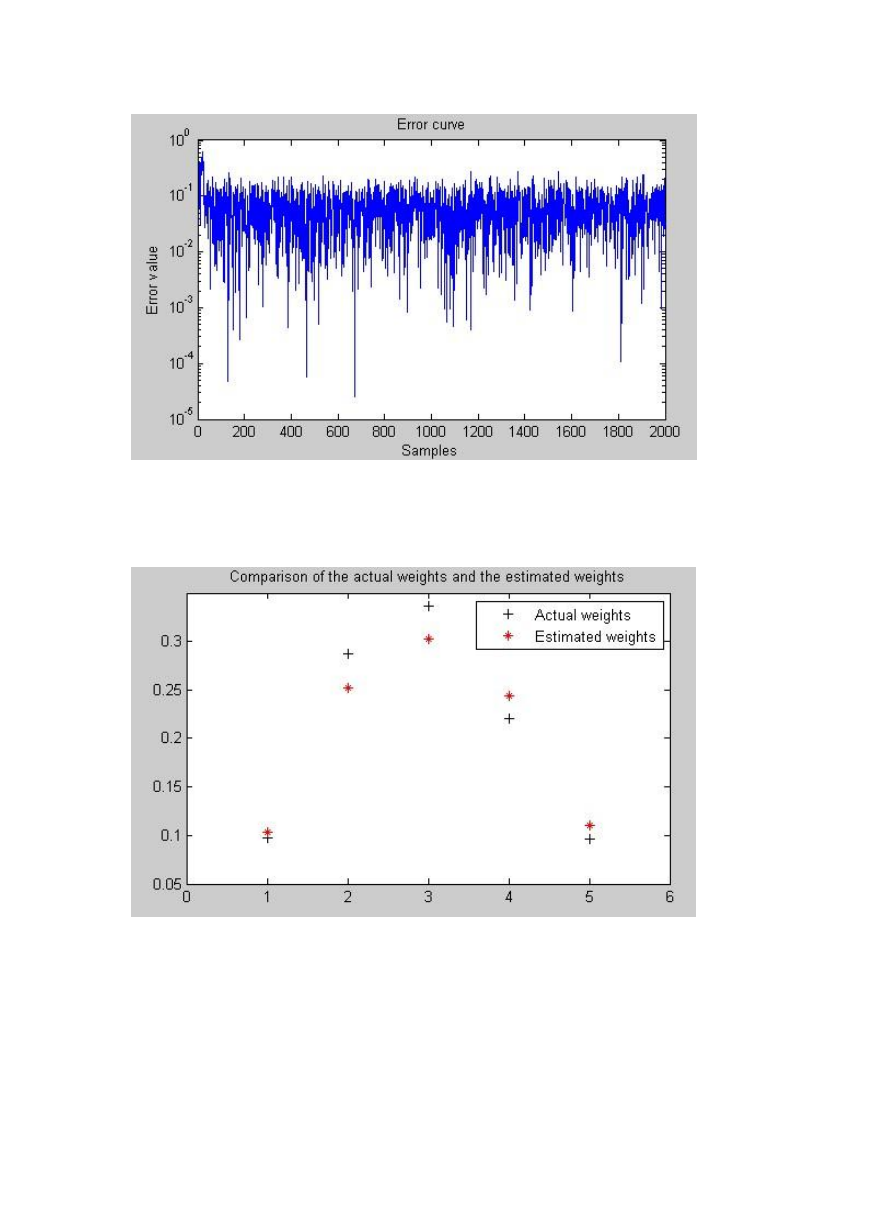

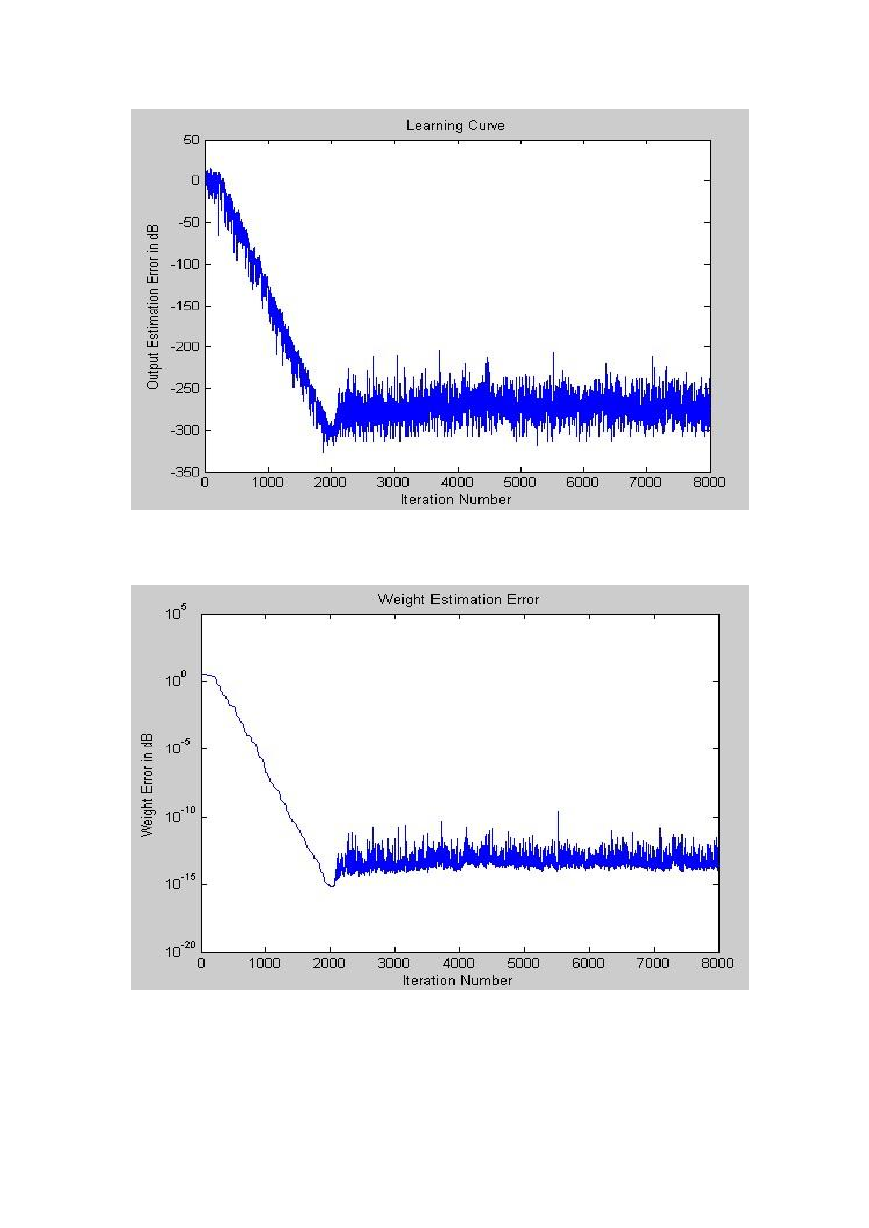

figure ;

plot(20*log10(abs(e))) ;

title('Learning Curve') ;

xlabel('Iteration Number') ;

ylabel('Output Estimation Error in dB') ;

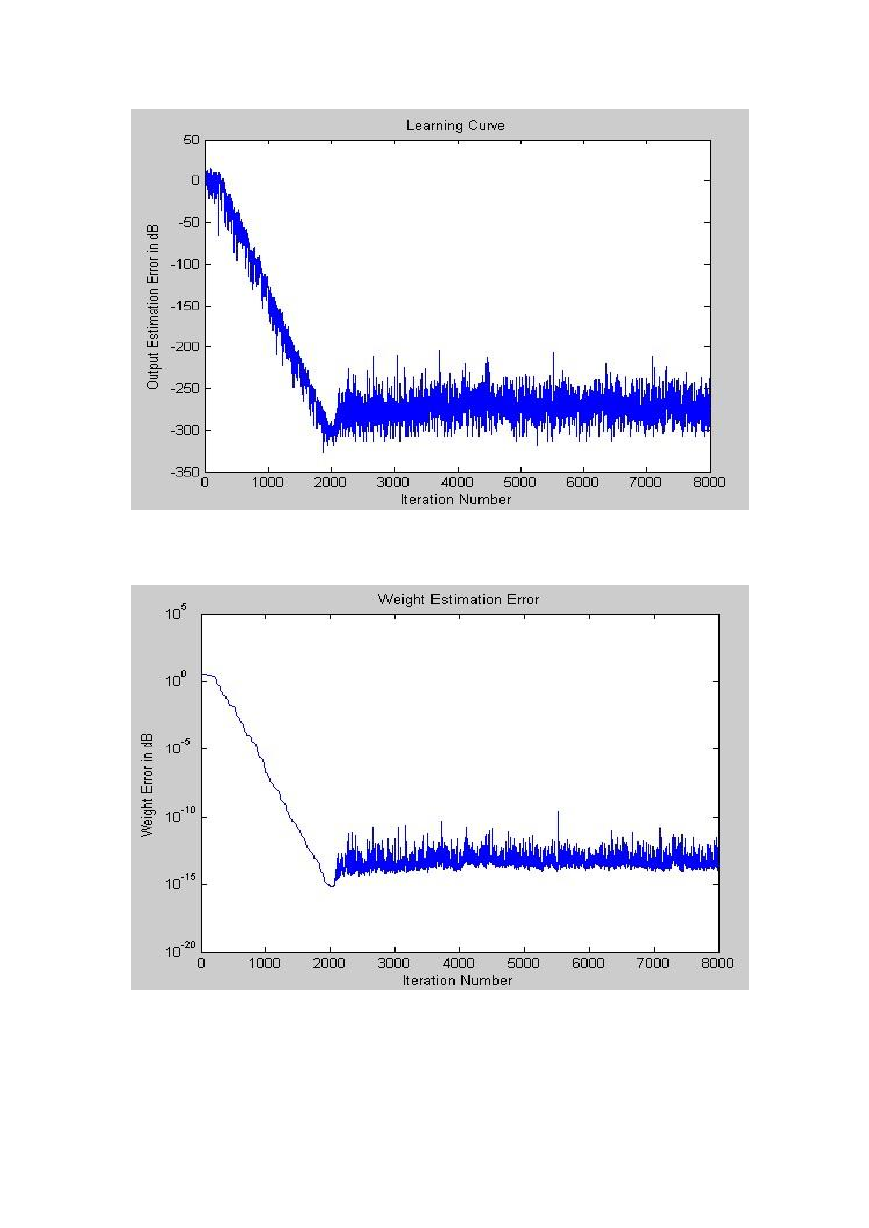

figure ;

semilogy(w_err) ;

title('Weight Estimation Error') ;

xlabel('Iteration Number') ;

ylabel('Weight Error in dB') ;

�

�

自适应滤波:LMS、Kalman 算法仿真

给正在学习自适应滤波的 tx 二个自己写的自适应滤波的仿真程序,一个事 LMS

算法,另一个是 Kalman 算法。希望这个仿真程序能帮助大家理解 LMS、Kalman

算法的迭代过程,以及算法的性能。有详细注释,希望对大家的学习有所帮助。

LMS 给出程序,但由于论坛每个主题字数限制,Kalman 和 LMS 算法的 matlab 程

序打包给出。

其中给出的仿真曲线图的参数可以修改,图片所示的曲线的参数设置与程序中给

出的可能并不一致,大家可以尝试着修改,以理解这些参数对学习曲线收敛的影

响。

============LMS 算法===============

clear all

close all

clc

a1 = -0.195;

a2 = 0.95;

u = 0.05; %步长因子

N = 2; %滤波器阶数

N1 = 1000;%数据总长

N2 = 512;%截取长度

K = 100;%独立实验次数

var_v = 0.0961;%噪声方差

M1 = 0;%理论失调参数

M2 = 0;%计算失调参数

p1 = 0.9;

p2 = 1.1;

u1 = zeros(1,N1);

u2 = zeros(1,N2);

Jn_aver = zeros(1,N2);

W = zeros(1,N);

J = 0;

for i = 1:K

v = sqrt(var_v)*randn(1,N1);

%=======产生数据=========

for m = 1:N1-2

u1(m+2) = -a1*u1(m+1)-a2*u1(m)+v(m);

end

u2 = u1(N1-N2+1:N1);%取后 N2 个稳定值

%=========估计权向量============

w = zeros(1,N);%初始化权向量 w(0)=w(1)=0

err = 0;%估计误差

d = 0;%期望信号

�

u3 = zeros(1,N);%输入向量初始化

u4 = [zeros(1,N) u2];

Jn = zeros(1,N2);

for n = 1:N2

w = w+u*u3*conj(err);%权向量的更新

u3 = u4(n+N-1:-1:n);%更新输入向量 u3 = [u4(n+1) u4(n)]

d = conj(w)*(u3.');%期望信号估计

err = u4(n+2)-d;%估计误差

Jn(n) = (abs(err)).^2;%均方误差

end

Jn_aver = Jn_aver+Jn;

W = W+w;

end

EJex_inf = u*var_v*(p1+p2)/(2-u*(p1+p2))%剩余均方误差统计平均值 E[Jex(inf)]

M1 = EJex_inf/var_v%计算理论失调

W = W./K%权向量

Jn_aver = Jn_aver./K;

Jex_n = Jn_aver(N2)-var_v%n 次迭代后的剩余均方误差值

M2 = Jex_n/var_v%n 次迭代后的失调

plot(Jn_aver);

title(['LP(2)LMS 学习曲线 u=0.05, w=',num2str(W)])

grid on

xlabel('n/迭代次数');

ylabel('E[Jn(n)]');

axis([1 512 0 1.5]);

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc