Vulnerability Extrapolation:

Assisted Discovery of Vulnerabilities using Machine Learning

Fabian Yamaguchi1, Felix ’FX’ Lindner1, and Konrad Rieck2

1Recurity Labs GmbH, Germany

2Technische Universit¨at Berlin, Germany

Abstract

Rigorous identification of vulnerabilities in program

code is a key to implementing and operating secure sys-

tems. Unfortunately, only some types of vulnerabilities

can be detected automatically. While techniques from

software testing can accelerate the search for security

flaws, in the general case discovery of vulnerabilities is

a tedious process that requires significant expertise and

time.

In this paper, we propose a method for assisted

discovery of vulnerabilities in source code. Our method

proceeds by embedding code in a vector space and auto-

matically determining API usage patterns using machine

learning. Starting from a known vulnerability, these

patterns can be exploited to guide the auditing of code

and to identify potentially vulnerable code with similar

characteristics—a process we refer to as vulnerability ex-

trapolation. We empirically demonstrate the capabilities

of our method in different experiments. In a case study

with the library FFmpeg, we are able to narrow the search

for interesting code from 6,778 to 20 functions and dis-

cover two security flaws, one being a known flaw and the

other constituting a zero-day vulnerability.

1 Introduction

The security of computer systems critically depends on

the quality and security of its underlying program code.

Unfortunately, there is a persistent deficit of security

awareness in software development [37] and often the

pressure of business competition rules out the design and

implementation of secure software. As a result, there ex-

ist numerous examples of programming flaws that have

led to severe security incidents and the proliferation of

malicious software [e.g., 11, 19, 24]. Often these flaws

emerge as zero-day vulnerabilities, rendering defense us-

ing reactive security tools almost impossible.

fundamental inability of a program to completely anal-

yse another program’s code however, determining vul-

nerabilities automatically has proved to be an involved

and often daunting task. Current tools for automatic code

analysis, such as Fortify 360 and Microsoft PREfast, are

thus limited to detecting vulnerabilities following well-

known programming patterns. While techniques derived

from software testing, such as fuzz testing [32], taint

analysis [20] and symbolic execution [3, 29], may accel-

erate analysis of program code, the general discovery of

vulnerabilities still rests on tedious manual auditing that

requires considerable expertise and resources.

As a remedy, we propose a method for assisted dis-

covery of vulnerabilities in source code.

Instead of

struggling with the limitations of automatic analysis, our

method aims at rendering manual auditing more effective

by assisting and guiding the inspection of source code.

To this end, the method embeds code in a vector space,

such that typical patterns of API usage can be determined

automatically using machine learning techniques. These

patterns implicitly capture semantics of the code and al-

low to “extrapolate” known vulnerabilities by identifying

potentially vulnerable code with similar characteristics.

This process of vulnerability extrapolation can suggest

candidates for investigation to the analyst as well as ease

the browsing of source code during auditing.

We empirically demonstrate the capabilities of this

method to identify usage patterns and to accelerate code

auditing in different experiments. In a case study with

the popular library FFmpeg and a known vulnerability

(CVE-2010-3429), our method narrows the search for in-

teresting code from 6,778 to 20 functions. Out of these

20 functions, we can identify two security flaws, one be-

ing another known weakness and the other constituting

a zero-day vulnerability. We prove the relevance of this

finding by providing a working exploit.

From its early days, computer security has been con-

cerned with developing methods for discovery and elim-

ination of vulnerabilities in program code. Due to the

The rest of this paper is structured as follows: we

introduce our method for vulnerability extrapolation in

Section 2. An evaluation and a case study with FFmpeg

�

char a(uint b) {

char a(uint b) {

char a(uint b) {

func1((int) b);

func1((int) b);

func1((int) b);

func2(); ...

func2(); ...

func2(); ...

GETchar

GETuint

GETfunc1

GETint

GETfunc2

...

1

1

T

c

n

E

u

G

f

GETint

1

Known vulnerability

Candidates

Code base of

functions

(1) Extraction of

API symbols

(2) Embedding in

vector space

(3) Identification of

API usage patterns

(4) Assisted vulnerability

discovery

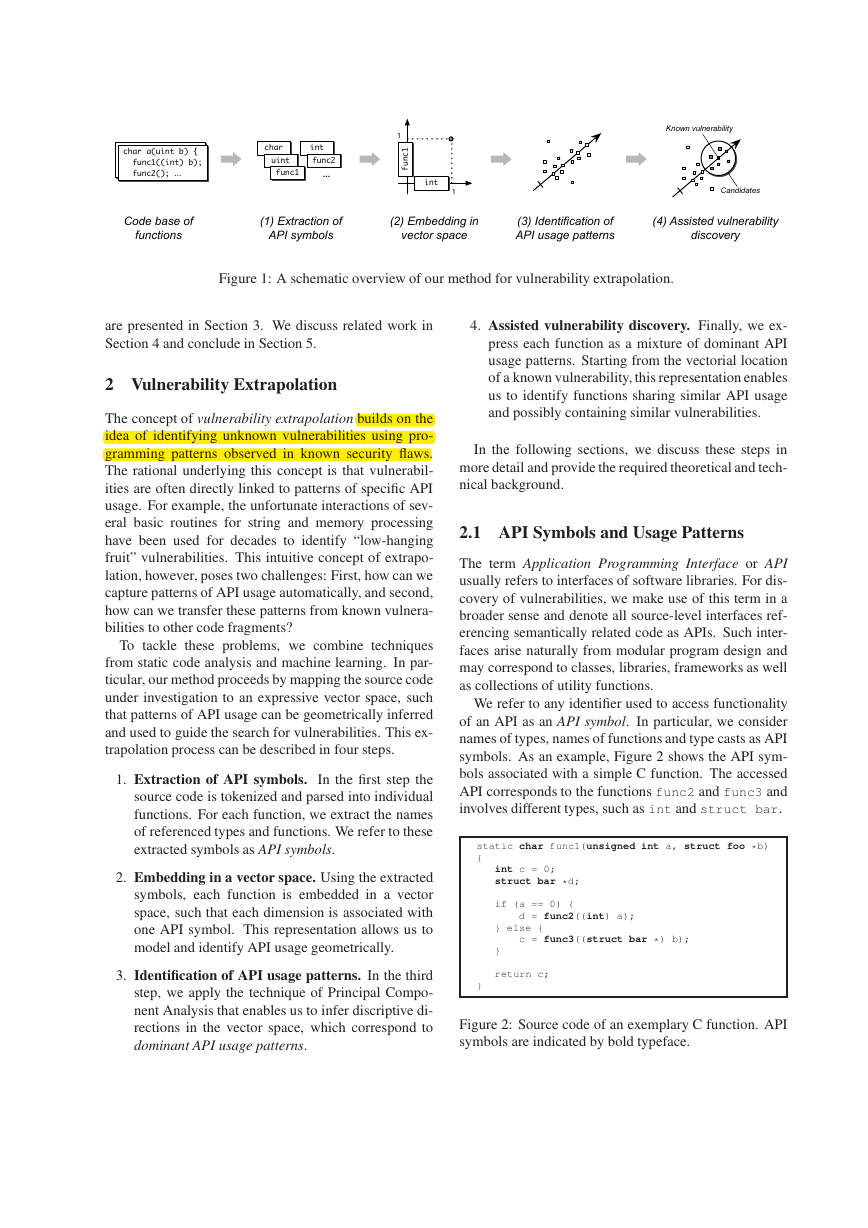

Figure 1: A schematic overview of our method for vulnerability extrapolation.

are presented in Section 3. We discuss related work in

Section 4 and conclude in Section 5.

2 Vulnerability Extrapolation

The concept of vulnerability extrapolation builds on the

idea of identifying unknown vulnerabilities using pro-

gramming patterns observed in known security flaws.

The rational underlying this concept is that vulnerabil-

ities are often directly linked to patterns of specific API

usage. For example, the unfortunate interactions of sev-

eral basic routines for string and memory processing

have been used for decades to identify “low-hanging

fruit” vulnerabilities. This intuitive concept of extrapo-

lation, however, poses two challenges: First, how can we

capture patterns of API usage automatically, and second,

how can we transfer these patterns from known vulnera-

bilities to other code fragments?

To tackle these problems, we combine techniques

from static code analysis and machine learning. In par-

ticular, our method proceeds by mapping the source code

under investigation to an expressive vector space, such

that patterns of API usage can be geometrically inferred

and used to guide the search for vulnerabilities. This ex-

trapolation process can be described in four steps.

1. Extraction of API symbols.

In the first step the

source code is tokenized and parsed into individual

functions. For each function, we extract the names

of referenced types and functions. We refer to these

extracted symbols as API symbols.

2. Embedding in a vector space. Using the extracted

symbols, each function is embedded in a vector

space, such that each dimension is associated with

one API symbol. This representation allows us to

model and identify API usage geometrically.

3. Identification of API usage patterns. In the third

step, we apply the technique of Principal Compo-

nent Analysis that enables us to infer discriptive di-

rections in the vector space, which correspond to

dominant API usage patterns.

4. Assisted vulnerability discovery. Finally, we ex-

press each function as a mixture of dominant API

usage patterns. Starting from the vectorial location

of a known vulnerability, this representation enables

us to identify functions sharing similar API usage

and possibly containing similar vulnerabilities.

In the following sections, we discuss these steps in

more detail and provide the required theoretical and tech-

nical background.

2.1 API Symbols and Usage Patterns

The term Application Programming Interface or API

usually refers to interfaces of software libraries. For dis-

covery of vulnerabilities, we make use of this term in a

broader sense and denote all source-level interfaces ref-

erencing semantically related code as APIs. Such inter-

faces arise naturally from modular program design and

may correspond to classes, libraries, frameworks as well

as collections of utility functions.

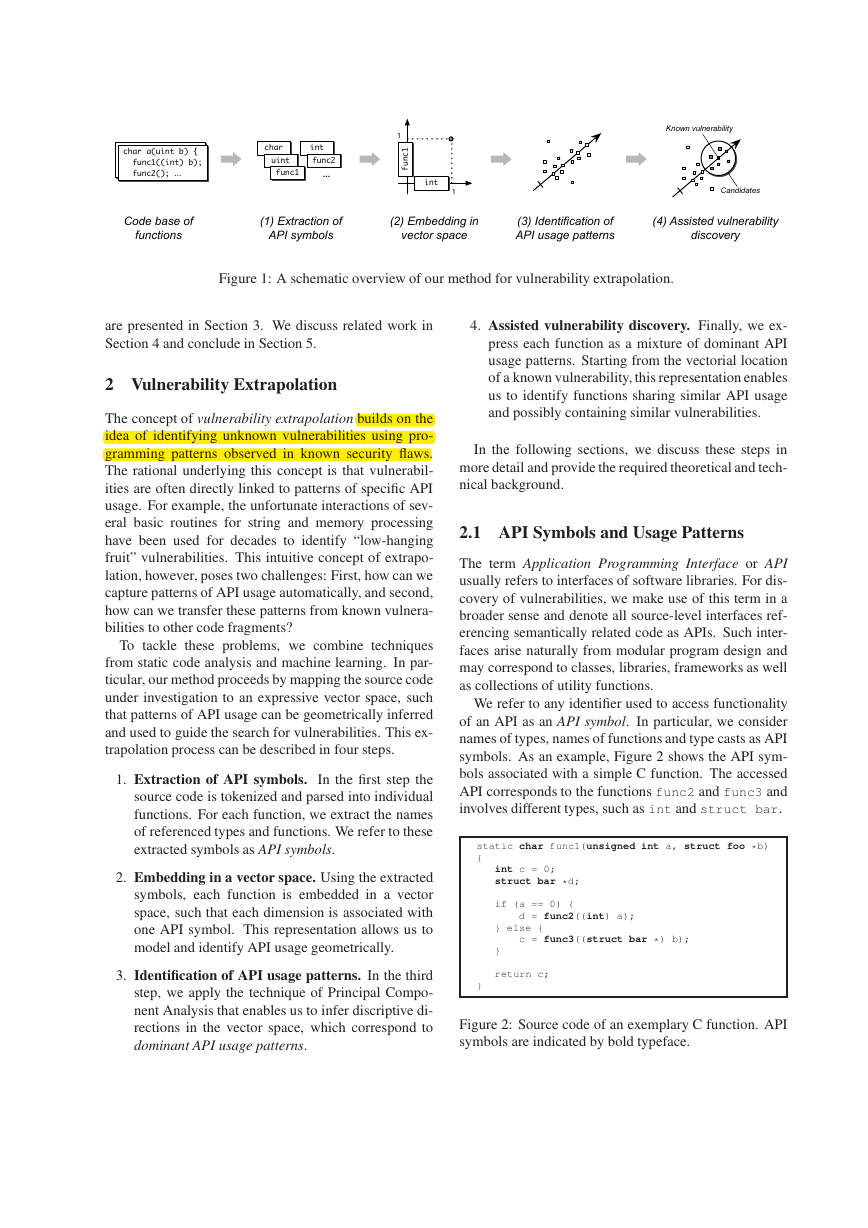

We refer to any identifier used to access functionality

of an API as an API symbol. In particular, we consider

names of types, names of functions and type casts as API

symbols. As an example, Figure 2 shows the API sym-

bols associated with a simple C function. The accessed

API corresponds to the functions func2 and func3 and

involves different types, such as int and struct bar.

static char func1(unsigned int a, struct foo *b)

{

int c = 0;

struct bar *d;

if (a == 0) {

d = func2((int) a);

} else {

c = func3((struct bar *) b);

}

return c;

}

Figure 2: Source code of an exemplary C function. API

symbols are indicated by bold typeface.

�

Based on the API symbols, we can define the notion

of an API usage pattern, which simply corresponds to a

set of symbols used in several functions. These patterns

can cover different functionality of the source code. For

example, an API usage pattern may correspond to lock

functions, such as mutex lock and mutex unlock,

whereas another pattern might reflect typical string func-

tions, such as strcpy, strcat and strlen. To dis-

tinguish random combinations of symbols from relevant

code, we consider only dominant usage patterns which

occur frequently in the code base. We will see in Sec-

tion 2.3 how dominant API usage patterns can be identi-

fied automatically.

As the first step of our analysis, we thus tokenize and

parse the source code under investigation into individual

functions (or alternatively functional blocks). For each

function, we then extract its API symbols and store these

as sets for subsequent processing.

2.2 From API Symbols to Vectors

API symbols and usage patterns are intuitive to a human

analyst, yet both concepts are not directly suitable for

application of machine learning. Learning techniques

usually operate on numerical vectors and often express

patterns as combinations of vectors. Inspired by the vec-

tor space model from information retrieval [28], we thus

embed the functions of our code base in a vector space

using the API symbols. This allows us to conduct the

search for vulnerable code in a geometric manner.

To present this embedding, we first need to introduce

some basic notation. We denote by X = {x1, . . . , xn}

the set of functions in our code base and refer to S as

the set of all API symbols contained in X . We can then

define a mapping φ from X to an |S|-dimensional vec-

tor space, whose dimensions are associated with the API

symbols S. Formally, this map φ is defined as

φ : X 7−→ R|S|, φ(x) −→ (φs(x))s∈S .

For a given function x ∈ X the value at the dimension

associated with the symbol s ∈ S is computed by

φs(x) := I(s, x) · TFIDF(s)

where I is simply an indicator function

I(s, x) =(1 if the symbol s is contained in x

0 otherwise

and TFIDF(s) corresponds to a standard weighting term

used in information retrieval. This weighting ensures that

the contribution of very frequent API symbols is low-

ered, similar to stop words in natural language text. A

detailed introduction to this mapping technique is pro-

vided in the book of Salton and McGill [27].

For convenience and later processing, we store the

vectors of all functions in our code base in a matrix M ,

where one element of the matrix is defined as

Ms,x := φs(x).

As a result, the matrix M consists of |X | column vectors

each containing |S| elements.

Apparently, the embedding of source code introduces

a dilemma: On the one hand, it is desirable to analyse as

many API symbols as possible, while on the other hand

storing billions of elements in a matrix M may get in-

tractable. However, the map φ is sparse, that is, a func-

tion x contains only few of all possible API symbols and

thus the majority of elements in M is zero. This spar-

sity can be exploited to extract and compare vectors φ(x)

with linear-time complexity using data structures, such

as hash maps and sorted arrays [see 25].

2.3 Principal Component Analysis

The mapping outlined in the previous section allows for

comparison of functions in terms of API symbols, simply

by computing distances between the respective vectors.

However, this vectorial representation alone is not suffi-

cient for effective discovery of vulnerabilities, as these

are not characterized by individual API symbols but pat-

terns of symbols. For example, functions may use the

same API but utilize different subsets, such that the un-

derlying usage pattern is only reflected in the combina-

tion of all subsets.

This problem of composing usage patterns can be ad-

dressed by Principal Component Analysis—a standard

technique of machine learning for automatically deter-

mining descriptive directions in a vector space [9].

In

our setting, these directions are associated with combi-

nations of API symbols and can be interpreted as domi-

nant API usage patterns. Moreover, the directions define

a low-dimensional subspace that the original vectors can

be projected to. Functions that do not share any symbols

but make us of the same API lie close to each other in

this subspace, as they fall onto the same direction identi-

fied by PCA. This technique of projecting data to a low-

dimensional subspace using PCA is also referred to as

Latent Semantic Analysis [8], a name that indicates the

ability to extract latent semantic relations from data.

Formally, PCA seeks d orthogonal directions in the

vector space that capture as much of the variance inside

the data as possible. One way to obtain these d directions

is by performing a truncated Singular Value Decomposi-

tion (SVD) of the matrix M . This decomposition can

be implemented efficiently using the Lanczos algorithm,

an iterative procedure suited for high-dimensional and

sparse data. For computing this decomposition we make

use of the popular library SVDLIBC [26].

�

The truncated SVD decomposes the matrix M into

three matrices U , Σ and V which offer a wealth of in-

formation about the code base and API usage. This de-

composition has the following form

M ≈ U Σ V T =

← u1 →

← u2 →

...

← u|S| →

σ1

0

...

0

0

σ2

...

0

0

. . .

0

. . .

...

. . .

. . . σd

← v1 →

← v2 →

...

← v|X | →

.

T

In particular, we obtain three relevant sources of infor-

mation that describe the dominant patterns of API usage,

their relevance and the relation of function and symbols

to these patterns.

1. The d columns of the unitary matrix U correspond

to the principal components of PCA and thus reflect

the d most dominant API usage patterns—prevalent

combinations of API symbols in the code base.

2. The diagonal matrix Σ contains the singular values

of M . The values indicate the variances of the prin-

cipal components and allow us to assess the individ-

ual importance of the d API usage patterns.

3. The rows of U and V contain the projected repre-

sentations of API symbols and functions, respec-

tively. While the matrix V can be used to measure

the similarity of functions, U comes handy if API

symbols need to be traced back to usage patterns.

As we will see in the following, these three matrices

provide the basis for assisted discovery of vulnerabilities

and conclude the rather theoretical presentation of our

practical method.

2.4 Assisted Vulnerability Discovery

Once the decomposition has been calculated, which takes

minutes on average consumer hardware, the analyst can

query the obtained information and matrices in real time.

Hence, our method can be directly applied to assist an

analyst while browsing and auditing source code. In par-

ticular, the following three activities can be conducted

during an auditing session.

Vulnerability extrapolation. By comparing the row

vectors of V using a similarity measure, such as the

cosine similarity [27], the relations of all functions in

the code base can be assessed. This allows for quickly

discovering functions that share similar API usage pat-

terns and builds the basis for extrapolating vulnerabili-

ties. Given a function containing a known vulnerabil-

ity, the analyst can scan the code base for occurrences

of similar API usage and focus on functions related to

the vulnerability. This guided search for vulnerabilities

can significantly reduce the number of functions to be

audited. We demonstrate this practice on a real-life ex-

ample in Section 3.2.

Extracting dominant usage patterns. The proposed

method can also be used as a pre-processing step for in-

depth analysis. The column vectors of the matrix U cor-

respond to the d most dominant API usage patterns and

their respective combinations of symbols. Using these

patterns, an analyst can easily group the code base into

different subsets and concentrate on particular usage pat-

terns, for example, by restricting the audit only to func-

tions making use of security-critical APIs, such as net-

work and authentication routines.

API browsing. As the majority of software is devel-

oped in a modular manner, any code base of reason-

able size necessarily contains internal APIs [6]. Often

these internal APIs are scarcely documented and scat-

tered across different files in the code base. Nonethe-

less, an understanding of these APIs can be crucial for

identifying more subtle vulnerabilities. Our method as-

sists an analyst in understanding public as well as inter-

nal APIs. By comparing rows in V (functions) with rows

in U (symbols), an analyst can determine important API

symbols associated with the APIs used in a function. In

the same manner, it is possible to determine functions,

which best match a constructed set of API symbols. This

allows a very directed search for occurrences of particu-

lar patterns known to commonly cause problems.

3 Evaluation and Case Study

Thus far we have seen how source code can be modeled

and analysed for discovery of vulnerabilities using ma-

chine learning techniques. In practice however, it is not

the sophisticated design of a method that matters, but its

ability to really assist in day-to-day auditing. To study

the efficacy of the proposed method in practice, we thus

conduct two experiments with real source code. In the

first experiment, we quantitatively evaluate the ability of

our method to automatically identify API usage patterns

and to structure source code (Section 3.1). In the sec-

ond experiment—a case study—we apply our method

for vulnerability extrapolation to the library FFmpeg and

construct a working exploit for a discovered zero-day

vulnerability (Section 3.2).

3.1 Quantitative Evaluation

The effectivity of vulnerability extrapolation rests on the

accurate identification of API usage patterns. To validate

this capability, we construct an evaluation code base that

�

comprises functions from different classes of API usage.

We ensure that these classes contain distinct usage pat-

terns by selecting functions from different contexts and

applications.

In particular, we consider a total of 420

functions from the Linux Kernel (2.6.32) and the media-

decoding library FFmpeg (0.6.0) which are assigned to

the following five classes:

1. Functions for sending network data (Linux kernel)

2. Functions for probing keyboards (Linux kernel)

3. Functions for probing sound drivers (Linux Kernel)

4. Functions for media demuxing (FFmpeg)

5. Functions for media decoding (FFmpeg)

The functions in each class are randomly partitioned

into subsets, such that each subset has approximately the

same size and each class is split into ten subsets. We then

apply our method to the resulting code base and study

how inner-class and intra-class relationships are captured

by embedding functions in a vector space and by project-

ing the functions to directions determined by PCA.

Figure 3(a) presents the pairwise similarities between

the subsets of the five classes directly measured in the

vector space, that is, prior to application of PCA. The

similarities are depicted as a matrix, where each cell

shows the average cosine similarity between the func-

tions in one subset and another. While the matrix shows

some structured contour, most of its surface appears

blurred. A notable variance between similarity scores

within a class is observable and several subsets of differ-

ent classes can hardly be discriminated. It is evident that

embedding of functions alone is not sufficient for deter-

mining usage patterns in source code.

Figure 3(b) shows the pairwise similarities between

the subsets measured after the embedded functions have

been projected to the top five directions identified by

PCA. That is, the functions are represented as mixtures

of API usage patterns instead of individual symbols. In

this projected representation, inter-class similarities are

significantly higher than in the original vector space and

high distances between functions of different classes can

be observed. The application of PCA removes “noise”

from the code base and thereby allows to infer relevant

patterns for discriminating the five classes—a prerequi-

site for effective extrapolation of vulnerabilities.

3.2 Case Study: FFmpeg

We finally demonstrate by example how the proposed

method can be integrated into a real auditing task, where

it plays a key role in the identification of a zero-day

vulnerability.

For this case study, we consider the

(a) Similarity matrix for embedded functions.

(b) Similarity matrix for projected functions using PCA.

Figure 3: Similarity matrix for (a) embedded functions

and (b) embedded functions projected to the top 5 direc-

tions of PCA. Dark shading indicates high similarity.

widely used open-source media decoding library FFm-

peg (0.6.0) and extrapolate a recently discovered vulner-

ability found in the processing of FLIC videos.

The original vulnerability.

In September 2010, the

open source CERT reported a security vulnerability

(CVE-2010-3429) in FFmpeg attributed to Cesar Bernar-

dini, which allows an attacker to write data to arbitrary

locations in memory relative to a pointer on the heap

via crafted FLIC media frames [1]. The vulnerability

is contained in the function flic decode frame 8BPP

displayed in Figure 4, which is called for each frame of

an 8 bit-per-pixel video.

The critical write operation is performed on line 29,

where the least significant byte of the user-supplied

integer line packets is written to a location rela-

�

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

static int flic_decode_frame_8BPP(AVCodecContext *avctx,

void *data, int *data_size,

const uint8_t *buf, int buf_size)

{

[...] signed short line_packets; int y_ptr; [...]

pixels = s->frame.data[0];

pixel_limit = s->avctx->height * s->frame.linesize[0];

frame_size = AV_RL32(&buf[stream_ptr]); [...]

frame_size -= 16;

/* iterate through the chunks */

while ((frame_size > 0) && (num_chunks > 0)) { [...]

chunk_type = AV_RL16(&buf[stream_ptr]);

stream_ptr += 2;

switch (chunk_type) { [...]

case FLI_DELTA:

y_ptr = 0;

compressed_lines = AV_RL16(&buf[stream_ptr]);

stream_ptr += 2;

while (compressed_lines > 0) {

line_packets = AV_RL16(&buf[stream_ptr]);

stream_ptr += 2;

if ((line_packets & 0xC000) == 0xC000) {

// line skip opcode

line_packets = -line_packets;

y_ptr += line_packets * s->frame.linesize[0];

} else if ((line_packets & 0xC000) == 0x4000) {

[...]

} else if ((line_packets & 0xC000) == 0x8000) {

// "last byte" opcode

pixels[y_ptr + s->frame.linesize[0] - 1] =

line_packets & 0xff;

} else { [...]

y_ptr += s->frame.linesize[0];

}

}

break; [...]

} [...]

} [...]

return buf_size;

}

Figure 4: Original vulnerability (CVE-2010-3429).

tive to the heap-based buffer pixels.

It has been

overlooked that the offset is dependent on y ptr and

s->frame.linesize[0], both of which can be con-

trolled by an attacker. In fact, due to the loop starting at

line 18, it is possible to assign an arbitrary value to y ptr

independent of the last value stored in line packets

and no check is performed to verify whether the offset

remains within the confined regions of the buffer. It is

thus possible for an attacker to write arbitrary bytes to

arbitrary locations in memory.

Extrapolation. For discovery of similar vulnerabili-

ties, we apply our method to the code base of FFm-

peg consisting of 6,778 functions. For PCA, we choose

d = 200 and thereby project the embedded functions

to a subspace capturing up to 200 unique API usage

patterns. Table 1 lists the 20 most similar functions to

flic decode frame 8BPP in this subspace. Note that

we have found 20 to be a reasonable number of func-

tions to consider in one batch and, as we will see shortly,

sufficiently large for identification of vulnerabilities.

Inspecting the functions listed in Table 1, we first spot

a similar flaw in flic decode frame 15 16BPP, where

our method reports a similarity of 96%. This vulnera-

bility has been discovered previously and is patched in

the current versions of FFmpeg. Surprisingly however,

another similar bug in function vmd decode located in a

different source file has not discovered by the developers.

Our method reports a similarity of 72% for vmd decode

leading us almost instantly to this unknown vulnerability.

The vulnerability is shown in Figure 5 and 6.

Similarity

1.00

0.96

0.83

0.80

0.80

0.76

0.72

0.70

0.68

0.67

0.66

0.66

0.66

0.66

0.65

0.65

0.65

0.64

0.64

0.64

Function name

flic decode frame 8BPP

flic decode frame 15 16BPP

lz unpack

decode frame (lcldec.c)

raw encode

vmdvideo decode init

vmd decode

aasc decode frame

flic decode init

decode format80

targa decode rle

adpcm decode init

decode frame (zmbv.c)

decode frame (8bps.c)

msrle decode 8 16 24 32

wmavoice decode init

get quant

MP3lame encode frame

mpegts write section

tgv decode frame

Table 1: Top 20 of 6,778 functions ranked by cosine sim-

ilarity to flic decode frame 8BPP. Discovered vul-

nerabilities are indicated by a shaded background.

Just like the original function, vmd decode reads the

frame dimensions and offsets specified by the individual

frame on line 8 to 11 and then calculates an offset into the

pixel buffer based on these values on line 34. The func-

tion fails to validate whether the given offset references

a location within the buffer. Therefore, as user-supplied

data is copied to the specified location on line 43, an at-

tacker can corrupt memory by choosing an offset outside

of the buffer

In this case study, our method is mainly used to iden-

tify functions sharing similar API usage patterns, yet this

search for semantic similarities is pivotal for discovery of

vulnerabilities. Note that the bodies of the original func-

tion and the discovered vulnerability differ significantly

and a simple comparison would have been insufficient to

spot their relation. By contrast, this study demonstrates

that API usage patterns are commonly linked to sets of

semantically related functions, simply because similar

tasks are usually solved by similar means within a code

base. Consequently, functions similar by these terms are

often plagued by related vulnerabilities.

Exploit. To demonstrate the relevance of this finding,

we craft an exploit for the discovered vulnerability tar-

geting the popular media player MPlayer that is linked

to the FFmpeg library on Ubuntu Linux (10.04 LTS).

On this platform, MPlayer is not compiled as a position-

�

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

static void vmd_decode(VmdVideoContext *s)

{

[...]

int frame_x, frame_y;

int frame_width, frame_height;

int dp_size;

frame_x = AV_RL16(&s->buf[6]);

frame_y = AV_RL16(&s->buf[8]);

frame_width = AV_RL16(&s->buf[10]) - frame_x + 1;

frame_height = AV_RL16(&s->buf[12]) - frame_y + 1;

if ((frame_width == s->avctx->width &&

frame_height == s->avctx->height) &&

(frame_x || frame_y)) {

s->x_off = frame_x;

s->y_off = frame_y;

}

frame_x -= s->x_off;

frame_y -= s->y_off;

[...]

if (frame_x || frame_y || (frame_width != s->avctx->width) ||

(frame_height != s->avctx->height)) {

memcpy(s->frame.data[0], s->prev_frame.data[0],

s->avctx->height * s->frame.linesize[0]);

}

[...]

if (s->size >= 0) {

/* originally UnpackFrame in VAG’s code */

pb = p;

meth = *pb++;

[...]

dp = &s->frame.data[0][frame_y * s->frame.linesize[0]

+ frame_x];

dp_size = s->frame.linesize[0] * s->avctx->height;

pp = &s->prev_frame.data[0][frame_y *

s->prev_frame.linesize[0] + frame_x];

switch (meth) {

[...]

case 2:

for (i = 0; i < frame_height; i++) {

memcpy(dp, pb, frame_width);

pb += frame_width;

dp += s->frame.linesize[0];

pp += s->prev_frame.linesize[0];

}

break;

[...]

}

}

}

Figure 5: Discovered zero-day vulnerability

static int vmdvideo_decode_frame(AVCodecContext *avctx,

void *data, int *data_size,

AVPacket *avpkt)

{

const uint8_t *buf = avpkt->data;

int buf_size = avpkt->size;

VmdVideoContext *s = avctx->priv_data;

s->buf = buf;

s->size = buf_size;

[...]

vmd_decode(s);

/* make the palette available on the way out */

memcpy(s->frame.data[1], s->palette, PALETTE_COUNT * 4);

/* shuffle frames */

FFSWAP(AVFrame, s->frame, s->prev_frame);

if (s->frame.data[0])

avctx->release_buffer(avctx, &s->frame);

[...]

}

Figure 6: Caller of vulnerable function vmd decode.

independent executable and thus the image of the exe-

cutable is located at a predictable offset. As a result, we

can successfully exploit the vulnerability, despite con-

temporary anti-exploitation techniques, such as Address

Space Layout Randomization. A detailed description of

the exploit is presented in Appendix A and further back-

ground on this case study is presented in [38].

4 Related Work

Code analysis and methods for detection of vulnerabil-

ities have been a vivid area of research in computer se-

curity. Over the last years, many different concepts and

techniques have been devised to tackle this problem. Our

contribution is related to several of these approaches, as

we discuss in the following.

Our concept of representing code based on patterns of

API usage is motivated by the fact that classes of vul-

nerabilities can often be directly linked to distinct API

symbols, a correspondence that is well-known to practi-

tioners and reflected in several static analysis tools, such

as Flawfinder [35], RATS [2] or ITS4 [33]. These tools

offer fixed databases of API symbols commonly found in

conjunction with vulnerabilities and allow a target code

base to be scanned for their occurrences.

In academic security research, the connection between

API symbols and vulnerability classes has also been rec-

ognized and provides a basis for taint analysis [20, 29].

In taint analysis, an analyst can describe a class of vulner-

abilities by a source-sink system defined over API sym-

bols. If data tainted by an attacker and stemming from

one of the sources propagates to a sink without undergo-

ing validation, a vulnerability is detected. The success of

this approach has been demonstrated for different types

of vulnerabilities and attacks, such as SQL injection [18],

Cross Site Scripting [14] and integer-based vulnerabili-

ties [34]. In most realizations, taint analysis is a dynamic

process and thus limited to discovery of vulnerabilities

observable during execution of a program.

A second strain of research has considered symbolic

execution as an extension to taint analysis for detecting

vulnerabilities in source code [3, 29]. Most notably is the

work of Avgerinos et al. [3] that introduces a framework

for finding and even exploiting vulnerabilities using sym-

bolic execution. Despite some amazing results, symbolic

execution suffers from the infeasibility of exploring all

possible execution paths and its application in practice

critically depends on heuristics for pruning off execution

branches. In the general case, the search for vulnerabil-

ities thus remains a manual process, which however can

be accelerated by assisted analysis, as shown in this work

For static code analysis, Engler et al. [10] are among

the first to introduce a method suitable for detecting

flaws attributed to programming patterns. However, their

method requires a manual definition of these patterns. As

an extension, Li and Zhou [16] present an approach for

mining programming rules and automatically detecting

their violation. An inherent problem of this approach is

that a frequent programming mistakes will lead to the

inference of a valid pattern and thus common flaws can-

not be detected. Williams et al. [36] as well as Livshits

et al. [17] address this problem and incorporate software

�

revision histories into the analysis. The detection of pro-

gramming rules not related to corrections of code, there-

fore becomes less likely. On the downside, only pro-

gramming rules violated in the past can be detected, mak-

ing the discovery of previously unknown flaw patterns

impossible.

Finally, techniques from the field of machine learn-

ing have been successfully applied in several areas of

security, such as for intrusion detection [e.g., 7, 12, 15]

and analysis of malicious software [e.g., 4, 5, 23]. A

large body of research has been concerned with the

design of learning-based security systems, as well as

with their shortcomings [13, 30] and evasion possibili-

ties [21, 22, 31]. However, to our knowledge, the appli-

cation of machine learning to problems of offensive se-

curity, such as vulnerability discovery, has gained almost

no attention so far.

5 Conclusion

We have introduced a method for assisted discovery of

vulnerabilities in source code, deliberately leaving aside

the known difficulties of fully automated analysis. Our

method accelerates the process of manual code auditing

by quickly identifying patterns of API usage in a code

base and suggesting code related to known vulnerabil-

ities to a security analyst. We have demonstrated on

real production code that once a vulnerability is known,

similar vulnerabilities can be easily identified by cycling

through similar functions determined by our method.

The proposed method uses API usage patterns for

analysing the code base. Many vulnerabilities can be

captured well by API usage, yet there also exist cases

where the code structure of a function is more relevant

for auditing. While the proposed method can not uncover

vulnerabilities building only on these patterns, we are

currently investigating techniques for integrating struc-

tural information from source code into the process of

vulnerability extrapolation. Moreover, the ability of our

approach to narrow the auditing process to a few inter-

esting functions may also play well with software test-

ing, for example, for selectively fuzzing functions or per-

forming involved symbolic execution.

In conclusion, we can note that fixing a single vulnera-

bility without performing sufficient extrapolation, as cur-

rently performed by many vendors of software, can be

contra-productive, given that it provides attackers with

information that may be used to identify similar yet un-

patched vulnerabilities. Vulnerability extrapolation can

help here to strengthen software security and to support

the elimination of related vulnerabilities in practice.

Acknowledgments

The authors gratefully acknowledge the funding from

Bundesministerium f¨ur Bildung und Forschung under the

project PROSEC (FKZ 01BY1145).

References

[1] 2010-004 ffmpeg/libavcodec arbitrary offset dereference. Open

Source Computer Emergency Response Team, http://www.ocert.

org/advisories/ocert-2010-004.html, visited April, 2011.

[2] Rough auditing tool for security. Fortify Software Inc., https://

www.fortify.com/ssa-elements/threat-intelligence/rats.html, vis-

ited April, 2011.

[3] T. Avgerinos, S. K. Cha, B. L. T. Hao, and D. Brumley. AEG: Au-

tomatic Exploit Generation. In Proc. of Network and Distributed

System Security Symposium (NDSS), 2011.

[4] M. Bailey, J. Oberheide, J. Andersen, Z. M. Mao, F. Jahanian,

and J. Nazario. Automated Classification and Analysis of Inter-

net Malware. In Recent Adances in Intrusion Detection (RAID),

pages 178–197, 2007.

[5] U. Bayer, P. Comparetti, C. Hlauschek, C. Kruegel, and E. Kirda.

Scalable, behavior-based malware clustering. In Proc. of Network

and Distributed System Security Symposium (NDSS), 2009.

[6] J. Bloch. How to design a good API and why it matters. In Proc.

of ACM SIGPLAN Symposium on Object-oriented Programming

Systems, Languages, and Applications (OOPSLA), pages 506–

507, 2006.

[7] G. Cretu, A. Stavrou, M. Locasto, S. Stolfo, and A. Keromytis.

Casting out demons: Sanitizing training data for anomaly sen-

sors. In Proc. of IEEE Symposium on Security and Privacy, 2008.

[8] S. Deerwester, S. Dumais, G. Furnas, T. Landauer, and R. Harsh-

man. Indexing by latent semantic analysis. Journal of the Amer-

ican society for information science, 41(6):391–407, 1990.

[9] R. Duda, P.E.Hart, and D.G.Stork. Pattern classification. John

Wiley & Sons, second edition, 2001.

[10] D. Engler, D. Y. Chen, S. Hallem, A. Chou, and B. Chelf. Bugs

as deviant behavior: A general approach to inferring errors in

systems code. In Proc. of ACM Symposium on Operating Systems

Principles (SOSP), pages 57–72, 2001.

[11] N. Falliere, L. O. Murchu, , and E. Chien. W32.stuxnet dossier.

Symantec Corporation, 2011.

[12] S. Forrest, S. Hofmeyr, A. Somayaji, and T. Longstaff. A sense of

self for unix processes. In Proc. of IEEE Symposium on Security

and Privacy, pages 120–128, Oakland, CA, USA, 1996.

[13] C. Gates and C. Taylor. Challenging the anomaly detection

In Proc. of New Security

paradigm: A provocative discussion.

Paradigms Workshop (NSPW), pages 21–29, 2006.

[14] N. Jovanovic, C. Kruegel, and E. Kirda. Pixy: A static analy-

sis tool for detecting web application vulnerabilities. In Proc. of

IEEE Symposium on Security and Privacy, pages 6–263, 2006.

[15] C. Kruegel and G. Vigna. Anomaly detection of web-based at-

tacks. In Proc. of Conference on Computer and Communications

Security (CCS), pages 251–261, 2003.

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc