. Invited Review .

arXiv:2003.08271v1 [cs.CL] 18 Mar 2020

Pre-trained Models for Natural Language Processing: A Survey

Xipeng Qiu*, Tianxiang Sun, Yige Xu, Yunfan Shao, Ning Dai & Xuanjing Huang

School of Computer Science, Fudan University, Shanghai 200433, China;

Shanghai Key Laboratory of Intelligent Information Processing, Shanghai 200433, China

Recently, the emergence of pre-trained models (PTMs) has brought natural language processing (NLP) to a new era. In this survey,

we provide a comprehensive review of PTMs for NLP. We first briefly introduce language representation learning and its research

progress. Then we systematically categorize existing PTMs based on a taxonomy with four perspectives. Next, we describe how to

adapt the knowledge of PTMs to the downstream tasks. Finally, we outline some potential directions of PTMs for future research.

This survey is intended to be a hands-on guide for understanding, using, and developing PTMs for various NLP tasks.

Deep Learning, Neural Network, Natural Language Processing, Pre-trained Model, Distributed Representation, Word

Embedding, Self-Supervised Learning, Language Modelling

1 Introduction

With the development of deep learning, various neural net-

works have been widely used to solve Natural Language Pro-

cessing (NLP) tasks, such as convolutional neural networks

(CNNs) [75, 80, 45], recurrent neural networks (RNNs) [160,

100], graph-based neural networks (GNNs) [146, 161, 111]

and attention mechanisms [6, 171]. One of the advantages

of these neural models is their ability to alleviate the fea-

ture engineering problem. Non-neural NLP methods usually

heavily rely on the discrete handcrafted features, while neural

methods usually use low-dimensional and dense vectors (aka.

distributed representation) to implicitly represent the syntactic

or semantic features of the language. These representations

are learned in specific NLP tasks. Therefore, neural methods

make it easy for people to develop various NLP systems.

Despite the success of neural models for NLP tasks, the performance improvement may be less significant than in the Computer Vision (CV) field. The main reason is that current datasets for most supervised NLP tasks are rather small (except machine translation). Deep neural networks usually have a large number of parameters, which makes them overfit on such small training data and generalize poorly in practice. Therefore, the early neural models for many NLP tasks were relatively shallow and usually consisted of only 1∼3 neural layers.

* Corresponding author (email: xpqiu@fudan.edu.cn)

Recently, substantial work has shown that pre-trained models (PTMs) trained on large corpora can learn universal language representations, which benefit downstream NLP tasks and avoid training a new model from scratch. With the growth of computational power, the emergence of deep models (e.g., Transformer [171]), and the constant improvement of training techniques, the architecture of PTMs has advanced from shallow to deep. The first-generation PTMs aim to learn good word embeddings. Since these models themselves are no longer needed by downstream tasks, they are usually very shallow for computational efficiency, such as Skip-Gram [116] and GloVe [120]. Although these pre-trained embeddings can capture the semantic meanings of words, they are context-free and fail to capture higher-level concepts of text such as syntactic structures, semantic roles, and anaphora.

QIU XP, et al.

Pre-trained Models for Natural Language Processing: A Survey March (2020)

The second-generation PTMs focus on learning contextual

word embeddings, such as CoVe [113], ELMo [122], OpenAI

GPT [130] and BERT [32]. These learned encoders are still

needed to represent words in context by downstream tasks.

Besides, various pre-training tasks are also proposed to learn

PTMs for different purposes.

The contributions of this survey can be summarized as

follows:

1. Comprehensive review. We provide a comprehensive

review of PTMs for NLP, including background knowl-

edge, model architecture, pre-training tasks, various

extensions, adaptation approaches, and applications. We

provide detailed descriptions of representative models,

make the necessary comparison, and summarise the

corresponding algorithms.

2. New taxonomy. We propose a taxonomy of PTMs for

NLP, which categorizes existing PTMs from four dif-

ferent perspectives: 1) type of word representation; 2)

architecture of PTMs; 3) type of pre-training tasks; 4)

extensions for specific types of scenarios or inputs.

3. Abundant resources. We collect abundant resources on

PTMs, including open-source systems, paper lists, etc.

4. Future directions. We discuss and analyze the limi-

tations of existing PTMs. Also, we suggest possible

future research directions.

The rest of the survey is organized as follows. Section 2

outlines the background concepts and commonly used nota-

tions of PTMs. Section 3 gives a brief overview of PTMs

and clarifies the categorization of PTMs. Section 4 provides

extensions of PTMs. Section 5 discusses how to transfer the

knowledge of PTMs to downstream tasks. Section 6 gives the

related resources on PTMs, including open-source systems,

paper lists, etc. Section 7 presents a collection of applications

across various NLP tasks. Section 8 discusses the current chal-

lenges and suggests future directions. Section 9 summarizes

the paper.

2 Background

2.1 Language Representation Learning

As suggested by Bengio et al. [12], a good representation

should express general-purpose priors that are not task-specific

but would be likely to be useful for a learning machine to solve

AI-tasks. When it comes to language, a good representation should capture the implicit linguistic rules and common sense knowledge hidden in text data, such as lexical meanings, syntactic structures, semantic roles, and even pragmatics.

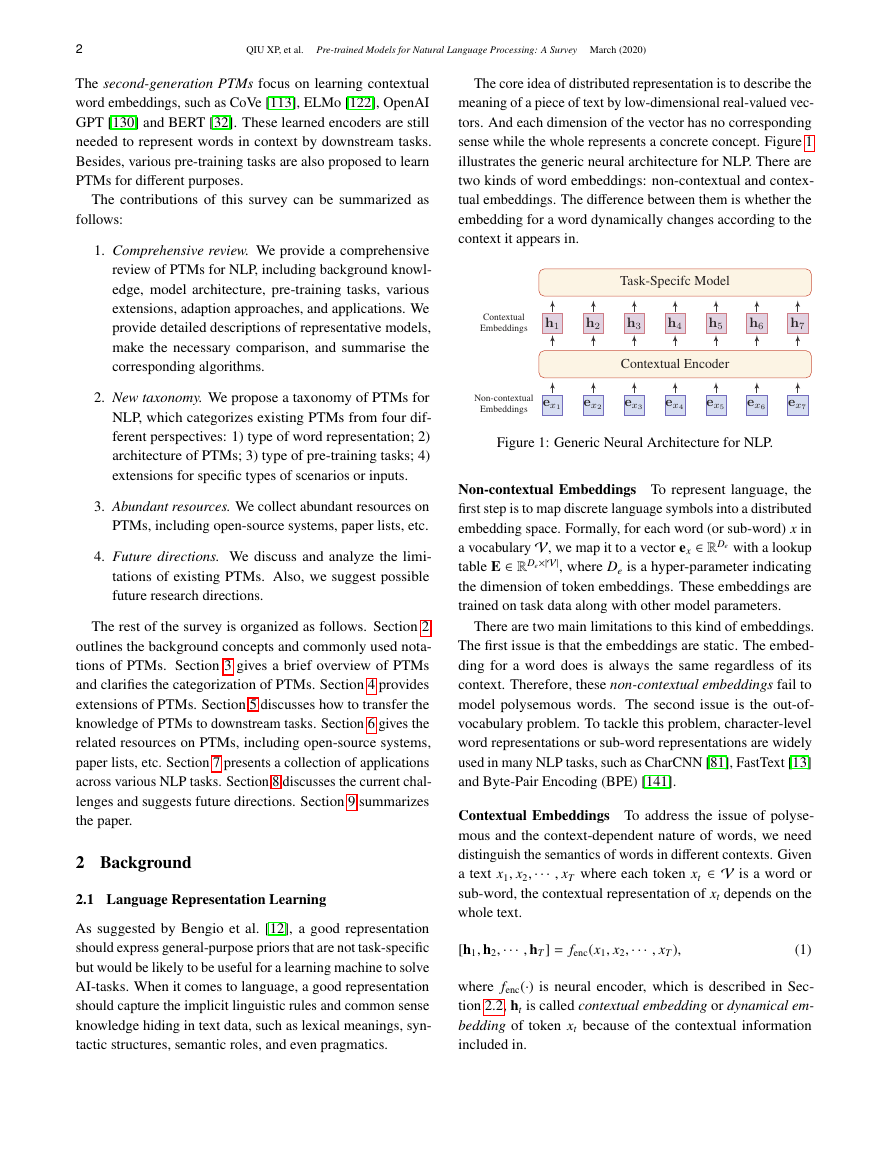

The core idea of distributed representation is to describe the

meaning of a piece of text by low-dimensional real-valued vec-

tors. And each dimension of the vector has no corresponding

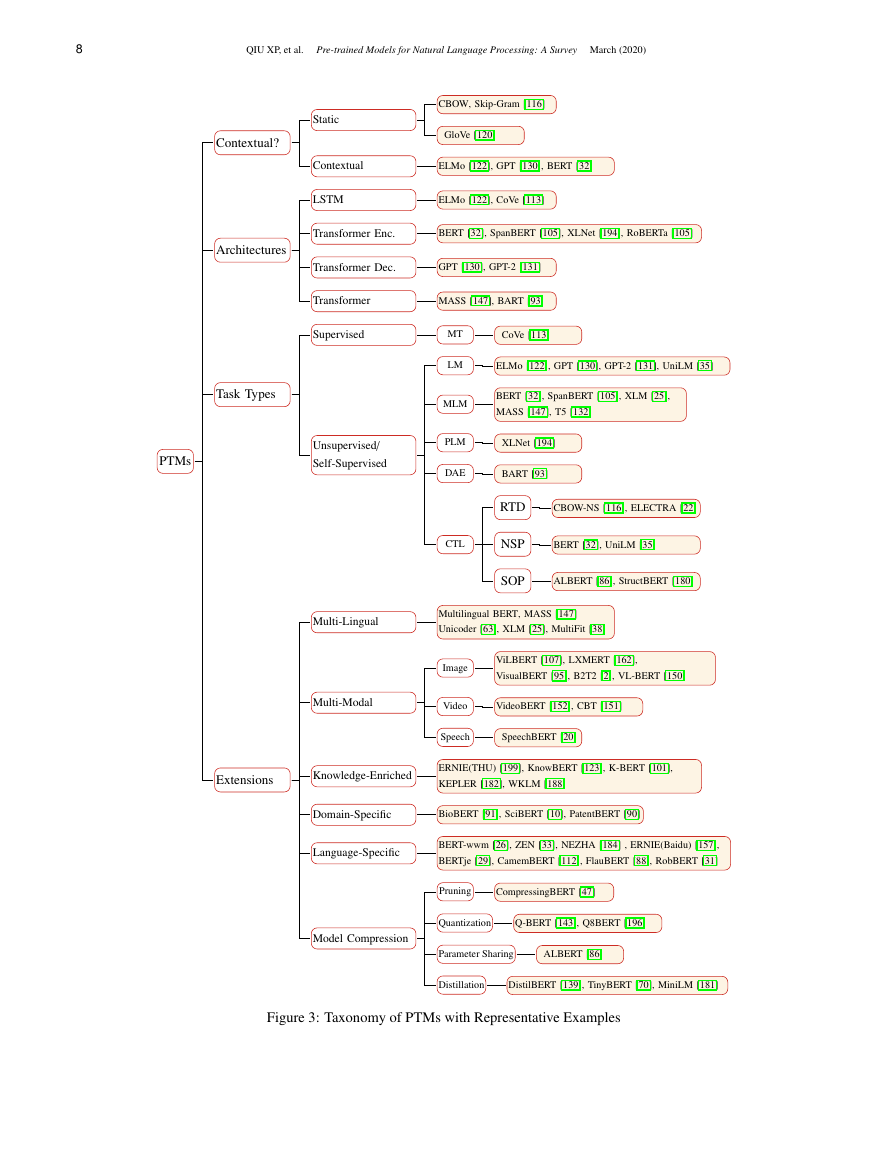

sense while the whole represents a concrete concept. Figure 1

illustrates the generic neural architecture for NLP. There are

two kinds of word embeddings: non-contextual and contex-

tual embeddings. The difference between them is whether the

embedding for a word dynamically changes according to the

context it appears in.

Figure 1: Generic Neural Architecture for NLP.

Non-contextual Embeddings To represent language, the first step is to map discrete language symbols into a distributed embedding space. Formally, for each word (or sub-word) $x$ in a vocabulary $\mathcal{V}$, we map it to a vector $\mathbf{e}_x \in \mathbb{R}^{D_e}$ with a lookup table $\mathbf{E} \in \mathbb{R}^{D_e \times |\mathcal{V}|}$, where $D_e$ is a hyper-parameter indicating the dimension of token embeddings. These embeddings are trained on task data along with other model parameters.

There are two main limitations to this kind of embedding. The first is that the embeddings are static: the embedding for a word is always the same regardless of its context. Therefore, these non-contextual embeddings fail to model polysemous words. The second is the out-of-vocabulary problem. To tackle this problem, character-level word representations or sub-word representations are widely used in many NLP tasks, such as CharCNN [81], FastText [13] and Byte-Pair Encoding (BPE) [141].

Contextual Embeddings To address polysemy and the context-dependent nature of words, we need to distinguish the semantics of words in different contexts. Given a text $x_1, x_2, \cdots, x_T$ where each token $x_t \in \mathcal{V}$ is a word or sub-word, the contextual representation of $x_t$ depends on the whole text:

$$[\mathbf{h}_1, \mathbf{h}_2, \cdots, \mathbf{h}_T] = f_{\mathrm{enc}}(x_1, x_2, \cdots, x_T), \tag{1}$$

where $f_{\mathrm{enc}}(\cdot)$ is a neural encoder, described in Section 2.2, and $\mathbf{h}_t$ is called the contextual embedding or dynamical embedding of token $x_t$ because of the contextual information it includes.
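The difference between the two kinds of embeddings can be illustrated with a small sketch. The vocabulary, the dimension `D_e = 4`, the random lookup table, and the neighbour-averaging `toy_encoder` are all assumptions made for illustration; the toy encoder only stands in for $f_{\mathrm{enc}}$ and is not one of the encoders discussed in this survey.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy vocabulary and lookup table E of shape (D_e, |V|).
vocab = {"the": 0, "river": 1, "bank": 2, "approved": 3, "loan": 4}
D_e = 4
E = rng.normal(size=(D_e, len(vocab)))


def embed(tokens):
    """Non-contextual lookup: returns a (T, D_e) matrix of static embeddings."""
    ids = [vocab[t] for t in tokens]
    return E[:, ids].T


def toy_encoder(tokens):
    """Toy stand-in for f_enc: h_t is the mean of e_{t-1}, e_t, e_{t+1} (zero-padded)."""
    e = embed(tokens)
    padded = np.vstack([np.zeros(D_e), e, np.zeros(D_e)])
    return (padded[:-2] + padded[1:-1] + padded[2:]) / 3.0


s1 = ["the", "river", "bank"]
s2 = ["the", "bank", "approved", "the", "loan"]

# The static vector for "bank" is identical in both sentences ...
static_same = np.allclose(embed(s1)[2], embed(s2)[1])
# ... while the contextual vector depends on the neighbouring tokens.
contextual_same = np.allclose(toy_encoder(s1)[2], toy_encoder(s2)[1])
```

Here `static_same` holds because both occurrences of "bank" read the same column of `E`, whereas the contextual vectors differ because their neighbours differ.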


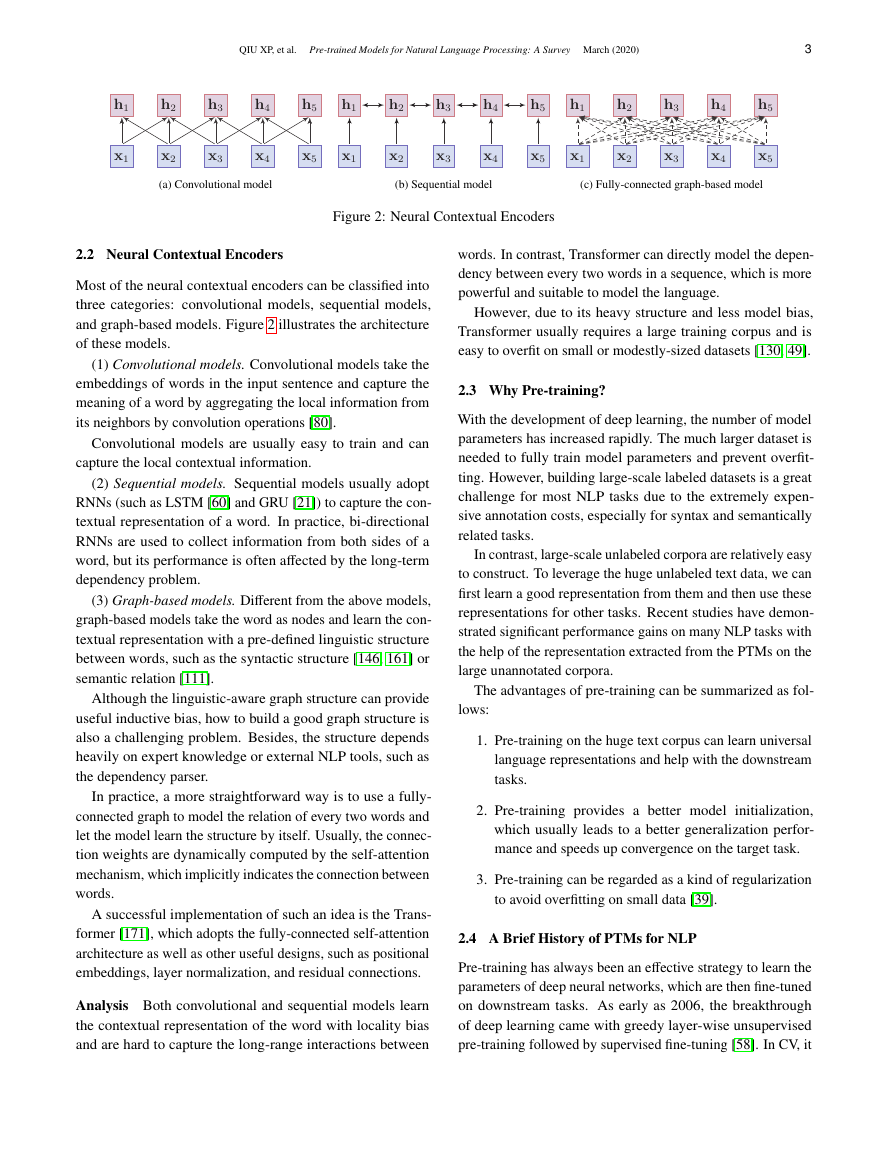

(a) Convolutional model

(b) Sequential model

(c) Fully-connected graph-based model

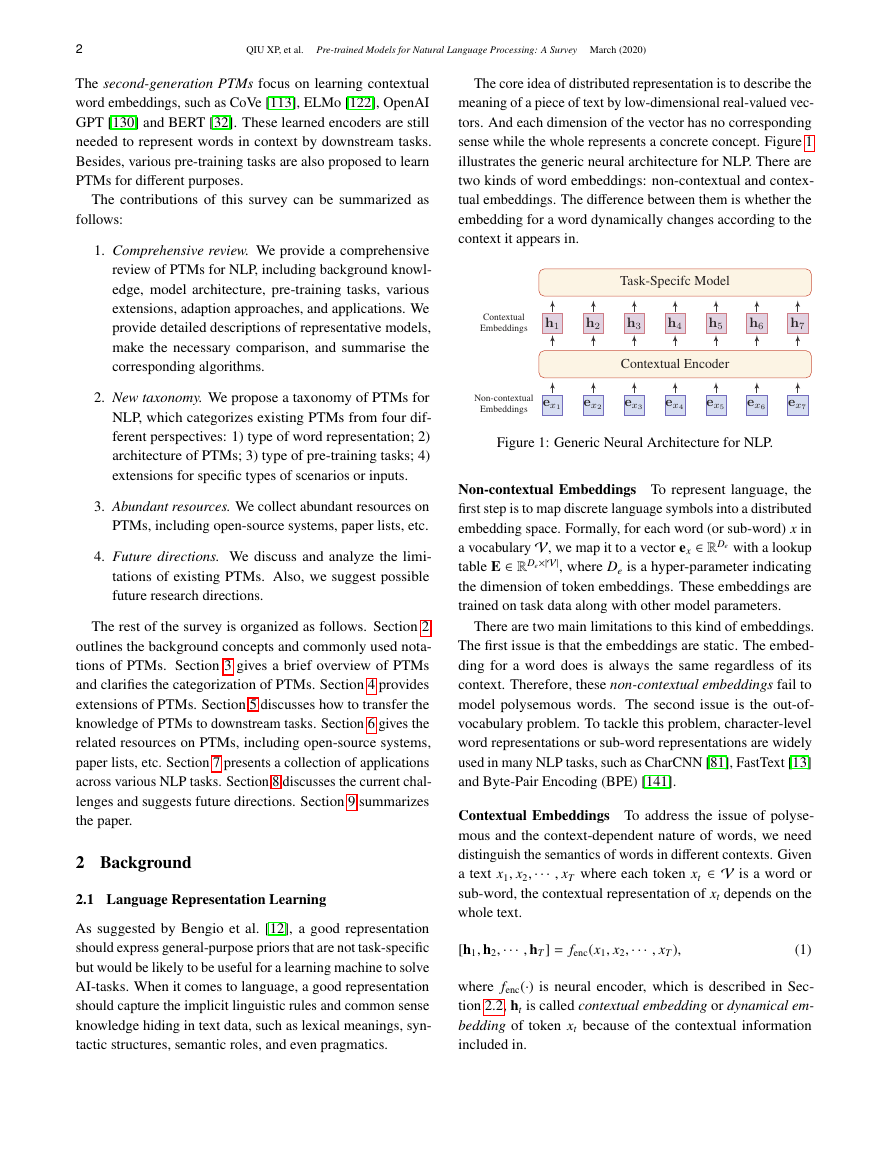

Figure 2: Neural Contextual Encoders

2.2 Neural Contextual Encoders

Most of the neural contextual encoders can be classified into

three categories: convolutional models, sequential models,

and graph-based models. Figure 2 illustrates the architecture

of these models.

(1) Convolutional models. Convolutional models take the

embeddings of words in the input sentence and capture the

meaning of a word by aggregating the local information from

its neighbors by convolution operations [80].

Convolutional models are usually easy to train and can

capture the local contextual information.

(2) Sequential models. Sequential models usually adopt RNNs (such as LSTM [60] and GRU [21]) to capture the contextual representation of a word. In practice, bi-directional RNNs are used to collect information from both sides of a word, but their performance is often affected by the long-term dependency problem.

(3) Graph-based models. Different from the above models, graph-based models take words as nodes and learn the contextual representation with a pre-defined linguistic structure between words, such as the syntactic structure [146, 161] or semantic relation [111].

Although the linguistic-aware graph structure can provide

useful inductive bias, how to build a good graph structure is

also a challenging problem. Besides, the structure depends

heavily on expert knowledge or external NLP tools, such as

the dependency parser.

In practice, a more straightforward way is to use a fully-

connected graph to model the relation of every two words and

let the model learn the structure by itself. Usually, the connec-

tion weights are dynamically computed by the self-attention

mechanism, which implicitly indicates the connection between

words.

A successful implementation of such an idea is the Trans-

former [171], which adopts the fully-connected self-attention

architecture as well as other useful designs, such as positional

embeddings, layer normalization, and residual connections.
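The idea of a fully-connected graph whose edge weights are computed dynamically can be sketched with single-head scaled dot-product self-attention. This is a minimal sketch under stated assumptions: the projection matrices `W_q`, `W_k`, `W_v` and the random inputs are hypothetical, and positional embeddings, layer normalization, residual connections, and multiple heads are omitted.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a (T, D) input.

    Returns the (T, D) outputs and the (T, T) attention matrix A, where
    A[i, j] is the dynamically computed connection weight from word i to word j.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    A = softmax(scores, axis=-1)
    return A @ V, A


rng = np.random.default_rng(0)
T, D = 5, 8  # sequence length and hidden size (illustrative values)
X = rng.normal(size=(T, D))
W_q, W_k, W_v = (rng.normal(size=(D, D)) for _ in range(3))
H, A = self_attention(X, W_q, W_k, W_v)
```

Each row of `A` sums to one, so every output vector is a weighted average over all positions in the sequence, which is what lets the model relate any two words directly.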

Analysis Both convolutional and sequential models learn the contextual representation of a word with a locality bias and struggle to capture long-range interactions between words. In contrast, Transformer can directly model the dependency between every two words in a sequence, which makes it more powerful and better suited to modeling language.

However, due to its heavy structure and weaker inductive bias, Transformer usually requires a large training corpus and tends to overfit on small or modestly-sized datasets [130, 49].

2.3 Why Pre-training?

With the development of deep learning, the number of model parameters has increased rapidly. A much larger dataset is needed to fully train the model parameters and prevent overfitting. However, building large-scale labeled datasets is a great challenge for most NLP tasks due to the extremely expensive annotation costs, especially for syntax- and semantics-related tasks.

In contrast, large-scale unlabeled corpora are relatively easy

to construct. To leverage the huge unlabeled text data, we can

first learn a good representation from them and then use these

representations for other tasks. Recent studies have demon-

strated significant performance gains on many NLP tasks with

the help of the representation extracted from the PTMs on the

large unannotated corpora.

The advantages of pre-training can be summarized as fol-

lows:

1. Pre-training on the huge text corpus can learn universal

language representations and help with the downstream

tasks.

2. Pre-training provides a better model initialization, which usually leads to a better generalization performance and speeds up convergence on the target task.

3. Pre-training can be regarded as a kind of regularization

to avoid overfitting on small data [39].

2.4 A Brief History of PTMs for NLP

Pre-training has always been an effective strategy to learn the

parameters of deep neural networks, which are then fine-tuned

on downstream tasks. As early as 2006, the breakthrough

of deep learning came with greedy layer-wise unsupervised

pre-training followed by supervised fine-tuning [58]. In CV, it


has been common practice to pre-train models on the huge ImageNet

corpus, and then fine-tune further on smaller data for different

tasks. This is much better than a random initialization because

the model learns general image features which can then be

used in various vision tasks.

In NLP, PTMs trained on large corpora have also proven beneficial for downstream NLP tasks, from shallow word embeddings to deep neural models.

2.4.1 Pre-trained word embeddings

Representing words as dense vectors has a long history [56]. The “modern” word embedding was introduced in the pioneering work on the neural network language model (NNLM) [11]. Collobert et al. [24] showed that word embeddings pre-trained on unlabelled data can significantly improve many NLP tasks. To address the computational complexity, they learned word embeddings with a pairwise ranking task instead of language modeling. Their work is the first attempt to obtain generic word embeddings useful for other tasks from unlabeled data.

Mikolov et al. [116] showed that there is no need for deep neural networks to build good word embeddings. They proposed two shallow architectures: Continuous Bag-of-Words (CBOW) and Skip-Gram (SG) models. Although the proposed models are simple and shallow, they can still learn effective word embeddings that capture latent syntactic and semantic similarities. Word2vec is one of the most popular implementations of these models and makes pre-trained word embeddings accessible for different tasks in NLP. Besides, GloVe [120] is also a widely-used model for obtaining pre-trained word embeddings, which are computed from global word-word co-occurrence statistics of a corpus.

Although pre-trained word embeddings have been shown ef-

fective in NLP tasks, they are context-independent and mostly

trained by shallow models. When used in a downstream task,

the rest of the whole model still needs to be learned from

scratch.

During the same time period, many researchers also tried to learn embeddings of paragraphs, sentences, or documents, such as paragraph vector [89], Skip-thought vectors [82], Context2Vec [114] and so on. Different from their modern successors, these sentence embedding models try to encode input sentences into a fixed-dimensional vector representation, rather than the contextual representation for each token.

2.4.2 Pre-trained contextual encoders

Since most NLP tasks are beyond word-level, it is natural to pre-train the neural encoders on sentence-level or higher. The output vectors of neural encoders are also called contextual word embeddings since they represent the word semantics depending on its context.

McCann et al. [113] pre-trained a deep LSTM encoder from an attentional sequence-to-sequence model with machine translation (MT). The context vectors (CoVe) output by the pretrained encoder can improve the performance of a wide variety of common NLP tasks. Peters et al. [122] pre-trained a 2-layer LSTM encoder with a bidirectional language model (BiLM), consisting of a forward LM and a backward LM. The contextual representations output by the pre-trained BiLM, ELMo (Embeddings from Language Models), are shown to bring large improvements on a broad range of NLP tasks. Akbik et al. [1] captured word meaning with contextual string embeddings pre-trained with a character-level LM.

However, these PTMs are usually used as feature extractors to produce the contextual word embeddings, which are fed into the main model for downstream tasks. Their parameters are fixed, and the remaining parameters of the main model are still trained from scratch.

Ramachandran et al. [134] found that seq2seq models can be significantly improved by unsupervised pre-training. The weights of both encoder and decoder are initialized with the pre-trained weights of two language models and then fine-tuned with labeled data. ULMFiT (Universal Language Model Fine-tuning) [62] attempted to fine-tune a pre-trained LM for text classification (TC) and achieved state-of-the-art results on six widely-used TC datasets. ULMFiT consists of 3 phases: 1) pre-training the LM on general-domain data; 2) fine-tuning the LM on target data; 3) fine-tuning on the target task. ULMFiT also investigates some effective fine-tuning strategies, including discriminative fine-tuning, slanted triangular learning rates, and gradual unfreezing. Since ULMFiT, fine-tuning has become the mainstream approach to adapt PTMs for downstream tasks.

More recently, very deep PTMs have shown their powerful ability in learning universal language representations: e.g., OpenAI GPT (Generative Pre-training) [130] and BERT (Bidirectional Encoder Representations from Transformers) [32]. Besides LM, an increasing number of self-supervised tasks (see Section 3.1) have been proposed to make PTMs capture more knowledge from large-scale text corpora.

3 Overview of PTMs

The major differences between PTMs are the usages of contextual encoders, pre-training tasks, and purposes. We have briefly introduced the architectures of contextual encoders in Section 2.2. In this section, we focus on the description of pre-training tasks and give a taxonomy of PTMs.

3.1 Pre-training Tasks

The pre-training tasks are crucial for learning the universal

representation of language. Usually, these pre-training tasks

should be challenging and have substantial training data. In

this section, we summarize the pre-training tasks into three

categories$^{1)}$: supervised learning, unsupervised learning, and

self-supervised learning.

1. Supervised learning is to learn a function that maps an

input to an output based on training data consisting of

input-output pairs.

2. Unsupervised learning is to find some intrinsic knowl-

edge, such as clusters, densities, latent representations,

from unlabeled data.

3. Self-Supervised learning (SSL) is a blend of supervised learning and unsupervised learning. The key idea of SSL is to predict any part of the input from other parts in some form. For example, the masked language model (MLM) is a self-supervised task that attempts to predict the masked words in a sentence given the remaining words.

In CV, many PTMs are trained on large supervised training

sets like ImageNet. However, in the NLP field, the datasets of

most supervised tasks are not large enough to train a good

PTM. The only exception is machine translation (MT). A

large-scale MT dataset, WMT 2017, consists of more than

7 million sentence pairs. Besides, MT is one of the most

challenging tasks in NLP, and an encoder pretrained on MT

can benefit a variety of downstream NLP tasks. As a success-

ful PTM, CoVe [113] is an encoder pretrained on MT task

and improves a wide variety of common NLP tasks: senti-

ment analysis (SST, IMDb), question classification (TREC),

entailment (SNLI), and question answering (SQuAD).

The pre-training tasks widely-used in existing PTMs are

listed as follows:

3.1.1 Language Modeling (LM)

The most common unsupervised task in NLP is probabilistic

language modeling (LM), which is a classic probabilistic den-

sity estimation problem. Although LM is a general concept,

in practice, LM often refers in particular to auto-regressive

LM or unidirectional LM.

Given a text sequence $\mathbf{x}_{1:T} = [x_1, x_2, \cdots, x_T]$, its joint probability $p(\mathbf{x}_{1:T})$ can be decomposed as

$$p(\mathbf{x}_{1:T}) = \prod_{t=1}^{T} p(x_t \mid \mathbf{x}_{0:t-1}), \tag{2}$$

where $x_0$ is a special token indicating the beginning of the sequence.

The conditional probability $p(x_t \mid \mathbf{x}_{0:t-1})$ can be modeled by a probability distribution over the vocabulary given the linguistic context $\mathbf{x}_{0:t-1}$. The context $\mathbf{x}_{0:t-1}$ is modeled by a neural encoder $f_{\mathrm{enc}}(\cdot)$, and the conditional probability is

$$p(x_t \mid \mathbf{x}_{0:t-1}) = g_{\mathrm{LM}}\big(f_{\mathrm{enc}}(\mathbf{x}_{0:t-1})\big), \tag{3}$$

where $g_{\mathrm{LM}}(\cdot)$ is the prediction layer.

Given a huge corpus, we can train the entire network with maximum likelihood estimation (MLE).
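The chain-rule factorization of the joint probability can be made concrete with a toy example. In this sketch a hand-written bigram table (an assumption for illustration, with made-up probabilities) stands in for the neural encoder and prediction layer, which would condition on the full prefix rather than only on the previous token.

```python
import math

BOS = "<s>"  # x_0, the special begin-of-sequence token

# Hypothetical conditional distributions p(x_t | x_{t-1}).
cond = {
    BOS: {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sleeps": 1.0},
    "dog": {"sleeps": 1.0},
}


def log_likelihood(tokens):
    """log p(x_{1:T}) as the sum over t of log p(x_t | context), starting from BOS."""
    prev, total = BOS, 0.0
    for tok in tokens:
        total += math.log(cond[prev][tok])
        prev = tok
    return total


ll = log_likelihood(["the", "cat", "sleeps"])  # log(0.6 * 0.5 * 1.0)
```

Maximum likelihood training adjusts the model parameters to maximize the sum of such log-likelihoods over all sequences in the corpus.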

A drawback of unidirectional LM is that the representation

of each token encodes only the leftward context tokens and it-

self. However, better contextual representations of text should

encode contextual information from both directions. An im-

proved solution is bidirectional LM (BiLM), which consists

of two unidirectional LMs: a forward left-to-right LM and a

backward right-to-left LM.

For BiLM, Baevski et al. [5] proposed a two-tower model in which the forward tower operates the left-to-right LM and the backward tower operates the right-to-left LM.

3.1.2 Masked Language Modeling (MLM)

Masked language modeling (MLM) was first proposed by Taylor [165], who referred to it as a Cloze task. Devlin et al. [32] adapted this task as a novel pre-training task to overcome the drawback of the standard unidirectional LM. Loosely speaking, MLM first masks out some tokens from the input sentences and then trains the model to predict the masked tokens from the remaining tokens. However, this pre-training method creates a mismatch between the pre-training phase and the fine-tuning phase, because the mask token does not appear during fine-tuning. Empirically, to deal with this issue, Devlin et al. [32] replaced each selected token with the special [MASK] token 80% of the time, a random token 10% of the time, and the original token 10% of the time.
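The 80%/10%/10% replacement scheme can be sketched as follows. This is a simplified illustration, not the reference implementation: the function name `mlm_mask`, the independent per-token selection, and the default selection rate of 15% (the rate reported for BERT, which is not stated in the text above) are assumptions.

```python
import random

MASK = "[MASK]"


def mlm_mask(tokens, vocab, select_prob=0.15, seed=0):
    """Corrupt `tokens` for MLM.

    Returns (corrupted, targets), where targets[i] is the original token if
    position i was selected for prediction and None otherwise.
    """
    rng = random.Random(seed)
    corrupted, targets = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= select_prob:
            continue  # position not selected; left untouched and not predicted
        targets[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK  # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return corrupted, targets


tokens = ["the", "cat", "sat", "on", "the", "mat"] * 50
corrupted, targets = mlm_mask(tokens, vocab=sorted(set(tokens)))
```

Because some selected positions keep their original token or receive a random one, the model cannot rely on seeing [MASK] to know which positions it must predict.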

Sequence-to-Sequence MLM (Seq2Seq MLM) MLM is usually solved as a classification problem. We feed the masked sequences to a neural encoder, whose output vectors are further fed into a softmax classifier to predict the masked tokens. Alternatively, we can use an encoder-decoder (aka sequence-to-sequence) architecture for MLM, in which the encoder is fed a masked sequence and the decoder sequentially produces the masked tokens in an auto-regressive fashion. We refer to this kind of MLM as sequence-to-sequence MLM (Seq2Seq MLM), which is used in MASS [147] and T5 [132]. Seq2Seq MLM can benefit Seq2Seq-style downstream tasks, such as question answering, summarization and machine translation.

1) Indeed, it is hard to clearly distinguish unsupervised learning from self-supervised learning. For clarification, we use “unsupervised learning” to refer to learning without human-annotated supervised labels.

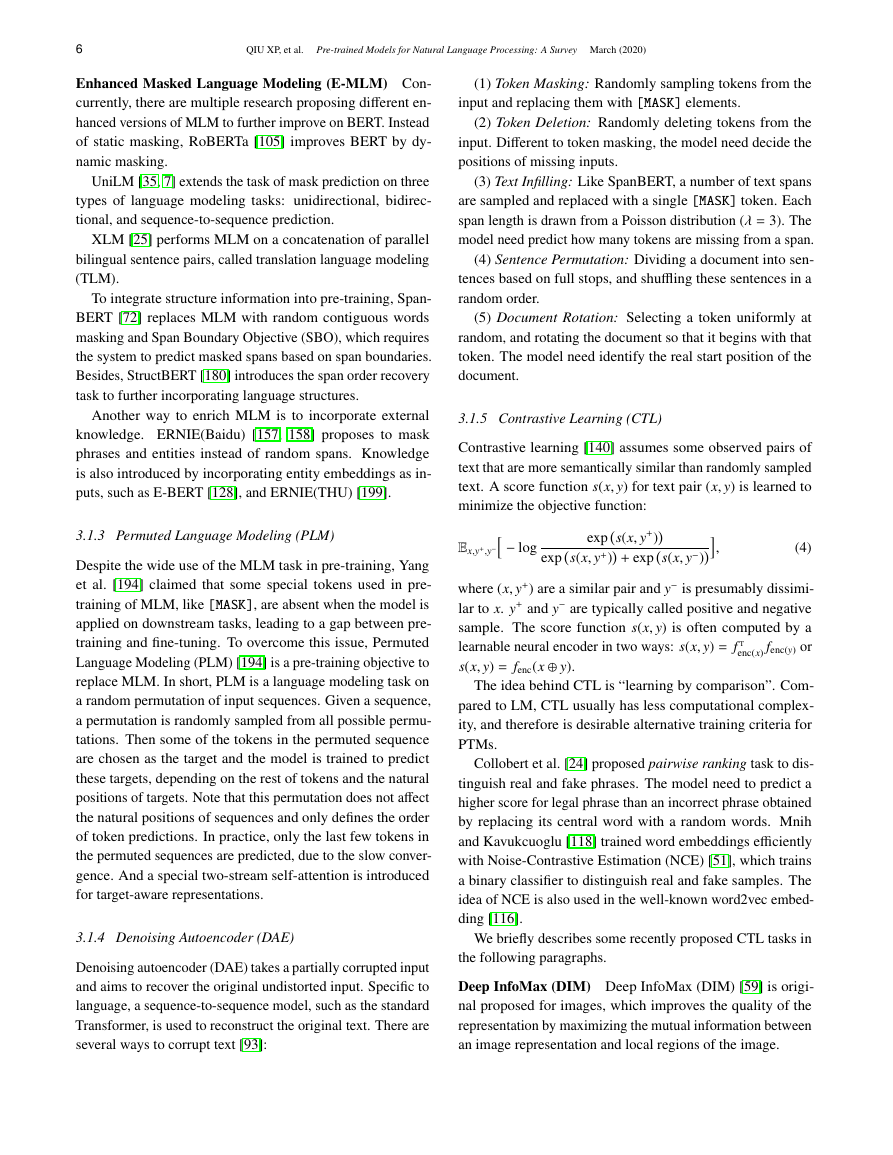

Enhanced Masked Language Modeling (E-MLM) Concurrently, multiple studies have proposed enhanced versions of MLM to further improve on BERT. Instead of static masking, RoBERTa [105] improves BERT by dynamic masking.

UniLM [35, 7] extends the task of mask prediction to three types of language modeling tasks: unidirectional, bidirectional, and sequence-to-sequence prediction.

XLM [25] performs MLM on a concatenation of parallel

bilingual sentence pairs, called translation language modeling

(TLM).

To integrate structure information into pre-training, SpanBERT [72] replaces MLM with random contiguous words masking and Span Boundary Objective (SBO), which requires the system to predict masked spans based on span boundaries. Besides, StructBERT [180] introduces the span order recovery task to further incorporate language structures.

Another way to enrich MLM is to incorporate external

knowledge. ERNIE(Baidu) [157, 158] proposes to mask

phrases and entities instead of random spans. Knowledge

is also introduced by incorporating entity embeddings as in-

puts, such as E-BERT [128], and ERNIE(THU) [199].

3.1.3 Permuted Language Modeling (PLM)

Despite the wide use of the MLM task in pre-training, Yang

et al. [194] claimed that some special tokens used in pre-

training of MLM, like [MASK], are absent when the model is

applied on downstream tasks, leading to a gap between pre-

training and fine-tuning. To overcome this issue, Permuted

Language Modeling (PLM) [194] is a pre-training objective to

replace MLM. In short, PLM is a language modeling task on

a random permutation of input sequences. Given a sequence,

a permutation is randomly sampled from all possible permu-

tations. Then some of the tokens in the permuted sequence

are chosen as the target and the model is trained to predict

these targets, depending on the rest of tokens and the natural

positions of targets. Note that this permutation does not affect

the natural positions of sequences and only defines the order

of token predictions. In practice, only the last few tokens in

the permuted sequences are predicted, due to the slow conver-

gence. And a special two-stream self-attention is introduced

for target-aware representations.
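The way a sampled permutation defines prediction targets and their visible context can be sketched as follows. The helper `plm_targets` and its parameter `num_predict` are hypothetical names; the sketch only constructs the index sets and leaves out the two-stream self-attention.

```python
import random


def plm_targets(tokens, num_predict, seed=0):
    """Sample a factorization order and build prediction targets.

    Only the last `num_predict` positions of the sampled order are predicted.
    Each target may attend to the positions that precede it in that order;
    the tokens themselves stay at their natural positions.
    """
    rng = random.Random(seed)
    order = list(range(len(tokens)))
    rng.shuffle(order)
    examples = []
    for k in range(len(order) - num_predict, len(order)):
        target_pos = order[k]
        context_pos = sorted(order[:k])  # natural positions visible to this target
        examples.append((target_pos, context_pos))
    return order, examples


tokens = ["New", "York", "is", "a", "big", "city"]
order, examples = plm_targets(tokens, num_predict=2)
```

Each target sees a different subset of the sequence, which may include tokens on both its left and its right, without any [MASK] symbol appearing in the input.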

3.1.4 Denoising Autoencoder (DAE)

Denoising autoencoder (DAE) takes a partially corrupted input

and aims to recover the original undistorted input. Specific to

language, a sequence-to-sequence model, such as the standard

Transformer, is used to reconstruct the original text. There are

several ways to corrupt text [93]:

(1) Token Masking: Randomly sampling tokens from the input and replacing them with [MASK] elements.

(2) Token Deletion: Randomly deleting tokens from the input. Unlike token masking, the model needs to decide the positions of the missing inputs.

(3) Text Infilling: Like SpanBERT, a number of text spans are sampled and each is replaced with a single [MASK] token. Each span length is drawn from a Poisson distribution ($\lambda = 3$). The model needs to predict how many tokens are missing from a span.

(4) Sentence Permutation: Dividing a document into sentences based on full stops and shuffling these sentences in a random order.

(5) Document Rotation: Selecting a token uniformly at random and rotating the document so that it begins with that token. The model needs to identify the real start position of the document.
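The five corruption schemes above can be sketched as simple list operations. The function names and the corruption probability `p` are assumptions for illustration; for text infilling, the span start and length are passed in explicitly, whereas in the scheme described above the length would be drawn from a Poisson distribution.

```python
import random

MASK = "[MASK]"


def token_masking(tokens, p, rng):
    """Replace each token with [MASK] independently with probability p."""
    return [MASK if rng.random() < p else t for t in tokens]


def token_deletion(tokens, p, rng):
    """Delete each token independently with probability p."""
    return [t for t in tokens if rng.random() >= p]


def text_infilling(tokens, start, length):
    """Replace tokens[start:start+length] with a single [MASK] token."""
    return tokens[:start] + [MASK] + tokens[start + length:]


def sentence_permutation(sentences, rng):
    """Shuffle the sentences of a document into a random order."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


def document_rotation(tokens, rng):
    """Rotate the document so that it begins with a uniformly chosen token."""
    k = rng.randrange(len(tokens))
    return tokens[k:] + tokens[:k]


rng = random.Random(0)
toks = "a b c d e f".split()
infilled = text_infilling(toks, start=1, length=3)  # ['a', '[MASK]', 'e', 'f']
```

In every case the training target is the original, uncorrupted sequence, so the model must recover both the content and, for deletion and infilling, the number of missing tokens.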

3.1.5 Contrastive Learning (CTL)

Contrastive learning [140] assumes that some observed pairs of text are more semantically similar than randomly sampled text. A score function $s(x, y)$ for text pair $(x, y)$ is learned to minimize the objective function:

$$\mathbb{E}_{x, y^+, y^-}\left[-\log \frac{\exp\big(s(x, y^+)\big)}{\exp\big(s(x, y^+)\big) + \exp\big(s(x, y^-)\big)}\right], \tag{4}$$

where $(x, y^+)$ is a similar pair and $y^-$ is presumably dissimilar to $x$. $y^+$ and $y^-$ are typically called the positive and negative samples. The score function $s(x, y)$ is often computed by a learnable neural encoder in two ways: $s(x, y) = f_{\mathrm{enc}}(x)^{\mathsf{T}} f_{\mathrm{enc}}(y)$ or $s(x, y) = f_{\mathrm{enc}}(x \oplus y)$.
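For a single triple, the contrastive objective with the dot-product score can be computed as in the sketch below. The input vectors are hypothetical precomputed encodings; the sketch uses the identity $-\log\frac{e^{a}}{e^{a}+e^{b}} = \log(1 + e^{b-a})$ for numerical stability.

```python
import numpy as np


def score(fx, fy):
    """Dot-product score between two precomputed encodings."""
    return float(np.dot(fx, fy))


def contrastive_loss(fx, fy_pos, fy_neg):
    """Loss for one (x, y+, y-) triple, computed as log(1 + exp(s_neg - s_pos))."""
    s_pos, s_neg = score(fx, fy_pos), score(fx, fy_neg)
    return float(np.logaddexp(0.0, s_neg - s_pos))


# Hypothetical encodings: the positive sample is aligned with x, the negative is not.
fx = np.array([1.0, 0.0])
fy_pos = np.array([1.0, 0.0])
fy_neg = np.array([0.0, 1.0])
loss = contrastive_loss(fx, fy_pos, fy_neg)
```

The loss shrinks as the positive pair scores higher than the negative pair and equals $\log 2$ when the two scores are the same; the expectation in the objective corresponds to averaging this quantity over sampled triples.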

The idea behind CTL is “learning by comparison”. Compared to LM, CTL usually has less computational complexity and is therefore a desirable alternative training criterion for PTMs.

Collobert et al. [24] proposed a pairwise ranking task to distinguish real and fake phrases. The model needs to predict a higher score for a legal phrase than for an incorrect phrase obtained by replacing its central word with a random word. Mnih and Kavukcuoglu [118] trained word embeddings efficiently with Noise-Contrastive Estimation (NCE) [51], which trains a binary classifier to distinguish real and fake samples. The idea of NCE is also used in the well-known word2vec embedding [116].

We briefly describe some recently proposed CTL tasks in the following paragraphs.

Deep InfoMax (DIM) Deep InfoMax (DIM) [59] was originally proposed for images; it improves the quality of the representation by maximizing the mutual information between an image representation and local regions of the image.

Kong et al. [83] applied DIM to language representation learning. The global representation of a sequence $x$ is defined to be the hidden state of the first token (assumed to be a special start-of-sentence symbol) output by the contextual encoder $f_{\mathrm{enc}}(x)$. The objective of DIM is to assign a higher score to $f_{\mathrm{enc}}(x_{i:j})^{\mathsf{T}} f_{\mathrm{enc}}(\hat{x}_{i:j})$ than to $f_{\mathrm{enc}}(\tilde{x}_{i:j})^{\mathsf{T}} f_{\mathrm{enc}}(\hat{x}_{i:j})$, where $x_{i:j}$ denotes an n-gram$^{2)}$ span from $i$ to $j$ in $x$, $\hat{x}_{i:j}$ denotes a sentence masked at positions $i$ to $j$, and $\tilde{x}_{i:j}$ denotes a randomly-sampled negative n-gram from the corpus.

Replaced Token Detection (RTD) Replaced Token Detec-

tion (RTD) is the same as NCE but predicts whether a token

is replaced given its surrounding context.

CBOW with negative sampling (CBOW-NS) [116] can be viewed as a simple version of RTD, in which the negative samples are randomly sampled from the vocabulary with a simple proposal distribution.

ELECTRA [22] improves RTD by utilizing a generator to replace some tokens of a sequence. A generator $G$ and a discriminator $D$ are trained following a two-stage procedure: (1) Train only the generator with the MLM task for $n_1$ steps; (2) Initialize the weights of the discriminator with the weights of the generator, then train the discriminator with a discriminative task for $n_2$ steps, keeping $G$ frozen. Here the discriminative task is to judge whether each input token has been replaced by $G$ or not. The generator is discarded after pre-training, and only the discriminator is fine-tuned on downstream tasks.
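The discriminative task amounts to per-token binary classification. The sketch below shows only how the labels could be derived from an original sequence and its corrupted version; the helper `rtd_labels` and the example sentence are hypothetical, and the generator that would produce the replacements is not modeled.

```python
def rtd_labels(original, corrupted):
    """Per-token labels: 1 if the token differs from the original, else 0.

    A position where the replacement happens to equal the original token
    is labeled 0, since it cannot be told apart from an untouched token.
    """
    if len(original) != len(corrupted):
        raise ValueError("sequences must be aligned token by token")
    return [int(o != c) for o, c in zip(original, corrupted)]


original = ["the", "chef", "cooked", "the", "meal"]
corrupted = ["the", "chef", "ate", "the", "meal"]  # "ate" is a hypothetical generator sample
labels = rtd_labels(original, corrupted)  # [0, 0, 1, 0, 0]
```

Unlike MLM, every position contributes a training signal, not only the masked ones.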

RTD is also an alternative solution to the mismatch problem, in which the network sees [MASK] during pre-training but not when being fine-tuned on downstream tasks.

Similarly, WKLM [188] replaces words on the entity level instead of the token level. Concretely, WKLM replaces entity mentions with names of other entities of the same type and trains the model to distinguish whether the entity has been replaced.

Next Sentence Prediction (NSP) Punctuation marks are the natural separators of text data, so it is reasonable to construct pre-training methods that utilize them. Next Sentence Prediction (NSP) [32] is a good example. As its name suggests, NSP trains the model to distinguish whether two input sentences are continuous segments from the training corpus. Specifically, when choosing the sentence pair for each pre-training example, 50% of the time the second sentence is the actual next sentence of the first one, and 50% of the time it is a random sentence from the corpus. By doing so, it can teach the model to understand the relationship between two input sentences and thus benefit downstream tasks that are sensitive to this information, such as Question Answering and Natural Language Inference.

2) $n$ is drawn from a Gaussian distribution $\mathcal{N}(5, 1)$ clipped at 1 (minimum length) and 10 (maximum length).

However, the necessity of the NSP task has been ques-

tioned by subsequent work [72, 194, 105, 86]. Yang et al.

[194] found the impact of the NSP task unreliable, while Joshi

et al. [72] found that single-sentence training without the NSP

loss is superior to sentence-pair training with the NSP loss.

Moreover, Liu et al. [105] conducted further analysis for the

NSP task, which shows that when training with blocks of text

from a single document, removing the NSP loss matches or

slightly improves performance on downstream tasks.
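The NSP sampling scheme can be sketched as follows. The helper `nsp_example` and the toy data are hypothetical; a real pipeline would also ensure that the random sentence comes from a different document, which this sketch does not check.

```python
import random


def nsp_example(doc, corpus_sentences, rng):
    """Build one NSP example from a document given as a list of sentences.

    Returns (sentence_a, sentence_b, is_next): half of the time sentence_b
    is the true successor of sentence_a, otherwise a random corpus sentence.
    """
    i = rng.randrange(len(doc) - 1)
    if rng.random() < 0.5:
        return doc[i], doc[i + 1], True
    return doc[i], rng.choice(corpus_sentences), False


rng = random.Random(0)
doc = ["It rained all night.", "The river rose.", "The bridge was closed."]
other = ["Stocks fell sharply.", "The recipe needs two eggs."]
examples = [nsp_example(doc, other, rng) for _ in range(100)]
```

Because negative examples usually come from an unrelated context, they often differ from the first sentence in topic, which is one reason the task can be solved without modeling coherence.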

Sentence Order Prediction (SOP) To better model inter-sentence coherence, ALBERT [86] replaces the NSP loss with a sentence order prediction (SOP) loss. As conjectured in Lan et al. [86], NSP conflates topic prediction and coherence prediction in a single task. However, topic prediction is easier to learn than coherence prediction, which allows the model to make predictions relying merely on topic learning. Unlike NSP, SOP uses two consecutive segments from the same document as positive examples, and the same two consecutive segments but with their order swapped as negative examples. As a result, ALBERT consistently outperforms BERT on various downstream tasks.

StructBERT [180] and BERTje [29] also take SOP as their

self-supervised learning task.
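For comparison with NSP, the SOP example construction described above can be sketched as follows. This is a minimal illustration rather than ALBERT's actual data pipeline: the function name `make_sop_examples` is hypothetical, and emitting both the ordered and the swapped version of every pair (instead of sampling one of them) is an assumption made to keep the example deterministic.

```python
def make_sop_examples(segments):
    """Build (first, second, label) triples from one document's segments.

    segments: ordered list of text segments from a single document.
    Label 1 marks the original order, label 0 the swapped order.
    """
    examples = []
    for a, b in zip(segments, segments[1:]):
        examples.append((a, b, 1))  # consecutive segments, original order
        examples.append((b, a, 0))  # same two segments, order swapped
    return examples


sop_examples = make_sop_examples(["s1", "s2", "s3"])
```

Because both segments of every example come from the same document, topic cues alone cannot separate positives from negatives in this construction.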

3.1.6 Others

Apart from the above tasks, there are many other auxiliary pre-training tasks designed for specific downstream tasks, such as sentiment label-aware MLM for sentiment analysis [78], gap sentence generation (GSG) for text summarization [197], and disfluency detection [179]. In addition, some auxiliary pre-training tasks are designed to incorporate factual knowledge, such as denoising entity auto-encoding (dEA) in ERNIE(THU) [199] and entity linking (EL) in KnowBERT [123].

Furthermore, several tasks have been introduced to obtain multi-modal pre-trained models. Typically, tasks such as visual-based MLM, masked visual-feature modeling, and visual-linguistic matching are widely used in multi-modal pre-training, for example in VideoBERT [152], VisualBERT [95], and ViLBERT [107].

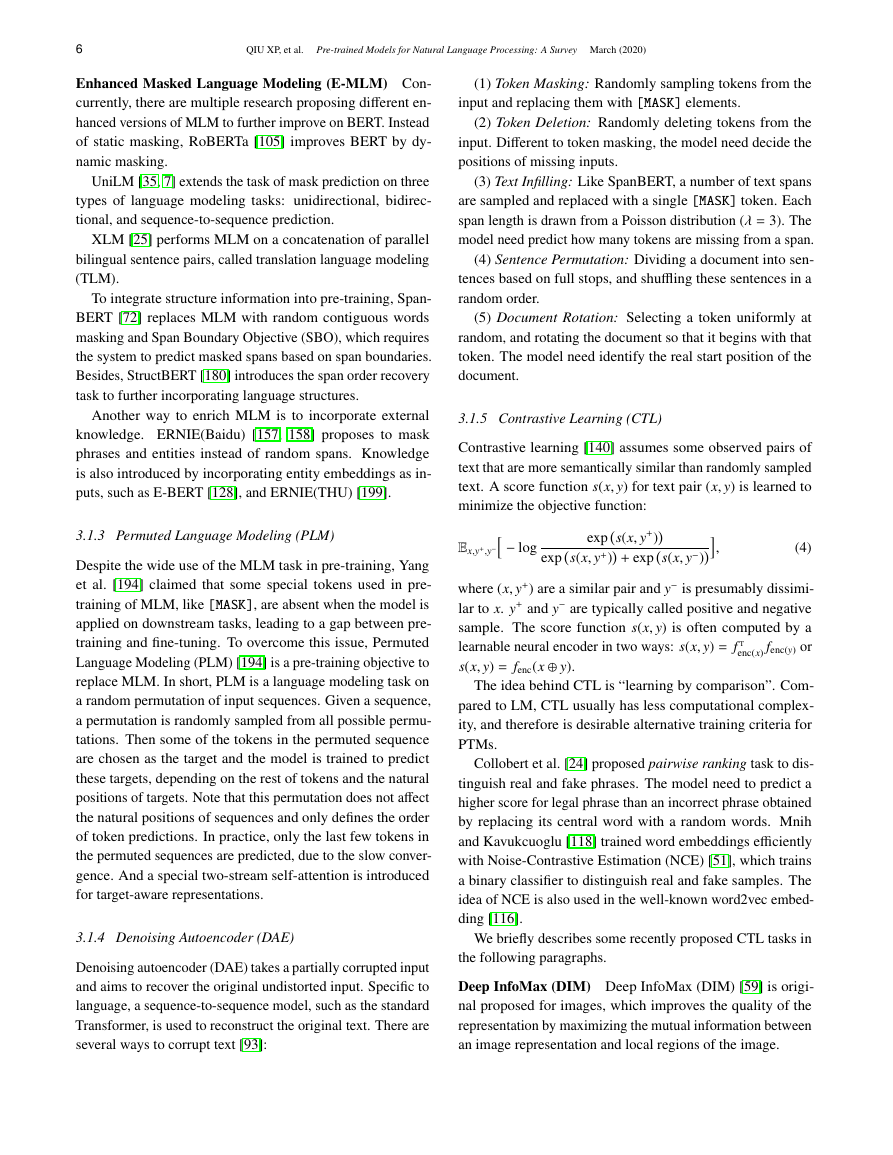

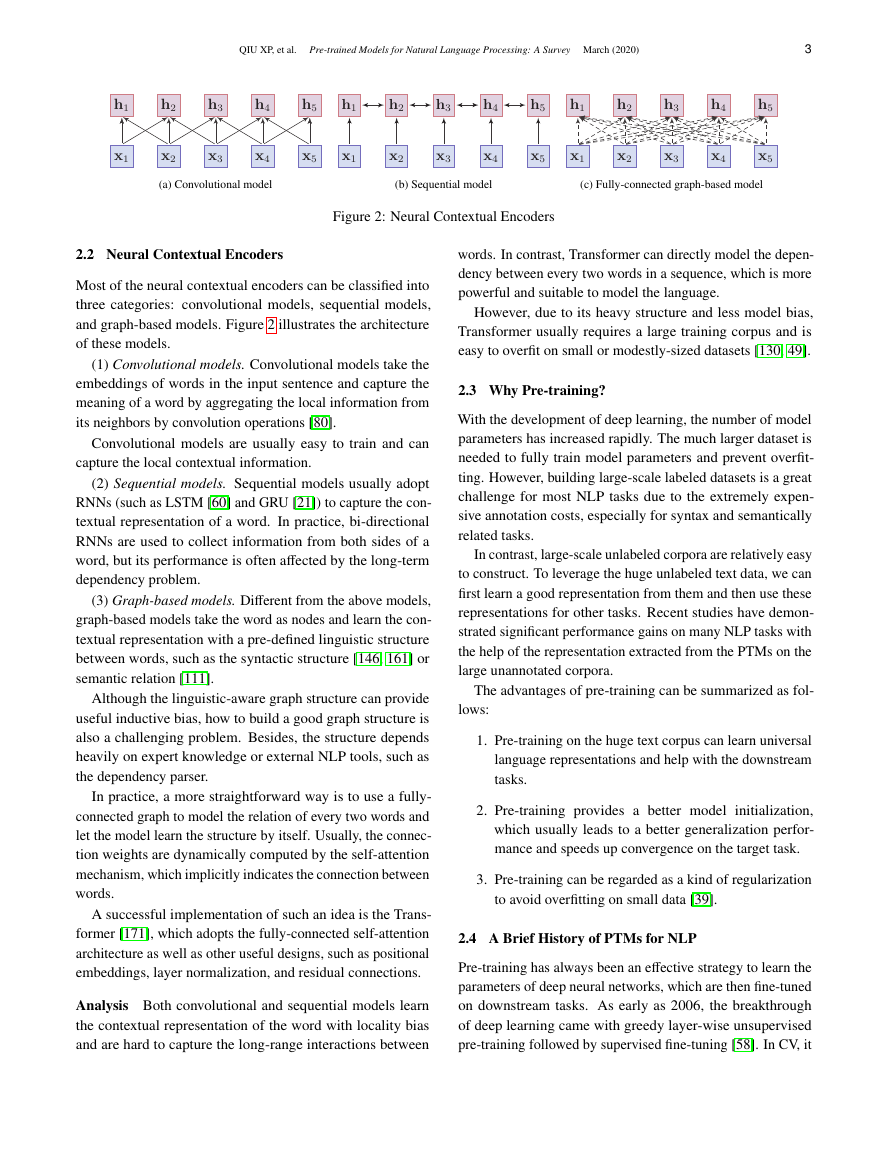

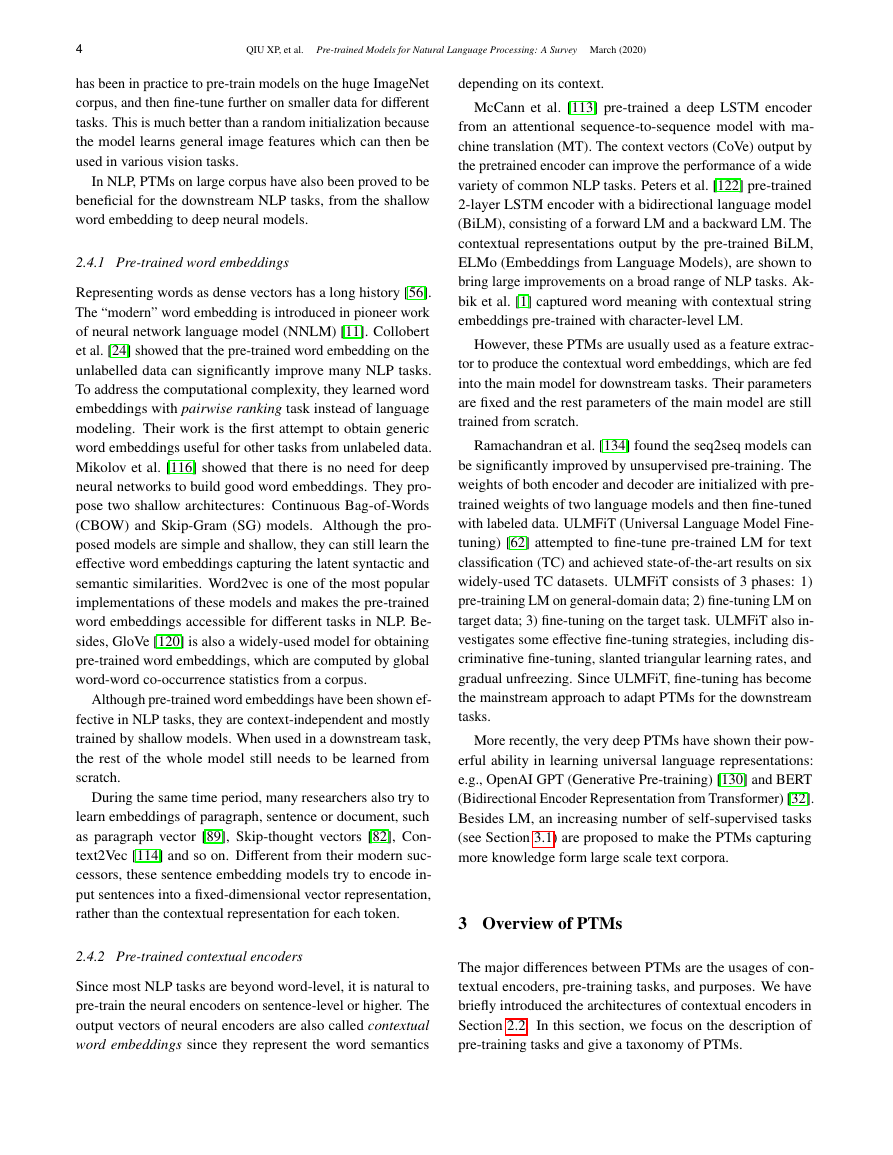

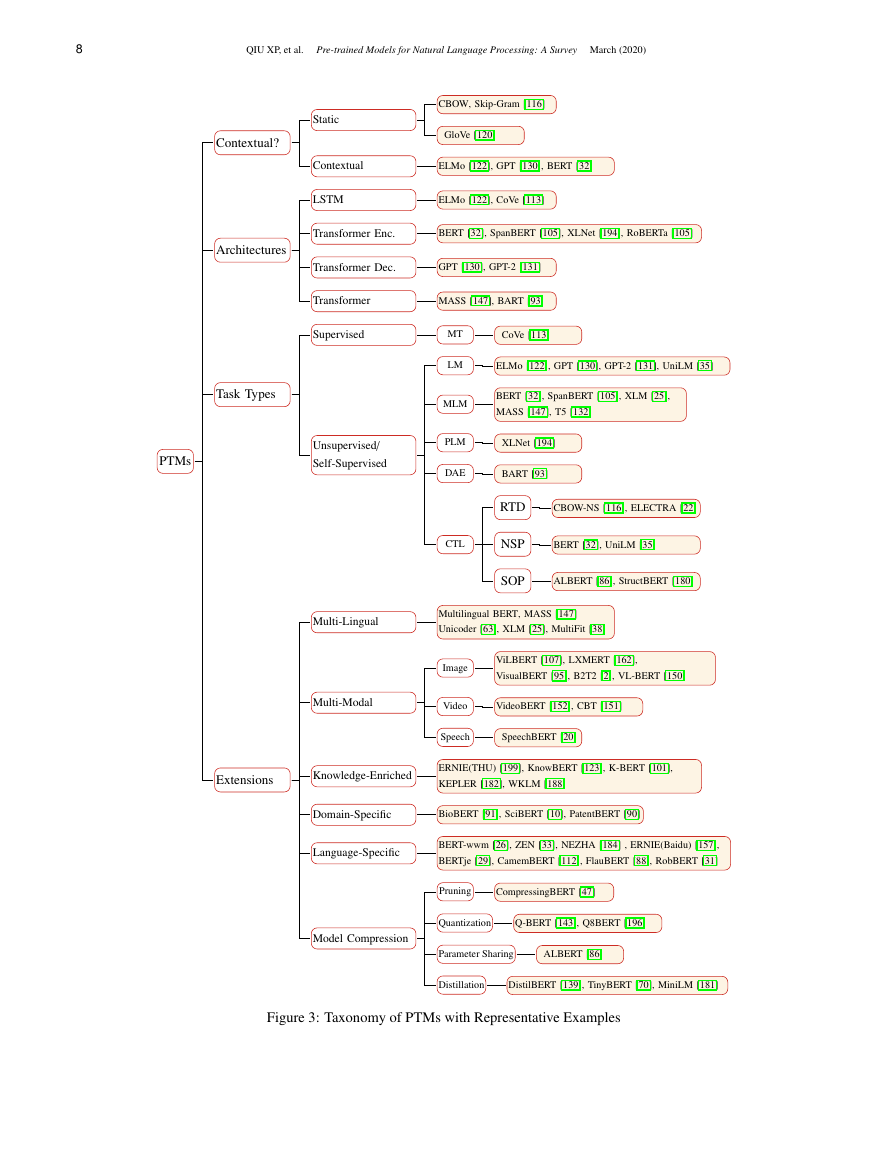

3.2 Taxonomy of PTMs

To clarify the relations among existing PTMs for NLP, we build a taxonomy of PTMs, which categorizes existing PTMs from four different perspectives: (1) the type of word representation used by PTMs, (2) the backbone network used by PTMs, (3) the type of pre-training tasks used by PTMs, and (4) the PTMs designed for specific types of scenarios or inputs. Figure 3 shows



PTMs

- Contextual?
  - Static: CBOW, Skip-Gram [116], GloVe [120]
  - Contextual: ELMo [122], GPT [130], BERT [32]
- Architectures
  - LSTM: ELMo [122], CoVe [113]
  - Transformer Enc.: BERT [32], SpanBERT [105], XLNet [194], RoBERTa [105]
  - Transformer Dec.: GPT [130], GPT-2 [131]
  - Transformer: MASS [147], BART [93]
- Task Types
  - Supervised
    - MT: CoVe [113]
  - Unsupervised/Self-Supervised
    - LM: ELMo [122], GPT [130], GPT-2 [131], UniLM [35]
    - MLM: BERT [32], SpanBERT [105], XLM [25], MASS [147], T5 [132]
    - PLM: XLNet [194]
    - DAE: BART [93]
    - CTL
      - RTD: CBOW-NS [116], ELECTRA [22]
      - NSP: BERT [32], UniLM [35]
      - SOP: ALBERT [86], StructBERT [180]
- Extensions
  - Multi-Lingual: Multilingual BERT, MASS [147], Unicoder [63], XLM [25], MultiFit [38]
  - Multi-Modal
    - Image: ViLBERT [107], LXMERT [162], VisualBERT [95], B2T2 [2], VL-BERT [150]
    - Video: VideoBERT [152], CBT [151]
    - Speech: SpeechBERT [20]
  - Knowledge-Enriched: ERNIE(THU) [199], KnowBERT [123], K-BERT [101], KEPLER [182], WKLM [188]
  - Domain-Specific: BioBERT [91], SciBERT [10], PatentBERT [90]
  - Language-Specific: BERT-wwm [26], ZEN [33], NEZHA [184], ERNIE(Baidu) [157], BERTje [29], CamemBERT [112], FlauBERT [88], RobBERT [31]
  - Model Compression
    - Pruning: CompressingBERT [47]
    - Quantization: Q-BERT [143], Q8BERT [196]
    - Parameter Sharing: ALBERT [86]
    - Distillation: DistilBERT [139], TinyBERT [70], MiniLM [181]

Figure 3: Taxonomy of PTMs with Representative Examples

