## An ffmpeg and SDL Tutorial


ffmpeg is a wonderful library for creating video applications or even general

purpose utilities. ffmpeg takes care of all the hard work of video processing

by doing all the decoding, encoding, muxing and demuxing for you. This can

make media applications much simpler to write. It's simple, written in C,

fast, and can decode almost any codec you'll find in use today, as well as

encode several other formats.

The only problem is that documentation is basically nonexistent. There is a

single tutorial that shows the basics of ffmpeg and auto-generated doxygen

documents. That's it. So, when I decided to learn about ffmpeg, and in the

process about how digital video and audio applications work, I decided to

document the process and present it as a tutorial.

There is a sample program that comes with ffmpeg called ffplay. It is a simple

C program that implements a complete video player using ffmpeg. This tutorial

will begin with an updated version of the original tutorial, written by Martin

Bohme (I have liberally borrowed from that work), and work from there

to developing a working video player, based on Fabrice Bellard's ffplay.c. In

each tutorial, I'll introduce a new idea (or two) and explain how we implement

it. Each tutorial will have a C file so you can download it, compile it, and

follow along at home. The source files will show you how the real program

works, how we move all the pieces around, as well as showing you the technical

details that are unimportant to the tutorial. By the time we are finished, we

will have a working video player written in less than 1000 lines of code!

In making the player, we will be using SDL to output the audio and video of

the media file. SDL is an excellent cross-platform multimedia library that's

used in MPEG playback software, emulators, and many video games. You will need

to download and install the SDL development libraries for your system in order

to compile the programs in this tutorial.

This tutorial is meant for people with a decent programming background. At the

very least you should know C and have some idea about concepts like queues,

mutexes, and so on. You should know some basics about multimedia; things like

waveforms and such, but you don't need to know a lot, as I explain a lot of

those concepts in this tutorial.

There are printable HTML files along the way as well as old school ASCII

files. You can also get a tarball of the text files and source code or just

the source. You can get a printable page of the full thing in HTML or in text.

**UPDATE:** I've fixed a code error in Tutorial 7 and 8, as well as adding

-lavutil.

Please feel free to email me with bugs, questions, comments, ideas, features,

whatever, at _dranger at gmail dot com_.

## Tutorial 01: Making Screencaps

Code: tutorial01.c

### Overview

Movie files have a few basic components. First, the file itself is called a

**container**, and the type of container determines where the information in

the file goes. Examples of containers are AVI and Quicktime. Next, you have a

bunch of **streams**; for example, you usually have an audio stream and a

video stream. (A "stream" is just a fancy word for "a succession of data

elements made available over time".) The data elements in a stream are called

**frames**. Each stream is encoded by a different kind of **codec**. The codec

defines how the actual data is COded and DECoded - hence the name CODEC.

Examples of codecs are DivX and MP3. **Packets** are then read from the

stream. Packets are pieces of data that can contain bits of data that are

decoded into raw frames that we can finally manipulate for our application.

For our purposes, each packet contains complete frames, or multiple frames in

the case of audio.
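To make the container/stream/packet relationship a bit more concrete, here is a minimal sketch in plain C. It does not use ffmpeg at all: `ToyPacket` and `toy_count_packets` are names I made up for illustration, and the only idea borrowed from the text is that packets from different streams sit interleaved in one container and are told apart by a stream index.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a packet; this is NOT an ffmpeg type. */
typedef struct {
    int stream_index;   /* which stream this packet belongs to */
    int size;           /* payload size in bytes */
} ToyPacket;

/* Count the packets of an interleaved "container" that belong to one stream. */
size_t toy_count_packets(const ToyPacket *packets, size_t n, int stream_index) {
    size_t count = 0;
    assert(packets != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (packets[i].stream_index == stream_index)
            count++;
    }
    return count;
}
```

Given an array where stream 0 is video and stream 1 is audio, calling `toy_count_packets(packets, n, 0)` picks out only the video packets, which is the same filtering we will do by hand when reading a real file.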

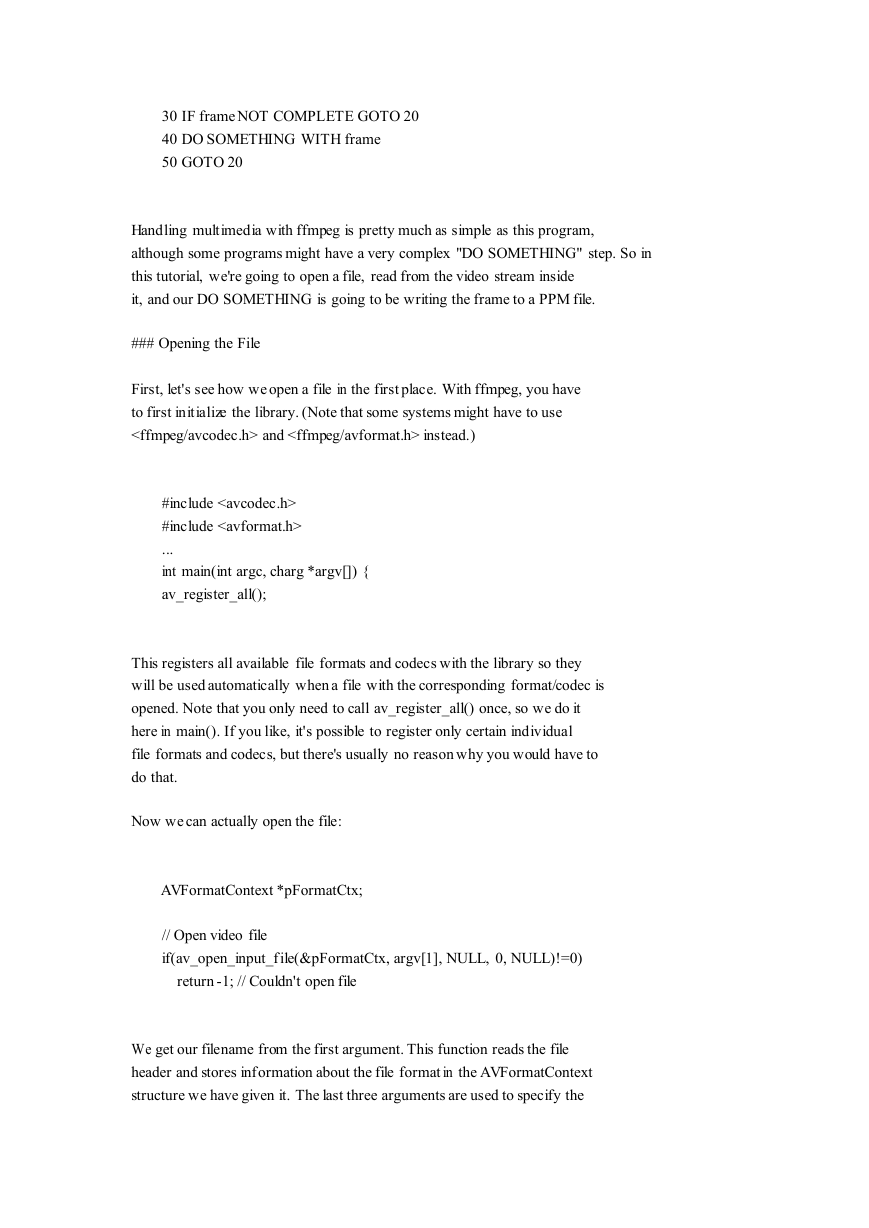

At its very basic level, dealing with video and audio streams is very easy:

10 OPEN video_stream FROM video.avi

20 READ packet FROM video_stream INTO frame

30 IF frame NOT COMPLETE GOTO 20

40 DO SOMETHING WITH frame

50 GOTO 20

Handling multimedia with ffmpeg is pretty much as simple as this program,

although some programs might have a very complex "DO SOMETHING" step. So in

this tutorial, we're going to open a file, read from the video stream inside

it, and our DO SOMETHING is going to be writing the frame to a PPM file.

### Opening the File

First, let's see how we open a file in the first place. With ffmpeg, you have

to first initialize the library. (Note that some systems might have to use

ffmpeg/avcodec.h and ffmpeg/avformat.h instead.)

#include <avcodec.h>

#include <avformat.h>

...

int main(int argc, char *argv[]) {

av_register_all();

This registers all available file formats and codecs with the library so they

will be used automatically when a file with the corresponding format/codec is

opened. Note that you only need to call av_register_all() once, so we do it

here in main(). If you like, it's possible to register only certain individual

file formats and codecs, but there's usually no reason why you would have to

do that.

Now we can actually open the file:

AVFormatContext *pFormatCtx;

// Open video file

if(av_open_input_file(&pFormatCtx, argv[1], NULL, 0, NULL)!=0)

return -1; // Couldn't open file

We get our filename from the first argument. This function reads the file

header and stores information about the file format in the AVFormatContext

structure we have given it. The last three arguments are used to specify the

file format, buffer size, and format options, but by setting these to NULL or

0, libavformat will auto-detect them.

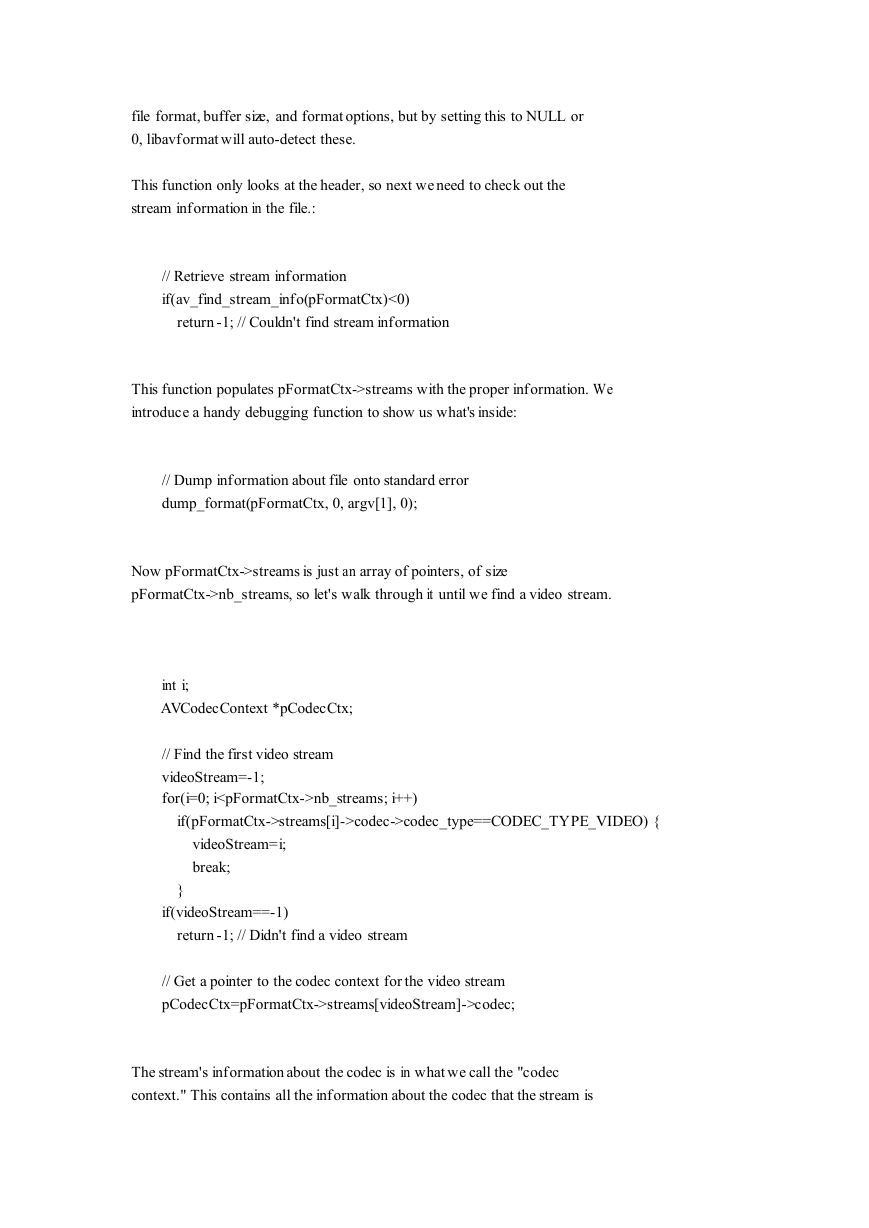

This function only looks at the header, so next we need to check out the

stream information in the file:

// Retrieve stream information

if(av_find_stream_info(pFormatCtx)<0)

return -1; // Couldn't find stream information

This function populates pFormatCtx->streams with the proper information. We

introduce a handy debugging function to show us what's inside:

// Dump information about file onto standard error

dump_format(pFormatCtx, 0, argv[1], 0);

Now pFormatCtx->streams is just an array of pointers, of size

pFormatCtx->nb_streams, so let's walk through it until we find a video stream.

int i, videoStream;

AVCodecContext *pCodecCtx;

// Find the first video stream

videoStream=-1;

for(i=0; i<pFormatCtx->nb_streams; i++)

if(pFormatCtx->streams[i]->codec->codec_type==CODEC_TYPE_VIDEO) {

videoStream=i;

break;

}

if(videoStream==-1)

return -1; // Didn't find a video stream

// Get a pointer to the codec context for the video stream

pCodecCtx=pFormatCtx->streams[videoStream]->codec;

The stream's information about the codec is in what we call the "codec

context." This contains all the information about the codec that the stream is

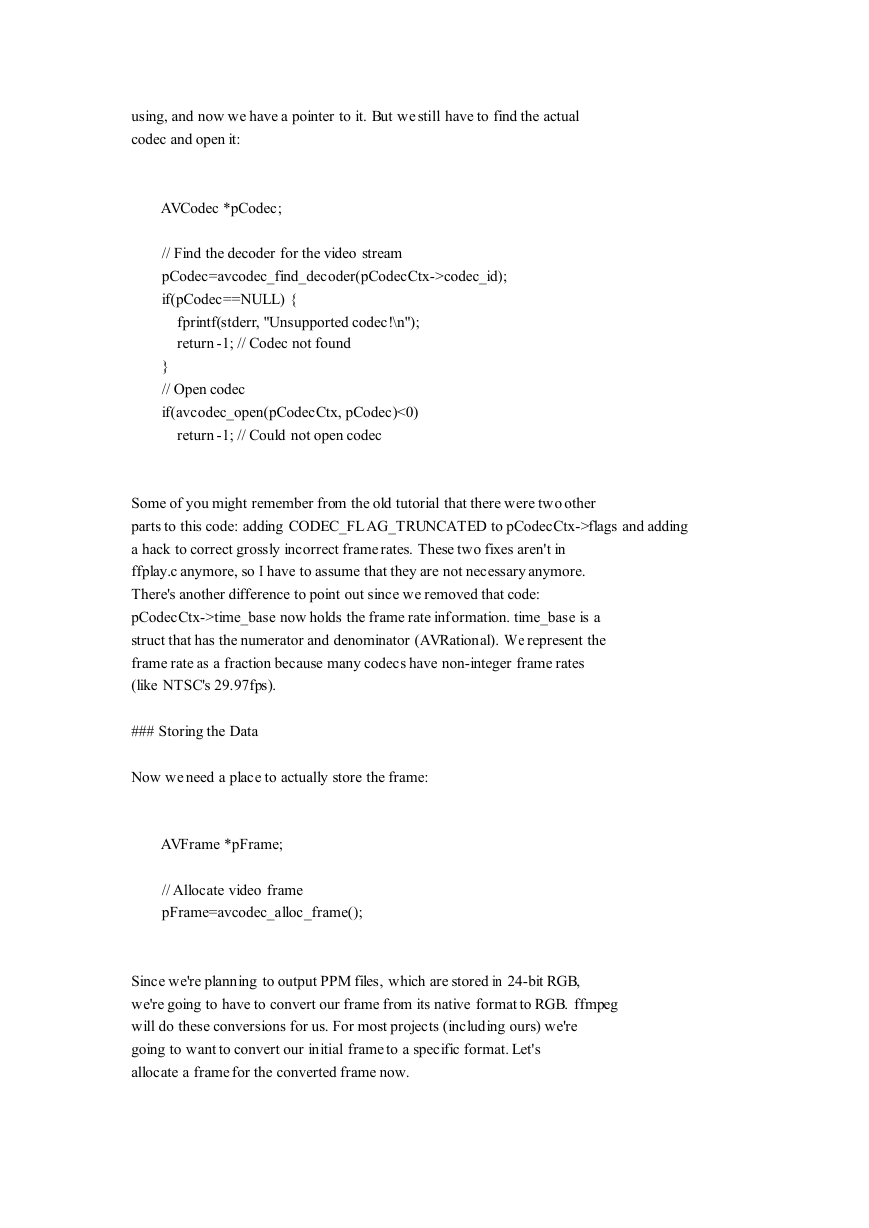

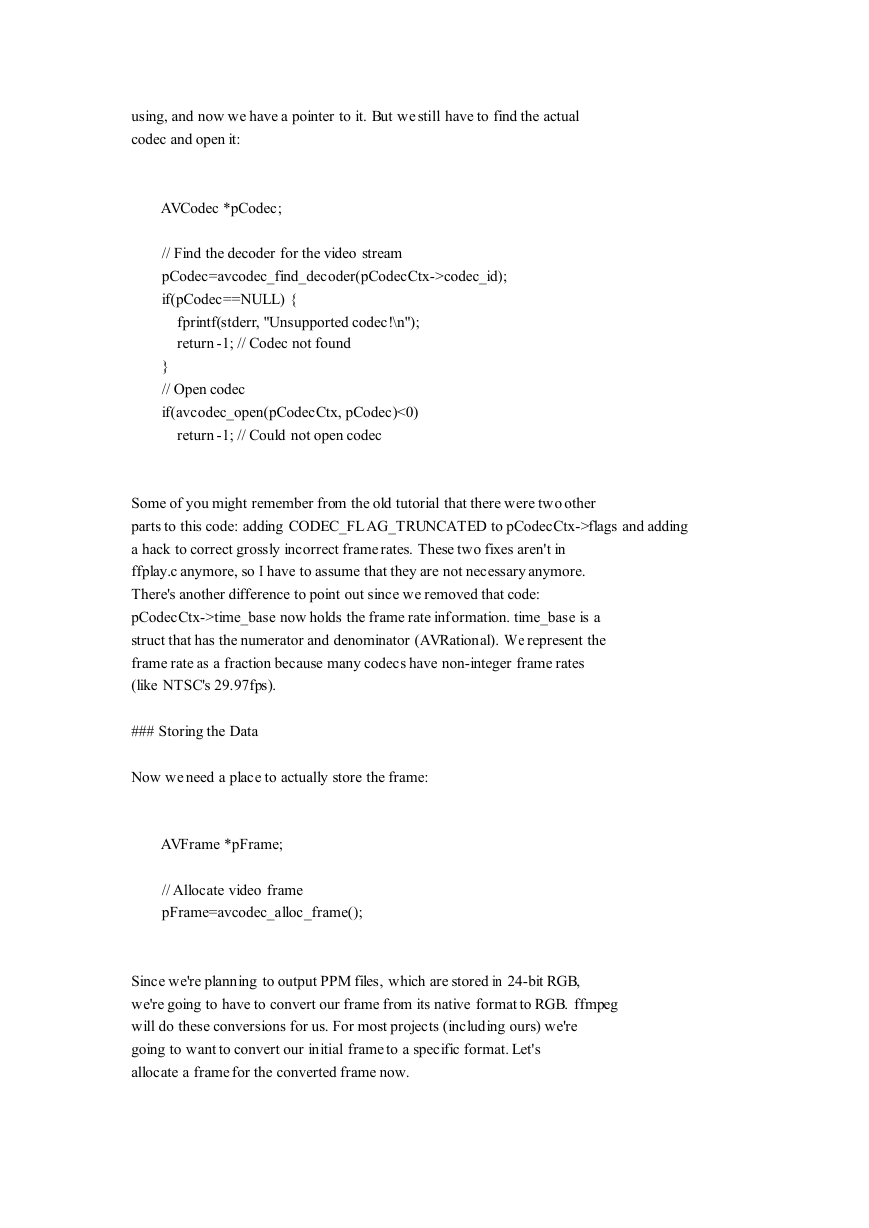

�using, and now we have a pointer to it. But westill have to find the actual

codec and open it:

AVCodec *pCodec;

// Find the decoder for the video stream

pCodec=avcodec_find_decoder(pCodecCtx->codec_id);

if(pCodec==NULL) {

fprintf(stderr, "Unsupported codec!\n");

return -1; // Codec not found

}

// Open codec

if(avcodec_open(pCodecCtx, pCodec)<0)

return -1; // Could not open codec

Some of you might remember from the old tutorial that there were two other

parts to this code: adding CODEC_FLAG_TRUNCATED to pCodecCtx->flags and adding

a hack to correct grossly incorrect framerates. These two fixes aren't in

ffplay.c anymore, so I have to assume that they are not necessary anymore.

There's another difference to point out since we removed that code:

pCodecCtx->time_base now holds the frame rate information. time_base is a

struct that has the numerator and denominator (AVRational). We represent the

frame rate as a fraction because many codecs have non-integer frame rates

(like NTSC's 29.97fps).
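Here is a small sketch of why a fraction is convenient. It uses a made-up `ToyRational` struct with the same numerator/denominator idea as AVRational, and it assumes the time base holds seconds per frame (for NTSC that would be 1001/30000), so the frame rate is its reciprocal. That interpretation is my assumption for the example; check what your codec actually puts in the field.

```c
#include <assert.h>

/* Hypothetical mirror of an AVRational-style fraction: num/den. */
typedef struct {
    int num;
    int den;
} ToyRational;

/* Convert a fraction to a double, e.g. for printing. */
double toy_q2d(ToyRational r) {
    assert(r.den != 0);
    return (double)r.num / (double)r.den;
}

/* Assuming time_base is seconds per frame, the frame rate is its reciprocal. */
double toy_frame_rate(ToyRational time_base) {
    assert(time_base.num != 0);
    return (double)time_base.den / (double)time_base.num;
}
```

With a time base of 1001/30000, `toy_frame_rate` gives roughly 29.97 frames per second, a value that an integer frame rate field could not represent exactly.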

### Storing the Data

Now we need a place to actually store the frame:

AVFrame *pFrame;

// Allocate video frame

pFrame=avcodec_alloc_frame();

Since we're planning to output PPM files, which are stored in 24-bit RGB,

we're going to have to convert our frame from its native format to RGB. ffmpeg

will do these conversions for us. For most projects (including ours) we're

going to want to convert our initial frame to a specific format. Let's

allocate a frame for the converted frame now.

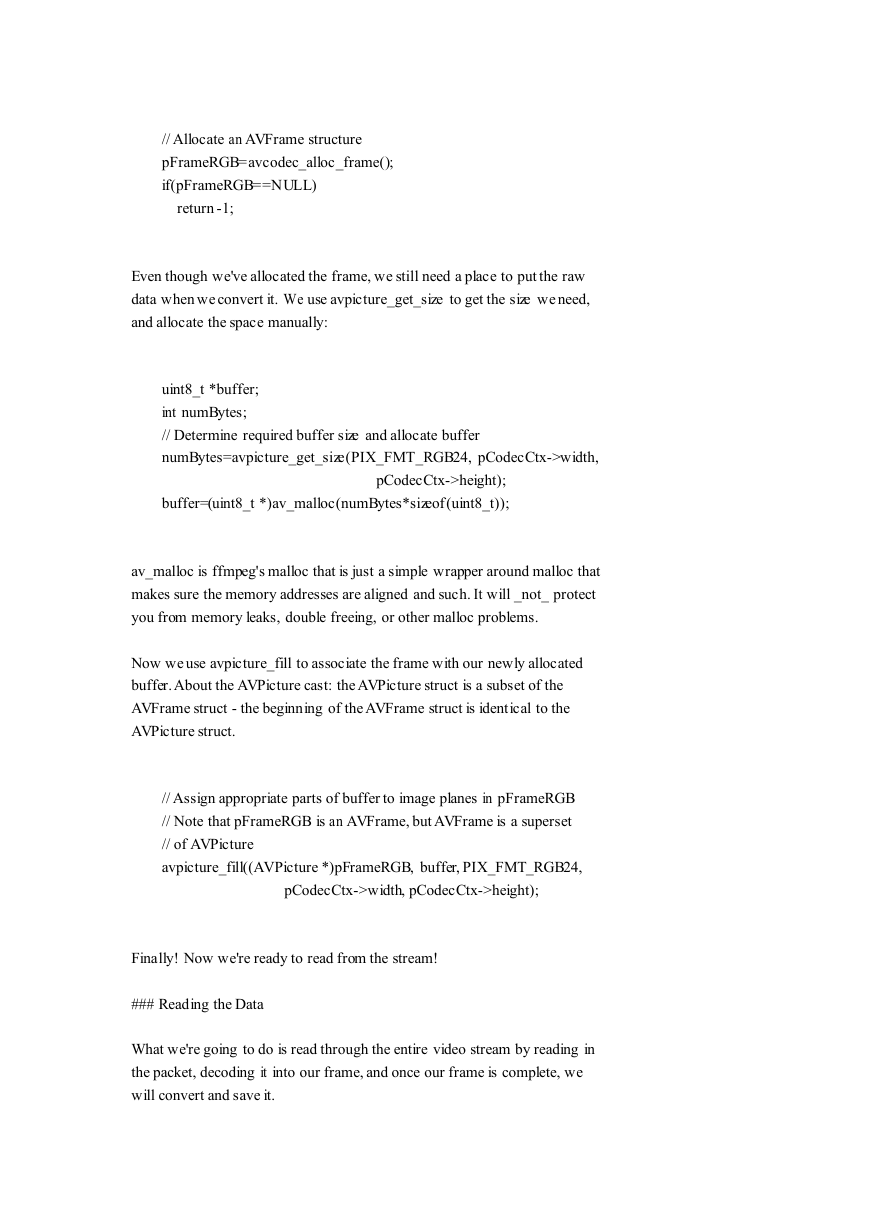

AVFrame *pFrameRGB;

// Allocate an AVFrame structure

pFrameRGB=avcodec_alloc_frame();

if(pFrameRGB==NULL)

return -1;

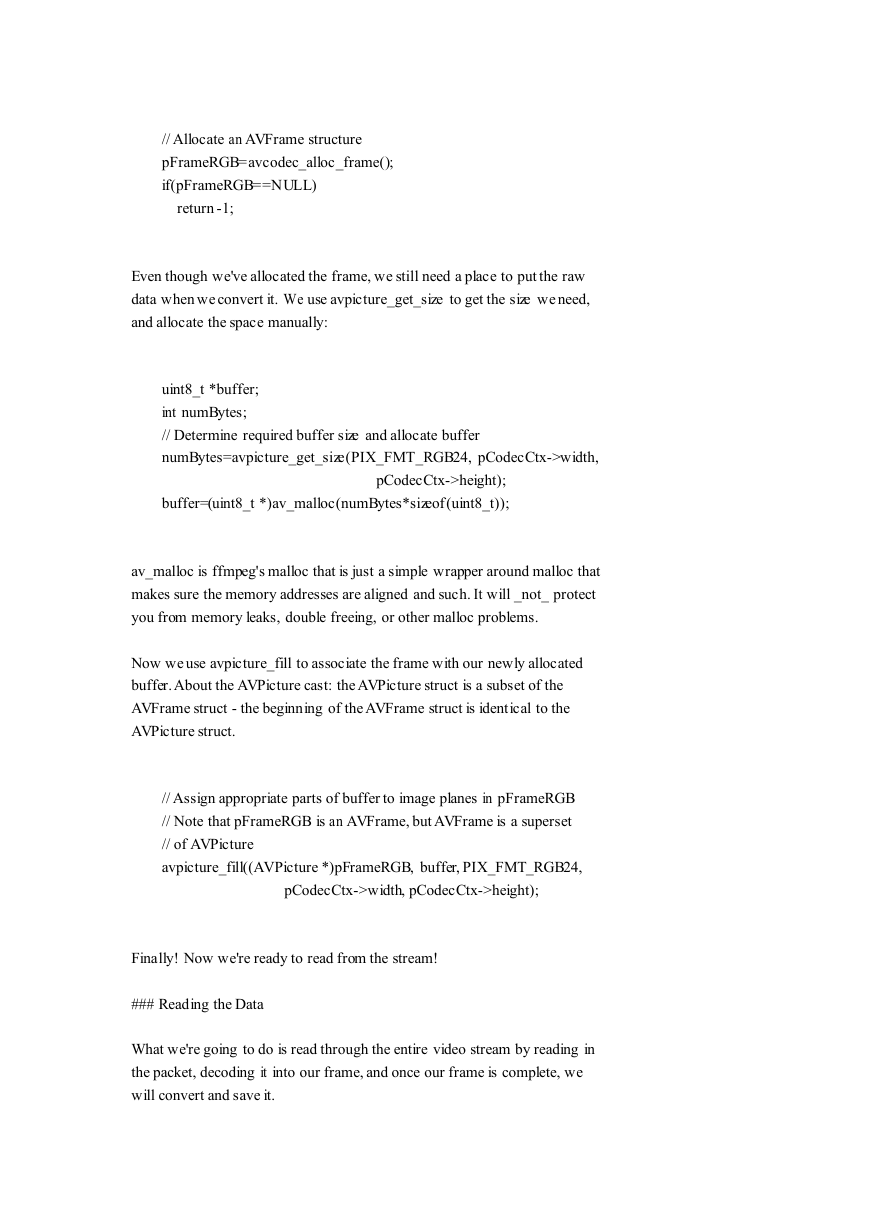

Even though we've allocated the frame, we still need a place to put the raw

data when we convert it. We use avpicture_get_size to get the size we need,

and allocate the space manually:

uint8_t *buffer;

int numBytes;

// Determine required buffer size and allocate buffer

numBytes=avpicture_get_size(PIX_FMT_RGB24, pCodecCtx->width,

pCodecCtx->height);

buffer=(uint8_t *)av_malloc(numBytes*sizeof(uint8_t));

av_malloc is ffmpeg's malloc: just a simple wrapper around malloc that

makes sure the memory addresses are aligned and such. It will _not_ protect

you from memory leaks, double freeing, or other malloc problems.
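To get a feel for the number we are asking for, here is a back-of-the-envelope version in plain C. `rgb24_packed_size` is a helper I made up, not an ffmpeg function. It assumes a tightly packed 24-bit RGB image with 3 bytes per pixel and no padding; whether the library adds any alignment padding on top of that is not something this sketch tries to model.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes needed for a tightly packed 24-bit RGB image (3 bytes per pixel).
 * Hypothetical helper for illustration; not part of ffmpeg. */
size_t rgb24_packed_size(int width, int height) {
    assert(width > 0 && height > 0);
    return (size_t)width * (size_t)height * 3u;
}
```

For a 640x480 frame this comes to 921600 bytes, which is the order of magnitude you should expect numBytes to be for that resolution.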

Now we use avpicture_fill to associate the frame with our newly allocated

buffer. About the AVPicture cast: the AVPicture struct is a subset of the

AVFrame struct - the beginning of the AVFrame struct is identical to the

AVPicture struct.

// Assign appropriate parts of buffer to image planes in pFrameRGB

// Note that pFrameRGB is an AVFrame, but AVFrame is a superset

// of AVPicture

avpicture_fill((AVPicture *)pFrameRGB, buffer, PIX_FMT_RGB24,

pCodecCtx->width, pCodecCtx->height);
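If "associating the frame with the buffer" sounds vague, this is roughly what it means for a packed RGB image, sketched in plain C. `ToyPicture`, `toy_fill_rgb24`, and `toy_pixel` are names I invented; the real structs have more fields, and I am assuming a single plane with a line size of width*3 bytes and no padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified picture: plane pointers plus bytes per row. */
typedef struct {
    uint8_t *data[4];
    int linesize[4];
} ToyPicture;

/* Point plane 0 at the caller's buffer; packed RGB24 uses only one plane. */
void toy_fill_rgb24(ToyPicture *pic, uint8_t *buffer, int width) {
    assert(pic != NULL && buffer != NULL && width > 0);
    for (int i = 0; i < 4; i++) {
        pic->data[i] = NULL;
        pic->linesize[i] = 0;
    }
    pic->data[0] = buffer;
    pic->linesize[0] = width * 3;   /* assumes no row padding */
}

/* Address of the first byte (red) of pixel (x, y). */
uint8_t *toy_pixel(const ToyPicture *pic, int x, int y) {
    assert(pic != NULL && pic->data[0] != NULL && x >= 0 && y >= 0);
    return pic->data[0] + (size_t)y * (size_t)pic->linesize[0] + (size_t)x * 3u;
}
```

The point is that no pixel data is copied: the picture just remembers where the buffer starts and how many bytes each row takes, which is the same data[0] and linesize[0] pair we use later when writing rows to disk.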

Finally! Now we're ready to read from the stream!

### Reading the Data

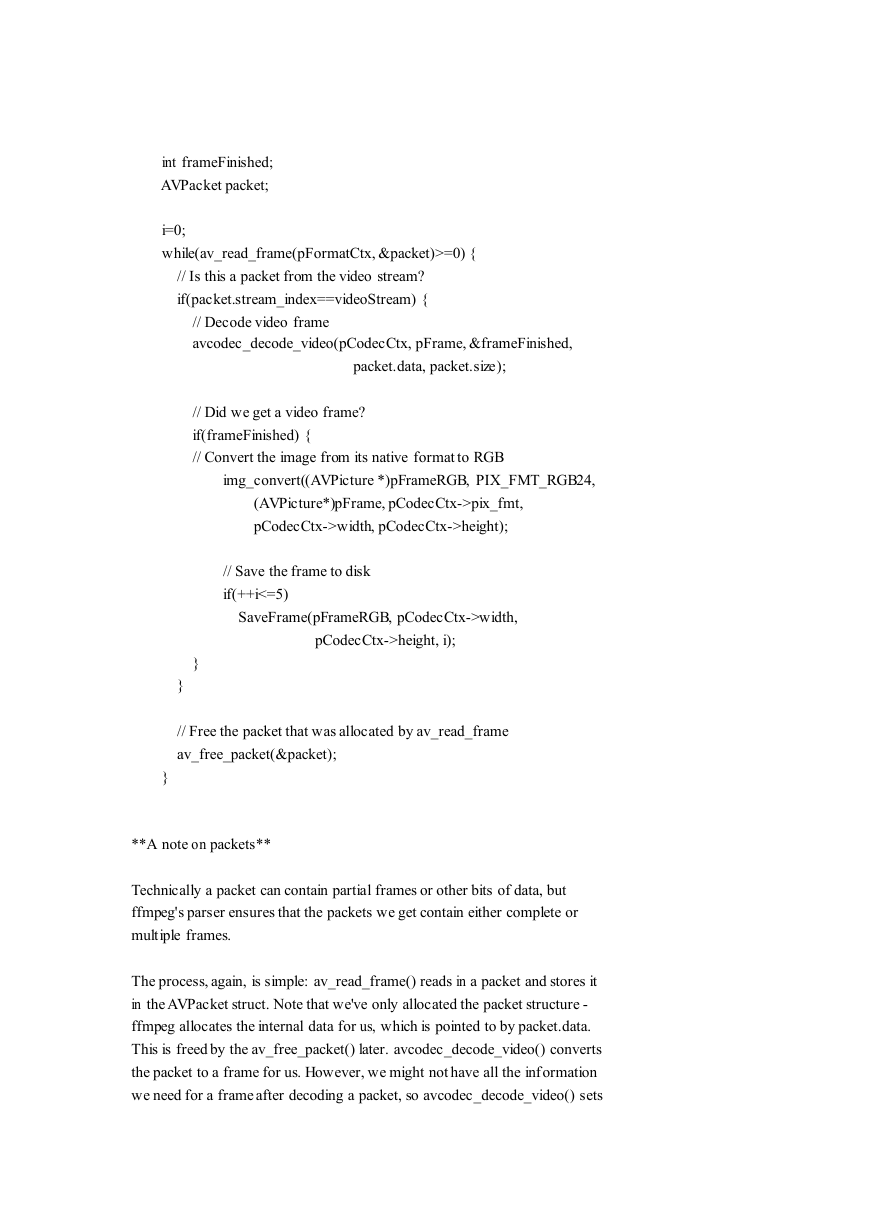

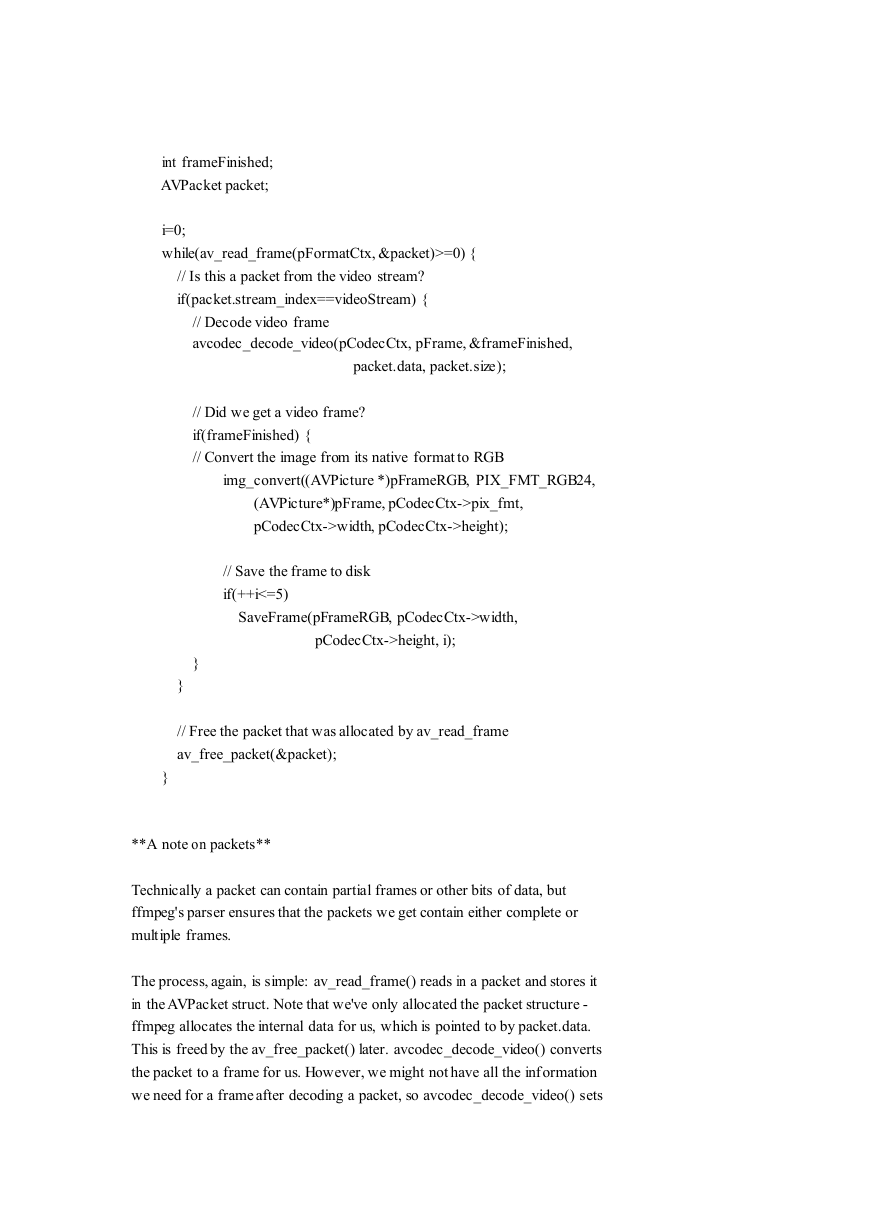

What we're going to do is read through the entire video stream by reading in

the packet, decoding it into our frame, and once our frame is complete, we

will convert and save it.

int frameFinished;

AVPacket packet;

i=0;

while(av_read_frame(pFormatCtx, &packet)>=0) {

// Is this a packet from the video stream?

if(packet.stream_index==videoStream) {

// Decode video frame

avcodec_decode_video(pCodecCtx, pFrame, &frameFinished,

packet.data, packet.size);

// Did we get a video frame?

if(frameFinished) {

// Convert the image from its native format to RGB

img_convert((AVPicture *)pFrameRGB, PIX_FMT_RGB24,

(AVPicture*)pFrame, pCodecCtx->pix_fmt,

pCodecCtx->width, pCodecCtx->height);

// Save the frame to disk

if(++i<=5)

SaveFrame(pFrameRGB, pCodecCtx->width,

pCodecCtx->height, i);

}

}

// Free the packet that was allocated by av_read_frame

av_free_packet(&packet);

}

**A note on packets**

Technically a packet can contain partial frames or other bits of data, but

ffmpeg's parser ensures that the packets we get contain either complete or

multiple frames.

The process, again, is simple: av_read_frame() reads in a packet and stores it

in the AVPacket struct. Note that we've only allocated the packet structure -

ffmpeg allocates the internal data for us, which is pointed to by packet.data.

This is freed by the av_free_packet() later. avcodec_decode_video() converts

the packet to a frame for us. However, we might not have all the information

we need for a frame after decoding a packet, so avcodec_decode_video() sets

frameFinished for us when we have the next frame. Finally, we use

img_convert() to convert from the native format (pCodecCtx->pix_fmt) to RGB.

Remember that you can cast an AVFrame pointer to an AVPicture pointer.

Finally, we pass the frame and height and width information to our SaveFrame

function.
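The frameFinished flag is easier to understand with a toy model. The sketch below is plain C with a mock decoder I made up (`MockPacket`, `MockDecoder`, `mock_decode`, `mock_count_frames`); it is not how ffmpeg decodes anything. It only imitates the control flow: skip packets from other streams, feed the rest to the decoder, and act only when the decoder says a frame is complete. For simplicity it assumes a packet never carries more than one frame's worth of data.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical packet and decoder, for illustrating the loop only. */
typedef struct {
    int stream_index;
    int size;            /* payload bytes in this packet */
} MockPacket;

typedef struct {
    int frame_size;      /* bytes needed for one complete frame */
    int buffered;        /* bytes received so far */
} MockDecoder;

/* Feed one packet; *frame_finished becomes 1 once a full frame is available. */
void mock_decode(MockDecoder *dec, const MockPacket *pkt, int *frame_finished) {
    dec->buffered += pkt->size;
    if (dec->buffered >= dec->frame_size) {
        dec->buffered -= dec->frame_size;
        *frame_finished = 1;
    } else {
        *frame_finished = 0;
    }
}

/* Same shape as the read loop: filter by stream, decode, count finished frames. */
int mock_count_frames(const MockPacket *pkts, size_t n,
                      int video_stream, int frame_size) {
    MockDecoder dec = { frame_size, 0 };
    int frames = 0;
    assert(frame_size > 0);
    for (size_t i = 0; i < n; i++) {
        int frame_finished = 0;
        if (pkts[i].stream_index != video_stream)
            continue;                 /* not our stream, ignore it */
        mock_decode(&dec, &pkts[i], &frame_finished);
        if (frame_finished)
            frames++;                 /* this is where we would convert and save */
    }
    return frames;
}
```

In the real loop, the `frames++` line is where img_convert() and SaveFrame() get called.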

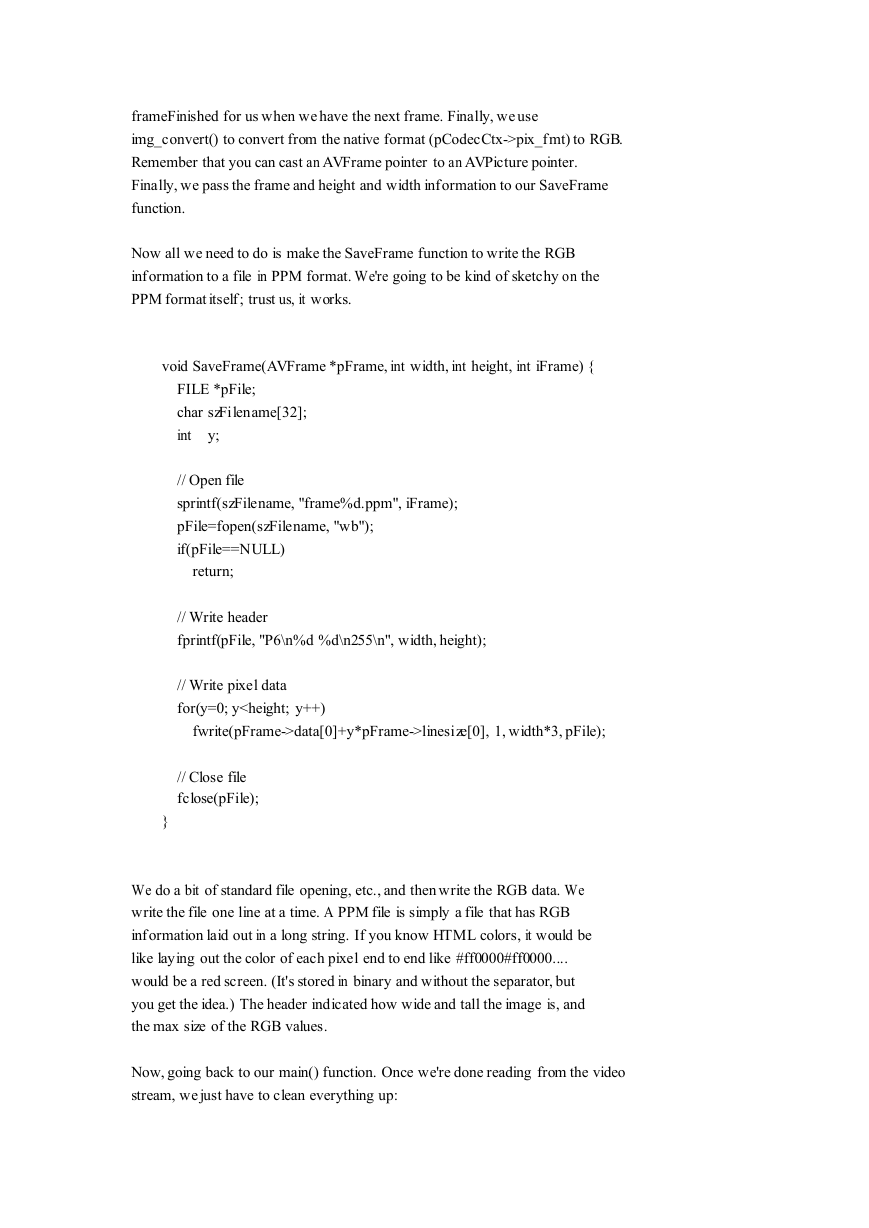

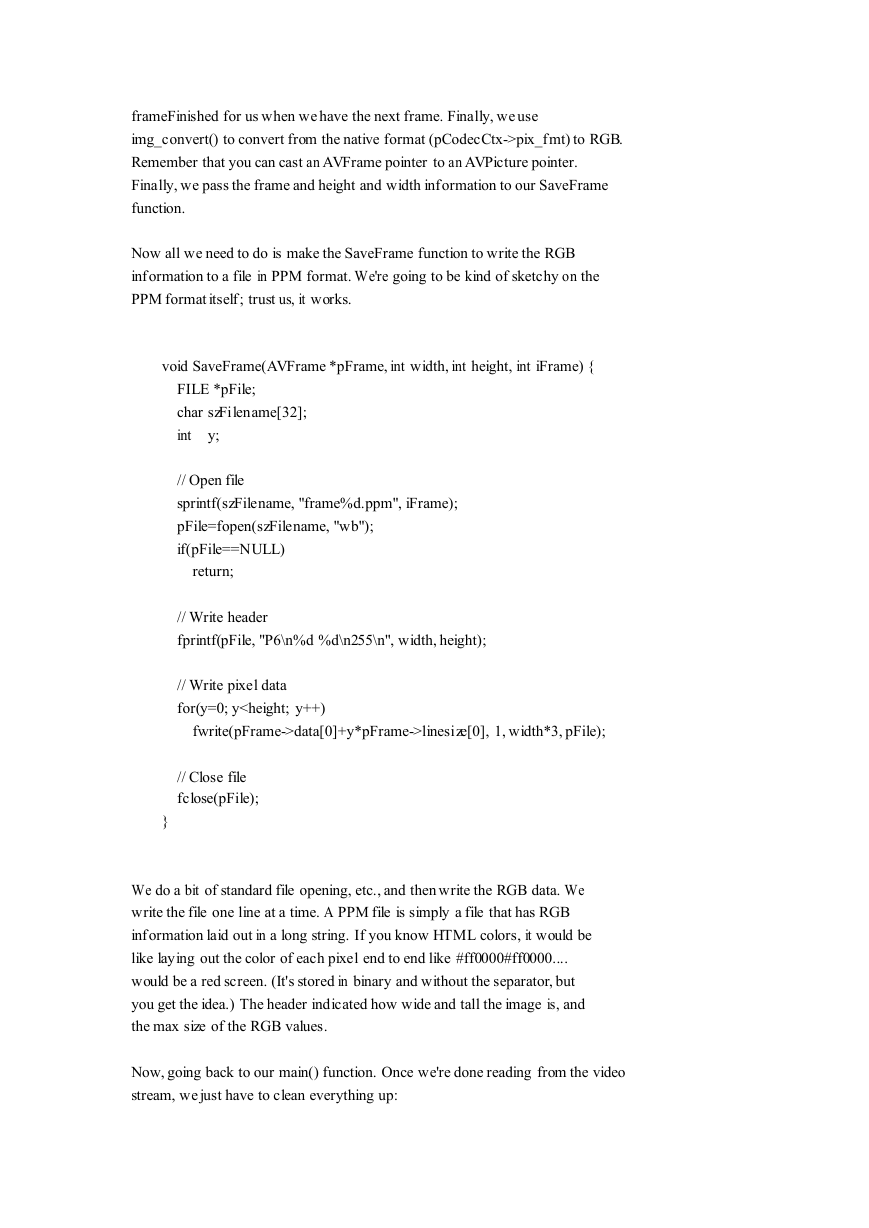

Now all we need to do is make the SaveFrame function to write the RGB

information to a file in PPM format. We're going to be kind of sketchy on the

PPM format itself; trust us, it works.

void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame) {

FILE *pFile;

char szFilename[32];

int y;

// Open file

sprintf(szFilename, "frame%d.ppm", iFrame);

pFile=fopen(szFilename, "wb");

if(pFile==NULL)

return;

// Write header

fprintf(pFile, "P6\n%d %d\n255\n", width, height);

// Write pixel data

for(y=0; y<height; y++)

fwrite(pFrame->data[0]+y*pFrame->linesize[0], 1, width*3, pFile);

// Close file

fclose(pFile);

}

We do a bit of standard file opening, etc., and then write the RGB data. We

write the file one line at a time. A PPM file is simply a file that has RGB

information laid out in a long string. If you know HTML colors, it would be

like laying out the color of each pixel end to end: #ff0000#ff0000... would

be a red screen. (It's stored in binary and without the separator, but

you get the idea.) The header indicates how wide and tall the image is, and

the max size of the RGB values.
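If you want to see the format without any video involved, here is a minimal standalone sketch that writes a solid-color PPM. `write_solid_ppm` is a helper I made up for this example; it uses the same "P6" header as SaveFrame and then writes three bytes (red, green, blue) per pixel.

```c
#include <assert.h>
#include <stdio.h>

/* Write a width x height image of one color as a binary PPM (P6).
 * Returns 0 on success, -1 on failure. Hypothetical helper for illustration. */
int write_solid_ppm(const char *path, int width, int height,
                    unsigned char r, unsigned char g, unsigned char b) {
    FILE *f;
    assert(path != NULL && width > 0 && height > 0);
    f = fopen(path, "wb");
    if (f == NULL)
        return -1;
    /* Header: magic number, dimensions, maximum channel value */
    fprintf(f, "P6\n%d %d\n255\n", width, height);
    /* Pixel data: raw RGB triplets, row after row, no separators */
    for (int i = 0; i < width * height; i++) {
        unsigned char px[3] = { r, g, b };
        if (fwrite(px, 1, 3, f) != 3) {
            fclose(f);
            return -1;
        }
    }
    return fclose(f) == 0 ? 0 : -1;
}
```

Calling `write_solid_ppm("red.ppm", 320, 240, 255, 0, 0)` should give you the red screen described above, which most image viewers that understand PPM can open.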

Now, going back to our main() function. Once we're done reading from the video

stream, we just have to clean everything up:
