ESC-360

Sound Verification Techniques for Developing

High-Integrity Medical Device Software

Jay Abraham

The MathWorks

Paul Jones

FDA / CDRH

Raoul Jetley

FDA / CDRH

Abstract

Embedded software in medical devices is increasing in content and complexity. Traditional software

verification and testing methods may not provide the optimum solution. This paper discusses the

application of sound verification techniques in the development of high integrity medical device software.

Specifically, this paper will explore the application of formal methods based Abstract Interpretation

techniques to mathematically prove the absence of a defined set of run-time errors. The verification

solution is then compared and contrasted to other software analysis and testing methods, such as code

review, static analysis and dynamic testing.

Introduction

The sophistication and complexity of embedded

software contained within medical devices is

increasing. State of the art pacemakers may

contain up to 80,000 lines of code, while

infusion pumps may have over 170,000 lines of

code. These devices must operate with utmost

concern for safety and reliability [1].

Historically, the typical options available for

verifying medical device software have been

code reviews, static analysis, and dynamic

testing. Code reviews rely solely on the expertise

of the reviewer and may not be efficient for large

code bases. Traditional static analysis techniques

rely mainly on a pattern-matching approach to

detect unsafe code patterns, but cannot prove the

absence of run-time errors. Lastly, with the

increasing complexity of device software, it is

virtually impossible to dynamically test for all

types of operating conditions.

Software is becoming ubiquitous in medical

devices and contributing to their complexity [5].

As a result, various worldwide regulatory

organizations include language in their directives

to explicitly address device software [2]. Recent

studies are pointing to increasing failure rates of

medical devices due to software coding errors. A

study conducted by Bliznakov et al finds that

over the period of 1999-2005 11.3% of FDA

initiated recalls were attributable to software

coding errors [6].

The FDA increased its involvement in reviewing

the development of medical device software in

the mid 1980s when software coding errors in a

radiation therapy device contributed to the lethal

overdose of a number of patients [2]. FDA has

published several guidance documents and

recognized several standards addressing good

software development processes. More recently,

the FDA has established a software laboratory

within the Center for Devices and Radiological

Health

and

Engineering Laboratories (OSEL) to identify

software coding errors in devices under recall

investigation [1]. As part of its investigation the

software laboratory examines the source code to

understand and identify the root cause of a

device failure.

Examination of

software verification

processes in other industries may be instructive

in the development of high integrity embedded

software. For example, significant rigor

is

applied

to the verification of software for

aerospace and automotive applications [3]. These

the

(CDRH) Office of Science

Medical Device Software

Medical devices address a continuum of

diagnosis and treatment applications varying in

complexity from digital thermometers, insulin

pumps, pacemakers, cardiac monitors,

to

anesthesia machines, large ultrasound imaging

systems, chemistry analyzers and proton beam

therapy systems. As science and technology

advances, so does the complexity and capability

of next generation devices. The

easily

identifiable signature of these devices is that they

are intended to directly or indirectly affect the

public health.

Embedded Systems Conference, San Jose, California, USA 1

© 2009

�

FAA

industries have adopted various analysis and

verification tools to improve the quality of

software. The

(Federal Aviation

Administration) requires certification of software

to the DO-178B (Software Considerations in

Airborne Systems and Equipment Certification)

standard as part of an aircraft air worthiness

certification process. The standard establishes

levels of flight failure condition categories such

that the greater the severity of a failure condition

category the greater the rigor in design process,

required. The

verification, and assurance

automotive

software

standards and often complies with the IEC-

61508 international standard (specifying the

“Functional Safety of Electrical, Electronic, and

Programmable

Safety-Related

Systems”).

Electronic

industry

adheres

to

Embedded Device Failures

A study of embedded device failures can be

instructive to help avoid repeating mistakes of

the past. We cite three examples of device

failures illustrated by Ganssle [11].

1. Therac 25 – A radiation therapy device that

overdosed patients with radiation. The real-

time operating system (RTOS) for the device

did not support a message passing scheme for

threads. Therefore global variables were used

instead. Insufficient protection of the global

variables resulted in incorrect data being used

during device operation. A second issue was

an overflow condition on an 8 bit integer

counter. These

software coding errors

resulted in overdosing patients with as much

as 30 times the prescribed dose.

2. Ariane 5 – The very first launch of this rocket

resulted in the destruction of the launcher

with a loss of the payload. The cause was an

overflow in a pair of redundant Inertial

Reference Systems which determined the

rocket’s attitude and position. The overflow

was caused by converting a 64 bit floating

point number to a 16 bit integer. The

presence of a redundant system did not help,

because the backup system implemented the

same behavior.

3. Space Shuttle Simulator – During an abort

procedure all four main shuttle computers

crashed. Examination of the code identified a

problem in the fuel management software

where counters were not correctly

re-

initialized after the first of a series of fuel

dumps were initiated. The result was that the

code would jump to random sections of

memory causing the computers to crash.

For further specific examples of medical device

issues, the FDA’s CDRH Manufacturer and User

Facility Device Experience Database (MAUDE)

[21] catalogs anomalous behavior and adverse

events in an easy to find manner. Searching the

database for the keyword “firmware” yields a

number of medical devices that the FDA is

tracking with respect to reported adverse events.

Causes of Device Failures

Code defects, resulting in run-time errors are the

major cause for embedded device failures

described above. Run-time errors are a specific

class of software errors considered as latent

faults. The faults may exist in code, but unless

very specific tests are run under particular

conditions, the faults may not be realized in the

system. Therefore, the code may appear to

function normally, but may result in unexpected

system

fatal

consequences. A few causes of run-time errors

are given below (this list is not exhaustive):

1. Non-initialized data – If variables are not

initialized, they may be set to an unknown

value. When using these variables the code

may sometimes function as desired, but under

certain conditions

the outcome becomes

unpredictable.

sometimes with

failures,

2. Out of bounds array access – An out of

bounds array access occurs when data is

written or read beyond the boundary of

allocated memory. This error may result in

code vulnerabilities and unpredictable results

during execution.

3. Null pointer dereference – A Null pointer

to

dereference occurs when attempting

reference memory with a pointer that is

NULL. Since this a “non-value” for memory,

any dereference of that pointer leads to an

immediate system crash.

4. Incorrect computation – This error is caused

by an arithmetic error due to an overflow,

underflow, divide by zero, or when taking a

square root of a negative number. An

incorrect computation error can result in a

program crash or erroneous result.

Embedded Systems Conference, San Jose, California, USA 2

© 2009

�

5. Concurrent access to shared data – This error

is caused by two or more variables across

different threads try to access the same

memory

lead to a

potential race condition and may result in

data corruption as a result of multiple threads

accessing shared data in an unprotected

fashion.

location. This could

6. Illegal

type conversions –

type

conversions may result in corruption of data

and produce unintended consequences.

Illegal

7. Dead code – Although dead code (i.e. code

that will never execute) may not directly

cause a run-time failure, it may be important

to understand why the programmer wrote

such code. Dead code may also signal poor

coding practices or lost design specifications.

8. Non-terminating loops – These errors are

caused by incorrect guard conditions on

program loop operations (e.g. for, while, etc.)

and may result in a system hangs or halts.

Software Verification and Testing

Traditional software verification and testing

consists of code reviews and dynamic testing. In

[15] Fagan discusses how code inspections and

reviews can reduce coding errors. Experience has

shown that while this can be a relatively

effective way of verifying code, the process

needs to be complemented with other methods.

One such method is static analysis. This is a

somewhat new method that largely automates the

software

[16]. This

technique attempts to identify errors in the code;

but does not necessarily prove their absence. The

next sections discuss

these methods and

introduces the application of formal methods

based Abstract Interpretation to code verification.

verification

process

the software

test-cases and

Dynamic Testing

Dynamic testing verifies the execution flow of

software; e.g. decision paths, inputs and outputs.

Wagner describes the methodology and explains

the application of

testing philosophy

according to the dimensions of type (functional

and structural) and granularity (unit, integration,

and system) [16]. Dynamic testing involves the

creation of

test-vectors and

execution of the software against these tests.

Comparison of the results to expected or known

correct behavior of

then

performed. Wagner also includes a summary of

various statistics compiled on the effectiveness

of dynamic testing. His analysis shows that the

mean effectiveness of dynamic testing is only

about 47%. In other words, over half of potential

errors on average are not detected with dynamic

testing.

Hailpern in [9] and Dijkstra in [10] sum up the

challenges of software testing as “given that we

cannot really show there are no more errors in

the program, when do we stop testing?” [9] and

“program testing can be used to show the

presence of bugs, but never to show their

absence” [10]. Butler and Finnelli further

explain

testing of ultra-reliability

software is not feasible. Life testing is referred to

as actual testing of the device under all possible

conditions For example, to quantify 10−8/hour

failure rate will require more than 108 hours of

testing [8].

that

life

are resolved. Another aspect of code reviews can

involve checking compliance to certain coding

standards such as MISRA-C or JSF++ (for C and

C++).

Detecting subtle run-time errors can be a difficult

proposition. For example, an overflow or

underflow due

complex mathematical

operations that involve programmatic control

could easily be missed during a code review.

to

this

is

Code Reviews

Fagan discusses the process of code reviews and

inspections quite extensively

in [15]. The

process includes a description of the team that

will perform the review (moderator, designer,

coder, and tester), the preparation process (which

involves the creation of a checklist), and the

inspection itself. The stated objective is to find

errors in code. Processes on conclusion of the

review are also described. These include rework

to address errors found and follow-up to ensure

that issues and concerns raised during inspection

Embedded Systems Conference, San Jose, California, USA 3

© 2009

Static Analysis

Static analysis is a technique used to identify

potential and actual defects in source code. The

static analysis process utilizes heuristics and

statistics and does not require code execution or

the development of test-cases. The types of

errors found could be thought of as strong

compiler type checks (e.g. checking if variables

are always

to more

initialized or used)

sophisticated dataflow based analysis.

�

As described in [16] and [17], these tools can

certainly find errors in code, but there is a high

false positive rate. The term false positive refers

to the identification of an error that is not real.

The time and energy spent on tracking a false

positive can lead to frustration on the part of

software engineers [17]. Wagner [16] presents a

summary of findings with respect to false

positive rates. The average number of false

positives detected in some static analysis tools is

66%.

In addition to false positives, it is also important

to understand false negatives. A false negative

occurs when the static analysis tool fails to

identify an error [18]. In [17] there is an

extensive

negatives

concluding that decreasing the probability of

false negatives will increase the probability of

false positives. The use of static analysis can

provide a certain amount of automation in the

verification process, but this advantage must be

weighed carefully against the capability of these

tools

to generate false positives and false

negatives.

discussion

false

of

Formal Methods

The term formal methods has typically been

applied to proof based verification of a system

with respect to its specification. This term can

also be applied to a mathematical rigorous

approach of proving correctness of code [13].

This approach may help reduce false negatives,

i.e. the inability to conclusively state that the

code is free of certain types of run-time errors.

The next section describes the use of Abstraction

Interpretation as a

formal methods based

verification solution that can be applied to

software programs.

Abstract Interpretation

The explanation of Abstract Interpretation is best

accomplished by studying a simple example.

Consider

large

integers:

the multiplication of

three

-4586 × 34985 × 2389 = ?

abstract

language

formal mathematics

the sign, without having

Determining the sign for this mathematical

computation

is an application of Abstract

Interpretation. The technique enables us to know

precisely some properties of the final result, in

this example,

to

multiply the integers fully. We also know that

the sign will never be positive

this

computation. In fact Abstract Interpretation will

prove that the sign of the operation will always

be negative and never positive.

Let us now consider a simplified application of

the

of Abstract

software programs. The

Interpretation

semantics of a programming

is

represented by the concrete domain S. Let A

represent the abstraction of the semantics. The

abstraction function α maps from the concrete

domain

domain. The

concretization function γ maps from the abstract

domain A to the concrete domain S. α and γ form

a Galois connection and are monotonic [22].

Certain proof properties of the software can be

performed on the abstract domain A. It is a

simpler problem to perform the proof on the

abstract domain A versus the concrete domain S.

The concept of soundness is important in context

of a discussion on Abstract Interpretation.

Soundness implies that when assertions are made

about a property, those assertions are proven to

be

from Abstract

Interpretation are considered sound because it

can be mathematically proven with structural

induction that abstraction will predict the correct

outcome. When applied to software programs,

Abstract Interpretation can be used to prove

certain properties of software, e.g., to prove that

the software will not exhibit certain run-time

errors [20].

Cousot and Cousot [12, 13] describe

the

application and success of Abstract Interpretation

to static program analysis. Deutsch describes the

application of this technique to a commercially

available solution in [19]. The application of

Abstract

involves computing

approximate semantics of the software code with

the abstraction function α such that it can be

verified in the abstract domain. This produces

equations or constraints whose solution is a

computer

the program’s

abstract semantics.

correct. The

Interpretation

results

for

to

to

the

For the mathematical problem defined it is

difficult to quickly compute the final value by

hand. However, if we abstract the result of the

computation to the sign domain (i.e., either

positive or negative), it is easy to understand that

the sign of the computation will be negative.

Embedded Systems Conference, San Jose, California, USA 4

© 2009

representation of

�

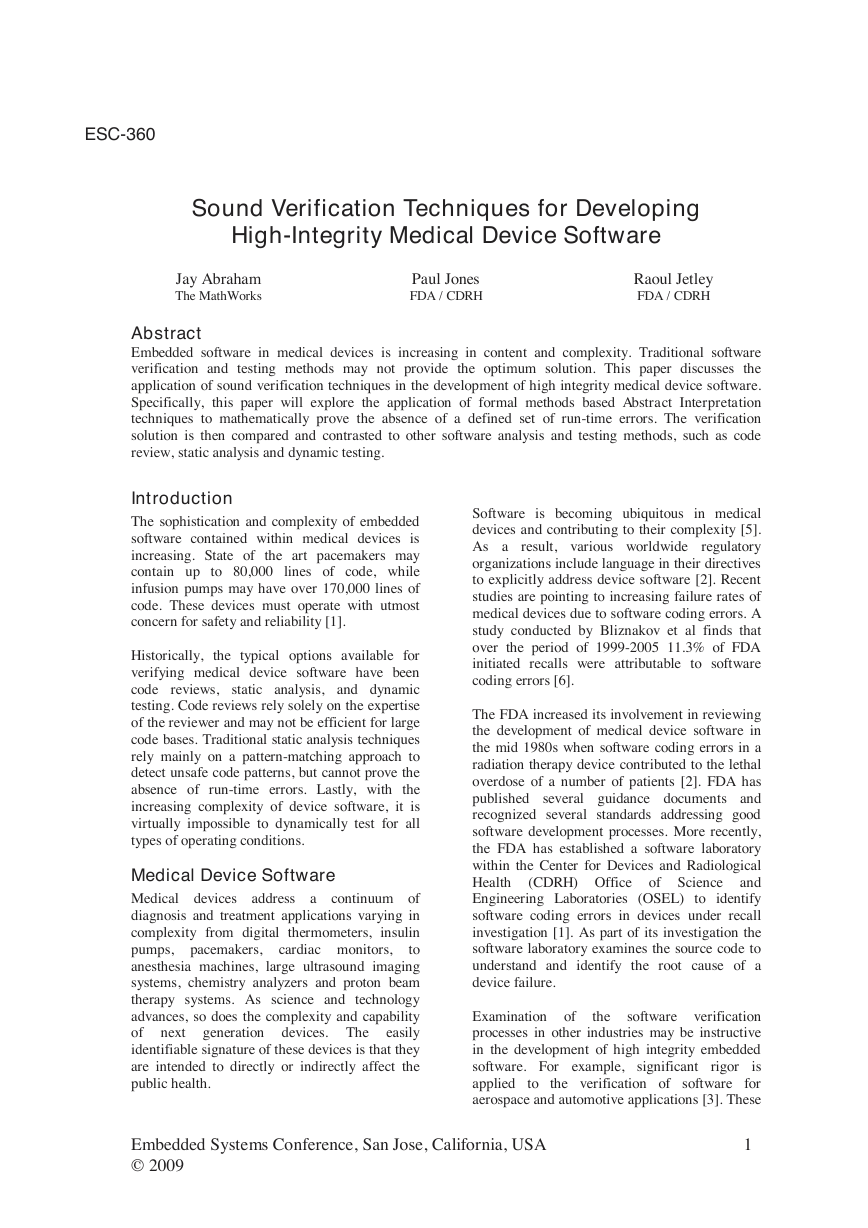

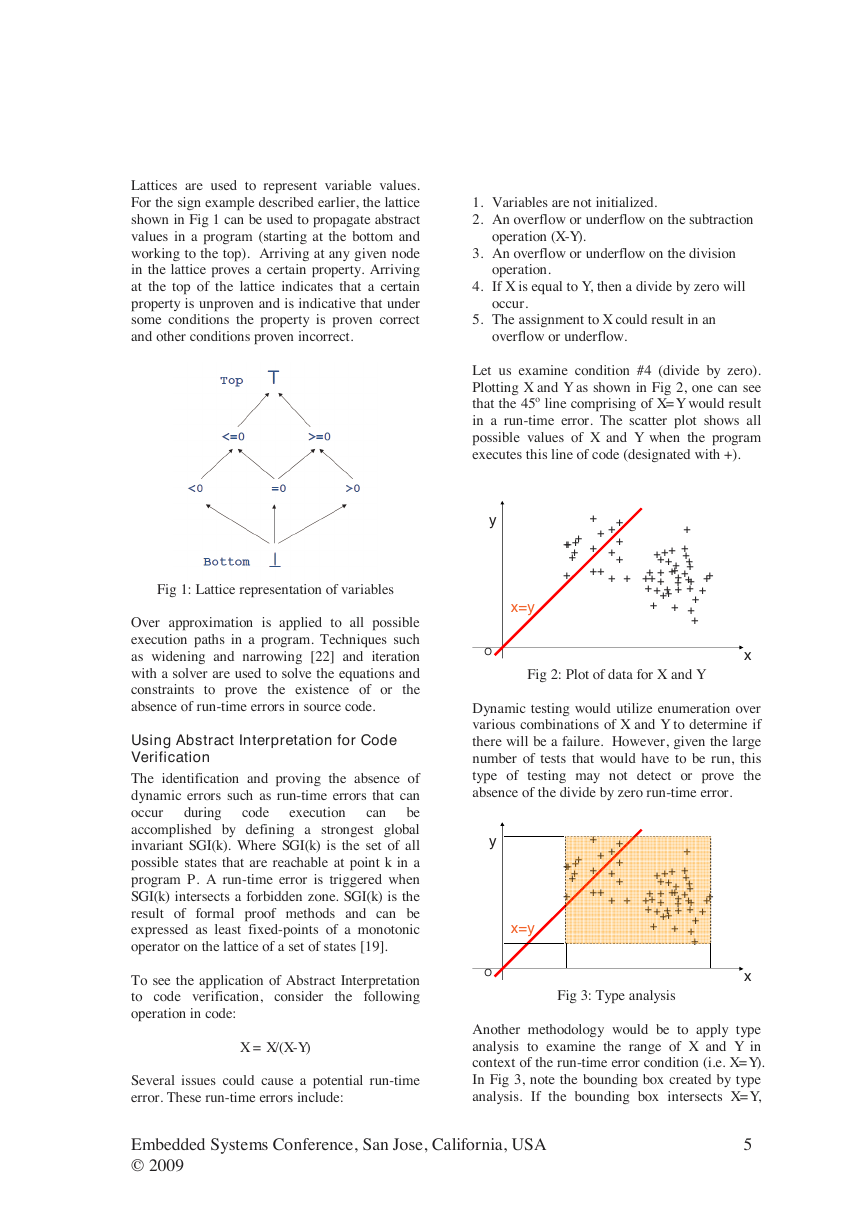

Lattices are used to represent variable values.

For the sign example described earlier, the lattice

shown in Fig 1 can be used to propagate abstract

values in a program (starting at the bottom and

working to the top). Arriving at any given node

in the lattice proves a certain property. Arriving

at the top of the lattice indicates that a certain

property is unproven and is indicative that under

some conditions the property is proven correct

and other conditions proven incorrect.

Fig 1: Lattice representation of variables

Over approximation is applied to all possible

execution paths in a program. Techniques such

as widening and narrowing [22] and iteration

with a solver are used to solve the equations and

constraints to prove the existence of or the

absence of run-time errors in source code.

code

can

during

execution

Using Abstract Interpretation for Code

Verification

The identification and proving the absence of

dynamic errors such as run-time errors that can

occur

be

accomplished by defining a strongest global

invariant SGI(k). Where SGI(k) is the set of all

possible states that are reachable at point k in a

program P. A run-time error is triggered when

SGI(k) intersects a forbidden zone. SGI(k) is the

result of formal proof methods and can be

expressed as least fixed-points of a monotonic

operator on the lattice of a set of states [19].

To see the application of Abstract Interpretation

to code verification, consider the following

operation in code:

X = X/(X-Y)

1. Variables are not initialized.

2. An overflow or underflow on the subtraction

operation (X-Y).

3. An overflow or underflow on the division

operation.

4. If X is equal to Y, then a divide by zero will

occur.

5. The assignment to X could result in an

overflow or underflow.

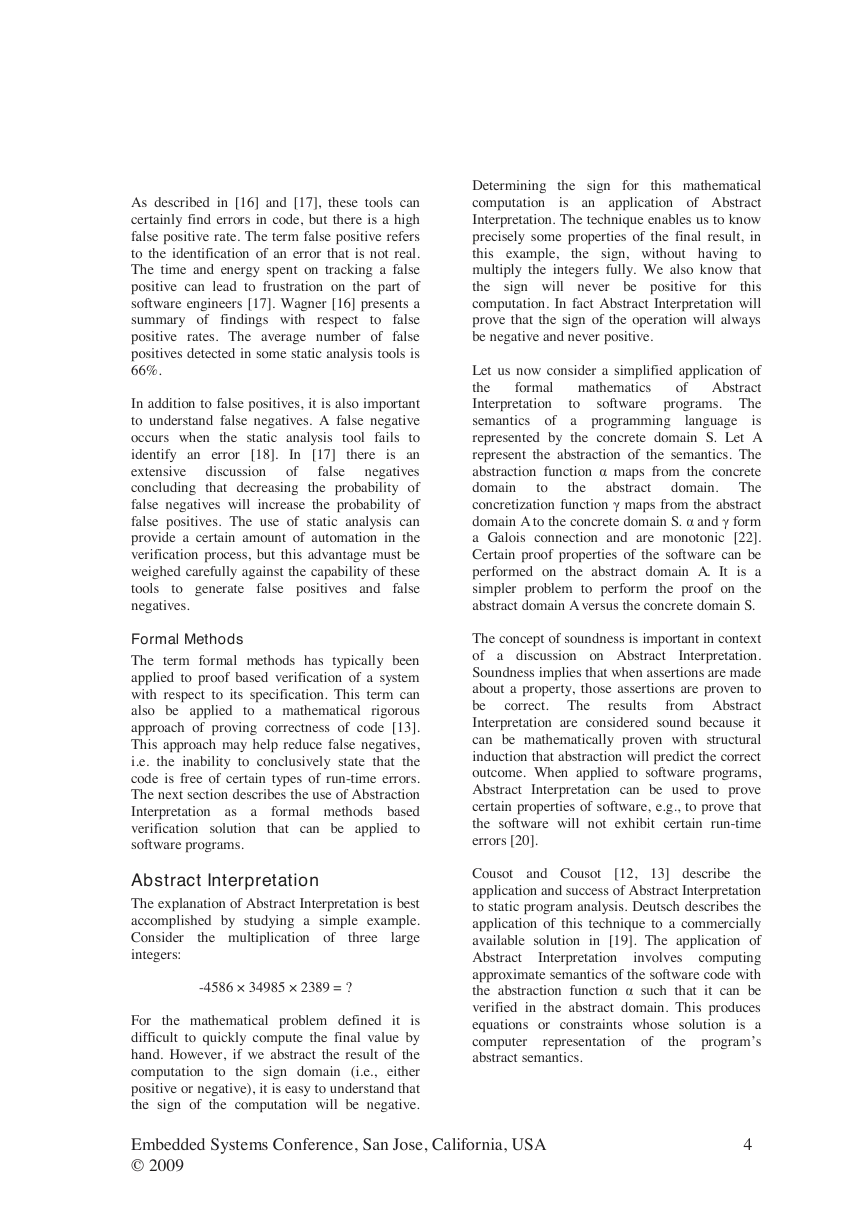

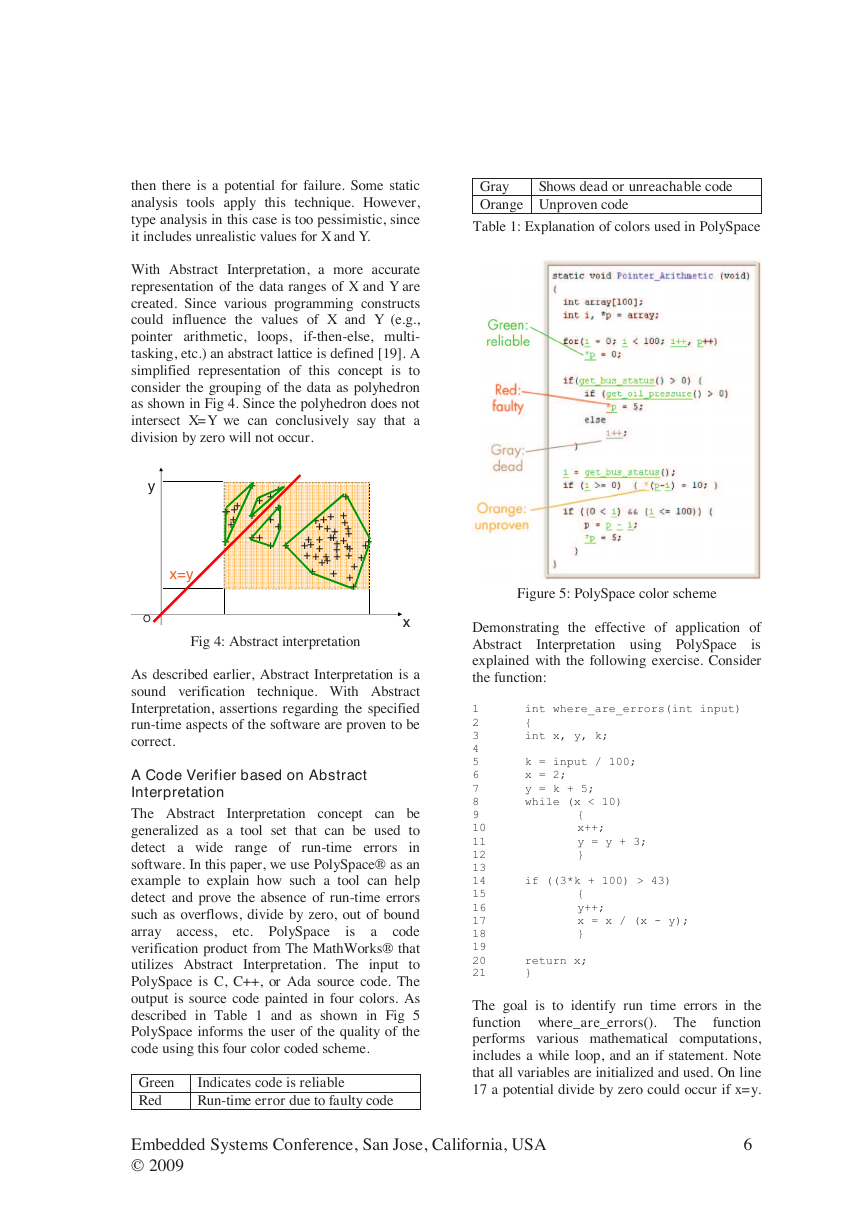

Let us examine condition #4 (divide by zero).

Plotting X and Y as shown in Fig 2, one can see

that the 45o line comprising of X=Y would result

in a run-time error. The scatter plot shows all

possible values of X and Y when the program

executes this line of code (designated with +).

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

++

+

+

+

+

+

+

+ +

+

++

+

+

+

+

+

+

+

+

y

O

x=y

Fig 2: Plot of data for X and Y

x

Dynamic testing would utilize enumeration over

various combinations of X and Y to determine if

there will be a failure. However, given the large

number of tests that would have to be run, this

type of testing may not detect or prove the

absence of the divide by zero run-time error.

y

O

x=y

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

++

+

+

+

+

+

+

+ +

+

++

+

+

+

+

+

+

+

+

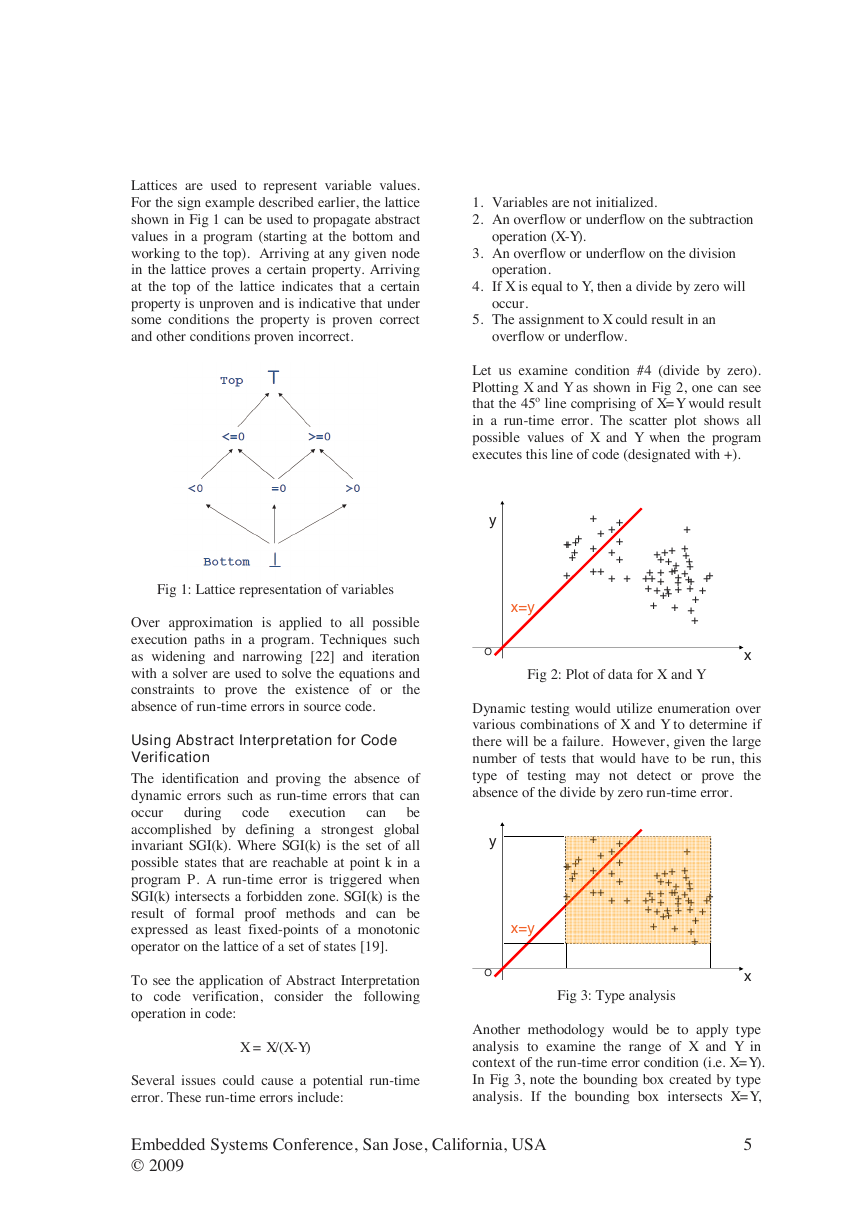

Fig 3: Type analysis

x

Another methodology would be to apply type

analysis to examine the range of X and Y in

context of the run-time error condition (i.e. X=Y).

In Fig 3, note the bounding box created by type

analysis. If the bounding box intersects X=Y,

Several issues could cause a potential run-time

error. These run-time errors include:

Embedded Systems Conference, San Jose, California, USA 5

© 2009

�

then there is a potential for failure. Some static

analysis tools apply this technique. However,

type analysis in this case is too pessimistic, since

it includes unrealistic values for X and Y.

With Abstract Interpretation, a more accurate

representation of the data ranges of X and Y are

created. Since various programming constructs

could influence the values of X and Y (e.g.,

pointer arithmetic, loops, if-then-else, multi-

tasking, etc.) an abstract lattice is defined [19]. A

simplified representation of this concept is to

consider the grouping of the data as polyhedron

as shown in Fig 4. Since the polyhedron does not

intersect X=Y we can conclusively say that a

division by zero will not occur.

y

O

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

+

+

+

+

+

+

+

+

+

+

++

++

+

+

+

++

+

+

+

+

+

+

+ +

+

++

+

+

+

+

+

+

+

+

x=y

Fig 4: Abstract interpretation

x

As described earlier, Abstract Interpretation is a

sound verification

technique. With Abstract

Interpretation, assertions regarding the specified

run-time aspects of the software are proven to be

correct.

A Code Verifier based on Abstract

Interpretation

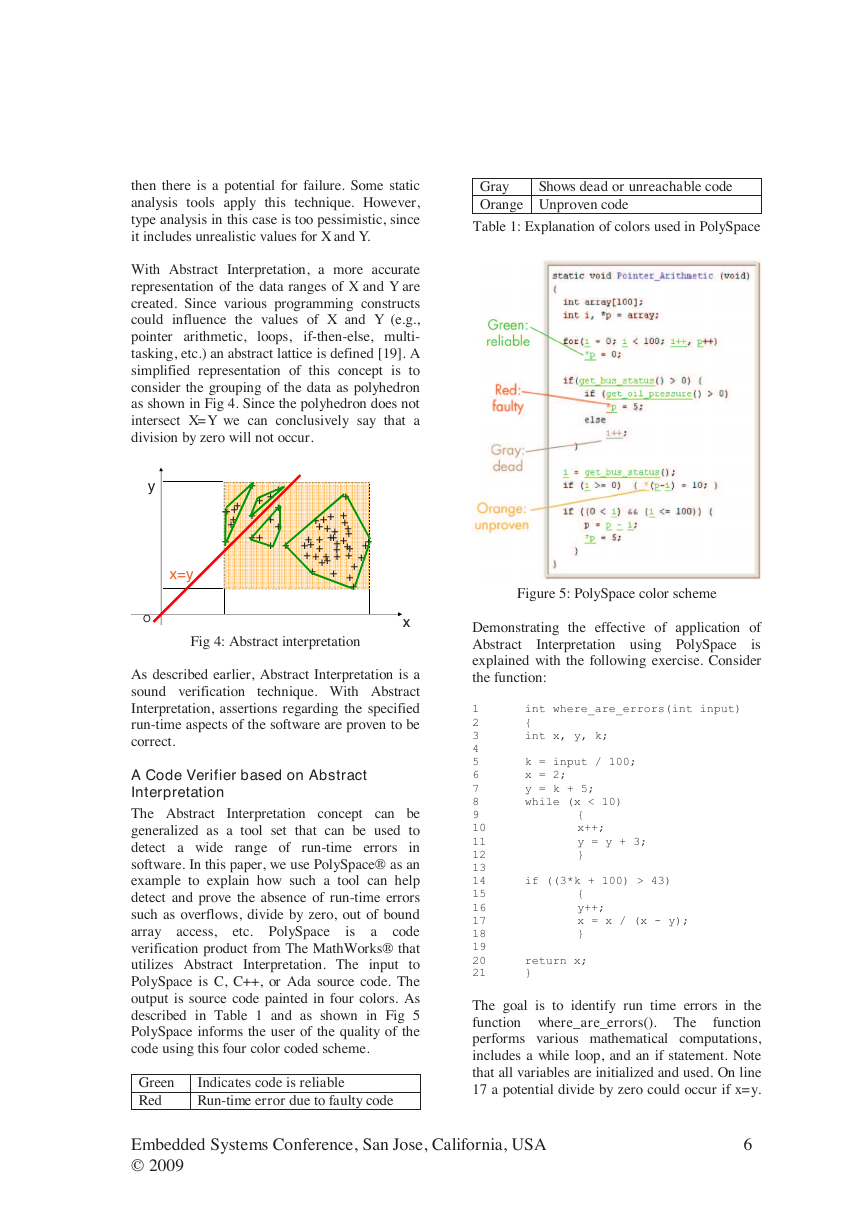

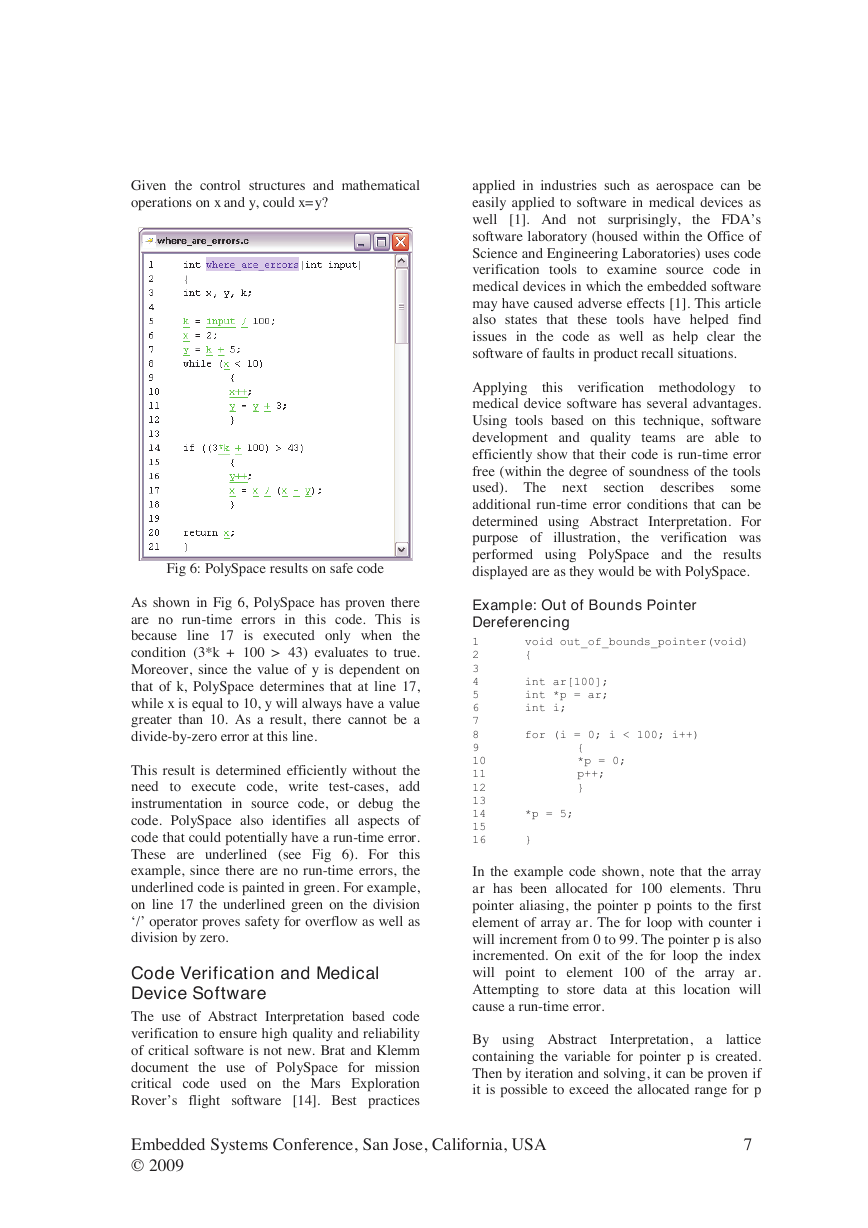

The Abstract Interpretation concept can be

generalized as a tool set that can be used to

detect a wide range of run-time errors in

software. In this paper, we use PolySpace® as an

example to explain how such a tool can help

detect and prove the absence of run-time errors

such as overflows, divide by zero, out of bound

array access, etc. PolySpace

is a code

verification product from The MathWorks® that

utilizes Abstract Interpretation. The input to

PolySpace is C, C++, or Ada source code. The

output is source code painted in four colors. As

described in Table 1 and as shown in Fig 5

PolySpace informs the user of the quality of the

code using this four color coded scheme.

Green

Red

Indicates code is reliable

Run-time error due to faulty code

Shows dead or unreachable code

Gray

Orange Unproven code

Table 1: Explanation of colors used in PolySpace

Figure 5: PolySpace color scheme

Interpretation using PolySpace

return x;

}

int where_are_errors(int input)

{

int x, y, k;

k = input / 100;

x = 2;

y = k + 5;

while (x < 10)

Demonstrating the effective of application of

Abstract

is

explained with the following exercise. Consider

the function:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

The goal is to identify run time errors in the

function where_are_errors(). The

function

performs various mathematical computations,

includes a while loop, and an if statement. Note

that all variables are initialized and used. On line

17 a potential divide by zero could occur if x=y.

if ((3*k + 100) > 43)

{

y++;

x = x / (x - y);

}

{

x++;

y = y + 3;

}

Embedded Systems Conference, San Jose, California, USA 6

© 2009

�

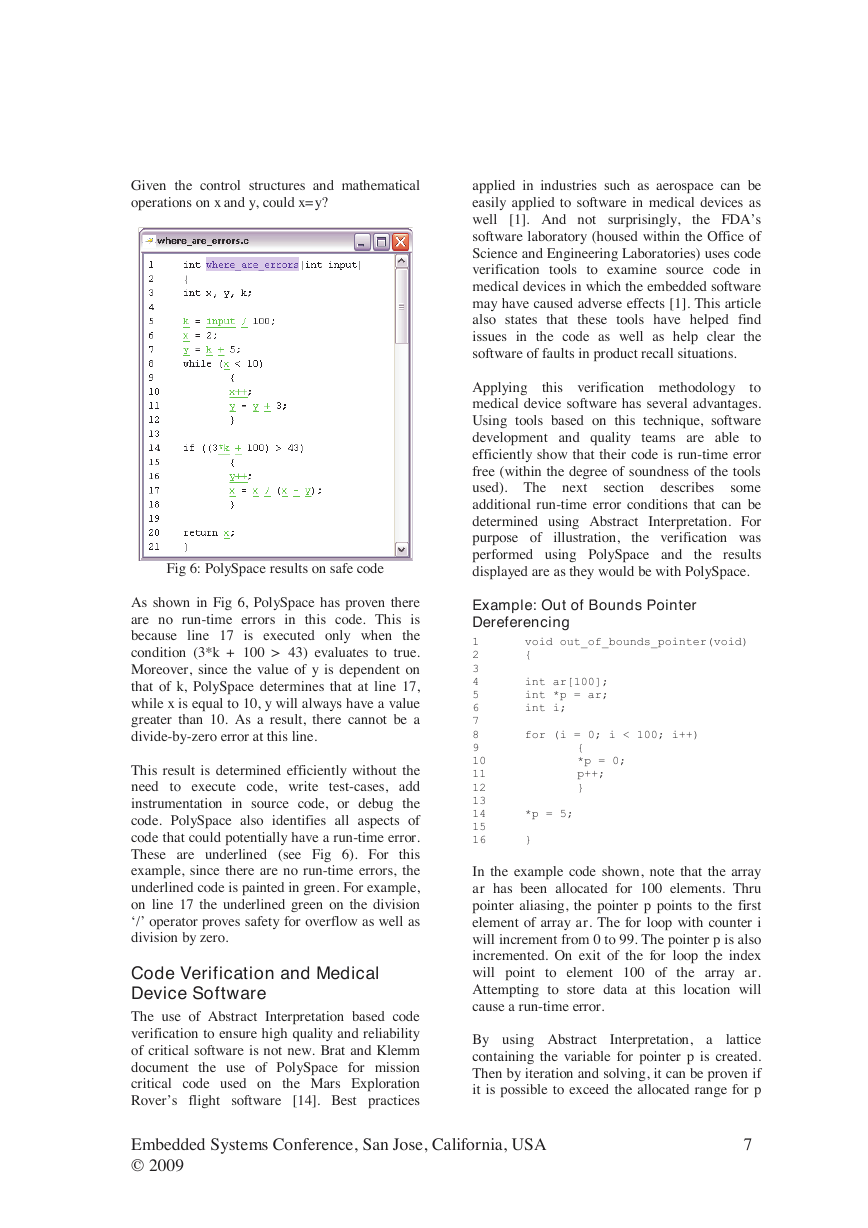

Given the control structures and mathematical

operations on x and y, could x=y?

Fig 6: PolySpace results on safe code

As shown in Fig 6, PolySpace has proven there

are no run-time errors in this code. This is

because line 17 is executed only when the

condition (3*k + 100 > 43) evaluates to true.

Moreover, since the value of y is dependent on

that of k, PolySpace determines that at line 17,

while x is equal to 10, y will always have a value

greater than 10. As a result, there cannot be a

divide-by-zero error at this line.

This result is determined efficiently without the

need to execute code, write test-cases, add

instrumentation in source code, or debug the

code. PolySpace also identifies all aspects of

code that could potentially have a run-time error.

These are underlined (see Fig 6). For this

example, since there are no run-time errors, the

underlined code is painted in green. For example,

on line 17 the underlined green on the division

‘/’ operator proves safety for overflow as well as

division by zero.

applied in industries such as aerospace can be

easily applied to software in medical devices as

well [1]. And not surprisingly, the FDA’s

software laboratory (housed within the Office of

Science and Engineering Laboratories) uses code

verification tools to examine source code in

medical devices in which the embedded software

may have caused adverse effects [1]. This article

also states that these tools have helped find

issues in the code as well as help clear the

software of faults in product recall situations.

Applying

to

medical device software has several advantages.

Using tools based on this technique, software

development and quality teams are able to

efficiently show that their code is run-time error

free (within the degree of soundness of the tools

used). The next

some

additional run-time error conditions that can be

determined using Abstract Interpretation. For

purpose of illustration, the verification was

performed using PolySpace and

the results

displayed are as they would be with PolySpace.

this verification methodology

section describes

*p = 5;

}

{

*p = 0;

p++;

}

for (i = 0; i < 100; i++)

void out_of_bounds_pointer(void)

{

int ar[100];

int *p = ar;

int i;

Example: Out of Bounds Pointer

Dereferencing

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

In the example code shown, note that the array

ar has been allocated for 100 elements. Thru

pointer aliasing, the pointer p points to the first

element of array ar. The for loop with counter i

will increment from 0 to 99. The pointer p is also

incremented. On exit of the for loop the index

will point to element 100 of the array ar.

Attempting to store data at this location will

cause a run-time error.

By using Abstract Interpretation, a

lattice

containing the variable for pointer p is created.

Then by iteration and solving, it can be proven if

it is possible to exceed the allocated range for p

Code Verification and Medical

Device Software

The use of Abstract Interpretation based code

verification to ensure high quality and reliability

of critical software is not new. Brat and Klemm

document the use of PolySpace for mission

critical code used on the Mars Exploration

Rover’s flight software [14]. Best practices

Embedded Systems Conference, San Jose, California, USA 7

© 2009

�

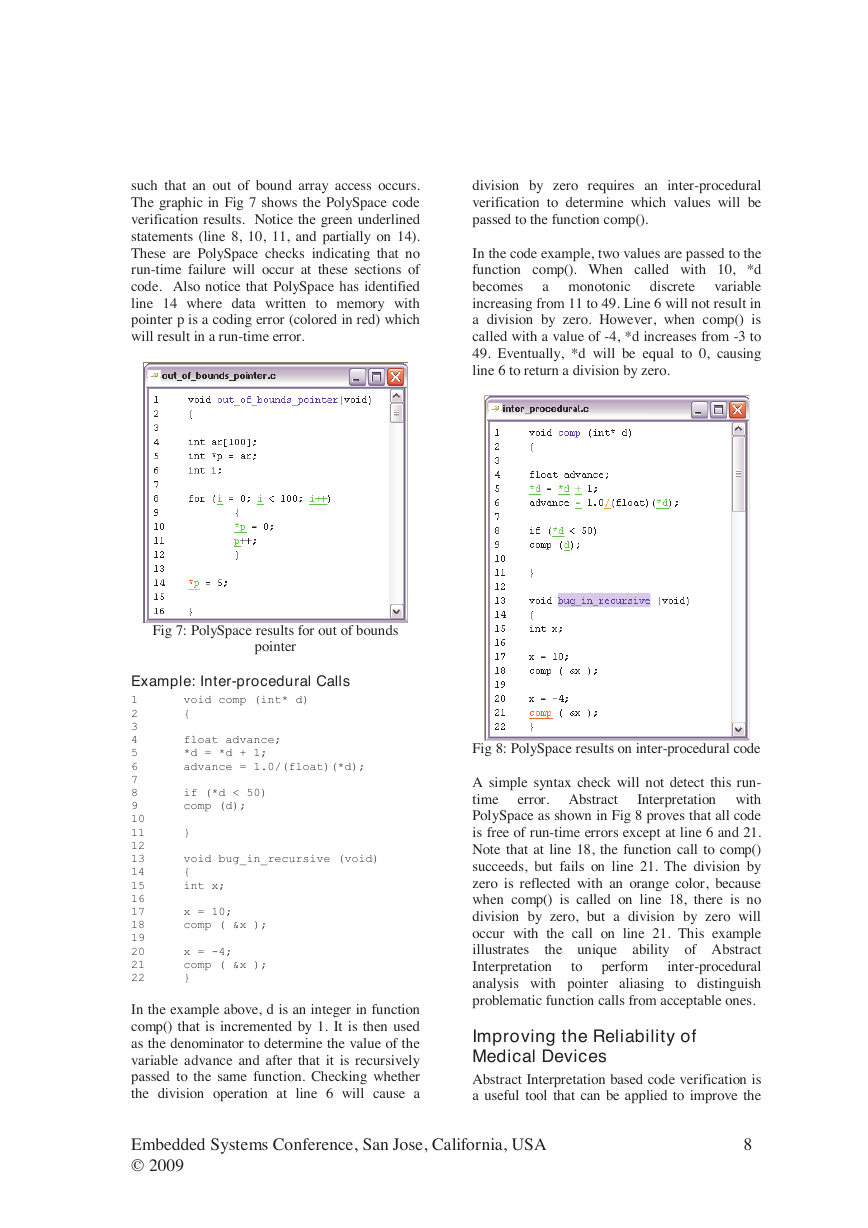

division by zero requires an inter-procedural

verification to determine which values will be

passed to the function comp().

In the code example, two values are passed to the

function comp(). When called with 10, *d

becomes

variable

increasing from 11 to 49. Line 6 will not result in

a division by zero. However, when comp() is

called with a value of -4, *d increases from -3 to

49. Eventually, *d will be equal to 0, causing

line 6 to return a division by zero.

a monotonic

discrete

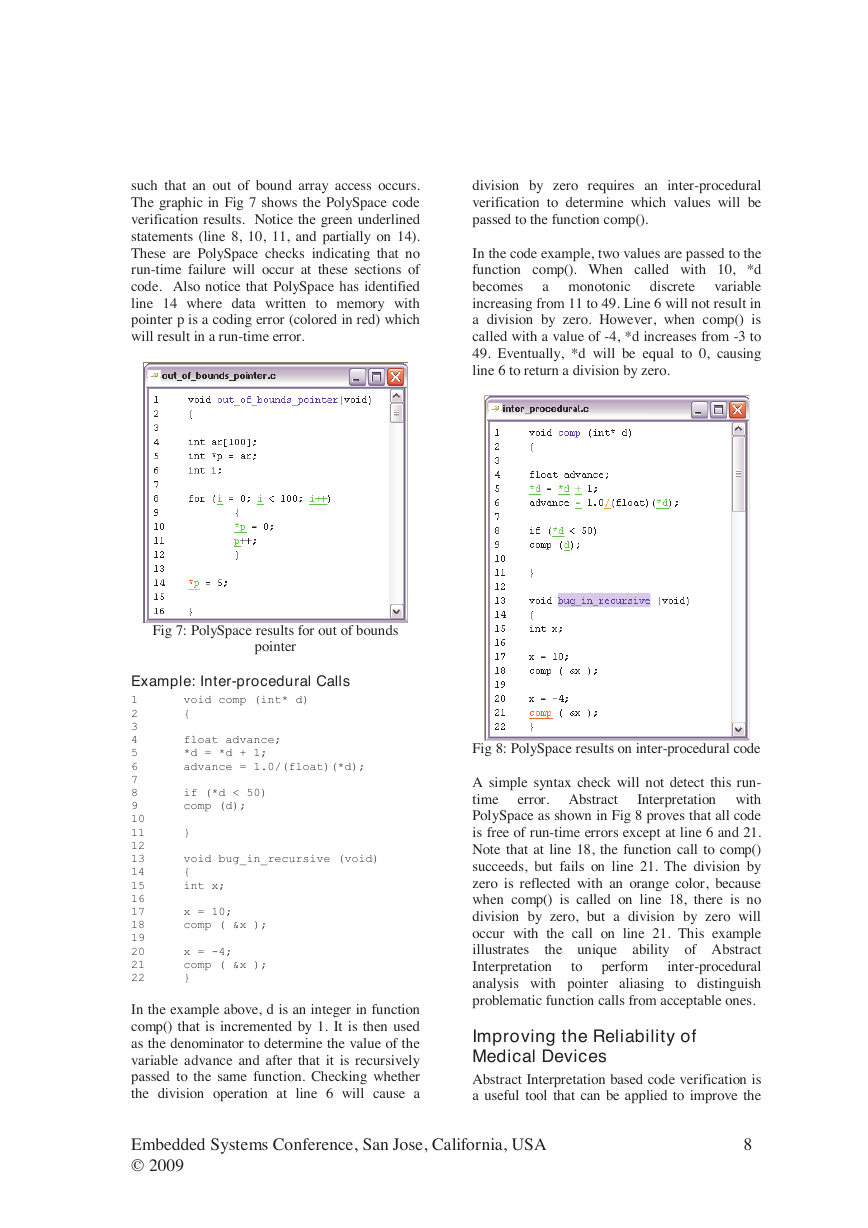

such that an out of bound array access occurs.

The graphic in Fig 7 shows the PolySpace code

verification results. Notice the green underlined

statements (line 8, 10, 11, and partially on 14).

These are PolySpace checks indicating that no

run-time failure will occur at these sections of

code. Also notice that PolySpace has identified

line 14 where data written to memory with

pointer p is a coding error (colored in red) which

will result in a run-time error.

Fig 7: PolySpace results for out of bounds

pointer

}

if (*d < 50)

comp (d);

void bug_in_recursive (void)

{

int x;

void comp (int* d)

{

float advance;

*d = *d + 1;

advance = 1.0/(float)(*d);

Example: Inter-procedural Calls

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

In the example above, d is an integer in function

comp() that is incremented by 1. It is then used

as the denominator to determine the value of the

variable advance and after that it is recursively

passed to the same function. Checking whether

the division operation at line 6 will cause a

x = -4;

comp ( &x );

}

x = 10;

comp ( &x );

error. Abstract

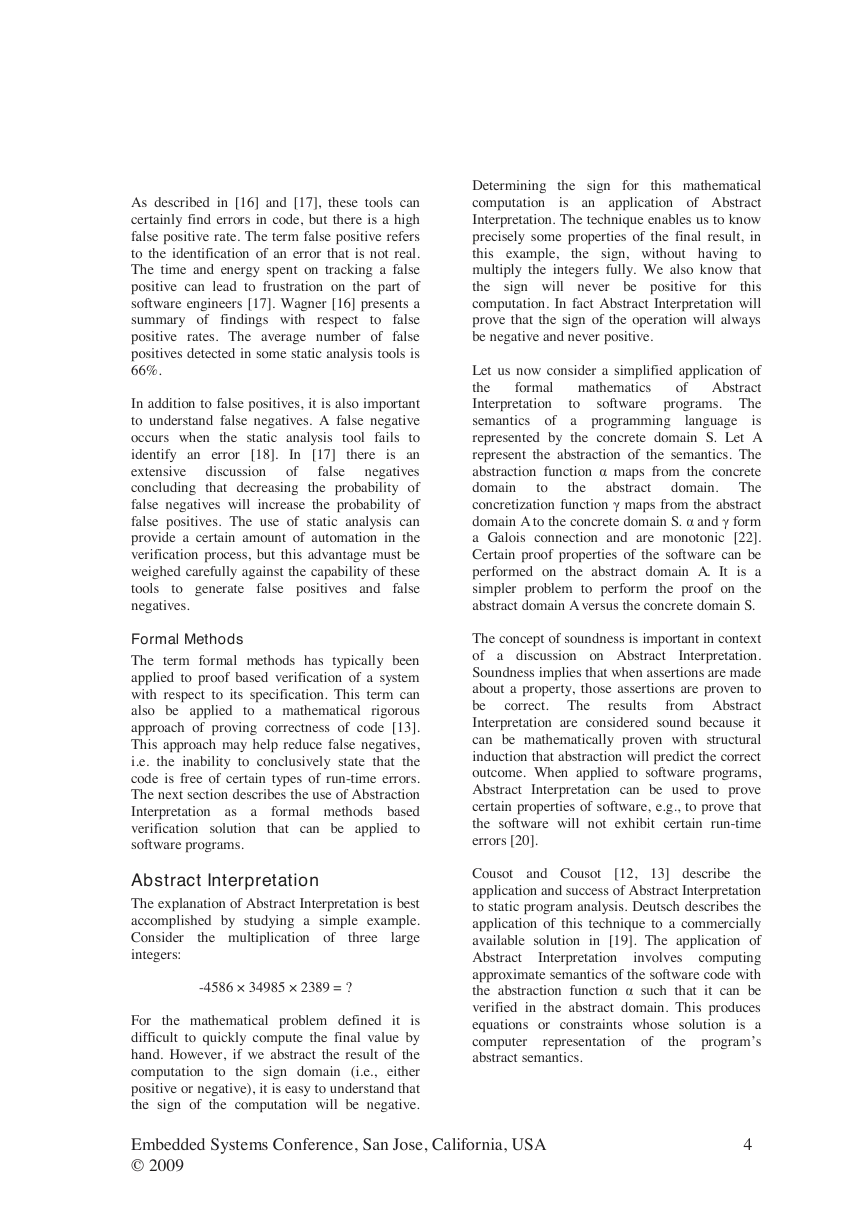

Fig 8: PolySpace results on inter-procedural code

A simple syntax check will not detect this run-

time

Interpretation with

PolySpace as shown in Fig 8 proves that all code

is free of run-time errors except at line 6 and 21.

Note that at line 18, the function call to comp()

succeeds, but fails on line 21. The division by

zero is reflected with an orange color, because

when comp() is called on line 18, there is no

division by zero, but a division by zero will

occur with the call on line 21. This example

the unique ability of Abstract

illustrates

Interpretation

inter-procedural

analysis with pointer aliasing to distinguish

problematic function calls from acceptable ones.

perform

to

Improving the Reliability of

Medical Devices

Abstract Interpretation based code verification is

a useful tool that can be applied to improve the

Embedded Systems Conference, San Jose, California, USA 8

© 2009

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc