---------------------

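Author: AIHGF

Source: CSDN

Original: https://blog.csdn.net/zziahgf/article/details/72763590

Copyright notice: This is an original article by the blogger. Please include a link to the original post when reposting.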

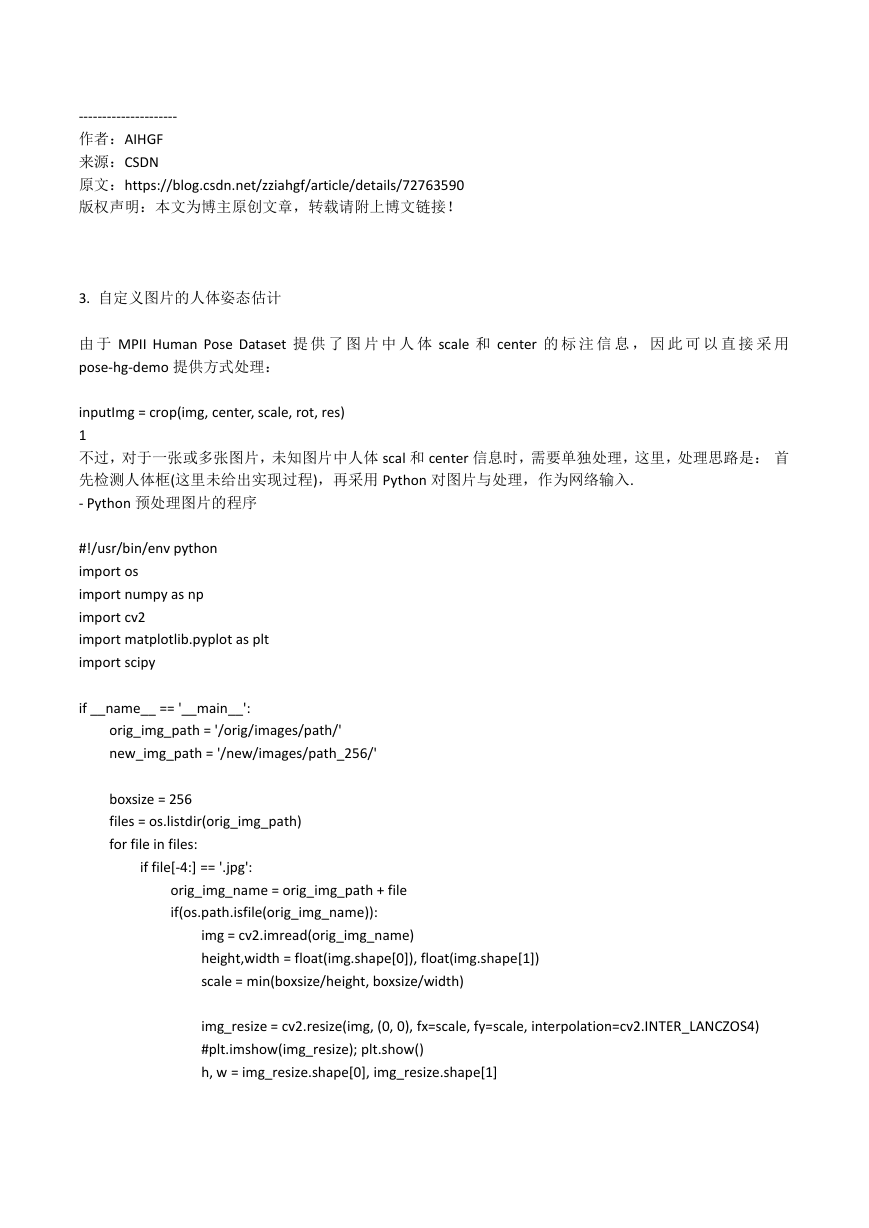

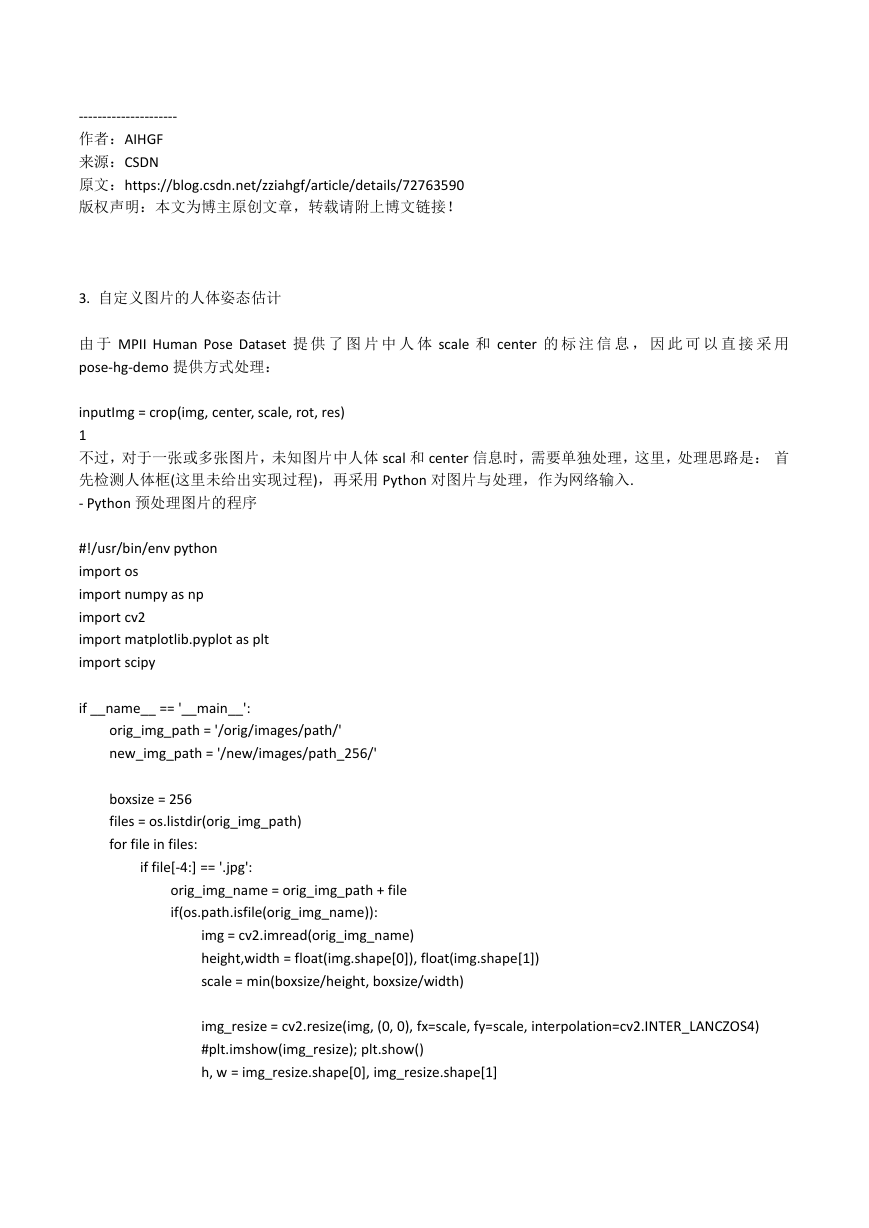

3. Human pose estimation on custom images

Because the MPII Human Pose Dataset provides scale and center annotations for the person in each image, the images can be processed directly in the way pose-hg-demo provides:

    inputImg = crop(img, center, scale, rot, res)

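However, for one or more images where the scale and center of the person are unknown, separate handling is needed. The approach here is: first detect the person bounding box (the implementation is not given here), then preprocess the image with Python so that it can serve as the network input.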
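Since the detection step is not shown, the following is only a minimal sketch of how a detected person box could be turned into the center and scale values that `crop` expects. The function name `bbox_to_center_scale` and the corner-style box format are hypothetical, and the reference height of 200 px is an assumption based on the MPII convention of expressing scale relative to a 200 px person height.

```python
def bbox_to_center_scale(x1, y1, x2, y2, reference_height=200.0):
    """Convert a person box (pixel corners) into an MPII-style (center, scale) pair.

    reference_height=200 is an assumption taken from the MPII convention that
    scale is the person size relative to 200 px; adjust it for other setups.
    """
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box must have positive width and height")
    center = ((x1 + x2) / 2.0, (y1 + y2) / 2.0)
    # Use the longer side so the whole person stays inside the crop.
    scale = max(x2 - x1, y2 - y1) / reference_height
    return center, scale


# Hypothetical box: 200 px wide and 400 px tall.
center, scale = bbox_to_center_scale(100, 50, 300, 450)
print(center, scale)
```

A detector that returns boxes as (x, y, width, height) would need converting to corners first.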

- Python program for preprocessing the images

#!/usr/bin/env python

import os

import numpy as np

import cv2

import matplotlib.pyplot as plt

import scipy

if __name__ == '__main__':

orig_img_path = '/orig/images/path/'

new_img_path = '/new/images/path_256/'

boxsize = 256

files = os.listdir(orig_img_path)

for file in files:

if file[-4:] == '.jpg':

orig_img_name = orig_img_path + file

if(os.path.isfile(orig_img_name)):

img = cv2.imread(orig_img_name)

height,width = float(img.shape[0]), float(img.shape[1])

scale = min(boxsize/height, boxsize/width)

img_resize = cv2.resize(img, (0, 0), fx=scale, fy=scale, interpolation=cv2.INTER_LANCZOS4)

#plt.imshow(img_resize); plt.show()

h, w = img_resize.shape[0], img_resize.shape[1]

�

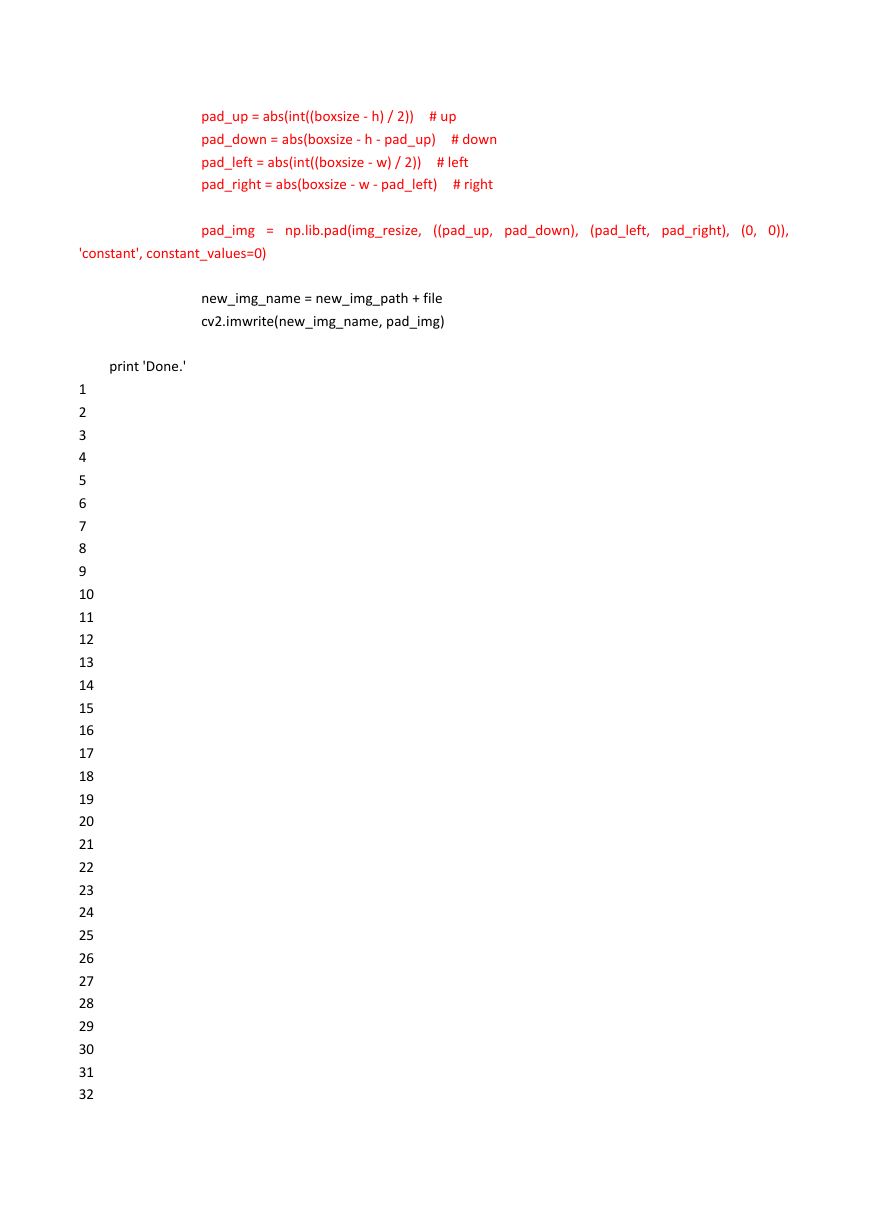

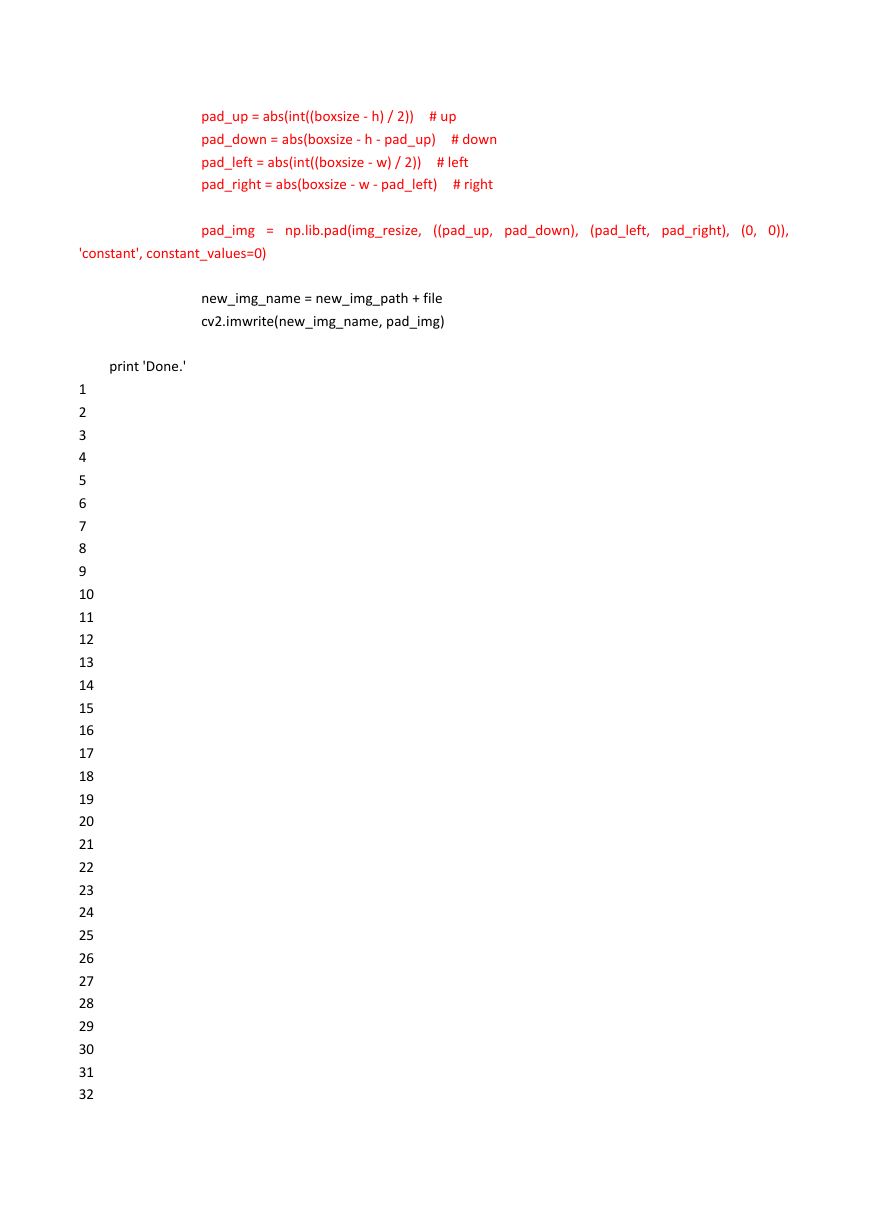

pad_up = abs(int((boxsize - h) / 2))

pad_down = abs(boxsize - h - pad_up)

pad_left = abs(int((boxsize - w) / 2))

pad_right = abs(boxsize - w - pad_left)

# up

# down

# left

# right

pad_img = np.lib.pad(img_resize,

((pad_up, pad_down),

(pad_left, pad_right),

(0, 0)),

'constant', constant_values=0)

new_img_name = new_img_path + file

cv2.imwrite(new_img_name, pad_img)

print 'Done.'
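To make the resize-and-pad arithmetic in the script above easier to check by hand, here is a small sketch that computes only the numbers, without touching any image. The helper name `letterbox_params` is hypothetical, and the way the resized size is rounded is an assumption about the resize step that should be checked against the library actually used.

```python
def letterbox_params(height, width, boxsize=256):
    """Return (scale, new_h, new_w, pads) for fitting an image into a square box.

    pads is (up, down, left, right). The rounding of the resized size is an
    assumption about how the resize step rounds; verify it for your library.
    """
    scale = min(boxsize / float(height), boxsize / float(width))
    new_h = min(boxsize, int(round(height * scale)))
    new_w = min(boxsize, int(round(width * scale)))
    pad_up = (boxsize - new_h) // 2
    pad_down = boxsize - new_h - pad_up      # takes the extra pixel when odd
    pad_left = (boxsize - new_w) // 2
    pad_right = boxsize - new_w - pad_left
    return scale, new_h, new_w, (pad_up, pad_down, pad_left, pad_right)


# A 480x640 (height x width) image: the width is the limiting side.
print(letterbox_params(480, 640))
```

For this 480x640 example the image shrinks to 192x256, and 32 rows of zeros are added above and below.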


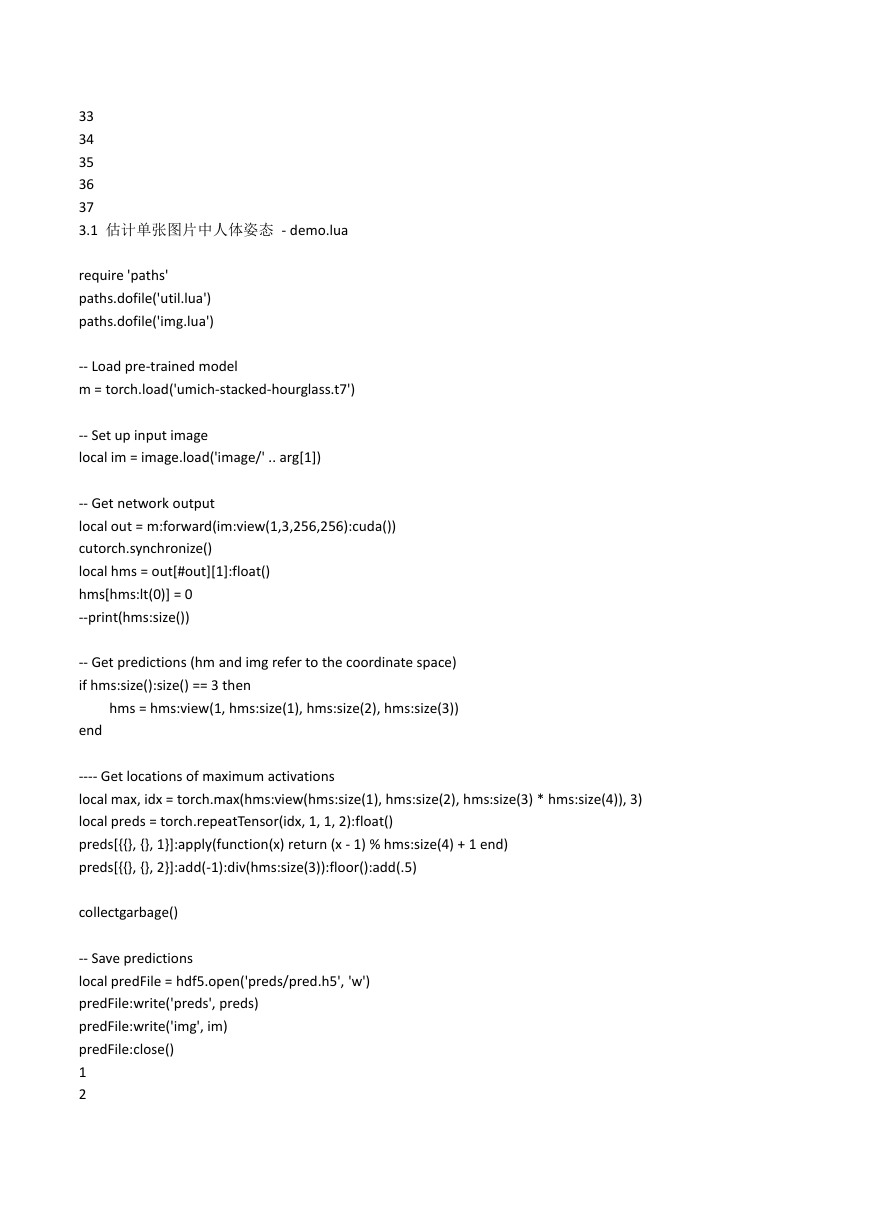

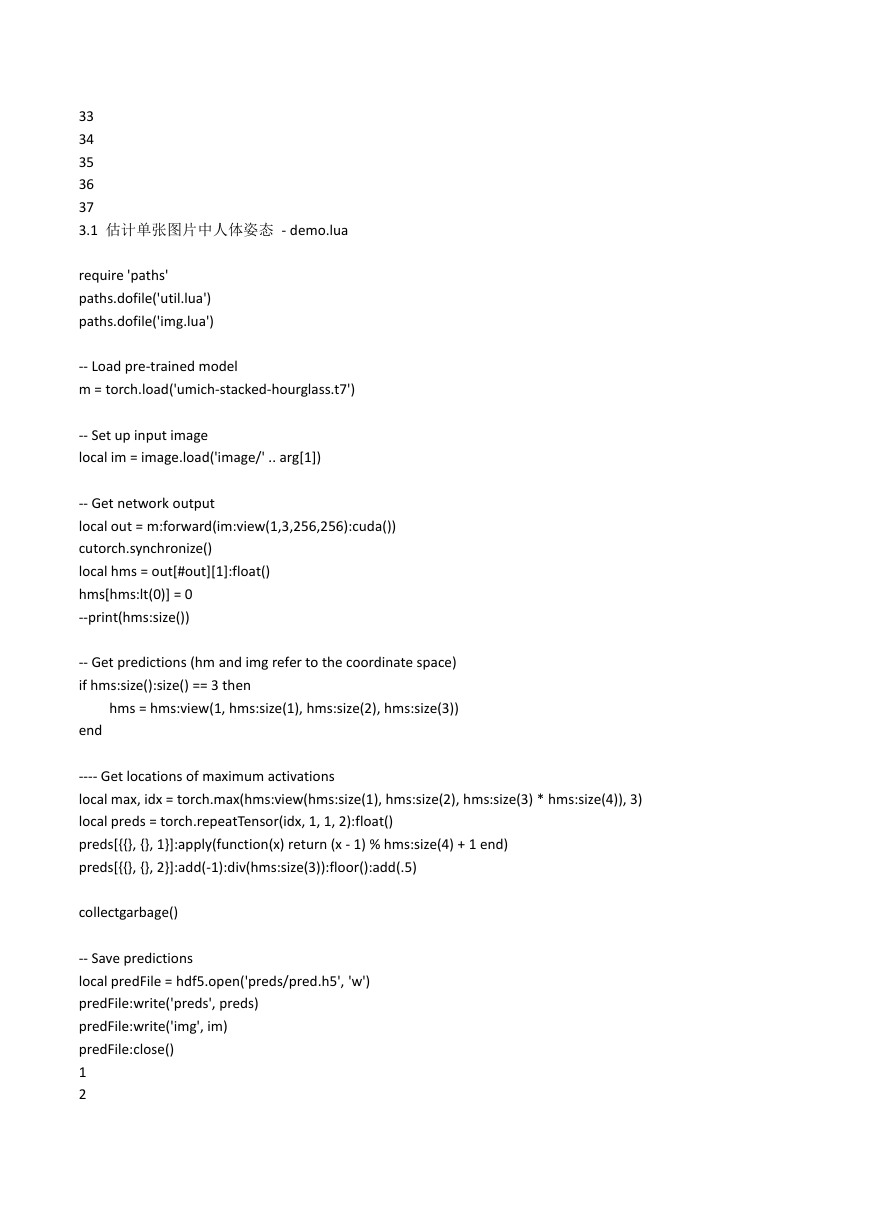

3.1 Estimating the human pose in a single image - demo.lua

    require 'paths'
    paths.dofile('util.lua')
    paths.dofile('img.lua')

    -- Load pre-trained model
    m = torch.load('umich-stacked-hourglass.t7')

    -- Set up input image
    local im = image.load('image/' .. arg[1])

    -- Get network output
    local out = m:forward(im:view(1,3,256,256):cuda())
    cutorch.synchronize()
    local hms = out[#out][1]:float()
    hms[hms:lt(0)] = 0
    --print(hms:size())

    -- Get predictions (hm and img refer to the coordinate space)
    if hms:size():size() == 3 then
        hms = hms:view(1, hms:size(1), hms:size(2), hms:size(3))
    end

    ---- Get locations of maximum activations
    local max, idx = torch.max(hms:view(hms:size(1), hms:size(2), hms:size(3) * hms:size(4)), 3)
    local preds = torch.repeatTensor(idx, 1, 1, 2):float()
    preds[{{}, {}, 1}]:apply(function(x) return (x - 1) % hms:size(4) + 1 end)
    preds[{{}, {}, 2}]:add(-1):div(hms:size(3)):floor():add(.5)

    collectgarbage()

    -- Save predictions
    local predFile = hdf5.open('preds/pred.h5', 'w')
    predFile:write('preds', preds)
    predFile:write('img', im)
    predFile:close()
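The "locations of maximum activations" step may be easier to follow outside of Torch. The sketch below reimplements the same idea in plain Python on nested lists: flatten each joint's heatmap, take the index of the largest value, and split that index into a column (x) and a row (y). It is an illustration, not the script's code: the name `decode_heatmaps` is hypothetical, indices are 0-based, and the 1-based indexing and the 0.5 offset on y used in the Lua version are deliberately left out.

```python
def decode_heatmaps(heatmaps):
    """Return one (x, y, score) tuple per joint from a list of 2-D heatmaps.

    heatmaps[j][row][col] is the score of joint j at that cell; x is the
    column and y is the row, both counted from 0.
    """
    preds = []
    for hm in heatmaps:
        width = len(hm[0])
        flat = [v for row in hm for v in row]              # row-major flatten
        idx = max(range(len(flat)), key=flat.__getitem__)  # flat argmax
        preds.append((idx % width, idx // width, flat[idx]))
    return preds


# Two toy 2x3 heatmaps standing in for two joints.
toy = [
    [[0.0, 0.1, 0.0],
     [0.0, 0.0, 0.9]],   # peak at column 2, row 1
    [[0.7, 0.0, 0.0],
     [0.0, 0.2, 0.0]],   # peak at column 0, row 0
]
print(decode_heatmaps(toy))
```

Returning the peak score alongside the location is an addition for illustration; it can serve as a rough confidence value when filtering joints.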


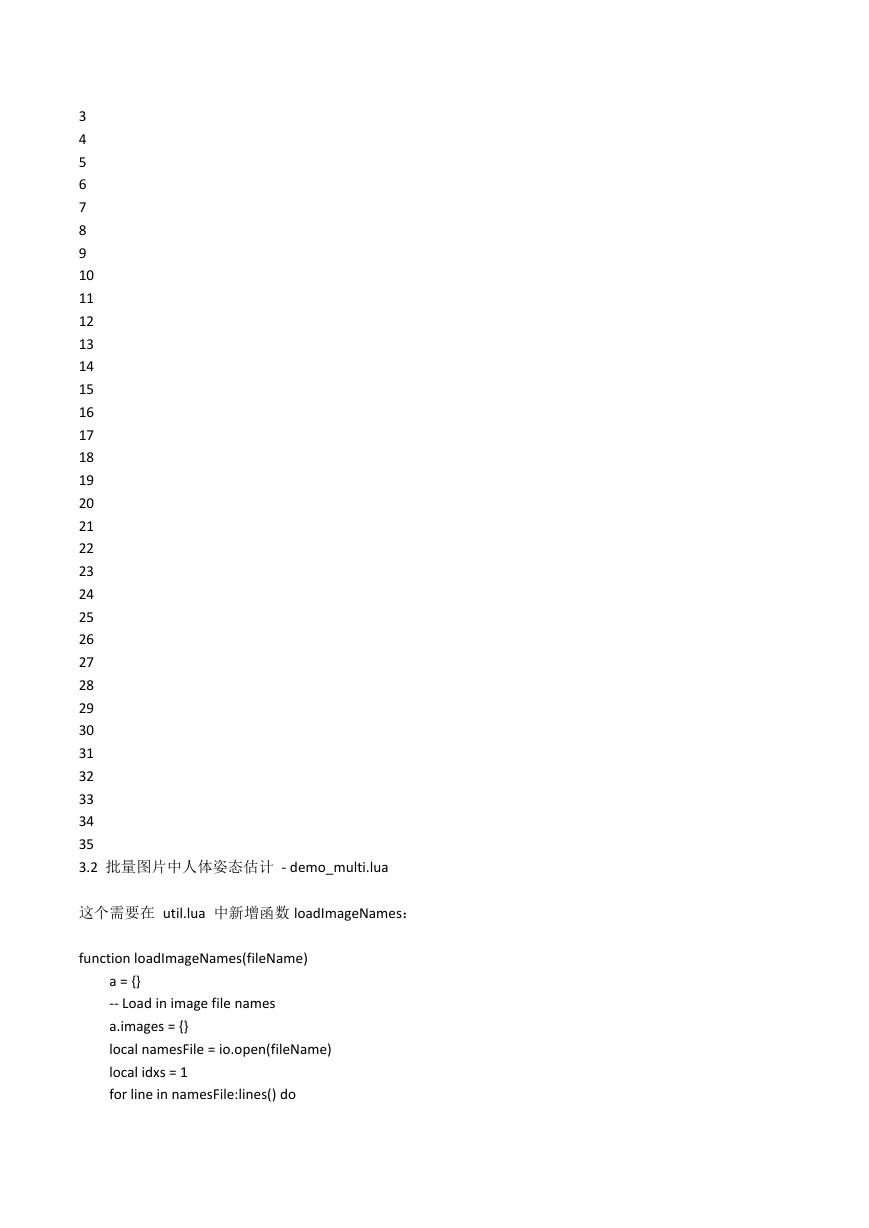

3.2 Human pose estimation on a batch of images - demo_multi.lua

This requires adding a new function, loadImageNames, to util.lua:

    function loadImageNames(fileName)
        local a = {}
        -- Load in image file names
        a.images = {}
        local namesFile = io.open(fileName)
        local idxs = 1
        for line in namesFile:lines() do
            print(line)
            a.images[idxs] = line
            idxs = idxs + 1
        end
        namesFile:close()
        a.nsamples = idxs-1
        return a
    end
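loadImageNames reads a text file containing one image file name per line. How that file is produced is not shown, so the following is a minimal sketch of one way to generate it with Python. The function name `write_image_list` and the choice to match only `.jpg` files are assumptions.

```python
import os


def write_image_list(image_dir, list_path, extensions=(".jpg",)):
    """Write the names of matching files in image_dir to list_path, one per line."""
    names = sorted(
        name for name in os.listdir(image_dir)
        if name.lower().endswith(extensions)
        and os.path.isfile(os.path.join(image_dir, name))
    )
    with open(list_path, "w") as handle:
        for name in names:
            handle.write(name + "\n")
    return names
```

For example, `write_image_list('image', 'image_names.txt')` would list the images in the `image/` directory that demo_multi.lua reads from. The list file name here is hypothetical; its path is what gets passed to the script as `arg[1]`.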


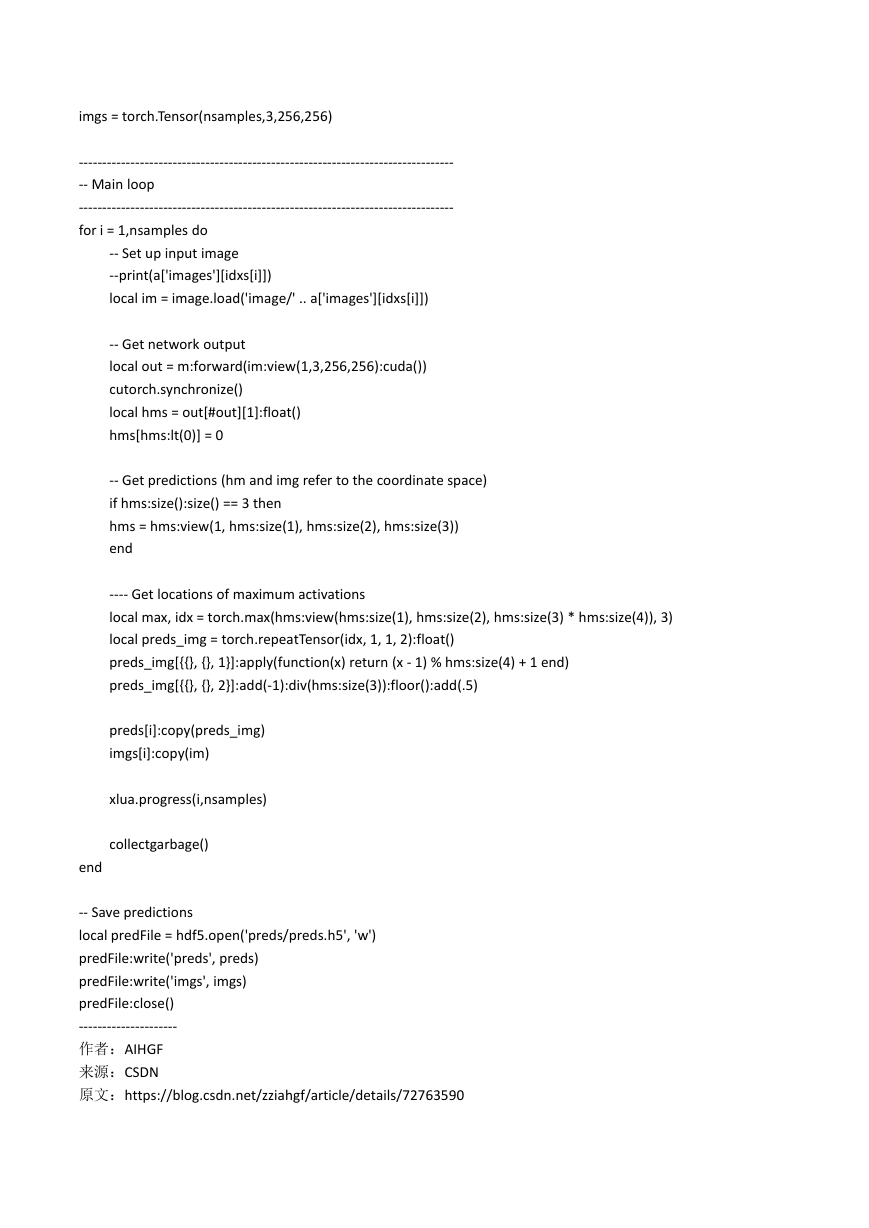

demo_multi.lua:

    require 'paths'
    paths.dofile('util.lua')
    paths.dofile('img.lua')

    --------------------------------------------------------------------------------
    -- Initialization
    --------------------------------------------------------------------------------

    a = loadImageNames(arg[1])

    m = torch.load('umich-stacked-hourglass.t7')   -- Load pre-trained model

    idxs = torch.range(1, a.nsamples)
    nsamples = idxs:nElement()

    xlua.progress(0,nsamples)                      -- Displays a convenient progress bar
    preds = torch.Tensor(nsamples,16,2)
    imgs = torch.Tensor(nsamples,3,256,256)

    --------------------------------------------------------------------------------
    -- Main loop
    --------------------------------------------------------------------------------

    for i = 1,nsamples do
        -- Set up input image
        --print(a['images'][idxs[i]])
        local im = image.load('image/' .. a['images'][idxs[i]])

        -- Get network output
        local out = m:forward(im:view(1,3,256,256):cuda())
        cutorch.synchronize()
        local hms = out[#out][1]:float()
        hms[hms:lt(0)] = 0

        -- Get predictions (hm and img refer to the coordinate space)
        if hms:size():size() == 3 then
            hms = hms:view(1, hms:size(1), hms:size(2), hms:size(3))
        end

        ---- Get locations of maximum activations
        local max, idx = torch.max(hms:view(hms:size(1), hms:size(2), hms:size(3) * hms:size(4)), 3)
        local preds_img = torch.repeatTensor(idx, 1, 1, 2):float()
        preds_img[{{}, {}, 1}]:apply(function(x) return (x - 1) % hms:size(4) + 1 end)
        preds_img[{{}, {}, 2}]:add(-1):div(hms:size(3)):floor():add(.5)

        preds[i]:copy(preds_img)
        imgs[i]:copy(im)

        xlua.progress(i,nsamples)

        collectgarbage()
    end

    -- Save predictions
    local predFile = hdf5.open('preds/preds.h5', 'w')
    predFile:write('preds', preds)
    predFile:write('imgs', imgs)
    predFile:close()
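The saved predictions are expressed in heatmap coordinates, not in the pixels of the original photo. The sketch below shows one way to map a point back, assuming the image was resized and zero-padded as in the preprocessing script above. The function name `heatmap_to_original` is hypothetical, and the heatmap size of 64 for a 256x256 input is an assumption that should be confirmed with the commented-out `print(hms:size())`. Sub-cell offsets and the 1-based indexing of the Lua output are ignored here.

```python
def heatmap_to_original(x_hm, y_hm, orig_height, orig_width,
                        boxsize=256, heatmap_size=64):
    """Map a heatmap-space point back to pixel coordinates in the original image.

    Assumes the image was scaled by min(boxsize/height, boxsize/width) and
    centered with zero padding, and that the heatmap covers the whole
    boxsize x boxsize input at heatmap_size x heatmap_size resolution.
    """
    stride = boxsize / float(heatmap_size)   # input pixels per heatmap cell
    scale = min(boxsize / float(orig_height), boxsize / float(orig_width))
    new_h = int(round(orig_height * scale))
    new_w = int(round(orig_width * scale))
    pad_up = (boxsize - new_h) // 2
    pad_left = (boxsize - new_w) // 2
    x_in = x_hm * stride                     # position in the padded input
    y_in = y_hm * stride
    # Undo the padding first, then the resize.
    return (x_in - pad_left) / scale, (y_in - pad_up) / scale


# The heatmap center of a 480x640 image should land near the image center.
print(heatmap_to_original(32, 32, 480, 640))
```

Because the padding is removed before dividing by the scale, points that fall inside the padded border map to coordinates outside the original image and can be discarded.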
